Abstract

The histopathological diagnosis of mycobacterial infection may be improved by comprehensive slide analysis using artificial intelligence (AI). Two autopsy cases of pulmonary tuberculosis and 40 biopsy cases without detectable acid-fast bacilli (AFB) were used to train a convolutional neural network and construct an AI to support AFB detection. Forty-two patients who underwent bronchoscopy were then evaluated for AFB using AI-supported pathology, and the results were compared with the bacteriological diagnosis from bronchial lavage fluid and with the final definitive diagnosis of mycobacteriosis. Among the 16 patients with mycobacteriosis, bacteriology was positive in 9 (56%). Without AI assistance, two patients (13%) were positive for AFB, whereas AI-supported pathology identified 11 positive patients (69%). When limited to tuberculosis, AI-supported pathology had significantly higher sensitivity than bacteriology (86% vs. 29%, p = 0.046). Among the seven patients with mycobacteriosis who had no consolidation or cavitary shadows on computed tomography, the sensitivity of bacteriology and AI-supported pathology was 29% and 86%, respectively (p = 0.046). The specificity of AI-supported pathology was 100% in this study. AI-supported pathology may be more sensitive than bacteriological tests for detecting AFB in samples collected via bronchoscopy.

1. Introduction

Tuberculosis (TB) is an airborne disease caused by Mycobacterium tuberculosis that results in 10 million cases and 1.4 million deaths worldwide annually [1,2]. Additionally, the number of nontuberculous mycobacterial (NTM) infections, which are also caused by acid-fast bacilli (AFB), has recently increased in many countries, particularly in Asia [3,4,5,6]. NTM infections affect the lungs, skin, and lymph nodes and are difficult to manage, making them a significant health problem similar to TB [7].

AFB infections, such as TB and NTM infection, are diagnosed by detecting the mycobacteria through microscopy or culture. Because the respiratory tract is the most common route of transmission of TB and NTM infections, sputum examination is performed initially [2,3]. Fluorescence microscopy is the most accurate method for sputum smear examination, with a sensitivity of >70% for TB and >50% for NTM [2,8]. However, the sputum smear test yields false-negative results in 20–40% or more of cases. In these cases, a lung biopsy via bronchoscopy may be performed, although it is not recommended routinely because of its invasive nature [2,3]. Moreover, the sensitivity of transbronchial lung biopsy (TBLB) for the diagnosis of TB ranges from only 42% to 63%, even when the diagnosis is based on granulomas rather than on visualization of the mycobacteria themselves [9,10].

The effectiveness of artificial intelligence (AI) in pathological diagnosis, especially in oncology, has recently been established [11,12,13,14], supported by technological innovations, advances in whole slide imaging (WSI), and the digital capture of histopathological slides [15,16,17]. AI is attractive for the histological diagnosis of mycobacteriosis because its comprehensive screening of the entire slide may reduce false negatives; however, the small size of AFB (0.2–0.6 μm × 1–10 μm) makes it difficult to obtain clear images using WSI. To address this challenge, recent studies have applied AI-based AFB detection to WSIs [18,19,20]. However, the clinical use of AI in the pathology of mycobacteriosis has not been reported.

This study compared bacteriology and AI-supported pathology in TBLB to validate the clinical usefulness of AI in supporting the pathological diagnosis of mycobacteriosis.

2. Materials and Methods

2.1. Study Subjects

We selected two representative autopsy cases of pulmonary TB as training data to develop AI-assisted detection of AFB in tissue. From these autopsy cases, we used one typical section in which numerous AFB were detected by Ziehl–Neelsen staining. Additionally, to train the AI on the background of other types of histopathological specimens, we randomly selected 40 biopsy cases with no AFB on Ziehl–Neelsen staining: eight surgical lung biopsies, 20 TBLBs, six transbronchial mediastinal lymph node needle biopsies, and six bone marrow clots or bone marrow biopsies. Subsequently, 42 consecutive patients who underwent bronchoscopy for the diagnosis of mycobacteriosis, and who were not included in the training data, were selected as validation data. All TBLBs were performed with 2.0-mm forceps, and 3–5 biopsies were obtained per patient. The Ziehl–Neelsen stained tissues were scanned at 400× magnification using Motic EasyScan (Motic, Hong Kong, China) and processed into WSIs.

Additionally, patient information, interferon-gamma release assay (IGRA) results, bacteriological test results, clinical course, and mycobacteriosis onset were collected from the medical records of the 42 patients used for AI validation.

2.2. Annotation

Annotation was performed by consensus of three evaluators with expertise in pulmonary pathology (YZ, YK, and JF). We first pre-trained the AI using the Ziehl–Neelsen stained tissue. At this stage, we examined objects that the AI identified as AFB but that the evaluators judged to be false positives on review of the WSIs at 40×. We identified two representative patterns of artifacts that the AI misidentified as AFB: artifact 1, blue-to-black-stained nuclei of type I epithelial cells resembling AFB in shape; and artifact 2, fragments of fibrin and hyaline membrane that stained pale purple to pale blue on Ziehl–Neelsen staining and were shaped similarly to AFB. We then annotated the AFB and the two artifact patterns in the WSIs as training data for the AI (Figure 1). Annotation was performed by importing the WSIs into the HALO software (version 3.0; Indica Labs, Corrales, NM, USA), a quantitative image analysis platform. Short bacilli 2–10 µm in length and 0.3–0.6 µm in width that stained red on Ziehl–Neelsen staining were annotated as AFB. In the two autopsy cases used as training data, we annotated 506 AFB within an area of approximately 15 mm². In the 40 biopsy cases, we annotated both artifact patterns in all specimens.
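Because the AFB annotation criteria above are stated in micrometres while the AI operates on pixels, it may help to see what they imply at the 0.25 µm/px analysis resolution given in Section 2.3. The Python sketch below is illustrative only: the function names and the simple length/width measurement of a candidate object are assumptions, not part of the HALO workflow.

```python
# Hypothetical helpers: check whether a candidate object's measured size falls
# within the AFB annotation criteria (length 2-10 um, width 0.3-0.6 um).
# The 0.25 um/px resolution is taken from Section 2.3; names are illustrative.

UM_PER_PX = 0.25  # WSI analysis resolution (Section 2.3)

AFB_LENGTH_UM = (2.0, 10.0)
AFB_WIDTH_UM = (0.3, 0.6)


def px_to_um(pixels: float, um_per_px: float = UM_PER_PX) -> float:
    """Convert a length measured in pixels to micrometres."""
    return pixels * um_per_px


def meets_afb_size_criteria(length_px: float, width_px: float) -> bool:
    """Return True if the object's length and width (in pixels) fall inside
    the AFB size ranges used for annotation."""
    length_um = px_to_um(length_px)
    width_um = px_to_um(width_px)
    lo_len, hi_len = AFB_LENGTH_UM
    lo_wid, hi_wid = AFB_WIDTH_UM
    return lo_len <= length_um <= hi_len and lo_wid <= width_um <= hi_wid


if __name__ == "__main__":
    # Pixel footprint of an AFB at 0.25 um/px.
    print(2.0 / UM_PER_PX, 10.0 / UM_PER_PX)  # 8.0 40.0  (length range, px)
    print(0.3 / UM_PER_PX, 0.6 / UM_PER_PX)   # 1.2 2.4   (width range, px)
    print(meets_afb_size_criteria(16, 2))     # True  (4.0 um x 0.5 um)
    print(meets_afb_size_criteria(60, 2))     # False (15 um is too long)
```

At this resolution a bacillus is only about 1–2 px wide, which illustrates why image quality during WSI scanning strongly affects AFB detection.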

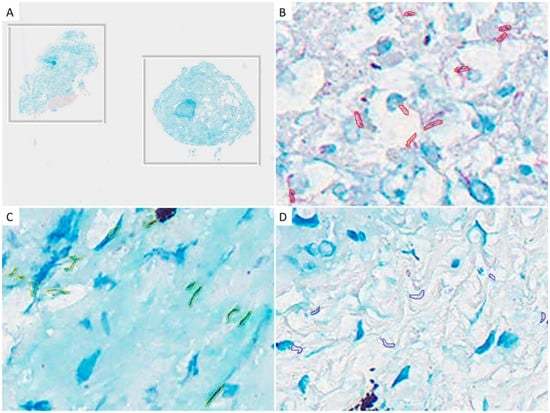

Figure 1.

Examples of annotations: (A) Ziehl–Neelsen stained tissue without acid-fast bacilli (AFB), used to train the background class other than AFB; no annotation was performed; (B) short rod-shaped bacilli stained red on Ziehl–Neelsen staining, annotated as AFB; (C) nuclei of type I epithelial cells showing AFB-like morphology, annotated as artifact 1; (D) part of the fibrin, stained purple, annotated as artifact 2.

2.3. Construction of AI-Assisted Pathology

We used HALO AI (a convolutional neural network based on DenseNet) to construct the AI-assisted pathology from WSIs annotated in the HALO software (version 3.0; Indica Labs, Corrales, NM, USA). To account for the small size of AFB, the WSI resolution was set at 0.25 µm/px. We built the AFB detection algorithm by transfer learning from an AI previously trained to detect malignant disease [21]. The pre-trained HALO AI was further trained with the annotated AFB and the two artifact patterns. First, 50,000 training iterations were performed with the AFB and background lung annotations; subsequently, 73,800 iterations were performed after adding the two artifact classes, yielding the AFB detection algorithm. In the analyzed images, objects classified by the AI as AFB were automatically annotated, and the evaluators judged each annotation as a true or false positive.

This HALO AI used the MXNet engine and a DenseNet-121 backbone pre-trained on ImageNet, with the first max-pooling layer removed and a custom semantic segmentation head. The segmentation head extracted features after each max-pooling layer and before the final fully connected layer. Each feature was passed through a BN–ReLU–Conv block, yielding a 256-channel feature map that was upsampled by a factor of 2 and summed with the next higher-resolution embedded feature map. During training, auxiliary losses were added at the lower-resolution features by using a linear layer to predict the correct output, whereas during analysis, only the highest-resolution output was used. Training was conducted on 256 × 256 patches at the defined resolution, which were generated by selecting a random class (with equal probability for each class), a random image containing annotations for the selected class (with equal probability), and a random point inside a region of the selected class in that image. Patches were cropped around the selected point and further augmented with random rotations and shifts in hue, saturation, contrast, and brightness. After ImageNet pre-training, the model was trained for the defined number of iterations using the Adam optimizer with an annealed cyclic learning rate (maximum learning rate, 2 × 10⁻⁴; minimum learning rate, 1 × 10⁻⁶; 100 warmup iterations; initial cycle length, 1000 iterations; cycle length decay, 2.0; cycle magnitude decay, 0.5) (delta of 0.9), with a learning rate of 1 × 10⁻³ reduced by 10% every 10,000 iterations, and with L2 regularization of 1 × 10⁻⁴. During analysis, the tile size was increased to 896 × 896, which improved computational performance without significantly altering the output.
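The class-balanced patch sampling and the cyclic learning-rate schedule described above can be sketched in plain Python. This is a hypothetical reconstruction, not HALO's internal code: annotated regions are represented as lists of pixel coordinates, and the schedule assumes a linear warmup followed by cosine-annealed cycles whose length is multiplied by the cycle length decay (2.0) and whose peak is multiplied by the cycle magnitude decay (0.5) after each cycle. The separate step decay (1 × 10⁻³ reduced by 10% every 10,000 iterations), the delta of 0.9, and the augmentations are not modeled.

```python
import math
import random

PATCH = 256  # training patch size in pixels (Section 2.3)


def sample_patch(annotations, rng=random, patch=PATCH):
    """Class-balanced patch sampling (hypothetical sketch).

    `annotations` maps class name -> image id -> list of (x, y) pixel
    coordinates lying inside annotated regions of that class.
    Returns the class, the image id, and a (left, top, width, height)
    crop box centred on the sampled point.
    """
    cls = rng.choice(sorted(annotations))            # each class equally likely
    image_id = rng.choice(sorted(annotations[cls]))  # each image with that class equally likely
    x, y = rng.choice(annotations[cls][image_id])    # random point inside the class region
    half = patch // 2
    # Augmentation (rotation, hue/saturation/contrast/brightness shifts) would follow here.
    return cls, image_id, (x - half, y - half, patch, patch)


def cyclic_lr(step, max_lr=2e-4, min_lr=1e-6, warmup=100,
              cycle_len=1000, len_mult=2.0, mag_decay=0.5):
    """Annealed cyclic learning rate under the assumptions stated above."""
    if step < warmup:
        # Linear warmup from min_lr up to max_lr.
        return min_lr + (max_lr - min_lr) * step / warmup
    t = step - warmup
    peak, length = max_lr, float(cycle_len)
    # Skip completed cycles: each subsequent cycle is longer and has a lower peak.
    while t >= length:
        t -= length
        length *= len_mult
        peak = max(peak * mag_decay, min_lr)
    # Cosine annealing from the current peak down towards min_lr.
    return min_lr + (peak - min_lr) * 0.5 * (1 + math.cos(math.pi * t / length))


if __name__ == "__main__":
    ann = {
        "AFB": {"autopsy_1": [(1000, 2000)]},
        "background": {"tblb_7": [(500, 500), (520, 510)]},
        "artifact_1": {"tblb_3": [(800, 300)]},
    }
    print(sample_patch(ann, rng=random.Random(0)))
    for s in (0, 100, 600, 1100, 73_800):
        print(s, cyclic_lr(s))
```

Sampling the class first, rather than a random location on the slide, keeps the rare AFB class from being swamped by background tissue during training.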

2.4. Validation of the AI-Assisted Pathology

In the 42 patients who underwent bronchoscopy for suspected mycobacteriosis or to rule out mycobacteriosis, one pathologist (JF) first examined the Ziehl–Neelsen stained tissue under a light microscope to determine the presence of AFB. Subsequently, the WSIs of these patients were comprehensively analyzed using the constructed AI to identify AFB. For each annotation the AI recognized as AFB, six evaluators (YZ, YK, SI, YK, HSY, and JF) determined by consensus whether it was a true or false positive. As in ordinary pathological diagnosis, a slide was considered positive if it contained at least one true-positive annotation and negative if it contained none (Figure 2). The AI-supported pathology results were compared with those of bacteriological tests of bronchial lavage fluid (BLF), namely mycobacterial smear, culture, and nucleic acid amplification test (NAAT). TBLB for pathological evaluation and BLF collection for bacteriology were performed in the same bronchial branch. Mycobacterial smear, culture, and NAAT were performed using auramine–rhodamine staining, Mycobacteria Growth Indicator Tubes (Nippon Becton Dickinson Co., Ltd., Tokyo, Japan), and loop-mediated isothermal amplification (Loopamp; Eiken Chemical Co., Ltd., Tokyo, Japan), respectively. Additionally, we collected information on the development of mycobacteriosis from the patients' medical records. Chest computed tomography (CT) was performed, and two experienced pulmonologists (YZ and MO) evaluated the following imaging features of mycobacteriosis [22]: nodular shadows, bronchiectasis, consolidation, cavity formation, and lymph node enlargement.
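The slide-level decision rule above (positive if at least one AI-flagged object is confirmed as AFB by the evaluators' consensus, otherwise negative) can be expressed as a short Python sketch. The data structure and names are hypothetical; in the study, the review itself was performed by the evaluators on the WSIs.

```python
from dataclasses import dataclass


@dataclass
class Detection:
    """One object flagged as AFB by the AI, with the evaluators' consensus verdict."""
    slide_id: str
    x: int
    y: int
    confirmed_afb: bool  # True if judged a true positive by consensus


def slide_calls(slide_ids, detections):
    """Return {slide_id: True/False}. A slide is positive if at least one of
    its AI detections was confirmed as AFB; slides with no detections, or
    with only false-positive detections, are negative."""
    calls = {sid: False for sid in slide_ids}
    for det in detections:
        if det.confirmed_afb:
            calls[det.slide_id] = True
    return calls


if __name__ == "__main__":
    dets = [
        Detection("case_01", 120, 340, confirmed_afb=False),  # e.g., a fibrin artifact
        Detection("case_01", 900, 410, confirmed_afb=True),
        Detection("case_02", 50, 60, confirmed_afb=False),
    ]
    print(slide_calls(["case_01", "case_02", "case_03"], dets))
    # {'case_01': True, 'case_02': False, 'case_03': False}
```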

Figure 2.

Examples of acid-fast bacilli (AFB) recognized by AI in the study cohort: (A) AFB observed in a relatively large cluster (medium magnification); (B) high magnification; (C) sporadic AFB at high magnification; (D) an example that the AI identified as AFB but the pathologist judged to be a false positive.

The sensitivities of the histopathological and bacteriological examinations were evaluated against the final diagnosis (ground truth). Patients with mycobacteriosis confirmed through bronchoscopy, and patients who developed mycobacteriosis during the follow-up period after bronchoscopy, were defined as positive; all other patients were considered negative. Mycobacteriosis was diagnosed by bacteriological tests according to the guidelines [2,3].
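As a worked check of how this reference standard translates into the reported figures, the Python sketch below computes sensitivity and specificity from 2 × 2 counts. The counts are taken from the Results: 16 patients with and 26 without mycobacteriosis among the 42; 11 AI-positive and 9 bacteriology-positive patients with mycobacteriosis; and no positives in the non-mycobacteriosis group for either method (stated for bacteriology and implied by the 100% specificity of AI-supported pathology). The function names are illustrative.

```python
def sensitivity(tp: int, fn: int) -> float:
    """Proportion of reference-standard positives detected by the test."""
    return tp / (tp + fn)


def specificity(tn: int, fp: int) -> float:
    """Proportion of reference-standard negatives correctly called negative."""
    return tn / (tn + fp)


# Counts from the Results: 16 patients with mycobacteriosis, 26 without.
N_POS, N_NEG = 16, 26
results = {
    # method: (positives among mycobacteriosis, positives among non-mycobacteriosis)
    "AI-supported pathology": (11, 0),
    "Bacteriology (smear, culture, NAAT)": (9, 0),
}

for method, (tp, fp) in results.items():
    sens = sensitivity(tp, N_POS - tp)
    spec = specificity(N_NEG - fp, fp)
    print(f"{method}: sensitivity {sens:.0%}, specificity {spec:.0%}")
# AI-supported pathology: sensitivity 69%, specificity 100%
# Bacteriology (smear, culture, NAAT): sensitivity 56%, specificity 100%
```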

2.5. Statistical Analysis

Patient characteristics are presented as median values with 25–75% interquartile ranges. The difference in sensitivity between the bacteriological and pathological tests was analyzed using the McNemar test. Differences between groups were analyzed using the Wilcoxon rank-sum (Mann–Whitney U) test or Fisher's exact test, where applicable. Statistical significance was defined as p < 0.05, and all statistical analyses were performed using JMP 16.0 (SAS Institute, Cary, NC, USA).
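For paired comparisons such as the TB subgroup (86% vs. 29%, i.e., 6/7 vs. 2/7 patients), the McNemar test depends only on the discordant pairs. The sketch below computes the McNemar statistic without continuity correction and its chi-square (1 df) p-value using only the Python standard library. The discordant counts are not reported in the text; b = 4 (AI-positive, bacteriology-negative) and c = 0 (the reverse) is a hypothetical split consistent with both the reported proportions and the reported p = 0.046, and the uncorrected form is an assumption about the software's computation.

```python
import math


def mcnemar_uncorrected(b: int, c: int) -> tuple[float, float]:
    """McNemar chi-square statistic (no continuity correction) and two-sided p-value.

    b: pairs positive on test 1 only; c: pairs positive on test 2 only.
    Concordant pairs do not enter the statistic.
    """
    if b + c == 0:
        raise ValueError("McNemar test is undefined without discordant pairs")
    stat = (b - c) ** 2 / (b + c)
    # Survival function of chi-square with 1 df: P(X > x) = erfc(sqrt(x / 2)).
    p = math.erfc(math.sqrt(stat / 2))
    return stat, p


if __name__ == "__main__":
    stat, p = mcnemar_uncorrected(b=4, c=0)  # hypothetical discordant counts
    print(f"chi2 = {stat:.2f}, p = {p:.3f}")  # chi2 = 4.00, p = 0.046
```

The same reported figures (86% vs. 29%, p = 0.046) also appear for the seven patients without consolidation or cavitary shadows in Section 3.5.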

3. Results

3.1. Patient Characteristics

Table 1 shows the characteristics of the 42 patients in the AI validation study. All patients underwent bronchoscopy as part of their clinical course because other tests had not provided a definitive diagnosis. In the mycobacteriosis group, seven patients (44%) were diagnosed with TB and seven (44%) with NTM infection; in the remaining two patients (13%), mycobacteriosis was strongly suspected but had not been confirmed during follow-up. Chest CT revealed multiple nodular shadows in 13 (81%), consolidation in nine (56%), cavity formation in three (19%), and bronchiectasis in six (38%) patients in the mycobacteriosis group.

Table 1.

Patient characteristics.

3.2. Bacteriological Examination by Bronchoscopy

Acid-fast bacillus smear, mycobacterial culture, and NAAT were performed on BLF from all patients (Table 2). In the non-mycobacteriosis group, all bacteriological tests were negative. In the mycobacteriosis group, the BLF smear was positive in four patients (25%): one with Mycobacterium tuberculosis and three with NTM. Mycobacterial culture was positive in seven patients (44%): two with TB and five with NTM infection. NAAT was positive in two additional patients with NTM infection beyond the culture-positive cases. Thus, 9 of the 16 patients with mycobacteriosis (56%) were positive on bacteriological tests, and seven (44%) had positive smear or culture results that led to a definitive diagnosis.

Table 2.

Results of the bacteriological and pathological examinations.

3.3. Pathological Examination

Initially, one pathologist screened all patients for AFB using oil-immersion microscopy without AI; two patients were positive for AFB. Subsequently, all patients underwent AFB evaluation using WSI with AI support, which identified 11 AFB-positive patients, including the two patients identified by the pathologist without AI.

3.4. Comparison between Pathology, Bacteriology, and Final Diagnosis

Table 3 shows the results of the bacteriological tests and AI-supported pathology in the mycobacteriosis and non-mycobacteriosis groups. Among all patients with mycobacteriosis, seven (44%) had positive smear or culture results, which confirmed the diagnosis, and nine (56%) were positive on bacteriological tests when NAAT was included. AI-supported pathology was positive in 11 patients (69%). Across all mycobacteriosis cases, the difference between bacteriological tests and AI-supported pathology was not significant.

Table 3.

Comparison of bacteriological tests and AI-supported pathology.

When limited to TB, AI-supported pathology had significantly higher sensitivity than bacteriological testing of bronchoscopy samples (86% vs. 29%; p = 0.046). In NTM infection, AI-supported pathology was less sensitive than in TB, with only three of seven cases (43%) positive (p = 0.046).

3.5. Comparison of the Number of AFB Detected by AI and Bacteriological Tests

We also compared the number of AFB detected by AI-supported pathology with the bacteriological test results, radiological findings, and final diagnosis (Table 4). Two patients had >100 AFB in their histopathological specimens, and both had positive bacteriological tests (smear, culture, and NAAT). Among the seven patients with <10 AFB, three were diagnosed with TB, two with NTM infection, and two remained under follow-up. In the seven patients with mycobacteriosis and no radiological evidence of progressive disease, such as consolidation or cavity formation, the sensitivity of AI-supported pathology was 86%, significantly higher than that of bacteriological tests (29%, p = 0.046).

Table 4.

Results of radiological, bacteriological, and pathological examinations in mycobacteriosis cases.

4. Discussion

This study validated the usefulness of AI-supported pathology in diagnosing mycobacteriosis. In 42 validation patients, AI-supported pathology of TBLB specimens had higher sensitivity than bacteriological tests of BLF, and the difference was significant when limited to TB. Furthermore, the specificity of AI-supported pathology in this study was 100%. These results show the usefulness of AI for comprehensive screening of AFB, which are frequently missed by bacteriology.

This study also shows the clinical utility of AI-supported pathology. Generally, the sensitivity of pathology for mycobacteriosis is lower than that of bacteriological tests, at approximately 50–80% [2,23]. However, in this study, comprehensive AI screening detected AFB in specimens that were negative on examination without AI. Notably, Pantanowitz et al. reported that AI-assisted review facilitated diagnosis, with higher sensitivity, negative predictive value, and accuracy than light microscopy or WSI evaluation without AI [24]. The current study showed that pathological diagnosis may be better at detecting AFB than traditionally indicated.

Furthermore, the positivity rate of bacteriological tests was low (1/7, 14%) when few AFB were present in the pathological specimens, even with comprehensive AI analysis. The positivity rate of bacteriological tests was high (78%) in the nine patients with mycobacteriosis who had cavitary lesions or consolidation on radiology, which suggest progressive disease, but low (29%) in the seven patients with no radiological signs of progressive disease; this is consistent with a previous study that found a higher AFB detection rate when cavity formation was present in TB [25]. In contrast, in early-stage mycobacteriosis without cavitary lesions or consolidation on CT, AI-supported pathology had a sensitivity of 86%, compared with 29% for bacteriological tests, suggesting that AI-supported pathology may be advantageous for detecting early-stage infection. In particular, combining multiple modalities, namely AI-supported pathology and bacteriological tests, may be useful in diagnosing early-stage mycobacteriosis, especially when samples are obtained by bronchoscopy.

Few reports have examined the usefulness of bronchoscopy, especially TBLB, in mycobacteriosis. For TB diagnosis, bronchoscopy is mainly recommended for bronchial brushing and BLF collection, whereas TBLB is recommended for patients who require rapid diagnosis [2]. However, in studies of patients at risk for HIV infection, TBLB was the only tool that established the diagnosis of TB in 10–23% of patients [9,26]; these studies accepted characteristic histological findings, such as caseating granulomas, as diagnostic of TB even when no AFB were found. Among patients diagnosed with TB, AFB were observed in 14–57% [2].

This study demonstrated that comprehensive AI analysis detected AFB in many patients in whom pathologists without AI could not. AI-supported pathology may therefore detect AFB in patients who can only be diagnosed by TBLB. Because TBLB specimens are small, WSI examination of each specimen took less than 1 min; therefore, AI-supported pathological diagnosis can be performed without changing daily practice or prolonging diagnostic time. Additionally, NAAT and whole-genome sequencing (WGS) have recently been used to determine drug resistance [27,28,29], and one study reported the usefulness of reverse transcription-polymerase chain reaction for identifying species in tissue biopsies [30]. Applying NAAT and WGS to tissue confirmed to contain AFB by AI-supported pathology may also allow drug susceptibility testing, which is important for treatment planning, even when mycobacterial cultures are negative. Moreover, performing NAAT and WGS only on tissue in which AI-supported pathology has confirmed AFB may be more cost-effective than performing these tests on all specimens.

This study showed that comprehensive AI analysis was more sensitive than a pathologist in detecting AFB. To screen tissue samples with AI, they must first be processed into WSIs. However, AI detection of AFB depends heavily on image quality during WSI processing, and poor-quality images may greatly reduce detection sensitivity. The usefulness of AI-supported pathology should be weighed against both the advantages and disadvantages of an increased AFB detection rate; this study demonstrated its advantages. Nevertheless, other deep learning models and improved WSI scanning quality may yield more useful models.

This study has several limitations. First, we studied only WSIs produced by a single scanner and a single deep learning model; comparing this AI with other AFB detection algorithms will be important in the future. Second, only autopsy cases of TB were used as AFB-positive training data; NTM cases were not used because biopsies containing numerous organisms are rare in NTM infection. In this study, the sensitivity of AI-supported pathology in NTM infection was low (43%), and adding NTM infection to the training data may yield different results. Third, the AI produced many false-positive AFB detections: approximately 200–500 false positives for every true positive. We tried to reduce false positives by training the AI to recognize typical artifacts separately; however, numerous false positives remained. Previous reports described combining multiple AI algorithms to improve diagnostic accuracy. Xiong et al. reported that, compared with a pathologist, the diagnostic sensitivity of a CNN model was 86% and increased to 98% after combining random forest classifiers [18], consistent with another study [19]; these reports suggest that combining AI algorithms may reduce false-positive AFB. However, attempts to reduce false positives may turn true positives into false negatives, and the clinical utility of AI-assisted pathology requires a low false-negative rate.
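The trade-off described above, where suppressing false positives risks converting true positives into false negatives, can be illustrated with a detection-score threshold. The Python sketch below uses a handful of made-up candidate scores and labels (toy values, not study data) and counts true positives, false positives, and false negatives at several hypothetical thresholds.

```python
def counts_at_threshold(scores, is_afb, threshold):
    """Count TP, FP, and FN when candidates scoring >= threshold are called AFB."""
    tp = fp = fn = 0
    for score, truth in zip(scores, is_afb):
        called = score >= threshold
        if called and truth:
            tp += 1
        elif called and not truth:
            fp += 1
        elif truth:
            fn += 1
    return tp, fp, fn


# Toy candidate detections: model scores and whether each is a genuine AFB.
scores = [0.95, 0.62, 0.55, 0.90, 0.80, 0.70, 0.60, 0.50]
is_afb = [True, True, False, False, False, False, False, False]

for t in (0.50, 0.65, 0.92):
    tp, fp, fn = counts_at_threshold(scores, is_afb, t)
    print(f"threshold {t:.2f}: TP={tp} FP={fp} FN={fn}")
# threshold 0.50: TP=2 FP=6 FN=0
# threshold 0.65: TP=1 FP=3 FN=1
# threshold 0.92: TP=1 FP=0 FN=1
```

Because a slide is called positive if any single AFB is confirmed, losing the only true detection on a slide with few bacilli turns the whole slide negative, which is why a low false-negative rate takes priority.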

5. Conclusions

AI-supported pathology may be more sensitive than bacteriological tests for detecting AFB in samples collected by bronchoscopy, especially in the early stages of the disease. Furthermore, combining multiple modalities may be necessary to detect AFB, since bacteriological tests may be insufficient.

Author Contributions

Conceptualization, Y.Z. and J.F.; methodology, Y.Z. and J.F.; validation, Y.Z. and Y.K. (Yuki Kanahori); formal analysis, Y.Z., Y.K. (Yuki Kanahori), S.I., Y.K. (Yuka Kitamura), H.-S.Y. and J.F.; investigation, M.O. and H.M.; resources, M.O. and H.M.; data curation, Y.Z. and Y.K. (Yuki Kanahori); writing—original draft preparation, Y.Z. and Y.K. (Yuki Kanahori); writing—review and editing, all authors; supervision, A.B., T.H. and J.F.; project administration, J.F.; funding acquisition, Y.K. (Yuka Kitamura) and J.F. All authors have read and agreed to the published version of the manuscript.

Funding

This study was partially financed by a research grant (approval No. 324) from KYUTEC provided by the Fukuoka Financial Group (Fukuoka, Japan).

Institutional Review Board Statement

This study was conducted in accordance with the Declaration of Helsinki, and approved by the Nagasaki University Hospital Clinical Research Ethics Committee (No. 19012108).

Informed Consent Statement

Informed consent was obtained in the form of an opt-out methodology between the date of certification and 31 March 2021.

Data Availability Statement

The data that support the findings of this study are available from the corresponding author upon reasonable request.

Conflicts of Interest

Yuka Kitamura is the CEO of N Lab Co. Ltd. All the other authors have no conflict of interest to disclose.

References

1. World Health Organization. Global Tuberculosis Report 2020; WHO: Geneva, Switzerland, 2020.

2. Lewinsohn, D.M.; Leonard, M.K.; LoBue, P.A.; Cohn, D.L.; Daley, C.L.; Desmond, E.; Keane, J.; Lewinsohn, D.A.; Loeffler, A.M.; Mazurek, G.H.; et al. Official American Thoracic Society/Infectious Diseases Society of America/Centers for Disease Control and Prevention Clinical Practice Guidelines: Diagnosis of tuberculosis in adults and children. Clin. Infect. Dis. 2017, 64, 111–115.

3. Griffith, D.E.; Aksamit, T.; Brown-Elliott, B.A.; Catanzaro, A.; Daley, C.; Gordin, F.; Holland, S.M.; Horsburgh, R.; Huitt, G.; Iademarco, M.F.; et al. An official ATS/IDSA statement: Diagnosis, treatment, and prevention of nontuberculous mycobacterial diseases. Am. J. Respir. Crit. Care Med. 2007, 175, 367–416.

4. Falkinham, J.O., 3rd. Nontuberculous mycobacteria in the environment. Clin. Chest Med. 2002, 23, 529–551.

5. von Reyn, C.F.; Waddell, R.D.; Eaton, T.; Arbeit, R.D.; Maslow, J.N.; Barber, T.W.; Brindle, R.J.; Gilks, C.F.; Lumio, J.; Lähdevirta, J.; et al. Isolation of Mycobacterium avium complex from water in the United States, Finland, Zaire, and Kenya. J. Clin. Microbiol. 1993, 31, 3227–3230.

6. Prevots, D.R.; Shaw, P.A.; Strickland, D.; Jackson, L.A.; Raebel, M.A.; Blosky, M.A.; Montes de Oca, R.; Shea, Y.R.; Seitz, A.E.; Holland, S.M.; et al. Nontuberculous mycobacterial lung disease prevalence at four integrated health care delivery systems. Am. J. Respir. Crit. Care Med. 2010, 182, 970–976.

7. O’Brien, R.J.; Geiter, L.J.; Snider, D.E., Jr. The epidemiology of nontuberculous mycobacterial diseases in the United States. Results from a national survey. Am. Rev. Respir. Dis. 1987, 135, 1007–1014.

8. Wright, P.W.; Wallace, R.J., Jr.; Wright, N.W.; Brown, B.A.; Griffith, D.E. Sensitivity of fluorochrome microscopy for detection of Mycobacterium tuberculosis versus nontuberculous mycobacteria. J. Clin. Microbiol. 1998, 36, 1046–1049.

9. Miro, A.M.; Gibilara, E.; Powell, S.; Kamholz, S.L. The role of fiberoptic bronchoscopy for diagnosis of pulmonary tuberculosis in patients at risk for AIDS. Chest 1992, 101, 1211–1214.

10. Kennedy, D.J.; Lewis, W.P.; Barnes, P.F. Yield of bronchoscopy for the diagnosis of tuberculosis in patients with human immunodeficiency virus infection. Chest 1992, 102, 1040–1044.

11. Niazi, M.K.K.; Parwani, A.V.; Gurcan, M.N. Digital pathology and artificial intelligence. Lancet Oncol. 2019, 20, e253–e261.

12. Colling, R.; Pitman, H.; Oien, K.; Rajpoot, N.; Macklin, P.; CM-Path AI in Histopathology Working Group; Snead, D.; Sackville, T.; Verrill, C. Artificial intelligence in digital pathology: A roadmap to routine use in clinical practice. J. Pathol. 2019, 249, 143–150.

13. Salto-Tellez, M.; Maxwell, P.; Hamilton, P. Artificial intelligence-the third revolution in pathology. Histopathology 2019, 74, 372–376.

14. Sakamoto, T.; Furukawa, T.; Lami, K.; Pham, H.H.N.; Uegami, W.; Kuroda, K.; Kawai, M.; Sakanashi, H.; Cooper, L.A.D.; Bychkov, A.; et al. A narrative review of digital pathology and artificial intelligence: Focusing on lung cancer. Transl. Lung Cancer Res. 2020, 9, 2255–2276.

15. Aeffner, F.; Zarella, M.D.; Buchbinder, N.; Bui, M.M.; Goodman, M.R.; Hartman, D.J.; Lujan, G.M.; Molani, M.A.; Parwani, A.V.; Lillard, K.; et al. Introduction to digital image analysis in whole-slide imaging: A white paper from the digital pathology association. J. Pathol. Inform. 2019, 10, 9.

16. Wang, S.; Yang, D.M.; Rong, R.; Zhan, X.; Fujimoto, J.; Liu, H.; Minna, J.; Wistuba, I.I.; Xie, Y.; Xiao, G. Artificial intelligence in lung cancer pathology image analysis. Cancers 2019, 11, 1673.

17. Madabhushi, A.; Lee, G. Image analysis and machine learning in digital pathology: Challenges and opportunities. Med. Image Anal. 2016, 33, 170–175.

18. Xiong, Y.; Ba, X.; Hou, A.; Zhang, K.; Chen, L.; Li, T. Automatic detection of mycobacterium tuberculosis using artificial intelligence. J. Thorac. Dis. 2018, 10, 1936–1940.

19. Yang, M.; Nurzynska, K.; Walts, A.E.; Gertych, A. A CNN-based active learning framework to identify mycobacteria in digitized Ziehl-Neelsen stained human tissues. Comput. Med. Imaging Graph. 2020, 84, 101752.

20. Asay, B.C.; Edwards, B.B.; Andrews, J.; Ramey, M.E.; Richard, J.D.; Podell, B.K.; Gutiérrez, J.F.M.; Frank, C.B.; Magunda, F.; Robertson, G.T.; et al. Digital image analysis of heterogeneous tuberculosis pulmonary pathology in non-clinical animal models using deep convolutional neural networks. Sci. Rep. 2020, 10, 6047.

21. Pham, H.H.N.; Futakuchi, M.; Bychkov, A.; Furukawa, T.; Kuroda, K.; Fukuoka, J. Detection of Lung Cancer Lymph Node Metastases from Whole-Slide Histopathologic Images Using a Two-Step Deep Learning Approach. Am. J. Pathol. 2019, 189, 2428–2439.

22. Im, J.G.; Itoh, H.; Shim, Y.S.; Lee, J.H.; Ahn, J.; Han, M.C.; Noma, S. Pulmonary tuberculosis: CT findings—Early active disease and sequential change with antituberculous therapy. Radiology 1993, 186, 653–660.

23. Santos, N.; Geraldes, M.; Afonso, A.; Almeida, V.; Correia-Neves, M. Diagnosis of tuberculosis in the wild boar (Sus scrofa): A comparison of methods applicable to hunter-harvested animals. PLoS ONE 2010, 5, e12663.

24. Pantanowitz, L.; Wu, U.; Seigh, L.; LoPresti, E.; Yeh, F.C.; Salgia, P.; Michelow, P.; Hazelhurst, S.; Chen, W.Y.; Hartman, D.; et al. Artificial intelligence-based screening for mycobacteria in whole-slide images of tissue samples. Am. J. Clin. Pathol. 2021, 156, 117–128.

25. Liu, X.; Hou, X.F.; Gao, L.; Deng, G.F.; Zhang, M.X.; Deng, Q.Y.; Ye, T.S.; Yang, Q.T.; Zhou, B.P.; Wen, Z.H.; et al. Indicators for prediction of Mycobacterium tuberculosis positivity detected with bronchoalveolar lavage fluid. Infect. Dis. Poverty 2018, 7, 22.

26. Salzman, S.H.; Schindel, M.L.; Aranda, C.P.; Smith, R.L.; Lewis, M.L. The role of bronchoscopy in the diagnosis of pulmonary tuberculosis in patients at risk for HIV infection. Chest 1992, 102, 143–146.

27. Walker, T.M.; Kohl, T.A.; Omar, S.V.; Hedge, J.; Elias, C.D.O.; Bradley, P.; Iqbal, Z.; Feuerriegel, S.; Niehaus, K.E.; Wilson, D.J.; et al. Whole-genome sequencing for prediction of Mycobacterium tuberculosis drug susceptibility and resistance: A retrospective cohort study. Lancet Infect. Dis. 2015, 15, 1193–1202.

28. Xie, Y.L.; Chakravorty, S.; Armstrong, D.T.; Hall, S.L.; Via, L.E.; Song, T.; Yuan, X.; Mo, X.; Zhu, H.; Xu, P.; et al. Evaluation of a rapid molecular drug-susceptibility test for tuberculosis. N. Engl. J. Med. 2017, 377, 1043–1054.

29. Cohen, K.A.; Manson, A.L.; Desjardins, C.A.; Abeel, T.; Earl, A.M. Deciphering drug resistance in Mycobacterium tuberculosis using whole-genome sequencing: Progress, promise, and challenges. Genome Med. 2019, 11, 45.

30. Jiang, F.; Huang, W.; Wang, Y.; Tian, P.; Chen, X.; Liang, Z. Nucleic acid amplification testing and sequencing combined with acid-fast staining in needle biopsy lung tissues for the diagnosis of smear-negative pulmonary tuberculosis. PLoS ONE 2016, 11, e0167342.

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2022 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).