Automated Bone Marrow Cell Classification for Haematological Disease Diagnosis Using Siamese Neural Network

Abstract

1. Introduction

2. Literature Review

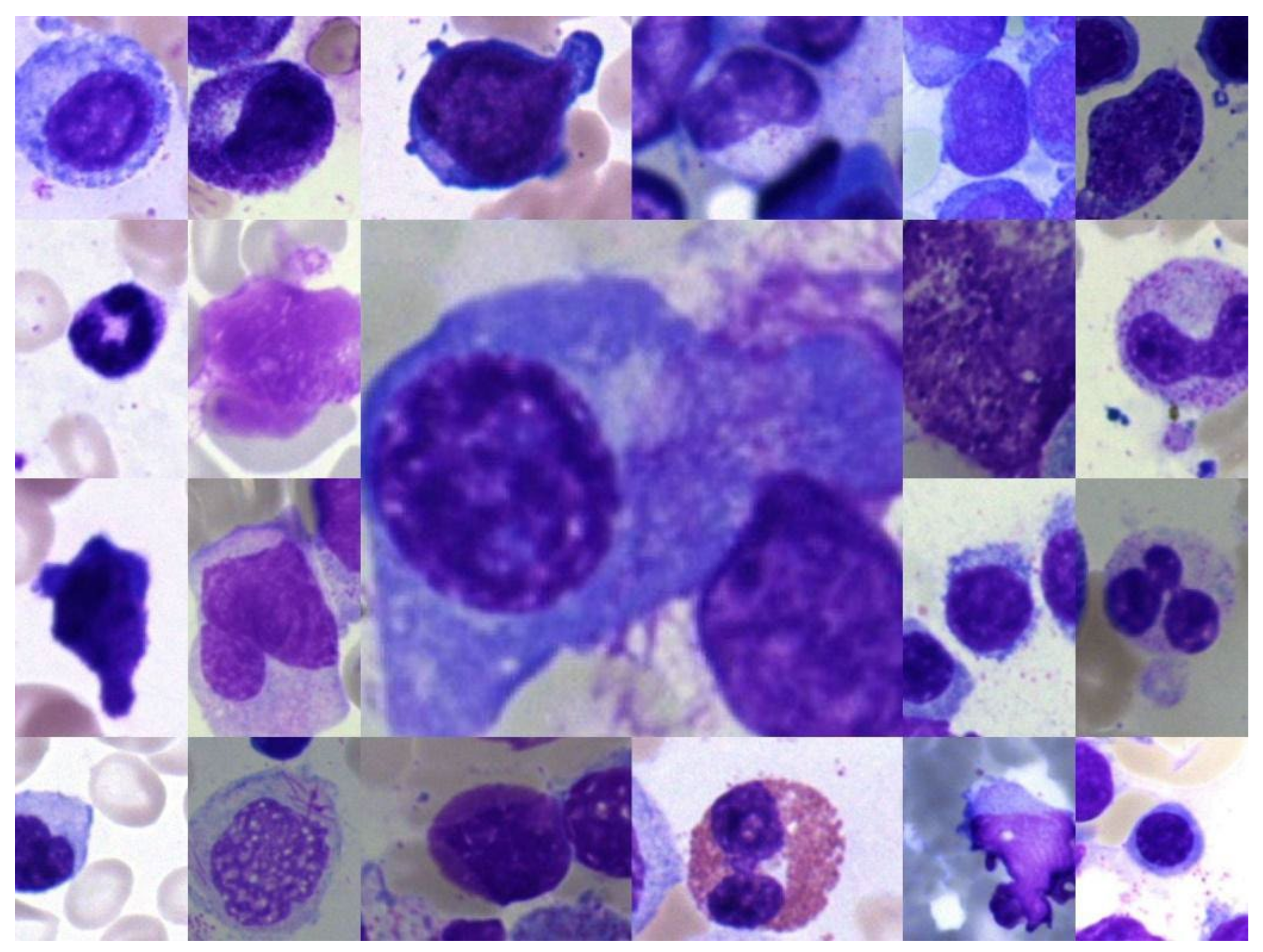

3. Material and Methods

3.1. Siamese Network

3.2. Algorithm

3.2.1. Forward Propagation

Convolution Layer

Max Pooling Layer

Flatten Layer

Dense Layer
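
The four layer types listed above can be chained into a single forward pass. The following is a minimal NumPy sketch under stated assumptions, not the authors' implementation: the 8×8 input size, the single 3×3 kernel, the ReLU activation, the 2×2 pooling window, and the 4-dimensional embedding are all hypothetical choices made for illustration. The same weights are applied to both inputs, which is the defining property of a Siamese network.

```python
import numpy as np

def conv2d(image, kernel):
    """Valid 2-D cross-correlation of a single-channel image with one kernel."""
    kh, kw = kernel.shape
    out_h = image.shape[0] - kh + 1
    out_w = image.shape[1] - kw + 1
    out = np.empty((out_h, out_w))
    for i in range(out_h):
        for j in range(out_w):
            out[i, j] = np.sum(image[i:i + kh, j:j + kw] * kernel)
    return out

def relu(x):
    """Element-wise rectified linear unit (an assumed activation)."""
    return np.maximum(x, 0.0)

def max_pool2d(feature_map, size=2):
    """Non-overlapping max pooling; rows/columns that do not fill a window are dropped."""
    h = feature_map.shape[0] // size
    w = feature_map.shape[1] // size
    trimmed = feature_map[:h * size, :w * size]
    return trimmed.reshape(h, size, w, size).max(axis=(1, 3))

def embed(image, kernel, dense_w, dense_b):
    """Convolution -> max pooling -> flatten -> dense, returning an embedding vector."""
    x = relu(conv2d(image, kernel))
    x = max_pool2d(x)
    x = x.ravel()                  # flatten layer
    return dense_w @ x + dense_b   # dense layer

rng = np.random.default_rng(0)
kernel = rng.normal(size=(3, 3))
# 8x8 input -> 6x6 after convolution -> 3x3 after pooling -> 9 flattened features
dense_w = rng.normal(size=(4, 9))
dense_b = np.zeros(4)

img_a = rng.random((8, 8))
img_b = rng.random((8, 8))
# Both branches share kernel, dense_w and dense_b.
emb_a = embed(img_a, kernel, dense_w, dense_b)
emb_b = embed(img_b, kernel, dense_w, dense_b)
```

Because both branches call `embed` with identical parameters, two identical images always map to identical embeddings, whatever the weights are.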

3.2.2. Backward Propagation

3.2.3. Update

4. Experimental Results

4.1. Siamese Neural Network

4.1.1. Loss, Validation Loss, Accuracy and Validation Accuracy against Number of Epochs for Cell Classification

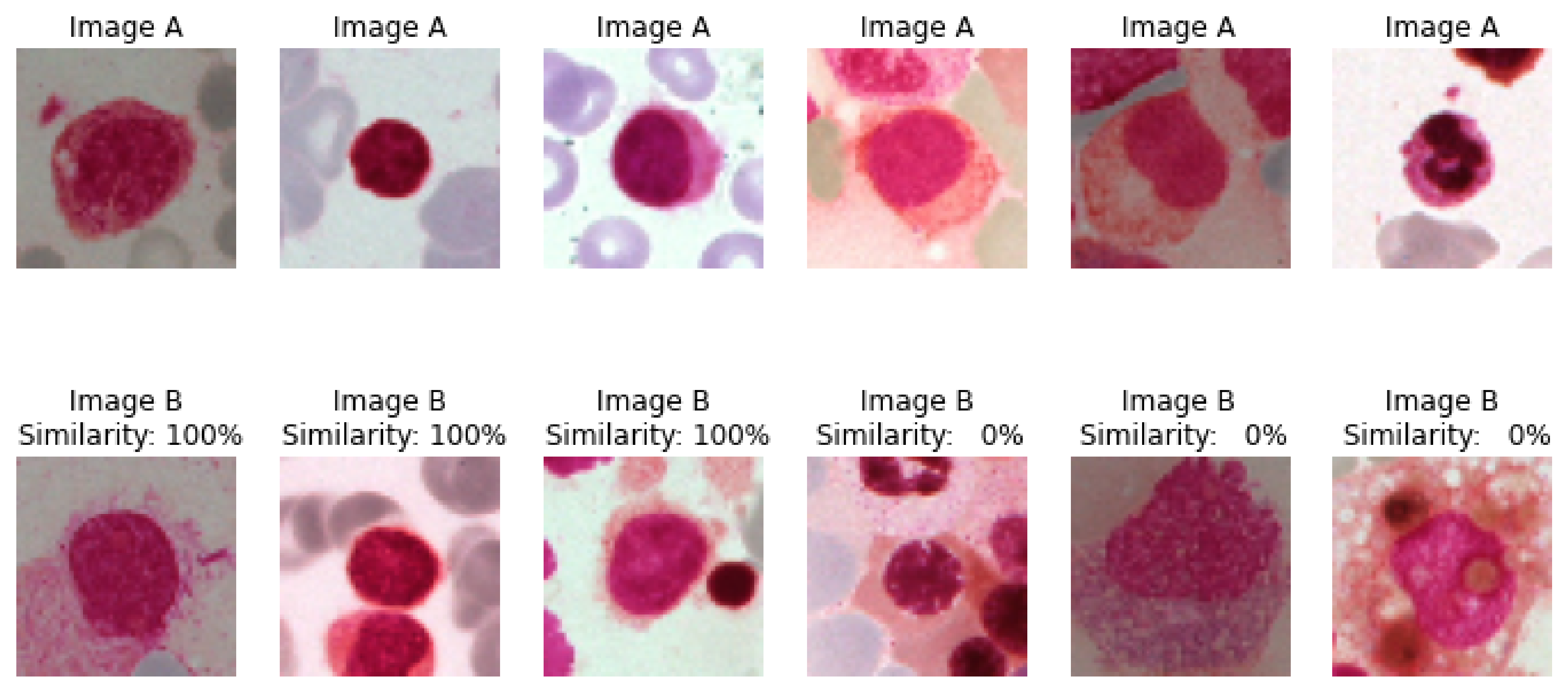

4.1.2. Similarity between Class Images

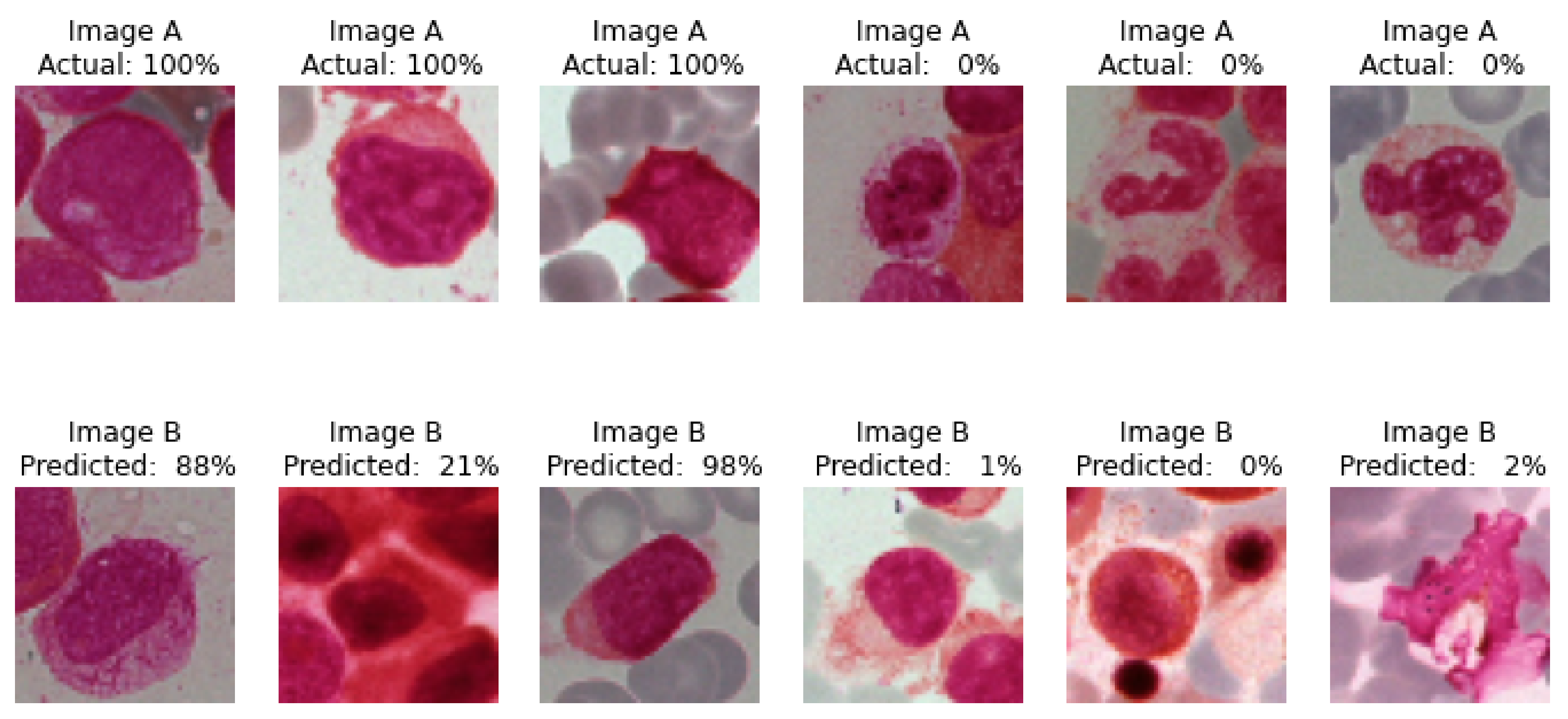
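
A Siamese network typically scores a pair of images by the distance between their embeddings. The sketch below shows one common choice, the Euclidean distance, mapped to a score in (0, 1]. Both the distance measure and the `1 / (1 + d)` mapping are assumptions made for illustration; the measure actually used for the similarity figure is not stated here, and the function names are hypothetical.

```python
import numpy as np

def euclidean_distance(emb_a, emb_b):
    """Euclidean (L2) distance between two embedding vectors."""
    return float(np.linalg.norm(np.asarray(emb_a, dtype=float) - np.asarray(emb_b, dtype=float)))

def similarity_score(emb_a, emb_b):
    """Map distance to (0, 1]; 1.0 means the embeddings are identical (assumed mapping)."""
    return 1.0 / (1.0 + euclidean_distance(emb_a, emb_b))

print(euclidean_distance([0.0, 0.0], [3.0, 4.0]))  # 5.0
print(similarity_score([1.0, 2.0], [1.0, 2.0]))    # 1.0
```

Under this mapping, images of the same cell class would be expected to score closer to 1 than images of different classes.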

4.1.3. Actual and Predicted Class Images

4.1.4. Confusion Matrix

4.1.5. Evaluation Metrics

4.1.6. Comparison among Various Models Used

4.1.7. Confusion Matrix

5. Discussion and Future Scope

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Acknowledgments

Conflicts of Interest

References

- Guo, L.; Huang, P.; Huang, D.; Li, Z.; She, C.; Guo, Q.; Zhang, Q.; Li, J.; Ma, Q.; Li, J. A classification method to classify bone marrow cells with class imbalance problem. Biomed. Signal Process. Control 2022, 72, 103296.

- Chandradevan, R.; Aljudi, A.A.; Drumheller, B.R.; Kunananthaseelan, N.; Amgad, M.; Gutman, D.A.; Cooper, L.A.D.; Jaye, D.L. Machine-based detection and classification for bone marrow aspirate differential counts: Initial development focusing on nonneoplastic cells. Lab. Investig. 2020, 100, 98–109.

- Boes, K.; Durham, A. Bone Marrow, Blood Cells, and the Lymphoid/Lymphatic System. Pathol. Basis Vet. Dis. 2017, 724–804.e2.

- Theera-Umpon, N. White blood cell segmentation and classification in microscopic bone marrow images. In Proceedings of the International Conference on Fuzzy Systems and Knowledge Discovery, Changsha, China, 27–29 August 2005; Springer: Berlin/Heidelberg, Germany, 2005; pp. 787–796.

- Wang, C.W.; Huang, S.C.; Lee, Y.C.; Shen, Y.J.; Meng, S.I.; Gaol, J.L. Deep learning for bone marrow cell detection and classification on whole-slide images. Med. Image Anal. 2022, 75, 102270.

- Dorini, L.B.; Minetto, R.; Leite, N.J. White blood cell segmentation using morphological operators and scale-space analysis. In Proceedings of the XX Brazilian Symposium on Computer Graphics and Image Processing (SIBGRAPI 2007), Belo Horizonte, Brazil, 7–10 October 2007; pp. 294–304.

- Wang, W.; Song, H. Cell cluster image segmentation on form analysis. In Proceedings of the Third International Conference on Natural Computation (ICNC 2007), Haikou, China, 24–27 August 2007; Volume 4, pp. 833–836.

- Ma, L.; Shuai, R.; Ran, X.; Liu, W.; Ye, C. Combining DC-GAN with ResNet for blood cell image classification. Med. Biol. Eng. Comput. 2020, 58, 1251–1264.

- Vyshnav, M.T.; Sowmya, V.; Gopalakrishnan, E.A.; Menon, V.K. Deep learning based approach for multiple myeloma detection. In Proceedings of the 2020 11th International Conference on Computing, Communication and Networking Technologies (ICCCNT), Kharagpur, India, 1–3 July 2020; pp. 1–7.

- Waris, A.; Niazi, I.K.; Jamil, M.; Englehart, K.; Jensen, W.; Kamavuako, E.N. Multiday evaluation of techniques for EMG-based classification of hand motions. IEEE J. Biomed. Health Inform. 2018, 23, 1526–1534.

- Acevedo, A.; Merino, A.; Boldú, L.; Molina, Á.; Alférez, S.; Rodellar, J. A new convolutional neural network predictive model for the automatic recognition of hypogranulated neutrophils in myelodysplastic syndromes. Comput. Biol. Med. 2021, 134, 104479.

- Deng, Y.; Julaiti, A.; Ran, W.; He, Y. Bone marrow mesenchymal stem cells-derived exosomal microRNA-19b-3p targets SOCS1 to facilitate progression of esophageal cancer. Life Sci. 2021, 278, 119491.

- Sarikhani, M.; Firouzamandi, M. Cellular senescence in cancers: Relationship between bone marrow cancer and cellular senescence. Mol. Biol. Rep. 2022, 49, 1–10.

- Mori, J.; Kaji, S.; Kawai, H.; Kida, S.; Tsubokura, M.; Fukatsu, M.; Harada, K.; Noji, H.; Ikezoe, T.; Maeda, T.; et al. Assessment of dysplasia in bone marrow smear with convolutional neural network. Sci. Rep. 2020, 10, 1–8.

- Jin, H.; Fu, X.; Cao, X.; Sun, M.; Wang, X.; Zhong, Y.; Yang, S.; Qi, C.; Peng, B.; He, X.; et al. Developing and preliminary validating an automatic cell classification system for bone marrow smears: A pilot study. J. Med. Syst. 2020, 44, 1–10.

- Warnat-Herresthal, S.; Perrakis, K.; Taschler, B.; Becker, M.; Baßler, K.; Beyer, M.; Günther, P.; Schulte-Schrepping, J.; Seep, L.; Klee, K.; et al. Scalable prediction of acute myeloid leukemia using high-dimensional machine learning and blood transcriptomics. iScience 2020, 23, 100780.

- Hellmich, C.; Moore, J.A.; Bowles, K.M.; Rushworth, S.A. Bone marrow senescence and the microenvironment of hematological malignancies. Front. Oncol. 2020, 10, 230.

- Radhachandran, A.; Garikipati, A.; Iqbal, Z.; Siefkas, A.; Barnes, G.; Hoffman, J.; Mao, Q.; Das, R. A machine learning approach to predicting risk of myelodysplastic syndrome. Leuk. Res. 2021, 109, 106639.

- Liu, C.; Billet, S.; Choudhury, D.; Cheng, R.; Haldar, S.; Fernandez, A.; Biondi, S.; Liu, Z.; Zhou, H.; Bhowmick, N.A. Bone marrow mesenchymal stem cells interact with head and neck squamous cell carcinoma cells to promote cancer progression and drug resistance. Neoplasia 2021, 23, 118–128.

- Truong, K.H.; Minh, D.N.; Trong, L.D. Automatic White Blood Cell Classification Using the Combination of Convolution Neural Network and Support Vector Machine. In Proceedings of the International Conference on Hybrid Intelligent Systems, Virtual Event, India, 14–16 December 2020; Springer: Cham, Switzerland, 2020; pp. 720–728.

- Matek, C.; Krappe, S.; Münzenmayer, C.; Haferlach, T.; Marr, C. Highly accurate differentiation of bone marrow cell morphologies using deep neural networks on a large image data set. Blood J. Am. Soc. Hematol. 2021, 138, 1917–1927.

- Matek, C.; Krappe, S.; Münzenmayer, C.; Haferlach, T.; Marr, C. An Expert-Annotated Dataset of Bone Marrow Cytology in Hematologic Malignancies [Data set]. Cancer Imaging Arch. 2021.

- Clark, K.; Vendt, B.; Smith, K.; Freymann, J.; Kirby, J.; Koppel, P.; Moore, S.; Phillips, S.; Maffitt, D.; Pringle, M.; et al. The Cancer Imaging Archive (TCIA): Maintaining and Operating a Public Information Repository. J. Digit. Imaging 2013, 26, 1045–1057.

| Reference | Algorithm/Model Used | Performance Metric | Number of Bone Marrow Classes/Labels | Reported Value |
|---|---|---|---|---|
| 7 | DC-GAN + ResNet | Accuracy | 4 (eosinophils, lymphocytes, monocytes and neutrophils) | 91.70% |
| 8 | CNN + VGG16 | Accuracy | 6 (neutrophils, eosinophils, basophils, monocytes, lymphocytes (T and B cells)) | 94% |
| 9 | ROI Processing + DL | Recall | 16 | 84.20% |
| 10 | RCNN | Recall | 85 images | 92.68% |
| 11 | Syn-ADHA | Precision | 16 | 87.12% |
| 18 | XGBoost | Accuracy | 790,470 images | 88% |
| 10 | U-Net | Recall | 85 images | 73.17% |
| 20 | CNN + SVM | Accuracy | 16 | 97.80% |

| S. No. | Class Label Acronym | Class Label Description |
|---|---|---|
| 1 | ABE | Abnormal eosinophil |
| 2 | ART | Artefact |
| 3 | BAS | Basophil |
| 4 | BLA | Blast |
| 5 | EBO | Erythroblast |
| 6 | EOS | Eosinophil |
| 7 | FGC | Faggot cell |
| 8 | HAC | Hairy cell |
| 9 | KSC | Smudge cell |
| 10 | LYI | Immature lymphocyte |
| 11 | LYT | Lymphocyte |
| 12 | MMZ | Metamyelocyte |
| 13 | MON | Monocyte |
| 14 | MYB | Myelocyte |
| 15 | NGB | Band neutrophil |
| 16 | NGS | Segmented neutrophil |
| 17 | NIF | Not identifiable |
| 18 | PEB | Proerythroblast |
| 19 | PLM | Plasma cell |
| 20 | PMO | Promyelocyte |
| 21 | OTH | Other cell |

| Cell Class | Precision | Recall | F1-Score | Support |
|---|---|---|---|---|
| LYI | 1.00 | 0.67 | 0.81 | 553 |
| PEB | 1.00 | 0.77 | 0.87 | 2261 |
| FGC | 1.00 | 0.56 | 0.71 | 532 |
| MYB | 1.00 | 0.90 | 0.94 | 4660 |
| OTH | 1.00 | 0.69 | 0.81 | 651 |
| BLA | 0.99 | 0.94 | 0.96 | 8076 |
| MMZ | 0.99 | 0.79 | 0.88 | 2455 |
| MON | 1.00 | 0.83 | 0.91 | 3113 |
| ART | 0.39 | 0.97 | 0.56 | 5065 |
| BAS | 1.00 | 0.36 | 0.53 | 778 |
| PMO | 0.97 | 0.94 | 0.96 | 7954 |
| EOS | 1.00 | 0.88 | 0.94 | 4265 |
| KSC | 1.00 | 0.75 | 0.86 | 529 |
| ABE | 1.00 | 0.61 | 0.02 | 492 |
| PLM | 1.00 | 0.91 | 0.95 | 5349 |
| NGB | 0.99 | 0.93 | 0.96 | 6817 |
| HAC | 1.00 | 0.35 | 0.52 | 740 |
| EBO | 0.97 | 0.97 | 0.97 | 17,526 |
| NIF | 1.00 | 0.81 | 0.90 | 2778 |
| LYT | 0.95 | 0.97 | 0.96 | 16,413 |
| NGS | 0.97 | 0.97 | 0.97 | 18,663 |
| Accuracy | | | 0.91 | 109,670 |
| Weighted average | 0.95 | 0.92 | 0.93 | 109,670 |
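
The F1-score column in the table above is the harmonic mean of precision and recall, and the weighted-average row weights each class by its support. A minimal Python sketch of both calculations follows; the function names are hypothetical, and because the table reports rounded precision and recall, recomputed values can differ from the printed F1-scores in the last digit.

```python
def f1_score(precision, recall):
    """Harmonic mean of precision and recall; defined as 0.0 when both are 0."""
    if precision + recall == 0:
        return 0.0
    return 2 * precision * recall / (precision + recall)

def weighted_average(values, supports):
    """Average of per-class values, each weighted by its number of samples."""
    total = sum(supports)
    return sum(v * s for v, s in zip(values, supports)) / total

# BLA row of the table above: precision 0.99, recall 0.94
print(round(f1_score(0.99, 0.94), 2))  # 0.96

# Toy example (not from the paper): two classes with supports 3 and 1
print(weighted_average([1.0, 0.5], [3, 1]))  # 0.875
```

The weighted average is dominated by the largest classes, so a high weighted score can coexist with weak scores on rare classes.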

| Cell Class | Precision | Recall | F1-Score | Support |
|---|---|---|---|---|
| LYI | 0.91 | 0.74 | 0.81 | 250 |
| PEB | 0.84 | 0.63 | 0.72 | 656 |
| FGC | 0.53 | 0.67 | 0.59 | 271 |
| MYB | 0.89 | 0.79 | 0.84 | 1322 |
| OTH | 0.21 | 0.65 | 0.31 | 237 |
| BLA | 0.89 | 0.89 | 0.89 | 2157 |
| MMZ | 0.81 | 0.63 | 0.71 | 711 |
| MON | 0.49 | 0.61 | 0.54 | 584 |
| ART | 0.92 | 0.94 | 0.93 | 3477 |
| BAS | 0.35 | 0.34 | 0.35 | 274 |
| PMO | 0.93 | 0.90 | 0.91 | 2234 |
| EOS | 0.93 | 0.81 | 0.87 | 1219 |
| KSC | 1.00 | 0.63 | 0.77 | 239 |
| ABE | 1.00 | 0.54 | 0.70 | 218 |
| PLM | 0.93 | 0.85 | 0.89 | 1515 |
| NGB | 0.85 | 0.87 | 0.86 | 1760 |
| HAC | 0.77 | 0.61 | 0.33 | 262 |
| EBO | 0.82 | 0.96 | 0.88 | 4249 |
| NIF | 0.82 | 0.70 | 0.76 | 751 |
| LYT | 0.62 | 0.95 | 0.75 | 3078 |
| NGS | 0.97 | 0.96 | 0.96 | 5373 |
| Accuracy | | | 0.84 | 30,837 |
| Weighted average | 0.93 | 0.91 | 0.91 | 30,837 |

| Class | Siamese | CNN-SVM | CNN-XGBoost |
|---|---|---|---|
| LYI | 0.81 | 0.00 | 0.00 |
| PEB | 0.72 | 0.00 | 0.08 |
| FGC | 0.59 | 0.00 | 0.00 |
| MYB | 0.84 | 0.00 | 0.00 |
| OTH | 0.31 | 0.00 | 0.00 |
| BLA | 0.89 | 0.00 | 0.30 |
| MMZ | 0.71 | 0.00 | 0.00 |
| MON | 0.54 | 0.00 | 0.00 |
| ART | 0.93 | 0.09 | 0.26 |
| BAS | 0.35 | 0.00 | 0.00 |
| PMO | 0.91 | 0.07 | 0.31 |
| EOS | 0.87 | 0.00 | 0.00 |
| KSC | 0.77 | 0.00 | 0.00 |
| ABE | 0.70 | 0.00 | 0.00 |
| PLM | 0.89 | 0.00 | 0.00 |
| NGB | 0.86 | 0.00 | 0.00 |
| HAC | 0.33 | 0.00 | 0.00 |
| EBO | 0.88 | 0.42 | 0.52 |
| NIF | 0.76 | 0.00 | 0.02 |
| LYT | 0.75 | 0.32 | 0.46 |
| NGS | 0.96 | 0.39 | 0.50 |
| Accuracy | 0.84 | 0.28 | 0.38 |
| Weighted average | 0.91 | 0.20 | 0.31 |

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2022 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Share and Cite

Ananthakrishnan, B.; Shaik, A.; Akhouri, S.; Garg, P.; Gadag, V.; Kavitha, M.S. Automated Bone Marrow Cell Classification for Haematological Disease Diagnosis Using Siamese Neural Network. Diagnostics 2023, 13, 112. https://doi.org/10.3390/diagnostics13010112
