A Comprehensive Review of Recent Advances in Artificial Intelligence for Dentistry E-Health

Abstract

1. Introduction

- A discussion of the problems unique to dental disease diagnosis and the challenges associated with current diagnostic techniques.

- A taxonomy that classifies the existing literature on X-ray and near-infrared imaging and identifies current trends.

- An in-depth analysis of recently employed techniques in dentistry, providing a systematic understanding of advances in the field.

- Performance analysis of the current approaches on existing benchmark datasets.

- Recommendations and future directions towards the standardization of artificial intelligence in the field of dental medicine.

2. Materials and Methods

2.1. Protocol

2.2. Electronic Search Strategy

2.3. Eligibility Criteria

2.3.1. Inclusion Criteria

- Timeline: manuscripts from the last fourteen years (2009–2022) focused on the application of artificial neural networks, machine learning, and deep learning in dentistry.

- Language: manuscripts that are available in English were included irrespective of country of origin.

- Data and Outcome: studies with proper mention of datasets used along with predictive and measurable outcomes for quantification of the proposed model.

2.3.2. Exclusion Criteria

- Type of Data Used: studies without clear information on data modalities.

- Methodology: studies without sufficient details of the computer vision, machine-learning, and deep-learning methods and techniques employed.

- Outcome: studies that did not report measurable outcomes.
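The inclusion and exclusion criteria above can be read as a single screening predicate. The following is a minimal sketch, assuming each candidate study has already been coded by a reviewer into a handful of yes/no fields; the `Study` record and its field names are hypothetical and are not part of the review protocol.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Study:
    """Hypothetical screening record for one candidate manuscript."""
    year: int
    language: str
    reports_dataset: bool             # dataset used is properly mentioned
    reports_measurable_outcome: bool  # predictive, measurable outcomes reported
    modality_described: bool          # data modality is clearly stated
    method_described: bool            # CV/ML/DL method is sufficiently detailed


def is_eligible(study: Study) -> bool:
    """Apply the timeline, language, data, method, and outcome criteria."""
    return (
        2009 <= study.year <= 2022
        and study.language.strip().lower() == "english"
        and study.reports_dataset
        and study.reports_measurable_outcome
        and study.modality_described
        and study.method_described
    )


example = Study(
    year=2019,
    language="English",
    reports_dataset=True,
    reports_measurable_outcome=True,
    modality_described=True,
    method_described=True,
)
print(is_eligible(example))  # True
```

Keeping the criteria in one function makes it straightforward to log which studies were rejected and to re-run the screening if a criterion changes.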

2.4. Study Selection and Items Collected

3. Imaging Modalities for Dental Disease Diagnosis

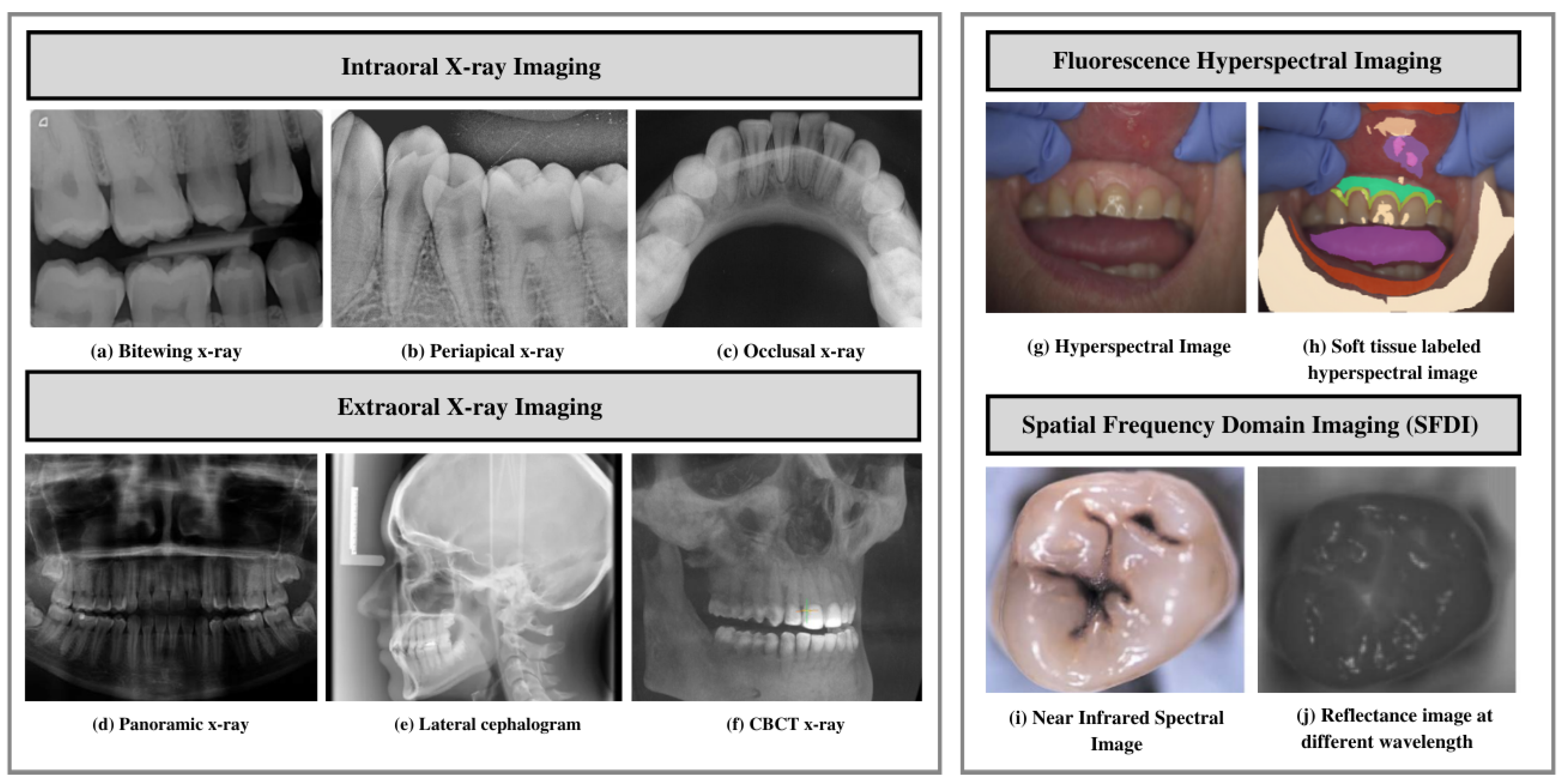

3.1. X-ray Imaging Systems

3.1.1. Intraoral X-ray Imaging

- A bitewing X-ray gives a detailed view of the maxillary and mandibular dental arches in a specific region, together with the supporting bone. Bitewing radiographs aid in detecting tooth decay, tracking changes in its extent, and identifying restorations.

- A periapical X-ray shows whole teeth, from crown to root, in one of the two dental arches. The radiograph allows clinicians to detect problems in a specific set of teeth, identify root structure abnormalities, and examine the surrounding bone structure.

- An occlusal X-ray shows the positioning and development of the teeth in the maxillary or mandibular arch.

3.1.2. Extraoral X-ray Imaging

- On a single radiograph, a panoramic X-ray gives a two-dimensional view of the oral cavity including both the maxilla and mandible. These types of X-rays help identify impacted teeth and diagnose dental tumors [32].

- Cone-beam computed tomography (CBCT) addresses several drawbacks of conventional radiography. This type of imaging renders interior body structures as three-dimensional (3-D) images and enables the identification of fractures and tumors in the facial bones. It also helps surgeons avoid post-surgical complications [35].

3.2. Near Infrared Imaging Systems

- A fluorescence hyperspectral imaging system is a non-contact approach to dental tissue diagnostics. It generates a sizeable amount of raw data, which makes it suitable for computer vision processing [39]. This imaging system combines spatial and spectral information, enabling dentists to obtain a precise optical characterization of dental issues, including dental plaque. The images are captured using a line-scanning camera with a 400–1000 nm spectral range, a 5 nm sampling interval, and a spatial resolution of 22 µm. In addition, the hyperspectral imaging modality helps assess the severity of dental caries [40].

- Spatial frequency domain imaging (SFDI) is a quantitative imaging technique [41] that separates the scattering and absorption components of a sample's optical response. This imaging modality relies on the modulation depth of projected fringe patterns at varying frequencies and phases.
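As a quick check on the acquisition parameters quoted above, a 400–1000 nm range sampled every 5 nm corresponds to 121 spectral bands per pixel. The sketch below works this out; the function name and the 512 × 512 spatial size are illustrative assumptions, not properties of the cited system.

```python
def spectral_band_centers(start_nm: float, stop_nm: float, step_nm: float) -> list[float]:
    """Return band-centre wavelengths for an inclusive, evenly sampled range."""
    if step_nm <= 0 or stop_nm < start_nm:
        raise ValueError("expected step_nm > 0 and stop_nm >= start_nm")
    n_bands = int(round((stop_nm - start_nm) / step_nm)) + 1
    return [start_nm + i * step_nm for i in range(n_bands)]


bands = spectral_band_centers(400.0, 1000.0, 5.0)

# Hypothetical spatial size, chosen only to illustrate the data volume.
height_px, width_px = 512, 512
cube_shape = (height_px, width_px, len(bands))
values_per_cube = height_px * width_px * len(bands)

print(len(bands))   # 121
print(cube_shape)   # (512, 512, 121)
```

Each pixel therefore carries a 121-value spectrum, which is why a single hyperspectral acquisition is far larger than a grayscale radiograph of the same spatial size.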

3.3. Spectral Ranges

- Near-infrared, mid-infrared, and far-infrared: These spectral ranges provide valuable information about the chemical composition and molecular structure of dental tissues, which helps in the detection and characterization of dental lesions. The infrared spectrum is divided into three regions: near-infrared (NIR), ranging between 4000 and 14,000 cm−1; mid-infrared (MIR), ranging between 400 and 4000 cm−1; and far-infrared (FIR), ranging between 25 and 400 cm−1 [42].

- Radio frequency (RF) range: Non-ionizing radio frequency pulses spanning a range of frequencies are applied in the presence of a controlled magnetic field to generate magnetic resonance images (MRI) [46]. MRI has found applications in implant dentistry, where it provides more precise information on bone density, contour, and bone height [47].
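The wavenumber ranges above map to wavelengths through λ (nm) = 10^7 / ν (cm−1), which makes them easier to compare with the nanometre figures quoted for the imaging systems. A minimal sketch of that conversion follows; the dictionary and function names are illustrative.

```python
def wavenumber_to_wavelength_nm(wavenumber_cm1: float) -> float:
    """Convert a wavenumber in cm^-1 to a wavelength in nm (1 cm = 1e7 nm)."""
    if wavenumber_cm1 <= 0:
        raise ValueError("wavenumber must be positive")
    return 1e7 / wavenumber_cm1


# Infrared regions as (low, high) wavenumber bounds in cm^-1, as listed in the text.
INFRARED_REGIONS_CM1 = {
    "near": (4000.0, 14000.0),
    "mid": (400.0, 4000.0),
    "far": (25.0, 400.0),
}


def region_in_nm(name: str) -> tuple[float, float]:
    """Return (shortest, longest) wavelength in nm for a named region."""
    low_cm1, high_cm1 = INFRARED_REGIONS_CM1[name]
    # A higher wavenumber means a shorter wavelength, so the bounds swap.
    return (wavenumber_to_wavelength_nm(high_cm1), wavenumber_to_wavelength_nm(low_cm1))


for region in INFRARED_REGIONS_CM1:
    shortest, longest = region_in_nm(region)
    print(f"{region}: {shortest:.1f} nm to {longest:.1f} nm")
```

Under this conversion the near-infrared band spans roughly 714 nm to 2500 nm, the mid-infrared 2500 nm to 25,000 nm, and the far-infrared 25,000 nm to 400,000 nm.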

3.4. Challenges in Automated Dental Disease Diagnosis

- Limited Data Availability and Comprehensiveness: Due to data protection concerns, medical data, and dental data in particular, are not readily accessible. Moreover, the datasets that are available are often poorly structured and relatively small, which hinders the application of artificial intelligence techniques [11]. Thus, data availability affects the extent to which deep-learning-based approaches can be employed in this field.

- Data Annotation: Medical data annotation requires specialized knowledge from healthcare professionals. Moreover, data labeling requires an adequate workforce, and the process is cost intensive. Without a steady supply of accurately annotated data, deep-learning algorithms cannot make correct interpretations and accurate predictions [48].

- Limited Generalizability: Varying imaging characteristics limit the generalizability of deep-learning models [49]. The sources of these generalizability deficits must be identified to facilitate the development of improved modeling strategies.

- Class Imbalance: Normal samples occur far more often than abnormal samples, which leads to class imbalance [50]. Imbalanced data bias learning toward the majority class.

- External Validation: Lack of external validation leads to issues in the replication and transparency of AI-based models within dentistry. The community standards for model sharing, benchmarking, and reproducibility must be adhered to [51].

- Interpretability: A lack of interpretability and transparency makes it difficult to predict failures. Interpretability must be ensured to build trust between clinicians and the technology, and to generalize algorithms for specific tasks [8].

- Expertise Gap: The ability to make accurate diagnoses and treatment plans relies on expertise derived from extensive knowledge and practical experience. AI may not be able to fully replicate the nuanced decision-making of experienced clinicians. Bridging the gap between human expertise and AI capabilities poses a significant challenge in automated dental disease diagnosis.

- Sensitivity and Specificity Limitations: Due to variations in image quality and anatomical structures, AI models may have limitations in achieving high sensitivity and specificity.

- Image Interpretation Issues: The overlapping structures and presence of artifacts make interpreting dental images a daunting task. AI models should overcome these challenges to ensure accurate and reliable interpretation of dental images.

- Variations in Pathology Presentation: Dental diseases manifest in different ways, varying in size, shape, or appearance. AI models must account for these variations to detect and classify different pathologies accurately.
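One common mitigation for the class imbalance described above is to weight each class inversely to its frequency during training. The sketch below computes such weights with the "balanced" heuristic, n_samples / (n_classes × class_count); the label names and counts are made up for illustration, and this is one option among several rather than a method prescribed by the works reviewed here.

```python
from collections import Counter
from typing import Hashable, Sequence


def inverse_frequency_weights(labels: Sequence[Hashable]) -> dict[Hashable, float]:
    """Weight each class by n_samples / (n_classes * class_count)."""
    if not labels:
        raise ValueError("labels must not be empty")
    counts = Counter(labels)
    n_samples = len(labels)
    n_classes = len(counts)
    return {cls: n_samples / (n_classes * count) for cls, count in counts.items()}


# Hypothetical dataset: 90 normal radiographs and 10 showing caries.
labels = ["normal"] * 90 + ["caries"] * 10
weights = inverse_frequency_weights(labels)

print(weights["caries"])            # 5.0
print(round(weights["normal"], 4))  # 0.5556
```

With these weights, an error on a minority-class sample costs nine times as much as an error on a majority-class sample, which counteracts the bias toward the majority class. The resulting dictionary can typically be passed to a weighted loss function.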

4. Dataset and Evaluation Metrics

4.1. Benchmarks and Datasets

4.1.1. ISBI2015 Grand Challenge Dental Dataset

- The Cephalogram Dataset [52] consists of 400 cephalograms taken from 400 patients. The images were acquired using a CRANEX Excel Ceph machine and are saved in TIFF format. Two experienced doctors evaluated the images and manually marked 19 landmarks on each to generate the ground truth. The size of each image is 1935 × 2400 pixels. The goal of this dataset is to enable researchers to make accurate landmark predictions for practical cephalometric analysis.

- The Bitewing Radiograph Dataset [53] comprises 120 bitewing radiographic images collected from 120 patients. The annotations mark seven areas of the tooth, including caries, in different colors [54,55]. The images were marked manually after being reviewed by experienced medical doctors. The dataset aims to enable researchers to investigate suitable automated segmentation methods for identifying the seven areas of the tooth.

| Dataset (Ref) | Size and Modality | Disease Category | Format | Other Qualities | Research Challenges |
|---|---|---|---|---|---|
| ISBI-2015 grand challenge dental dataset [53] | 120 bitewing images; 400 cephalograms | Dental caries (enamel, dentin, pulp); landmark detection | JPEG; TIFF | High data variance | Feature extraction and classification, caries detection, and landmark identification |
| Panoramic dental X-ray dataset [56] | 2000 panoramic radiographs | Intraosseous mandible lesions | BMP, 2900 × 1250 pixels | A few low-quality images (blurred or malposed) | Mandible segmentation; identification of anatomical structures |
| UFBA-UESC dental image dataset [57] | 1500 panoramic radiographs | Restorations and dental appliances | JPEG, 1991 × 1127 pixels | High data variability and imbalance in terms of number of images and number of pixels per class | Semantic segmentation |
| Tufts multimodal panoramic X-ray dataset [58] | 1000 panoramic radiographs | Tooth abnormalities | Images and ground truth masks: TIFF/JPEG, 840 × 1615 pixels | Instance segmentation and numbering; short textual descriptions of the abnormalities present in each radiograph; gaze plots from eye-tracking data | Image enhancement, tooth segmentation, and abnormality detection |
| Oral and dental spectral image database (ODSI-DB) [59] | 316 spectral images with 215 annotation masks | Occlusal surfaces of lower and upper teeth, face surrounding the mouth, and oral mucosa | Multipage TIFF, 1392 × 1040 pixels | Highly imbalanced in terms of number of images and number of pixels per class | Organ segmentation |

4.1.2. Panoramic Dental X-ray Dataset [56]

4.1.3. UFBA-UESC Dental Image Data Set [57]

4.1.4. Tufts Multimodal Panoramic X-ray Dataset [58]

4.2. Evaluation Metrics

- True Positive (TP): both the ground truth and method prediction correspond to positive.

- True Negative (TN): both the ground truth and method prediction correspond to negative.

- False Positive (FP): the ground truth is negative, but method prediction corresponds to positive.

- False Negative (FN): the ground truth is positive, but method prediction corresponds to negative.
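From these four counts, the usual performance measures follow directly. The sketch below uses the standard textbook definitions of accuracy, sensitivity, specificity, precision, and the F1 score (which equals the Dice coefficient for binary masks); the counts in the example are invented, and the selection of metrics is an assumption about what is most relevant here.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class ConfusionCounts:
    tp: int  # ground truth positive, predicted positive
    tn: int  # ground truth negative, predicted negative
    fp: int  # ground truth negative, predicted positive
    fn: int  # ground truth positive, predicted negative


def _ratio(numerator: float, denominator: float) -> float:
    """Return numerator / denominator, or NaN when the denominator is zero."""
    return numerator / denominator if denominator else float("nan")


def metrics(c: ConfusionCounts) -> dict[str, float]:
    """Compute common binary classification metrics from confusion counts."""
    total = c.tp + c.tn + c.fp + c.fn
    return {
        "accuracy": _ratio(c.tp + c.tn, total),
        "sensitivity": _ratio(c.tp, c.tp + c.fn),  # recall, true positive rate
        "specificity": _ratio(c.tn, c.tn + c.fp),  # true negative rate
        "precision": _ratio(c.tp, c.tp + c.fp),
        "f1_dice": _ratio(2 * c.tp, 2 * c.tp + c.fp + c.fn),
    }


# Hypothetical counts for a caries detector evaluated on 100 tooth surfaces.
result = metrics(ConfusionCounts(tp=40, tn=45, fp=5, fn=10))
for name, value in result.items():
    print(f"{name}: {value:.3f}")
```

For these counts, accuracy is 0.850, sensitivity 0.800, and specificity 0.900. On imbalanced data, accuracy alone can be misleading, so sensitivity and specificity are usually reported alongside it.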

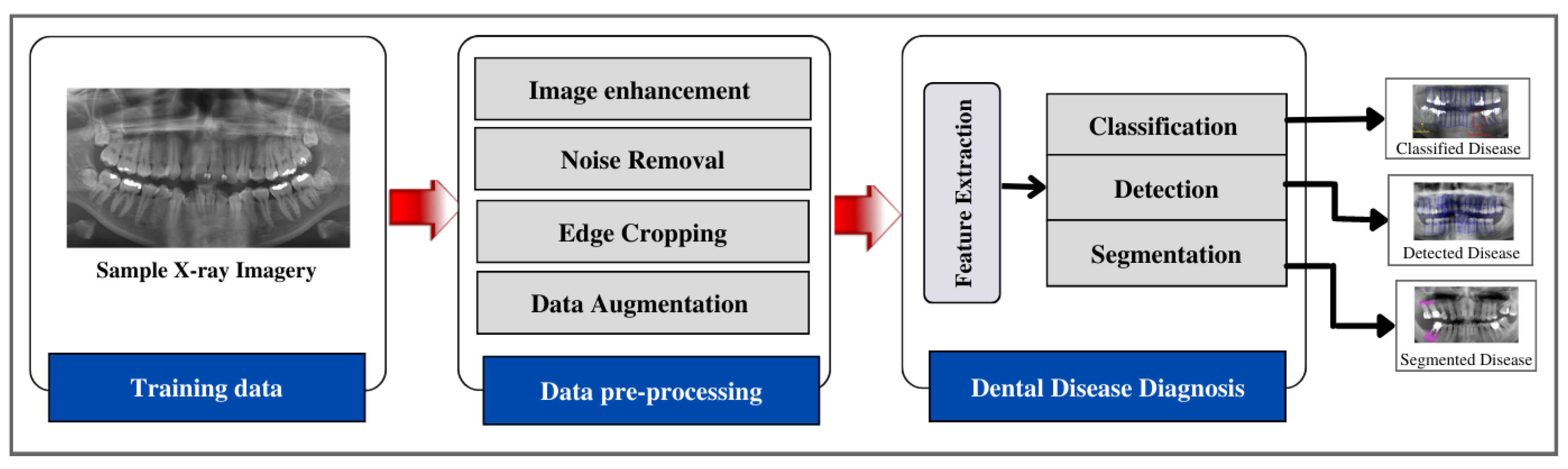

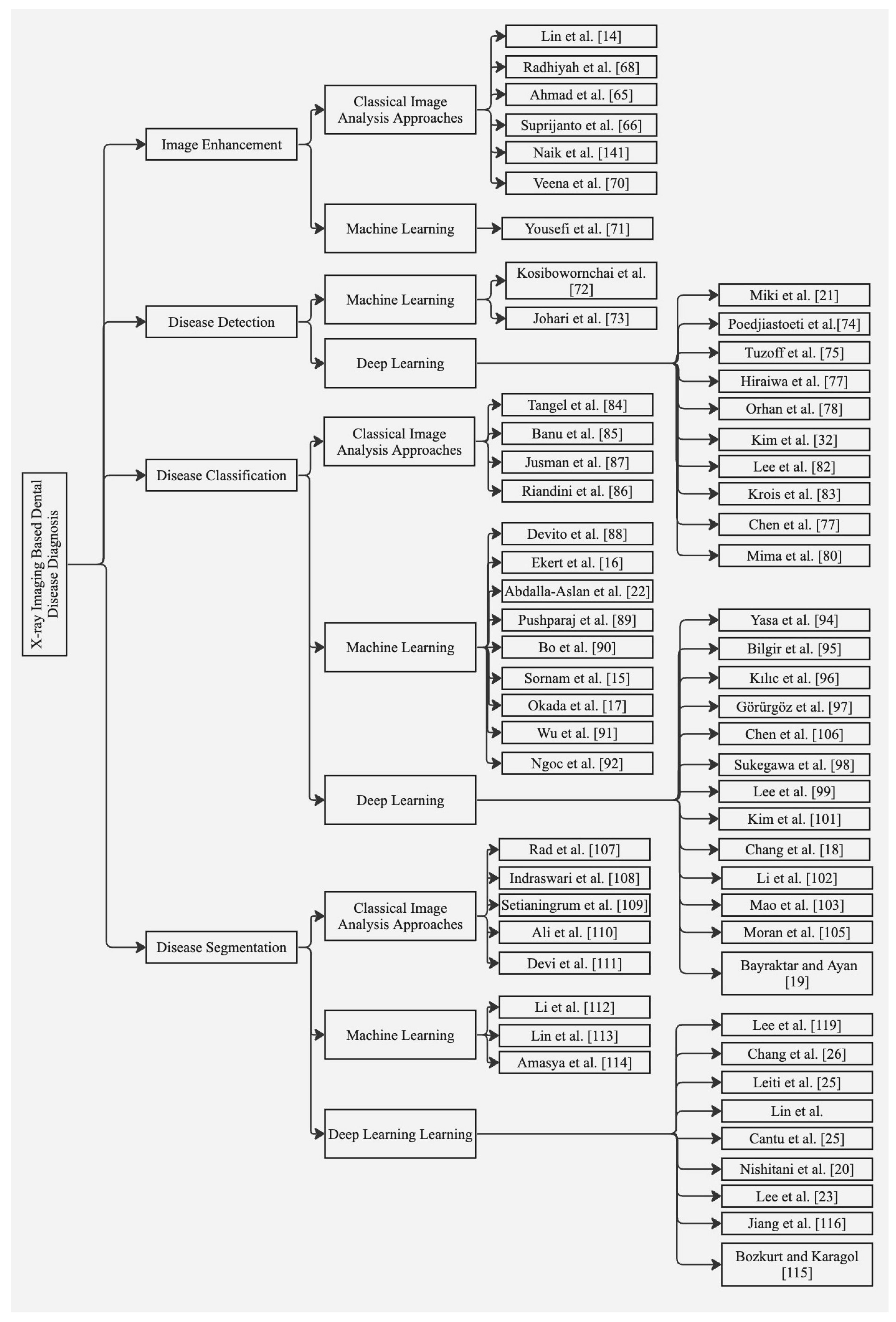

5. Approaches to Dental Disease Diagnosis Using X-ray Imaging

5.1. Image Enhancement

5.2. Disease Detection

5.3. Disease Classification

5.4. Disease Segmentation

5.5. Benchmarking of X-ray Based Dental Disease Diagnosis Approaches

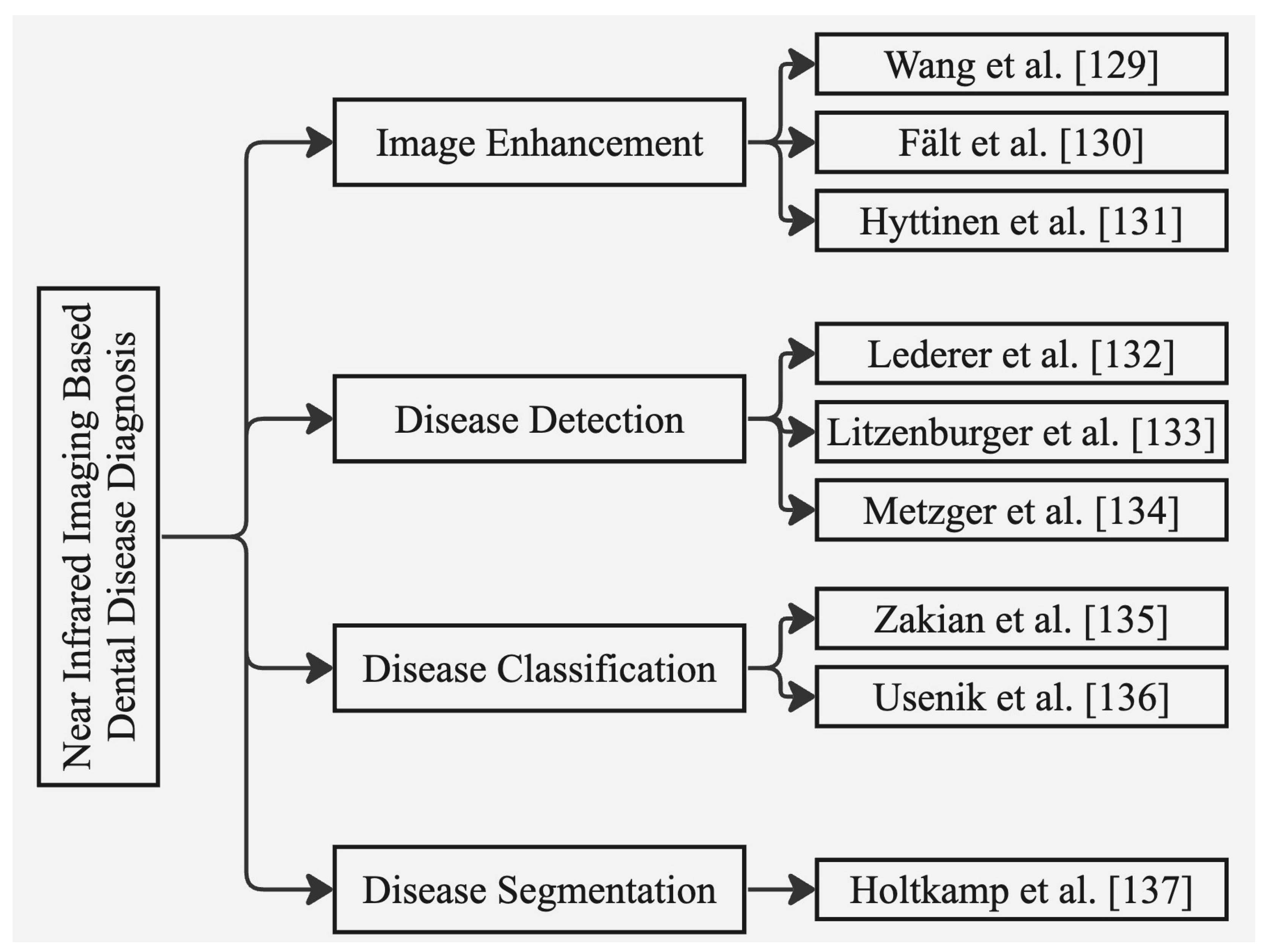

6. Approaches to Dental Disease Diagnosis Using Near Infrared Imaging Systems

6.1. Image Enhancement

6.2. Disease Detection

6.3. Disease Classification

6.4. Disease Segmentation

6.5. Benchmarking of Near Infrared Based Dental Disease Diagnosis

6.6. Assessment of Risk Bias

7. Ethical Considerations and Future Research Directions

7.1. Ethical Considerations

7.2. Research Gaps and Future Research Directions

7.2.1. Data Insufficiency

7.2.2. Class Imbalance Learning

7.2.3. Personalized Dental Medicine

7.2.4. Tele-Dentistry

7.2.5. Internet of Dental Things (IoDT)

8. Conclusions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest

Abbreviations

| Abbreviation | Definition |
|---|---|
| PRISMA | Preferred Reporting Items for Systematic Reviews and Meta-Analyses |
| AI | Artificial Intelligence |
| CNN | Convolutional Neural Network |
| SVM | Support Vector Machine |
| DL | Deep Learning |
| CAD | Computer-Aided Diagnosis |
| ROI | Region of Interest |
| CBCT | Cone-Beam Computed Tomography |
| MLP | Multilayer Perceptron |
| DNN | Deep Neural Network |
| ANN | Artificial Neural Network |
| RF | Random Forest |
| KNN | K-Nearest Neighbors |
| LDA | Linear Discriminant Analysis |
| PCA | Principal Component Analysis |
| ROC | Receiver Operating Characteristic |
| AUC | Area Under the Curve |
| TP | True Positive |
| TN | True Negative |
| FP | False Positive |
| FN | False Negative |

References

- Sodhi, G.K.; Kaur, S.; Gaba, G.S.; Kansal, L.; Sharma, A.; Dhiman, G. COVID-19: Role of Robotics, Artificial Intelligence and Machine Learning During the Pandemic. Curr. Med. Imaging 2022, 18, 124–134.

- Artificial Intelligence in Medicine | IBM. Available online: https://www.ibm.com/topics/artificial-intelligence-medicine/ (accessed on 25 August 2022).

- Rahman, M.M.; Khatun, F.; Uzzaman, A.; Sami, S.I.; Bhuiyan, M.A.A.; Kiong, T.S. A Comprehensive Study of Artificial Intelligence and Machine Learning Approaches in Confronting the Coronavirus (COVID-19) Pandemic. Int. J. Health Serv. 2021, 51, 446–461.

- Hamet, P.; Tremblay, J. Artificial intelligence in medicine. Metabolism 2017, 69, S36–S40.

- Barua, P.D.; Muhammad Gowdh, N.F.; Rahmat, K.; Ramli, N.; Ng, W.L.; Chan, W.Y.; Kuluozturk, M.; Dogan, S.; Baygin, M.; Yaman, O.; et al. Automatic COVID-19 detection using exemplar hybrid deep features with X-ray images. Int. J. Environ. Res. Public Health 2021, 18, 8052.

- Tuncer, I.; Barua, P.D.; Dogan, S.; Baygin, M.; Tuncer, T.; Tan, R.S.; Yeong, C.H.; Acharya, U.R. Swin-textural: A novel textural features-based image classification model for COVID-19 detection on chest computed tomography. Inform. Med. Unlocked 2023, 36, 101158.

- Meghil, M.M.; Rajpurohit, P.; Awad, M.E.; McKee, J.; Shahoumi, L.A.; Ghaly, M. Artificial intelligence in dentistry. Dent. Rev. 2022, 2, 100009.

- Shan, T.; Tay, F.; Gu, L. Application of Artificial Intelligence in Dentistry. J. Dent. Res. 2020, 100, 232–244.

- Khanagar, S.B.; Al-ehaideb, A.; Maganur, P.C.; Vishwanathaiah, S.; Patil, S.; Baeshen, H.A.; Sarode, S.C.; Bhandi, S. Developments, application, and performance of artificial intelligence in dentistry – A systematic review. J. Dent. Sci. 2021, 16, 508–522.

- Nguyen, T.T.; Larrivée, N.; Lee, A.; Bilaniuk, O.; Durand, R. Use of artificial intelligence in dentistry: Current clinical trends and research advances. J. Can. Dent. Assoc. 2021, 87, 1488–2159.

- Schwendicke, F.; Samek, W.; Krois, J. Artificial Intelligence in Dentistry: Chances and Challenges. J. Dent. Res. 2020, 99, 769–774.

- Carrillo-Perez, F.; Pecho, O.E.; Morales, J.C.; Paravina, R.D.; Bona, A.D.; Ghinea, R.; Pulgar, R.; del Mar Pérez, M.; Herrera, L.J. Applications of artificial intelligence in dentistry: A comprehensive review. J. Esthet. Restor. Dent. 2021, 34, 259–280.

- Ossowska, A.; Kusiak, A.; Świetlik, D. Artificial Intelligence in Dentistry—Narrative Review. Int. J. Environ. Res. Public Health 2022, 19, 3449.

- Lin, X.; Hong, D.; Zhang, D.; Huang, M.; Yu, H. Detecting Proximal Caries on Periapical Radiographs Using Convolutional Neural Networks with Different Training Strategies on Small Datasets. Diagnostics 2022, 12, 1047.

- Sornam, M.; Prabhakaran, M. A new linear adaptive swarm intelligence approach using back propagation neural network for dental caries classification. In Proceedings of the 2017 IEEE International Conference on Power, Control, Signals and Instrumentation Engineering (ICPCSI), Chennai, India, 21–22 September 2017.

- Ekert, T.; Krois, J.; Meinhold, L.; Elhennawy, K.; Emara, R.; Golla, T.; Schwendicke, F. Deep Learning for the Radiographic Detection of Apical Lesions. J. Endod. 2019, 45, 917–922.e5.

- Okada, K.; Rysavy, S.; Flores, A.; Linguraru, M.G. Noninvasive differential diagnosis of dental periapical lesions in cone-beam CT scans. Med. Phys. 2015, 42, 1653–1665.

- Chang, J.; Chang, M.F.; Angelov, N.; Hsu, C.Y.; Meng, H.W.; Sheng, S.; Glick, A.; Chang, K.; He, Y.R.; Lin, Y.B.; et al. Application of deep machine learning for the radiographic diagnosis of periodontitis. Clin. Oral Investig. 2022, 26, 6629–6637.

- Bayraktar, Y.; Ayan, E. Diagnosis of interproximal caries lesions with deep convolutional neural network in digital bitewing radiographs. Clin. Oral Investig. 2021, 26, 623–632.

- Nishitani, Y.; Nakayama, R.; Hayashi, D.; Hizukuri, A.; Murata, K. Segmentation of teeth in panoramic dental X-ray images using U-Net with a loss function weighted on the tooth edge. Radiol. Phys. Technol. 2021, 14, 64–69.

- Miki, Y.; Muramatsu, C.; Hayashi, T.; Zhou, X.; Hara, T.; Katsumata, A.; Fujita, H. Classification of teeth in cone-beam CT using deep convolutional neural network. Comput. Biol. Med. 2017, 80, 24–29.

- Abdalla-Aslan, R.; Yeshua, T.; Kabla, D.; Leichter, I.; Nadler, C. An artificial intelligence system using machine-learning for automatic detection and classification of dental restorations in panoramic radiography. Oral Surg. Oral Med. Oral Pathol. Oral Radiol. 2020, 130, 593–602.

- Lee, J.H.; Han, S.S.; Kim, Y.H.; Lee, C.; Kim, I. Application of a fully deep convolutional neural network to the automation of tooth segmentation on panoramic radiographs. Oral Surg. Oral Med. Oral Pathol. Oral Radiol. 2020, 129, 635–642.

- Leite, A.F.; Gerven, A.V.; Willems, H.; Beznik, T.; Lahoud, P.; Gaêta-Araujo, H.; Vranckx, M.; Jacobs, R. Artificial intelligence-driven novel tool for tooth detection and segmentation on panoramic radiographs. Clin. Oral Investig. 2020, 25, 2257–2267.

- Cantu, A.G.; Gehrung, S.; Krois, J.; Chaurasia, A.; Rossi, J.G.; Gaudin, R.; Elhennawy, K.; Schwendicke, F. Detecting caries lesions of different radiographic extension on bitewings using deep learning. J. Dent. 2020, 100, 103425.

- Chang, H.J.; Lee, S.J.; Yong, T.H.; Shin, N.Y.; Jang, B.G.; Kim, J.E.; Huh, K.H.; Lee, S.S.; Heo, M.S.; Choi, S.C.; et al. Deep Learning Hybrid Method to Automatically Diagnose Periodontal Bone Loss and Stage Periodontitis. Sci. Rep. 2020, 10, 7531.

- Lee, C.T.; Kabir, T.; Nelson, J.; Sheng, S.; Meng, H.W.; Dyke, T.E.V.; Walji, M.F.; Jiang, X.; Shams, S. Use of the deep learning approach to measure alveolar bone level. J. Clin. Periodontol. 2021, 49, 260–269.

- Dental Imaging Systems – Cameras | X-rays | Scanners – Eclipse Dental Engineering Ltd. Available online: https://eclipse-dental.com/dental-blog-articles/dental-imaging-systems-–-cameras-x-rays-scanners/ (accessed on 4 August 2022).

- Dental X-Rays: Purpose, Procedure, and Risks. Available online: https://www.healthline.com/health/dental-x-rays (accessed on 4 August 2022).

- Intraoral Radiographs | FOR.org. Available online: https://www.for.org/en/treat/treatment-guidelines/edentulous/diagnostics/diagnostic-imaging/intra-oral-radiographs (accessed on 4 August 2022).

- Dental X-rays | WebMD. Available online: https://www.webmd.com/oral-health/dental-x-rays/ (accessed on 4 August 2022).

- Kim, J.; Lee, H.S.; Song, I.S.; Jung, K.H. DeNTNet: Deep Neural Transfer Network for the detection of periodontal bone loss using panoramic dental radiographs. Sci. Rep. 2019, 9, 17615.

- Helal, N.M.; Basri, O.A.; Baeshen, H.A. Significance of cephalometric radiograph in orthodontic treatment plan decision. J. Contemp. Dent. Pract. 2019, 20, 789–793.

- Rischen, R.J.; Breuning, K.H.; Bronkhorst, E.M.; Kuijpers-Jagtman, A.M. Records needed for orthodontic diagnosis and treatment planning: A systematic review. PLoS ONE 2013, 8, e74186.

- Patel, S.; Kanagasingam, S.; Mannocci, F. Cone Beam Computed Tomography (CBCT) in Endodontics. Dent. Update 2010, 37, 373–379.

- Kühnisch, J.; Söchtig, F.; Pitchika, V.; Laubender, R.; Neuhaus, K.W.; Lussi, A.; Hickel, R. In vivo validation of near-infrared light transillumination for interproximal dentin caries detection. Clin. Oral Investig. 2015, 20, 821–829.

- Simon, J.C.; Lucas, S.A.; Staninec, M.; Tom, H.; Chan, K.H.; Darling, C.L.; Fried, D. Transillumination and reflectance probes for in vivo near-IR imaging of dental caries. In Proceedings of the Lasers in Dentistry XX, San Francisco, CA, USA, 1–6 February 2014; Rechmann, P., Fried, D., Eds.; SPIE: Bellingham, WA, USA, 2014.

- Bussaneli, D.G.; Restrepo, M.; Boldieri, T.; Pretel, H.; Mancini, M.W.; Santos-Pinto, L.; Cordeiro, R.C.L. Assessment of a new infrared laser transillumination technology (808 nm) for the detection of occlusal caries—An in vitro study. Lasers Med. Sci. 2014, 30, 1873–1879.

- Thiem, D.G.; Römer, P.; Gielisch, M.; Al-Nawas, B.; Schlüter, M.; Plaß, B.; Kämmerer, P.W. Hyperspectral imaging and artificial intelligence to detect oral malignancy–part 1-automated tissue classification of oral muscle, fat and mucosa using a light-weight 6-layer deep neural network. Head Face Med. 2021, 17, 1–9.

- Bounds, A.D.; Girkin, J.M. Early stage dental caries detection using near infrared spatial frequency domain imaging. Sci. Rep. 2021, 11, 2433.

- Urban, B.E.; Subhash, H.M. Multimodal hyperspectral fluorescence and spatial frequency domain imaging for tissue health diagnostics of the oral cavity. Biomed. Opt. Express 2021, 12, 6954.

- Moraes, L.G.P.; Rocha, R.S.F.; Menegazzo, L.M.; Araújo, E.B.d.; Yukimito, K.; Moraes, J.C.S. Infrared spectroscopy: A tool for determination of the degree of conversion in dental composites. J. Appl. Oral Sci. 2008, 16, 145–149.

- Alasiri, R.A.; Algarni, H.A.; Alasiri, R.A. Ocular hazards of curing light units used in dental practice—A systematic review. Saudi Dent. J. 2019, 31, 173–180.

- Angmar-Månsson, B.; ten Bosch, J. Advances in methods for diagnosing coronal caries—A review. Adv. Dent. Res. 1993, 7, 70–79.

- Sadasiva, K.; Kumar, K.S.; Rayar, S.; Shamini, S.; Unnikrishnan, M.; Kandaswamy, D. Evaluation of the efficacy of visual, tactile method, caries detector dye, and laser fluorescence in removal of dental caries and confirmation by culture and polymerase chain reaction: An in vivo study. J. Pharm. Bioallied Sci. 2019, 11, S146.

- Idiyatullin, D.; Corum, C.; Moeller, S.; Prasad, H.S.; Garwood, M.; Nixdorf, D.R. Dental magnetic resonance imaging: Making the invisible visible. J. Endod. 2011, 37, 745–752.

- Niraj, L.K.; Patthi, B.; Singla, A.; Gupta, R.; Ali, I.; Dhama, K.; Kumar, J.K.; Prasad, M. MRI in dentistry-A future towards radiation free imaging–systematic review. J. Clin. Diagn. Res. 2016, 10, ZE14.

- Rasmussen, C.B.; Kirk, K.; Moeslund, T.B. The Challenge of Data Annotation in Deep Learning—A Case Study on Whole Plant Corn Silage. Sensors 2022, 22, 1596.

- Krois, J.; Cantu, A.G.; Chaurasia, A.; Patil, R.; Chaudhari, P.K.; Gaudin, R.; Gehrung, S.; Schwendicke, F. Generalizability of deep learning models for dental image analysis. Sci. Rep. 2021, 11, 6102.

- Li, D.C.; Liu, C.W.; Hu, S.C. A learning method for the class imbalance problem with medical data sets. Comput. Biol. Med. 2010, 40, 509–518.

- Aittokallio, T. What are the current challenges for machine learning in drug discovery and repurposing? Expert Opin. Drug Discov. 2022, 17, 423–425.

- Kaur, A.; Singh, C. Automatic cephalometric landmark detection using Zernike moments and template matching. Signal Image Video Process. 2013, 9, 117–132.

- Grand Challenges in Dental X-ray Image Analysis. 2014. Available online: http://www-o.ntust.edu.tw/ISBI2015/challenge2/index.html (accessed on 4 August 2022).

- Wang, C.W.; Huang, C.T.; Lee, J.H.; Li, C.H.; Chang, S.W.; Siao, M.J.; Lai, T.M.; Ibragimov, B.; Vrtovec, T.; Ronneberger, O.; et al. A benchmark for comparison of dental radiography analysis algorithms. Med. Image Anal. 2016, 31, 63–76.

- Reddy, P.K.; Kanakatte, A.; Gubbi, J.; Poduval, M.; Ghose, A.; Purushothaman, B. Anatomical Landmark Detection using Deep Appearance-Context Network. In Proceedings of the 2021 43rd Annual International Conference of the IEEE Engineering in Medicine & Biology Society (EMBC), Jalisco, Mexico, 1–5 November 2021.

- Panoramic Dental Xray Dataset|Kaggle. Available online: https://www.kaggle.com/datasets/daverattan/dental-xrary-tfrecords/ (accessed on 4 August 2022).

- Silva, G.; Oliveira, L.; Pithon, M. Automatic segmenting teeth in X-ray images: Trends, a novel data set, benchmarking and future perspectives. Expert Syst. Appl. 2018, 107, 15–31.

- The Tufts Dental Database. Available online: http://tdd.ece.tufts.edu/ (accessed on 4 August 2022).

- Hyttinen, J.; Fält, P.; Jäsberg, H.; Kullaa, A.; Hauta-Kasari, M. Oral and Dental Spectral Image Database—ODSI-DB. Appl. Sci. 2020, 10, 7246.

- Panetta, K.; Rajendran, R.; Ramesh, A.; Rao, S.; Agaian, S. Tufts Dental Database: A Multimodal Panoramic X-Ray Dataset for Benchmarking Diagnostic Systems. IEEE J. Biomed. Health Inform. 2022, 26, 1650–1659.

- Understanding Dice Coefficient. Available online: https://www.kaggle.com/code/yerramvarun/understanding-dice-coefficient/ (accessed on 4 August 2022).

- Karacan, M.H.; Yucebas, S.C. A Deep Learning Model with Attention Mechanism for Dental Image Segmentation. In Proceedings of the 2022 International Congress on Human-Computer Interaction, Optimization and Robotic Applications (HORA), Ankara, Turkey, 9–11 June 2022.

- Lin, P.; Lai, Y.; Huang, P. An effective classification and numbering system for dental bitewing radiographs using teeth region and contour information. Pattern Recognit. 2010, 43, 1380–1392.

- Lin, P.; Huang, P.; Cho, Y.; Kuo, C. An automatic and effective tooth isolation method for dental radiographs. Opto Electron. Rev. 2013, 21, 126–136.

- Ahmad, S.A.; Taib, M.N.; Khalid, N.E.A.; Taib, H. An Analysis of Image Enhancement Techniques for Dental X-ray Image Interpretation. Int. J. Mach. Learn. Comput. 2012, 2, 292–297.

- Suprijanto; Gianto; Juliastuti, E.; Azhari; Epsilawati, L. Image contrast enhancement for film-based dental panoramic radiography. In Proceedings of the 2012 International Conference on System Engineering and Technology (ICSET), Bandung, Indonesia, 11–12 September 2012.

- Widodo, H.B.; Soelaiman, A.; Ramadhani, Y.; Supriyanti, R. Calculating Contrast Stretching Variables in Order to Improve Dental Radiology Image Quality. IOP Conf. Ser. Mater. Sci. Eng. 2016, 105, 012002.

- Radhiyah, A.; Harsono, T.; Sigit, R. Comparison study of Gaussian and histogram equalization filter on dental radiograph segmentation for labelling dental radiograph. In Proceedings of the 2016 International Conference on Knowledge Creation and Intelligent Computing (KCIC), Manado, Indonesia, 15–17 November 2016.

- Geetha, V.; Aprameya, K.S. Textural Analysis Based Classification of Digital X-ray Images for Dental Caries Diagnosis. Int. J. Eng. Manuf. 2019, 9, 44–54.

- Veena Divya, K.; Anand, J.; Revan, J.; Deepu Krishna, S. Characterization of dental pathologies using digital panoramic X-ray images based on texture analysis. In Proceedings of the 2017 39th Annual International Conference of the IEEE Engineering in Medicine and Biology Society (EMBC), Jeju, Republic of Korea, 11–15 July 2017.

- Yousefi, B.; Hakim, H.; Motahir, N.; Yousefi, P.; Hosseini, M.M. Visibility enhancement of digital dental X-ray for RCT application using Bayesian classifier and two times wavelet image fusion. J. Am. Sci. 2012, 8, 7–13.

- Kositbowornchai, S.; Plermkamon, S.; Tangkosol, T. Performance of an artificial neural network for vertical root fracture detection: An ex vivo study. Dent. Traumatol. 2012, 29, 151–155.

- Johari, M.; Esmaeili, F.; Andalib, A.; Garjani, S.; Saberkari, H. Detection of vertical root fractures in intact and endodontically treated premolar teeth by designing a probabilistic neural network: An ex vivo study. Dentomaxillofac. Radiol. 2017, 46, 20160107.

- Poedjiastoeti, W.; Suebnukarn, S. Application of Convolutional Neural Network in the Diagnosis of Jaw Tumors. Healthc. Inform. Res. 2018, 24, 236.

- Tuzoff, D.V.; Tuzova, L.N.; Bornstein, M.M.; Krasnov, A.S.; Kharchenko, M.A.; Nikolenko, S.I.; Sveshnikov, M.M.; Bednenko, G.B. Tooth detection and numbering in panoramic radiographs using convolutional neural networks. Dentomaxillofac. Radiol. 2019, 48, 20180051.

- Chen, H.; Zhang, K.; Lyu, P.; Li, H.; Zhang, L.; Wu, J.; Lee, C.H. A deep learning approach to automatic teeth detection and numbering based on object detection in dental periapical films. Sci. Rep. 2019, 9, 3840.

- Hiraiwa, T.; Ariji, Y.; Fukuda, M.; Kise, Y.; Nakata, K.; Katsumata, A.; Fujita, H.; Ariji, E. A deep-learning artificial intelligence system for assessment of root morphology of the mandibular first molar on panoramic radiography. Dentomaxillofac. Radiol. 2019, 48, 20180218.

- Orhan, K.; Bayrakdar, I.S.; Ezhov, M.; Kravtsov, A.; Özyürek, T. Evaluation of artificial intelligence for detecting periapical pathosis on cone-beam computed tomography scans. Int. Endod. J. 2020, 53, 680–689.

- Chung, M.; Lee, J.; Park, S.; Lee, M.; Lee, C.E.; Lee, J.; Shin, Y.G. Individual tooth detection and identification from dental panoramic X-ray images via point-wise localization and distance regularization. Artif. Intell. Med. 2021, 111, 101996.

- Mima, Y.; Nakayama, R.; Hizukuri, A.; Murata, K. Tooth detection for each tooth type by application of faster R-CNNs to divided analysis areas of dental panoramic X-ray images. Radiol. Phys. Technol. 2022, 15, 170–176.

- Morishita, T.; Muramatsu, C.; Seino, Y.; Takahashi, R.; Hayashi, T.; Nishiyama, W.; Zhou, X.; Hara, T.; Katsumata, A.; Fujita, H. Tooth recognition of 32 tooth types by branched single shot multibox detector and integration processing in panoramic radiographs. J. Med. Imaging 2022, 9, 034503.

- Lee, J.H.; Kim, D.H.; Jeong, S.N.; Choi, S.H. Detection and diagnosis of dental caries using a deep learning-based convolutional neural network algorithm. J. Dent. 2018, 77, 106–111.

- Krois, J.; Ekert, T.; Meinhold, L.; Golla, T.; Kharbot, B.; Wittemeier, A.; Dörfer, C.; Schwendicke, F. Deep Learning for the Radiographic Detection of Periodontal Bone Loss. Sci. Rep. 2019, 9, 8495.

- Tangel, M.L.; Fatichah, C.; Yan, F.; Betancourt, J.P.; Widyanto, M.R.; Dong, F.; Hirota, K. Dental classification for periapical radiograph based on multiple fuzzy attribute. In Proceedings of the 2013 Joint IFSA World Congress and NAFIPS Annual Meeting (IFSA/NAFIPS), Edmonton, AB, Canada, 24–28 June 2013; pp. 304–309.

- Banu, A.F.S.; Kayalvizhi, M.; Arumugam, B.; Gurunathan, U. Texture based classification of dental cysts. In Proceedings of the 2014 International Conference on Control, Instrumentation, Communication and Computational Technologies (ICCICCT), Kanyakumari, India, 10–11 July 2014.

- Riandini; Delimayanti, M.K. Feature extraction and classification of thorax x-ray image in the assessment of osteoporosis. In Proceedings of the 2017 4th International Conference on Electrical Engineering, Computer Science and Informatics (EECSI), Yogyakarta, Indonesia, 19–21 September 2017.

- Jusman, Y.; Anam, M.K.; Puspita, S.; Saleh, E.; Kanafiah, S.N.A.M.; Tamarena, R.I. Comparison of Dental Caries Level Images Classification Performance using KNN and SVM Methods. In Proceedings of the 2021 IEEE International Conference on Signal and Image Processing Applications (ICSIPA), Kuala Terengganu, Malaysia, 13–15 September 2021.

- Devito, K.L.; de Souza Barbosa, F.; Filho, W.N.F. An artificial multilayer perceptron neural network for diagnosis of proximal dental caries. Oral Surg. Oral Med. Oral Pathol. Oral Radiol. Endodontol. 2008, 106, 879–884.

- Pushparaj, V.; Gurunathan, U.; Arumugam, B. An Effective Dental Shape Extraction Algorithm Using Contour Information and Matching by Mahalanobis Distance. J. Digit. Imaging 2012, 26, 259–268. [Google Scholar] [CrossRef] [Green Version]

- Bo, C.; Liang, X.; Chu, P.; Xu, J.; Wang, D.; Yang, J.; Megalooikonomou, V.; Ling, H. Osteoporosis prescreening using dental panoramic radiographs feature analysis. In Proceedings of the 2017 IEEE 14th International Symposium on Biomedical Imaging (ISBI 2017), Melbourne, VIC, Australia, 18–21 April 2017. [Google Scholar] [CrossRef]

- Wu, Y.; Xie, F.; Yang, J.; Cheng, E.; Megalooikonomou, V.; Ling, H. Computer aided periapical lesion diagnosis using quantized texture analysis. In Proceedings of the SPIE Proceedings, San Diego, CA, USA, 4–9 February 2012; van Ginneken, B., Novak, C.L., Eds.; SPIE: Bellingham, WA, USA, 2012. [Google Scholar] [CrossRef]

- Ngoc, V.T.; Viet, D.H.; Anh, L.K.; Minh, D.Q.; Nghia, L.L.; Loan, H.K.; Tuan, T.M.; Ngan, T.T.; Tra, N.T. Periapical Lesion Diagnosis Support System Based on X-ray Images Using Machine Learning Technique. World J. Dent. 2021, 12, 189–193. [Google Scholar] [CrossRef]

- Niño-Sandoval, T.C.; Perez, S.V.G.; González, F.A.; Jaque, R.A.; Infante-Contreras, C. An automatic method for skeletal patterns classification using craniomaxillary variables on a Colombian population. Forensic Sci. Int. 2016, 261, 159.e1–159.e6. [Google Scholar] [CrossRef] [PubMed]

- Yasa, Y.; Çelik, Ö.; Bayrakdar, I.S.; Pekince, A.; Orhan, K.; Akarsu, S.; Atasoy, S.; Bilgir, E.; Odabaş, A.; Aslan, A.F. An artificial intelligence proposal to automatic teeth detection and numbering in dental bite-wing radiographs. Acta Odontol. Scand. 2020, 79, 275–281. [Google Scholar] [CrossRef] [PubMed]

- Bilgir, E.; Bayrakdar, İ.Ş.; Çelik, Ö.; Orhan, K.; Akkoca, F.; Sağlam, H.; Odabaş, A.; Aslan, A.F.; Ozcetin, C.; Kıllı, M.; et al. An artifıcial ıntelligence approach to automatic tooth detection and numbering in panoramic radiographs. BMC Med. Imaging 2021, 21, 124. [Google Scholar] [CrossRef]

- Kılıc, M.C.; Bayrakdar, I.S.; Çelik, Ö.; Bilgir, E.; Orhan, K.; Aydın, O.B.; Kaplan, F.A.; Sağlam, H.; Odabaş, A.; Aslan, A.F.; et al. Artificial intelligence system for automatic deciduous tooth detection and numbering in panoramic radiographs. Dentomaxillofac. Radiol. 2021, 50, 20200172. [Google Scholar] [CrossRef] [PubMed]

- Görürgöz, C.; Orhan, K.; Bayrakdar, I.S.; Çelik, Ö.; Bilgir, E.; Odabaş, A.; Aslan, A.F.; Jagtap, R. Performance of a convolutional neural network algorithm for tooth detection and numbering on periapical radiographs. Dentomaxillofac. Radiol. 2022, 51, 20210246. [Google Scholar] [CrossRef]

- Sukegawa, S.; Yoshii, K.; Hara, T.; Yamashita, K.; Nakano, K.; Yamamoto, N.; Nagatsuka, H.; Furuki, Y. Deep Neural Networks for Dental Implant System Classification. Biomolecules 2020, 10, 984. [Google Scholar] [CrossRef]

- Lee, J.H.; Jeong, S.N. Efficacy of deep convolutional neural network algorithm for the identification and classification of dental implant systems, using panoramic and periapical radiographs. Medicine 2020, 99, e20787. [Google Scholar] [CrossRef]

- Sukegawa, S.; Yoshii, K.; Hara, T.; Matsuyama, T.; Yamashita, K.; Nakano, K.; Takabatake, K.; Kawai, H.; Nagatsuka, H.; Furuki, Y. Multi-Task Deep Learning Model for Classification of Dental Implant Brand and Treatment Stage Using Dental Panoramic Radiograph Images. Biomolecules 2021, 11, 815. [Google Scholar] [CrossRef]

- Kim, H.S.; Ha, E.G.; Kim, Y.H.; Jeon, K.J.; Lee, C.; Han, S.S. Transfer learning in a deep convolutional neural network for implant fixture classification: A pilot study. Imaging Sci. Dent. 2022, 52, 219. [Google Scholar] [CrossRef]

- Li, C.W.; Lin, S.Y.; Chou, H.S.; Chen, T.Y.; Chen, Y.A.; Liu, S.Y.; Liu, Y.L.; Chen, C.A.; Huang, Y.C.; Chen, S.L.; et al. Detection of Dental Apical Lesions Using CNNs on Periapical Radiograph. Sensors 2021, 21, 7049. [Google Scholar] [CrossRef]

- Mao, Y.C.; Chen, T.Y.; Chou, H.S.; Lin, S.Y.; Liu, S.Y.; Chen, Y.A.; Liu, Y.L.; Chen, C.A.; Huang, Y.C.; Chen, S.L.; et al. Caries and Restoration Detection Using Bitewing Film Based on Transfer Learning with CNNs. Sensors 2021, 21, 4613. [Google Scholar] [CrossRef]

- Li, S.; Liu, J.; Zhou, Z.; Zhou, Z.; Wu, X.; Li, Y.; Wang, S.; Liao, W.; Ying, S.; Zhao, Z. Artificial intelligence for caries and periapical periodontitis detection. J. Dent. 2022, 122, 104107. [Google Scholar] [CrossRef] [PubMed]

- Moran, M.; Faria, M.; Giraldi, G.; Bastos, L.; Oliveira, L.; Conci, A. Classification of Approximal Caries in Bitewing Radiographs Using Convolutional Neural Networks. Sensors 2021, 21, 5192. [Google Scholar] [CrossRef] [PubMed]

- Chen, H.; Li, H.; Zhao, Y.; Zhao, J.; Wang, Y. Dental disease detection on periapical radiographs based on deep convolutional neural networks. Int. J. Comput. Assist. Radiol. Surg. 2021, 16, 649–661. [Google Scholar] [CrossRef] [PubMed]

- Rad, A.E.; Rahim, M.S.M.; Norouzi, A. Digital Dental X-Ray Image Segmentation and Feature Extraction. TELKOMNIKA Indones. J. Electr. Eng. 2013, 11, 3109–3114. [Google Scholar] [CrossRef]

- Indraswari, R.; Arifin, A.Z.; Navastara, D.A.; Jawas, N. Teeth segmentation on dental panoramic radiographs using decimation-free directional filter bank thresholding and multistage adaptive thresholding. In Proceedings of the 2015 International Conference on Information & Communication Technology and Systems (ICTS), Surabaya, Indonesia, 16 September 2015. [Google Scholar] [CrossRef]

- Setianingrum, A.H.; Rini, A.S.; Hakiem, N. Image segmentation using the Otsu method in Dental X-rays. In Proceedings of the 2017 Second International Conference on Informatics and Computing (ICIC), Jayapura, Indonesia, 1–3 November 2017. [Google Scholar] [CrossRef]

- Ali, M.; Son, L.H.; Khan, M.; Tung, N.T. Segmentation of dental X-ray images in medical imaging using neutrosophic orthogonal matrices. Expert Syst. Appl. 2018, 91, 434–441. [Google Scholar] [CrossRef] [Green Version]

- Devi, R.K.; Dawood, M.S.; Murugan, R.; Lenamika, R.; Kaviya, S.; Laxmi Vasini, K. Fuzzy based Regional Thresholding for Cyst Segmentation in Dental Radiographs. In Proceedings of the 2020 4th International Conference on Intelligent Computing and Control Systems (ICICCS), Madurai, India, 13–15 May 2020. [CrossRef]

- Li, S.; Fevens, T.; Krzyżak, A.; Li, S. Automatic clinical image segmentation using pathological modeling, PCA and SVM. Eng. Appl. Artif. Intell. 2006, 19, 403–410. [Google Scholar] [CrossRef]

- Lin, P.L.; Huang, P.Y.; Huang, P.W. An automatic lesion detection method for dental x-ray images by segmentation using variational level set. In Proceedings of the 2012 International Conference on Machine Learning and Cybernetics, Xi’an, China, 15–17 July 2012. [Google Scholar] [CrossRef]

- Amasya, H.; Yildirim, D.; Aydogan, T.; Kemaloglu, N.; Orhan, K. Cervical vertebral maturation assessment on lateral cephalometric radiographs using artificial intelligence: Comparison of machine learning classifier models. Dentomaxillofac. Radiol. 2020, 49, 20190441. [Google Scholar] [CrossRef]

- Bozkurt, M.H.; Karagol, S. Jaw and Teeth Segmentation on the Panoramic X-Ray Images for Dental Human Identification. J. Digit. Imaging 2020, 33, 1410–1427. [Google Scholar] [CrossRef]

- Jiang, L.; Chen, D.; Cao, Z.; Wu, F.; Zhu, H.; Zhu, F. A two-stage deep learning architecture for radiographic staging of periodontal bone loss. BMC Oral Health 2022, 22, 106. [Google Scholar] [CrossRef]

- Zeng, M.; Yan, Z.; Liu, S.; Zhou, Y.; Qiu, L. Cascaded convolutional networks for automatic cephalometric landmark detection. Med. Image Anal. 2021, 68, 101904. [Google Scholar] [CrossRef] [PubMed]

- Song, Y.; Qiao, X.; Iwamoto, Y.; Chen, Y.W. Automatic Cephalometric Landmark Detection on X-ray Images Using a Deep-Learning Method. Appl. Sci. 2020, 10, 2547. [Google Scholar] [CrossRef] [Green Version]

- Lee, J.H.; Yu, H.J.; Kim, M.J.; Kim, J.W.; Choi, J. Automated cephalometric landmark detection with confidence regions using Bayesian convolutional neural networks. BMC Oral Health 2020, 20, 1–10. [Google Scholar] [CrossRef]

- Qian, J.; Cheng, M.; Tao, Y.; Lin, J.; Lin, H. CephaNet: An Improved Faster R-CNN for Cephalometric Landmark Detection. In Proceedings of the 2019 IEEE 16th International Symposium on Biomedical Imaging (ISBI 2019), Venice, Italy, 8–11 April 2019. [Google Scholar] [CrossRef]

- Lindner, C.; Wang, C.W.; Huang, C.T.; Li, C.H.; Chang, S.W.; Cootes, T.F. Fully Automatic System for Accurate Localisation and Analysis of Cephalometric Landmarks in Lateral Cephalograms. Sci. Rep. 2016, 6, 33581. [Google Scholar] [CrossRef] [PubMed]

- Ibragimov, B.; Likar, B.; Pernus, F.; Vrtovec, T. Computerized Cephalometry by Game Theory with Shape- and Appearance-Based Landmark Refinement. In Proceedings of the International Symposium on Biomedical Imaging (ISBI), Brooklyn, NY, USA, 16–19 April 2015. [Google Scholar]

- Chu, C.; Chen, C.; Wang, C.W.; Huang, C.T.; Li, C.H.; Nolte, L.P.; Zheng, G. Fully Automatic Cephalometric X-ray Landmark Detection Using Random Forest Regression and Sparse Shape Composition. In Proceedings of the International Symposium on Biomedical Imaging (ISBI), Beijing, China, 29 April–2 May 2014. [Google Scholar]

- Nashold, L.; Pandya, P.; Lin, T. Multi-Objective Processing of Dental Panoramic Radiographs. Available online: http://cs231n.stanford.edu/reports/2022/pdfs/118.pdf (accessed on 25 May 2022).

- Yamanakkanavar, N.; Choi, J.Y.; Lee, B. Multiscale and Hierarchical Feature-Aggregation Network for Segmenting Medical Images. Sensors 2022, 22, 3440. [Google Scholar] [CrossRef]

- Chen, Q.; Zhao, Y.; Liu, Y.; Sun, Y.; Yang, C.; Li, P.; Zhang, L.; Gao, C. MSLPNet: Multi-scale location perception network for dental panoramic X-ray image segmentation. Neural Comput. Appl. 2021, 33, 10277–10291. [Google Scholar] [CrossRef]

- Zhao, Y.; Li, P.; Gao, C.; Liu, Y.; Chen, Q.; Yang, F.; Meng, D. TSASNet: Tooth segmentation on dental panoramic X-ray images by Two-Stage Attention Segmentation Network. Knowl.-Based Syst. 2020, 206, 106338. [Google Scholar] [CrossRef]

- Koch, T.L.; Perslev, M.; Igel, C.; Brandt, S.S. Accurate Segmentation of Dental Panoramic Radiographs with U-NETS. In Proceedings of the 2019 IEEE 16th International Symposium on Biomedical Imaging (ISBI 2019), Venice, Italy, 8–11 April 2019. [Google Scholar] [CrossRef]

- Wang, H.C.; Tsai, M.T.; Chiang, C.P. Visual perception enhancement for detection of cancerous oral tissue by multi-spectral imaging. J. Opt. 2013, 15, 055301. [Google Scholar] [CrossRef]

- Fält, P.; Hyttinen, J.; Fauch, L.; Riepponen, A.; Kullaa, A.; Hauta-Kasari, M. Spectral Image Enhancement for the Visualization of Dental Lesions. In Lecture Notes in Computer Science; Springer International Publishing: Berlin/Heidelberg, Germany, 2018; pp. 490–498. [Google Scholar]

- Hyttinen, J.; Falt, P.; Jasberg, H.; Kullaa, A.; Hauta-Kasari, M. Computational Filters for Dental and Oral Lesion Visualization in Spectral Images. IEEE Access 2021, 9, 145148–145160. [Google Scholar] [CrossRef]

- Lederer, A.; Kunzelmann, K.H.; Heck, K.; Hickel, R.; Litzenburger, F. In-vitro validation of near-infrared reflection for proximal caries detection. Eur. J. Oral Sci. 2019, 127, 515–522. [Google Scholar] [CrossRef] [Green Version]

- Litzenburger, F.; Heck, K.; Kaisarly, D.; Kunzelmann, K.H. Diagnostic validity of early proximal caries detection using near-infrared imaging technology on 3D range data of posterior teeth. Clin. Oral Investig. 2021, 26, 543–553. [Google Scholar] [CrossRef] [PubMed]

- Metzger, Z.; Colson, D.G.; Bown, P.; Weihard, T.; Baresel, I.; Nolting, T. Reflected near-infrared light versus bite-wing radiography for the detection of proximal caries: A multicenter prospective clinical study conducted in private practices. J. Dent. 2022, 116, 103861. [Google Scholar] [CrossRef]

- Zakian, C.; Pretty, I.; Ellwood, R. Near-infrared hyperspectral imaging of teeth for dental caries detection. J. Biomed. Opt. 2009, 14, 064047. [Google Scholar] [CrossRef] [PubMed] [Green Version]

- Usenik, P.; Bürmen, M.; Fidler, A.; Pernuš, F.; Likar, B. Automated Classification and Visualization of Healthy and Diseased Hard Dental Tissues by Near-Infrared Hyperspectral Imaging. Appl. Spectrosc. 2012, 66, 1067–1074. [Google Scholar] [CrossRef]

- Holtkamp, A.; Elhennawy, K.; de Oro, J.E.C.G.; Krois, J.; Paris, S.; Schwendicke, F. Generalizability of Deep Learning Models for Caries Detection in Near-Infrared Light Transillumination Images. J. Clin. Med. 2021, 10, 961. [Google Scholar] [CrossRef]

- Casalegno, F.; Newton, T.; Daher, R.; Abdelaziz, M.; Lodi-Rizzini, A.; Schürmann, F.; Krejci, I.; Markram, H. Caries Detection with Near-Infrared Transillumination Using Deep Learning. J. Dent. Res. 2019, 98, 1227–1233. [Google Scholar] [CrossRef] [PubMed] [Green Version]

- Schwendicke, F.; Elhennawy, K.; Paris, S.; Friebertshäuser, P.; Krois, J. Deep learning for caries lesion detection in near-infrared light transillumination images: A pilot study. J. Dent. 2020, 92, 103260. [Google Scholar] [CrossRef] [PubMed]

- Hossam, A.; Mohamed, K.; Tarek, R.; Elsayed, A.; Mostafa, H.; Selim, S. Automated Dental Diagnosis using Deep Learning. In Proceedings of the 2021 16th International Conference on Computer Engineering and Systems (ICCES), Cairo, Egypt, 15–16 December 2021. [Google Scholar] [CrossRef]

- Naik, N.; Hameed, B.M.Z.; Shetty, D.K.; Swain, D.; Shah, M.; Paul, R.; Aggarwal, K.; Ibrahim, S.; Patil, V.; Smriti, K.; et al. Legal and Ethical Consideration in Artificial Intelligence in Healthcare: Who Takes Responsibility? Front. Surg. 2022, 9, 862322. [Google Scholar] [CrossRef]

- Use of Artificial Intelligence (AI) in Dentistry-Dental News. Available online: https://www.dentalnews.com/2021/10/08/artificial-intelligence-ai-dentistry/ (accessed on 4 August 2022).

- Mörch, C.; Atsu, S.; Cai, W.; Li, X.; Madathil, S.; Liu, X.; Mai, V.; Tamimi, F.; Dilhac, M.; Ducret, M. Artificial Intelligence and Ethics in Dentistry: A Scoping Review. J. Dent. Res. 2021, 100, 1452–1460. [Google Scholar] [CrossRef]

- Yang, J.; Xie, Y.; Liu, L.; Xia, B.; Cao, Z.; Guo, C. Automated Dental Image Analysis by Deep Learning on Small Dataset. In Proceedings of the 2018 IEEE 42nd Annual Computer Software and Applications Conference (COMPSAC), Tokyo, Japan, 23–27 July 2018. [Google Scholar] [CrossRef]

- Yu, H.; Cho, S.; Kim, M.; Kim, W.; Kim, J.; Choi, J. Automated Skeletal Classification with Lateral Cephalometry Based on Artificial Intelligence. J. Dent. Res. 2020, 99, 249–256. [Google Scholar] [CrossRef]

- Wu, H.; Wu, Z. A Few-Shot Dental Object Detection Method Based on a Priori Knowledge Transfer. Symmetry 2022, 14, 1129. [Google Scholar] [CrossRef]

- Kumar, P.; Bhatnagar, R.; Gaur, K.; Bhatnagar, A. Classification of Imbalanced Data: Review of Methods and Applications. IOP Conf. Ser. Mater. Sci. Eng. 2021, 1099, 012077. [Google Scholar] [CrossRef]

- Chawla, N.V.; Bowyer, K.W.; Hall, L.O.; Kegelmeyer, W.P. SMOTE: Synthetic Minority Over-sampling Technique. J. Artif. Intell. Res. 2002, 16, 321–357. [Google Scholar] [CrossRef]

- You, A.; Kim, J.K.; Ryu, I.H.; Yoo, T.K. Application of generative adversarial networks (GAN) for ophthalmology image domains: A survey. Eye Vis. 2022, 9, 6. [Google Scholar] [CrossRef] [PubMed]

- Harron, K.L.; Doidge, J.C.; Knight, H.E.; Gilbert, R.E.; Goldstein, H.; Cromwell, D.A.; van der Meulen, J.H. A guide to evaluating linkage quality for the analysis of linked data. Int. J. Epidemiol. 2017, 46, 1699–1710. [Google Scholar] [CrossRef] [Green Version]

- Garcia, I.; Kuska, R.; Somerman, M. Expanding the Foundation for Personalized Medicine. J. Dent. Res. 2013, 92, S3–S10. [Google Scholar] [CrossRef]

- Viswanathan, A.; Patel, N.; Vaidyanathan, M.; Bhujel, N. Utilizing Teledentistry to Manage Cleft Lip and Palate Patients in an Outpatient Setting. Cleft Palate-Craniofac. J. 2021, 59, 675–679. [Google Scholar] [CrossRef]

- Sharma, H.; Suprabha, B.S.; Rao, A. Teledentistry and its applications in paediatric dentistry: A literature review. Pediatr. Dent. J. 2021, 31, 203–215. [Google Scholar] [CrossRef]

- Estai, M.; Bunt, S.; Kanagasingam, Y.; Tennant, M. Cost savings from a teledentistry model for school dental screening: An Australian health system perspective. Aust. Health Rev. 2018, 42, 482. [Google Scholar] [CrossRef] [Green Version]

- Joda, T.; Yeung, A.; Hung, K.; Zitzmann, N.; Bornstein, M. Disruptive Innovation in Dentistry: What It Is and What Could Be Next. J. Dent. Res. 2020, 100, 448–453. [Google Scholar] [CrossRef]

- Salagare, S.; Prasad, R. An Overview of Internet of Dental Things: New Frontier in Advanced Dentistry. Wirel. Pers. Commun. 2019, 110, 1345–1371. [Google Scholar] [CrossRef]

- Liu, L.; Xu, J.; Huan, Y.; Zou, Z.; Yeh, S.C.; Zheng, L.R. A Smart Dental Health-IoT Platform Based on Intelligent Hardware, Deep Learning, and Mobile Terminal. IEEE J. Biomed. Health Inform. 2020, 24, 898–906. [Google Scholar] [CrossRef] [PubMed]

- Vellappally, S.; Al-Kheraif, A.A.; Anil, S.; Basavarajappa, S.; Hassanein, A.S. Maintaining patient oral health by using a xeno-genetic spiking neural network. J. Ambient. Intell. Humaniz. Comput. 2018. [Google Scholar] [CrossRef]

- Sannino, G.; Sbardella, D.; Cianca, E.; Ruggieri, M.; Coletta, M.; Prasad, R. Dental and Biological Aspects for the Design of an Integrated Wireless Warning System for Implant Supported Prostheses: A Possible Approach. Wirel. Pers. Commun. 2016, 88, 85–96. [Google Scholar] [CrossRef]

- Kim, I.H.; Cho, H.; Song, J.S.; Park, W.; Shin, Y.; Lee, K.E. Assessment of Real-Time Active Noise Control Devices in Dental Treatment Conditions. Int. J. Environ. Res. Public Health 2022, 19, 9417. [Google Scholar] [CrossRef] [PubMed]

- Vitale, M.C.; Gallo, S.; Pascadopoli, M.; Alcozer, R.; Ciuffreda, C.; Scribante, A. Local anesthesia with SleeperOne S4 computerized device vs traditional syringe and perceived pain in pediatric patients: A randomized clinical trial. J. Clin. Pediatr. Dent. 2023, 47, 82–90. [Google Scholar] [CrossRef] [PubMed]

| Element | Description |
|---|---|
| Research question | What are the clinical applications and diagnostic performance of artificial intelligence in dentistry? |
| Population | Dental imagery: X-ray images (bitewing, periapical, occlusal, panoramic, cephalograms, cone-beam computed tomography (CBCT)), near-infrared light transillumination (NILT) images, fluorescence hyperspectral images, and spatial frequency domain images. |
| Intervention | AI-based models for diagnosis, detection, classification, and segmentation. |
| Comparison | Different algorithms to predict dental diseases. |
| Outcome | Measurable and predictive outcomes that include accuracy, specificity, sensitivity, F1 score, intersection over union (IoU), Dice coefficient, regression coefficient, receiver operating characteristic (ROC) curve, area under the curve (AUC), and successful detection rate (SDR). |

| Application | Technique | Target Problem and Study Number |
|---|---|---|
| Image enhancement | Classical image analysis approaches | Contrast adjustment [63,64,65,66,67,68,69], image sharpening [70] |
| | Machine learning | Visibility enhancement [71] |
| | Deep learning | - |
| Disease detection | Machine learning | Vertical root fracture [72,73] |
| | Deep learning | Periapical pathosis [21], dental tumors [74], tooth numbering [75,76,77,78], tooth detection and identification [79,80,81], periodontal bone loss [32,82,83] |
| Disease classification | Classical image analysis approaches | Tooth detection [84,85], osteoporosis assessment [86], dental caries [87] |
| | Machine learning | Dental caries [88], proximal dental caries [14], molar and pre-molar teeth [89], osteoporosis [90], dental caries [15], periapical lesions [16,17], dental restorations [22], periapical roots [91], teeth with root [92], sagittal patterns [93] |
| | Deep learning | Tooth numbering [94,95,96,97,98,99], dental implant stages [100], implant fixture [101], bone loss [18], periapical periodontitis [102,103,104,105], dental decay [106], approximal dental caries [19] |
| Disease segmentation | Classical image analysis approaches | Feature extraction [107], tooth edge reinforcement [108], tooth decay [109,110], dental cyst delineation [111] |
| | Machine learning | Bone loss and tooth decay detection [112,113], dental caries [85], assess maxillary structure variation [114] |
| | Deep learning | Identification of molars and premolars [23,24,25], identification of degraded and fragmented human remains [115], diagnosing early lesions [20], alveolar bone level [26,27], tooth localization [116] |

| Author, Year (Ref) | Architecture | Task | Train Set Size | Valid Set Size | Test Set Size | Data Augmentation | Loss Function | Optimizer | Learning Rate |
|---|---|---|---|---|---|---|---|---|---|
| Zeng et al., 2021 [117] | Three stage cascaded CNN | Landmark detection | 150 | 150 | 100 | Affine transformation | - | Adam | 0.001 |
| Song et al., 2020 [118] | CNN with pre-trained ResNet50 | Landmark detection | 150 | 150 | 100 | Affine transformation | - | Adam | 0.001 |
| Lee et al., 2020 [119] | Bayesian CNN (BCNN) | Landmark detection | 150 | 250 | - | Affine transformation | Softmax cross entropy | Adam | 0.001 |
| Qian et al., 2019 [120] | Faster R-CNN | Landmark detection | 150 | 150 | 100 | - | Custom loss function | Stochastic gradient descent (SGD) | 0.001 |
| Lindner et al., 2016 [121] | Random forest, regression, voting | Landmark detection | 150 | 250 | - | - | - | - | - |
| Ibragimov et al., 2014 [122] | Shape- and appearance-based landmark refinement with game theory | Landmark detection | 150 | 150 | 100 | - | - | - | - |
| Chu et al., 2014 [123] | Random forest, regression | Landmark detection | 150 | 150 | 100 | - | - | - | - |

| Author, Year (Ref) | Architecture | Task | Train Set Size | Test Set Size | Data Augmentation | Loss Function | Optimizer | Learning Rate |
|---|---|---|---|---|---|---|---|---|
| Pannetta et al., 2022 [60] | UNet with three backbones | Tooth segmentation | 85– | 150 | - | Cross entropy | Adam | 0.0001 |
| Nashold et al., 2022 [124] | Multi-objective model | Abnormality detection and localization | 900 | 100 | Affine transformation | Binary cross entropy | Adam | 0.0001 |
| Karacan et al., 2022 [62] | - | Tooth segmentation | - | - | - | - | - | - |

| Author, Year (Ref) | Architecture | Task | Train Set Size | Valid Set Size | Test Set Size | Data Augmentation | Loss Function | Optimizer | Learning Rate |
|---|---|---|---|---|---|---|---|---|---|
| Yamanakkanavar et al., 2022 [125] | Two feature-aggregation modules | Tooth segmentation | 1200 | 150 | 150 | Affine transformation | - | - | - |
| Chen et al., 2021 [126] | Multiscale structural similarity | Tooth segmentation and root boundary extraction | 1200 | 150 | 150 | - | Custom hybrid loss | Adam | 0.0001 |
| Zhao et al., 2020 [127] | Two stage attention segmentation network | Tooth segmentation | 1200 | 150 | 150 | - | Custom hybrid loss | Adam | 0.001 |
| Koch et al., 2019 [128] | Ensemble U-Net | Tooth segmentation | 1200 | - | 300 | Affine transformation | Cross entropy | Adam | 0.0001 |
| Silva et al., 2018 [57] | Mask R-CNN | Tooth segmentation | 753 | 452 | 295 | - | - | - | - |

| Author, Year (Ref) | SDR 2 mm, Testset1 (%) | SDR 2 mm, Testset2 (%) | SDR 2.5 mm, Testset1 (%) | SDR 2.5 mm, Testset2 (%) | SDR 3 mm, Testset1 (%) | SDR 3 mm, Testset2 (%) | SDR 4 mm, Testset1 (%) | SDR 4 mm, Testset2 (%) |
|---|---|---|---|---|---|---|---|---|
| Zeng et al., 2021 [117] | 81.3 | 70.5 | 89.9 | 79.5 | 93.7 | 86.5 | 97.8 | 93.3 |
| Song et al., 2020 [118] | 86.4 | 74.0 | 91.7 | 81.3 | 94.8 | 87.5 | 97.8 | 94.3 |
| Lee et al., 2020 [119] | 82.1 | 82.1 | 88.6 | 88.6 | 92.2 | 92.2 | 95.9 | 95.9 |
| Qian et al., 2019 [120] | 82.5 | 72.4 | 86.2 | 76.1 | 89.3 | 79.6 | 90.6 | 85.9 |
| Lindner et al., 2016 [121] | 73.6 | 66.1 | 80.2 | 72.0 | 85.1 | 77.6 | 91.4 | 87.4 |
| Ibragimov et al., 2014 [122] | 71.7 | 62.7 | 77.4 | 70.4 | 81.9 | 76.5 | 88.0 | 85.1 |
| Chu et al., 2014 [123] | 39.7 | 44.1 | 51.7 | 57.0 | 62.1 | 68.0 | 77.7 | 83.8 |
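For readers less familiar with the successful detection rate (SDR), the following minimal Python sketch shows one common way to compute it: the percentage of predicted landmarks whose radial (Euclidean) distance to the reference annotation does not exceed a given threshold. The function name `successful_detection_rate`, the `mm_per_pixel` parameter, and the toy coordinates are illustrative assumptions and are not taken from any of the cited studies; individual studies may treat the threshold boundary or the pixel spacing differently.

```python
import math


def successful_detection_rate(predicted, reference, threshold_mm, mm_per_pixel=1.0):
    """Percentage of landmarks whose radial error is at most threshold_mm.

    predicted, reference: equally long lists of (x, y) pixel coordinates.
    mm_per_pixel: assumed isotropic pixel spacing (hypothetical parameter).
    """
    if len(predicted) != len(reference) or not predicted:
        raise ValueError("need equally sized, non-empty landmark lists")
    hits = 0
    for (px, py), (rx, ry) in zip(predicted, reference):
        # Radial error: Euclidean distance converted from pixels to millimetres.
        error_mm = math.hypot(px - rx, py - ry) * mm_per_pixel
        if error_mm <= threshold_mm:
            hits += 1
    return 100.0 * hits / len(predicted)


# Toy data (made up for illustration): radial errors are 1.0, 2.4, 3.5 and 5.0 mm.
reference = [(0.0, 0.0), (10.0, 10.0), (20.0, 5.0), (30.0, 30.0)]
predicted = [(1.0, 0.0), (10.0, 12.4), (20.0, 8.5), (35.0, 30.0)]

# Evaluate at the four precision ranges commonly reported for cephalograms.
sdr = {t: successful_detection_rate(predicted, reference, t) for t in (2.0, 2.5, 3.0, 4.0)}
for threshold, rate in sdr.items():
    print(f"SDR within {threshold} mm: {rate:.1f}%")
```

Reporting the rate at several thresholds, as the table does, shows how quickly a method's localization errors fall off rather than summarizing them in a single number.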

| Author, Year (Ref) | Task | Accuracy (%) | IoU (%) | Dice Coefficient (%) | F1 Score | Recall |
|---|---|---|---|---|---|---|
| Pannetta et al., 2022 [60] | Tooth segmentation (5 categories) | 95.01 | 86.1 | 91.6 | - | - |
| Nashold et al., 2022 [124] | Abnormality detection and localization (5 categories) | 94.9 | 91.2 | - | 70.5 | - |
| Karacan et al., 2022 [62] | Tooth segmentation (teeth and maxillomandibular) | - | 91.8 | 95.7 | - | - |
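As a reminder of how the two overlap metrics in this table relate, the sketch below computes intersection over union (IoU) and the Dice coefficient for a pair of binary segmentation masks. It is a minimal illustration only: the function name `iou_and_dice`, the nested-list mask representation, and the toy masks are assumptions made here, and the cited studies may average these metrics per class or per image in ways not reproduced in this sketch.

```python
def iou_and_dice(pred_mask, true_mask):
    """Return (IoU, Dice) for two binary masks given as equally sized nested lists."""
    intersection = union = pred_area = true_area = 0
    for pred_row, true_row in zip(pred_mask, true_mask):
        for p, t in zip(pred_row, true_row):
            p, t = bool(p), bool(t)
            intersection += p and t   # pixel is foreground in both masks
            union += p or t           # pixel is foreground in at least one mask
            pred_area += p
            true_area += t
    if union == 0:
        # Both masks empty: treated as perfect agreement (a convention, not a rule).
        return 1.0, 1.0
    iou = intersection / union
    dice = 2 * intersection / (pred_area + true_area)
    return iou, dice


# Toy 3x4 masks (made up for illustration): 3 shared pixels, 5 pixels in the union.
true_mask = [[0, 1, 1, 0],
             [0, 1, 1, 0],
             [0, 0, 0, 0]]
pred_mask = [[0, 1, 1, 1],
             [0, 1, 0, 0],
             [0, 0, 0, 0]]

iou, dice = iou_and_dice(pred_mask, true_mask)
print(f"IoU = {iou:.2f}, Dice = {dice:.2f}")
```

For a single pair of masks the two metrics are monotonically related, Dice = 2·IoU / (1 + IoU), which is why Dice is never lower than IoU in such a comparison.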

| Author, Year (Ref) | Task | Accuracy (%) | Specificity (%) | Precision (%) | F1 Score (%) | Recall (%) | Dice Score (%) |
|---|---|---|---|---|---|---|---|
| Yamanakkanavar et al., 2022 [125] | Tooth segmentation (10 categories) | 97.0 | - | - | - | - | - |
| Chen et al., 2021 [126] | Tooth segmentation and root boundary extraction | 97.3 | 98.45 | 93.35 | - | 92.97 | 93.01 |
| Zhao et al., 2020 [127] | Tooth segmentation (10 categories) | 96.94 | 97.81 | 94.97 | - | 93.77 | 92.7 |
| Koch et al., 2019 [128] | Tooth segmentation (10 categories) | 97.2 | 98.3 | 92.9 | - | - | 93.6 |
| Silva et al., 2018 [57] | Tooth segmentation (10 categories) | 92.08 | 96.12 | 83.73 | 76.19 | 79.44 | - |
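The accuracy, specificity, precision, recall, and F1 columns in this table all derive from the same four confusion-matrix counts. The sketch below illustrates those standard definitions for the binary case; the function name `binary_metrics` and the example counts are hypothetical and were chosen only to make the arithmetic easy to follow, not to reproduce any reported result.

```python
def binary_metrics(tp, fp, tn, fn):
    """Return common binary-classification metrics as fractions in [0, 1]."""
    def ratio(numerator, denominator):
        # Undefined ratios (zero denominator) are reported as NaN.
        return numerator / denominator if denominator else float("nan")

    return {
        "accuracy": ratio(tp + tn, tp + fp + tn + fn),
        "specificity": ratio(tn, tn + fp),     # true-negative rate
        "precision": ratio(tp, tp + fp),       # positive predictive value
        "recall": ratio(tp, tp + fn),          # sensitivity, true-positive rate
        "f1": ratio(2 * tp, 2 * tp + fp + fn), # harmonic mean of precision and recall
    }


# Hypothetical pixel counts for a foreground (tooth) versus background decision.
metrics = binary_metrics(tp=80, fp=10, tn=95, fn=15)
for name, value in metrics.items():
    print(f"{name}: {100 * value:.2f}%")
```

For binary pixel-wise segmentation the F1 score and the Dice score are the same quantity, so a study typically needs to report only one of the two.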

| Application | Target Problem and Study Number | Image Type |
|---|---|---|
| Image enhancement | Contrast enhancement | Spectral reflectance imaging |
| Disease detection | Dental caries | Near-infrared imaging |
| Disease classification | Early caries | Near-infrared hyperspectral imaging |
| Disease segmentation | Proximal and occlusal lesion | Near-infrared transillumination imaging |

| Ref, Year | Architecture | Task | Train Set Size | Test Set Size | Pre-Processing | Loss | Optimizer | Epochs | Accuracy |
|---|---|---|---|---|---|---|---|---|---|
| [140], 2021 | CenterNet ResNet | Classification and localization (17 categories) | 19,215 hyperspectral images | 2135 | Re-labeled masks using custom algorithm | - | Adam | 10,000 | 62.81% |
| [131], 2021 | Principal component analysis (PCA) | Image enhancement | Spectral images per class | - | Contrast stretching | - | - | - | - |

| Application | Technique | Target problem and study number |
|---|---|---|
| Image enhancement | Classical image analysis approaches | Contrast adjustment [63,64,65,66,67,68,69] (low), image sharpening [70] (low) |
| | Machine learning | Visibility enhancement [71] (moderate) |
| Disease detection | Machine learning | Vertical root fracture [72,73] (low) |
| | Deep learning | Periapical pathosis [21] (moderate), dental tumors [74] (high), tooth numbering [75,76,77,78] (low), tooth detection and identification [79,80,81] (moderate), periodontal bone loss [32,82,83] (moderate) |
| Disease classification | Classical image analysis approaches | Tooth detection [84,85] (low), osteoporosis assessment [86] (low), dental caries [87] (low) |
| | Machine learning | Dental caries [88] (low), proximal dental caries [14] (moderate), molar and pre-molar teeth [73] (low), dental implants [98] (low), dental periapical lesions [17] (moderate) |

| Application | Technique | Target problem and study number |
|---|---|---|
| Image enhancement | Classical image analysis approaches | Contrast enhancement (low) [129,130,131] |
| | Machine learning | Spectral image enhancement for dental disease diagnosis (low) [131] |
| Disease detection | Machine learning | Dental caries detection using NIR imaging (low) [132,133,134] |
| Disease classification | Classical image analysis approaches | Dental tissue classification using NIR hyperspectral imaging (low) [135,136] |
| | Deep learning | Dental caries classification using CNNs (moderate) [137] |
| Disease segmentation | Deep learning | Lesion segmentation using deep CNN (moderate) [138,139] |

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Shafi, I.; Fatima, A.; Afzal, H.; Díez, I.d.l.T.; Lipari, V.; Breñosa, J.; Ashraf, I. A Comprehensive Review of Recent Advances in Artificial Intelligence for Dentistry E-Health. Diagnostics 2023, 13, 2196. https://doi.org/10.3390/diagnostics13132196
