Artificial Intelligence in Surgical Training for Kidney Cancer: A Systematic Review of the Literature

Abstract

1. Introduction

2. Materials and Methods

2.1. Search Strategy

2.2. Search Criteria

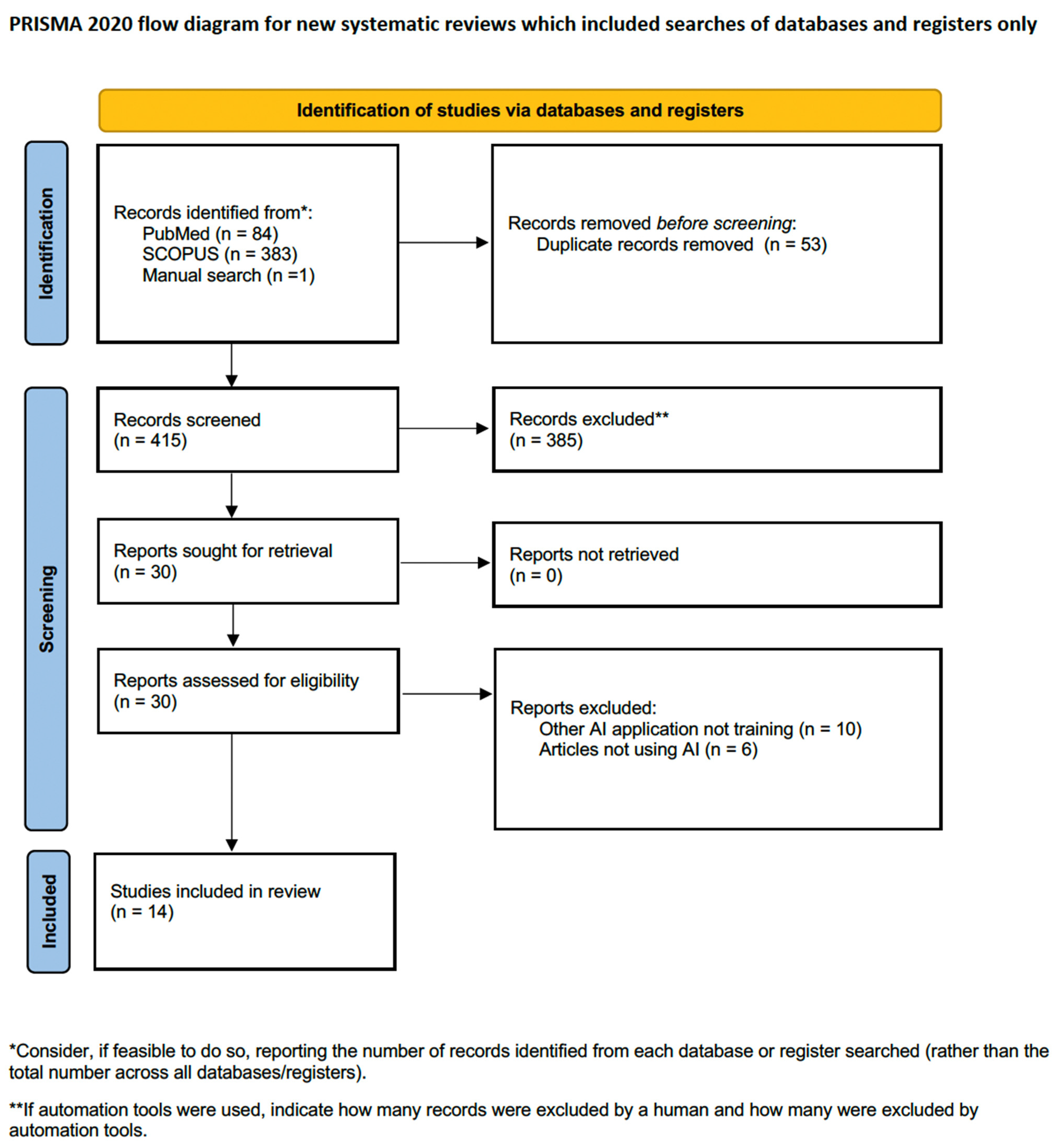

2.3. Screening and Article Selection

2.4. Data Extraction

2.5. Level of Evidence

3. Results

3.1. Search Results

3.2. AI and Surgical Training for Kidney Cancer

3.2.1. AI and Performance Assessment

3.2.2. AI and AR

3.2.3. AI and 3D-Printing

4. Discussion

4.1. AI Limitations

4.2. Future Perspectives

5. Conclusions

Supplementary Materials

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest

References

- Sung, H.; Ferlay, J.; Siegel, R.L.; Laversanne, M.; Soerjomataram, I.; Jemal, A.; Bray, F. Global Cancer Statistics 2020: GLOBOCAN Estimates of Incidence and Mortality Worldwide for 36 Cancers in 185 Countries. CA Cancer J. Clin. 2021, 71, 209–249.

- Thorstenson, A.; Bergman, M.; Scherman-Plogell, A.-H.; Hosseinnia, S.; Ljungberg, B.; Adolfsson, J.; Lundstam, S. Tumour characteristics and surgical treatment of renal cell carcinoma in Sweden 2005–2010: A population-based study from the National Swedish Kidney Cancer Register. Scand. J. Urol. 2014, 48, 231–238.

- Tahbaz, R.; Schmid, M.; Merseburger, A.S. Prevention of kidney cancer incidence and recurrence. Curr. Opin. Urol. 2018, 28, 62–79.

- Moch, H.; Cubilla, A.L.; Humphrey, P.A.; Reuter, V.E.; Ulbright, T.M. The 2016 WHO Classification of Tumours of the Urinary System and Male Genital Organs—Part A: Renal, Penile, and Testicular Tumours. Eur. Urol. 2016, 70, 93–105.

- Ljungberg, B.; Albiges, L.; Abu-Ghanem, Y.; Bedke, J.; Capitanio, U.; Dabestani, S.; Fernández-Pello, S.; Giles, R.H.; Hofmann, F.; Hora, M.; et al. European Association of Urology Guidelines on Renal Cell Carcinoma: The 2022 Update. Eur. Urol. 2022, 82, 399–410.

- El Sherbiny, A.; Eissa, A.; Ghaith, A.; Morini, E.; Marzotta, L.; Sighinolfi, M.C.; Micali, S.; Bianchi, G.; Rocco, B. Training in urological robotic surgery. Future perspectives. Arch. Españoles Urol. 2018, 71, 97–107.

- Kowalewski, K.F.; Garrow, C.R.; Proctor, T.; Preukschas, A.A.; Friedrich, M.; Müller, P.C.; Kenngott, H.G.; Fischer, L.; Müller-Stich, B.P.; Nickel, F. LapTrain: Multi-modality training curriculum for laparoscopic cholecystectomy—Results of a randomized controlled trial. Surg. Endosc. 2018, 32, 3830–3838.

- Kowalewski, K.-F.; Minassian, A.; Hendrie, J.D.; Benner, L.; Preukschas, A.A.; Kenngott, H.G.; Fischer, L.; Müller-Stich, B.P.; Nickel, F. One or two trainees per workplace for laparoscopic surgery training courses: Results from a randomized controlled trial. Surg. Endosc. 2019, 33, 1523–1531.

- Nickel, F.; Brzoska, J.A.; Gondan, M.; Rangnick, H.M.; Chu, J.; Kenngott, H.G.; Linke, G.R.; Kadmon, M.; Fischer, L.; Müller-Stich, B.P. Virtual Reality Training Versus Blended Learning of Laparoscopic Cholecystectomy. Medicine 2015, 94, e764.

- Kowalewski, K.-F.; Hendrie, J.D.; Schmidt, M.W.; Proctor, T.; Paul, S.; Garrow, C.R.; Kenngott, H.G.; Müller-Stich, B.P.; Nickel, F. Validation of the mobile serious game application Touch Surgery™ for cognitive training and assessment of laparoscopic cholecystectomy. Surg. Endosc. 2017, 31, 4058–4066.

- Kowalewski, K.-F.; Hendrie, J.D.; Schmidt, M.W.; Garrow, C.R.; Bruckner, T.; Proctor, T.; Paul, S.; Adigüzel, D.; Bodenstedt, S.; Erben, A.; et al. Development and validation of a sensor- and expert model-based training system for laparoscopic surgery: The iSurgeon. Surg. Endosc. 2017, 31, 2155–2165.

- Garrow, C.R.B.; Kowalewski, K.-F.; Li, L.B.; Wagner, M.; Schmidt, M.W.; Engelhardt, S.; Hashimoto, D.A.; Kenngott, H.G.M.; Bodenstedt, S.; Speidel, S.; et al. Machine Learning for Surgical Phase Recognition. Ann. Surg. 2021, 273, 684–693.

- Kowalewski, K.-F.; Garrow, C.R.; Schmidt, M.W.; Benner, L.; Müller-Stich, B.P.; Nickel, F. Sensor-based machine learning for workflow detection and as key to detect expert level in laparoscopic suturing and knot-tying. Surg. Endosc. 2019, 33, 3732–3740.

- Moglia, A.; Georgiou, K.; Georgiou, E.; Satava, R.M.; Cuschieri, A. A systematic review on artificial intelligence in robot-assisted surgery. Int. J. Surg. 2021, 95, 106151.

- Chen, J.; Remulla, D.; Nguyen, J.H.; Dua, A.; Liu, Y.; Dasgupta, P.; Hung, A.J. Current status of artificial intelligence applications in urology and their potential to influence clinical practice. BJU Int. 2019, 124, 567–577.

- Kowalewski, K.-F.; Egen, L.; Fischetti, C.E.; Puliatti, S.; Juan, G.R.; Taratkin, M.; Ines, R.B.; Abate, M.A.S.; Mühlbauer, J.; Wessels, F.; et al. Artificial intelligence for renal cancer: From imaging to histology and beyond. Asian J. Urol. 2022, 9, 243–252.

- Shah, M.; Naik, N.; Somani, B.K.; Hameed, B.Z. Artificial intelligence (AI) in urology-Current use and future directions: An iTRUE study. Turk. J. Urol. 2020, 46, S27–S39.

- Suarez-Ibarrola, R.; Hein, S.; Reis, G.; Gratzke, C.; Miernik, A. Current and future applications of machine and deep learning in urology: A review of the literature on urolithiasis, renal cell carcinoma, and bladder and prostate cancer. World J. Urol. 2020, 38, 2329–2347.

- Page, M.J.; McKenzie, J.E.; Bossuyt, P.M.; Boutron, I.; Hoffmann, T.C.; Mulrow, C.D.; Shamseer, L.; Tetzlaff, J.M.; Brennan, S.E.; Moher, D.; et al. The PRISMA 2020 statement: An updated guideline for reporting systematic reviews. BMJ 2021, 372, n71.

- Rethlefsen, M.L.; Kirtley, S.; Waffenschmidt, S.; Ayala, A.P.; Moher, D.; Page, M.J.; Koffel, J.B.; PRISMA-S Group. PRISMA-S: An extension to the PRISMA Statement for Reporting Literature Searches in Systematic Reviews. Syst. Rev. 2021, 10, 39.

- Howick, J.; Chalmers, I.; Glasziou, P.; Greenhalgh, T.; Heneghan, C.; Liberati, A.; Moschetti, I.; Phillips, B.; Thornton, H.; Goddard, O.; et al. The Oxford 2011 Levels of Evidence. CEBM 2011. Available online: https://www.cebm.ox.ac.uk/resources/levels-of-evidence/ocebm-levels-of-evidence (accessed on 16 August 2023).

- Howick, J.; Chalmers, I.; Glasziou, P.; Greenhalgh, T.; Heneghan, C.; Liberati, A.; Moschetti, I.; Phillips, B.; Thornton, H. The 2011 Oxford CEBM Evidence Levels of Evidence (Introductory Document) Oxford Cent Evidence-Based Med n.d. Available online: http://www.cebm.net/index.aspx?o=5653 (accessed on 14 September 2023).

- Yang, H.; Wu, K.; Liu, H.; Wu, P.; Yuan, Y.; Wang, L.; Liu, Y.; Zeng, H.; Li, J.; Liu, W.; et al. An automated surgical decision-making framework for partial or radical nephrectomy based on 3D-CT multi-level anatomical features in renal cell carcinoma. Eur. Radiol. 2023, 2023, 1–10.

- Nakawala, H.; Bianchi, R.; Pescatori, L.E.; De Cobelli, O.; Ferrigno, G.; De Momi, E. “Deep-Onto” network for surgical workflow and context recognition. Int. J. Comput. Assist. Radiol. Surg. 2019, 14, 685–696.

- Padovan, E.; Marullo, G.; Tanzi, L.; Piazzolla, P.; Moos, S.; Porpiglia, F.; Vezzetti, E. A deep learning framework for real-time 3D model registration in robot-assisted laparoscopic surgery. Int. J. Med. Robot. Comput. Assist. Surg. 2022, 18, e2387.

- Nakawala, H.; De Momi, E.; Bianchi, R.; Catellani, M.; De Cobelli, O.; Jannin, P.; Ferrigno, G.; Fiorini, P. Toward a Neural-Symbolic Framework for Automated Workflow Analysis in Surgery. In Proceedings of the XV Mediterranean Conference on Medical and Biological Engineering and Computing–MEDICON 2019, Coimbra, Portugal, 26–28 September 2019; Springer: Berlin/Heidelberg, Germany, 2020; pp. 1551–1558.

- Casella, A.; Moccia, S.; Carlini, C.; Frontoni, E.; De Momi, E.; Mattos, L.S. NephCNN: A deep-learning framework for vessel segmentation in nephrectomy laparoscopic videos. In Proceedings of the 2020 25th International Conference on Pattern Recognition (ICPR), Milan, Italy, 10–15 January 2021; IEEE: Piscataway Township, NJ, USA, 2021; pp. 6144–6149.

- Gao, Y.; Tang, Y.; Ren, D.; Cheng, S.; Wang, Y.; Yi, L.; Peng, S. Deep Learning Plus Three-Dimensional Printing in the Management of Giant (>15 cm) Sporadic Renal Angiomyolipoma: An Initial Report. Front. Oncol. 2021, 11, 724986.

- Zhang, X.; Wang, J.; Wang, T.; Ji, X.; Shen, Y.; Sun, Z.; Zhang, X. A markerless automatic deformable registration framework for augmented reality navigation of laparoscopy partial nephrectomy. Int. J. Comput. Assist. Radiol. Surg. 2019, 14, 1285–1294.

- Amir-Khalili, A.; Peyrat, J.-M.; Abinahed, J.; Al-Alao, O.; Al-Ansari, A.; Hamarneh, G.; Abugharbieh, R. Auto Localization and Segmentation of Occluded Vessels in Robot-Assisted Partial Nephrectomy. In Proceedings of the Medical Image Computing and Computer-Assisted Intervention–MICCAI 2014: 17th International Conference, Boston, MA, USA, 14–18 September 2014; pp. 407–414.

- Nosrati, M.S.; Amir-Khalili, A.; Peyrat, J.-M.; Abinahed, J.; Al-Alao, O.; Al-Ansari, A.; Abugharbieh, R.; Hamarneh, G. Endoscopic scene labelling and augmentation using intraoperative pulsatile motion and colour appearance cues with preoperative anatomical priors. Int. J. Comput. Assist. Radiol. Surg. 2016, 11, 1409–1418.

- Wang, Y.; Wu, Z.; Dai, J.; Morgan, T.N.; Garbens, A.; Kominsky, H.; Gahan, J.; Larson, E.C. Evaluating robotic-assisted partial nephrectomy surgeons with fully convolutional segmentation and multi-task attention networks. J. Robot. Surg. 2023, 17, 2323–2330.

- De Backer, P.; Van Praet, C.; Simoens, J.; Lores, M.P.; Creemers, H.; Mestdagh, K.; Allaeys, C.; Vermijs, S.; Piazza, P.; Mottaran, A.; et al. Improving Augmented Reality Through Deep Learning: Real-time Instrument Delineation in Robotic Renal Surgery. Eur. Urol. 2023, 84, 86–91.

- Amparore, D.; Checcucci, E.; Piazzolla, P.; Piramide, F.; De Cillis, S.; Piana, A.; Verri, P.; Manfredi, M.; Fiori, C.; Vezzetti, E.; et al. Indocyanine Green Drives Computer Vision Based 3D Augmented Reality Robot Assisted Partial Nephrectomy: The Beginning of “Automatic” Overlapping Era. Urology 2022, 164, e312–e316.

- De Backer, P.; Eckhoff, J.A.; Simoens, J.; Müller, D.T.; Allaeys, C.; Creemers, H.; Hallemeesch, A.; Mestdagh, K.; Van Praet, C.; Debbaut, C.; et al. Multicentric exploration of tool annotation in robotic surgery: Lessons learned when starting a surgical artificial intelligence project. Surg. Endosc. 2022, 36, 8533–8548.

- Yip, M.C.; Lowe, D.G.; Salcudean, S.E.; Rohling, R.N.; Nguan, C.Y. Tissue Tracking and Registration for Image-Guided Surgery. IEEE Trans. Med. Imaging 2012, 31, 2169–2182.

- Amir-Khalili, A.; Hamarneh, G.; Peyrat, J.-M.; Abinahed, J.; Al-Alao, O.; Al-Ansari, A.; Abugharbieh, R. Automatic segmentation of occluded vasculature via pulsatile motion analysis in endoscopic robot-assisted partial nephrectomy video. Med. Image Anal. 2015, 25, 103–110.

- Amisha, M.P.; Pathania, M.; Rathaur, V.K. Overview of artificial intelligence in medicine. J. Fam. Med. Prim. Care 2019, 8, 2328–2331.

- Ward, T.M.; Mascagni, P.; Madani, A.; Padoy, N.; Perretta, S.; Hashimoto, D.A. Surgical data science and artificial intelligence for surgical education. J. Surg. Oncol. 2021, 124, 221–230.

- Veneziano, D.; Cacciamani, G.; Rivas, J.G.; Marino, N.; Somani, B.K. VR and machine learning: Novel pathways in surgical hands-on training. Curr. Opin. Urol. 2020, 30, 817–822.

- Heller, N.; Weight, C. “The Algorithm Will See You Now”: The Role of Artificial (and Real) Intelligence in the Future of Urology. Eur. Urol. Focus 2021, 7, 669–671.

- Cacciamani, G.E.; Nassiri, N.; Varghese, B.; Maas, M.; King, K.G.; Hwang, D.; Abreu, A.; Gill, I.; Duddalwar, V. Radiomics and Bladder Cancer: Current Status. Bladder Cancer 2020, 6, 343–362.

- Sugano, D.; Sanford, D.; Abreu, A.; Duddalwar, V.; Gill, I.; Cacciamani, G.E. Impact of radiomics on prostate cancer detection: A systematic review of clinical applications. Curr. Opin. Urol. 2020, 30, 754–781.

- Aminsharifi, A.; Irani, D.; Tayebi, S.; Jafari Kafash, T.; Shabanian, T.; Parsaei, H. Predicting the Postoperative Outcome of Percutaneous Nephrolithotomy with Machine Learning System: Software Validation and Comparative Analysis with Guy’s Stone Score and the CROES Nomogram. J. Endourol. 2020, 34, 692–699.

- Feng, Z.; Rong, P.; Cao, P.; Zhou, Q.; Zhu, W.; Yan, Z.; Liu, Q.; Wang, W. Machine learning-based quantitative texture analysis of CT images of small renal masses: Differentiation of angiomyolipoma without visible fat from renal cell carcinoma. Eur. Radiol. 2018, 28, 1625–1633.

- Birkmeyer, J.D.; Finks, J.F.; O’Reilly, A.; Oerline, M.; Carlin, A.M.; Nunn, A.R.; Dimick, J.; Banerjee, M.; Birkmeyer, N.J. Surgical Skill and Complication Rates after Bariatric Surgery. N. Engl. J. Med. 2013, 369, 1434–1442.

- Hung, A.J.; Chen, J.; Ghodoussipour, S.; Oh, P.J.; Liu, Z.; Nguyen, J.; Purushotham, S.; Gill, I.S.; Liu, Y. A deep-learning model using automated performance metrics and clinical features to predict urinary continence recovery after robot-assisted radical prostatectomy. BJU Int. 2019, 124, 487–495.

- Stulberg, J.J.; Huang, R.; Kreutzer, L.; Ban, K.; Champagne, B.J.; Steele, S.R.; Johnson, J.K.; Holl, J.L.; Greenberg, C.C.; Bilimoria, K.Y. Association Between Surgeon Technical Skills and Patient Outcomes. JAMA Surg. 2020, 155, 960.

- Amato, M.; Eissa, A.; Puliatti, S.; Secchi, C.; Ferraguti, F.; Minelli, M.; Meneghini, A.; Landi, I.; Guarino, G.; Sighinolfi, M.C.; et al. Feasibility of a telementoring approach as a practical training for transurethral enucleation of the benign prostatic hyperplasia using bipolar energy: A pilot study. World J. Urol. 2021, 39, 3465–3471.

- Maybury, C. The European Working Time Directive: A decade on. Lancet 2014, 384, 1562–1563.

- Foell, K.; Finelli, A.; Yasufuku, K.; Bernardini, M.Q.; Waddell, T.K.; Pace, K.T.; Honey, R.J.D.; Lee, J.Y. Robotic surgery basic skills training: Evaluation of a pilot multidisciplinary simulation-based curriculum. Can. Urol. Assoc. J. 2013, 7, 430.

- Gallagher, A.G. Metric-based simulation training to proficiency in medical education: What it is and how to do it. Ulster Med. J. 2012, 81, 107–113.

- Gallagher, A.G.; Ritter, E.M.; Champion, H.; Higgins, G.; Fried, M.P.; Moses, G.; Smith, C.D.; Satava, R.M. Virtual reality simulation for the operating room: Proficiency-based training as a paradigm shift in surgical skills training. Ann. Surg. 2005, 241, 364–372.

- Hameed, B.; Dhavileswarapu, A.S.; Raza, S.; Karimi, H.; Khanuja, H.; Shetty, D.; Ibrahim, S.; Shah, M.; Naik, N.; Paul, R.; et al. Artificial Intelligence and Its Impact on Urological Diseases and Management: A Comprehensive Review of the Literature. J. Clin. Med. 2021, 10, 1864.

- Andras, I.; Mazzone, E.; van Leeuwen, F.W.B.; De Naeyer, G.; van Oosterom, M.N.; Beato, S.; Buckle, T.; O’sullivan, S.; van Leeuwen, P.J.; Beulens, A.; et al. Artificial intelligence and robotics: A combination that is changing the operating room. World J. Urol. 2020, 38, 2359–2366.

- Sarikaya, D.; Corso, J.J.; Guru, K.A. Detection and Localization of Robotic Tools in Robot-Assisted Surgery Videos Using Deep Neural Networks for Region Proposal and Detection. IEEE Trans. Med. Imaging 2017, 36, 1542–1549.

- Fard, M.J.; Ameri, S.; Darin Ellis, R.; Chinnam, R.B.; Pandya, A.K.; Klein, M.D. Automated robot-assisted surgical skill evaluation: Predictive analytics approach. Int. J. Med. Robot. Comput. Assist. Surg. 2018, 14, e1850.

- Hung, A.J.; Chen, J.; Gill, I.S. Automated Performance Metrics and Machine Learning Algorithms to Measure Surgeon Performance and Anticipate Clinical Outcomes in Robotic Surgery. JAMA Surg. 2018, 153, 770.

- Fazlollahi, A.M.; Bakhaidar, M.; Alsayegh, A.; Yilmaz, R.; Winkler-Schwartz, A.; Mirchi, N.; Langleben, I.; Ledwos, N.; Sabbagh, A.J.; Bajunaid, K.; et al. Effect of Artificial Intelligence Tutoring vs Expert Instruction on Learning Simulated Surgical Skills Among Medical Students. JAMA Netw. Open 2022, 5, e2149008.

- Kim, J.K.; Ryu, H.; Kim, M.; Kwon, E.; Lee, H.; Park, S.J.; Byun, S. Personalised three-dimensional printed transparent kidney model for robot-assisted partial nephrectomy in patients with complex renal tumours (R.E.N.A.L. nephrometry score ≥ 7): A prospective case-matched study. BJU Int. 2021, 127, 567–574.

- Shirk, J.D.; Kwan, L.; Saigal, C. The Use of 3-Dimensional, Virtual Reality Models for Surgical Planning of Robotic Partial Nephrectomy. Urology 2019, 125, 92–97.

- Wake, N.; Rosenkrantz, A.B.; Huang, R.; Park, K.U.; Wysock, J.S.; Taneja, S.S.; Huang, W.C.; Sodickson, D.K.; Chandarana, H. Patient-specific 3D printed and augmented reality kidney and prostate cancer models: Impact on patient education. 3D Print Med. 2019, 5, 4.

- Rocco, B.; Sighinolfi, M.C.; Menezes, A.D.; Eissa, A.; Inzillo, R.; Sandri, M.; Puliatti, S.; Turri, F.; Ciarlariello, S.; Amato, M.; et al. Three-dimensional virtual reconstruction with DocDo, a novel interactive tool to score renal mass complexity. BJU Int. 2020, 125, 761–762.

- Mitsui, Y.; Sadahira, T.; Araki, M.; Maruyama, Y.; Nishimura, S.; Wada, K.; Kobayashi, Y.; Watanabe, M.; Watanabe, T.; Nasu, Y. The 3-D Volumetric Measurement Including Resected Specimen for Predicting Renal Function After Robot-assisted Partial Nephrectomy. Urology 2019, 125, 104–110.

- Antonelli, A.; Veccia, A.; Palumbo, C.; Peroni, A.; Mirabella, G.; Cozzoli, A.; Martucci, P.; Ferrari, F.; Simeone, C.; Artibani, W. Holographic Reconstructions for Preoperative Planning before Partial Nephrectomy: A Head-to-Head Comparison with Standard CT Scan. Urol. Int. 2019, 102, 212–217.

- Michiels, C.; Khene, Z.-E.; Prudhomme, T.; de Hauteclocque, A.B.; Cornelis, F.H.; Percot, M.; Simeon, H.; Dupitout, L.; Bensadoun, H.; Capon, G.; et al. 3D-Image guided robotic-assisted partial nephrectomy: A multi-institutional propensity score-matched analysis (UroCCR study 51). World J. Urol. 2021, 41, 303–313.

- Macek, P.; Cathelineau, X.; Barbe, Y.P.; Sanchez-Salas, R.; Rodriguez, A.R. Robotic-Assisted Partial Nephrectomy: Techniques to Improve Clinical Outcomes. Curr. Urol. Rep. 2021, 22, 51.

- Veccia, A.; Antonelli, A.; Hampton, L.J.; Greco, F.; Perdonà, S.; Lima, E.; Hemal, A.K.; Derweesh, I.; Porpiglia, F.; Autorino, R. Near-infrared Fluorescence Imaging with Indocyanine Green in Robot-assisted Partial Nephrectomy: Pooled Analysis of Comparative Studies. Eur. Urol. Focus 2020, 6, 505–512.

- Villarreal, J.Z.; Pérez-Anker, J.; Puig, S.; Pellacani, G.; Solé, M.; Malvehy, J.; Quintana, L.F.; García-Herrera, A. Ex vivo confocal microscopy performs real-time assessment of renal biopsy in non-neoplastic diseases. J. Nephrol. 2021, 34, 689–697.

- Rocco, B.; Sighinolfi, M.C.; Cimadamore, A.; Bonetti, L.R.; Bertoni, L.; Puliatti, S.; Eissa, A.; Spandri, V.; Azzoni, P.; Dinneen, E.; et al. Digital frozen section of the prostate surface during radical prostatectomy: A novel approach to evaluate surgical margins. BJU Int. 2020, 126, 336–338.

- Su, L.-M.; Kuo, J.; Allan, R.W.; Liao, J.C.; Ritari, K.L.; Tomeny, P.E.; Carter, C.M. Fiber-Optic Confocal Laser Endomicroscopy of Small Renal Masses: Toward Real-Time Optical Diagnostic Biopsy. J. Urol. 2016, 195, 486–492.

- Puliatti, S.; Eissa, A.; Checcucci, E.; Piazza, P.; Amato, M.; Ferretti, S.; Scarcella, S.; Rivas, J.G.; Taratkin, M.; Marenco, J.; et al. New imaging technologies for robotic kidney cancer surgery. Asian J. Urol. 2022, 9, 253–262.

- Amparore, D.; Piramide, F.; De Cillis, S.; Verri, P.; Piana, A.; Pecoraro, A.; Burgio, M.; Manfredi, M.; Carbonara, U.; Marchioni, M.; et al. Robotic partial nephrectomy in 3D virtual reconstructions era: Is the paradigm changed? World J. Urol. 2022, 40, 659–670.

- Zadeh, S.M.; Francois, T.; Calvet, L.; Chauvet, P.; Canis, M.; Bartoli, A.; Bourdel, N. SurgAI: Deep learning for computerized laparoscopic image understanding in gynaecology. Surg. Endosc. 2020, 34, 5377–5383.

- Farinha, R.; Breda, A.; Porter, J.; Mottrie, A.; Van Cleynenbreugel, B.; Sloten, J.V.; Mottaran, A.; Gallagher, A.G. International Expert Consensus on Metric-based Characterization of Robot-assisted Partial Nephrectomy. Eur. Urol. Focus 2023, 9, 388–395.

- Farinha, R.; Breda, A.; Porter, J.; Mottrie, A.; Van Cleynenbreugel, B.; Sloten, J.V.; Mottaran, A.; Gallagher, A.G. Objective assessment of intraoperative skills for robot-assisted partial nephrectomy (RAPN). J. Robot. Surg. 2023, 17, 1401–1409.

- Collins, J.W.; Marcus, H.J.; Ghazi, A.; Sridhar, A.; Hashimoto, D.; Hager, G.; Arezzo, A.; Jannin, P.; Maier-Hein, L.; Marz, K.; et al. Ethical implications of AI in robotic surgical training: A Delphi consensus statement. Eur. Urol. Focus 2022, 8, 613–622.

- Brodie, A.; Dai, N.; Teoh, J.Y.-C.; Decaestecker, K.; Dasgupta, P.; Vasdev, N. Artificial intelligence in urological oncology: An update and future applications. Urol. Oncol. Semin. Orig. Investig. 2021, 39, 379–399.

- Varoquaux, G.; Cheplygina, V. Machine learning for medical imaging: Methodological failures and recommendations for the future. NPJ Digit. Med. 2022, 5, 48.

- Sarkar, A.; Yang, Y.; Vihinen, M. Variation benchmark datasets: Update, criteria, quality and applications. Database 2020, 2020, baz117.

- Chanchal, A.K.; Lal, S.; Kumar, R.; Kwak, J.T.; Kini, J. A novel dataset and efficient deep learning framework for automated grading of renal cell carcinoma from kidney histopathology images. Sci. Rep. 2023, 13, 5728.

- Heller, N.; Sathianathen, N.; Kalapara, A.; Walczak, E.; Moore, K.; Kaluzniak, H.; Rosenberg, J.; Blake, P.; Rengel, A.; Weight, C.; et al. The KiTS19 Challenge Data: 300 Kidney Tumor Cases with Clinical Context, CT Semantic Segmentations, and Surgical Outcomes. arXiv 2019, arXiv:1904.00445.

- Heller, N.; Isensee, F.; Trofimova, D.; Tejpaul, R.; Zhao, Z.; Chen, H.; Wang, L.; Golts, A.; Khapun, D.; Weight, C.; et al. The KiTS21 Challenge: Automatic segmentation of kidneys, renal tumors, and renal cysts in corticomedullary-phase CT. arXiv 2023, arXiv:2307.01984.

| Reference | No. of Cases | AI Tool | Study Summary | LE |
|---|---|---|---|---|
| Instruments and objects annotation/segmentation | | | | |
| Amir-Khalili A, et al., 2014 [30] | Dataset obtained from eight RAPN videos | Computer vision | Phase-based video magnification was used to automatically detect faint motion invisible to the human eye (produced by small blood vessels hidden within the fat around the renal hilum), thus facilitating the identification of renal vessels during surgery. | 5 |
| Amir-Khalili A, et al., 2015 [37] | Validation in 15 RAPN videos | Computer vision | Extending their previous study [30], the authors combined color and texture visual cues with pulsatile motion to automatically identify renal vessels (especially those concealed by fat) during minimally invasive partial nephrectomy. The area under the ROC curve for this technique was 0.72. | 5 |
| Nosrati M.S., et al., 2016 [31] | Dataset obtained from 15 RAPN videos | Machine learning (random decision forest) | Data from preoperative imaging (used to estimate the 3D pose and deformation of anatomical structures) were combined with color and texture visual cues from the endoscopic field for automatic real-time tissue tracking and identification of occluded structures. This technique improved structure identification by 45%. | 5 |
| Nakawala H, et al., 2019 [24] | 9 RAPN | Deep learning (CRNN and CNN-HMM) | Nine RAPN videos were split into 30 s clips, which were annotated and used to train the Deep-Onto model to recognize the surgical workflow of RAPN. The model identified 10 RAPN steps with an accuracy of 74.29%. | 5 |
| Nakawala H, et al., 2020 [26] | 9 RAPN | Deep learning (CNN, LSTM, and ILP) | Extending their previous work [24] on automatic analysis of the RAPN surgical workflow, the authors introduced new AI algorithms that use relational information between surgical entities to predict the current surgical step and the corresponding surgical step. | 5 |
| Casella A, et al., 2020 [27] | 8 RAPN videos | Deep learning (fully convolutional neural networks) | A 3D fully convolutional neural network was trained for renal vessel segmentation using 741,573 frames extracted from eight RAPN videos. A further 240 frames were used for validation and the remaining 240 frames for testing the algorithm. | 5 |
| De Backer P, et al., 2022 [35] | 82 RAPN videos | Deep learning | The authors presented a “bottom-up” framework for annotating surgical instruments in RAPN. The images annotated with this framework were then validated for use by a deep learning algorithm. | 5 |
| De Backer P, et al., 2023 [33] | 10 RAPN patients | Deep learning (ANN) | An ANN model was trained to annotate surgical instruments using 65,927 labeled instruments in 15,100 video frames obtained from 57 RAPN videos. The model aims at real-time identification of robotic instruments during augmented reality-guided RAPN. | 5 |
| Tissue tracking and 3D registration/augmented reality | | | | |
| Yip M.C., et al., 2012 [36] | Laparoscopic partial nephrectomy videos | Computer vision | Several computer vision methods were used for real-time tissue tracking during minimally invasive surgery, both in vitro (porcine models) and in vivo (laparoscopic partial nephrectomy videos), to provide more accurate registration of 3D models. | 5 |
| Zhang X, et al., 2019 [29] | 1062 images from 9 laparoscopic partial nephrectomies | Computer vision and deep learning (CNN) | Computer vision and machine learning were used to develop an automatic, markerless, deformable registration framework for laparoscopic partial nephrectomy. The proposed technique achieved automatic segmentation with an accuracy of 94.4%. | 5 |
| Gao Y, et al., 2021 [28] | 31 patients with giant RAML | Deep learning | Deep learning segmentation algorithms were used to help create 3D-printed models of patients with giant renal angiomyolipoma. Surgeries performed with the aid of these deep learning-based 3D-printed models were then compared with standard surgeries performed without models; the model-assisted surgeries resulted in higher rates of partial nephrectomy. | 3b |
| Padovan E, et al., 2022 [25] | 9 RAPN videos | Deep learning (CNN) | A segmentation CNN uses the RGB images from the endoscopic view to distinguish the different structures in the field. A rotation CNN then calculates the rotation values based on a rigid instrument or an organ. Finally, the outputs of both models are used to automatically register and orient the 3D model over the endoscopic field during RAPN. The authors reported good performance. | 5 |
| Amparore D, et al., 2022 [34] | 10 RAPN patients | Computer vision | During surgery, the kidney was super-enhanced with ICG to differentiate it from surrounding structures in the Firefly mode of the da Vinci robot. The AR model was registered using the kidney as the anchoring site (fine-tuning can be performed by a professional operator), followed by automatic anchoring during surgery. The technique was successfully applied to superimpose the 3D model over the kidney in seven RAPN cases with completely endophytic tumors. | 5 |
| Skills assessment | | | | |
| Wang Y, et al., 2023 [32] | 872 images from 150 segmented RAPN videos | Deep learning (multi-task CNN) | Segmented RAPN videos were first scored by human reviewers using GEARS and OSATS. A human reviewer then labeled each portion of the robotic instruments in a subset of videos, and these labels were used to train a semantic segmentation network. A multi-task CNN was used to predict the GEARS and OSATS scores. The model performed well in predicting force sensitivity and knowledge of instruments, but further training is required to improve its overall evaluation of surgical skills. | 5 |
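The vessel-identification studies by Amir-Khalili et al. [30,37] rely on the faint pulsatile motion of vessels hidden in perirenal fat, and [37] reports performance as the area under the ROC curve (0.72). The Python sketch below illustrates both ideas on a toy scale. It is not the authors' phase-based magnification pipeline: as a simplifying assumption, it scores each pixel by the fraction of its temporal intensity power that falls in an assumed cardiac band (0.8 to 2.0 Hz), then computes ROC AUC as the probability that a randomly chosen vessel pixel outscores a randomly chosen background pixel. The function names, the frequency band, and the synthetic video are hypothetical.

```python
import math
from typing import List, Sequence

# Hypothetical representation: a grayscale video indexed video[t][row][col].
Video = List[List[List[float]]]


def band_power_fraction(signal: Sequence[float], fps: float,
                        f_lo: float, f_hi: float) -> float:
    """Fraction of non-DC spectral power lying in [f_lo, f_hi] Hz.

    Uses a naive O(n^2) discrete Fourier transform so the sketch needs only
    the standard library; a real pipeline would use an FFT.
    """
    n = len(signal)
    mean = sum(signal) / n
    x = [s - mean for s in signal]  # remove the static (DC) intensity
    in_band = 0.0
    total = 0.0
    for k in range(1, n // 2 + 1):  # positive-frequency bins only
        freq = k * fps / n
        re = sum(x[t] * math.cos(2 * math.pi * k * t / n) for t in range(n))
        im = sum(x[t] * math.sin(2 * math.pi * k * t / n) for t in range(n))
        power = re * re + im * im
        total += power
        if f_lo <= freq <= f_hi:
            in_band += power
    return in_band / total if total > 0 else 0.0


def pulsatility_map(video: Video, fps: float,
                    f_lo: float = 0.8, f_hi: float = 2.0) -> List[List[float]]:
    """Score each pixel by the share of its temporal variation in the cardiac band.

    The default 0.8-2.0 Hz band (about 48-120 beats per minute) is an assumption.
    """
    rows, cols = len(video[0]), len(video[0][0])
    return [[band_power_fraction([frame[r][c] for frame in video], fps, f_lo, f_hi)
             for c in range(cols)]
            for r in range(rows)]


def roc_auc(scores: Sequence[float], labels: Sequence[bool]) -> float:
    """ROC AUC as P(random positive outscores random negative); ties count 0.5."""
    pos = [s for s, y in zip(scores, labels) if y]
    neg = [s for s, y in zip(scores, labels) if not y]
    if not pos or not neg:
        raise ValueError("need at least one positive and one negative sample")
    wins = 0.0
    for p in pos:
        for q in neg:
            if p > q:
                wins += 1.0
            elif p == q:
                wins += 0.5
    return wins / (len(pos) * len(neg))


if __name__ == "__main__":
    fps, n_frames, size = 30.0, 120, 4
    vessel = {(1, 1), (1, 2), (2, 1)}  # hypothetical ground-truth vessel pixels
    video = [[[100.0
               # slow, respiration-like motion (0.25 Hz) present everywhere
               + 2.0 * math.sin(2 * math.pi * 0.25 * t / fps)
               # 1.25 Hz (75 bpm) pulse only at vessel pixels
               + (math.sin(2 * math.pi * 1.25 * t / fps) if (r, c) in vessel else 0.0)
               for c in range(size)] for r in range(size)] for t in range(n_frames)]
    scores = pulsatility_map(video, fps)
    flat = [scores[r][c] for r in range(size) for c in range(size)]
    truth = [(r, c) in vessel for r in range(size) for c in range(size)]
    print(f"AUC = {roc_auc(flat, truth):.2f}")
```

On this synthetic clip each vessel pixel carries one fifth of its variance in the cardiac band while background pixels carry essentially none, so the demo prints `AUC = 1.00`. Real endoscopic video, with camera motion, specular highlights, and instrument occlusion, is far noisier, so such a clean separation should not be expected.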

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Share and Cite

Rodriguez Peñaranda, N.; Eissa, A.; Ferretti, S.; Bianchi, G.; Di Bari, S.; Farinha, R.; Piazza, P.; Checcucci, E.; Belenchón, I.R.; Veccia, A.; et al. Artificial Intelligence in Surgical Training for Kidney Cancer: A Systematic Review of the Literature. Diagnostics 2023, 13, 3070. https://doi.org/10.3390/diagnostics13193070

Rodriguez Peñaranda N, Eissa A, Ferretti S, Bianchi G, Di Bari S, Farinha R, Piazza P, Checcucci E, Belenchón IR, Veccia A, et al. Artificial Intelligence in Surgical Training for Kidney Cancer: A Systematic Review of the Literature. Diagnostics. 2023; 13(19):3070. https://doi.org/10.3390/diagnostics13193070

Chicago/Turabian Style: Rodriguez Peñaranda, Natali, Ahmed Eissa, Stefania Ferretti, Giampaolo Bianchi, Stefano Di Bari, Rui Farinha, Pietro Piazza, Enrico Checcucci, Inés Rivero Belenchón, Alessandro Veccia, and et al. 2023. "Artificial Intelligence in Surgical Training for Kidney Cancer: A Systematic Review of the Literature" Diagnostics 13, no. 19: 3070. https://doi.org/10.3390/diagnostics13193070