Deep Learning in Optical Coherence Tomography Angiography: Current Progress, Challenges, and Future Directions

Abstract

1. Introduction

2. Deep Learning-Based Algorithms for OCT-A Image Quality Control

2.1. Image Quality Grading

2.2. Image Reconstruction

3. Deep Learning-Based Algorithms for OCT-A Image Segmentation

3.1. Foveal Avascular Zone Area

3.2. Vessel Segmentation

3.3. Non-Perfusion Area

3.4. Neovascularization

4. Deep Learning-Based Algorithms for OCT-A Image Classification

4.1. The Classification of Artery and Vein

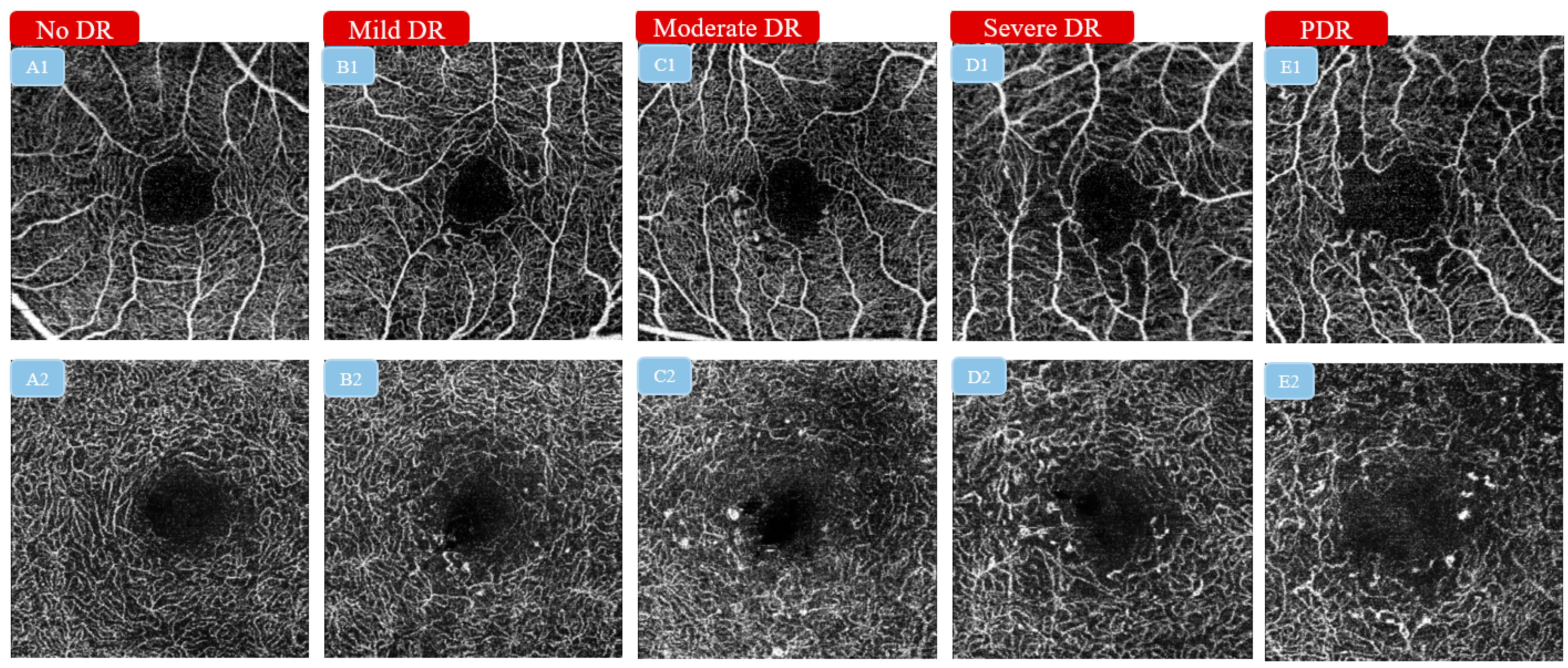

4.2. The Classification of Diabetic Retinopathy Severity

4.3. The Classification of the Presence or Absence of Diabetic Macular Ischemia

4.4. The Classification of Healthy Eyes and Glaucoma

5. Discussion

- The nomenclature of OCT-A metrics should be further standardized.
- The normal range of OCT-A metrics should be established.
- The training sample size should be expanded to avoid biased models.
- External testing should be performed with data privacy and security being fully addressed.
- Domain shift should also be handled properly among different OCT-A devices to increase model robustness.
- The value of using three-dimensional volumetric OCT-A scans is worth further exploration.
- The interpretability of the output from the DL algorithm should be further improved.
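
One of the points above calls for establishing the normal range of OCT-A metrics. As a minimal sketch of what such a range could look like, the snippet below derives a conventional 95% reference interval (mean ± 1.96 standard deviations) from measurements in healthy eyes. It assumes the metric is approximately normally distributed in the healthy population; the function names `reference_interval` and `is_within` and the toy values are hypothetical and do not come from any study cited here.

```python
import statistics
from typing import Sequence, Tuple


def reference_interval(values: Sequence[float], z: float = 1.96) -> Tuple[float, float]:
    """Return (low, high) = mean -/+ z * sample SD of healthy-eye measurements.

    Assumption: the metric is roughly normally distributed among healthy eyes.
    """
    if len(values) < 2:
        raise ValueError("need at least two measurements to estimate a spread")
    mean = statistics.mean(values)
    sd = statistics.stdev(values)  # sample standard deviation (n - 1 denominator)
    return mean - z * sd, mean + z * sd


def is_within(value: float, interval: Tuple[float, float]) -> bool:
    """Check whether a new measurement falls inside the reference interval."""
    low, high = interval
    return low <= value <= high


# Toy, made-up measurements purely for illustration (not real OCT-A data).
toy_values = [10.0, 12.0, 14.0]
interval = reference_interval(toy_values)
print(interval, is_within(12.0, interval))
```

In practice a skewed metric may call for a percentile-based interval instead, and a separate range would likely be needed per device and scan protocol, which ties in with the domain-shift point in the same list.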

6. Conclusions

7. Literature Search

| Authors, Year | Input | Output | Datasets | Imaging Device | Model | Data Set-Up | Performance | Visualization | Generalizability Validation |
|---|---|---|---|---|---|---|---|---|---|
| Image quality grading | | | | | | | | | |
| Lauermann et al., 2019 [24] | 3 × 3 mm² SCP | Sufficient vs. insufficient | (1) Training and validation: 80 images per group (2) Testing: 20 images per group | Optovue | CNN (TensorFlow) | Pre-training + training + testing | Training accuracy 97%, validation accuracy 100%, and cross entropy 0.12 | \ | \ |
| Yang et al., 2022 [25] | 3 × 3 mm² SCP and DCP | Ungradable; gradable but unmeasurable; gradable and measurable | (1) Training and validation: over 3500 SCP and DCP images each (2) Testing: 480 SCP and DCP images each | Triton and Optovue | CNN (DenseNet) | Training + tuning + primary validation + external validation | AUROC > 0.948 and AUPRC > 0.866 for the gradability assessment; AUROC > 0.960 and AUPRC > 0.822 for the measurability assessment | CAM | Two external validation datasets |
| Dhodapkar et al., 2022 [26] | 6 × 6 and 8 × 8 mm² SCP | High quality vs. low quality | (1) Training and validation: 347 SCP scans (2) Testing: 32 SCP scans | Zeiss | CNN (ResNet152) | Training + tuning + primary validation + external validation | AUROC = 0.99 (95% CI 0.98–1.00) for low-quality image identification and AUROC = 0.97 (95% CI 0.96–0.99) for high-quality image identification | CAM | One external validation dataset |
| Image reconstruction | | | | | | | | | |
| Gao et al., 2020 [28] | 3 × 3 and 6 × 6 mm² SVC | Reconstructed HR images | (1) Training: 210 paired 3 × 3 and 6 × 6 mm² SCP images (2) Testing: 88 paired 3 × 3 and 6 × 6 mm² SCP images | Optovue | CNN (self-developed architecture) | Training + testing | Significantly lower noise intensity, stronger contrast, and better vascular connectivity than the original images | Reconstructed HR 6 × 6 mm² SCP images | \ |
| Gao et al., 2021 [29] | 3 × 3 and 6 × 6 mm² ICP, DCP | Reconstructed HR images | (1) Training and validation: 173 paired 3 × 3 and 6 × 6 mm² ICP and DCP images (2) Testing: 101 paired 3 × 3 and 6 × 6 mm² ICP and DCP images | Optovue | CNN (self-developed architecture) | Training + validation + testing | Significantly reduced noise intensity, improved vascular connectivity, and enhanced Weber contrast when compared to the original images | Reconstructed HR 6 × 6 mm² ICP and DCP images | \ |
| Zhang et al., 2022 [30] | 3 × 3 mm² and 6 × 6 mm² SCP | Reconstructed HR images | (1) Training: 296 paired HR and LD images (2) Testing: 279 HR images | Optovue | GAN | Training + testing | Improved PSNR, SSIM, and normalized mutual information | Reconstructed HR 6 × 6 mm² SCP images | \ |
| FAZ segmentation | | | | | | | | | |
| Prentašić et al., 2016 [33] | 1 × 1 mm² images | Segmentation map | (1) Training: 2/3 of 80 images (2) Testing: 1/3 of 80 images | Unspecified prototype | CNN (self-developed architecture) | Three-fold cross-validation | A maximum mean accuracy of 0.83 when comparing the automated results with the manually segmented ones | FAZ segmentation map | \ |
| Mirshahi et al., 2021 [34] | 3 × 3 mm² images | Segmentation map | (1) Training and validation: 126 images (2) Testing: 37 images | Optovue | CNN (ResNet50) | Training + validation + testing | A mean DSC of 0.94 ± 0.04 when compared to the results produced by the device’s built-in software | FAZ segmentation map | \ |
| Guo et al., 2019 [35] | 3 × 3 mm² SCP | Segmentation map | (1) Training: 4/5 of 405 images (2) Testing: 1/5 of 405 images | Zeiss | CNN (U-Net) | Five-fold cross-validation | A maximum mean DSC of 0.976 ± 0.01 when comparing the automatic segmentation against the ground truth | FAZ segmentation map | \ |
| Vessel segmentation | | | | | | | | | |
| Ma et al., 2021 [38] | 3 × 3 mm² SVC, DVC angiograms | Segmentation map | (1) Training: 180 images from two datasets (2) Testing: 49 images from two datasets | Optovue | CNN (ResNet) | Training + testing | The proposed OCT-A-Net yielded better vessel segmentation performance than both traditional and other deep learning methods | Vessel segmentation map | \ |
| Liu et al., 2022 [39] | 3 × 3 mm² SVP | Segmentation map | (1) Training: 330 scans for disentanglement, 207 scans for segmentation (2) Testing: 124 scans for segmentation | Optovue; Cirrus; Triton; Heidelberg | CNN (self-developed architecture) | Training + testing | The proposed model achieved AUROC > 0.945, ACC > 0.924, kappa > 0.743, and DSC > 0.788 for vessel segmentation in different validation datasets | Vessel segmentation map | Three external validation datasets |
| Guo et al., 2021 [40] | 2 × 2 mm² volumetric scans | Segmentation map | (1) Training and validation: 76 cases (2) Testing: 12 cases | Unspecified | CNN (U-Net) | Training + validation + testing | F1 score > 90% for vessel segmentation in the SVP | Vessel segmentation map | \ |
| Non-perfusion area segmentation | | | | | | | | | |
| Nagasato et al., 2019 [43] | 3 × 3 mm² SCP and DCP | Distribution map | A total of 144 normal control and 174 RVO OCT-A images | Unspecified | CNN (VGG-16) | Eight-fold cross-validation | The mean AUROC, sensitivity, specificity, and average required time for distinguishing RVO OCT-A images with an NPA from normal OCT-A images were 0.986, 93.7%, 97.3%, and 176.9 s | CAM | \ |
| Guo et al., 2021 [44] | 6 × 6 mm² volumetric scans | Distribution map | A total of 978 volumetric OCT-A scans | Optovue | CNN (U-Net) | Five-fold cross-validation | A mean ± standard deviation F1 score of 0.78 ± 0.05 in nasal, 0.82 ± 0.07 in macular, and 0.78 ± 0.05 in temporal scans | NPA distribution map | \ |
| Neovascularization segmentation | | | | | | | | | |
| Wang et al., 2020 [46] | OCT-A volumetric scans | Segmentation map | (1) Training: 1566 scans (2) Testing: 110 scans | Optovue | CNN (self-developed architecture) | Training + testing | All CNV cases were distinguished from non-CNV controls with 100% sensitivity and 95% specificity. The mean intersection over union of CNV membrane segmentation was as high as 0.88 | Saliency map | \ |
| Thakoor et al., 2021 [47] | OCT-B scans and OCT-A volumetric scans | Non-AMD, non-neovascular AMD, and neovascular AMD | (1) Training and validation: 277 cubes/B scan images (2) Testing: 69 cubes/B scan images | Unspecified | CNN (self-developed architecture) | Training + validation + testing | The hybrid 3D–2D CNNs achieved accuracy up to 77.8% in multiclass categorical classification of non-AMD eyes, eyes having non-neovascular AMD, and eyes having neovascular AMD | CAM | \ |
| The classification of artery and vein | | | | | | | | | |
| Alam et al., 2020 [53] | 6 × 6 mm² OCT/OCT-A images | Artery–vein map | A total of 50 images | Optovue | CNN (U-Net) | Five-fold cross-validation | The AV-Net achieved an average accuracy of 86.71% for artery and 86.80% for vein on the test data; mean IoU was 70.72% and F1 score was 82.81% | Artery–vein map | \ |
| Gao et al., 2022 [54] | Montaged wide-field OCT-A | Artery–vein map | (1) Training: 240 angiograms (2) Testing: 302 angiograms | Optovue | CNN (U-Net) | Training + testing | For classification and identification of arteries, the algorithm achieved average sensitivity of 95.3%, specificity of 99.6%, F1 score of 94.2%, and IoU of 89.3%. For veins, the algorithm achieved average sensitivity of 94.4%, specificity of 99.7%, F1 score of 94.1%, and IoU of 89.2% | Artery–vein segmentation results | One external validation dataset |
| The classification of different DR severities | | | | | | | | | |
| Ryu et al., 2021 [55] | Both 3 × 3 and 6 × 6 mm² SCP and DCP | DR vs. non-DR; referable DR vs. non-referable DR | (1) Training: 240 sets of images (comprising both 3 × 3 and 6 × 6 mm²) (2) Testing: 120 sets | Optovue | CNN (ResNet) | Training + testing | The proposed CNN classifier achieved an accuracy of 91–98%, a sensitivity of 86–97%, a specificity of 94–99%, and AUROCs of 0.919–0.976 | CAM | \ |
| Le et al., 2020 [56] | 6 × 6 mm² SCP and DCP | Healthy, no DR, and DR eyes | (1) Training and validation: 131 OCT-A images (2) Testing: 46 OCT-A images | Optovue | CNN (VGG-16) | Training + internal validation + external testing | The cross-validation accuracy of the retrained classifier for differentiating among healthy, no DR, and DR eyes was 87.27%, with 83.76% sensitivity and 90.82% specificity. The AUC metrics for binary classification of healthy, no DR, and DR eyes were 0.97, 0.98, and 0.97, respectively | \ | One external testing dataset |
| Zang et al., 2021 [57] | 3 × 3 mm² images | Three-level classifiers | A total of 303 eyes from 250 participants | Optovue | CNN (self-developed architecture) | Ten-fold cross-validation | The overall classification accuracies of the three levels were 95.7%, 85.0%, and 71.0%, respectively | CAM | \ |
| The classification of the presence or absence of diabetic macular ischemia | | | | | | | | | |
| Yang et al., 2022 [25] | 3 × 3 mm² SCP and DCP | DMI vs. no DMI | (1) Training: 3307 SCP and 3135 DCP images (2) Testing: 421 SCP and 408 DCP images | Triton and Optovue | CNN (DenseNet) | Training + tuning + primary validation + external validation | For DMI detection, the DL system achieved AUROCs of 0.999 and 0.987 for SCP and DCP, respectively, in primary validation, and AUROCs > 0.939 in external datasets | CAM | Two external testing datasets |
| The classification of normal and glaucoma cases | | | | | | | | | |
| Bowd et al., 2022 [61] | 4.5 × 4.5 mm² ONH | Glaucoma vs. no glaucoma | A total of 130 eyes of 80 healthy individuals and 275 eyes of 185 glaucoma patients | Optovue | CNN (VGG-16) | Five-fold cross-validation | The adjusted AUPRC using CNN analysis of en face vessel density images was 0.97 (95% CI: 0.95–0.99) | \ | \ |
| Schottenhamml et al., 2021 [62] | 3 × 3 mm² SVC, ICP, and DCP | Glaucoma vs. no glaucoma | 259 eyes of 199 subjects: 75 eyes of 74 healthy subjects and 184 eyes of 125 glaucoma patients | Heidelberg | CNN (DenseNet and ResNet) | Five-fold cross-validation | The DL model attained an AUROC of 0.967 on the SVP projection for differentiating glaucoma patients, which is comparable to the best reported values in the literature | CAM | \ |
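
Several of the segmentation studies in the table report the Dice similarity coefficient (DSC) and the intersection over union (IoU). As a minimal sketch of how these two overlap metrics are commonly computed for a binary segmentation map, the snippet below compares a predicted mask against a reference mask. The function names and the tiny toy masks are hypothetical, and the cited studies may differ in details such as thresholding or averaging across images.

```python
from typing import Sequence, Tuple

Mask = Sequence[Sequence[int]]  # 2D binary mask: 1 = structure, 0 = background


def overlap_counts(pred: Mask, truth: Mask) -> Tuple[int, int, int]:
    """Count true-positive, false-positive, and false-negative pixels."""
    if len(pred) != len(truth) or any(len(p) != len(t) for p, t in zip(pred, truth)):
        raise ValueError("masks must have the same shape")
    tp = fp = fn = 0
    for pred_row, truth_row in zip(pred, truth):
        for p, t in zip(pred_row, truth_row):
            if p and t:
                tp += 1
            elif p:
                fp += 1
            elif t:
                fn += 1
    return tp, fp, fn


def dice(pred: Mask, truth: Mask) -> float:
    """DSC = 2*TP / (2*TP + FP + FN); defined as 1.0 when both masks are empty."""
    tp, fp, fn = overlap_counts(pred, truth)
    denom = 2 * tp + fp + fn
    return 1.0 if denom == 0 else 2 * tp / denom


def iou(pred: Mask, truth: Mask) -> float:
    """IoU = TP / (TP + FP + FN); defined as 1.0 when both masks are empty."""
    tp, fp, fn = overlap_counts(pred, truth)
    denom = tp + fp + fn
    return 1.0 if denom == 0 else tp / denom


# Toy masks for illustration only (not real OCT-A data).
predicted = [[1, 1, 0],
             [0, 1, 0]]
reference = [[1, 0, 0],
             [0, 1, 1]]
print(dice(predicted, reference), iou(predicted, reference))
```

For a single pair of masks the two metrics are related by DSC = 2·IoU / (1 + IoU), so DSC is never lower than IoU. This is worth keeping in mind when comparing studies that report one metric but not the other.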

Funding

Conflicts of Interest

References

1. Spaide, R.F.; Fujimoto, J.G.; Waheed, N.K.; Sadda, S.R.; Staurenghi, G. Optical coherence tomography angiography. Prog. Retin. Eye Res. 2018, 64, 1–55.
2. Sun, Z.; Yang, D.; Tang, Z.; Ng, D.S.; Cheung, C.Y. Optical coherence tomography angiography in diabetic retinopathy: An updated review. Eye 2021, 35, 149–161.
3. Cavichini, M.; Dans, K.C.; Jhingan, M.; Amador-Patarroyo, M.J.; Borooah, S.; Bartsch, D.U.; Nudleman, E.; Freeman, W.R. Evaluation of the clinical utility of optical coherence tomography angiography in age-related macular degeneration. Br. J. Ophthalmol. 2021, 105, 983–988.
4. Wang, Y.M.; Shen, R.; Lin, T.P.H.; Chan, P.P.; Wong, M.O.M.; Chan, N.C.Y.; Tang, F.; Lam, A.K.N.; Leung, D.Y.L.; Tham, C.C.Y.; et al. Optical coherence tomography angiography metrics predict normal tension glaucoma progression. Acta Ophthalmol. 2022, 100, e1455–e1462.
5. Yeung, L.; Wu, W.C.; Chuang, L.H.; Wang, N.K.; Lai, C.C. Novel Optical Coherence Tomography Angiography Biomarker in Branch Retinal Vein Occlusion Macular Edema. Retina 2019, 39, 1906–1916.
6. Borrelli, E.; Battista, M.; Sacconi, R.; Querques, G.; Bandello, F. Optical Coherence Tomography Angiography in Diabetes. Asia Pac. J. Ophthalmol. 2021, 10, 20–25.
7. Yang, D.W.; Tang, Z.Q.; Tang, F.Y.; Szeto, S.K.; Chan, J.; Yip, F.; Wong, C.Y.; Ran, A.R.; Lai, T.Y.; Cheung, C.Y. Clinically relevant factors associated with a binary outcome of diabetic macular ischaemia: An OCTA study. Br. J. Ophthalmol. 2022; Epub ahead of print.
8. Tang, F.Y.; Chan, E.O.; Sun, Z.; Wong, R.; Lok, J.; Szeto, S.; Chan, J.C.; Lam, A.; Tham, C.C.; Ng, D.S.; et al. Clinically relevant factors associated with quantitative optical coherence tomography angiography metrics in deep capillary plexus in patients with diabetes. Eye Vis. 2020, 7, 7.
9. Tang, F.Y.; Ng, D.S.; Lam, A.; Luk, F.; Wong, R.; Chan, C.; Mohamed, S.; Fong, A.; Lok, J.; Tso, T.; et al. Determinants of Quantitative Optical Coherence Tomography Angiography Metrics in Patients with Diabetes. Sci. Rep. 2017, 7, 2575.
10. Sun, Z.; Tang, F.; Wong, R.; Lok, J.; Szeto, S.K.H.; Chan, J.C.K.; Chan, C.K.M.; Tham, C.C.; Ng, D.S.; Cheung, C.Y. OCT Angiography Metrics Predict Progression of Diabetic Retinopathy and Development of Diabetic Macular Edema: A Prospective Study. Ophthalmology 2019, 126, 1675–1684.
11. Guo, X.; Chen, Y.; Bulloch, G.; Xiong, K.; Chen, Y.; Li, Y.; Liao, H.; Huang, W.; Zhu, Z.; Wang, W.; et al. Parapapillary choroidal microvasculature predicts diabetic retinopathy progression and diabetic macular edema development: A three-year prospective study. Am. J. Ophthalmol. 2023, 245, 164–173.
12. Esteva, A.; Robicquet, A.; Ramsundar, B.; Kuleshov, V.; DePristo, M.; Chou, K.; Cui, C.; Corrado, G.; Thrun, S.; Dean, J. A guide to deep learning in healthcare. Nat. Med. 2019, 25, 24–29.
13. De Fauw, J.; Ledsam, J.R.; Romera-Paredes, B.; Nikolov, S.; Tomasev, N.; Blackwell, S.; Askham, H.; Glorot, X.; O’Donoghue, B.; Visentin, D.; et al. Clinically applicable deep learning for diagnosis and referral in retinal disease. Nat. Med. 2018, 24, 1342–1350.
14. LeCun, Y.; Bengio, Y.; Hinton, G. Deep learning. Nature 2015, 521, 436–444.
15. He, J.; Baxter, S.L.; Xu, J.; Xu, J.; Zhou, X.; Zhang, K. The practical implementation of artificial intelligence technologies in medicine. Nat. Med. 2019, 25, 30–36.
16. Ting, D.S.W.; Peng, L.; Varadarajan, A.V.; Keane, P.A.; Burlina, P.M.; Chiang, M.F.; Schmetterer, L.; Pasquale, L.R.; Bressler, N.M.; Webster, D.R.; et al. Deep learning in ophthalmology: The technical and clinical considerations. Prog. Retin. Eye Res. 2019, 72, 100759.
17. Ting, D.S.W.; Pasquale, L.R.; Peng, L.; Campbell, J.P.; Lee, A.Y.; Raman, R.; Tan, G.S.W.; Schmetterer, L.; Keane, P.A.; Wong, T.Y. Artificial intelligence and deep learning in ophthalmology. Br. J. Ophthalmol. 2019, 103, 167–175.
18. Ting, D.S.W.; Cheung, C.Y.; Lim, G.; Tan, G.S.W.; Quang, N.D.; Gan, A.; Hamzah, H.; Garcia-Franco, R.; San Yeo, I.Y.; Lee, S.Y.; et al. Development and Validation of a Deep Learning System for Diabetic Retinopathy and Related Eye Diseases Using Retinal Images From Multiethnic Populations with Diabetes. JAMA 2017, 318, 2211–2223.
19. Tang, F.; Wang, X.; Ran, A.R.; Chan, C.K.M.; Ho, M.; Yip, W.; Young, A.L.; Lok, J.; Szeto, S.; Chan, J.; et al. A Multitask Deep-Learning System to Classify Diabetic Macular Edema for Different Optical Coherence Tomography Devices: A Multicenter Analysis. Diabetes Care 2021, 44, 2078–2088.
20. Yim, J.; Chopra, R.; Spitz, T.; Winkens, J.; Obika, A.; Kelly, C.; Askham, H.; Lukic, M.; Huemer, J.; Fasler, K.; et al. Predicting conversion to wet age-related macular degeneration using deep learning. Nat. Med. 2020, 26, 892–899.
21. Ran, A.R.; Cheung, C.Y.; Wang, X.; Chen, H.; Luo, L.Y.; Chan, P.P.; Wong, M.O.M.; Chang, R.T.; Mannil, S.S.; Young, A.L.; et al. Detection of glaucomatous optic neuropathy with spectral-domain optical coherence tomography: A retrospective training and validation deep-learning analysis. Lancet Digit. Health 2019, 1, e172–e182.
22. Lujan, B.J.; Calhoun, C.T.; Glassman, A.R.; Googe, J.M.; Jampol, L.M.; Melia, M.; Schlossman, D.K.; Sun, J.K.; DRCR Retina Network. Optical Coherence Tomography Angiography Quality Across Three Multicenter Clinical Studies of Diabetic Retinopathy. Transl. Vis. Sci. Technol. 2021, 10, 2.
23. Holmen, I.C.; Konda, S.M.; Pak, J.W.; McDaniel, K.W.; Blodi, B.; Stepien, K.E.; Domalpally, A. Prevalence and Severity of Artifacts in Optical Coherence Tomographic Angiograms. JAMA Ophthalmol. 2020, 138, 119–126.
24. Lauermann, J.L.; Treder, M.; Alnawaiseh, M.; Clemens, C.R.; Eter, N.; Alten, F. Automated OCT angiography image quality assessment using a deep learning algorithm. Graefes Arch. Clin. Exp. Ophthalmol. 2019, 257, 1641–1648.
25. Yang, H.; Chen, C.; Jiang, M.; Liu, Q.; Cao, J.; Heng, P.A.; Dou, Q. DLTTA: Dynamic Learning Rate for Test-time Adaptation on Cross-domain Medical Images. IEEE Trans. Med. Imaging 2022, 41, 3575–3586.
26. Dhodapkar, R.M.; Li, E.; Nwanyanwu, K.; Adelman, R.; Krishnaswamy, S.; Wang, J.C. Deep learning for quality assessment of optical coherence tomography angiography images. Sci. Rep. 2022, 12, 13775.
27. Zhu, Y.; Cui, Y.; Wang, J.C.; Lu, Y.; Zeng, R.; Katz, R.; Wu, D.M.; Eliott, D.; Vavvas, D.G.; Husain, D.; et al. Different Scan Protocols Affect the Detection Rates of Diabetic Retinopathy Lesions by Wide-Field Swept-Source Optical Coherence Tomography Angiography. Am. J. Ophthalmol. 2020, 215, 72–80.
28. Gao, M.; Guo, Y.; Hormel, T.T.; Sun, J.; Hwang, T.S.; Jia, Y. Reconstruction of high-resolution 6×6-mm OCT angiograms using deep learning. Biomed. Opt. Express 2020, 11, 3585–3600.
29. Gao, M.; Hormel, T.T.; Wang, J.; Guo, Y.; Bailey, S.T.; Hwang, T.S.; Jia, Y. An Open-Source Deep Learning Network for Reconstruction of High-Resolution OCT Angiograms of Retinal Intermediate and Deep Capillary Plexuses. Transl. Vis. Sci. Technol. 2021, 10, 13.
30. Zhang, W.; Yang, D.; Cheung, C.Y.; Chen, H. Frequency-Aware Inverse-Consistent Deep Learning for OCT-Angiogram Super-Resolution. In Proceedings of the Medical Image Computing and Computer Assisted Intervention—MICCAI 2022, Singapore, 18–22 September 2022; pp. 645–655.
31. Lee, J.; Moon, B.G.; Cho, A.R.; Yoon, Y.H. Optical Coherence Tomography Angiography of DME and Its Association with Anti-VEGF Treatment Response. Ophthalmology 2016, 123, 2368–2375.
32. Balaratnasingam, C.; Inoue, M.; Ahn, S.; McCann, J.; Dhrami-Gavazi, E.; Yannuzzi, L.A.; Freund, K.B. Visual Acuity Is Correlated with the Area of the Foveal Avascular Zone in Diabetic Retinopathy and Retinal Vein Occlusion. Ophthalmology 2016, 123, 2352–2367.
33. Prentašić, P.; Heisler, M.; Mammo, Z.; Lee, S.; Merkur, A.; Navajas, E.; Beg, M.F.; Sarunic, M.; Lončarić, S. Segmentation of the foveal microvasculature using deep learning networks. J. Biomed. Opt. 2016, 21, 75008.
34. Mirshahi, R.; Anvari, P.; Riazi-Esfahani, H.; Sardarinia, M.; Naseripour, M.; Falavarjani, K.G. Foveal avascular zone segmentation in optical coherence tomography angiography images using a deep learning approach. Sci. Rep. 2021, 11, 1031.
35. Guo, M.; Zhao, M.; Cheong, A.M.Y.; Dai, H.; Lam, A.K.C.; Zhou, Y. Automatic quantification of superficial foveal avascular zone in optical coherence tomography angiography implemented with deep learning. Vis. Comput. Ind. Biomed. Art 2019, 2, 21.
36. Selvam, S.; Kumar, T.; Fruttiger, M. Retinal vasculature development in health and disease. Prog. Retin. Eye Res. 2018, 63, 1–19.
37. Wagner, S.K.; Fu, D.J.; Faes, L.; Liu, X.; Huemer, J.; Khalid, H.; Ferraz, D.; Korot, E.; Kelly, C.; Balaskas, K.; et al. Insights into Systemic Disease through Retinal Imaging-Based Oculomics. Transl. Vis. Sci. Technol. 2020, 9, 6.
38. Ma, Y.; Hao, H.; Xie, J.; Fu, H.; Zhang, J.; Yang, J.; Wang, Z.; Liu, J.; Zheng, Y.; Zhao, Y. ROSE: A Retinal OCT-Angiography Vessel Segmentation Dataset and New Model. IEEE Trans. Med. Imaging 2021, 40, 928–939.
39. Liu, Y.; Carass, A.; Zuo, L.; He, Y.; Han, S.; Gregori, L.; Murray, S.; Mishra, R.; Lei, J.; Calabresi, P.A.; et al. Disentangled Representation Learning for OCTA Vessel Segmentation with Limited Training Data. IEEE Trans. Med. Imaging 2022, 41, 3686–3698.
40. Guo, Y.; Hormel, T.T.; Pi, S.; Wei, X.; Gao, M.; Morrison, J.C.; Jia, Y. An end-to-end network for segmenting the vasculature of three retinal capillary plexuses from OCT angiographic volumes. Biomed. Opt. Express 2021, 12, 4889–4900.
41. Sim, D.A.; Keane, P.A.; Zarranz-Ventura, J.; Fung, S.; Powner, M.B.; Platteau, E.; Bunce, C.V.; Fruttiger, M.; Patel, P.J.; Tufail, A.; et al. The effects of macular ischemia on visual acuity in diabetic retinopathy. Investig. Ophthalmol. Vis. Sci. 2013, 54, 2353–2360.
42. Cheung, C.M.G.; Fawzi, A.; Teo, K.Y.; Fukuyama, H.; Sen, S.; Tsai, W.S.; Sivaprasad, S. Diabetic macular ischaemia—A new therapeutic target? Prog. Retin. Eye Res. 2022, 89, 101033.
43. Nagasato, D.; Tabuchi, H.; Masumoto, H.; Enno, H.; Ishitobi, N.; Kameoka, M.; Niki, M.; Mitamura, Y. Automated detection of a nonperfusion area caused by retinal vein occlusion in optical coherence tomography angiography images using deep learning. PLoS ONE 2019, 14, e0223965.
44. Guo, Y.; Hormel, T.T.; Gao, L.; You, Q.; Wang, B.; Flaxel, C.J.; Bailey, S.T.; Choi, D.; Huang, D.; Hwang, T.S.; et al. Quantification of Nonperfusion Area in Montaged Widefield OCT Angiography Using Deep Learning in Diabetic Retinopathy. Ophthalmol. Sci. 2021, 1, 100027.
45. Jian, L.; Panpan, Y.; Wen, X. Current choroidal neovascularization treatment. Ophthalmologica 2013, 230, 55–61.
46. Wang, J.; Hormel, T.T.; Gao, L.; Zang, P.; Guo, Y.; Wang, X.; Bailey, S.T.; Jia, Y. Automated diagnosis and segmentation of choroidal neovascularization in OCT angiography using deep learning. Biomed. Opt. Express 2020, 11, 927–944.
47. Thakoor, K.; Bordbar, D.; Yao, J.; Moussa, O.; Chen, R.; Sajda, P. Hybrid 3D-2D Deep Learning for Detection of Neovascular Age-Related Macular Degeneration Using Optical Coherence Tomography B-Scans and Angiography Volumes. In Proceedings of the 2021 IEEE 18th International Symposium on Biomedical Imaging (ISBI), Nice, France, 13–16 April 2021; pp. 1600–1604.
48. Woo, S.C.; Lip, G.Y.; Lip, P.L. Associations of retinal artery occlusion and retinal vein occlusion to mortality, stroke, and myocardial infarction: A systematic review. Eye 2016, 30, 1031–1038.
49. Cheung, C.Y.; Biousse, V.; Keane, P.A.; Schiffrin, E.L.; Wong, T.Y. Hypertensive eye disease. Nat. Rev. Dis. Prim. 2022, 8, 14.
50. Cheung, C.Y.; Ikram, M.K.; Sabanayagam, C.; Wong, T.Y. Retinal microvasculature as a model to study the manifestations of hypertension. Hypertension 2012, 60, 1094–1103.
51. Cheung, C.Y.; Sabanayagam, C.; Law, A.K.; Kumari, N.; Ting, D.S.; Tan, G.; Mitchell, P.; Cheng, C.Y.; Wong, T.Y. Retinal vascular geometry and 6 year incidence and progression of diabetic retinopathy. Diabetologia 2017, 60, 1770–1781.
52. Cheung, C.Y.; Ikram, M.K.; Klein, R.; Wong, T.Y. The clinical implications of recent studies on the structure and function of the retinal microvasculature in diabetes. Diabetologia 2015, 58, 871–885.
53. Alam, M.; Le, D.; Son, T.; Lim, J.I.; Yao, X. AV-Net: Deep learning for fully automated artery-vein classification in optical coherence tomography angiography. Biomed. Opt. Express 2020, 11, 5249–5257.
54. Gao, M.; Guo, Y.; Hormel, T.T.; Tsuboi, K.; Pacheco, G.; Poole, D.; Bailey, S.T.; Flaxel, C.J.; Huang, D.; Hwang, T.S.; et al. A Deep Learning Network for Classifying Arteries and Veins in Montaged Widefield OCT Angiograms. Ophthalmol. Sci. 2022, 2, 100149.
55. Ryu, G.; Lee, K.; Park, D.; Park, S.H.; Sagong, M. A deep learning model for identifying diabetic retinopathy using optical coherence tomography angiography. Sci. Rep. 2021, 11, 23024.
56. Le, D.; Alam, M.; Yao, C.K.; Lim, J.I.; Hsieh, Y.T.; Chan, R.V.P.; Toslak, D.; Yao, X. Transfer Learning for Automated OCTA Detection of Diabetic Retinopathy. Transl. Vis. Sci. Technol. 2020, 9, 35.
57. Zang, P.; Gao, L.; Hormel, T.T.; Wang, J.; You, Q.; Hwang, T.S.; Jia, Y. DcardNet: Diabetic Retinopathy Classification at Multiple Levels Based on Structural and Angiographic Optical Coherence Tomography. IEEE Trans. Biomed. Eng. 2021, 68, 1859–1870.
58. Sun, J.K.; Aiello, L.P.; Abramoff, M.D.; Antonetti, D.A.; Dutta, S.; Pragnell, M.; Levine, S.R.; Gardner, T.W. Updating the Staging System for Diabetic Retinal Disease. Ophthalmology 2021, 128, 490–493.
59. Jia, Y.; Wei, E.; Wang, X.; Zhang, X.; Morrison, J.C.; Parikh, M.; Lombardi, L.H.; Gattey, D.M.; Armour, R.L.; Edmunds, B.; et al. Optical coherence tomography angiography of optic disc perfusion in glaucoma. Ophthalmology 2014, 121, 1322–1332.
60. Lin, T.P.H.; Wang, Y.M.; Ho, K.; Wong, C.Y.K.; Chan, P.P.; Wong, M.O.M.; Chan, N.C.Y.; Tang, F.; Lam, A.; Leung, D.Y.L.; et al. Global assessment of arteriolar, venular and capillary changes in normal tension glaucoma. Sci. Rep. 2020, 10, 19222.
61. Bowd, C.; Belghith, A.; Zangwill, L.M.; Christopher, M.; Goldbaum, M.H.; Fan, R.; Rezapour, J.; Moghimi, S.; Kamalipour, A.; Hou, H.; et al. Deep Learning Image Analysis of Optical Coherence Tomography Angiography Measured Vessel Density Improves Classification of Healthy and Glaucoma Eyes. Am. J. Ophthalmol. 2022, 236, 298–308.
62. Schottenhamml, J.; Wurfl, T.; Mardin, S.; Ploner, S.B.; Husvogt, L.; Hohberger, B.; Lammer, R.; Mardin, C.; Maier, A. Glaucoma classification in 3 × 3 mm en face macular scans using deep learning in a different plexus. Biomed. Opt. Express 2021, 12, 7434–7444.
63. Hsiao, C.C.; Hsu, H.M.; Yang, C.M.; Yang, C.H. Correlation of retinal vascular perfusion density with dark adaptation in diabetic retinopathy. Graefes Arch. Clin. Exp. Ophthalmol. 2019, 257, 1401–1410.
64. Lommatzsch, C.; Rothaus, K.; Koch, J.M.; Heinz, C.; Grisanti, S. OCTA vessel density changes in the macular zone in glaucomatous eyes. Graefes Arch. Clin. Exp. Ophthalmol. 2018, 256, 1499–1508.
65. Munk, M.R.; Giannakaki-Zimmermann, H.; Berger, L.; Huf, W.; Ebneter, A.; Wolf, S.; Zinkernagel, M.S. OCT-angiography: A qualitative and quantitative comparison of 4 OCT-A devices. PLoS ONE 2017, 12, e0177059.
66. Munk, M.R.; Kashani, A.H.; Tadayoni, R.; Korobelnik, J.F.; Wolf, S.; Pichi, F.; Tian, M. Standardization of OCT Angiography Nomenclature in Retinal Vascular Diseases: First Survey Results. Ophthalmol. Retina 2021, 5, 981–990.
67. Pichi, F.; Salas, E.C.; de Smet, M.D.; Gupta, V.; Zierhut, M.; Munk, M.R. Standardisation of optical coherence tomography angiography nomenclature in uveitis: First survey results. Br. J. Ophthalmol. 2021, 105, 941–947.
68. Jung, J.J.; Lim, S.Y.; Chan, X.; Sadda, S.R.; Hoang, Q.V. Correlation of Diabetic Disease Severity to Degree of Quadrant Asymmetry in En Face OCTA Metrics. Investig. Ophthalmol. Vis. Sci. 2022, 63, 12.
69. Zhang, B.; Chou, Y.; Zhao, X.; Yang, J.; Chen, Y. Early Detection of Microvascular Impairments with Optical Coherence Tomography Angiography in Diabetic Patients without Clinical Retinopathy: A Meta-analysis. Am. J. Ophthalmol. 2021, 222, 226–237.
70. Chlap, P.; Min, H.; Vandenberg, N.; Dowling, J.; Holloway, L.; Haworth, A. A review of medical image data augmentation techniques for deep learning applications. J. Med. Imaging Radiat. Oncol. 2021, 65, 545–563.
71. Kermany, D.S.; Goldbaum, M.; Cai, W.; Valentim, C.C.S.; Liang, H.; Baxter, S.L.; McKeown, A.; Yang, G.; Wu, X.; Yan, F.; et al. Identifying Medical Diagnoses and Treatable Diseases by Image-Based Deep Learning. Cell 2018, 172, 1122–1131.
72. Burlina, P.; Paul, W.; Mathew, P.; Joshi, N.; Pacheco, K.D.; Bressler, N.M. Low-Shot Deep Learning of Diabetic Retinopathy with Potential Applications to Address Artificial Intelligence Bias in Retinal Diagnostics and Rare Ophthalmic Diseases. JAMA Ophthalmol. 2020, 138, 1070–1077.
73. Ng, D.; Lan, X.; Yao, M.M.; Chan, W.P.; Feng, M. Federated learning: A collaborative effort to achieve better medical imaging models for individual sites that have small labelled datasets. Quant. Imaging Med. Surg. 2021, 11, 852–857.
74. Liu, J.; Liu, H.; Gong, S.; Tang, Z.; Xie, Y.; Yin, H.; Niyoyita, J.P. Automated cardiac segmentation of cross-modal medical images using unsupervised multi-domain adaptation and spatial neural attention structure. Med. Image Anal. 2021, 72, 102135.
75. Armanious, K.; Jiang, C.; Fischer, M.; Kustner, T.; Hepp, T.; Nikolaou, K.; Gatidis, S.; Yang, B. MedGAN: Medical image translation using GANs. Comput. Med. Imaging Graph. 2020, 79, 101684.
76. Guo, Y.; Hormel, T.T.; Xiong, H.; Wang, J.; Hwang, T.S.; Jia, Y. Automated Segmentation of Retinal Fluid Volumes from Structural and Angiographic Optical Coherence Tomography Using Deep Learning. Transl. Vis. Sci. Technol. 2020, 9, 54.
77. Li, Y.F.; Guo, L.Z.; Zhou, Z.H. Towards Safe Weakly Supervised Learning. IEEE Trans. Pattern Anal. Mach. Intell. 2021, 43, 334–346.
78. Huff, D.T.; Weisman, A.J.; Jeraj, R. Interpretation and visualization techniques for deep learning models in medical imaging. Phys. Med. Biol. 2021, 66, 04TR01.
79. Fuhrman, J.D.; Gorre, N.; Hu, Q.; Li, H.; El Naqa, I.; Giger, M.L. A review of explainable and interpretable AI with applications in COVID-19 imaging. Med. Phys. 2022, 49, 1–14.

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Share and Cite

Yang, D.; Ran, A.R.; Nguyen, T.X.; Lin, T.P.H.; Chen, H.; Lai, T.Y.Y.; Tham, C.C.; Cheung, C.Y. Deep Learning in Optical Coherence Tomography Angiography: Current Progress, Challenges, and Future Directions. Diagnostics 2023, 13, 326. https://doi.org/10.3390/diagnostics13020326