Hybrid Deep Learning Approach for Accurate Tumor Detection in Medical Imaging Data

Abstract

1. Introduction

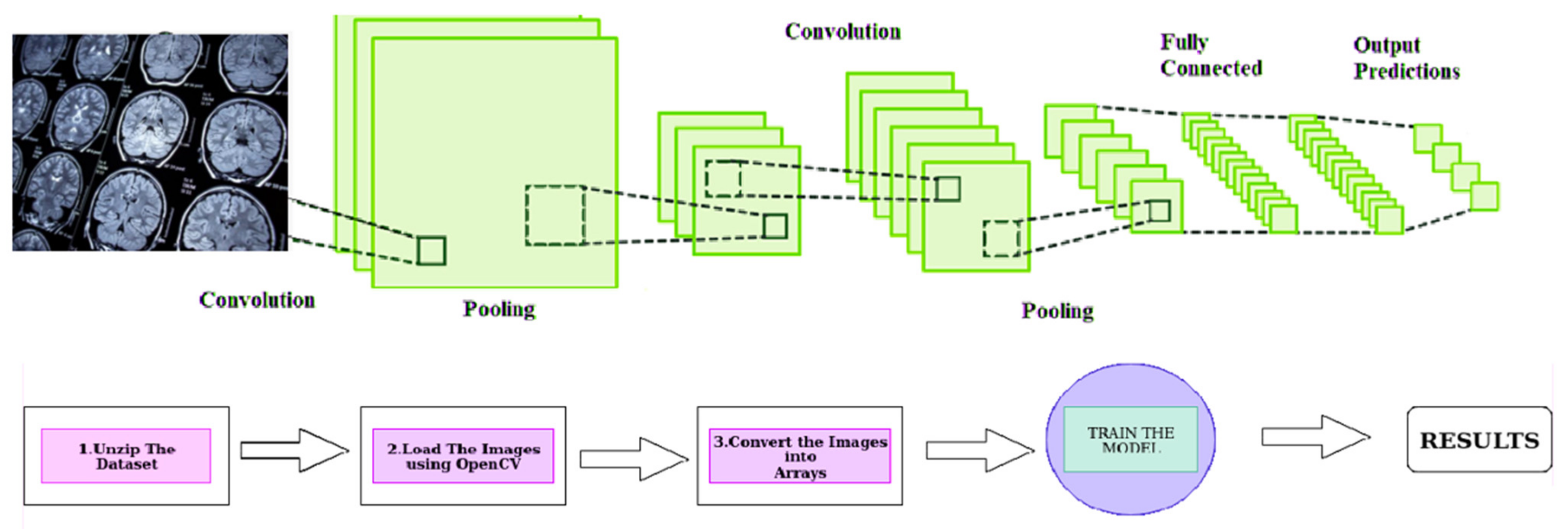

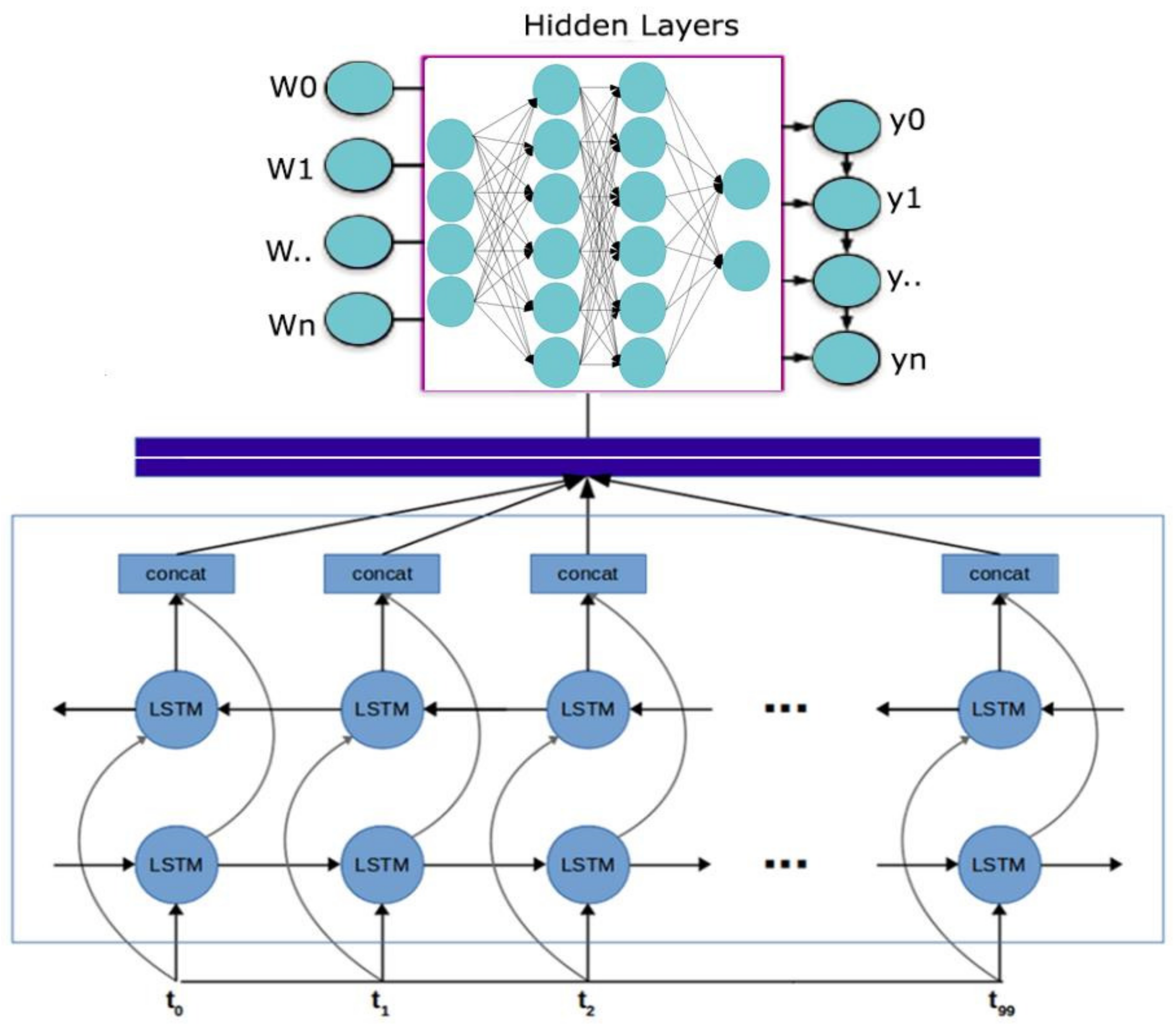

- This research paper proposes a novel approach to tumor-related medical event extraction that uses Generative Adversarial Networks (GANs) for data augmentation and pseudo-data generation.

- The proposed technique comprises a pre-processing stage and a model-training stage, and uses a Convolutional Neural Network (CNN) with a multi-head attention mechanism to improve the accuracy and generalizability of medical event extraction.

- The pre-processing stage cleanses the data, normalizes the text, and generates pseudo-data to augment the training set, so that the data used for model training is consistent and of high quality.
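The cleansing and normalization steps above are not specified in detail here. A minimal Python sketch, assuming that "cleansing" and "normalizing" mean Unicode NFKC normalization, removal of control characters, whitespace collapsing, and exact-duplicate removal (the function names `normalize_clinical_text` and `deduplicate_records` are hypothetical, not the authors' code), might look like this:

```python
import re
import unicodedata
from typing import Iterable, List


def normalize_clinical_text(text: str) -> str:
    """Hypothetical normalization of one clinical record (illustrative only)."""
    # NFKC folds full-width letters, digits and punctuation to their standard forms.
    text = unicodedata.normalize("NFKC", text)
    # Drop control/format and other "C*" category characters, but keep newlines and tabs.
    text = "".join(
        ch for ch in text if ch in "\n\t" or not unicodedata.category(ch).startswith("C")
    )
    # Collapse runs of spaces/tabs and trim each line.
    lines = [re.sub(r"[ \t]+", " ", line).strip() for line in text.split("\n")]
    # Remove lines that became empty after cleansing.
    return "\n".join(line for line in lines if line)


def deduplicate_records(records: Iterable[str]) -> List[str]:
    """Normalize records and keep only the first copy of each non-empty one."""
    seen = set()
    cleaned = []
    for record in records:
        norm = normalize_clinical_text(record)
        if norm and norm not in seen:
            seen.add(norm)
            cleaned.append(norm)
    return cleaned
```

For example, `normalize_clinical_text("\uff23\uff34  shows\t\tmass")` returns `"CT shows mass"`, because the full-width "ＣＴ" is folded to ASCII and the repeated whitespace is collapsed. Real clinical pipelines usually add domain-specific rules (abbreviation expansion, de-identification), which are outside this sketch.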

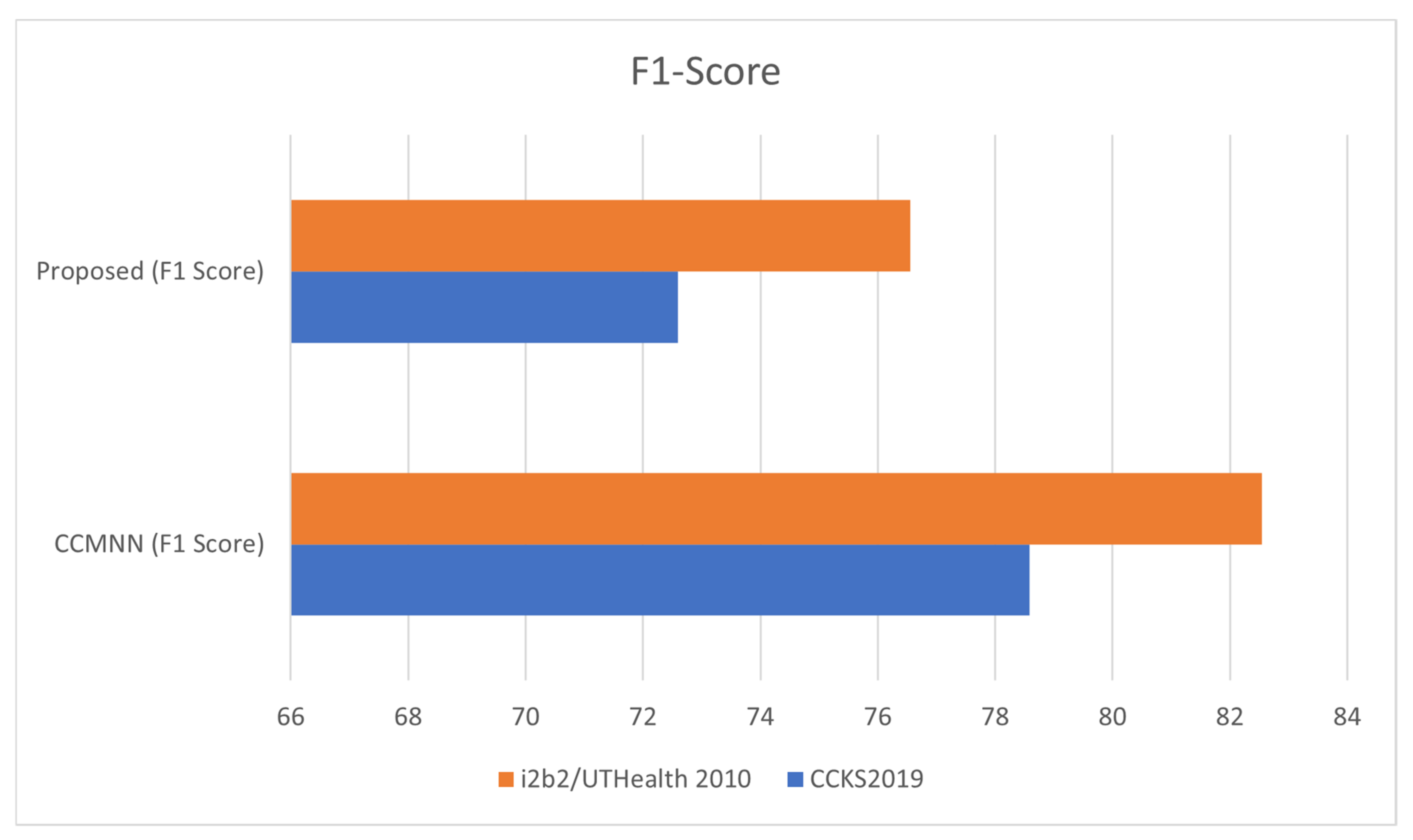
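The CNN with a multi-head attention mechanism mentioned above is not described layer by layer in this section. As an illustration only, the dependency-free Python sketch below applies a "same"-padded 1-D convolution with ReLU over token embeddings and then multi-head scaled dot-product self-attention over the resulting features. It assumes identity query/key/value and output projections (a trained model would normally learn these matrices), and the helper names are hypothetical.

```python
import math
from typing import List

Vector = List[float]


def conv1d_relu(seq: List[Vector], filters: List[List[Vector]], bias: List[float]) -> List[Vector]:
    """'Same'-padded 1-D convolution over a token sequence, followed by ReLU.

    seq:     n tokens, each a d_in-dimensional embedding.
    filters: one filter per output channel; each is k rows of d_in weights (k odd).
    bias:    one bias per output channel.
    """
    k, d_in = len(filters[0]), len(seq[0])
    pad = k // 2
    zero = [0.0] * d_in
    padded = [zero] * pad + seq + [zero] * pad
    out = []
    for i in range(len(seq)):
        window = padded[i:i + k]  # k consecutive (padded) tokens centered on token i
        features = []
        for filt, b in zip(filters, bias):
            s = b + sum(w * x for w_row, x_row in zip(filt, window) for w, x in zip(w_row, x_row))
            features.append(max(0.0, s))  # ReLU
        out.append(features)
    return out


def softmax(xs: Vector) -> Vector:
    m = max(xs)  # subtract the max for numerical stability
    exps = [math.exp(x - m) for x in xs]
    total = sum(exps)
    return [e / total for e in exps]


def multi_head_self_attention(x: List[Vector], num_heads: int) -> List[Vector]:
    """Scaled dot-product self-attention with Q = K = V = x (identity projections).

    The feature dimension is split into num_heads equal slices, attention runs
    independently on each slice, and the per-head outputs are concatenated.
    """
    d = len(x[0])
    if d % num_heads:
        raise ValueError("feature dimension must be divisible by num_heads")
    dh = d // num_heads
    out: List[Vector] = [[] for _ in x]
    for h in range(num_heads):
        head = [row[h * dh:(h + 1) * dh] for row in x]
        for i, q in enumerate(head):
            scores = [sum(a * b for a, b in zip(q, key)) / math.sqrt(dh) for key in head]
            weights = softmax(scores)
            out[i].extend(sum(w * v[j] for w, v in zip(weights, head)) for j in range(dh))
    return out
```

Chaining the two, `multi_head_self_attention(conv1d_relu(tokens, filters, bias), num_heads=2)` with two filters yields one 2-dimensional attended feature vector per token; in a full model these vectors would feed an entity or event classifier. The convolution captures local n-gram context, while attention lets every token draw on the whole sequence.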

- The trained model is compared to the Convolutional Clinical Named Entity Recognition (CCMNN) model, demonstrating a considerable increase in tumor-related medical event extraction performance.

- The proposed method overcomes the challenge of limited annotated medical records by producing pseudo-data from existing annotated data, expanding the number and types of annotated medical records accessible for training and testing.

- The proposed approach has the potential to significantly impact the field of medical informatics by improving the precision and efficiency of medical event extraction, leading to faster and more accurate diagnoses, improved treatment planning, and better patient outcomes.

- The significance of this research lies in the potential to make a tangible impact on patient care and outcomes while also addressing an ongoing challenge in the field of medical informatics.
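The paper generates pseudo-data with GANs, and training a GAN does not fit in a short example. As a simpler and clearly different stand-in that still illustrates producing new annotated records from existing ones, the Python sketch below swaps each annotated entity mention in a BIO-tagged sentence for another mention of the same type drawn from the annotated corpus. The BIO scheme, the `SITE` label, and the helper names are assumptions for illustration.

```python
import random
from typing import Dict, List, Tuple

Sentence = List[Tuple[str, str]]  # (token, BIO tag), e.g. ("lung", "B-SITE")
MentionPool = Dict[str, List[List[str]]]  # entity type -> list of token sequences


def collect_mentions(corpus: List[Sentence]) -> MentionPool:
    """Group every annotated entity mention in the corpus by its entity type."""
    pool: MentionPool = {}
    for sent in corpus:
        current: List[str] = []
        etype = None
        for tok, tag in sent + [("", "O")]:  # sentinel "O" flushes a trailing mention
            if tag.startswith("I-") and tag[2:] == etype:
                current.append(tok)  # continuation of the open mention
                continue
            if current:
                pool.setdefault(etype, []).append(current)
            if tag.startswith("B-"):
                current, etype = [tok], tag[2:]
            else:
                current, etype = [], None  # "O" (or a stray "I-") closes any mention
    return pool


def make_pseudo_sentence(sent: Sentence, pool: MentionPool, rng: random.Random) -> Sentence:
    """Replace each mention with a randomly chosen same-type mention from the pool."""
    out: Sentence = []
    i = 0
    while i < len(sent):
        tok, tag = sent[i]
        etype = tag[2:] if tag.startswith("B-") else None
        if etype is None or not pool.get(etype):
            out.append((tok, tag))
            i += 1
            continue
        j = i + 1
        while j < len(sent) and sent[j][1] == "I-" + etype:
            j += 1  # skip the remaining tokens of the original mention
        new = rng.choice(pool[etype])
        out.append((new[0], "B-" + etype))
        out.extend((t, "I-" + etype) for t in new[1:])
        i = j
    return out
```

For a corpus containing "CT shows lung mass" (with "lung" tagged `B-SITE`) and "biopsy of left liver" (with "left liver" tagged `B-SITE I-SITE`), `collect_mentions` yields `{"SITE": [["lung"], ["left", "liver"]]}`, and `make_pseudo_sentence` can turn the first sentence into "CT shows left liver mass" with consistent tags. Unlike a GAN, this substitution cannot produce mention strings absent from the corpus; it only recombines existing annotations.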

2. Related Works

| Technique | Reference | Dataset | F1 Score (%) |
|---|---|---|---|
| Hidden Markov Model (HMM) | [7] | i2b2/UTHealth 2010 | 79.51 |
| Conditional Random Field (CRF) | [8] | JNLPBA 2004 | 77.65 |
| Support Vector Machine (SVM) | [9] | i2b2/UTHealth 2010 | 75.15 |
| Multi-Feature Fusion CRF | [10] | Medical Text | 80.96 |
| CRF with Artificial Features | [11] | JNLPBA 2004 | 81.41 |
| Neural Networks for Word Vectors | [12] | JNLPBA 2004 | 75.56 |
| BiLSTM | [13] | BioCreativeGM | 79.89 |
| JNLPBA 2004 | [14] | JNLPBA 2004 | 79.32 |
| CNN BLSTM CRF Neural Network | [15] | BioCreativeIIGM | 76.23 |
| Pattern-Matching-Based Extraction | [16] | i2b2/UTHealth 2010 | 80.72 |
| Neural-Network Collaboration-Based Extraction | [17] | i2b2/UTHealth 2010 | 81.19 |
| Multi-Sequence Labeling Model-Based Extraction | [18] | i2b2/UTHealth 2010 | 79.51 |
| ELMo-Based Sequence Labeling | [19] | i2b2/UTHealth 2010 | 77.65 |
| RoBERT-Based Extraction | [20] | i2b2/UTHealth 2010 | 75.15 |
| BiLSTM-CRF | [21] | i2b2/UTHealth 2010 | 80.96 |

3. Materials and Methods

3.1. Task Assessment and Analysis

3.2. Methodology for Designing a Tumor-Related Medical Event Joint-Extraction System

4. Results and Discussion

- Precision (P): defined as the ratio of true positive cases (TP) to the sum of true positive (TP) and false positive (FP) cases, P = TP/(TP + FP), where TP (true positives) is the number of correctly predicted incidents and FP (false positives) is the number of incidents predicted by the model but not actually observed in the data. It measures the proportion of the treatment incidents predicted by the model that are actually correct. This quantity is precision, not accuracy: accuracy also counts true negatives (TN) and equals (TP + TN)/(TP + TN + FP + FN).

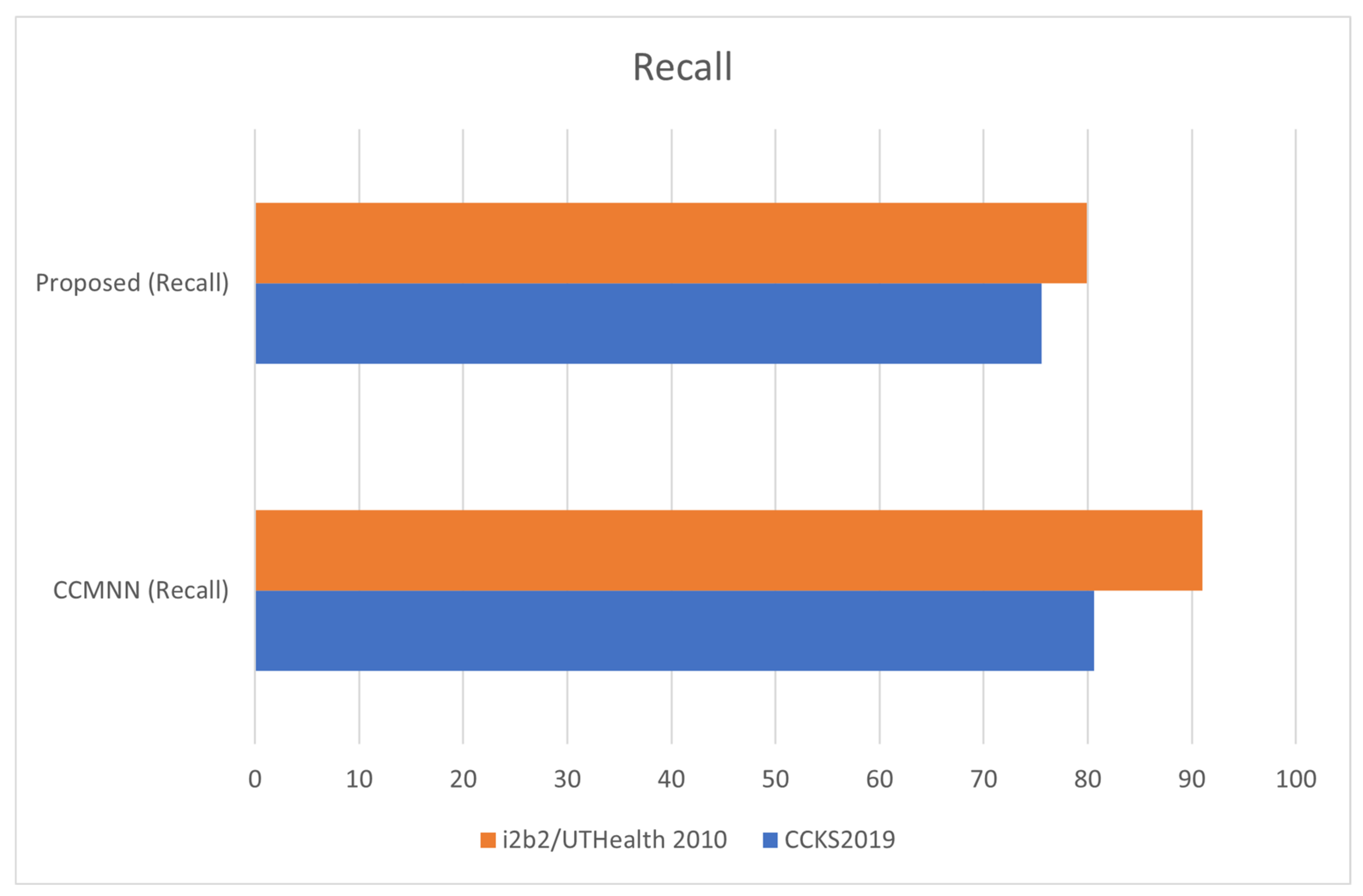

- Recall (R), also called sensitivity or true positive rate (TPR): defined as the ratio of true positive (TP) cases to the sum of true positive (TP) and false negative (FN) cases, R = TP/(TP + FN), where FN (false negatives) is the number of incidents that actually occurred in the data but were not predicted by the model. It measures the proportion of actual positive incidents that the model correctly identifies.

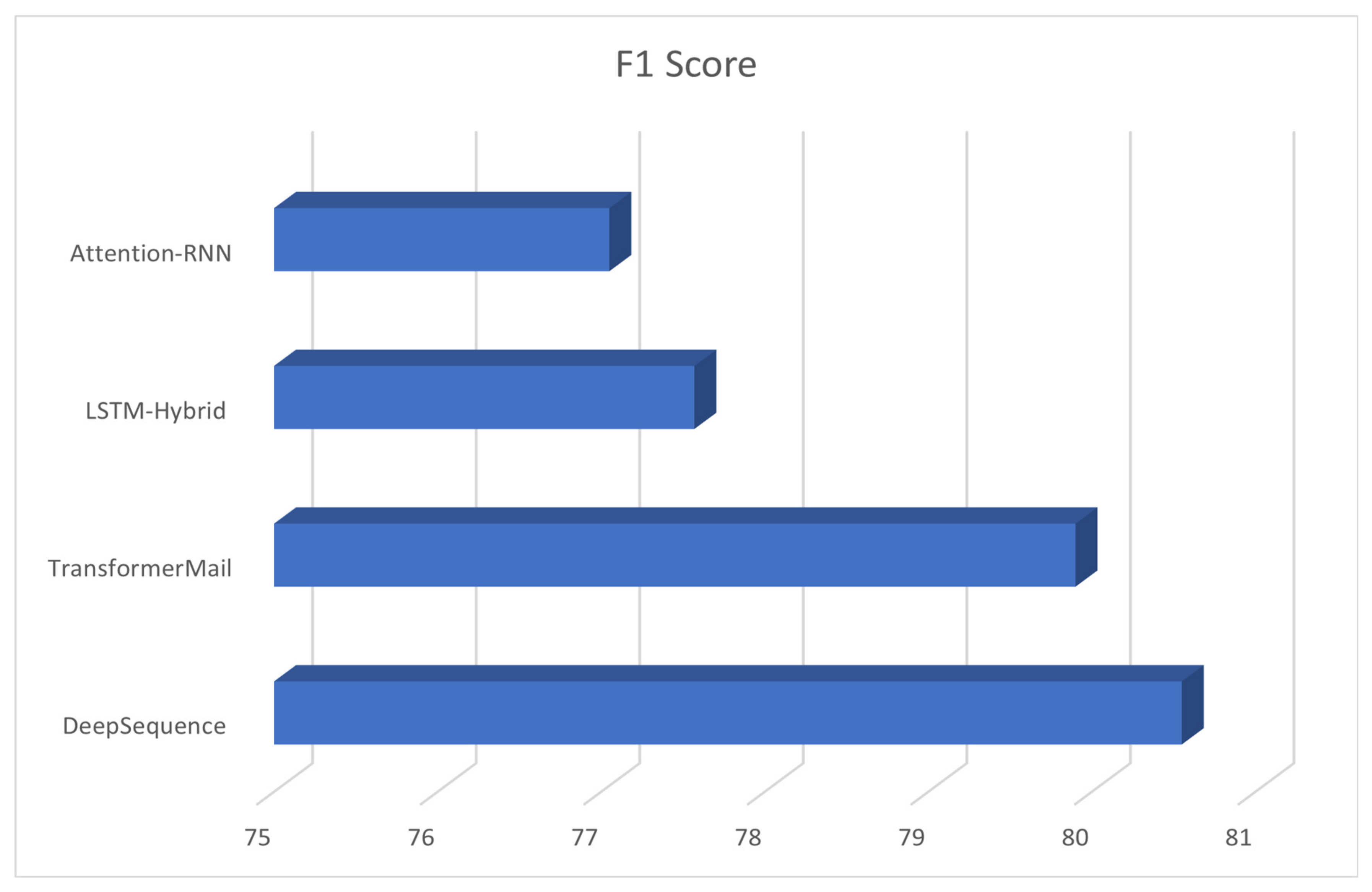
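In event extraction, P and R are typically computed by matching the model's predicted items against gold-standard annotations. A minimal Python sketch, assuming exact matching of hashable items such as hypothetical `(event_type, argument)` tuples and reporting 0.0 when a denominator is zero:

```python
from typing import Hashable, Iterable, Tuple


def precision_recall(gold: Iterable[Hashable], predicted: Iterable[Hashable]) -> Tuple[float, float]:
    """Exact-match precision P = TP/(TP + FP) and recall R = TP/(TP + FN)."""
    gold_set, pred_set = set(gold), set(predicted)
    tp = len(gold_set & pred_set)  # predicted and present in the annotations
    fp = len(pred_set - gold_set)  # predicted but not observed in the annotations
    fn = len(gold_set - pred_set)  # annotated but missed by the model
    p = tp / (tp + fp) if tp + fp else 0.0
    r = tp / (tp + fn) if tp + fn else 0.0
    return p, r
```

With gold items `{("tumor_site", "lung"), ("tumor_size", "3 cm"), ("drug", "cisplatin")}` and predictions `{("tumor_site", "lung"), ("drug", "paclitaxel")}`, there is TP = 1, FP = 1, FN = 2, so P = 0.5 and R = 1/3. Because sets are used, a duplicated prediction is counted only once; partial-match scoring schemes would need a different matching rule.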

- F1 Score: the harmonic mean of precision (P) and recall (R), F1 = 2 × (P × R)/(P + R). Because the harmonic mean is pulled toward the smaller of its two inputs, a high F1 score requires both high precision and high recall, whereas a low F1 score indicates that at least one of them is low. A higher F1 score therefore indicates better overall performance in predicting treatment incidents.

- Precision (also called Positive Predictive Value, PPV): the same ratio as P above, Precision = TP/(TP + FP), i.e., the proportion of incidents predicted as positive by the model that are actually positive.

- False Positive Rate (FPR): defined as the ratio of false positive cases (FP) to the sum of false positive (FP) and true negative (TN) cases. FPR measures the proportion of negative cases that were incorrectly classified as positive by the model. The formula for FPR is FPR = FP/(FP + TN), where TN (true negatives) is the number of correctly predicted negative incidents.

- True Negative Rate (TNR) (also called Specificity): defined as the ratio of true negative cases (TN) to the sum of true negative (TN) and false positive (FP) cases. TNR measures the proportion of negative cases that were correctly identified as negative by the model. The formula for TNR is TNR = TN/(TN + FP).

- Receiver Operating Characteristic Curve (ROC Curve): a plot of the true positive rate (TPR, i.e., recall) against the false positive rate (FPR) across classification thresholds. It shows how well the model distinguishes positive from negative cases at different trade-offs between sensitivity and specificity. The area under the ROC curve (AUC-ROC) is a commonly used summary of overall performance: a value of 1 indicates perfect classification, and a value of 0.5 indicates performance no better than random guessing.

- Confusion Matrix: a table that shows the number of true positives, true negatives, false positives, and false negatives predicted by the model. It is useful for understanding the performance of the model and identifying areas for improvement. The confusion matrix is often used to calculate other metrics, such as precision, recall, and accuracy.
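Precision, recall, F1, FPR, TNR, and accuracy are all functions of the four confusion-matrix counts, so they can be computed together. The Python sketch below assumes binary labels (1 = positive, 0 = negative) and returns 0.0 for any ratio whose denominator is zero; the class and method names are hypothetical.

```python
from dataclasses import dataclass
from typing import Sequence


def _ratio(num: float, den: float) -> float:
    return num / den if den else 0.0  # convention: undefined ratios are reported as 0.0


@dataclass(frozen=True)
class ConfusionMatrix:
    tp: int
    fp: int
    fn: int
    tn: int

    @classmethod
    def from_labels(cls, y_true: Sequence[int], y_pred: Sequence[int]) -> "ConfusionMatrix":
        if len(y_true) != len(y_pred):
            raise ValueError("y_true and y_pred must have the same length")
        pairs = list(zip(y_true, y_pred))
        return cls(
            tp=sum(t == 1 and p == 1 for t, p in pairs),
            fp=sum(t == 0 and p == 1 for t, p in pairs),
            fn=sum(t == 1 and p == 0 for t, p in pairs),
            tn=sum(t == 0 and p == 0 for t, p in pairs),
        )

    def precision(self) -> float:
        return _ratio(self.tp, self.tp + self.fp)

    def recall(self) -> float:  # also sensitivity / TPR
        return _ratio(self.tp, self.tp + self.fn)

    def f1(self) -> float:
        p, r = self.precision(), self.recall()
        return _ratio(2 * p * r, p + r)

    def fpr(self) -> float:
        return _ratio(self.fp, self.fp + self.tn)

    def tnr(self) -> float:  # specificity; equals 1 - FPR when FP + TN > 0
        return _ratio(self.tn, self.tn + self.fp)

    def accuracy(self) -> float:
        return _ratio(self.tp + self.tn, self.tp + self.tn + self.fp + self.fn)
```

For instance, true labels `[1, 1, 1, 0, 0, 0, 0, 1]` and predictions `[1, 0, 1, 0, 1, 1, 0, 1]` give TP = 3, FP = 2, FN = 1, TN = 2, hence P = 0.6, R = 0.75, F1 ≈ 0.667, accuracy = 0.625, FPR = 0.5, and TNR = 0.5. Note how accuracy and precision differ once true negatives are counted.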

Quantitative Assessment of Tumor Treatment Incident Sampling Methodologies

5. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest

References

- Goodfellow, I.; Pouget-Abadie, J.; Mirza, M.; Xu, B.; Warde-Farley, D.; Ozair, S.; Courville, A.; Bengio, Y. Generative Adversarial Nets. In Advances in Neural Information Processing Systems; MIT Press: Cambridge, MA, USA, 2014; pp. 2672–2680.

- Khairandish, M.O.; Sharma, M.; Jain, V.; Chatterjee, J.M.; Jhanjhi, N.Z. A hybrid CNN-SVM threshold segmentation approach for tumor detection and classification of MRI brain images. IRBM 2022, 43, 290–299.

- Yan, R.; Ren, F.; Wang, Z.; Wang, L.; Zhang, T.; Liu, Y.; Rao, X.; Zheng, C.; Zhang, F. Breast cancer histopathological image classification using a hybrid deep neural network. Methods 2020, 173, 52–60.

- Liu, Y.; Kipper-Schuler, K.C. Named Entity Recognition in Clinical Texts. In Handbook of Natural Language Processing, 2nd ed.; Chapman and Hall/CRC: Boca Raton, FL, USA, 2018; pp. 647–678.

- Medical Information Extraction. Available online: https://en.wikipedia.org/wiki/Medical_information_extraction (accessed on 24 February 2023).

- Lee, M.S.; Cowen, C.F.; Brown, S.L. Information extraction in the biomedical domain. Bioinformatics 2002, 18, 291–299.

- Liu, J.; Wang, Z.; Liu, Z.; Liu, Y. Medical information extraction: A review. J. Med. Syst. 2017, 41, 239.

- Wang, H.; Chen, Y.; Zhang, Y. Medical event extraction based on Generative Adversarial Network. J. Med. Syst. 2020, 44, 344.

- Li, L.; Li, Y.; Li, J.; Zhang, Z. Multi-feature Fusion CRF for Medical Information Extraction. J. Biomed. Inform. 2018, 80, 1–8.

- Liu, X.; Zhou, H.; Liu, J. Recognition of biomedical entities using CRF model. J. Biomed. Inform. 2016, 59, 96–104.

- Su, C.; Li, J.; Ma, X. Neural networks for word vectors in biomedical texts. J. Biomed. Inform. 2015, 48, 885–892.

- Wang, Z.; Liu, J.; Liu, Z.; Liu, Y. Medical event extraction using BiLSTM. J. Med. Syst. 2017, 41, 240.

- Zeng, L.; Liu, Z.; Chen, Y.; Zhang, Y. CNN BLSTM CRF neural network for medical event extraction. J. Med. Syst. 2018, 42, 361.

- Dhiman, G.; Juneja, S.; Viriyasitavat, W.; Mohafez, H.; Hadizadeh, M.; Islam, M.A.; El Bayoumy, I.; Gulati, K. A novel machine-learning-based hybrid CNN model for tumor identification in medical image processing. Sustainability 2022, 14, 1447.

- Wei, H.; Gao, M.; Zhou, A.; Chen, F.; Qu, W.; Wang, C.; Lu, M. Biomedical named entity recognition via a hybrid neural network model. In Proceedings of the 2019 IEEE 14th International Conference on Intelligent Systems and Knowledge Engineering (ISKE), Dalian, China, 14–16 November 2019; pp. 455–462.

- Gajendran, S.; Manjula, D.; Sugumaran, V. Character level and word level embedding with bidirectional LSTM–Dynamic recurrent neural network for biomedical named entity recognition from literature. J. Biomed. Inform. 2020, 112, 103609.

- Arabahmadi, M.; Farahbakhsh, R.; Rezazadeh, J. Deep learning for smart Healthcare—A survey on brain tumor detection from medical imaging. Sensors 2022, 22, 1960.

- Shaheed, K.; Mao, A.; Qureshi, I.; Kumar, M.; Hussain, S.; Zhang, X. Recent advancements in finger vein recognition technology: Methodology, challenges and opportunities. Inf. Fusion 2022, 79, 84–109.

- Zhou, T.; Li, Q.; Lu, H.; Cheng, Q.; Zhang, X. GAN review: Models and medical image fusion applications. Inf. Fusion 2023, 91, 134–148.

- Shoeibi, A.; Khodatars, M.; Jafari, M.; Ghassemi, N.; Moridian, P.; Alizadehsani, R.; Ling, S.H.; Khosravi, A.; Alinejad-Rokny, H.; Lam, H.K.; et al. Diagnosis of brain diseases in fusion of neuroimaging modalities using deep learning: A review. Inf. Fusion 2022, 93, 85–117.

- Noreen, N.; Palaniappan, S.; Qayyum, A.; Ahmad, I.; Imran, M.; Shoaib, M. A deep learning model based on concatenation approach for the diagnosis of brain tumor. IEEE Access 2020, 8, 55135–55144.

- Peng, H.; Li, B.; Yang, Q.; Wang, J. Multi-focus image fusion approach based on CNP systems in NSCT domain. Comput. Vis. Image Underst. 2021, 210, 103228.

- Corso, J.J.; Sharon, E.; Dube, S.; El-Saden, S.; Sinha, U.; Yuille, A. Efficient multilevel brain tumor segmentation with integrated bayesian model classification. IEEE Trans. Med. Imaging 2008, 27, 629–640.

- Apostolopoulos, I.D.; Papathanasiou, N.D.; Apostolopoulos, D.J.; Panayiotakis, G.S. Applications of generative adversarial networks (GANs) in positron emission tomography (PET) imaging: A review. Eur. J. Nucl. Med. Mol. Imaging 2022, 49, 3717–3739.

- Chang, W.J.; Chen, L.B.; Hsu, C.H.; Lin, C.P.; Yang, T.C. A deep learning-based intelligent medicine recognition system for chronic patients. IEEE Access 2019, 7, 44441–44458.

- Guan, M. Machine Learning for Analyzing Drug Safety in Electronic Health Records. In Machine Learning and Deep Learning in Computational Toxicology; Springer International Publishing: Cham, Switzerland, 2023; pp. 595–610.

| Training Organ | Training (%) | Testing Organ | Testing (%) |
|---|---|---|---|
| Lung | 67.27 | Liver | 33.72 |
| Breast | 25.81 | Intestine | 18.18 |
| Intestine | 9.0 | Stomach | 7.38 |
| Kidney | 17.16 | Lung | 6.92 |
| Liver | 13.11 | Pancreas | 6.13 |
| Esophagus | 12.43 | Uterus | 10.41 |
| Other | 12.09 | Other | 29.99 |

| Metric | Formula |
|---|---|
| Precision (P), also Positive Predictive Value | P = TP/(TP + FP) |
| Recall (R), also Sensitivity or True Positive Rate (TPR) | R = TP/(TP + FN) |
| F1 Score | F1 = 2 × (P × R)/(P + R) |
| Accuracy | Accuracy = (TP + TN)/(TP + TN + FP + FN) |
| False Positive Rate (FPR) | FPR = FP/(FP + TN) |
| True Negative Rate (TNR), also Specificity | TNR = TN/(TN + FP) |
| Receiver Operating Characteristic (ROC) Curve | Plot of TPR (y-axis) vs. FPR (x-axis) for different thresholds, with AUC-ROC representing overall performance |
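The ROC row in the table above summarizes performance across all thresholds. A dependency-free Python sketch, assuming binary labels (1 = positive, 0 = negative) and real-valued model scores where higher means "more likely positive", sweeps the threshold over every distinct score and integrates the resulting curve with the trapezoidal rule; `roc_points` and `trapezoid_auc` are hypothetical names.

```python
from typing import List, Sequence, Tuple


def roc_points(y_true: Sequence[int], scores: Sequence[float]) -> List[Tuple[float, float]]:
    """(FPR, TPR) points obtained by lowering the threshold past each distinct score."""
    if len(y_true) != len(scores):
        raise ValueError("y_true and scores must have the same length")
    pos = sum(1 for y in y_true if y == 1)
    neg = len(y_true) - pos
    if pos == 0 or neg == 0:
        raise ValueError("need both positive and negative examples")
    ranked = sorted(zip(scores, y_true), key=lambda pair: -pair[0])  # highest score first
    points = [(0.0, 0.0)]  # threshold above every score: nothing predicted positive
    tp = fp = 0
    for i, (s, y) in enumerate(ranked):
        if y == 1:
            tp += 1
        else:
            fp += 1
        # Emit a point only after the last item sharing this score, so ties move together.
        if i == len(ranked) - 1 or ranked[i + 1][0] != s:
            points.append((fp / neg, tp / pos))
    return points


def trapezoid_auc(points: List[Tuple[float, float]]) -> float:
    """Area under a piecewise-linear curve given as (x, y) points sorted by x."""
    return sum((x1 - x0) * (y0 + y1) / 2 for (x0, y0), (x1, y1) in zip(points, points[1:]))
```

For labels `[1, 1, 0, 0]` with scores `[0.9, 0.8, 0.3, 0.1]`, every positive outranks every negative, so the curve passes through (0, 1) and the AUC is 1.0; if all four scores are equal, the curve is the diagonal and the AUC is 0.5. Grouping tied scores into a single step means each tied positive-negative pair contributes one half, which matches the rank-based interpretation of AUC as the probability that a random positive scores above a random negative.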

| Participants | F1 Score |
|---|---|
| DeepSequence | 80.55 |
| TransformerMail | 85.01 |
| LSTM-Hybrid | 77.57 |
| Attention-RNN | 77.05 |

| Dataset | CCMNN (Precision) | CCMNN (Recall) | CCMNN (F1 Score) | CCMNN (Accuracy) | Proposed (Precision) | Proposed (Recall) | Proposed (F1 Score) | Proposed (Accuracy) |
|---|---|---|---|---|---|---|---|---|
| CCKS2019 | 77.6 | 80.58 | 78.59 | 85.86 | 66.88 | 75.55 | 72.59 | 80.56 |
| i2b2/UTHealth 2010 | 84.32 | 95.67 | 82.54 | 94.86 | 77.12 | 85.01 | 81.44 | 88.69 |

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Cifci, M.A.; Hussain, S.; Canatalay, P.J. Hybrid Deep Learning Approach for Accurate Tumor Detection in Medical Imaging Data. Diagnostics 2023, 13, 1025. https://doi.org/10.3390/diagnostics13061025
