Leveraging Deep Learning Decision-Support System in Specialized Oncology Center: A Multi-Reader Retrospective Study on Detection of Pulmonary Lesions in Chest X-ray Images

Abstract

1. Introduction

2. Related Works

3. Materials and Methods

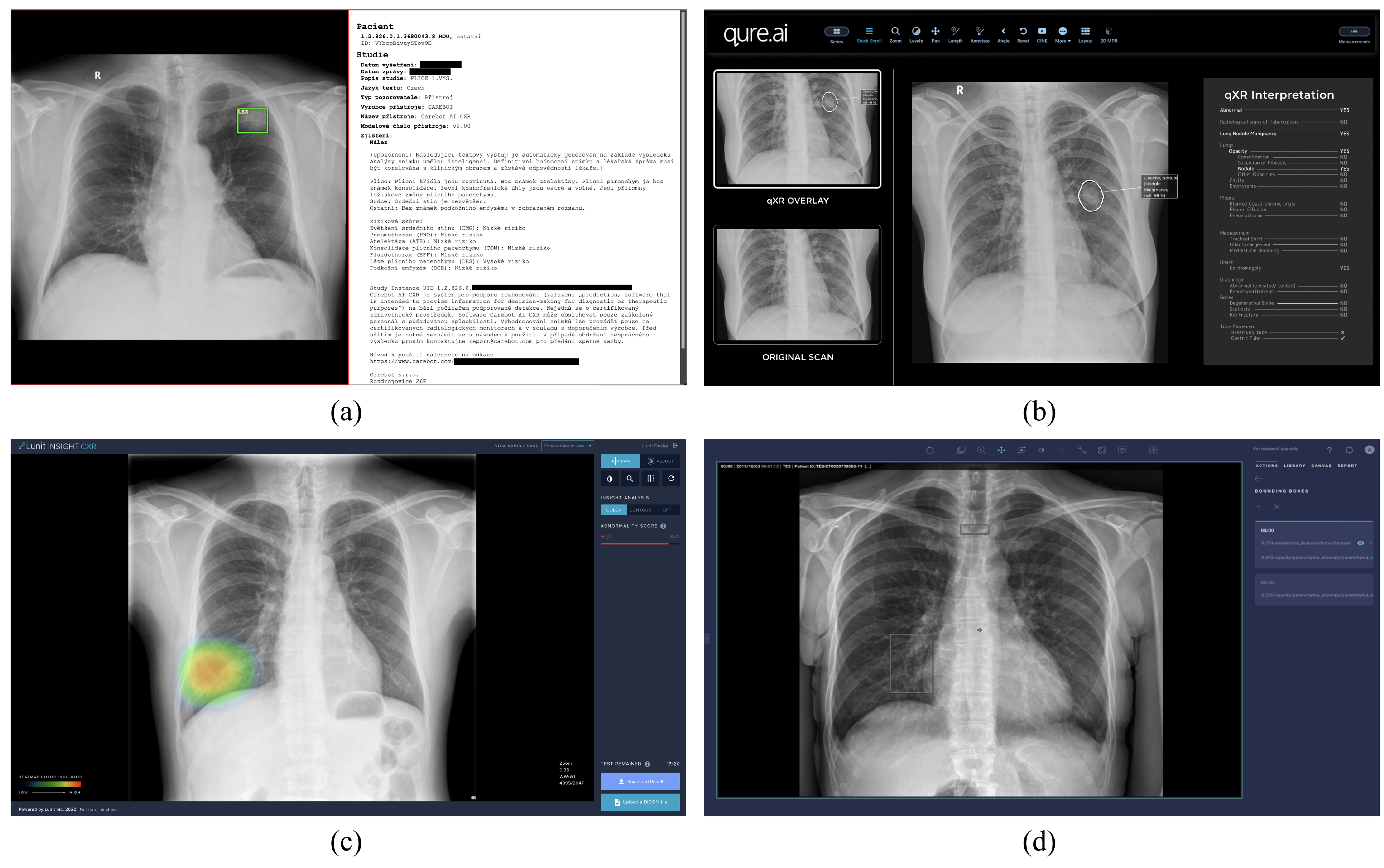

3.1. Software

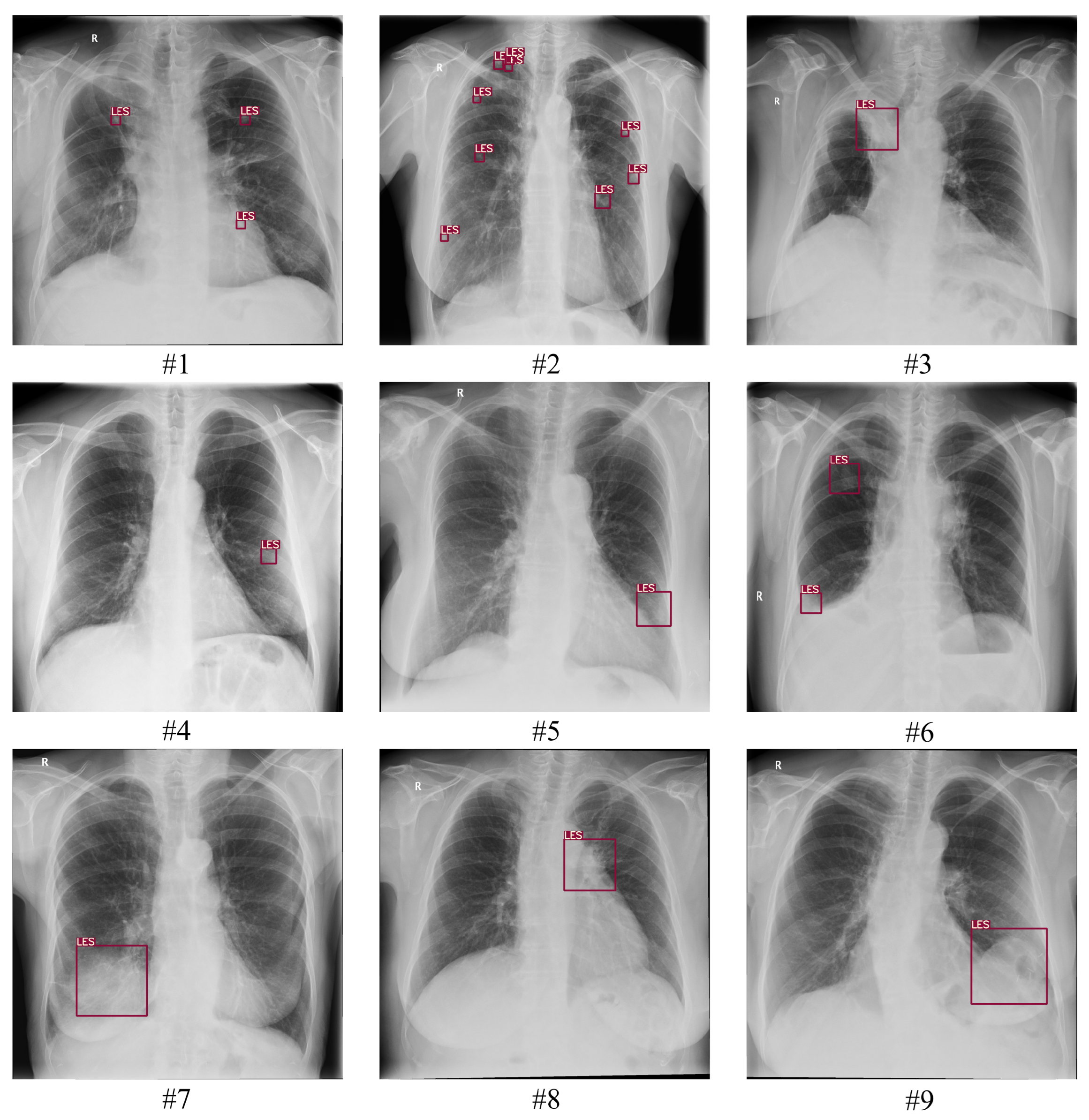

3.1.1. Training Data

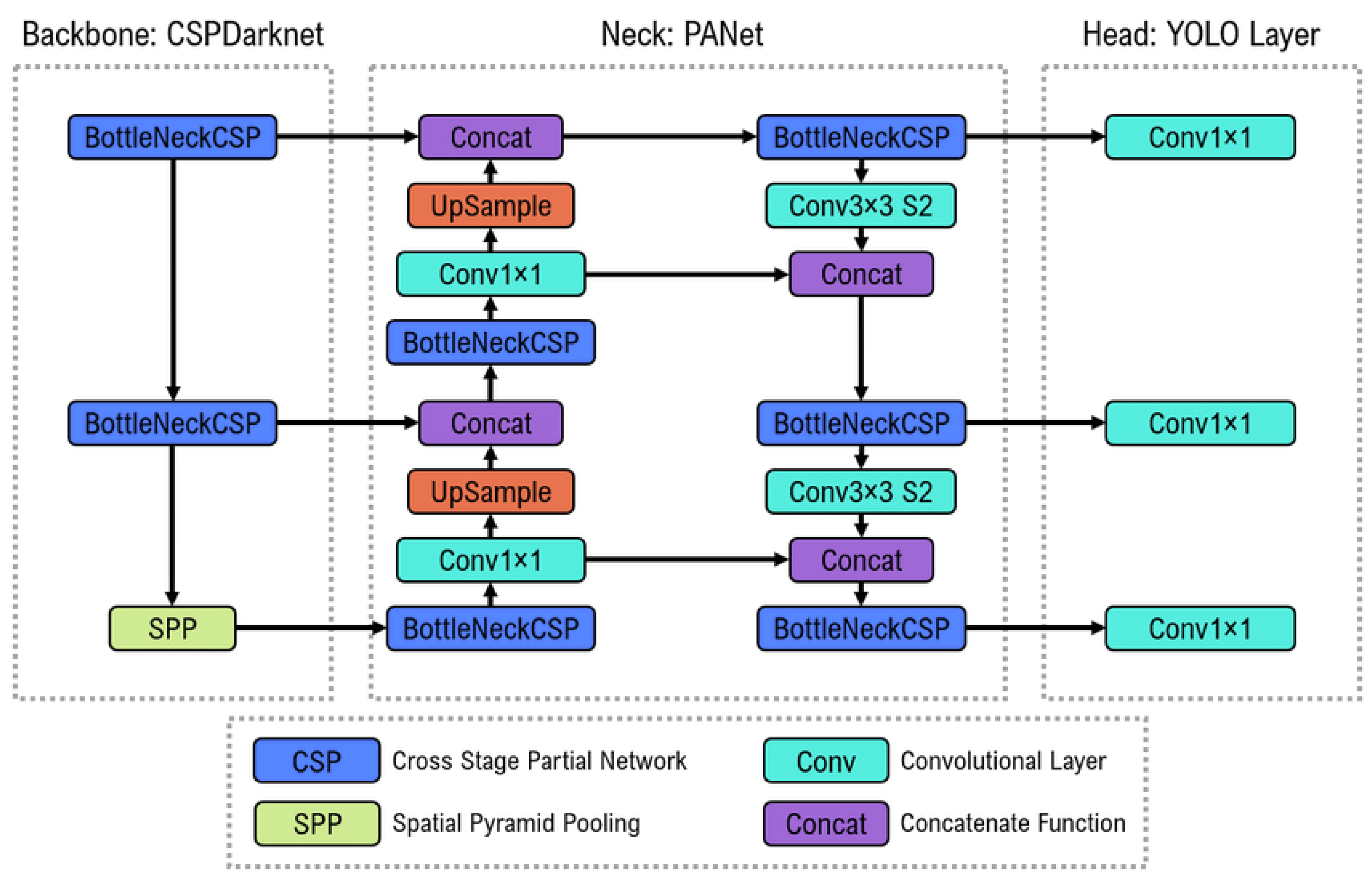

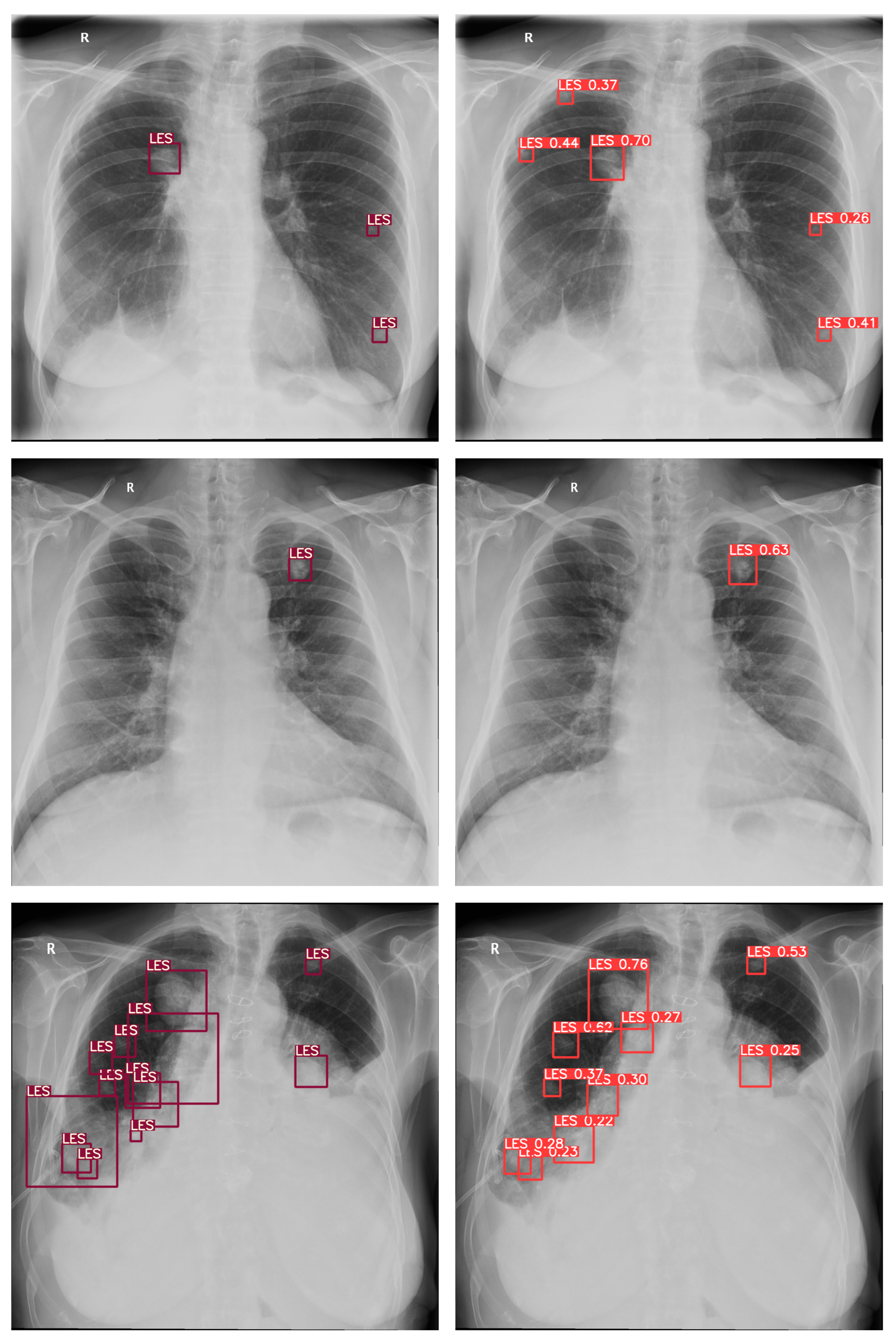

3.1.2. Model Architecture

3.1.3. Communication Protocol

3.2. Data Collection

3.3. Ground Truth

3.4. Assessment

3.5. Statistical Analysis

4. Results

5. Discussion

Limitations

6. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest

Abbreviations

| Abbreviation | Definition |
|---|---|
| AI | Artificial Intelligence |
| CI | Confidence Interval |
| CNN | Convolutional Neural Network |
| CXR | Chest X-ray |
| DL | Deep Learning |
| DLAD | Deep Learning–based Automatic Detection Algorithm |
| Se | Sensitivity |
| Sp | Specificity |
| BA | Balanced Accuracy |
| TP | True Positive |
| FP | False Positive |
| TN | True Negative |
| FN | False Negative |
| LR | Likelihood Ratio |
| PLR | Positive Likelihood Ratio |
| NLR | Negative Likelihood Ratio |
| PV | Predictive Value |
| PPV | Positive Predictive Value |
| NPV | Negative Predictive Value |

Appendix A


| Class | Inclusion Criteria |
|---|---|
| LES+ Abnormal | A 2/3 consensus is required to confirm the presence of one or more pulmonary lesions. The CXR may also contain other pathological abnormalities, each confirmed by a 2/3 consensus. |
| LES− Abnormal | A 3/3 consensus is required to confirm the absence of any pulmonary lesion. The CXR also contains other pathological abnormalities, each confirmed by a 2/3 consensus. |
| Normal | A 3/3 consensus is required to confirm that the CXR shows no pathological abnormalities. |
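
The consensus rules in the table above can be sketched as a small labeling function. This is an illustrative sketch, not the authors' annotation tooling. It assumes that "2/3" means at least two of three readers agree, that each reader gives one yes/no answer for "any other abnormality" (the table may intend per-finding consensus), and that cases meeting no criterion are left unlabeled. The function and constant names are hypothetical.

```python
from typing import Optional, Sequence

# Class names as used in the inclusion-criteria table.
LES_POS = "LES+ Abnormal"
LES_NEG = "LES\u2212 Abnormal"
NORMAL = "Normal"


def assign_class(
    lesion_votes: Sequence[bool], other_votes: Sequence[bool]
) -> Optional[str]:
    """Map three readers' votes to a ground-truth class.

    lesion_votes[i]: reader i reports at least one pulmonary lesion.
    other_votes[i]:  reader i reports some other pathological abnormality
                     (assumption: one yes/no answer per reader).
    Returns None when no class criterion is met (assumption: such cases
    are simply not labeled here).
    """
    if len(lesion_votes) != 3 or len(other_votes) != 3:
        raise ValueError("expected exactly three votes per question")

    lesion_yes = sum(lesion_votes)
    other_yes = sum(other_votes)

    # Presence of a lesion needs agreement of at least 2 of 3 readers.
    if lesion_yes >= 2:
        return LES_POS
    # Absence of lesions needs all 3 readers; other findings need 2 of 3.
    if lesion_yes == 0 and other_yes >= 2:
        return LES_NEG
    # A normal image needs all 3 readers to report no abnormality at all.
    if lesion_yes == 0 and other_yes == 0:
        return NORMAL
    return None
```

For example, `assign_class([True, True, False], [False, False, False])` yields the LES+ class, while a single dissenting lesion vote such as `[True, False, False]` meets none of the criteria under these assumptions.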

| Data | LES+ Abnormal | LES− Abnormal | Normal |
|---|---|---|---|
| Total | 100 | 100 | 100 |
| Patient’s Sex | | | |
| Female (♀) | 55 | 68 | 79 |
| Male (♂) | 45 | 32 | 21 |
| F:M Ratio | 1.22:1 | 2.13:1 | 3.76:1 |
| Prevalence | | | |
| LES only | 40 | 0 | 0 |
| With other findings | 60 | 100 | 0 |
| Findings | | | |
| Atelectasis | 5 | 6 | 0 |
| Consolidation | 21 | 21 | 0 |
| Cardiomegaly | 5 | 38 | 0 |
| Fracture | 1 | 10 | 0 |
| Mediastinal widening | 1 | 0 | 0 |
| Pneumoperitoneum | 0 | 1 | 0 |
| Pneumothorax | 0 | 0 | 0 |
| Pulmonary edema | 0 | 9 | 0 |
| Pleural effusion | 21 | 34 | 0 |
| Pulmonary lesion | 100 | 0 | 0 |
| Hilar enlargement | 2 | 2 | 0 |
| Subcutaneous emphysema | 0 | 1 | 0 |

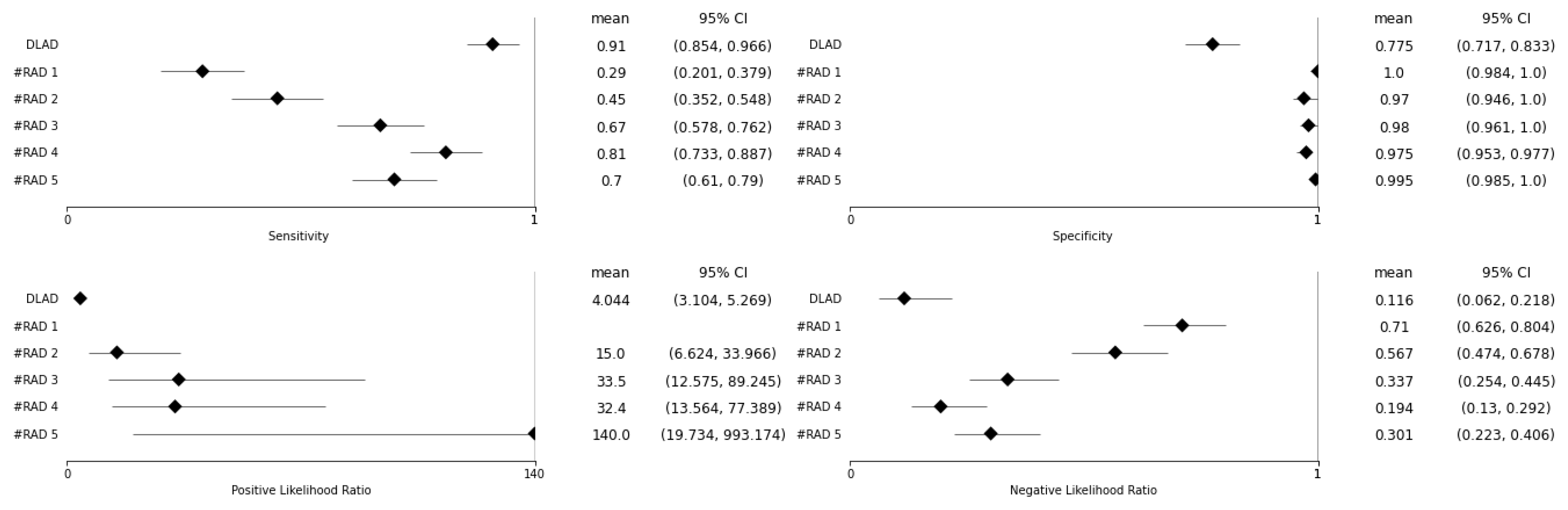

| | BA | Se (95% CI) | Sp (95% CI) | Se p-Value | Sp p-Value |
|---|---|---|---|---|---|
| DLAD | 0.843 | 0.910 (0.854–0.966) | 0.775 (0.717–0.833) | | |
| RAD 1 | 0.645 | 0.290 (0.201–0.379) | 1.000 (0.984–1.000) | <0.001 | <0.001 |
| RAD 2 | 0.710 | 0.450 (0.352–0.548) | 0.970 (0.946–0.994) | <0.001 | <0.001 |
| RAD 3 | 0.825 | 0.670 (0.578–0.762) | 0.980 (0.961–1.000) | <0.001 | <0.001 |
| RAD 4 | 0.893 | 0.810 (0.733–0.887) | 0.975 (0.953–0.997) | 0.025 | <0.001 |
| RAD 5 | 0.848 | 0.700 (0.610–0.790) | 0.995 (0.985–1.000) | <0.001 | <0.001 |
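
As a worked check of how the columns above relate, the sketch below recomputes Se, Sp, and BA for the DLAD row from a 2×2 confusion matrix. The counts are not reported in this section; they are inferred from the table and the cohort sizes (Se 0.910 of 100 LES+ images gives TP = 91, and Sp 0.775 of 200 lesion-free images gives TN = 155). The normal-approximation (Wald) interval is an assumption about the method; it happens to reproduce the DLAD intervals to three decimals, but it collapses to zero width at a proportion of 1.000, so it cannot explain the RAD 1 specificity interval.

```python
import math

Z_95 = 1.959964  # two-sided 95% quantile of the standard normal


def proportion_with_wald_ci(successes: int, total: int, z: float = Z_95):
    """Return (estimate, lower, upper) using the Wald approximation."""
    p = successes / total
    half_width = z * math.sqrt(p * (1.0 - p) / total)
    # Clip to [0, 1] because the Wald interval can overshoot the bounds.
    return p, max(0.0, p - half_width), min(1.0, p + half_width)


def summarize(tp: int, fn: int, tn: int, fp: int) -> dict:
    """Sensitivity, specificity (each with a CI) and balanced accuracy."""
    se = proportion_with_wald_ci(tp, tp + fn)   # TP / (TP + FN)
    sp = proportion_with_wald_ci(tn, tn + fp)   # TN / (TN + FP)
    return {"Se": se, "Sp": sp, "BA": (se[0] + sp[0]) / 2.0}


# Counts inferred from the DLAD row (assumption, see lead-in).
dlad = summarize(tp=91, fn=9, tn=155, fp=45)

if __name__ == "__main__":
    for name in ("Se", "Sp"):
        est, lo, hi = dlad[name]
        print(f"{name}: {est:.3f} ({lo:.3f}-{hi:.3f})")
    print(f"BA: {dlad['BA']:.4f}")
```

Balanced accuracy is simply the mean of sensitivity and specificity, which is why a reader with near-perfect specificity but low sensitivity (for example RAD 1) still scores well below the DLAD on BA.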

| | PLR | NLR | PLR p-Value | NLR p-Value |
|---|---|---|---|---|
| DLAD | 4.044 (3.104–5.269) | 0.116 (0.062–0.218) | | |
| RAD 1 | N/A | 0.710 (0.626–0.804) | N/A | <0.001 |
| RAD 2 | 15.000 (6.624–33.966) | 0.567 (0.474–0.678) | 0.002 | <0.001 |
| RAD 3 | 33.500 (12.575–89.245) | 0.337 (0.254–0.445) | <0.001 | <0.001 |
| RAD 4 | 32.400 (13.564–77.389) | 0.194 (0.130–0.292) | <0.001 | 0.132 |
| RAD 5 | 140.000 (19.734–993.174) | 0.301 (0.223–0.406) | <0.001 | 0.003 |
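
The likelihood ratios above follow from the standard definitions PLR = Se / (1 − Sp) and NLR = (1 − Se) / Sp. The sketch below recomputes them for the DLAD row, again using counts inferred from the tables (TP = 91, FN = 9, TN = 155, FP = 45) rather than counts reported in this section. The interval uses the common log-transform method for a ratio of two proportions; that choice is an assumption, although it reproduces the DLAD intervals to three decimals. With zero false positives the PLR is undefined, which is consistent with the N/A entry for RAD 1.

```python
import math
from typing import Optional, Tuple

Z_95 = 1.959964  # two-sided 95% quantile of the standard normal
Ratio = Optional[Tuple[float, float, float]]  # (estimate, lower, upper)


def _ratio_with_log_ci(a: int, n1: int, b: int, n2: int, z: float) -> Ratio:
    """Ratio (a/n1) / (b/n2) with a CI built on the log scale.

    Returns None when either event count is zero, since the ratio or its
    standard error is then undefined.
    """
    if a == 0 or b == 0:
        return None
    ratio = (a / n1) / (b / n2)
    se_log = math.sqrt(1.0 / a - 1.0 / n1 + 1.0 / b - 1.0 / n2)
    factor = math.exp(z * se_log)
    return ratio, ratio / factor, ratio * factor


def likelihood_ratios(tp: int, fn: int, tn: int, fp: int, z: float = Z_95) -> dict:
    """Positive and negative likelihood ratios from a 2x2 table."""
    positives = tp + fn   # images with a lesion
    negatives = tn + fp   # images without a lesion
    return {
        # PLR = Se / (1 - Sp) = (TP / positives) / (FP / negatives)
        "PLR": _ratio_with_log_ci(tp, positives, fp, negatives, z),
        # NLR = (1 - Se) / Sp = (FN / positives) / (TN / negatives)
        "NLR": _ratio_with_log_ci(fn, positives, tn, negatives, z),
    }


# Counts inferred from the tables (assumption, see lead-in).
dlad_lr = likelihood_ratios(tp=91, fn=9, tn=155, fp=45)
rad1_lr = likelihood_ratios(tp=29, fn=71, tn=200, fp=0)

if __name__ == "__main__":
    for label, result in (("DLAD", dlad_lr), ("RAD 1", rad1_lr)):
        for key in ("PLR", "NLR"):
            value = result[key]
            text = "N/A" if value is None else "{:.3f} ({:.3f}-{:.3f})".format(*value)
            print(f"{label} {key}: {text}")
```

Read this way, the DLAD's low NLR reflects its high sensitivity (a negative output makes a lesion much less likely), while the radiologists' high PLRs reflect their near-perfect specificity.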

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Share and Cite

Kvak, D.; Chromcová, A.; Hrubý, R.; Janů, E.; Biroš, M.; Pajdaković, M.; Kvaková, K.; Al-antari, M.A.; Polášková, P.; Strukov, S. Leveraging Deep Learning Decision-Support System in Specialized Oncology Center: A Multi-Reader Retrospective Study on Detection of Pulmonary Lesions in Chest X-ray Images. Diagnostics 2023, 13, 1043. https://doi.org/10.3390/diagnostics13061043
