Abstract

A pneumothorax occurs when air enters the pleural space, the area between the lung and the chest wall. The air causes the lung to collapse and makes breathing difficult. It can occur spontaneously or as a result of injury, and its symptoms may include chest pain, shortness of breath, and rapid breathing. Chest X-rays are commonly used to detect a pneumothorax, but visually locating the affected area in X-ray images is time-consuming and error-prone. Existing computer-aided methods for detecting this condition from X-rays are limited by three major issues: class imbalance, which causes overfitting; difficulty in detecting dark regions of the images; and the vanishing gradient problem. To address these issues, we propose an ensemble deep learning model called PneumoNet. It uses synthetic images generated by data augmentation to address class imbalance, and a segmentation stage to identify dark areas. The vanishing gradient problem, in which gradients become very small during backpropagation, is mitigated through hyperparameter optimization, which prevents the model from converging slowly and performing poorly. Our model achieved an accuracy of 98.41% on the Society for Imaging Informatics in Medicine (SIIM) pneumothorax dataset. It outperformed other deep learning models while reducing the computational complexity of detecting the disease.

1. Introduction

A pneumothorax is a medical condition in which air or gas is present in the pleural space, the potential space between the lung and the chest wall. The presence of air or gas in this space can cause the lung to collapse, resulting in reduced lung function and difficulty breathing. By cause, a pneumothorax can be classified as spontaneous, traumatic, iatrogenic, or secondary. A spontaneous pneumothorax occurs without any obvious cause, typically in people with a history of lung disease or in those who smoke. It can happen when a small air pocket in the lung ruptures, causing air to leak into the pleural space. A traumatic pneumothorax is caused by an injury to the chest. An iatrogenic pneumothorax results from a medical procedure, such as a lung biopsy or the insertion of a chest tube. A secondary pneumothorax is caused by an underlying lung disease, such as emphysema, chronic obstructive pulmonary disease (COPD), or cystic fibrosis, which makes the lung more susceptible to collapse. A pneumothorax is typically diagnosed by physical examination and confirmed by radiologic imaging, such as chest X-rays or computed tomography (CT) scans.

Breathlessness and sharp chest pain are typical symptoms of a pneumothorax [1]. Prompt diagnosis is crucial because the condition can be dangerous in some circumstances. A serious pneumothorax can result in dehydration, trauma, and even death, so early detection is necessary for rapid treatment. According to [2,3,4,5,6], it is a common condition, with 35% prevalence and incidence in men.

Song et al. [7] reported that several imaging methods can detect pneumothoraces, including magnetic resonance imaging, chest tomography scans, and ultrasound imaging. Of these, chest X-ray imaging is the most cost-effective radiological option. Chest X-rays are considered the standard diagnostic tool for pneumothoraces because they are affordable and give a clear visual representation. In addition, chest X-rays are commonly used to diagnose and monitor various medical conditions, including tuberculosis, asthma, and disorders related to lung damage [8,9].

Pneumothorax detection is difficult because X-ray images have low pixel resolution. If only a small amount of air is present in the laceration area (a minor tear in the lung tissue due to injury), the disease is hard to identify. The accuracy of the assessment depends entirely on the doctors and medical practitioners involved, and skilled radiologists to review chest X-rays are hard to find. Furthermore, a qualified radiologist must review each chest X-ray and write a report. Because most radiologists work long hours, fatigue-related misdiagnoses have increased. Hence, a computer-aided detection system is needed to detect the disease promptly and accurately and thereby save lives.

Since machine learning (ML) techniques became established, extensive medical research has focused on detecting hard-to-diagnose diseases quickly and accurately. The combined use of image processing, medical imaging, and computer technologies to identify health disorders has led to significant breakthroughs. For example, melanoma [10], tachycardia [11], and diabetic nephropathy [12] were among the first conditions investigated using ML algorithms. Disease detection from chest radiographs is also attracting considerable research attention. Huang et al. [13] proposed deep learning (DL)-based approaches for identifying lung nodules in X-ray images. Zhu et al. [14] used a 121-layer dense network that extracts features from chest X-ray images. It achieved expert-level performance in detecting pneumonia and predicted numerous other pulmonary illnesses.

Pneumothorax identification requires finding tiny amounts of air trapped between the chest wall and the lungs, so only a few studies have used chest X-rays for this task. We propose using chest X-ray images with deep learning algorithms to detect pneumothoraces. Our model addresses the following drawbacks of existing approaches. (1) The features of chest X-ray images must be extracted and classified to detect pneumothoraces accurately. Accuracy therefore depends fully on the quality of the noise-free features extracted from the X-rays: the higher the quality of the input images, the higher the accuracy. Most existing approaches are inefficient at extracting noise-free features from X-ray images. (2) A lack of data augmentation results in a higher error rate. (3) Analyzing and extracting features of X-ray images in only a single dimension also reduces model accuracy.

This investigation aimed at the early diagnosis of pneumothoraces from X-rays. We use a deep learning model that achieves high accuracy by addressing the drawbacks of existing approaches described above. The main contributions are as follows:

- We proposed a DL model, PneumoNet, to classify X-ray images.

- We used a large, publicly available dataset intended specifically for pneumothorax analysis: the Society for Imaging Informatics in Medicine (SIIM) pneumothorax dataset from Kaggle [15].

- We proposed a channel optimization technique (COT) to improve the quality of the input image.

- We also used the affine image enhancement tool for image augmentation and noise removal.

- Our proposed PneumoNet model analyzes both the obverse (original) and the flipped side of the input image, further improving the recognition rate.

- We evaluated our approach using standard evaluation metrics, and the results show that it outperforms existing DL approaches in detecting pneumothoraces.

2. Related Work

2.1. The Machine Learning Literature

Stein et al. [16] compared a deep convolutional neural network (CNN) with a speed link for automatically detecting and classifying tuberculosis (TB), a task similar to pneumothorax detection, on the same two datasets. However, the localization result produced using a feature map was not as impressive as in the originally reported work, where the greatest area under the curve (AUC) was 0.93. All CNN models produced satisfactory results in the first two trials for diagnosing pulmonary TB. The subsequent tests, however, tackled the tricky but necessary task of detecting TB among an array of lung diseases, and they highlighted how difficult this task is.

In previous studies [17,18], pretrained CNN models were combined through qualified-majority voting to detect pneumothoraces. Ruizhi et al. [19] tested conventional CNN architectures on open chest X-ray databases to develop a method for detecting pneumothoraces in chest X-ray images.

Lindsey et al. [20] used ultrasound images to detect diseases. The authors of [21] applied DL models and CNNs in the health sector to identify applications in which the models can be trained to recognize the given input. The authors of [22] trained a support vector machine (SVM) and divided the input image into three dimensions to help identify the affected region.

2.2. The Deep Learning Literature

The authors of [23] detected pneumothoraces using a CNN technique based on pixel classification, trained on around 120 chest X-rays. On a test set of 96 chest X-rays, it achieved around 95% accuracy. In another approach, a surface interpretation method was integrated with the K-nearest neighbor algorithm to detect pneumothoraces. Tested on a dataset of 108 X-ray images, this framework achieved 81% specificity and 87% sensitivity. Sundaram et al. [24] localized abnormalities in X-ray images using the U-Net framework with a ResNet encoder. They used the SIIM–American College of Radiology (ACR) pneumothorax dataset with the AlexNet model to detect the disease. In the first step, recursive feature selection retained the features with a significant influence on diagnosis and eliminated those with less impact. In the second step, the best diagnostic features were fed to the AlexNet classification algorithm.

Stefanus and Yaakob et al. [25] combined DL architectures such as AlexNet, GoogleNet, and ResNet to classify pneumothoraces. They tested different models and combined them by simple linear averaging, achieving around 92% accuracy. Dietterich et al. [26] used AlexNet and GoogleNet and found that pretrained models are more precise. They combined these models using a composite index of the probability scores for each image.

The researchers in [27] proposed a computer-aided diagnostic approach based on chest X-rays to detect the early stage of COVID-19, and they compared it with other options (e.g., PCR and CT scans). They presented an attention-based DL model built on VGG-16, in which an attention module captures the spatial relationships among regions of interest (ROIs). The authors of [28] combined the fourth pooling layer of VGG-16 with an attention module to develop a novel DL classification technique. Their evaluation showed that the method outperforms state-of-the-art methods and detects COVID-19 from X-ray images with promising accuracy.

2.3. Heuristic and Hybrid Methods

Jaszcz et al. [29] modeled medical image segmentation using the heuristic red fox optimization algorithm. Because it is heuristic, the algorithm processes skin and retinal images automatically. It models local and global movement using a random selection of Euclidean distance metrics. The technique converts each pixel in an image to black or white and monitors the color change and intensity. To evaluate the method, the authors analyzed the thresholding values and their final values on X-ray images to generate segmentation masks. Each parameter variant had an accuracy below 80%. Segmentation effectiveness increased slightly as the population size grew from 20 to 50 individuals.

Kadry et al. [30] trained the InceptionV3 algorithm to identify pneumonia in chest X-ray images. Their technique has five phases: phase 1 is image collection, phase 2 is image enhancement via InceptionV3, phase 3 is feature compression using the Firefly algorithm, and phases 4 and 5 are multi-class classification and validation, respectively.

The method uses a four-class classifier and five-fold cross-validation to categorize the X-rays into regular, moderate, medium, and extreme classes. With the K-nearest neighbor (KNN) classifier, it achieved a classification accuracy of 85.18%.

Early pneumothorax detection technologies relied on conventional feature extraction approaches. Edge detection has been used to determine the internal thoracic boundary in X-ray images [31]. Surface knowledge has been used to estimate portal hypertension cues [32], and the Cox transform [33] has been used to predict the occurrence of a pneumothorax from local intensity histograms. The diagnostic accuracy of such algorithms remains relatively low because of the reddened appearance of the images, which makes it impossible to distinguish between different human lungs and pneumothoraces.

Hamde [34] developed and demonstrated methods for assessing pulmonary disease severity from chest X-rays of congestive heart failure with edema. The work used a clinical imaging dataset of around 30,000 patient images. Riasatian's team [35] used anatomical techniques based on the Gabor selection algorithm to isolate the lung region in X-ray images. They then computed the roughness and shape characteristics of the segmented regions.

Park et al. [36] proposed end-to-end pneumothorax classification that analyzes the entire chest X-ray in a quick, patch-based pass, applying a neural network recursively. The technique classifies individual X-ray patches to predict whether each patch contains a pneumothorax. The authors concluded that the method produces acceptable accuracy but cannot localize specific spots in the image. Because chest X-rays are widely used and inexpensive, they are a practical basis for DL techniques. Table 1 gives an overview of some existing works.

Table 1.

Overview of related work.

3. Methods and Materials

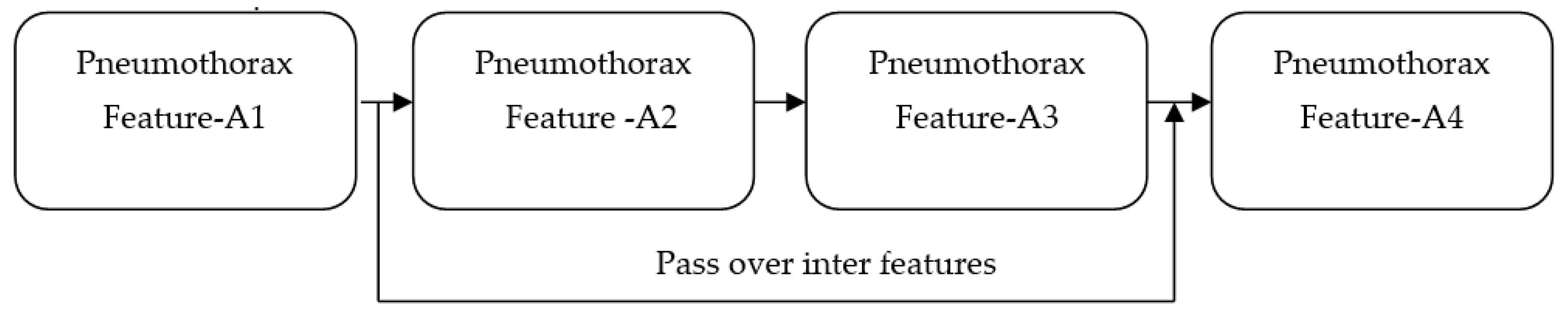

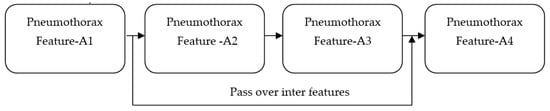

We propose a DL method to identify the presence of a pneumothorax in X-ray images. Our proposed work used the features of ImageNet and ResNet pretrained models. These models achieve accuracies of around 93% and 95%, respectively, but their feature maps suffer from the vanishing gradient problem [38]. During backpropagation, the error signal travels from the output back toward the input layers. At each layer it is multiplied by that layer's local derivative. When these factors are small, the gradient shrinks step by step until it approaches zero. The early layers then receive almost no update, and changes that should be made there are no longer reflected in training. The problem appears quickly when the model is very deep, and it degrades training. If the model is made shallow to avoid the problem, the network performance becomes too weak. Overall, this leaves the model unable to identify dark regions in the input X-ray images. Our proposed PneumoNet model addresses this major limitation, because its residual part effectively reduces the problem. Figure 1 illustrates how. When the gradient shrinks across feature maps 1, 2, and 3, the gradient value can be sent directly to feature map 1, making cross-layer propagation effective.
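The shrinking gradient described above follows from the chain rule. Consider a plain stack of $L$ layers with activations $h_l = f_l(h_{l-1})$. The gradient of the loss $\mathcal{L}$ with respect to an early activation is a product of per-layer Jacobians, so its norm is bounded by the product of their norms. The notation here is ours, not the paper's:

$$
\frac{\partial \mathcal{L}}{\partial h_0}
= \frac{\partial \mathcal{L}}{\partial h_L}\,
  \frac{\partial h_L}{\partial h_{L-1}}\cdots\frac{\partial h_1}{\partial h_0},
\qquad
\left\lVert \frac{\partial \mathcal{L}}{\partial h_0} \right\rVert
\le \left\lVert \frac{\partial \mathcal{L}}{\partial h_L} \right\rVert
    \prod_{l=1}^{L} \left\lVert \frac{\partial h_l}{\partial h_{l-1}} \right\rVert .
$$

Suppose every factor has a norm of at most $\gamma < 1$. With sigmoid activations, for instance, the activation derivative never exceeds $1/4$. The bound then decays like $\gamma^{L}$, which is why deeper plain networks are more prone to the problem.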

Figure 1.

Addressing the vanishing gradient problem.

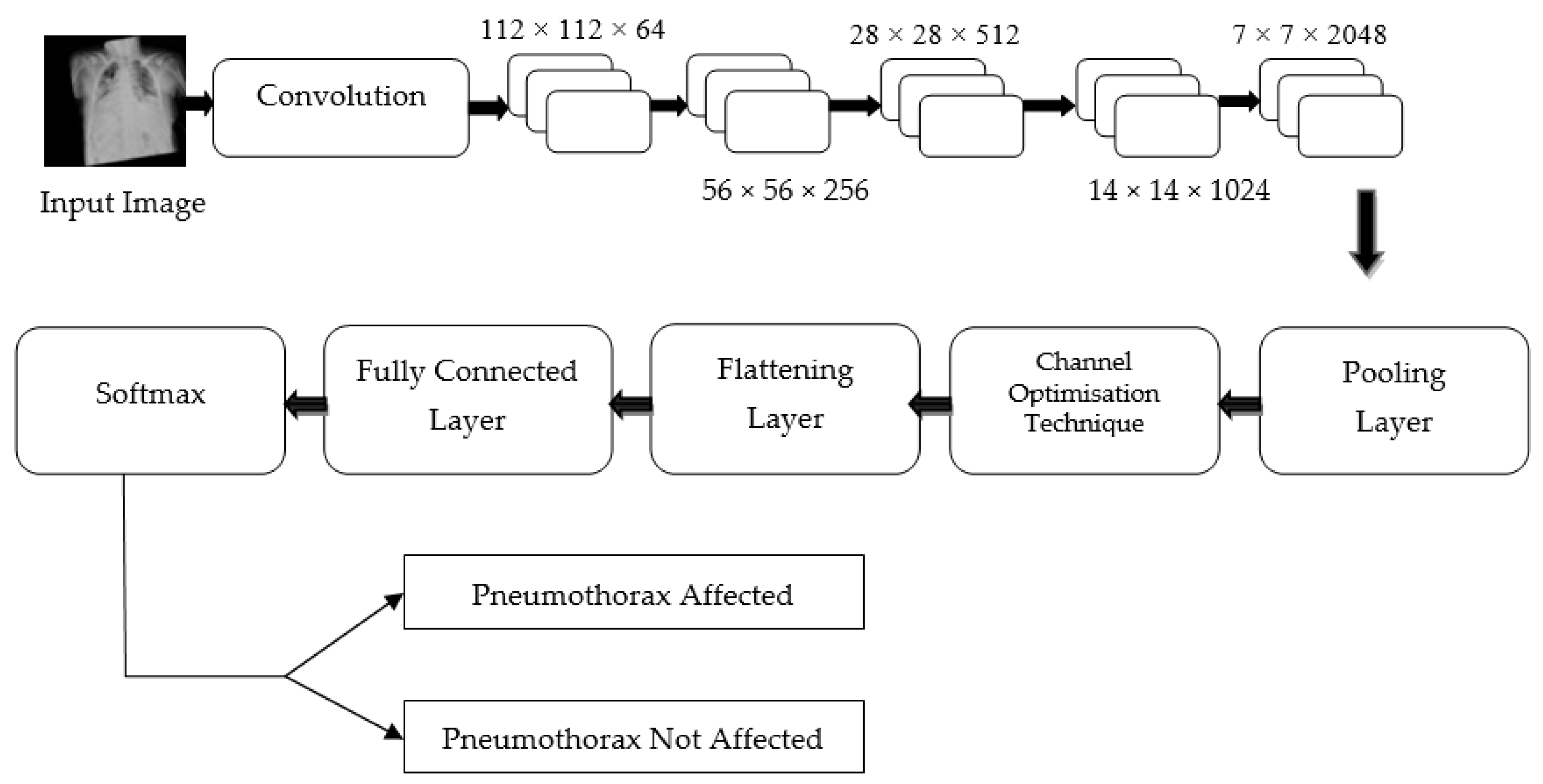

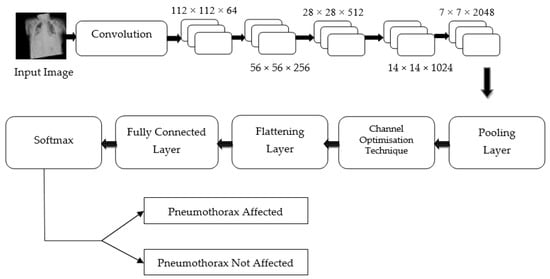

Hence, however small the gradient through the convolutional layers becomes, the residual block in PneumoNet passes it back unchanged through its identity (shortcut) path. The earlier layers therefore still receive a gradient close to the change required at the input. As a result, the gradient that reaches the input feature map is preserved rather than attenuated. The proposed architecture is shown in Figure 2.
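A minimal scalar sketch makes the contrast concrete. It compares how an upstream gradient of 1 travels back through a plain chain of layers and through a chain of residual blocks $y = x + F(x)$, whose local derivative is $1 + F'(x)$. The assumptions are that each layer is one-dimensional and that the branch derivatives are illustrative numbers, not values from PneumoNet:

```python
def propagate_gradient(branch_derivatives, residual):
    """Backpropagate an upstream gradient of 1.0 through a chain of scalar layers.

    branch_derivatives[k] is F_k'(x), the derivative of layer k's learned branch.
    Plain layer:    y = F(x)      -> local derivative F'(x)
    Residual layer: y = x + F(x)  -> local derivative 1 + F'(x)
    """
    grad = 1.0
    for d in reversed(branch_derivatives):  # backprop visits layers from last to first
        local = 1.0 + d if residual else d
        grad *= local                       # chain rule: multiply local derivatives
    return grad


# Ten layers whose branches have small derivatives of alternating sign (illustrative values).
derivs = [0.1, -0.1] * 5

plain = propagate_gradient(derivs, residual=False)
res = propagate_gradient(derivs, residual=True)
print(f"plain chain:    {plain:.3e}")  # magnitude about 1e-10: the gradient has vanished
print(f"residual chain: {res:.4f}")    # about 0.951: the identity path keeps it alive
```

The "+1" contributed by each shortcut keeps every local factor near one. The product therefore stays near one instead of collapsing toward zero.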

Figure 2.

Proposed architecture.

3.1. Dataset

We used the SIIM-ACR dataset for our investigation. The SIIM is a medical health body for people interested in current and potential applications of informatics in medical imaging. Its goal is to promote medical imaging informatics through scientific research, training, and creativity. The images in the dataset are stored in the Digital Imaging and Communications in Medicine (DICOM) format, which is used worldwide to represent medical images digitally. They come with run-length-encoded (RLE) masks and image identifiers as annotations. Each image is encoded with a binary mask and stored in a separate folder. The metadata of each image include the file location, the patient's age, sex, and duration of disease, the imaging modality, the body part examined, and the date and time of the examination.

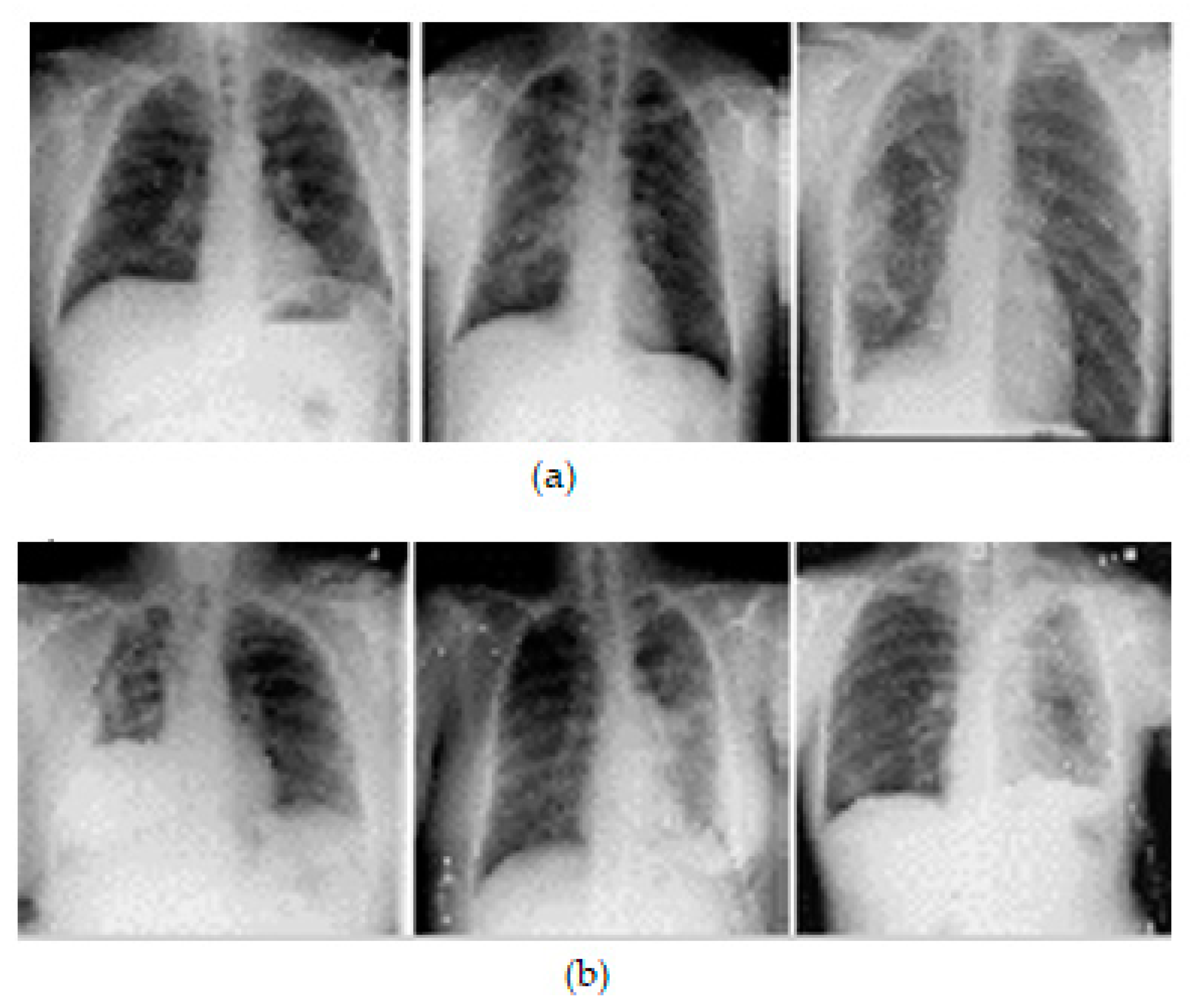

Pneumothorax cases are visible in some images and are marked by mask overlays in the annotations, and some training images carry several annotations. The dataset is open source and can be downloaded from Kaggle. It contains around 3116 labeled chest X-ray images for training and testing the model, stored in .png format at 1024 × 1024 pixels. After data augmentation, around 9400 images are labeled for training: 8300 are pneumothorax-affected and the remaining 1100 are unaffected. We tested the model on 655 original (non-augmented) images, of which 382 were affected by pneumothorax and 273 were not. All images were resized to 124 × 124 pixels. Because the dataset provides run-length-encoded masks, it can also be used for segmentation later.

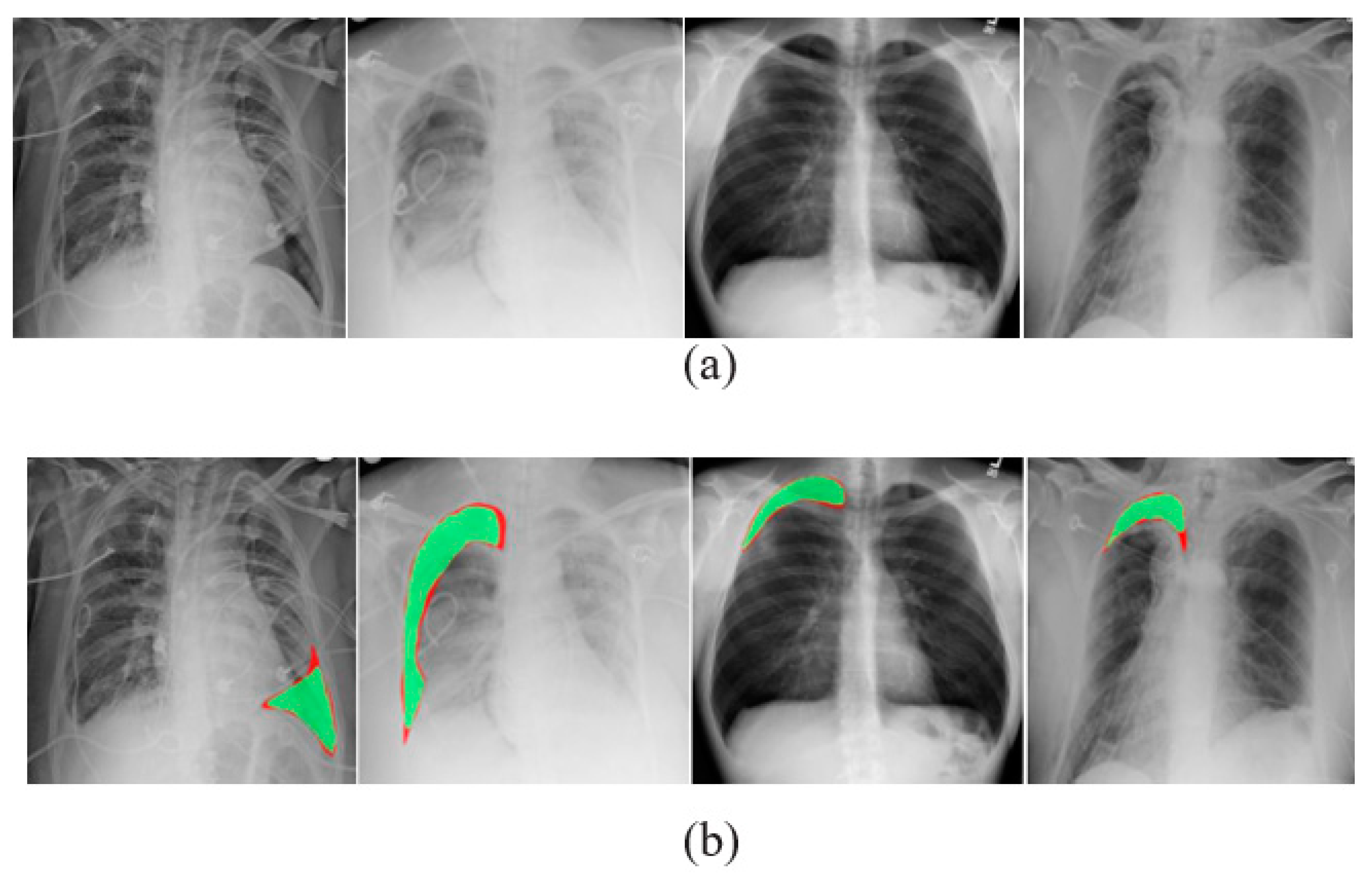

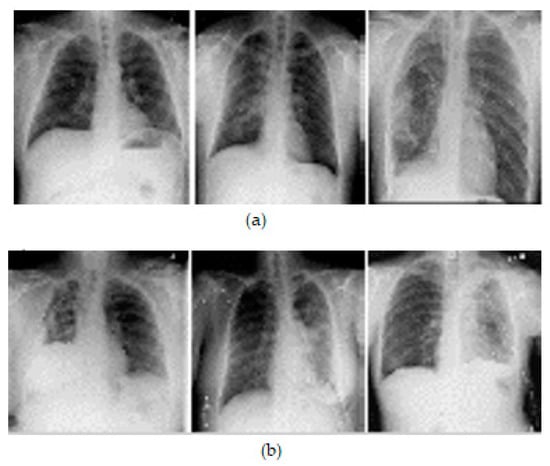

A comma-separated values (CSV) file was created for all images in the dataset. If a DICOM image does not contain a pneumothorax, its mask entry is −1. If the image contains a pneumothorax, the affected pixels are run-length encoded. A mask is created for every image: it stays empty (all zeros) for −1 entries and otherwise has the pixels given by the run-length encoding set. Masking the images in this way keeps the segmentation process consistent with the run-length encoding values. Table 2 lists the dataset details, and Figure 3 shows sample chest X-ray images from the dataset.
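The CSV convention above can be sketched as a small mask decoder. This is a minimal illustration, not the authors' code. The function name, the column-major pixel order, and the choice between absolute and relative run starts are assumptions to check against the dataset's own documentation:

```python
def decode_rle(rle, height, width, relative=False):
    """Decode a run-length-encoded mask string into a height x width 0/1 mask.

    Assumptions (verify against the dataset documentation):
    * "-1" (or an empty string) means "no pneumothorax" -> all-zero mask;
    * otherwise the string holds pairs "start length";
    * relative=False: starts are absolute, 1-indexed pixel positions;
      relative=True: each start is an offset from the end of the previous run;
    * pixels are numbered column by column (top to bottom, then left to right).
    """
    mask = [[0] * width for _ in range(height)]
    rle = rle.strip()
    if rle in ("", "-1"):
        return mask                       # negative image: empty mask
    values = [int(v) for v in rle.split()]
    position = 0                          # 0-indexed flat position after the previous run
    for start, length in zip(values[0::2], values[1::2]):
        first = position + start if relative else start - 1
        for flat in range(first, first + length):
            col, row = divmod(flat, height)   # column-major numbering
            mask[row][col] = 1
        position = first + length
    return mask


label = 0 if " -1".strip() == "-1" else 1     # classification label follows the same rule
print(decode_rle("2 2", 3, 3))                # [[0, 0, 0], [1, 0, 0], [1, 0, 0]]
```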

Table 2.

Dataset parameters.

Figure 3.

Samples of X-ray images: (a) pneumothorax affected and (b) pneumothorax not affected.

3.2. Data Preprocessing

The original 1024 × 1024 images were resized to 124 × 124 pixels. At this reduced size, the model needs fewer parameters and trains faster. Bicubic interpolation was used for resizing to preserve the important details of the images. The affine enhancement tool was used for image augmentation. It works well with OpenCV, its framework is simple, and it can be adapted for image segmentation, classification, and augmentation. It also supports the fine-tuning of noisy images through pixel-by-pixel transformation.
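A pure-Python sketch of bicubic resizing is shown below for clarity. In practice a library routine would be used, for example OpenCV's `cv2.resize` with `interpolation=cv2.INTER_CUBIC`. The sketch assumes the Keys cubic convolution kernel with a = −0.5, a half-pixel coordinate mapping, and border replication. The paper does not state which bicubic variant was applied, and libraries choose the kernel parameter differently.

```python
import math

def cubic_kernel(t, a=-0.5):
    """Keys cubic convolution kernel; a = -0.5 is one common choice (assumption)."""
    t = abs(t)
    if t <= 1:
        return (a + 2) * t**3 - (a + 3) * t**2 + 1
    if t < 2:
        return a * t**3 - 5 * a * t**2 + 8 * a * t - 4 * a
    return 0.0

def resize_bicubic(img, out_h, out_w):
    """Resize a grayscale image (list of rows of floats) with bicubic interpolation."""
    in_h, in_w = len(img), len(img[0])
    scale_y, scale_x = in_h / out_h, in_w / out_w
    out = []
    for oy in range(out_h):
        y = (oy + 0.5) * scale_y - 0.5            # map output pixel centre into the input
        y0 = math.floor(y)
        row = []
        for ox in range(out_w):
            x = (ox + 0.5) * scale_x - 0.5
            x0 = math.floor(x)
            acc = 0.0
            for m in range(-1, 3):                # 4 x 4 neighbourhood around (y0, x0)
                wy = cubic_kernel(y - (y0 + m))
                yy = min(max(y0 + m, 0), in_h - 1)   # replicate border pixels
                for n in range(-1, 3):
                    xx = min(max(x0 + n, 0), in_w - 1)
                    acc += wy * cubic_kernel(x - (x0 + n)) * img[yy][xx]
            row.append(acc)
        out.append(row)
    return out
```

The kernel weights sum to one at every position, so flat regions keep their intensity. For a large reduction such as 1024 to 124, the kernel samples only a 4 × 4 neighbourhood per output pixel. Some libraries add antialiasing for that case, which is omitted here.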

3.3. Data Augmentation

Because model performance tends to increase with dataset size, data augmentation plays a crucial role in image classification.

Data augmentation increases the number of samples in the dataset used to train a model. It generates modified copies of existing images using basic image processing operations such as panning, spinning (rotation), scaling, and padding. These altered images are then added to the dataset, increasing the amount of data available to train the model.

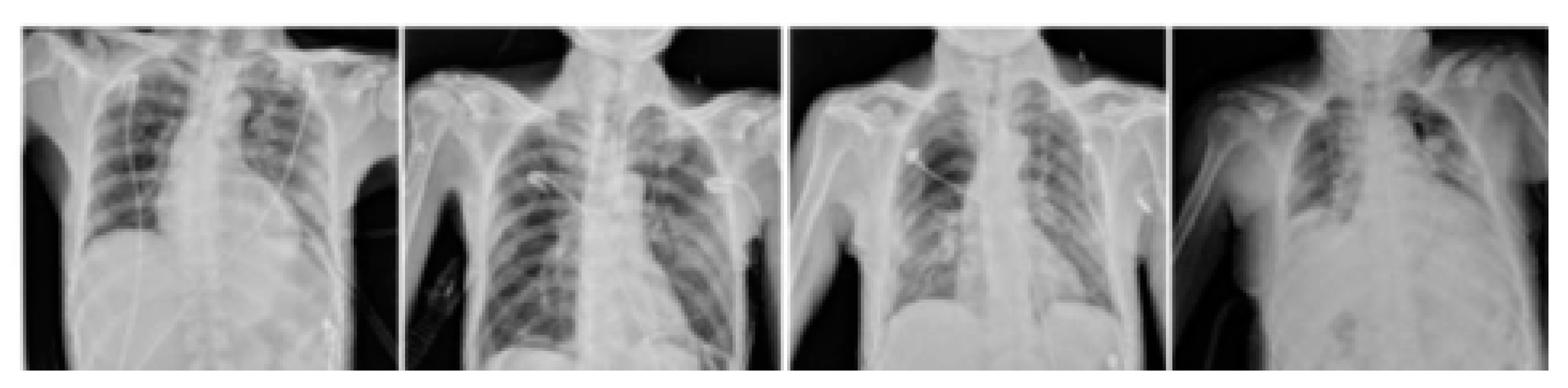

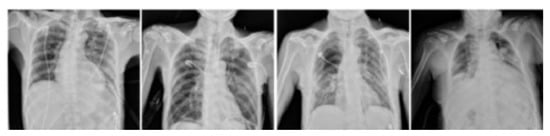

Besides expanding the dataset, this method gives the learning model additional features to learn. This study used two image processing operations, spinning and inversion, to augment the data. In the first phase, 120 X-ray images were inverted to produce 120 additional images, bringing the dataset to 240 images. In the second phase, the original 120 images were rotated by 90°, 180°, and 270°, and each rotation produced 120 additional images. Similarly, images were spun, resulting in the same number of additional images. These operations produced a dataset of 720 chest X-ray images, and the process was continued until the dataset contained 3000 image samples. Image quality was further adjusted with the following parameters: (1) brightness was augmented by a maximum factor of 0.1, (2) contrast was augmented by rescaling the original image to 5%, (3) the gamma factor was augmented to 125, and (4) contrast was augmented to 0.1. Figure 4 shows some samples of the augmented images, and Table 3 lists the augmented chest X-ray data.
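The rotation and inversion phases can be sketched on a small image stored as a list of rows. This is a hypothetical illustration, not the authors' pipeline. It reads "inversion" as a horizontal mirror; if intensity inversion was meant, the flip would be replaced by `255 - p` for each pixel `p`.

```python
def rotate90(img):
    """Rotate a 2-D image (list of rows) by 90 degrees clockwise."""
    return [list(row) for row in zip(*img[::-1])]

def flip_horizontal(img):
    """Mirror an image left to right (our reading of 'inversion')."""
    return [row[::-1] for row in img]

def augment(img):
    """Return [flipped, rotated 90, rotated 180, rotated 270] copies of img."""
    copies = [flip_horizontal(img)]
    rotated = img
    for _ in range(3):
        rotated = rotate90(rotated)       # each pass adds another 90 degrees
        copies.append(rotated)
    return copies


sample = [[1, 2, 3],
          [4, 5, 6]]
for copy in augment(sample):
    print(copy)
```

Applied to each of the 120 original images, this yields one flipped copy and three rotated copies per image.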

Figure 4.

Preprocessed samples of chest X-ray images using the affine augmentation tool.

Table 3.

Augmented chest x-ray images.

To verify the correctness of the augmented images, one augmented image was selected from each folder and checked with the following parameters: Gaussian noise with a variance in the range of 15 to 35, a maximum standard deviation applied with a probability of 0.5, and a kernel size of 5. All augmented images were flipped 20° horizontally, and the relative shift distance was fixed at 0.02. The selected image was then fed to the proposed classifier during training. If the response was positive, the remaining images in that augmented folder were accepted for further training.
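The Gaussian-noise step can be sketched as follows, using the variance range of 15 to 35 stated above. This is an illustration under assumptions. It treats images as 8-bit grayscale lists of rows, draws one variance per image, and clips results to 0 to 255. The probability and kernel-size parameters are not modeled, because their role is not specified precisely.

```python
import random

def add_gaussian_noise(img, var_range=(15.0, 35.0), rng=None):
    """Add zero-mean Gaussian noise to an 8-bit grayscale image (list of rows).

    One variance is drawn uniformly from var_range per image (assumption) and
    every result is clipped to the valid 0-255 range (assumption).
    """
    rng = rng or random.Random()
    variance = rng.uniform(*var_range)
    sigma = variance ** 0.5          # random.gauss takes a standard deviation, not a variance
    return [[min(255.0, max(0.0, p + rng.gauss(0.0, sigma))) for p in row] for row in img]


image = [[128] * 16 for _ in range(16)]            # flat mid-grey test image
noisy = add_gaussian_noise(image, rng=random.Random(42))
```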

3.4. Image Segmentation

The proposed PneumoNet architecture consists of a contracting path and an expanding path. Together they capture contextual information efficiently and learn complex features from the X-ray images. The contracting path consists of convolutional and max pooling layers that reduce the spatial resolution of the input image while increasing the number of feature channels. This path captures the context of the image and learns the features that are important for segmentation. The expanding path consists of deconvolutional and up-sampling layers that increase the spatial resolution of the output while decreasing the number of feature channels. It uses the features learned in the contracting path to generate an accurate segmentation map. As a result, the model can accurately classify the dark regions of the input chest X-ray images at different convolution stages. The augmented data produced by techniques such as panning, spinning, scaling, and padding support this. The classification results of the proposed work are better than those of existing approaches that did not use any segmentation method. The architecture therefore performs accurate segmentation with limited training data, which makes it a useful tool for pneumothorax detection, where annotated data may be scarce.
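The resolution and channel bookkeeping of a contracting/expanding path can be traced with a few lines of code. This is a hypothetical U-Net-style sketch. The paper does not report the depth or channel counts, so `base_channels=64` and `depth=2` are assumptions. Each 2 × 2 pooling step is assumed to halve the height and width and double the channels, and each up-sampling step does the reverse.

```python
def trace_unet_shapes(height, width, base_channels=64, depth=2):
    """Trace (height, width, channels) through a contracting and an expanding path.

    Raises ValueError if a pooling step would drop a row or column, because the
    up-sampled map would then no longer match the skip connection it is joined with.
    """
    h, w, c = height, width, base_channels
    shapes = [(h, w, c)]
    for _ in range(depth):                          # contracting path
        if h % 2 or w % 2:
            raise ValueError(f"{h}x{w} cannot be pooled by 2 without losing pixels")
        h, w, c = h // 2, w // 2, c * 2
        shapes.append((h, w, c))
    for _ in range(depth):                          # expanding path
        h, w, c = h * 2, w * 2, c // 2
        shapes.append((h, w, c))
    return shapes


for shape in trace_unet_shapes(124, 124):
    print(shape)
```

With 124 × 124 inputs, only two 2× pooling steps divide the size evenly (124 = 4 × 31). A deeper path would need padding or cropping to keep the skip connections aligned.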

3.5. Proposed PneumoNet Model

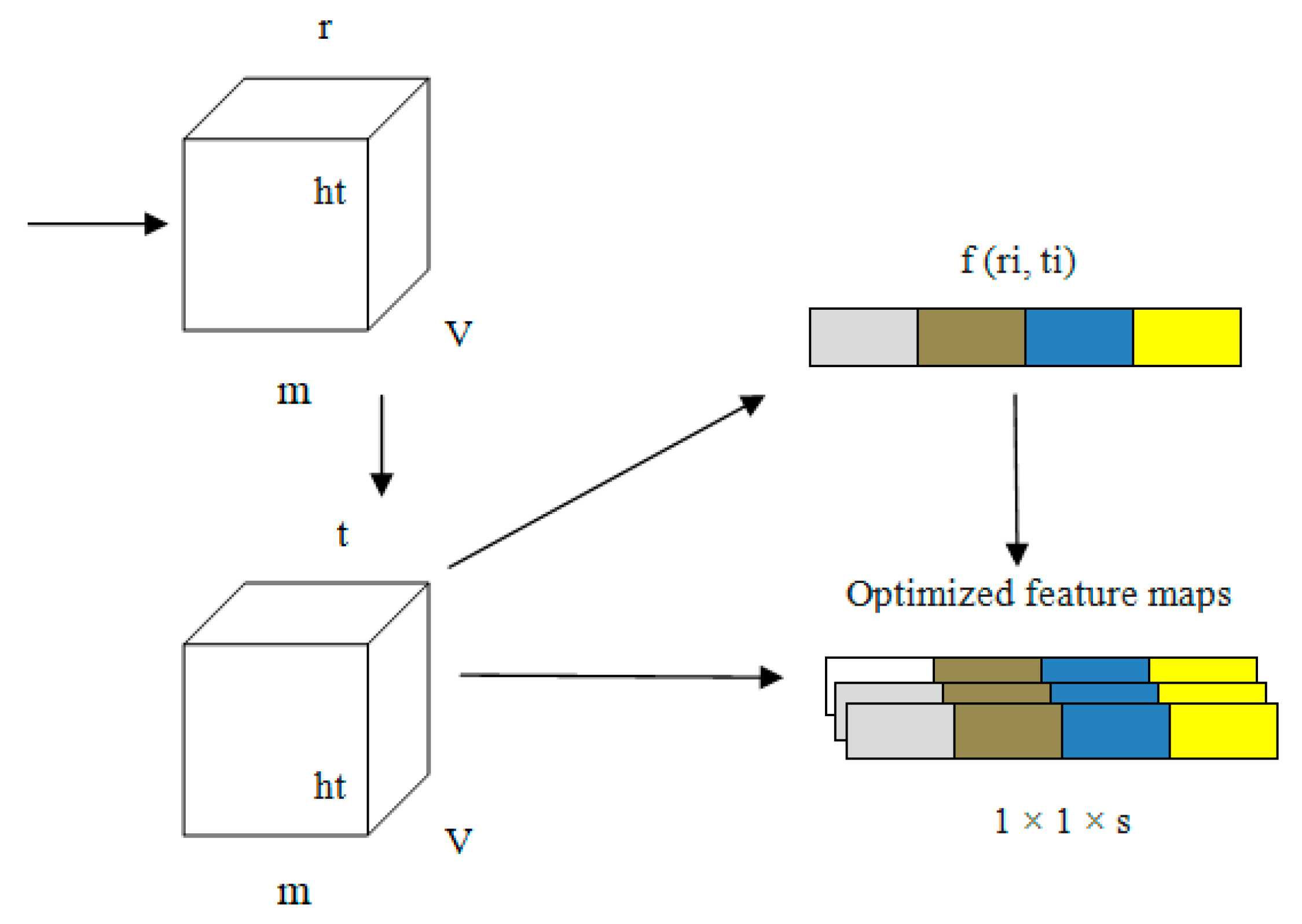

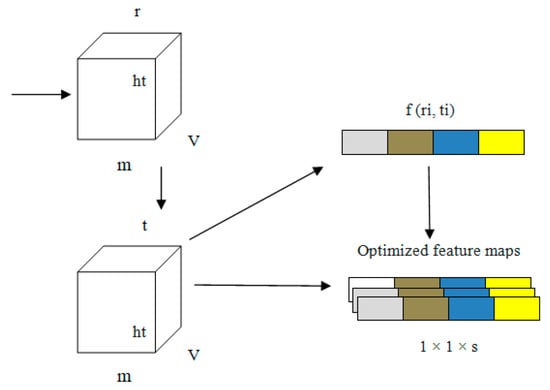

Each channel in PneumoNet is sufficiently deep, with a large number of feature maps, so it can recognize pneumothorax features that appear in different patterns. We added the COT to further improve the network. The COT reduces the loss that occurs in each channel when the input feature map changes during convolution. It models the dependencies among channels and recalibrates the features channel by channel, which strengthens the network's ability to identify pneumothoraces. In this way, the network learns to selectively strengthen features that carry valuable information and suppress those unrelated to pneumothorax image classification. Figure 5 shows the structure of the COT.

Figure 5.

Structure of the channel optimizing technique (COT).

Equations (1)–(4) express how the COT improves network performance. In these equations, ‘G’ is the input to the model; ‘ri’ and ‘ti’ are the values before and after optimizing the channel, respectively; ‘wt’ are the weights of the input; ‘m’ stores the average of weights; ‘v’ and ‘w’ are the activation function value and average pooling value, respectively, and ‘k’ is the counting value for every input ‘i,j’.

The pooling operation compresses the characteristics of the input ‘G’ from v × w to 1 × 1 × s. In the first fully connected layer, ‘r’ is set to 16. The second fully connected layer restores ‘G’ to 1 × 1 × s. The convolution output is obtained from ‘ri (i, j)’.

In the first step, an explanatory channel descriptor is formed by aggregating all spatial characteristics of each channel in the model. In the second step, the feature map ‘fn’ is fine-tuned based on the dependencies among channels, and this fine-tuned feature map is the output of this stage. The benefits of the COT extend to the entire network. The resulting features are then flattened by a flattening layer.
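As described, the COT pools each channel to a 1 × 1 × s descriptor, passes it through two fully connected layers with a ratio of 16, and rescales the channels. This closely resembles a squeeze-and-excitation block. The sketch below implements that reading in pure Python. It is an interpretation, not a reconstruction of the paper's Equations (1)–(4). The ReLU and sigmoid activations, the channel count of 32, and the random weights are assumptions.

```python
import math
import random

def channel_recalibration(feature_maps, w1, w2):
    """Squeeze-and-excitation style channel reweighting (our reading of the COT).

    feature_maps: s channels, each a 2-D grid (list of rows).
    w1: (s // r) rows of s weights  -> first fully connected layer (reduction).
    w2: s rows of (s // r) weights  -> second fully connected layer (restoration).
    Returns the rescaled feature maps and the per-channel scale factors.
    """
    # Squeeze: global average pooling gives one value per channel (a 1 x 1 x s descriptor).
    z = [sum(map(sum, ch)) / (len(ch) * len(ch[0])) for ch in feature_maps]
    # Excitation: FC + ReLU reduces to s // r values; FC + sigmoid restores s values in (0, 1).
    hidden = [max(0.0, sum(wk * zk for wk, zk in zip(row, z))) for row in w1]
    scales = [1.0 / (1.0 + math.exp(-sum(wk * hk for wk, hk in zip(row, hidden)))) for row in w2]
    # Scale: every value of channel k is multiplied by scales[k].
    rescaled = [[[scales[k] * x for x in line] for line in ch] for k, ch in enumerate(feature_maps)]
    return rescaled, scales


s, r = 32, 16                       # 32 channels (hypothetical); reduction ratio 16 as in the text
rng = random.Random(0)
maps = [[[rng.random() for _ in range(4)] for _ in range(4)] for _ in range(s)]
w1 = [[rng.gauss(0.0, 0.1) for _ in range(s)] for _ in range(s // r)]
w2 = [[rng.gauss(0.0, 0.1) for _ in range(s // r)] for _ in range(s)]
out, scales = channel_recalibration(maps, w1, w2)
```

Channels whose learned scale is near one pass through almost unchanged, and channels with small scales are suppressed. This matches the "strengthen useful features, suppress irrelevant ones" behaviour described for the COT.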

The fully connected layer classifies the input X-ray image. Finally, the output probabilities are obtained through Equation (5), the SoftMax function ‘S’:

$$S(z_k) = \frac{e^{z_k}}{\sum_{j} e^{z_j}} \qquad (5)$$

where $z_k$ is the output of the $k$-th neuron of the final layer.
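A numerically stable SoftMax can be written in a few lines. This sketch assumes that Equation (5) is the standard SoftMax and that the two output neurons are ordered as (no pneumothorax, pneumothorax). The example logits are hypothetical, chosen so that the output reproduces the 0.47/0.53 split reported in the results.

```python
import math

def softmax(logits):
    """Numerically stable SoftMax: S_k = exp(z_k) / sum_j exp(z_j)."""
    m = max(logits)                        # subtracting the max avoids overflow, same result
    exps = [math.exp(z - m) for z in logits]
    total = sum(exps)
    return [e / total for e in exps]


# Two output neurons: index 0 = no pneumothorax, index 1 = pneumothorax (order assumed).
probs = softmax([0.0, math.log(53 / 47)])
print([round(p, 2) for p in probs])        # [0.47, 0.53]
```

Shifting every logit by the same constant leaves the probabilities unchanged. That shift invariance is what allows the max-subtraction trick without altering the result.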

Apart from the COT, PneumoNet is built with an encoder pack and a decoder pack. The encoder pack extracts further features from the images produced by the COT, so the image is refined again for accurate classification. This downscaling is performed by pooling layers with a stride greater than one. The decoder pack up-scales the feature maps, and the encoder and decoder packs together carry out feature generation and extraction. Batch normalization is applied to all convolution layers to aid convergence. The model takes a chest X-ray image as input and classifies it as pneumothorax affected or unaffected. Binary cross-entropy serves as the loss function (Equation (6)):

$$\mathcal{L}_{\mathrm{BCE}} = -\frac{1}{n}\sum_{i=1}^{n}\Big[\,y_i \log \hat{y}_i + (1 - y_i)\log\big(1 - \hat{y}_i\big)\Big] \qquad (6)$$

where $y_i$ is the true label and $\hat{y}_i$ the predicted probability for the $i$-th of $n$ inputs. This loss can reduce the effect of the imbalance between positive and negative instances. It also makes the estimated gradient more stable over the inputs from $i = 1$ to $n$. All three branches used binary cross-entropy as their loss function, but each with its own weighting index.
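Binary cross-entropy (Equation (6)) can be sketched directly from its definition. The optional `pos_weight` and `neg_weight` arguments are a hypothetical addition. They show one common way of weighting the positive and negative terms to counter class imbalance, and the paper does not specify its weighting scheme.

```python
import math

def binary_cross_entropy(y_true, y_pred, pos_weight=1.0, neg_weight=1.0, eps=1e-7):
    """Mean (optionally class-weighted) binary cross-entropy over n samples."""
    total = 0.0
    for y, p in zip(y_true, y_pred):
        p = min(max(p, eps), 1.0 - eps)    # clip to avoid log(0)
        total -= pos_weight * y * math.log(p) + neg_weight * (1 - y) * math.log(1.0 - p)
    return total / len(y_true)


print(binary_cross_entropy([1, 0], [0.5, 0.5]))   # equals ln 2 for a maximally unsure model
```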

The pneumothorax-affected and -unaffected images are classified step by step. All augmented input images underwent various geometrical transformations, G, so that the model views the X-ray images from all angles and labels them accurately. Each time the model reaches a local optimum, L, our approach stores the cumulative weights of the best network. The average over L gives the overall classification accuracy of the model.

We conducted five-fold cross-validation for this investigation. The data were split equally into five distinct folds, so that each sample belongs to exactly one fold and never appears in another. In each round, four folds are used for training and one for testing, giving a training-to-testing ratio of 8:2. The reported accuracy is the average over the five folds.
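The fold construction can be sketched in a few lines. This is a minimal, unstratified illustration. The shuffling seed and the round-robin assignment are assumptions, and a stratified split would additionally keep the affected/unaffected ratio equal in each fold.

```python
import random

def k_fold_splits(n_samples, k=5, seed=0):
    """Yield (train_indices, test_indices) for k-fold cross-validation.

    Every index lands in exactly one test fold, so folds never overlap; with k = 5
    each round trains on 4/5 (80%) of the data and tests on the remaining 1/5 (20%).
    """
    indices = list(range(n_samples))
    random.Random(seed).shuffle(indices)
    folds = [indices[i::k] for i in range(k)]   # round-robin keeps fold sizes within one
    for i in range(k):
        test = folds[i]
        train = [idx for j, fold in enumerate(folds) if j != i for idx in fold]
        yield train, test


splits = list(k_fold_splits(655))
print([len(test) for _, test in splits])        # five folds of equal size
```

The reported accuracy would then be the mean of the five per-fold test accuracies.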

3.6. Hyper Parameter Optimization

The experiments were implemented in Python with TensorFlow and Keras on an NVIDIA GeForce RTX 3080 Ti GPU. We trained for 60 epochs with the Adam optimizer, a learning rate of 0.0001, and a batch size of 64, and we used grid search to fine-tune the model. Performance was checked over five consecutive training epochs. If it did not improve, the learning rate was reduced to one-tenth of its value. To prevent overfitting, training was stopped if performance did not improve for 15 epochs.
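In Keras, this schedule corresponds to the `tf.keras.callbacks.ReduceLROnPlateau` (with `factor=0.1, patience=5`) and `tf.keras.callbacks.EarlyStopping` (with `patience=15`) callbacks. The paper does not state the monitored metric or other settings. So that the logic runs without TensorFlow, the sketch below replays a hypothetical validation-score history under both rules in plain Python:

```python
def simulate_schedule(val_scores, lr=1e-4, factor=0.1, lr_patience=5, stop_patience=15):
    """Replay a validation-score history under reduce-on-plateau and early stopping.

    Returns (learning rate used at each epoch, index of the epoch where training stops).
    """
    best = float("-inf")
    since_best = 0      # epochs without improvement (drives early stopping)
    since_lr = 0        # epochs without improvement since the last LR change
    lrs = []
    for epoch, score in enumerate(val_scores):
        lrs.append(lr)
        if score > best:
            best, since_best, since_lr = score, 0, 0
            continue
        since_best += 1
        since_lr += 1
        if since_lr >= lr_patience:
            lr *= factor                   # cut the learning rate to one tenth
            since_lr = 0
        if since_best >= stop_patience:
            return lrs, epoch              # no improvement for 15 epochs: stop
    return lrs, len(val_scores) - 1


# Hypothetical history: improvement for three epochs, then a plateau.
lrs, stop = simulate_schedule([0.5, 0.6, 0.7] + [0.7] * 20)
print(stop, lrs[7], lrs[8])
```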

Validation accuracy peaked at a batch size of 64 and declined on either side of this value. Beyond a certain batch size, the model's ability to generalize deteriorates: the accuracy was 96.82% for a batch size of 128 and 91.02% for a batch size of 256. With the batch size fixed at 64, the accuracy was 98.81%, so we chose a batch size of 64.

We then searched for the best learning rate using PneumoNet with a batch size of 64, rather than assuming that 0.0004 was correct. Accuracy peaked at 0.00017 and decreased for both higher and lower values. If the learning rate is too low, the model takes longer to reach the best solution and may even settle on a poor fit to the data. If it is too high, the model cannot converge because the update steps are too large. With a learning rate of 0.0001, we reached the best validation accuracy of 98.81%.
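The selection step of the grid search can be sketched generically. The evaluator below is a stand-in. It looks up the batch-size accuracies reported above instead of training a model, and the configuration format is hypothetical. A full grid over batch size and learning rate would enumerate their combinations, for example with `itertools.product`.

```python
def grid_search(evaluate, grid):
    """Return the configuration with the highest validation score, and that score."""
    best_cfg, best_score = None, float("-inf")
    for cfg in grid:
        score = evaluate(cfg)              # in practice: train, then validate
        if score > best_score:
            best_cfg, best_score = cfg, score
    return best_cfg, best_score


# Stand-in evaluator: validation accuracies (%) reported in the text for each batch size.
reported = {64: 98.81, 128: 96.82, 256: 91.02}
grid = [{"batch_size": b} for b in (64, 128, 256)]
best_cfg, best_acc = grid_search(lambda cfg: reported[cfg["batch_size"]], grid)
print(best_cfg, best_acc)
```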

Dropout improves accuracy and helps avoid overfitting in DL models by randomly removing units from layers during training. This stops the network from relying on any individual unit, which improves its generalization ability. The optimal PneumoNet configuration achieved a validation accuracy of 98.41% with a dropout rate of 10.59%, a learning rate of 0.0001, and a batch size of 64. Table 4 shows the results.
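Dropout can be sketched with the "inverted" formulation, which is also what `tf.keras.layers.Dropout` applies. During training, each unit is zeroed with probability `rate` and the survivors are scaled by `1 / (1 - rate)`. The expected activation is therefore unchanged, and inference needs no rescaling. The rate of 10.59% comes from the text, and the input vector is illustrative.

```python
import random

def dropout(activations, rate, rng, training=True):
    """Inverted dropout: zero each unit with probability `rate` during training and
    scale the survivors by 1 / (1 - rate); at inference the input passes unchanged."""
    if not training or rate == 0.0:
        return list(activations)
    keep = 1.0 - rate
    return [a / keep if rng.random() < keep else 0.0 for a in activations]


rng = random.Random(0)
units = [1.0] * 100_000
dropped = dropout(units, rate=0.1059, rng=rng)      # dropout rate reported in the text
print(dropped.count(0.0) / len(dropped))            # close to 0.1059
```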

Table 4.

Results of hyperparameter optimization.

3.7. Computational Complexities

The computational cost of the proposed approach is as follows. The inference time is 0.381 ms, and the model size is 32.11 KB. PneumoNet outperformed EfficientNet and MobileNet in accuracy while requiring considerably less computation (12.5 GFLOPs) and fewer parameters (7.61 million) than both networks. Table 5 details the computational complexity of our approach.
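The link between a parameter count and storage size is simple arithmetic. The sketch assumes uncompressed weights at a fixed numeric precision and ignores file-format overhead, quantization schemes, and compression.

```python
def weights_size_mb(num_params, bytes_per_param=4):
    """Approximate storage for uncompressed weights, in megabytes (10**6 bytes)."""
    return num_params * bytes_per_param / 1e6


params = 7.61e6                                   # parameter count reported for PneumoNet
for name, nbytes in [("float32", 4), ("float16", 2), ("int8", 1)]:
    print(f"{name}: {weights_size_mb(params, nbytes):.2f} MB")
```

Under these assumptions, 7.61 million parameters occupy roughly 30 MB at 32-bit precision. Size comparisons between models should therefore state the precision and unit used.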

Table 5.

Computational complexities of the proposed approach.

4. Results

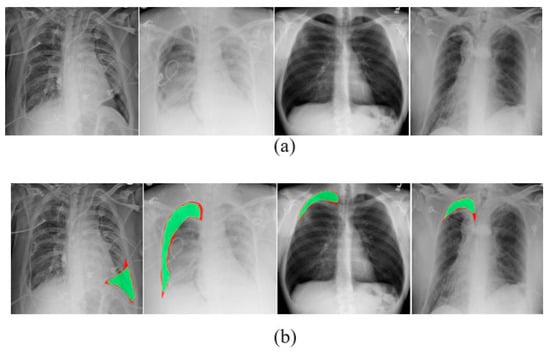

The results produced by our proposed PneumoNet model are presented and discussed in this section. Figure 6 shows the outcome of the proposed classifier when it has identified the disease.

Figure 6.

(a) Visually identified pneumothorax and (b) pneumothorax identified in X-ray images by PneumoNet.

We analyzed the performance of the proposed model using standard classification metrics, based on the true positive (Tr. Pt), true negative (Tr. Nt), false negative (Fs. Nt), and false positive (Fs. Pt) counts of the predicted images.

Tr. Pt (True Positive): pneumothorax-affected images (with air pockets in the lungs) that are correctly classified as affected. This group contains around 9817 images.

Tr. Nt (True Negative): unaffected images (without air pockets in the lungs) that are correctly classified as unaffected.

Fs. Nt (False Negative): affected images that are incorrectly classified as unaffected.

Fs. Pt (False Positive): unaffected images that are incorrectly classified as having air pockets (affected). This group contains 26 images.

Equations (7)–(10) are used to calculate the model performance.

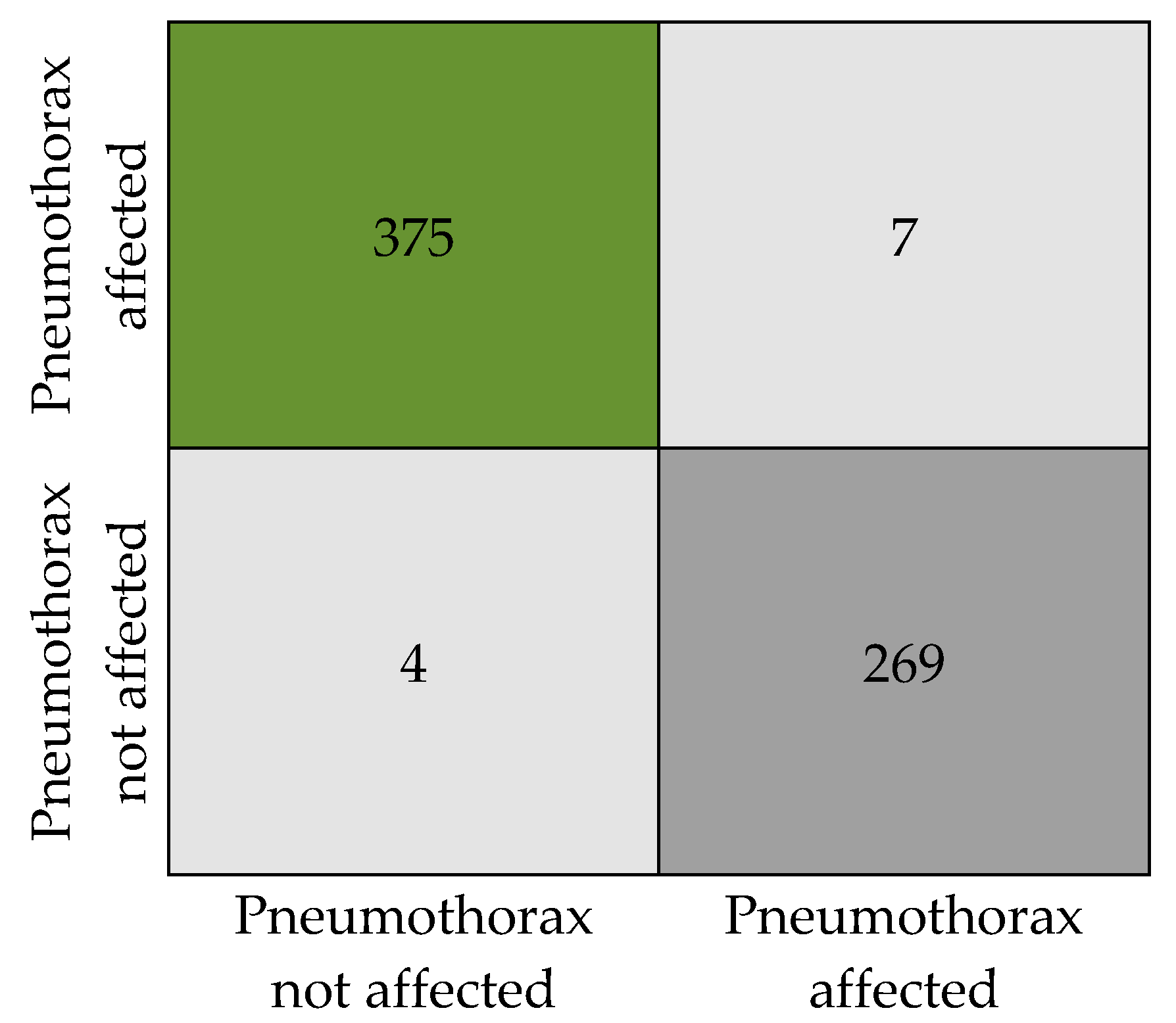

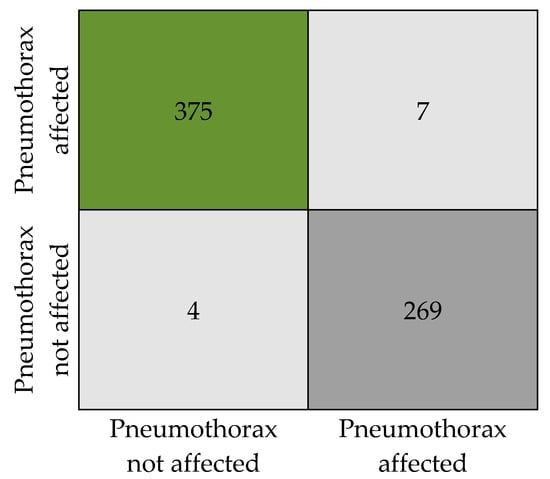
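The four metrics can be computed directly from the confusion-matrix counts. This sketch assumes that Equations (7)–(10) are the standard definitions of accuracy, precision, recall, and F1 score. The counts used in the example are the test-set figures reported in this section.

```python
def classification_metrics(tp, fn, tn, fp):
    """Accuracy, precision, recall and F1 from binary confusion-matrix counts."""
    accuracy = (tp + tn) / (tp + tn + fp + fn)
    precision = tp / (tp + fp)
    recall = tp / (tp + fn)                    # also called sensitivity
    f1 = 2 * precision * recall / (precision + recall)
    return {"accuracy": accuracy, "precision": precision, "recall": recall, "f1": f1}


# Test-set counts reported in this section: 375 TP, 7 FN, 269 TN, 4 FP.
metrics = classification_metrics(tp=375, fn=7, tn=269, fp=4)
print({name: round(value, 4) for name, value in metrics.items()})
```

With these counts, the formulas give an accuracy of about 98.3%, a precision of about 0.989, a recall of about 0.982, and an F1 score of about 0.986.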

Figure 7 presents the numeric details of the classification results in the form of a confusion matrix. The matrix expresses the total count of X-ray images that are classified as pneumothorax affected and unaffected.

Figure 7.

Confusion matrix.

The classification results obtained by the proposed model are tabulated in Table 6. Our model achieved an overall accuracy of 98.41%, an F1 score of 0.98, a precision of 0.98, and a recall of 0.984. We tested the model on a total of 655 sample images from the dataset without augmentation: 382 with pneumothorax and 273 without pneumothorax, as in Table 2. Of the 382 affected images, 375 were correctly predicted as affected and 7 were wrongly predicted as not affected. Similarly, of the 273 unaffected images, 269 were correctly predicted as not affected and 4 were wrongly predicted as affected. We also examined how the model separates the affected and unaffected classes. The SoftMax function used for binary recognition at the final stage of the model maps the outputs of the neurons in the final layer to the interval [0, 1], so the two class probabilities sum to one. For example, for one input X-ray image, the probability that the disease is present is 0.53 and the probability that the image is not affected by pneumothorax is 0.47.
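The counts reported above are enough to recompute the summary metrics. The sketch below treats "affected" as the positive class and applies the usual metric definitions. It illustrates the arithmetic and is not the authors' evaluation code; `binary_metrics` is a hypothetical helper.

```python
# Recompute summary metrics from the confusion-matrix counts reported for the
# 655-image test set (positive class = affected by pneumothorax).

def binary_metrics(tp: int, fn: int, tn: int, fp: int) -> dict:
    """Compute standard binary classification metrics from raw counts."""
    accuracy = (tp + tn) / (tp + tn + fp + fn)
    precision = tp / (tp + fp)    # share of "affected" predictions that are correct
    recall = tp / (tp + fn)       # sensitivity: share of affected images detected
    specificity = tn / (tn + fp)  # share of unaffected images correctly rejected
    f1 = 2 * precision * recall / (precision + recall)
    return {"accuracy": accuracy, "precision": precision, "recall": recall,
            "specificity": specificity, "f1": f1}

# 375 of 382 affected and 269 of 273 unaffected images classified correctly.
metrics = binary_metrics(tp=375, fn=7, tn=269, fp=4)
for name, value in metrics.items():
    print(f"{name:>11}: {value:.4f}")
```

With these counts:

- accuracy is 644/655 ≈ 0.983
- precision is 375/379 ≈ 0.989
- recall is 375/382 ≈ 0.982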

Table 6.

Overall results obtained from the proposed model.

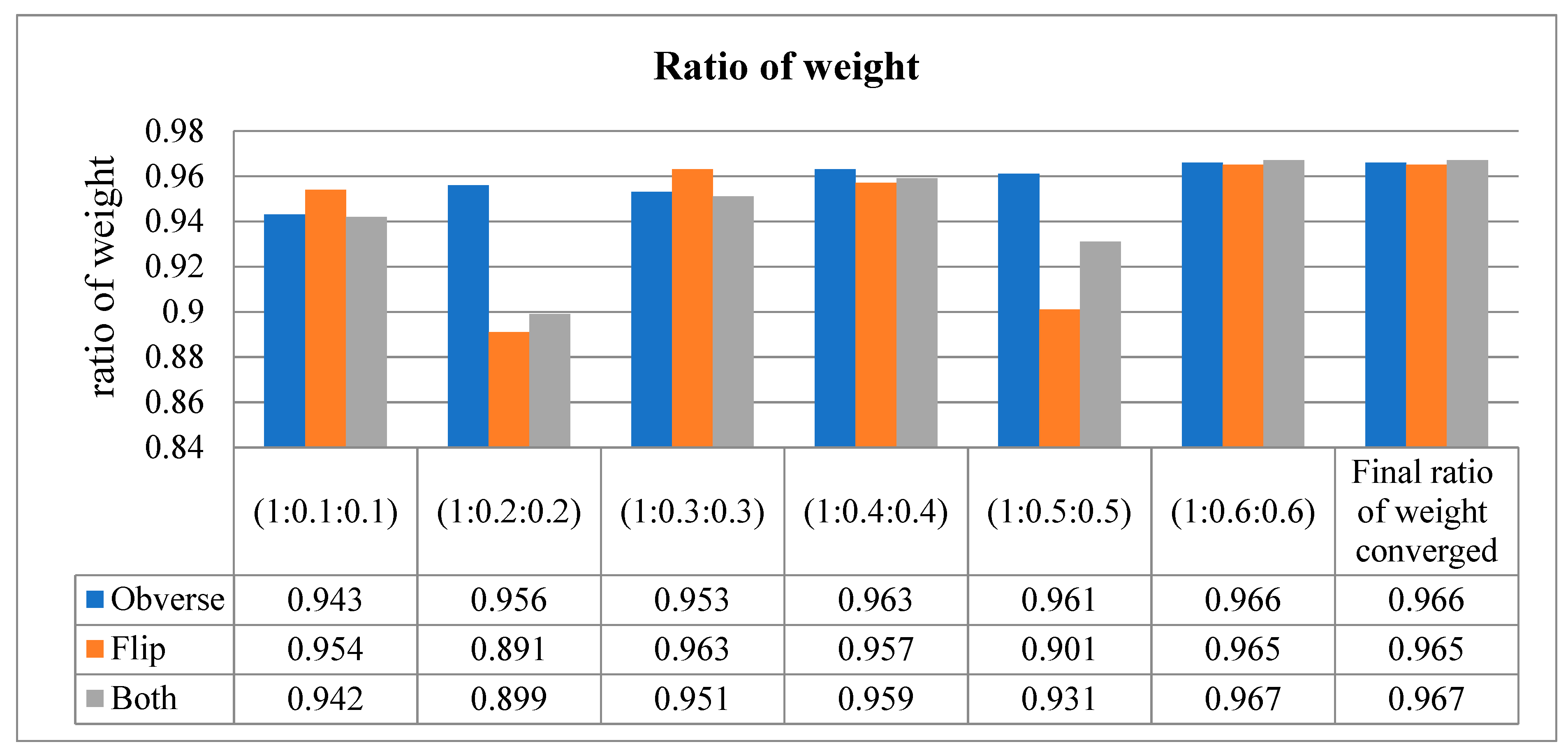

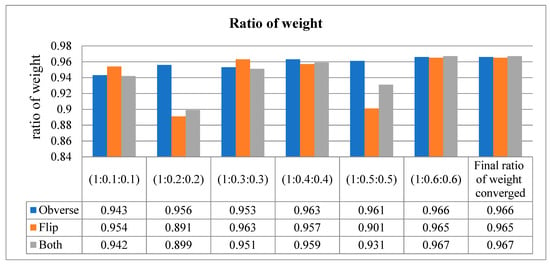
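A two-class example illustrates how the SoftMax output layer described above turns raw scores into probabilities in [0, 1] that sum to one. The logits below are hypothetical values chosen to reproduce the 0.53/0.47 split mentioned in the text. They are not taken from PneumoNet.

```python
import math

def softmax(logits):
    """Map raw scores to probabilities in [0, 1] that sum to 1."""
    m = max(logits)                           # subtract the max for numerical stability
    exps = [math.exp(z - m) for z in logits]
    total = sum(exps)
    return [e / total for e in exps]

# Hypothetical final-layer scores for one X-ray: [affected, not affected].
probs = softmax([0.12, 0.0])
print([round(p, 2) for p in probs])  # [0.53, 0.47]
```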

Our proposed model assesses each input image in three different views. The model is first trained on the obverse (original) side of the X-ray image and then on its flipped side. Finally, it combines the values from both sides and performs classification. This training scheme increases the training loss, which affects the accuracy. Hence, to avoid compromising the accuracy of the model, the weight ratio between the three views (obverse, flip, and both) was adjusted to reduce the loss.

The loss weights for the three views were set to 1:0.6:0.6 (obverse:flip:both), resulting in a higher area under the curve (AUC).
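One plausible way to apply a 1:0.6:0.6 ratio is to weight the loss of each view before summing. The sketch below makes two assumptions: binary cross-entropy is the per-view loss, and each view yields its own prediction. The text does not specify either, and the function names are hypothetical.

```python
import math

def bce(p_affected: float, label: int, eps: float = 1e-7) -> float:
    """Binary cross-entropy for one prediction (label 1 = affected)."""
    p = min(max(p_affected, eps), 1 - eps)  # clip to avoid log(0)
    return -(label * math.log(p) + (1 - label) * math.log(1 - p))

def weighted_view_loss(p_obverse: float, p_flip: float, p_both: float,
                       label: int, weights=(1.0, 0.6, 0.6)) -> float:
    """Combine the per-view losses using the obverse:flip:both weight ratio."""
    losses = (bce(p_obverse, label), bce(p_flip, label), bce(p_both, label))
    return sum(w * l for w, l in zip(weights, losses))

# Hypothetical predictions of the three views for one affected image (label = 1).
print(round(weighted_view_loss(0.9, 0.8, 0.85, label=1), 4))  # 0.3368
```

Down-weighting the flipped and combined views keeps the obverse view dominant. At the same time, the other two views still contribute to the gradient.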

The AUC describes how well the model separates the two classes. It is less sensitive than accuracy to the imbalance between the numbers of affected and unaffected samples.

According to Table 7, the training sequence obverse–flip–both records the highest average accuracy, 0.97. With the sequence flip–obverse–both, the average accuracy is around 0.96. When training is performed on both sides, the average AUC value is 0.97.

Table 8 lists the weight ratios tested for the proposed model. The ratio was first set to 1:0.1:0.1. The weights of the flip and combined views were then gradually increased up to 1:0.6:0.6. The search stopped at 0.6 because accuracy reached its maximum there and did not change at higher ratios. Hence, only ratios up to 1:0.6:0.6 are recorded in Table 8.
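The ratio search described above can be sketched as a sweep with early stopping:

1. Fix the obverse weight at 1.
2. Raise the other two weights step by step.
3. Stop once the validation score no longer improves.

`evaluate` is a hypothetical callback standing in for a full train-and-validate run. The toy scores in the usage example are invented to mimic the described plateau.

```python
from typing import Callable, Optional, Sequence, Tuple

Ratio = Tuple[float, float, float]

def sweep_view_weights(evaluate: Callable[[Ratio], float],
                       candidates: Sequence[float],
                       tol: float = 1e-4) -> Tuple[Optional[Ratio], float]:
    """Raise the flip/both weights step by step (obverse fixed at 1) and stop
    as soon as the validation score improves by no more than `tol`."""
    best_ratio: Optional[Ratio] = None
    best_score = float("-inf")
    for w in candidates:
        ratio = (1.0, w, w)
        score = evaluate(ratio)
        if best_ratio is not None and score <= best_score + tol:
            break  # plateau reached: keep the previous best ratio
        best_ratio, best_score = ratio, score
    return best_ratio, best_score

# Toy stand-in for "train and validate with this ratio" (invented scores
# that stop improving beyond a weight of 0.6).
def toy_evaluate(ratio: Ratio) -> float:
    return 0.9 + 0.1 * min(ratio[1], 0.6)

grid = [0.1, 0.2, 0.3, 0.4, 0.5, 0.6, 0.7, 0.8]
best_ratio, best_score = sweep_view_weights(toy_evaluate, grid)
print(best_ratio)  # (1.0, 0.6, 0.6)
```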

Table 7.

Area under the curve values for all three sides of the input X-ray image.

Table 8.

Ratio of weight obtained at all three sides of the input X-ray image.

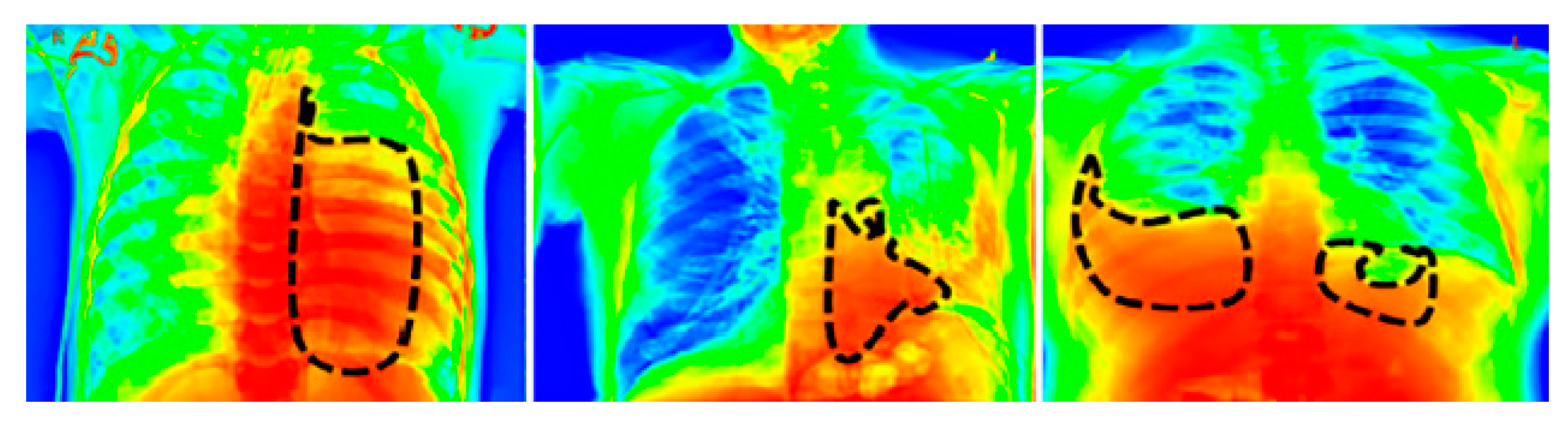

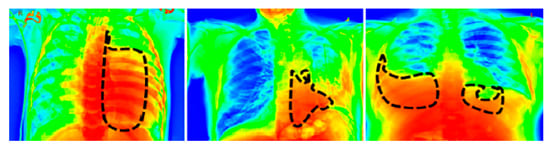

For a visual representation of the proposed model's output, we used the Grad-CAM [39] heat map method. The heat map highlights the regions of the chest X-ray that contribute most to the model's prediction. To help localize pneumothorax in chest X-ray images and guide the subsequent course of action, Grad-CAM presents the output as a color-coded image. Grad-CAM can be applied to any convolution layer, but it is typically applied to the final convolution layer of a network. The heat maps in Figure 8 are clear enough that a radiologist can use the highlighted colors to work quickly and confidently. In the example heat map generated by the proposed model in Figure 8, the black patterned line indicates the affected region of the lungs.
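The core Grad-CAM computation is short once two inputs are available: a convolution layer's activations, and the gradients of the target class score with respect to those activations. The NumPy sketch below follows the standard Grad-CAM formulation:

1. Average the gradients over space to get channel weights.
2. Take the weighted sum of the feature maps.
3. Apply a ReLU.

Extracting the activations and gradients from PneumoNet would require framework-specific hooks, which are not shown. The random arrays are placeholders.

```python
import numpy as np

def grad_cam(activations: np.ndarray, gradients: np.ndarray) -> np.ndarray:
    """Grad-CAM heat map for one convolution layer.

    activations: feature maps A^k, shape (K, H, W)
    gradients:   d(class score)/dA^k, same shape
    Returns an (H, W) map scaled to [0, 1].
    """
    weights = gradients.mean(axis=(1, 2))             # alpha_k: spatially averaged gradients
    cam = np.tensordot(weights, activations, axes=1)  # sum_k alpha_k * A^k -> (H, W)
    cam = np.maximum(cam, 0.0)                        # ReLU keeps only positive evidence
    peak = cam.max()
    return cam / peak if peak > 0 else cam

# Placeholder tensors standing in for a real network's last conv layer.
rng = np.random.default_rng(0)
A = rng.random((8, 7, 7))
dA = rng.standard_normal((8, 7, 7))
heat = grad_cam(A, dA)
print(heat.shape)  # (7, 7)
```

In practice, the (H, W) map is upsampled to the input resolution and overlaid on the X-ray as a colored heat map.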

Figure 8.

Grad-CAM heat map of the proposed model's output.

We observe that adjusting the ratio of weights between the three views of the X-ray image increases the weighted-average and macro-average scores of the proposed PneumoNet model.
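Macro and weighted averages are two ways of combining per-class scores. The macro average treats both classes equally. The weighted average weights each class by its number of samples (its support).

The sketch below computes both for the F1 score, using the test-set counts reported earlier in this section:

- 375 affected images correct, 7 missed
- 269 unaffected images correct, 4 wrongly flagged

It illustrates the definitions and is not the authors' evaluation code; `per_class_f1` is a hypothetical helper.

```python
def per_class_f1(tp: int, fp: int, fn: int) -> float:
    """F1 score for one class, treating that class as the positive one."""
    return 2 * tp / (2 * tp + fp + fn)

f1_affected = per_class_f1(tp=375, fp=4, fn=7)    # positive class = affected
f1_unaffected = per_class_f1(tp=269, fp=7, fn=4)  # positive class = unaffected
support = {"affected": 382, "unaffected": 273}

macro_f1 = (f1_affected + f1_unaffected) / 2      # each class counts equally
weighted_f1 = (support["affected"] * f1_affected  # each class weighted by its size
               + support["unaffected"] * f1_unaffected) / sum(support.values())
print(f"macro F1 = {macro_f1:.4f}, weighted F1 = {weighted_f1:.4f}")
```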

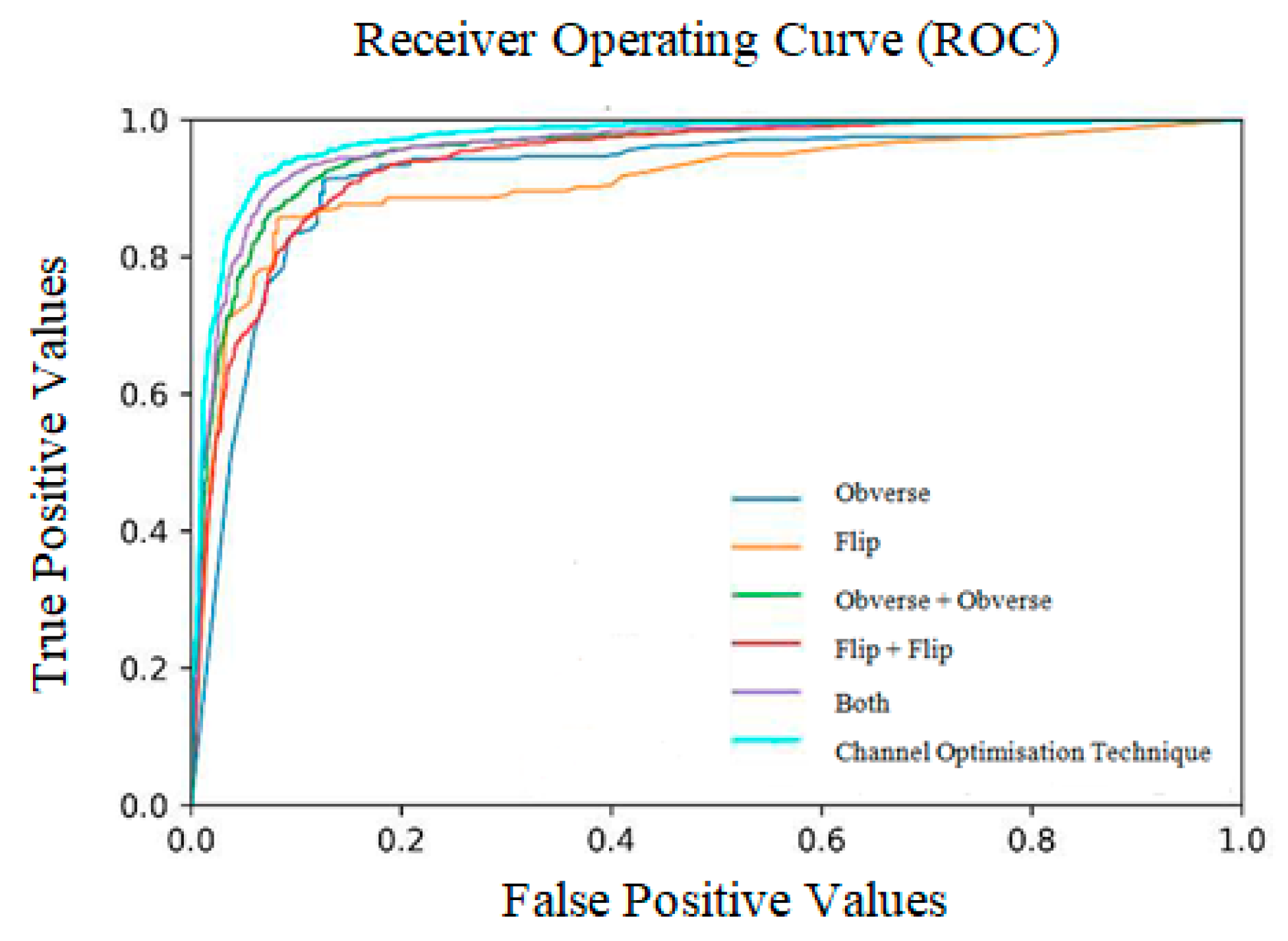

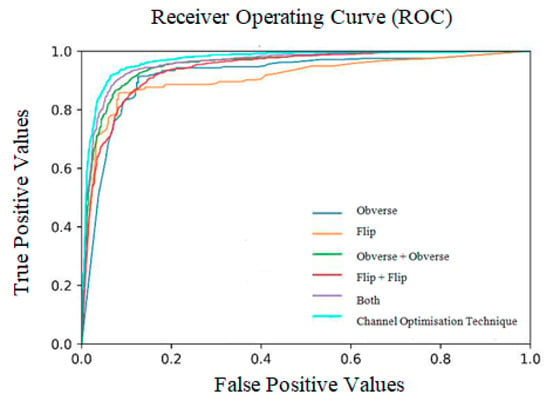

The ratio of weight obtained at all three sides is shown in Table 8 and Figure 9. The receiver operating characteristic (ROC) curves obtained for the entire model are shown in Figure 10. The model's outcome is promising largely because data augmentation was performed. If the model is trained with new positive samples, it overfits, which shows up as a reduced recognition rate and hence a reduced accuracy. The AUC values indicate that the model copes well with the uneven numbers of input samples. Overall, the outputs obtained at the various stages of the PneumoNet model are promising, reaching 98.41% accuracy in recognizing the presence of a pneumothorax.
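The rank-based definition of AUC shows why it is not distorted by uneven class sizes. AUC only compares positives against negatives, so replicating one class changes the class ratio but not the AUC. The scores below are hypothetical. In practice, a library routine such as scikit-learn's `roc_auc_score` would normally be used instead of this loop.

```python
from itertools import product

def roc_auc(scores_pos, scores_neg) -> float:
    """AUC as the probability that a random positive scores higher than a
    random negative (ties count one half)."""
    wins = 0.0
    for sp, sn in product(scores_pos, scores_neg):
        if sp > sn:
            wins += 1.0
        elif sp == sn:
            wins += 0.5
    return wins / (len(scores_pos) * len(scores_neg))

# Hypothetical softmax outputs for the "affected" class.
pos = [0.95, 0.80, 0.70, 0.40]
neg = [0.30, 0.60]
print(roc_auc(pos, neg))       # 0.875
print(roc_auc(pos * 10, neg))  # 0.875: ten times more positives, same AUC
```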

Table 9.

Proposed method’s results for different sized pneumothoraces.

Figure 9.

Ratio of weight (converged at 1:0.6:0.6).

Figure 10.

Receiver operating characteristic (ROC) curves.

4.1. Analysis of Model’s Accuracy for Various Pneumothoraces

To evaluate the proposed model's classification accuracy across pneumothorax sizes, we performed the following analysis. Pneumothoraces were grouped by the visceral–parietal pleural separation in one lung area [40]:

- small: a separation of up to 1 cm
- moderate: a separation greater than 1 cm and less than 2 cm
- large: a separation greater than 2 cm

We assessed the accuracy, specificity, and sensitivity of the proposed approach for each of these three categories, and the results are shown in Table 9. The proposed model is highly effective in classifying large and moderate pneumothoraces, but its accuracy is lower for small pneumothoraces:

- Large pneumothoraces: a specificity of 97.2%, a sensitivity of 98.06%, and an accuracy of 98.13%.
- Moderate pneumothoraces: a sensitivity of 97.3%, a specificity of 97.1%, and an accuracy of 98.1%.
- Small pneumothoraces: a lower specificity of 96.8%, a sensitivity of 95.6%, and an accuracy of 97.81%.

The other metrics, such as the F1 score, recall, and precision, remain high for large and moderate pneumothoraces and are lower for small pneumothoraces.
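The size grouping above amounts to two thresholds on the pleural separation. The helper below is hypothetical. The text does not state how a separation of exactly 2 cm is assigned, so treating it as moderate is an assumption.

```python
def pneumothorax_size(separation_cm: float) -> str:
    """Size category from the visceral-parietal pleural separation (cm),
    using the 1 cm and 2 cm thresholds described in the text."""
    if separation_cm < 0:
        raise ValueError("separation must be non-negative")
    if separation_cm <= 1.0:
        return "small"
    if separation_cm <= 2.0:  # assumption: exactly 2 cm is counted as moderate
        return "moderate"
    return "large"

print([pneumothorax_size(d) for d in (0.5, 1.0, 1.5, 2.0, 3.2)])
# ['small', 'small', 'moderate', 'moderate', 'large']
```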

4.2. Ablation Study

Four experiments were performed as part of an ablation study, each altering a different part of the proposed PneumoNet model. Altering individual components makes it possible to arrive at a more reliable design with a higher recognition rate. The ablation study covered the following variations:

- modifying the numbers of dense and convolution layers
- adjusting the activation function
- varying the dropout value
- comparing the accuracy with and without the COT

4.3. Ablation Study_1: Modifying the Number of Dense and Convolution Layers

Table 10 presents the results obtained when the numbers of dense and convolution layers are modified. For each configuration, it lists the training accuracy, the validation accuracy, and the inference drawn from the results.

| Convolution layers | Dense layers | Training accuracy | Validation accuracy |
|---|---|---|---|
| 7 | 5 | 65.21% | 69.79% |
| 6 [41] | 4 | 90.71% | 72.65% |
| 5 | 4 | 92.67% | 91.51% |
| 4 | 3 | 93.87% | 93.88% |
| 2 | 3 | 98.4% | 98.3% |

Seven convolution layers and five dense layers gave the lowest accuracies obtained. Two convolution layers and three dense layers gave the highest training and validation accuracies.

Table 10.

Results of ablation study 1, modifying both the number of dense and convolution layers.

4.4. Ablation Study_2: Adjusting the Activation Function

Table 11 shows the results obtained when adjusting the activation function. We compared the ReLU, tanh, and sigmoid activation functions to find the best training and validation results [37]. With tanh, the training and validation accuracies were 88.92% and 90.62%, respectively. With the sigmoid activation function, the training accuracy was 91.32% and the validation accuracy was 90.87%. ReLU gave the highest training and validation accuracies, 97.99% and 96.89%, respectively. Hence, ReLU was chosen as the activation function for the proposed model.

Table 11.

Results of ablation study 2, adjusting the activation function.

4.5. Ablation Study_3: Varying the Dropout Value

Table 12 contains the results obtained for various dropout rates. A dropout rate of 0.1 gave the highest accuracies: a training accuracy of 98.39% and a validation accuracy of 98.36%. With a dropout rate of 0.15, the validation and training accuracies were 94.61% and 90.41%, respectively. With a dropout rate of 0.2, they were 94.72% and 91.75%, respectively.
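The dropout rate is the probability that each unit is zeroed during training. The NumPy sketch below shows the common "inverted dropout" formulation used by most deep learning frameworks. It illustrates what a rate of 0.1 means and is not taken from the PneumoNet implementation.

```python
import numpy as np

def dropout(x: np.ndarray, rate: float, rng: np.random.Generator,
            training: bool = True) -> np.ndarray:
    """Inverted dropout: zero each unit with probability `rate` during training
    and rescale survivors by 1 / (1 - rate) to keep the expected value unchanged."""
    if not training or rate == 0.0:
        return x                              # identity at inference time
    keep = rng.random(x.shape) >= rate        # True with probability 1 - rate
    return x * keep / (1.0 - rate)

rng = np.random.default_rng(42)
x = np.ones(100_000)
y = dropout(x, rate=0.1, rng=rng)
print(f"dropped fraction ~ {np.mean(y == 0):.3f}, mean activation ~ {y.mean():.3f}")
```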

Table 12.

Results of ablation study 3, varying the dropout value.

4.6. Ablation Study_4: With and without Channel Optimization

Table 13 contains the results obtained when the model was trained with and without the COT. With channel optimization, the model produced the highest accuracy of 98.41% and an F1 score of 98.32%. Without the COT, the accuracy and F1 score dropped to 94.68% and 93.98%, respectively.

Table 13.

Results of ablation study 4, models without channel optimization.

The channels of a feature map differ in significance, and treating them alike during convolution and pooling loses information. Optimizing the channels addresses this loss. The COT block enhances the network's representational ability by modeling the relationships between channels and rescaling the features on a per-channel basis. The network thereby learns to use global information to emphasize the most relevant features and suppress irrelevant ones, which improves its performance.
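The mechanism described above closely resembles a squeeze-and-excitation block: global information is used to learn per-channel weights that emphasize useful channels and suppress others. The text does not give the exact design of the COT. The NumPy sketch below therefore illustrates only that general mechanism, under the assumption that the COT works similarly. Random weights stand in for learned ones, and the reduction ratio `r` is a hypothetical choice.

```python
import numpy as np

def channel_recalibration(features: np.ndarray, w1: np.ndarray,
                          w2: np.ndarray) -> np.ndarray:
    """Squeeze-and-excitation style channel reweighting.

    features: feature maps, shape (C, H, W)
    w1: bottleneck weights, shape (C // r, C)
    w2: expansion weights, shape (C, C // r)
    """
    squeezed = features.mean(axis=(1, 2))         # squeeze: global average pool -> (C,)
    hidden = np.maximum(w1 @ squeezed, 0.0)       # ReLU bottleneck -> (C // r,)
    scale = 1.0 / (1.0 + np.exp(-(w2 @ hidden)))  # sigmoid gate in (0, 1) per channel
    return features * scale[:, None, None]        # excitation: rescale each channel

rng = np.random.default_rng(1)
C, r = 16, 4
x = rng.random((C, 8, 8))
out = channel_recalibration(x, rng.standard_normal((C // r, C)),
                            rng.standard_normal((C, C // r)))
print(out.shape)  # (16, 8, 8)
```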

5. Discussion

The objective of our work is to provide a DL model that detects the presence of pneumothorax in X-ray images. Most existing methods cannot extract features from all sides of the input X-ray image or from its darker regions. Our approach addresses these limitations of existing methods using the COT and hyperparameter optimization. The accuracy results show that the proposed model outperforms the existing models in detecting the disease, with the highest accuracy and minimum time. We compared our work with existing DL approaches that use X-ray images for model training, and the comparison is shown in Table 14. To verify the results, we also tested the model on pneumothoraces of different sizes (separations under and over 1 cm). The results show that the proposed model is highly effective in classifying large and moderate pneumothoraces.

Table 14.

Performance comparison of existing and proposed methods.

We identified several limitations of our approach. A pneumothorax can be difficult to spot on a chest X-ray image when overlapping structures are present, and in these cases the model cannot easily determine whether the disease is present. Furthermore, the visual signs of a pneumothorax, which include a thin line at the lung's edge and a change in lung texture, may be challenging for our model to identify.

6. Conclusions

We propose a lightweight DL model, PneumoNet, to identify the presence of a pneumothorax in the human lungs. Many methods based on machine learning and DL models have been proposed, but our model outperforms the existing approaches we compared against. By accuracy, our model is the best of the compared approaches. By the AUC, another measure of model performance, it is also better at recognizing the presence of the disease. We evaluated the model using common machine learning and DL metrics, which demonstrate the good performance of our proposed approach. We used the COT to improve the recognition rate of the model. Data augmentation supplies additional training images, and without it the loss increases. We applied affine augmentation to the input X-ray images. Our model addresses, through our COT method, the vanishing gradient issue, a frequently observed problem in existing approaches. Overall, our PneumoNet model can be effectively applied in the healthcare sector to recognize pneumothorax, a potentially life-threatening condition. It can also play a vital role in the field of the Internet of Medical Things.

Author Contributions

Conceptualization, V.D.K.; methodology, V.D.K. and P.R.; formal analysis, all authors; investigation and medical advice, O.G., M.D.C., and R.F.; resources, V.D.K., P.R., M.A., and O.G.; data curation, M.A. and O.G.; writing—original draft, V.D.K., P.R., R.F., M.D.C., and O.G.; writing—review and editing, all authors; supervision, O.G. and M.A.; project administration, all authors; funding acquisition, O.G., M.D.C., and R.F. All the authors contributed to conceptualization, methodology, and writing the work. All authors have read and agreed to the published version of the manuscript.

Funding

This research received no external funding.

Institutional Review Board Statement

Not applicable.

Informed Consent Statement

Not applicable.

Data Availability Statement

The dataset utilized in this investigation is publicly available and can be downloaded from https://kaggle.com/competitions/siim-acr-pneumothorax-segmentation, accessed on 10 February 2022.

Conflicts of Interest

The authors declare no conflict of interest.

References

- Zarogoulidis, P.; Kioumis, I.; Pitsiou, G.; Porpodis, K.; Lampaki, S.; Papaiwannou, A.; Katsikogiannis, N.; Zaric, B.; Branislav, P.; Secen, N.; et al. Pneumothorax: From definition to diagnosis and treatment. J. Thorac. Dis. 2014, 6 (Suppl. 4), S372–S376. [Google Scholar] [CrossRef]

- Gupta, D.; Hansell, A.; Nichols, T.; Duong, T.; Ayres, J.G.; Strachan, D. Epidemiology of pneumothorax in England. Thorax 2000, 55, 666–671. [Google Scholar] [CrossRef]

- Gang, P.; Zhen, W.; Zeng, W.; Gordienko, Y.; Kochura, Y.; Alienin, O.; Rokovyi, O.; Stirenko, S. Dimensionality reduction in deep learning for chest X-ray analysis of lung cancer. In Proceedings of the 2018 Tenth International Conference on Advanced Computational Intelligence (ICACI), Xiamen, China, 29–31 March 2018; pp. 878–883. [Google Scholar] [CrossRef]

- Cai, J.; Lu, L.; Harrison, A.P.; Shi, X.; Chen, P.; Yang, L. Iterative attention mining for weakly supervised thoracic disease pattern localization in chest X-rays. In International Conference on Medical Image Computing and Computer-Assisted Intervention, Granada, Spain, 16–20 September 2018; Springer: Berlin/Heidelberg, Germany, 2018; pp. 589–598. [Google Scholar]

- Mohan, A.P. Deep Convolutional Neural Networks in Detecting Lung Mass From Chest X-Ray Images. Int. J. Appl. Res. Bioinform. 2021, 11, 22–30. [Google Scholar] [CrossRef]

- Shin, H.-C.; Roth, H.R.; Gao, M.; Lu, L.; Xu, Z.; Nogues, I.; Yao, J.; Mollura, D.; Summers, R.M. Deep Convolutional Neural Networks for Computer-Aided Detection: CNN Architectures, Dataset Characteristics and Transfer Learning. IEEE Trans. Med. Imaging 2016, 35, 1285–1298. [Google Scholar] [CrossRef] [PubMed]

- Qin, C.; Yao, D.; Shi, Y.; Song, Z. Computer-aided detection in chest radiography based on artificial intelligence: A survey. Biomed. Eng. Online 2018, 17, 113. [Google Scholar] [CrossRef] [PubMed]

- Setio, A.A.; Ciompi, F.; Litjens, G.; Gerke, P.; Jacobs, C.; van Riel, S.J.; Wille, M.M.; Naqibullah, M.; Sanchez, C.I.; van Ginneken, B. Pulmonary Nodule Detection in CT Images: False Positive Reduction Using Multi-View Convolutional Networks. IEEE Trans. Med. Imaging 2016, 35, 1160–1169. [Google Scholar] [CrossRef]

- Wang, Y.; Sun, L.; Jin, Q. Enhanced Diagnosis of Pneumothorax with an Improved Real-Time Augmentation for Imbalanced Chest X-rays Data Based on Deep Convolutional Neural Network. IEEE/ACM Trans. Comput. Biol. Bioinform. 2021, 18, 951–962. [Google Scholar] [CrossRef]

- Esteva, A.; Kuprel, B.; Novoa, R.A.; Ko, J.; Swetter, S.M.; Blau, H.M.; Thrun, S. Dermatologist-level classification of skin cancer with deep neural networks. Nature 2017, 542, 115–118. [Google Scholar] [CrossRef]

- Hannun, A.Y.; Rajpurkar, P.; Haghpanahi, M.; Tison, G.H.; Bourn, C.; Turakhia, M.P.; Ng, A.Y. Cardiologist-level arrhythmia detection and classification in ambulatory electrocardiograms using a deep neural network. Nat. Med. 2019, 25, 65–69. [Google Scholar] [CrossRef]

- Gulshan, V.; Peng, L.; Coram, M.; Stumpe, M.C.; Wu, D.; Narayanaswamy, A.; Venugopalan, S.; Widner, K.; Madams, T.; Cuadros, J.; et al. Development and Validation of a Deep Learning Algorithm for Detection of Diabetic Retinopathy in Retinal Fundus Photographs. JAMA 2016, 316, 2402–2410. [Google Scholar] [CrossRef]

- Huang, X. Lung nodule detection in CT using 3D CNN. In Proceedings of the IEEE 14th International Symposium on Biomedical Imaging (ISBI), Melbourne, VIC, Australia, 18–21 April 2017; pp. 379–383. [Google Scholar] [CrossRef]

- Rajpurkar, P.; Irvin, J.; Zhu, K.; Yang, B.; Mehta, H.; Duan, T.; Ding, D.; Bagul, A.; Langlotz, C.; Shpanskaya, K. CheXNet: Radiologist-Level Pneumonia Detection on Chest X-Rays with Deep Learning. Comput. Vis. Pattern Recognit. 2017, 10, 276–281. [Google Scholar] [CrossRef]

- Zawacki; Carol; George; Elliott; Fomitchev; Hussain; Lakhani; Culliton; Bao. SIIM-ACR Pneumothorax Segmentation. Kaggle, 2019. Available online: https://kaggle.com/competitions/siim-acr-pneumothorax-segmentation (accessed on 10 February 2022).

- Filice, R.W.; Stein, A.; Wu, C.C.; Arteaga, V.A.; Borstelmann, S.; Gaddikeri, R.; Galperin-Aizenberg, M.; Gill, R.R.; Godoy, M.C.; Hobbs, S.B.; et al. Crowdsourcing pneumothorax annotations using machine learning annotations on the NIH chest X-ray dataset. J. Digit. Imaging 2019, 33, 490–496. [Google Scholar] [CrossRef] [PubMed]

- Kumar, D.R.; Krishna, T.A.; Wahi, A. Health Monitoring Framework for in Time Recognition of Pulmonary Embolism Using Internet of Things. J. Comput. Theor. Nanosci. 2018, 15, 1598–1602. [Google Scholar] [CrossRef]

- Pasa, F.; Golkov, V.; Pfeiffer, F.; Cremers, D. Efficient Deep Network Architectures for Fast Chest X-Ray Tuberculosis Screening and Visualization. Sci. Rep. 2019, 9, 6268. [Google Scholar] [CrossRef] [PubMed]

- Liao, R.; Rubin, J.; Lam, G.; Berkowitz, S.; Dalal, S.; Wells, W.; Horng, S.; Golland, P. Semi-supervised learning for quantification of pulmonary edema in chest x-ray images. Comput. Vis. Pat. Recogn. 2019, 3, 1319–1326. [Google Scholar]

- Lindsey, T.; Lee, R.; Grisell, R.; Vega, S.; Veazey, S. Automated Pneumothorax Diagnosis Using Deep Neural Networks. In Proceedings of the 23rd Iberoamerican Congress, CIARP 2018, Madrid, Spain, 3 March 2019; Springer: Cham, Switzerland, 2018; pp. 723–731. [Google Scholar] [CrossRef]

- Li, Z.; Liu, F.; Yang, W.; Peng, S.; Zhou, J. A Survey of Convolutional Neural Networks: Analysis, Applications, and Prospects. IEEE Trans. Neural Networks Learn. Syst. 2021, 33, 6999–7019. [Google Scholar] [CrossRef]

- Chan, Y.-H.; Zeng, Y.-Z.; Wu, H.-C.; Wu, M.-C.; Sun, H.-M. Effective Pneumothorax Detection for Chest X-Ray Images Using Local Binary Pattern and Support Vector Machine. J. Healthc. Eng. 2018, 2018, 2908517. [Google Scholar] [CrossRef]

- Li, Z.; Zuo, J.; Zhang, C.; Sun, Y. Pneumothorax Image Segmentation and Prediction with UNet++ and MSOF Strategy. In Proceedings of the IEEE International Conference on Consumer Electronics & Computer Engineering (ICCECE), Guangzhou, China, 15–17 January 2021; pp. 710–713. [Google Scholar] [CrossRef]

- Sundaram, B.; Lakhani, P. Deep Learning at Chest Radiography: Automated Classification of Pulmonary Tuberculosis by Using Convolutional Neural Networks. Radiology 2017, 284, 574–582. [Google Scholar] [CrossRef]

- Yaakob, S.R. Ensemble deep learning for tuberculosis detection. IAES Int. J. AI 2018, 10, 429–435. [Google Scholar] [CrossRef]

- Dietterich, T.G. Ensemble Methods in Machine Learning. In Multiple Classifier Systems, Lecture Notes in Computer Science; Springer: Berlin/Heidelberg, Germany, 2019; Volume 1857. [Google Scholar] [CrossRef]

- Jaeger, S.; Wáng, Y. Two public chest X-ray datasets for computer-aided screening of pulmonary diseases. Quant. Imaging Med. Surg. 2014, 4, 475–477. [Google Scholar]

- Li, X.; Thrall, J.H.; Digumarthy, S.R.; Kalra, M.K.; Pandharipande, P.V.; Zhang, B.; Nitiwarangkul, C.; Singh, R.; Khera, R.D.; Li, Q. Deep learning-enabled system for rapid pneumothorax screening on chest CT. Eur. J. Radiol. 2019, 120, 108692. [Google Scholar] [CrossRef] [PubMed]

- Jaszcz, A.; Połap, D.; Damaševičius, R. Lung X-Ray Image Segmentation Using Heuristic Red Fox Optimization Algorithm. Sci. Program. 2022, 2022, 4494139. [Google Scholar] [CrossRef]

- Rajinikanth, V.; Kadry, S.; Damaševičius, R.; Pandeeswaran, C.; Mohammed, M.A.; Devadhas, G.G. Pneumonia Detection in Chest X-ray using InceptionV3 and Multi-Class Classification. In Proceedings of the 3rd International Conference on Intelligent Computing Instrumentation and Control Technologies, Kannur, India, 11–12 August 2022; pp. 972–976. [Google Scholar] [CrossRef]

- Blumenfeld, A.; Greenspan, H.; Konen, E. Pneumothorax detection in chest radiographs using convolutional neural networks. Proc. SPIE 2018, 10575, 3–12. [Google Scholar] [CrossRef]

- Sanada, S.; Doi, K.; MacMahon, H. Image feature analysis and computer-aided diagnosis in digital radiography: Automated detection of pneumothorax in chest images. Med. Phys. 1992, 19, 1153–1160. [Google Scholar] [CrossRef] [PubMed]

- Geva, O.; Zimmerman-Moreno, G.; Lieberman, S.; Konen, E.; Greenspan, H. Pneumothorax detection in chest radiographs using local and global texture signatures. Proc. SPIE 2015, 15, 94141. [Google Scholar] [CrossRef]

- Singh, N.; Hamde, S. Tuberculosis detection using shape and texture features of chest X-rays. In Innovations in Electronics and Communication Engineering; Springer: Greer, SC, USA, 2019; pp. 43–50. [Google Scholar]

- Riasatian, A.; Tizhoosh, H.R. Searching for pneumothorax in x-ray images using auto encoded deep features. Sci. Rep. 2021, 11, 9817. [Google Scholar] [CrossRef]

- Park, S.; Lee, S.M.; Kim, N.; Choe, J.; Cho, Y.; Do, K.-H.; Seo, J.B. Application of deep learning–based computer-aided detection system: Detecting pneumothorax on chest radiograph after biopsy. Eur. Radiol. 2019, 29, 5341–5348. [Google Scholar] [CrossRef]

- Munishamaiaha, K.; Rajagopal, G.; Venkatesan, D.K.; Arif, M.; Vicoveanu, D.; Chiuchisan, I.; Izdrui, D.; Geman, O. Robust Spatial–Spectral Squeeze–Excitation AdaBound Dense Network (SE-AB-Densenet) for Hyperspectral Image Classification. Sensors 2022, 22, 3229. [Google Scholar] [CrossRef]

- Sitaula, C.; Hossain, M.B. Attention-based VGG-16 model for COVID-19 chest X-ray image classification. Appl. Intell. 2020, 51, 2850–2863. [Google Scholar] [CrossRef]

- Malik, H.; Anees, T.; Din, M.; Naeem, A. CDC_Net: Multi-classification convolutional neural network model for detection of COVID-19, pneumothorax, pneumonia, lung Cancer, and tuberculosis using chest X-rays. Multimedia Tools Appl. 2022, 20, 1–26. [Google Scholar] [CrossRef]

- Taylor, A.G.; Mielke, C.; Mongan, J. Automated detection of moderate and large pneumothorax on frontal chest X-rays using deep convolutional neural networks: A retrospective study. PLOS Med. 2018, 15, e1002697. [Google Scholar] [CrossRef] [PubMed]

- Pandian, J.A.; Kanchanadevi, K.; Kumar, D.; Geman, O. Deep Convolutional Generative Adversarial Network for Metastatic Tissue Diagnosis in Lymph Node Section. In System Design for Epidemics Using Machine Learning and Deep Learning; Springer International Publishing: Berlin/Heidelberg, Germany, 2023; pp. 153–166. [Google Scholar]

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).