Deep Learning Empowers Endoscopic Detection and Polyps Classification: A Multiple-Hospital Study

Abstract

1. Introduction

2. Materials and Methods

2.1. Data Collection Procedures

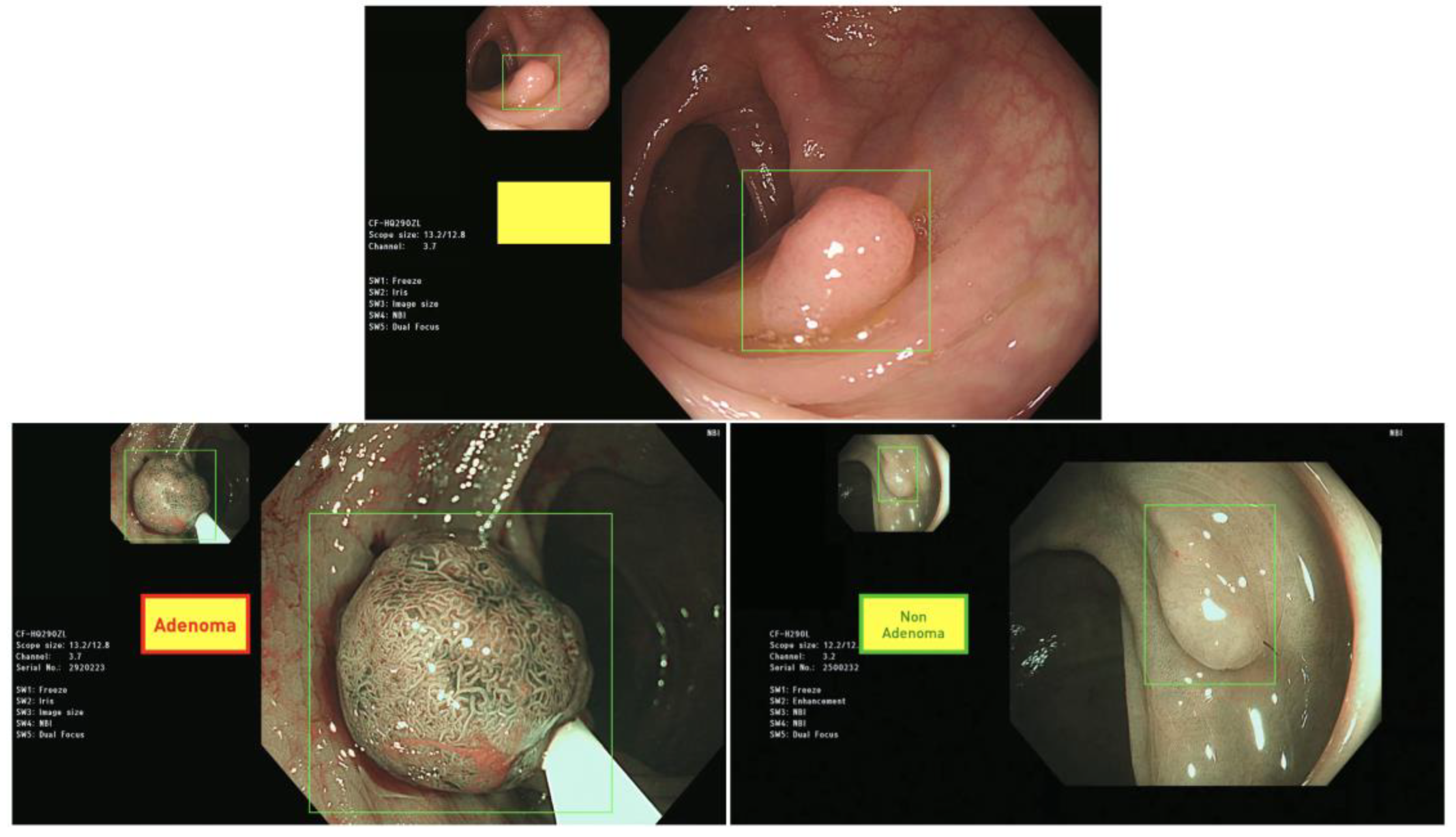

2.2. Polyp Annotation and Classification Protocol

2.3. Deep Learning Algorithms

2.3.1. Model Architecture

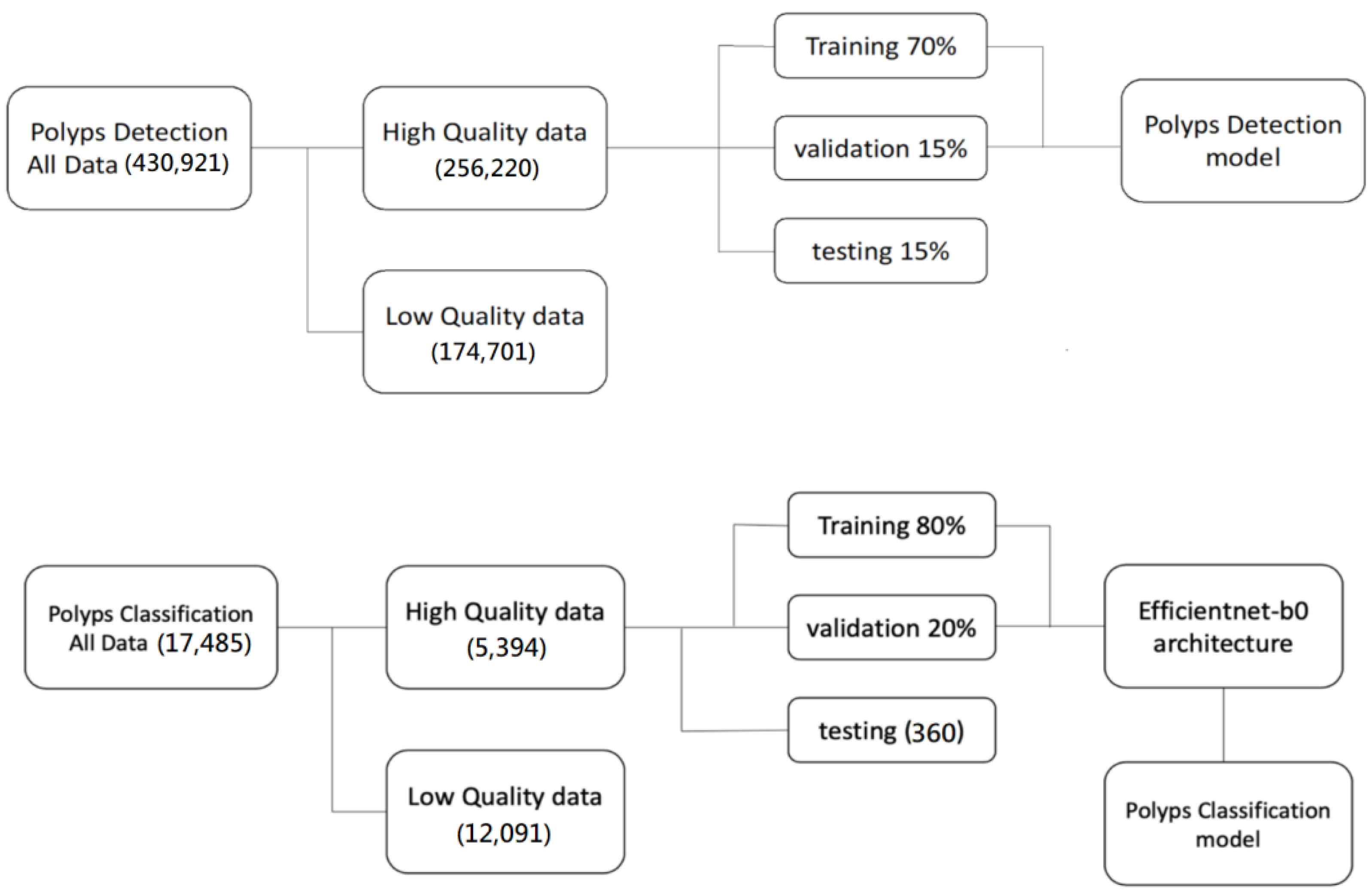

Polyp Detection Model

Polyp Classification Model

Model Validation

Evaluation Metrics

2.4. Graphic User Interface for Model Deployment

2.5. Hardware

2.6. External Validation Using Multiple-Hospital Datasets from Both Prospective and Retrospective Approaches

3. Results

3.1. Deep Learning Detection Model Performance

3.2. High Sensitivity and Specificity Deep Learning Classification Model

3.3. Illustration of the Deep Learning Models Deployed in the GUI

3.4. Model Benchmark Using Multiple-Hospital Datasets

3.4.1. Prospective Datasets for Polyp Detection Testing

3.4.2. Retrospective Datasets for Polyp Classification Testing

4. Discussion

5. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Acknowledgments

Conflicts of Interest

References

| Metric | Value |
|---|---|
| Sensitivity (95% CI) | 0.9709 (0.9646–0.9757) |
| Specificity (95% CI) | 0.9701 (0.9663–0.9749) |
| AUC | 0.9902 |
| mAP (IoU 0.50:0.95) | 0.8845 |
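The mAP entry over IoU 0.5:0.95 conventionally follows the COCO evaluation range: average precision (AP) is computed at ten IoU thresholds (0.50, 0.55, ..., 0.95) and then averaged. The source does not describe its evaluation code, so the following is a minimal single-class sketch under that assumption. It uses all-point interpolation rather than COCO's 101-point interpolation, and the helpers `iou`, `average_precision`, and `map_50_95` are hypothetical names.

```python
from typing import List, Sequence, Tuple

Box = Tuple[float, float, float, float]  # (x1, y1, x2, y2) with x2 > x1, y2 > y1


def iou(a: Box, b: Box) -> float:
    """Intersection over union of two axis-aligned boxes."""
    ix1, iy1 = max(a[0], b[0]), max(a[1], b[1])
    ix2, iy2 = min(a[2], b[2]), min(a[3], b[3])
    inter = max(0.0, ix2 - ix1) * max(0.0, iy2 - iy1)
    union = (a[2] - a[0]) * (a[3] - a[1]) + (b[2] - b[0]) * (b[3] - b[1]) - inter
    return inter / union if union > 0 else 0.0


def average_precision(
    detections: Sequence[Tuple[int, float, Box]],  # (image_id, score, box)
    ground_truth: Sequence[Tuple[int, Box]],  # (image_id, box)
    iou_threshold: float,
) -> float:
    """Single-class AP with all-point interpolation at one IoU threshold."""
    if not ground_truth:
        return 0.0
    matched = [False] * len(ground_truth)
    hits: List[int] = []
    # Greedy matching: higher-scoring detections claim ground-truth boxes first.
    for img, _score, box in sorted(detections, key=lambda d: -d[1]):
        best_iou, best_j = 0.0, -1
        for j, (gt_img, gt_box) in enumerate(ground_truth):
            if gt_img != img or matched[j]:
                continue
            overlap = iou(box, gt_box)
            if overlap > best_iou:
                best_iou, best_j = overlap, j
        if best_j >= 0 and best_iou >= iou_threshold:
            matched[best_j] = True
            hits.append(1)
        else:
            hits.append(0)
    # Cumulative precision and recall along the ranked detection list.
    recalls, precisions = [], []
    cum_tp = 0
    for k, hit in enumerate(hits, start=1):
        cum_tp += hit
        recalls.append(cum_tp / len(ground_truth))
        precisions.append(cum_tp / k)
    # Make precision non-increasing, then sum it over each recall increment.
    for k in range(len(precisions) - 2, -1, -1):
        precisions[k] = max(precisions[k], precisions[k + 1])
    ap, prev_recall = 0.0, 0.0
    for r, p in zip(recalls, precisions):
        ap += (r - prev_recall) * p
        prev_recall = r
    return ap


def map_50_95(detections, ground_truth) -> float:
    """Mean AP over IoU thresholds 0.50, 0.55, ..., 0.95."""
    thresholds = [round(0.50 + 0.05 * i, 2) for i in range(10)]
    return sum(average_precision(detections, ground_truth, t) for t in thresholds) / len(thresholds)


# Hypothetical boxes: one image, one polyp, one slightly offset prediction.
gt_boxes = [(0, (10.0, 10.0, 50.0, 50.0))]
preds = [(0, 0.97, (12.0, 10.0, 52.0, 50.0))]
print(f"mAP@[0.50:0.95] = {map_50_95(preds, gt_boxes):.3f}")
```

Because the stricter thresholds demand near-pixel-perfect boxes, mAP over 0.50:0.95 is typically lower than AP at a single IoU of 0.50.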

| Metric | Value |
|---|---|
| Sensitivity | 0.9889 |
| Specificity | 0.9778 |
| AUC (95% CI) | 0.9989 (0.9954–1.00) |
| F1 score | 0.9834 |
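Sensitivity, specificity, and the F1 score all derive from confusion-matrix counts: sensitivity = TP/(TP+FN), specificity = TN/(TN+FP), and F1 is the harmonic mean of precision TP/(TP+FP) and sensitivity. F1 depends on precision rather than specificity, so it cannot be recomputed from the two rates in the table alone. The following minimal sketch uses hypothetical counts; `binary_metrics` is an illustrative name, not the authors' code.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class BinaryMetrics:
    sensitivity: float  # recall / true-positive rate
    specificity: float  # true-negative rate
    precision: float    # positive predictive value
    f1: float           # harmonic mean of precision and sensitivity


def binary_metrics(tp: int, fp: int, tn: int, fn: int) -> BinaryMetrics:
    """Compute standard binary-classification metrics from confusion-matrix counts."""
    sensitivity = tp / (tp + fn) if tp + fn else 0.0
    specificity = tn / (tn + fp) if tn + fp else 0.0
    precision = tp / (tp + fp) if tp + fp else 0.0
    denom = precision + sensitivity
    f1 = 2 * precision * sensitivity / denom if denom else 0.0
    return BinaryMetrics(sensitivity, specificity, precision, f1)


# Hypothetical counts for illustration only (not taken from the study).
m = binary_metrics(tp=45, fp=3, tn=47, fn=5)
print(f"sensitivity={m.sensitivity:.4f} specificity={m.specificity:.4f} "
      f"precision={m.precision:.4f} F1={m.f1:.4f}")
```

With these counts, F1 equals the equivalent closed form 2TP/(2TP+FP+FN) = 90/98, roughly 0.918.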

| Metric | Hospital A | Hospital B | Hospital C | All 3 Hospitals |
|---|---|---|---|---|
| Lesion-based Sensitivity (95% CI) | 0.9817 (0.9476–0.9938) | 0.9389 (0.8939–0.9655) | 0.9360 (0.8891–0.9639) | 0.9516 (0.9295–0.9670) |
| Frame-based Specificity (95% CI) | 0.9676 (0.9665–0.9687) | 0.9833 (0.9824–0.9840) | 0.9605 (0.9589–0.9620) | 0.9720 (0.9713–0.9726) |
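The per-hospital table reports lesion-based sensitivity and frame-based specificity with 95% confidence intervals, but this section does not state how the intervals were computed. For proportions close to 1, a simple Wald interval can extend past 1, so score-type intervals are a common choice. The following minimal sketch shows the Wilson score interval as one possible method, not as the authors' procedure; `wilson_interval` and the example counts are hypothetical.

```python
import math
from statistics import NormalDist
from typing import Tuple


def wilson_interval(successes: int, n: int, confidence: float = 0.95) -> Tuple[float, float]:
    """Wilson score interval for a binomial proportion successes / n."""
    if n <= 0:
        raise ValueError("n must be positive")
    if not 0 <= successes <= n:
        raise ValueError("successes must lie in [0, n]")
    z = NormalDist().inv_cdf(0.5 + confidence / 2)  # about 1.96 for 95%
    p = successes / n
    denom = 1 + z * z / n
    center = (p + z * z / (2 * n)) / denom
    half_width = (z / denom) * math.sqrt(p * (1 - p) / n + z * z / (4 * n * n))
    # Clip to [0, 1] to absorb floating-point round-off at the extremes.
    return max(0.0, center - half_width), min(1.0, center + half_width)


# Hypothetical example: 95 of 100 lesions detected (not the study's counts).
low, high = wilson_interval(95, 100)
print(f"sensitivity={95 / 100:.4f}, 95% CI=({low:.4f}, {high:.4f})")
```

The interval is asymmetric around the point estimate and never exceeds 1, which is the main practical advantage over a Wald interval for high-sensitivity results.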

| Metric | Hospital A | Hospital B | Hospital C | All 3 Hospitals |
|---|---|---|---|---|
| AUC (95% CI) | 0.9947 (0.9817–1.00) | 0.9497 (0.9123–0.9871) | 0.9207 (0.8749–0.9665) | 0.9521 (0.9308–0.9734) |
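Several AUC intervals above have upper bounds capped at 1.00, and the method behind them is not stated here. One simple approach, assumed for this sketch, estimates AUC as the Mann-Whitney probability that a randomly chosen positive case scores higher than a randomly chosen negative case, then attaches a normal-approximation interval using the Hanley-McNeil standard error, clipped to [0, 1]. DeLong's method and bootstrapping are common alternatives. `auc_mann_whitney`, `auc_with_ci`, and the scores are hypothetical.

```python
import math
from statistics import NormalDist
from typing import Sequence, Tuple


def auc_mann_whitney(pos_scores: Sequence[float], neg_scores: Sequence[float]) -> float:
    """AUC as P(score_pos > score_neg), counting ties as one half."""
    wins = 0.0
    for p in pos_scores:
        for n in neg_scores:
            if p > n:
                wins += 1.0
            elif p == n:
                wins += 0.5
    return wins / (len(pos_scores) * len(neg_scores))


def auc_with_ci(pos_scores, neg_scores, confidence=0.95) -> Tuple[float, float, float]:
    """AUC with a Hanley-McNeil normal-approximation CI, clipped to [0, 1]."""
    n_pos, n_neg = len(pos_scores), len(neg_scores)
    if n_pos == 0 or n_neg == 0:
        raise ValueError("need at least one positive and one negative score")
    a = auc_mann_whitney(pos_scores, neg_scores)
    q1 = a / (2 - a)          # P(two random positives both outrank one negative)
    q2 = 2 * a * a / (1 + a)  # P(one positive outranks two random negatives)
    var = (a * (1 - a) + (n_pos - 1) * (q1 - a * a) + (n_neg - 1) * (q2 - a * a)) / (n_pos * n_neg)
    se = math.sqrt(max(var, 0.0))
    z = NormalDist().inv_cdf(0.5 + confidence / 2)
    return a, max(0.0, a - z * se), min(1.0, a + z * se)


# Hypothetical classifier scores (not study data).
positives = [0.91, 0.85, 0.78, 0.66, 0.95, 0.40]
negatives = [0.12, 0.30, 0.45, 0.08, 0.22, 0.70]
auc, low, high = auc_with_ci(positives, negatives)
print(f"AUC={auc:.4f} (95% CI {low:.4f}, {high:.4f})")
```

With small samples and a high AUC, the symmetric normal interval overshoots 1 and must be clipped, which is one way an upper bound of exactly 1.00 can arise. The pairwise loop is O(n_pos x n_neg) and is meant for clarity, not for large datasets.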

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Shen, M.-H.; Huang, C.-C.; Chen, Y.-T.; Tsai, Y.-J.; Liou, F.-M.; Chang, S.-C.; Phan, N.N. Deep Learning Empowers Endoscopic Detection and Polyps Classification: A Multiple-Hospital Study. Diagnostics 2023, 13, 1473. https://doi.org/10.3390/diagnostics13081473
