Artificial Intelligence (AI) Applications for Point of Care Ultrasound (POCUS) in Low-Resource Settings: A Scoping Review

Abstract

1. Introduction

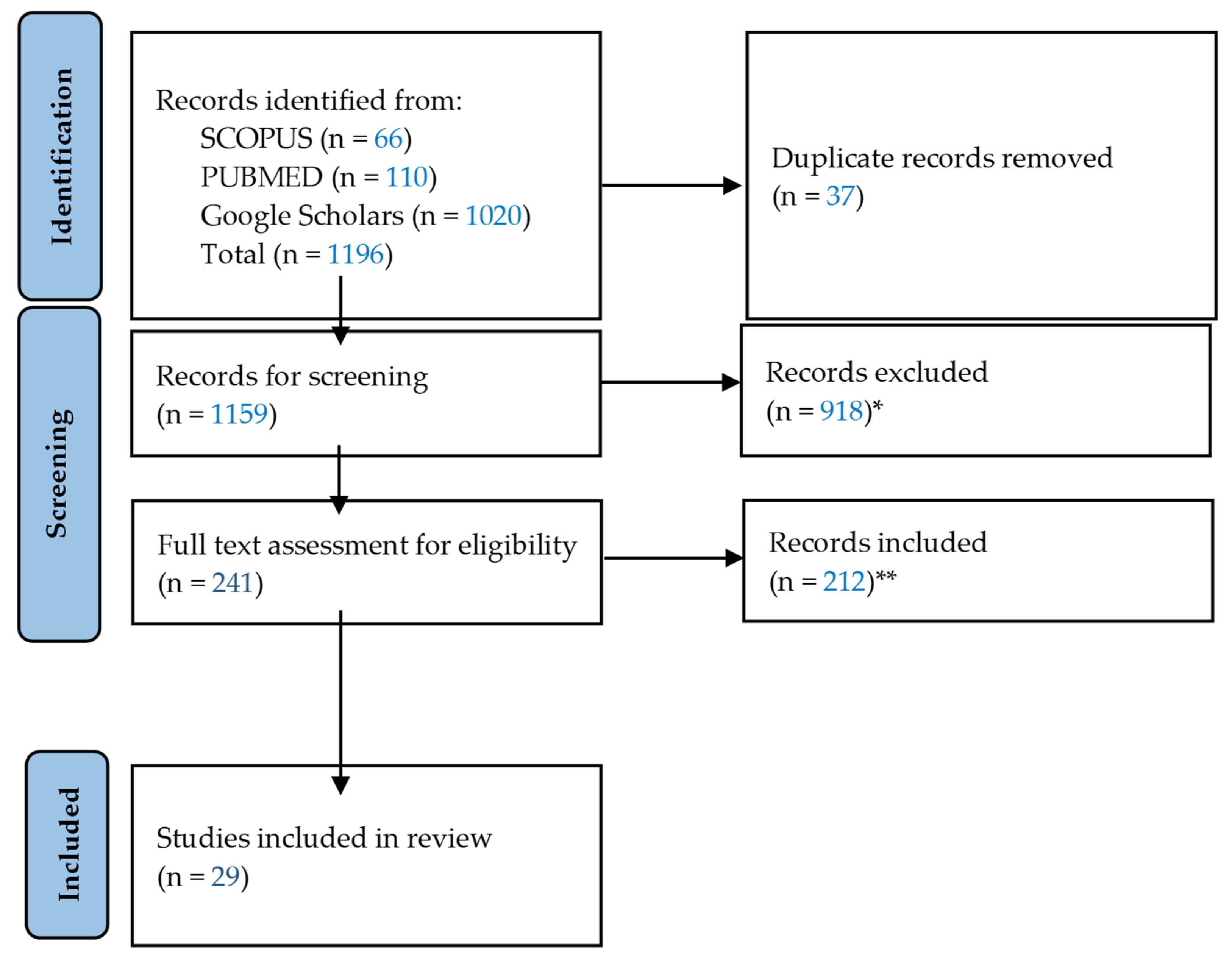

2. Materials and Methods

3. Results

3.1. Results

3.2. Types of Populations and Locations

3.3. Types of Low-Resource Settings

4. Discussion

5. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Conflicts of Interest

References

1. Kim, D.-M.; Park, S.-K.; Park, S.-G. A Study on the Performance Evaluation Criteria and Methods of Abdominal Ultrasound Devices Based on International Standards. Safety 2021, 7, 31.

2. Diagnostic Ultrasound Market Size to Hit USD 11 Bn by 2033. Available online: https://www.precedenceresearch.com/diagnostic-ultrasound-market (accessed on 27 March 2024).

3. Stewart, K.A.; Navarro, S.M.; Kambala, S.; Tan, G.; Poondla, R.; Lederman, S.; Barbour, K.; Lavy, C. Trends in Ultrasound Use in Low and Middle Income Countries: A Systematic Review. Int. J. Matern. Child Health AIDS (IJMA) 2019, 9, 103–120.

4. Yoshida, T.; Noma, H.; Nomura, T.; Suzuki, A.; Mihara, T. Diagnostic accuracy of point-of-care ultrasound for shock: A systematic review and meta-analysis. Crit. Care 2023, 27, 200.

5. Lee, L.; DeCara, J.M. Point-of-Care Ultrasound. Curr. Cardiol. Rep. 2020, 22, 149.

6. Jhagru, R.; Singh, R.; Rupp, J. Evaluation of an emergency medicine point-of-care ultrasound curriculum adapted for a resource-limited setting in Guyana. Int. J. Emerg. Med. 2023, 16, 57.

7. Ganchi, F.A.; Hardcastle, T.C. Role of Point-of-Care Diagnostics in Lower- and Middle-Income Countries and Austere Environments. Diagnostics 2023, 13, 1941.

8. Dana, E.; Nour, A.M.; Kpa’hanba, G.A.; Khan, J.S. Point-of-Care Ultrasound (PoCUS) and Its Potential to Advance Patient Care in Low-Resource Settings and Conflict Zones. Disaster Med. Public Health Prep. 2023, 17, e417.

9. Fritz, F.; Tilahun, B.; Dugas, M. Success criteria for electronic medical record implementations in low-resource settings: A systematic review. J. Am. Med. Inform. Assoc. 2015, 22, 479–488.

10. Venkatayogi, N.; Gupta, M.; Gupta, A.; Nallaparaju, S.; Cheemalamarri, N.; Gilari, K.; Pathak, S.; Vishwanath, K.; Soney, C.; Bhattacharya, T.; et al. From Seeing to Knowing with Artificial Intelligence: A Scoping Review of Point-of-Care Ultrasound in Low-Resource Settings. Appl. Sci. 2023, 13, 8427.

11. Wanjiku, G.W.; Bell, G.; Wachira, B. Assessing a novel point-of-care ultrasound training program for rural healthcare providers in Kenya. BMC Health Serv. Res. 2018, 18, 607.

12. Reynolds, T.A.; Amato, S.; Kulola, I.; Chen, C.-J.J.; Mfinanga, J.; Sawe, H.R. Impact of point-of-care ultrasound on clinical decision-making at an urban emergency department in Tanzania. PLoS ONE 2018, 13, e0194774.

13. Burleson, S.L.; Swanson, J.F.; Shufflebarger, E.F.; Wallace, D.W.; Heimann, M.A.; Crosby, J.C.; Pigott, D.C.; Gullett, J.P.; Thompson, M.A.; Greene, C.J. Evaluation of a novel handheld point-of-care ultrasound device in an African emergency department. Ultrasound J. 2020, 12, 53.

14. Valderrama, C.E.; Marzbanrad, F.; Stroux, L.; Martinez, B.; Hall-Clifford, R.; Liu, C.; Katebi, N.; Rohloff, P.; Clifford, G.D. Improving the Quality of Point of Care Diagnostics with Real-Time Machine Learning in Low Literacy LMIC Settings. In Proceedings of the 1st ACM SIGCAS Conference on Computing and Sustainable Societies, COMPASS ’18, San Jose, CA, USA, 20–22 June 2018; Association for Computing Machinery: New York, NY, USA, 2018; Volume 20, pp. 1–11.

15. Dreyfuss, A.; Martin, D.A.; Farro, A.; Inga, R.; Enriquez, S.; Mantuani, D.; Nagdev, A. A Novel Multimodal Approach to Point-of-Care Ultrasound Education in Low-Resource Settings. West. J. Emerg. Med. 2020, 21, 1017–1021.

16. Vinayak, S.; Temmerman, M.; Villeirs, G.; Brownie, S.M. A Curriculum Model for Multidisciplinary Training of Midwife Sonographers in a Low Resource Setting. J. Multidiscip. Healthc. 2021, 14, 2833–2844.

17. Maw, A.M.; Galvin, B.; Henri, R.; Yao, M.; Exame, B.; Fleshner, M.; Fort, M.P.; Morris, M.A. Stakeholder Perceptions of Point-of-Care Ultrasound Implementation in Resource-Limited Settings. Diagnostics 2019, 9, 153.

18. Dougherty, A.; Kasten, M.; DeSarno, M.; Badger, G.; Streeter, M.; Jones, D.C.; Sussman, B.; DeStigter, K. Validation of a Telemedicine Quality Assurance Method for Point-of-Care Obstetric Ultrasound Used in Low-Resource Settings. J. Ultrasound Med. 2021, 40, 529–540.

19. Chen, J.; Dobron, A.; Esterson, A.; Fuchs, L.; Glassberg, E.; Hoppenstein, D.; Kalandarev-Wilson, R.; Netzer, I.; Nissan, M.; Ovsiovich, R.S.; et al. A randomized, controlled, blinded evaluation of augmenting point-of-care ultrasound and remote telementored ultrasound in inexperienced operators. Isr. Med. Assoc. J. 2022, 24, 596–601.

20. Dreizler, L.; Wanjiku, G.W. Tele-ECHO for Point-of-Care Ultrasound in Rural Kenya: A Feasibility Study. Rhode Island Med. J. 2019, 102, 28–31.

21. Kaur, P.; Mack, A.A.; Patel, N.; Pal, A.; Singh, R.; Michaud, A.; Mulflur, M. Unlocking the Potential of Artificial Intelligence (AI) for Healthcare. In Artificial Intelligence in Medicine and Surgery—An Exploration of Current Trends, Potential Opportunities, and Evolving Threats; IntechOpen: London, UK, 2023; Volume 1.

22. Drukker, L.; Noble, J.A.; Papageorghiou, A.T. Introduction to artificial intelligence in ultrasound imaging in obstetrics and gynecology. Ultrasound Obstet. Gynecol. 2020, 56, 498–505.

23. Brattain, L.J.; Pierce, T.T.; Gjesteby, L.A.; Johnson, M.R.; DeLosa, N.D.; Werblin, J.S.; Gupta, J.F.; Ozturk, A.; Wang, X.; Li, Q.; et al. AI-Enabled, Ultrasound-Guided Handheld Robotic Device for Femoral Vascular Access. Biosensors 2021, 11, 522.

24. Wu, G.-G.; Zhou, L.-Q.; Xu, J.-W.; Wang, J.-Y.; Wei, Q.; Deng, Y.-B.; Cui, X.-W.; Dietrich, C.F. Artificial intelligence in breast ultrasound. World J. Radiol. 2019, 11, 19–26.

25. Hareendranathan, A.R.; Chahal, B.; Ghasseminia, S.; Zonoobi, D.; Jaremko, J.L. Impact of scan quality on AI assessment of hip dysplasia ultrasound. J. Ultrasound 2021, 25, 145–153.

26. Shaddock, L.; Smith, T. Potential for use of portable ultrasound devices in rural and remote settings in Australia and other developed countries: A systematic review. J. Multidiscip. Healthc. 2022, 15, 605–625.

27. Becker, D.M.; Tafoya, C.A.; Becker, S.L.; Kruger, G.H.; Tafoya, M.J.; Becker, T.K. The use of portable ultrasound devices in low- and middle-income countries: A systematic review of the literature. Trop. Med. Int. Health 2015, 21, 294–311.

28. Covidence—Better Systematic Review Management. Covidence. Available online: https://www.covidence.org/ (accessed on 27 March 2024).

29. Nhat, P.T.H.; Van Hao, N.; Tho, P.V.; Kerdegari, H.; Pisani, L.; Thu, L.N.M.; Phuong, L.T.; Duong, H.T.H.; Thuy, D.B.; McBride, A.; et al. Clinical benefit of AI-assisted lung ultrasound in a resource-limited intensive care unit. Crit. Care 2023, 27, 257.

30. Libon, J.; Ng, C.; Bailey, A.; Hareendranathan, A.; Joseph, R.; Dulai, S. Remote diagnostic imaging using artificial intelligence for diagnosing hip dysplasia in infants: Results from a mixed-methods feasibility pilot study. Paediatr. Child Health 2023, 28, 285–290.

31. Cho, H.; Song, I.; Jang, J.; Yoo, Y. A Lightweight Deep Learning Network on a System-on-Chip for Wearable Ultrasound Bladder Volume Measurement Systems: Preliminary Study. Bioengineering 2023, 10, 525.

32. Sultan, L.R.; Haertter, A.; Al-Hasani, M.; Demiris, G.; Cary, T.W.; Tung-Chen, Y.; Sehgal, C.M. Can Artificial Intelligence Aid Diagnosis by Teleguided Point-of-Care Ultrasound? A Pilot Study for Evaluating a Novel Computer Algorithm for COVID-19 Diagnosis Using Lung Ultrasound. AI 2023, 4, 875–887.

33. Perera, S.; Adhikari, S.; Yilmaz, A. Pocformer: A Lightweight Transformer Architecture for Detection of COVID-19 Using Point of Care Ultrasound. In Proceedings of the 2021 IEEE International Conference on Image Processing (ICIP), Anchorage, AK, USA, 19–22 September 2021; pp. 195–199.

34. Aujla, S.; Mohamed, A.; Tan, R.; Magtibay, K.; Tan, R.; Gao, L.; Khan, N.; Umapathy, K. Classification of lung pathologies in neonates using dual-tree complex wavelet transform. Biomed. Eng. Online 2023, 22, 115.

35. Abdel-Basset, M.; Hawash, H.; Alnowibet, K.A.; Mohamed, A.W.; Sallam, K.M. Interpretable Deep Learning for Discriminating Pneumonia from Lung Ultrasounds. Mathematics 2022, 10, 4153.

36. Jana, B.; Biswas, R.; Nath, P.K.; Saha, G.; Banerjee, S. Smartphone Based Point-of-Care System Using Continuous Wave Portable Doppler. IEEE Trans. Instrum. Meas. 2020, 69, 8352–8361.

37. Hannan, D.; Nesbit, S.C.; Wen, X.; Smith, G.; Zhang, Q.; Goffi, A.; Chan, V.; Morris, M.J.; Hunninghake, J.C.; Villalobos, N.E.; et al. MobilePTX: Sparse Coding for Pneumothorax Detection Given Limited Training Examples. Proc. AAAI Conf. Artif. Intell. 2023, 37, 15675–15681.

38. Ekambaram, K.; Hassan, K. Establishing a Novel Diagnostic Framework Using Handheld Point-of-Care Focused-Echocardiography (HoPE) for Acute Left-Sided Cardiac Valve Emergencies: A Bayesian Approach for Emergency Physicians in Resource-Limited Settings. Diagnostics 2023, 13, 2581.

39. Khan, N.H.; Tegnander, E.; Dreier, J.M.; Eik-Nes, S.; Torp, H.; Kiss, G. Automatic measurement of the fetal abdominal section on a portable ultrasound machine for use in low and middle income countries. In Proceedings of the 2016 IEEE International Ultrasonics Symposium (IUS), Tours, France, 18–21 September 2016; pp. 1–4.

40. van den Heuvel, T.L.A.; Petros, H.; Santini, S.; de Korte, C.L.; van Ginneken, B. Automated Fetal Head Detection and Circumference Estimation from Free-Hand Ultrasound Sweeps Using Deep Learning in Resource-Limited Countries. Ultrasound Med. Biol. 2019, 45, 773–785.

41. Jafari, M.; Girgis, H.; Van Woudenberg, N.; Liao, Z.; Rohling, R.; Gin, K.; Abolmaesumi, P.; Tsang, T. Automatic Biplane Left Ventricular Ejection Fraction Estimation with Mobile Point-of-Care Ultrasound Using Multi-Task Learning and Adversarial Training. Int. J. Comput. Assist. Radiol. Surg. 2019, 14, 1027–1037.

42. Al-Zogbi, L.; Singh, V.; Teixeira, B.; Ahuja, A.; Bagherzadeh, P.S.; Kapoor, A.; Saeidi, H.; Fleiter, T.; Krieger, A. Autonomous Robotic Point-of-Care Ultrasound Imaging for Monitoring of COVID-19-Induced Pulmonary Diseases. Front. Robot. AI 2021, 8, 645756.

43. Blaivas, M.; Arntfield, R.; White, M. DIY AI, deep learning network development for automated image classification in a point-of-care ultrasound quality assurance program. J. Am. Coll. Emerg. Physicians Open 2020, 1, 124–131.

44. Baloescu, C.; Toporek, G.; Kim, S.; McNamara, K.; Liu, R.; Shaw, M.M.; McNamara, R.L.; Raju, B.I.; Moore, C.L. Automated Lung Ultrasound B-line Assessment Using a Deep Learning Algorithm. IEEE Trans. Ultrason. Ferroelectr. Freq. Control 2020, 67, 2312–2320.

45. Cheema, B.S.; Walter, J.; Narang, A.; Thomas, J.D. Artificial Intelligence–Enabled POCUS in the COVID-19 ICU. JACC Case Rep. 2021, 3, 258–263.

46. Blaivas, M.; Blaivas, L.N.; Campbell, K.; Thomas, J.; Shah, S.; Yadav, K.; Liu, Y.T. Making Artificial Intelligence Lemonade Out of Data Lemons. J. Ultrasound Med. 2021, 41, 2059–2069.

47. Cho, H.; Kim, D.; Chang, S.; Kang, J.; Yoo, Y. A system-on-chip solution for deep learning-based automatic fetal biometric measurement. Expert Syst. Appl. 2024, 237, 121482.

48. Zemi, N.Z.; Bunnell, A.; Valdez, D.; Shepherd, J.A. Assessing the feasibility of AI-enhanced portable ultrasound for improved early detection of breast cancer in remote areas. In Proceedings of the 17th International Workshop on Breast Imaging (IWBI), Chicago, IL, USA, 9–12 June 2024; Volume 13174, pp. 88–94.

49. Karlsson, J.; Arvidsson, I.; Sahlin, F.; Åström, K.; Overgaard, N.C.; Lång, K.; Heyden, A. Classification of point-of-care ultrasound in breast imaging using deep learning. In Proceedings of the Medical Imaging 2023: Computer-Aided Diagnosis, San Diego, CA, USA, 19–23 February 2023; Volume 12465, pp. 191–199.

50. MacLean, A.; Abbasi, S.; Ebadi, A.; Zhao, A.; Pavlova, M.; Gunraj, H.; Xi, P.; Kohli, S.; Wong, A. COVID-Net US: A Tailored, Highly Efficient, Self-Attention Deep Convolutional Neural Network Design for Detection of COVID-19 Patient Cases from Point-of-Care Ultrasound Imaging. In Domain Adaptation and Representation Transfer, and Affordable Healthcare and AI for Resource Diverse Global Health; Springer: Cham, Switzerland, 2021; pp. 191–202.

51. Adedigba, A.P.; Adeshina, S.A. Deep Learning-based Classification of COVID-19 Lung Ultrasound for Tele-operative Robot-assisted diagnosis. In Proceedings of the 2021 1st International Conference on Multidisciplinary Engineering and Applied Science (ICMEAS), Abuja, Nigeria, 15–16 July 2021; pp. 1–6.

52. Pokaprakarn, T.; Prieto, J.C.; Price, J.T.; Kasaro, M.P.; Sindano, N.; Shah, H.R.; Peterson, M.; Akapelwa, M.M.; Kapilya, F.M.; Sebastião, Y.V.; et al. AI Estimation of Gestational Age from Blind Ultrasound Sweeps in Low-Resource Settings. NEJM Evid. 2022, 1, EVIDoa2100058.

53. Karnes, M.; Perera, S.; Adhikari, S.; Yilmaz, A. Adaptive Few-Shot Learning PoC Ultrasound COVID-19 Diagnostic System. In Proceedings of the IEEE Biomedical Circuits and Systems Conference (BioCAS), Berlin, Germany, 7–9 October 2021; pp. 1–6.

54. Viswanathan, A.V.; Pokaprakarn, T.; Kasaro, M.P.; Shah, H.R.; Prieto, J.C.; Benabdelkader, C.; Sebastião, Y.V.; Sindano, N.; Stringer, E.; Stringer, J.S.A. Deep learning to estimate gestational age from fly-to cineloop videos: A novel approach to ultrasound quality control. Int. J. Gynecol. Obstet. 2024, 165, 1013–1021.

55. Zeng, E.Z.; Ebadi, A.; Florea, A.; Wong, A. COVID-Net L2C-ULTRA: An Explainable Linear-Convex Ultrasound Augmentation Learning Framework to Improve COVID-19 Assessment and Monitoring. Sensors 2024, 24, 1664.

56. Abhyankar, G.; Raman, R. Decision Tree Analysis for Point-of-Care Ultrasound Imaging: Precision in Constrained Healthcare Settings. In Proceedings of the 2024 International Conference on Inventive Computation Technologies (ICICT), Lalitpur, Nepal, 24–26 April 2024; pp. 782–787.

57. Madhu, G.; Kautish, S.; Gupta, Y.; Nagachandrika, G.; Biju, S.M.; Kumar, M. XCovNet: An Optimized Xception Convolutional Neural Network for Classification of COVID-19 from Point-of-Care Lung Ultrasound Images. Multimed. Tools Appl. 2023, 83, 33653–33674.

58. Le, M.-P.T.; Voigt, L.; Nathanson, R.; Maw, A.M.; Johnson, G.; Dancel, R.; Mathews, B.; Moreira, A.; Sauthoff, H.; Gelabert, C.; et al. Comparison of four handheld point-of-care ultrasound devices by expert users. Ultrasound J. 2022, 14, 27.

59. Blaivas, M.; Blaivas, L.N.B.; Tsung, J.W. Deep Learning Pitfall: Impact of Novel Ultrasound Equipment Introduction on Algorithm Performance and the Realities of Domain Adaptation. J. Ultrasound Med. 2022, 41, 855–863.

| Inclusion Criteria | Exclusion Criteria |
|---|---|

| Author | Population | Geography/Country | Low-Resource Setting Type/Department | AI Used | Objective |
|---|---|---|---|---|---|
| Nhat et al., 2023 [29] | Doctors, clinicians | Vietnam | LMIC/Intensive care unit (ICU) | Deep learning | Develop an AI solution that assists lung ultrasound (LUS) practitioners, especially with LUS interpretation, and assess its usefulness in a low-resource ICU. |
| Libon et al., 2023 [30] | Infants | Canada | Remote/Pediatrics | US FDA-cleared artificial intelligence (AI) screening device for developmental dysplasia of the hip (DDH) | Evaluate the feasibility of implementing an AI-enhanced portable ultrasound tool for infant DDH screening in primary care by determining its effectiveness in practice and evaluating patient and provider feedback. |
| Cho et al., 2023 [31] | N/A | South Korea | Lack of computing resources/Urology | Deep learning | Develop a system for measuring bladder volume in ultrasound images that can be used in point-of-care settings, based on deep learning optimized for a low-resource system-on-chip (SoC) so that it remains fast and accurate even on devices with limited computing power. This could improve the diagnosis of bladder disorders by making bladder volume assessment easier where access to complex equipment is limited. |
| Sultan et al., 2023 [32] | Clinicians, patients | United States | Remote/Pulmonology | Deep learning | Propose the use of teleguided POCUS supported by AI technologies for monitoring COVID-19 patients by inexperienced personnel, including self-monitoring by the patients themselves in a remote setting. |
| Perera et al., 2021 [33] | N/A | United States | Rural and LMIC/Pulmonology | Deep learning | Present an image-based solution that automatically tests for COVID-19, allowing rapid mass testing with or without a trained medical professional in rural environments and developing countries. |
| Aujla et al., 2023 [34] | N/A | Canada | Remote and LMIC/Pulmonology and neonatology | Machine learning | Propose an automated point-of-care tool for classifying and interpreting neonatal lung ultrasound (LUS) images, intended for remote settings or developing countries that lack well-trained clinicians. |
| Abdel-Basset et al., 2022 [35] | N/A | Egypt | Lack of computing resources/Pulmonology | Deep learning | Present a novel, lightweight, and interpretable deep learning framework that discriminates COVID-19 infection from other pneumonias and from normal cases and is suitable for deployment in point-of-care and/or resource-constrained settings. |
| Jana et al., 2020 [36] | Patients | India | Lack of computing resources/Cardiology | Machine learning | Develop a smartphone-based portable continuous-wave Doppler ultrasound system that diagnoses peripheral arterial diseases from hemodynamic features in a cost-effective and power-efficient way, making it suitable for low-resource settings with limited energy and computing resources. |
| Hannan et al., 2023 [37] | N/A | United States | Emergency/Emergency medicine | Deep learning | Develop a deep learning-driven classifier that helps medical professionals diagnose pneumothorax from POCUS images. The classifier is designed to run on a mobile phone and to be trained with little data, making it suitable for low-resource settings such as emergency and acute-care settings. |
| Ekambaram and Hassan, 2023 [38] | Patients | South Africa | LMIC and rural/Emergency medicine | Bayesian machine learning | Propose a novel, Bayesian-inspired, iterative diagnostic framework that uses point-of-care focused echocardiography to evaluate patients with acute cardiorespiratory failure and suspected severe left-sided valvular lesions. This addresses a limitation of current diagnostic protocols, which cannot perform sufficient quantitative assessment of the left-sided heart valves. |
| Khan et al., 2016 [39] | Fetuses at 16–41 weeks of gestation | Norway | LMIC and rural/Obstetrics | Computer vision (OpenCV, Kalman-based tracker) | Develop an automatic method for localizing the imaged cross-section through the fetal abdomen and measuring the mean abdominal diameter (MAD), designed to operate both in traditional ultrasound settings and in rural areas of low- and middle-income countries. |
| van den Heuvel et al., 2019 [40] | Pregnant women | Ethiopia | LMIC/Obstetrics | Deep learning | Present a system that automatically estimates fetal head circumference (HC) from point-of-care ultrasound data acquired with the obstetric sweep protocol (OSP). This addresses the lack of ultrasound access for pregnant women in developing countries, since conventional ultrasound requires a trained sonographer to acquire and interpret the images. |
| Jafari et al., 2019 [41] | N/A | Canada | Lack of computing resources/Cardiology | Deep learning | Present a computationally efficient deep learning-based application for accurate left ventricular ejection fraction (LVEF) estimation. The application runs in real time on Android mobile devices with either a wired or wireless connection to a cardiac POCUS device, making it suitable for resource-limited environments. |
| Al-Zogbi et al., 2021 [42] | N/A | United States | Emergency/Pulmonology | Deep learning | Propose an autonomous robotic solution for point-of-care ultrasound scanning of COVID-19 patients’ lungs for diagnosis and staging, built around an algorithm that estimates the optimal position and orientation of the ultrasound probe on a patient’s body to image target points in the lungs. This is useful in low-resource settings such as emergencies where contact between healthcare workers and patients is not feasible (e.g., because of COVID-19 infection risk). |
| Blaivas et al., 2020 [43] | N/A | United States, Canada | Lack of computing resources/Various departments | Deep learning | Create and test a “do-it-yourself” (DIY) deep learning algorithm that classifies ultrasound images to enhance the quality assurance workflow of POCUS programs, enabling those in low-resource settings to leverage medical imaging AI applications that are usually owned by large, well-funded companies. |
| Baloescu et al., 2020 [44] | N/A | United States | Lack of trained personnel/Emergency medicine | Deep learning | Develop and test a deep learning (DL) algorithm to quantify B-lines in point-of-care lung ultrasound, which helps in evaluating shortness of breath, a very common chief complaint in the emergency department (ED). This is useful in resource-limited settings with few experienced users, because identifying and quantifying B-lines can be challenging for novice ultrasound users. |
| Cheema et al., 2021 [45] | Patients | United States | Lack of trained personnel/Cardiology | Deep learning | Present the novel use of a deep learning-derived technology, trained on the skilled hand movements of cardiac sonographers, that guides novice users to acquire high-quality bedside cardiac ultrasound images. This technology could have a role in resource-limited settings where cardiac sonographers are not readily available. |
| Blaivas et al., 2021 [46] | N/A | United States | Lack of data/Cardiology | Deep learning | Uses data from an unrelated ultrasound window (apical 4-chamber views only), manipulated to simulate a different ultrasound examination, to train a point-of-care ultrasound (POCUS) machine learning algorithm with a fair mean absolute error (MAE). The outcome measured is left ventricular ejection fraction. This approach may help future POCUS algorithm designs overcome the paucity of POCUS databases. |
| Cho et al., 2024 [47] | Fetuses | South Korea | Lack of computing resources/Obstetrics | Deep learning | Proposes an efficient deep learning-based method for automatic fetal biometry measurement on a system-on-chip (SoC). Results show feasibility in low-resource hardware settings such as portable ultrasound systems. |
| Zemi et al., 2024 [48] | N/A | United States | Remote/Oncology | Deep learning | Explores the feasibility of integrating artificial intelligence algorithms for breast cancer detection into a portable point-of-care ultrasound device. The system achieved a performance benchmark of at least 15 frames/second, suggesting that the proposed framework could be useful in remote settings. |
| Karlsson et al., 2023 [49] | N/A | United States | Lack of computing resources and LMIC/Oncology | Deep learning | Early detection of breast cancer is crucial for reducing morbidity and mortality, yet access to breast imaging is limited in low- and middle-income countries. This study explores pocket-sized portable ultrasound (POCUS) devices combined with deep learning algorithms as a cost-effective way to classify breast lesions. Using a dataset of 1100 POCUS images augmented with synthetic images generated by CycleGAN, it achieved high accuracy, with a 95% confidence interval for the AUC of 93.5% to 96.6%. |
| MacLean et al., 2021 [50] | COVID-19 patients | Canada | Lack of computing resources/Pulmonology | Deep learning | Introduces COVID-Net US, a deep convolutional neural network for COVID-19 screening using lung POCUS images. The network is a highly efficient, high-performing architecture small enough to be implemented on low-cost devices, so that little additional resource is needed when it is used with POCUS devices in low-resource environments. |
| Adedigba and Adeshina, 2021 [51] | COVID-19 patients | Nigeria | Lack of computing resources/Pulmonology | Deep learning | Develops a tele-operated robot for diagnosing COVID-19 at the Nigerian National Hospital, Abuja, driven by a deep learning-based algorithm that automatically classifies lung ultrasound images for rapid, efficient, and accurate diagnosis. The robot’s gantry-style positioning unit, combined with the efficient deep learning algorithm, is less costly to fabricate and better suited to low-resource regions than the robotic arms currently in use. |
| Pokaprakarn et al., 2022 [52] | Pregnant women | United States, Zambia | Lack of computing resources and LMIC/Obstetrics | Deep learning | Ultrasound is crucial for estimating gestational age but is limited in low-resource settings by high costs and the need for trained sonographers. This study develops a deep learning algorithm using blind ultrasound sweeps acquired from 4695 pregnant women in North Carolina and Zambia, which showed a mean absolute error (MAE) of 3.9 days compared with 4.7 days for standard biometry. The AI model’s accuracy is comparable to that of trained sonographers, even when low-cost devices are used by untrained users in Zambia. |
| Karnes et al., 2021 [53] | COVID-19 patients | United States | Lack of computing resources and LMIC/Pulmonology | Deep learning | Introduces an innovative point-of-care (PoC) ultrasound imaging system for COVID-19 diagnosis that employs few-shot learning (FSL) to create encoded disease state models. The system uses a novel vocabulary-based feature processing method to compress ultrasound images into discriminative descriptions, improving computational efficiency and diagnostic performance in PoC settings. The results suggest that the FSL-based system could extend the accessibility of rapid LUS diagnostics to resource-limited clinics. |
| Viswanathan et al., 2024 [54] | Fetuses | United States, Zambia | Lack of computing resources and LMIC/Obstetrics | Deep learning | Develops a deep learning model to estimate gestational age (GA) from brief ultrasound videos (fly-to cineloops), aiming to improve the quality and consistency of obstetric sonography in low-resource settings. The model outperformed expert sonographers in GA estimation and can flag grossly inaccurate measurements, providing a no-cost quality control tool that can be integrated into both low-cost and commercial ultrasound devices. This is important for improving ultrasound access and accuracy, particularly for novice users in low-resource environments. |
| Zeng et al., 2024 [55] | COVID-19 patients | Canada | Lack of computing resources and lack of trained personnel/Pulmonology | Deep learning | Proposes COVID-Net L2C-ULTRA, a deep neural network framework that handles the heterogeneity of ultrasound probes through extended linear-convex ultrasound augmentation learning. Experimental results show significant improvements in test accuracy, AUC, recall, and precision, making it an effective tool for COVID-19 assessment in resource-limited settings, where ultrasound is valued for its portability, safety, and cost-effectiveness. |
| Abhyankar and Raman, 2024 [56] | N/A | India | Lack of computing resources/Various departments | Machine learning | Presents an intelligent decision support system for point-of-care ultrasound imaging, with an emphasis on resource-limited healthcare settings. A decision tree algorithm running on a Raspberry Pi-powered portable ultrasound device makes informed decisions during image capture and processing to enhance image quality and diagnostic accuracy. Continuous data collection and user input allow adaptive learning and optimization to ensure reliability and regulatory compliance. The system aims to provide cost-effective, high-quality ultrasound imaging, improving healthcare accessibility and quality in underserved areas. |
| Madhu et al., 2023 [57] | COVID-19 patients | India | Lack of equipment/Pulmonology | Deep learning | Proposes an optimized Xception convolutional neural network (XCovNet) for COVID-19 detection from POCUS images. XCovNet uses depthwise spatial convolution layers to accelerate convolution computation and outperforms other models in COVID-19 classification on POCUS images, achieving the best performance among recent deep learning studies on POCUS imaging. POCUS is a viable option for developing COVID-19 screening systems based on medical imaging in resource-constrained settings where traditional testing methods may be scarce and where CT or X-ray screening is unavailable. |
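
The distribution of the included studies by AI approach and by type of low-resource setting (the subject of Section 3.3) can be tallied directly from the study table above. The Python sketch below is a minimal illustration, not the authors' analysis: it assumes a hand transcription of the table's "AI Used" and "Low-Resource Setting Type" columns (with the Libon et al. entry shortened), and it assumes that " and " in a setting label joins independent setting types. The names `STUDIES`, `split_setting`, and `tally` are hypothetical, and the counts are only as accurate as the transcription.

```python
from collections import Counter

# Hypothetical hand transcription of the study table: (study, AI used, setting type).
# One tuple per included study, refs [29]-[57]; the Libon et al. label is shortened.
STUDIES = [
    ("Nhat 2023", "Deep learning", "LMIC"),
    ("Libon 2023", "FDA-cleared AI device", "Remote"),
    ("Cho 2023", "Deep learning", "Lack of computing resources"),
    ("Sultan 2023", "Deep learning", "Remote"),
    ("Perera 2021", "Deep learning", "Rural and LMIC"),
    ("Aujla 2023", "Machine learning", "Remote and LMIC"),
    ("Abdel-Basset 2022", "Deep learning", "Lack of computing resources"),
    ("Jana 2020", "Machine learning", "Lack of computing resources"),
    ("Hannan 2023", "Deep learning", "Emergency"),
    ("Ekambaram 2023", "Bayesian machine learning", "LMIC and rural"),
    ("Khan 2016", "Computer vision", "LMIC and rural"),
    ("van den Heuvel 2019", "Deep learning", "LMIC"),
    ("Jafari 2019", "Deep learning", "Lack of computing resources"),
    ("Al-Zogbi 2021", "Deep learning", "Emergency"),
    ("Blaivas 2020", "Deep learning", "Lack of computing resources"),
    ("Baloescu 2020", "Deep learning", "Lack of trained personnel"),
    ("Cheema 2021", "Deep learning", "Lack of trained personnel"),
    ("Blaivas 2021", "Deep learning", "Lack of data"),
    ("Cho 2024", "Deep learning", "Lack of computing resources"),
    ("Zemi 2024", "Deep learning", "Remote"),
    ("Karlsson 2023", "Deep learning", "Lack of computing resources and LMIC"),
    ("MacLean 2021", "Deep learning", "Lack of computing resources"),
    ("Adedigba 2021", "Deep learning", "Lack of computing resources"),
    ("Pokaprakarn 2022", "Deep learning", "Lack of computing resources and LMIC"),
    ("Karnes 2021", "Deep learning", "Lack of computing resources and LMIC"),
    ("Viswanathan 2024", "Deep learning", "Lack of computing resources and LMIC"),
    ("Zeng 2024", "Deep learning",
     "Lack of computing resources and lack of trained personnel"),
    ("Abhyankar 2024", "Machine learning", "Lack of computing resources"),
    ("Madhu 2023", "Deep learning", "Lack of equipment"),
]


def split_setting(label):
    """Split a compound label such as 'Rural and LMIC' into its parts.

    Assumption: ' and ' always separates independent setting types.
    Case is normalized so 'rural' and 'Rural' count together; the
    acronym LMIC is kept in upper case.
    """
    parts = []
    for part in label.split(" and "):
        part = part.strip()
        parts.append("LMIC" if part.upper() == "LMIC" else part.capitalize())
    return parts


def tally(studies):
    """Count studies per AI approach and per setting type.

    A study with a compound setting label is counted once under each of
    its parts, so the setting counts can sum to more than len(studies).
    """
    ai_counts = Counter(ai for _, ai, _ in studies)
    setting_counts = Counter(
        part for _, _, label in studies for part in split_setting(label)
    )
    return ai_counts, setting_counts


if __name__ == "__main__":
    ai_counts, setting_counts = tally(STUDIES)
    print(f"{len(STUDIES)} studies")
    for name, n in ai_counts.most_common():
        print(f"AI used: {name}: {n}")
    for name, n in setting_counts.most_common():
        print(f"Setting type: {name}: {n}")
```

Counting a compound label under each of its parts is one design choice among several; treating each compound label as its own category would avoid double counting but would fragment the categories into many small groups.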

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2024 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Kim, S.; Fischetti, C.; Guy, M.; Hsu, E.; Fox, J.; Young, S.D. Artificial Intelligence (AI) Applications for Point of Care Ultrasound (POCUS) in Low-Resource Settings: A Scoping Review. Diagnostics 2024, 14, 1669. https://doi.org/10.3390/diagnostics14151669
