Deep Learning-Based Joint Effusion Classification in Adult Knee Radiographs: A Multi-Center Prospective Study

Abstract

1. Introduction

2. Materials and Methods

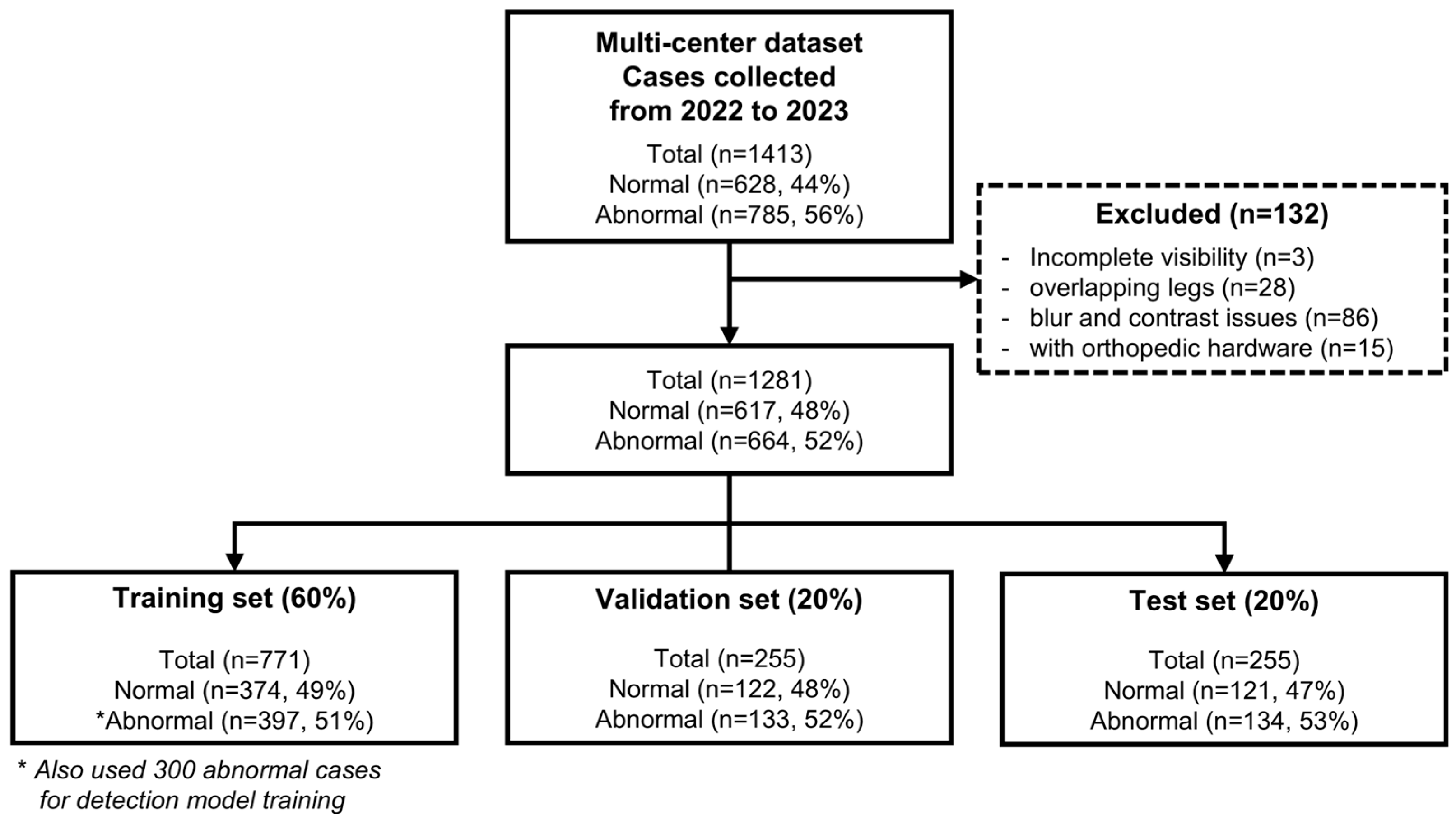

2.1. Patient Population

2.2. X-ray Acquisition Parameters

2.3. Methodology

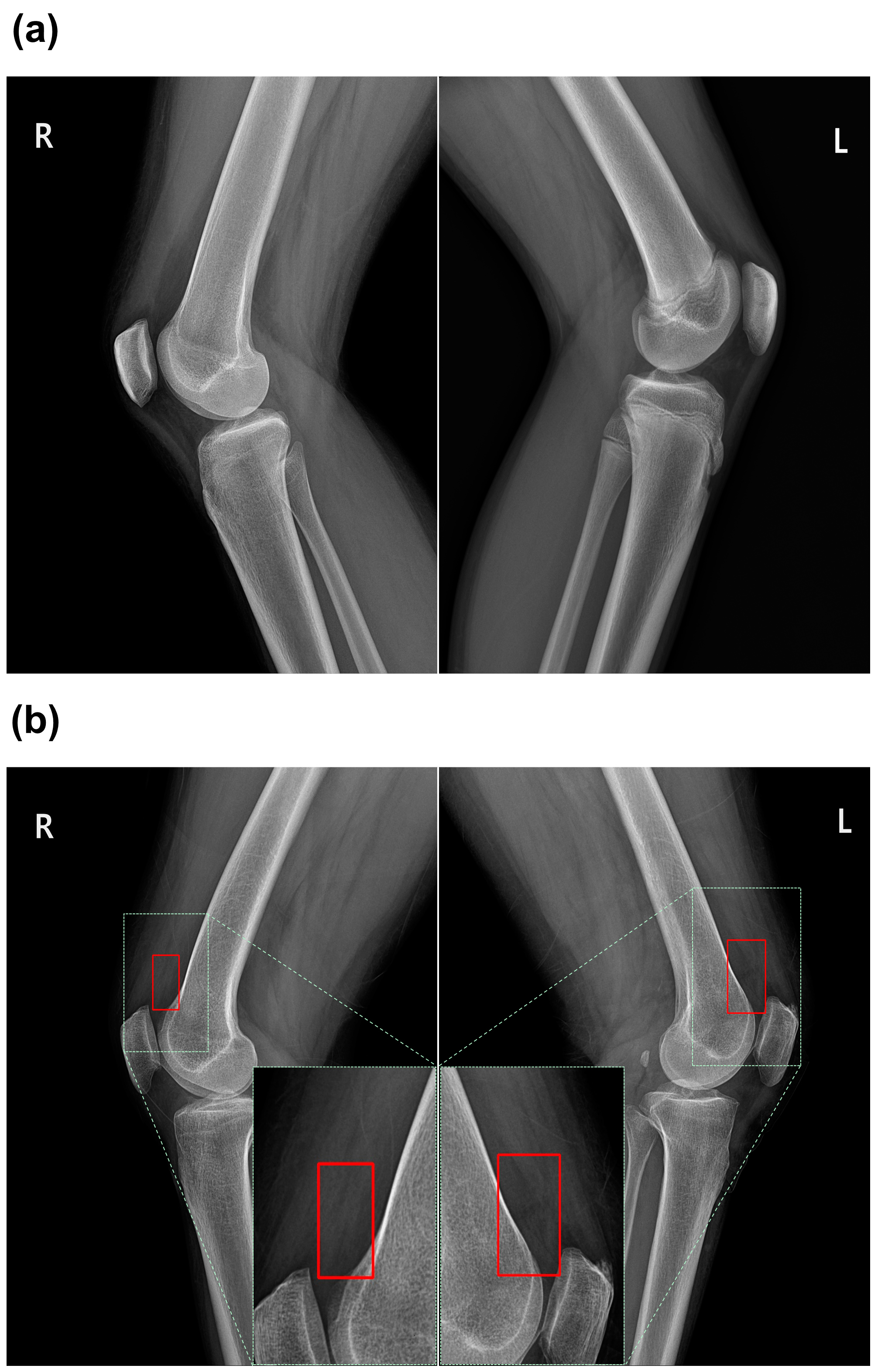

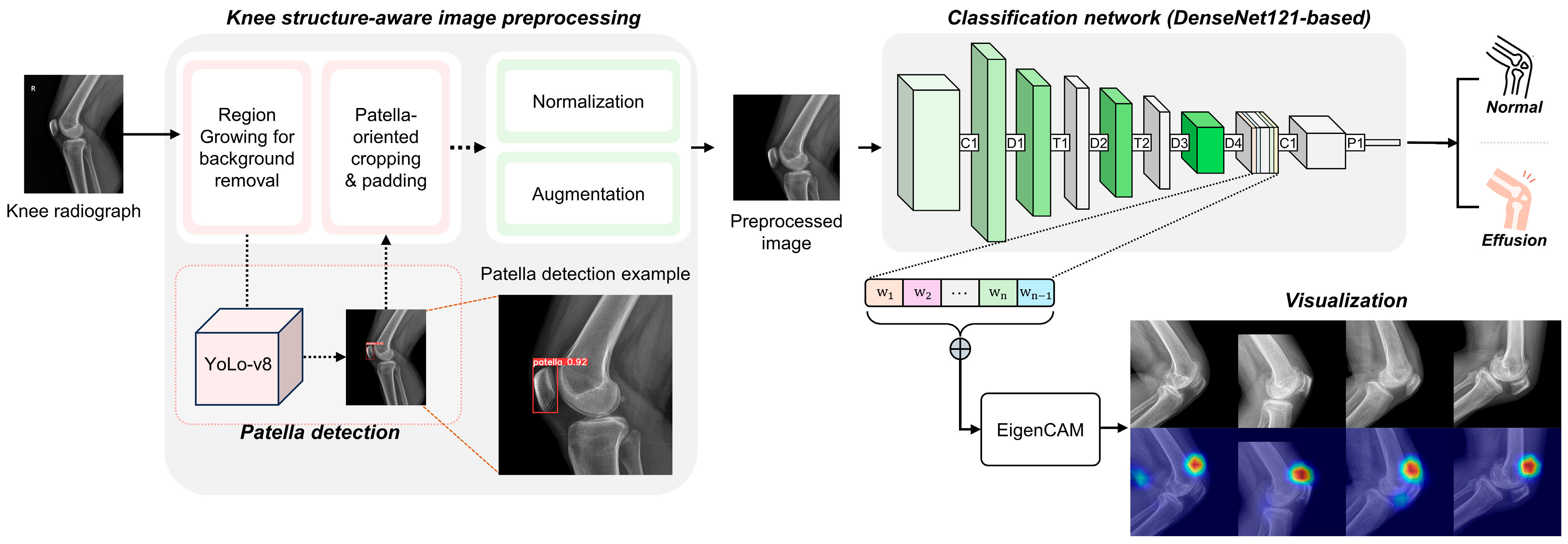

2.3.1. Knee Structure-Aware Image Preprocessing

2.3.2. DL Architecture

2.3.3. Model Specifications

2.4. Statistical Analysis

3. Results

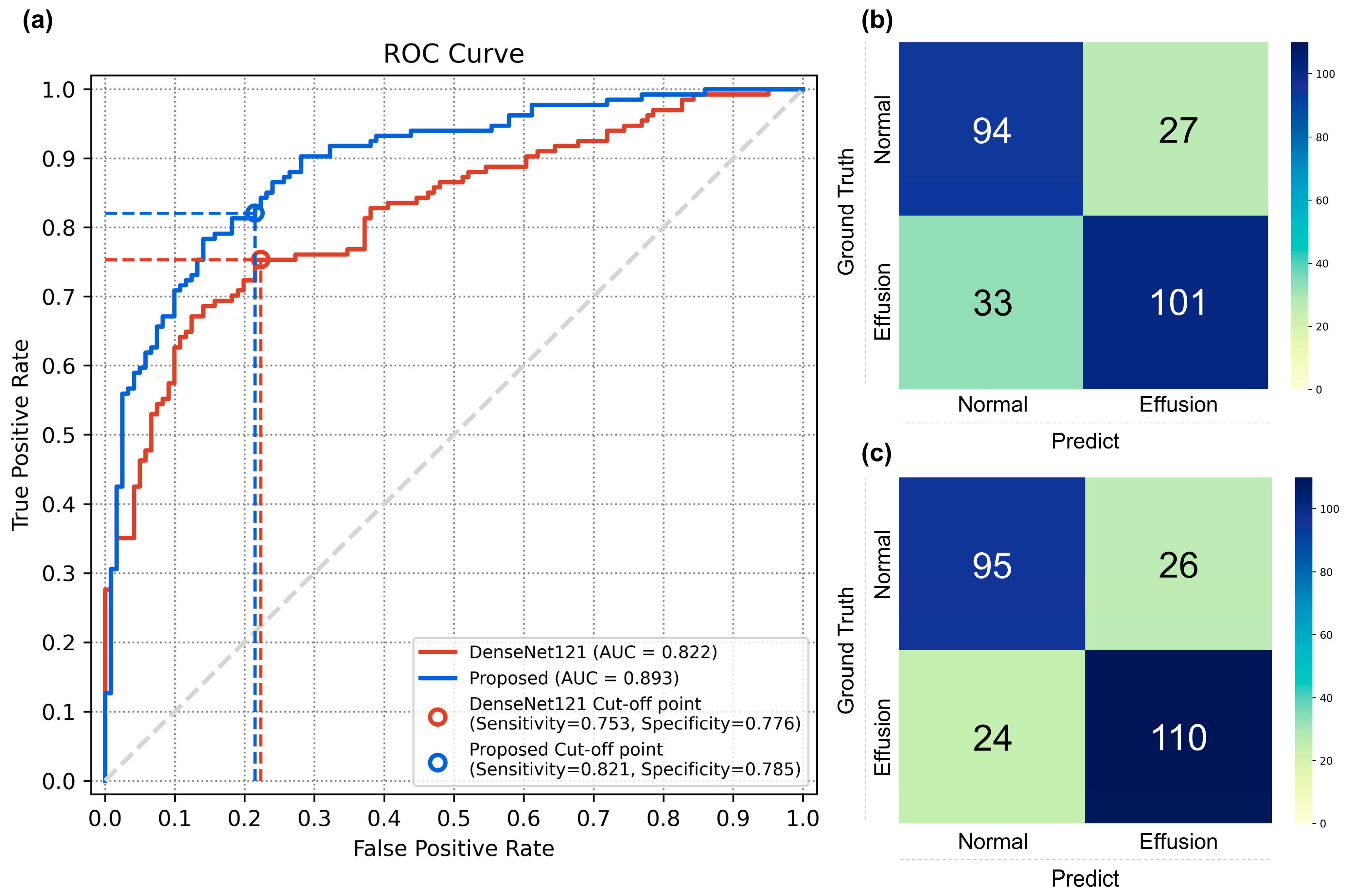

3.1. Performance of the Classification Models

3.2. Comparison with Physician Evaluation

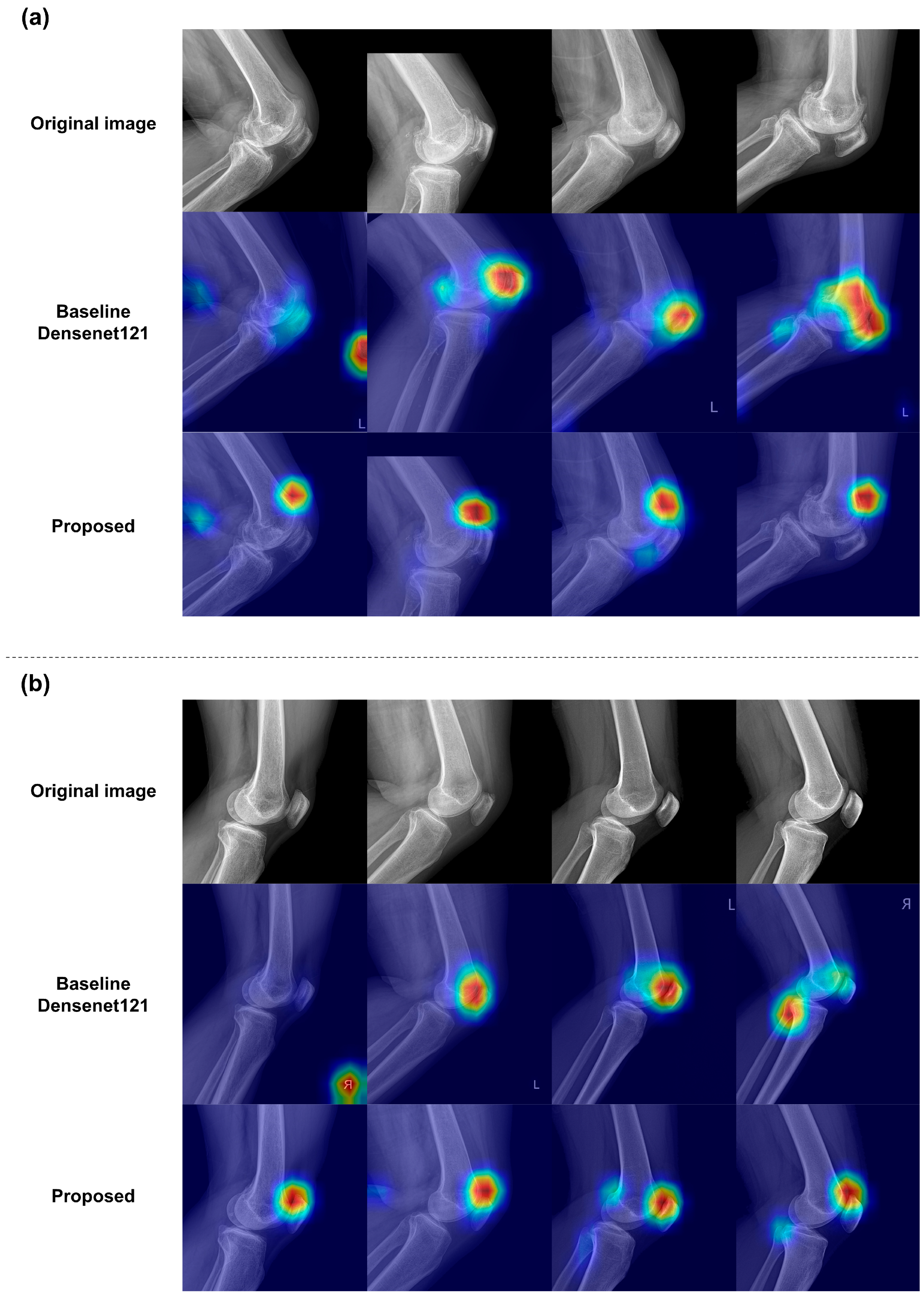

3.3. Qualitative Results of the Classification Models

4. Discussion

5. Conclusions

Supplementary Materials

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest

References


| Characteristics | Hospital A (S.T.) (n = 280) | Hospital B (S.S.T.) (n = 233) | Hospital C (G.S.T.) (n = 450) | Hospital D (C.T. Hospital) (n = 450) |
|---|---|---|---|---|
| Sex | | | | |
| Male | 118 | 67 | 189 | 185 |
| Female | 162 | 166 | 261 | 265 |
| Age, years (mean ± SD) | 62 ± 4 | 62 ± 5 | 62 ± 2 | 63 ± 3 |
| Number of images (disease status) | | | | |
| Normal | 168 (60.0%) | 139 (59.7%) | 150 (33.3%) | 150 (33.3%) |
| Abnormal | 112 (40.0%) | 94 (40.3%) | 300 (66.7%) | 300 (66.7%) |
| X-ray parameters | | | | |
| Tube potential (kVp) | 60 | 60–70 | 60 | 60 |
| Tube current (mA) | 100 | 100 | 100 | 100 |
| Exposure time (s) | 0.125 | 0.300 | 0.125 | 0.125 |
| Focus-to-detector distance (cm) | | | | |
| Supine | 85 | 100 | 100 | 100 |
| Erect | 78 | 100 | 100 | 100 |

| Metric (95% CI) | DenseNet121 | Proposed Method | p-Value † |
|---|---|---|---|
| AUC | 0.821 (0.770–0.872) | 0.892 (0.853–0.931) | <0.001 |
| Accuracy | 0.764 (0.707–0.815) | 0.803 (0.749–0.850) | 0.133 |
| Sensitivity | 0.753 (0.686–0.835) | 0.820 (0.753–0.880) | 0.052 |
| Specificity | 0.776 (0.619–0.785) | 0.785 (0.710–0.851) | 1.000 |
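
Both models in the table above were scored on the same radiographs, so their AUCs are correlated and cannot be compared as independent estimates. This excerpt does not state which test produced the † p-values; DeLong's nonparametric test is a common choice for correlated AUCs, and the following is a minimal pure-Python sketch of it under that assumption. The function `delong_test` and its inputs (binary labels with 1 = effusion, plus one score per image from each model) are hypothetical illustrations, not the authors' code.

```python
import math


def _psi(x, y):
    """Mann-Whitney kernel: 1 if the positive case scores higher, 0.5 on a tie."""
    if x > y:
        return 1.0
    if x == y:
        return 0.5
    return 0.0


def _components(pos, neg):
    """DeLong structural components: V10 per positive case, V01 per negative case."""
    v10 = [sum(_psi(x, y) for y in neg) / len(neg) for x in pos]
    v01 = [sum(_psi(x, y) for x in pos) / len(pos) for y in neg]
    return v10, v01


def _cov(a, b):
    """Sample covariance with an (n - 1) divisor."""
    ma, mb = sum(a) / len(a), sum(b) / len(b)
    return sum((x - ma) * (y - mb) for x, y in zip(a, b)) / (len(a) - 1)


def delong_test(labels, scores_a, scores_b):
    """Two-sided DeLong test for two correlated AUCs computed on the same cases.

    labels: 1 = positive (e.g. effusion), 0 = negative.
    Returns (auc_a, auc_b, z, p_value).
    """
    pos = [i for i, t in enumerate(labels) if t == 1]
    neg = [i for i, t in enumerate(labels) if t == 0]
    m, n = len(pos), len(neg)
    if m < 2 or n < 2:
        raise ValueError("need at least two positive and two negative cases")
    v10, v01, aucs = [], [], []
    for s in (scores_a, scores_b):
        c10, c01 = _components([s[i] for i in pos], [s[i] for i in neg])
        v10.append(c10)
        v01.append(c01)
        aucs.append(sum(c10) / m)  # the AUC equals the mean of the V10 components

    def cov_auc(r, c):
        # Covariance of AUC_r and AUC_c: S10[r][c] / m + S01[r][c] / n
        return _cov(v10[r], v10[c]) / m + _cov(v01[r], v01[c]) / n

    var = cov_auc(0, 0) + cov_auc(1, 1) - 2 * cov_auc(0, 1)
    if var <= 0:
        raise ValueError("variance of the AUC difference is zero; test undefined")
    z = (aucs[0] - aucs[1]) / math.sqrt(var)
    p = math.erfc(abs(z) / math.sqrt(2))  # equals 2 * (1 - Phi(|z|))
    return aucs[0], aucs[1], z, p
```

The pairwise kernel costs O(m·n) comparisons, which is kept for readability and is fast enough for a few hundred test images; rank-based formulations of the same statistic scale better to larger sets.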

| Metric (95% CI) | Proposed Method | Non-Orthopedic Physician 1 | p-Value † | Non-Orthopedic Physician 2 | p-Value † |
|---|---|---|---|---|---|
| Accuracy | 0.803 (0.749–0.850) | 0.568 (0.505–0.630) | <0.001 | 0.568 (0.505–0.630) | <0.001 |
| Sensitivity | 0.820 (0.753–0.880) | 0.701 (0.626–0.776) | 0.020 | 0.723 (0.641–0.798) | 0.048 |
| Specificity | 0.785 (0.710–0.851) | 0.421 (0.330–0.512) | <0.001 | 0.396 (0.314–0.479) | <0.001 |
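
The model and the physicians in the table above read the same images, so differences in accuracy, sensitivity, and specificity are differences between paired proportions. Assuming the † p-values come from McNemar's test (this excerpt does not say), the sketch below implements its exact binomial form on per-image correct/incorrect flags; restricting the inputs to abnormal images gives the sensitivity comparison, and restricting them to normal images gives the specificity comparison. It also includes a Wilson score interval as one possible way to obtain 95% CIs for such proportions, which is likewise an assumption. Both function names are hypothetical.

```python
import math


def mcnemar_exact(correct_a, correct_b):
    """Exact two-sided McNemar test on paired per-image correctness flags.

    correct_a, correct_b: sequences of booleans, one entry per image, True when
    that reader (model or physician) classified the image correctly.
    Returns (b, c, p_value), where b and c are the two discordant counts.
    """
    if len(correct_a) != len(correct_b):
        raise ValueError("paired inputs must have the same length")
    # Only discordant pairs carry information about a difference between readers.
    b = sum(1 for a, o in zip(correct_a, correct_b) if a and not o)
    c = sum(1 for a, o in zip(correct_a, correct_b) if o and not a)
    n = b + c
    if n == 0:
        return b, c, 1.0
    # Under H0, b ~ Binomial(n, 0.5); double the smaller tail for a two-sided p.
    tail = sum(math.comb(n, k) for k in range(min(b, c) + 1)) / 2 ** n
    return b, c, min(1.0, 2 * tail)


def wilson_ci(successes, total, z=1.959963984540054):
    """Wilson score interval for a binomial proportion (default: 95%)."""
    if total <= 0:
        raise ValueError("total must be positive")
    p = successes / total
    denom = 1 + z ** 2 / total
    centre = (p + z ** 2 / (2 * total)) / denom
    half = z * math.sqrt(p * (1 - p) / total + z ** 2 / (4 * total ** 2)) / denom
    return centre - half, centre + half
```

For example, with five discordant images that all favour the first reader, `mcnemar_exact([True] * 15, [False] * 5 + [True] * 10)` returns `(5, 0, 0.0625)`, and `wilson_ci(8, 10)` gives roughly `(0.490, 0.943)`. The exact form avoids the chi-squared approximation, which is unreliable when the number of discordant pairs is small.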

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2024 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Won, H.; Lee, H.S.; Youn, D.; Park, D.; Eo, T.; Kim, W.; Hwang, D. Deep Learning-Based Joint Effusion Classification in Adult Knee Radiographs: A Multi-Center Prospective Study. Diagnostics 2024, 14, 1900. https://doi.org/10.3390/diagnostics14171900
