Abstract

Background: Depression is a pervasive mental health condition, particularly affecting older adults, where early detection and intervention are essential to mitigate its impact. This study presents an explainable multi-layer dynamic ensemble framework designed to detect depression and assess its severity, aiming to improve diagnostic precision and provide insights into contributing health factors. Methods: Using data from the National Social Life, Health, and Aging Project (NSHAP), this framework combines classical machine learning models, static ensemble methods, and dynamic ensemble selection (DES) approaches across two stages: detection and severity prediction. The depression detection stage classifies individuals as normal or depressed, while the severity prediction stage further classifies depressed cases as mild or moderate-severe. Finally, a confirmation depression scale prediction model estimates depression severity scores to support the two stages. Explainable AI (XAI) techniques are applied to improve model interpretability, making the framework more suitable for clinical applications. Results: The framework’s FIRE-KNOP DES algorithm demonstrated high efficacy, achieving 88.33% accuracy in depression detection and 83.68% in severity prediction. XAI analysis identified mental and non-mental health indicators as significant factors in the framework’s performance, emphasizing the value of these features for accurate depression assessment. Conclusions: This study emphasizes the potential of dynamic ensemble learning in mental health assessments, particularly in detecting and evaluating depression severity. The findings provide a strong foundation for future use of dynamic ensemble frameworks in mental health assessments, demonstrating their potential for practical clinical applications.

1. Introduction

Major depressive disorder (MDD) is a pervasive and severe mental health condition that affects individuals globally, affecting all age groups, demographics, and socioeconomic boundaries. In 2023, more than 280 million people worldwide suffered from depression, making it one of the leading causes of mental disability [1]. In the United States, by 2020, the incremental economic burden of adults with MDD was around USD 326.2 billion, which includes direct costs, suicide-related costs, and workplace costs [2]. This substantial economic impact, along with the significant number of affected individuals, highlights the urgent need for early intervention strategies to alleviate the extensive personal, societal, and financial burdens of depression. The elderly population is particularly vulnerable to depression, experiencing significant impacts despite lower overall prevalence rates compared to younger age groups. In the United States, by 2021, approximately 4.5% of adults aged 50 years and older suffer from MDD [3]. This represents a substantial number of individuals who face depression, with possible significant under-reporting due to social stigma [4]. Factors such as chronic illness, loss of loved ones, and social isolation exacerbate depression in seniors. The impact on this age group is severe, leading to reduced quality of life, increased risk of physical illness, and higher mortality rates [5]. Furthermore, senior people with depression are more likely to experience functional impairments and a decrease in their ability to live independently, adding to the social and economic burden [5]. Early detection of depression is critical for several reasons. First, timely identification enables more effective treatment interventions [6]. Second, people with more severe depression often exhibit resistance to treatment, making early intervention crucial to prevent further deterioration [7,8,9,10]. Predicting the severity of depression is equally essential, as it allows for personalized care. Patients with mild depression can receive tailored interventions, while those with severe depression can be prioritized for more intensive treatment [11,12]. In addition, severe depression is strongly associated with an increased risk of suicide, making it vital to identify and prioritize these cases for urgent intervention [13]. Therefore, the ability to detect depression early and accurately predict its severity is essential for effective treatment and prevention strategies.

Currently, depression screening is heavily based on self-report tools such as the Patient Health Questionnaire 9 (PHQ-9), which is the most widely used screening instrument [14]. Although these tools have proven effective, they can be limited by factors such as delayed diagnosis due to individuals not being aware of their condition or unwilling to seek help due to stigma [4,15]. This can result in undiagnosed depression, and individuals can fall deeper into the disorder without timely intervention. Therefore, identifying additional factors that contribute to depression could help clinicians improve detection and severity prediction without relying solely on self-report measures.

In this study, we explore a promising approach to exploiting data from indirect self-reporting questionnaires on emotional health, social interactions, and daily experiences with machine learning (ML) to detect the presence of depression and predict the severity of depression. This approach also helps mitigate diagnosis delays, which often occur when individuals are either unaware of their condition or face barriers such as stigma or limited access to healthcare. Furthermore, self-report measures provide a noninvasive, private way for older adults to assess their mental health, encouraging more proactive engagement without the fear of judgment. Compared to clinical evaluations, indirect self-report measures are less expensive and more readily available, especially in community or telehealth settings. This approach promotes self-awareness and preventive care, empowering older adults to track their well-being and take timely action, whether through professional help or lifestyle changes.

A growing body of literature has explored the application of ML models to detect depression [16,17] and predict its severity [18,19]. However, a review of the existing literature reveals several limitations. Most studies mainly employ classical and static classifiers, which are limited in their adaptability and may not fully exploit the diversity of data [20,21]. Ensemble methods have proven their superior performance compared to classical methods [22,23,24,25]. However, static ensembles are based on a fixed pool of base classifiers, which might not be suitable for all test examples. As a result, the generalizability of these models is not good. The dynamic ensemble algorithms represent a more advanced approach, dynamically selecting and combining multiple models based on specific criteria for each instance or subset of instances [26,27]. Unlike static ensembles, which rely on a fixed set of models, dynamic ensembles adaptively choose the most appropriate prediction models, improving accuracy and performance [28]. This adaptability makes dynamic ensembles particularly suited for complex tasks such as depression detection. To our knowledge, no study in the literature has used dynamic ensembles to predict depression. Improving the model’s performance is insufficient to achieve a physician’s trustworthiness. There is a lack of personalized assessment in which the model can provide customized and tailored decisions for different individuals. In addition, model explainability is crucial in clinical settings to build trust and improve decision-making [29].

Many studies lack explainable artificial intelligence (XAI) techniques to understand how models detect depression or predict its severity, and they fail to identify the specific features or concepts that contribute to an individual’s depression. Our study aims to address these gaps by incorporating dynamic ensemble models and XAI techniques to provide a more comprehensive understanding of depression detection and severity prediction. In summary, the study contributes to the field with these points:

- We propose an explainable, multilayer framework for the detection and prediction of the severity of depression using dynamic ensemble selection (DES) techniques. The proposed framework comprises three distinct layers. The first layer functions as a detection model designed to predict the presence of depression. The second layer focuses on the severity prediction, predicting the degree of depression in patients already identified as depressed. Finally, the confirmation layer is a regression layer that estimates an individual’s PHQ-9 score based on their specific features. By integrating these three layers, our framework provides a comprehensive decision support framework that not only detects depression but also assesses its severity and validates the precision of the predictions through the estimation of the PHQ-9 score.

- In the detection and severity prediction phases, we assess the effectiveness of various ML models using the National Social Life, Health, and Aging Project (NSHAP) dataset, which comprises a diverse range of questionnaire responses from domains such as social networks and relationships, as well as biomarker data like saliva samples and blood pressure readings from older adults in the United States. This variety of features provides valuable insights into which factors most strongly influence depression. The models explored classical ML algorithms, static ensemble techniques, and, notably, a selection of DES methods. The inclusion of DES represents a novel approach, as it aims to assess whether DES can further optimize and enhance the performance of classical ML and static ensemble models.

- In the scale prediction layer, we evaluate the performance of various static ensemble regression models, alongside a voting regression model, in predicting the PHQ-9 score from the same dataset. This approach validates the results of both the detection and severity prediction layers, offering an additional confirmation of the accuracy and reliability of the overall predictions.

- We enhance the framework by incorporating XAI features to assist physicians in understanding the model’s decision-making processes and provide insight into what factors contribute to an individual’s depression presence or the severity of depression, especially among older adults. This is achieved by applying XAI techniques to the best-performing models in each of the three layers.

The study is organized as follows. Section 2 reviews the literature, Section 3 presents the proposed model, Section 4 describes the experimental setup, Section 5 describes the results of the three layers, Section 6 provides the model explainability and relevant factors contributing to depression, and finally, Section 7 concludes the study.

2. Related Work

This section offers an exhaustive review of recent advancements in the detection of depression, the prediction of severity of depression, and the prediction of depression scales using ML techniques. In addition, it examines the potential of dynamic ensemble methods, particularly within the mental health domain, highlighting studies that exhibit improved accuracy and adaptability in predictive modeling. The related work analyzed herein forms the basis for understanding the current research landscape and discerning the gaps that our study seeks to fill.

2.1. Depression Detection

The detection of depression involves evaluating individuals to determine the presence of MDD, often classified as normal or depressed. In clinical settings, standardized methods have been developed to diagnose depression, typically conducted in two primary ways. First, clinical interviews serve as a conventional approach in which a mental health professional evaluates an individual’s mood, behaviors, thoughts, and physical symptoms over a defined period (typically the last two weeks or more). Clinicians often rely on the Diagnostic and Statistical Manual of Mental Disorders, Fifth Edition (DSM-5), as a diagnostic checklist to identify depression symptoms. DSM-5 has shown significant reliability through test–retest studies, particularly in mood disorders. However, its reliability is relatively lower when diagnosing disorders such as MDD or generalized anxiety disorder compared to conditions such as autism spectrum disorder or borderline personality disorder [30]. In addition, numerous factors hinder people from seeking a clinical diagnosis, including societal stigma [4], financial barriers, perceived lack of emotional distress, and concerns about the efficacy of treatment [31]. Although clinical interviews remain the gold standard for diagnosis, associated barriers make large-scale depression detection difficult. As a result, self-report screening tools have emerged as a complementary solution.

Self-report screening instruments such as the PHQ-9 [32] and the Beck Depression Inventory (BDI) [33] are extensively used for depression detection. These instruments enable individuals to self-evaluate their mental health, providing a cost-effective and accessible alternative to clinical consultations. Empirical evidence demonstrates that these tools, particularly the PHQ-9, maintain reliability even in non-English-speaking countries [34,35]. Consequently, due to its established reliability, the PHQ-9 is employed as the principal metric in our research. Nonetheless, the self-administrative nature of these instruments necessitates that individuals actively seek evaluation and treatment, a task that can be challenging for those experiencing depressive symptoms [31]. In addition to how different cultures interpret and respond to PHQ-9 items, PHQ-9 alone can overdiagnose by generating false positives, particularly in patients with conditions such as bipolar disorder, anxiety, or other psychiatric disorders [36]. Although PHQ-9 serves as a valuable instrument, it is recommended that its results be utilized alongside other robust diagnostic assessment tools to achieve a more comprehensive evaluation of depression, including severe cases, and avoid false positives [37,38]. This study integrates ML techniques applied to multiple diagnostic and clinical assessments to proactively identify individuals at risk, thereby avoiding exclusive dependence on items from PHQ-9.

In recent years, numerous studies have explored the application of ML techniques to detect depression. These approaches span various modalities, from audiovisual data to neurophysiological responses and social media activity, each aimed at improving the precision and timeliness of depression detection [39,40]. For example, Min et al. [16] leveraged audiovisual features from YouTube videos, demonstrating the potential of combining audio and visual data for detecting depressive behaviors. Their XGBoost model achieved a 75.85% accuracy, highlighting that audiovisual features were particularly effective in early detection on social media platforms. However, while this approach underscores the value of integrating YouTube videos in mental health research, it is limited by the dependence on user-generated content, which may lack consistency and introduce noise into the data. In contrast, Li et al. [17] focused on a more controlled setting, using neurophysiological data as electrophysiological responses (ERP) during a dot-probe task to study attentional bias in patients with MDD. Their study used correlated feature selection to enhance the classification accuracy, achieving a high rate of 94% with the K-Nearest Neighbor classifier. This method of using ERP data, particularly the P300 component, provided more reliable signals directly linked to brain activity. However, despite the high accuracy, ERP-based methods require specialized equipment, making them less scalable and more resource-intensive compared to audiovisual data analysis. A different modality, social media texts, was explored by Govindasamy and Palanichamy [41], who applied sentiment analysis on Twitter data to detect depression using Naive Bayes and hybrid Naive Bayes Decision Tree classifiers. Their study achieved accuracies of 92.34% and 97.31% on datasets of 1000 and 3000 tweets, respectively. This approach benefits from the large volume of publicly available social media data, allowing for scalable depression detection. However, sentiment analysis is highly dependent on the quality of the labeled data, and the models may struggle with nuances in language or cultural differences in expressing emotions, which could affect their generalizability across different populations. Malik, Shabaz, and Asenso [42] took yet another approach by applying ML techniques to survey-based data, combining Decision Tree, K-Nearest Neighbor, and Naive Bayes classifiers to analyze responses from 1694 individuals. Their study found the K-Nearest Neighbor to be the most effective, with an accuracy of 92.32%. This method, relying on structured survey responses, provided an accessible way to detect depression. However, surveys might not fully capture the dynamic nature of depressive symptoms, unlike continuous monitoring via physiological data. When comparing these traditional ML approaches, it is evident that each study offers specific strengths but also faces unique limitations based on the type of data used. For instance, models relying on structured data like surveys or sociodemographic factors may offer simplicity and accessibility but risk missing out on the complexity of depressive behaviors, which could be captured better through neurophysiological, behavioral data, or health exam data. On the other hand, studies using neurophysiological signals or audiovisual data offer greater accuracy but at the cost of requiring more specialized equipment or large-scale user engagement.

Recent advances in deep learning have also been applied to depression detection, with more sophisticated results but new challenges. Acharya et al. [43] employed a convolutional neural network (CNN) model to analyze electroencephalogram (EEG) data, achieving accuracies of 93.5% and 96.0% using signals from the left and right hemispheres, respectively. Their study supports the hypothesis that depression is linked to hyperactivity in the right hemisphere. While EEG-based deep learning models perform well in detecting depression, their application is limited by the need for specialized equipment and the complexity of interpreting EEG data, especially in large-scale deployments. Marriwala and Chaudhar [44] introduced a hybrid deep learning model combining textual and audio features, applying Long Short Term Memory (LSTM) and Bidirectional LSTM (Bi-LSTM) models to the DAIC-WoZ database. Their findings showed that the audio CNN model outperformed the textual model, achieving an impressive accuracy of 98%, highlighting the effectiveness of audio features in detecting depression. However, like other deep learning models, these approaches require extensive computational resources and large datasets to generalize well. This poses a challenge for smaller-scale datasets like the 2005–2006 NSHAP dataset used in this research, which lacks the volume and diversity necessary for training deep learning models effectively. Moreover, while deep learning models demonstrate higher accuracy, they often operate as “black boxes”, making it difficult to interpret the results or understand the importance of individual features. In contrast, classical ML models not only perform comparably well with fewer resources but also offer easier integration with XAI techniques, providing clearer insights into feature importance and model decision-making processes.

2.2. Depression Severity Prediction

Depression severity prediction involves determining the level of depression in an individual, which is critical to providing appropriate care and intervention. Various screening tools categorize severity differently. For example, PHQ-9 divides depression into categories such as mild, moderate, moderately severe, and severe [32], while the BDI uses minimal, mild, moderate, and severe [33]. Self-screening tools such as these are more commonly used to categorize the severity of depression than DSM-5 since DSM-5 is typically used to diagnose depression as present or absent. Although similar to depression detection, prediction of severity faces unique challenges. A significant limitation of self-screening tools is that depressed individuals may be reluctant to seek self-evaluation [31], thus preventing clinical evaluation and accurate severity assessment. This issue is particularly concerning for those with severe depression, as they have a higher risk of suicide and other comorbidities [13]. Therefore, accurate prediction of the severity of depression, especially after an initial diagnosis of depression, is essential for appropriate and timely intervention. Here, ML techniques can play a crucial role in enhancing the accuracy and efficiency of severity prediction.

Many studies have explored the application of ML to predict the severity of depression, often using diverse data sources such as biomarkers, functional brain activity, and behavioral data. These studies vary significantly in their approaches, each presenting unique strengths and limitations. Bader et al. [45] explored the combination of oxidative stress biomarkers (e.g., 8-isoprostane, 8-OHdG, and glutathione) with sociodemographic and clinical data to predict depression severity using ML models. The study demonstrated that integrating biomarkers with additional health-related factors improved detection accuracy, with oxidative stress markers ranked as the most critical predictors. While the Random Forest classifier consistently outperformed other models, the limitation here lies in the complexity of collecting and analyzing biomarkers, which may not be readily accessible in all healthcare settings. Furthermore, biomarkers like oxidative stress markers may vary based on factors unrelated to depression, such as physical health conditions, which could introduce noise into the model and limit its generalizability. In contrast, Huang et al. [46] took a functional near-infrared spectroscopy (fNIRS) approach, analyzing brain activity to classify mild and severe depression. Their support vector machine model achieved a high accuracy of 92.8%, demonstrating the effectiveness of combining temporal and correlation features from brain data. While this method offers a more objective diagnostic tool, the primary limitation is its dependency on specialized equipment (fNIRS), which is costly and requires technical expertise, making it less feasible for widespread clinical use. Additionally, like oxidative biomarkers, brain activity data may reflect other cognitive or physiological states, potentially affecting the model’s specificity in real-world applications. Choudhary et al. [47] took a different approach by leveraging passive smartphone data, such as digital behavioral markers and gyroscope sensor data, to predict depression severity. This method offers a non-invasive and continuous monitoring solution, achieving an accuracy of 87% for a two-class model (none vs. severe) and 78% for a three-class model. However, while smartphone data are scalable and convenient, its reliance on self-reported PHQ-9 scores as a ground truth introduces subjectivity, and the slight reduction in accuracy when incorporating gyroscope data suggests potential noise from irrelevant data sources. Shin et al. [48] examined voice as a potential biomarker for detecting both minor and major depressive episodes, utilizing voice features extracted from interviews. Their model achieved an Area Under Curve (AUC) of 65.9%, with 65.6% sensitivity and 66.2% specificity, indicating the potential for voice analysis in distinguishing between depression severities. However, a major limitation of this study is the small sample size (93 participants), which hinders the generalizability of the results. Moreover, voice data can be influenced by numerous external factors, such as physical illness or environmental noise, which complicates its use as a standalone biomarker for depression severity. Additionally, the relatively low performance of the model compared to others in the field suggests that more research is needed to fully capture the relationship between voice characteristics and depression severity.

Across these studies, the contrast between objective physiological data (biomarkers, brain activity, and voice features) and passive behavioral data (smartphone usage) is clear. While physiological data often lead to higher predictive accuracy, the need for specialized equipment (e.g., fNIRS, oxidative stress biomarker assays) limits the scalability and accessibility of these approaches. On the other hand, behavioral data collected from smartphones provides a scalable, non-invasive solution but is prone to noise and subjectivity, especially when paired with self-reported depression scores.

In addition to traditional ML methods, deep learning techniques have been applied to predict depression severity, demonstrating high accuracy but also introducing new challenges. Mao et al. [49] employed a multimodal approach, combining speech and text data to predict depression severity across five classes. Their model, trained on the DAIC-WOZ dataset, achieved an impressive F1-score of 0.9870 at the sequence level and 0.9074 at the patient level for the audio modality. Despite the promising results, the approach faces significant limitations due to the resource-intensive nature of deep learning models. These models require large datasets, high computational power, and often function as “black boxes”, making it difficult to interpret feature importance and understand how predictions are made. This lack of transparency and the computational demands pose challenges for their practical deployment in clinical settings.

When comparing deep learning approaches to traditional ML models, the contrast between performance and explainability becomes apparent. While deep learning models like those proposed by Mao et al. achieve higher accuracy in predicting depression severity, they suffer from black-box limitations and require substantial data and computational resources. In contrast, traditional ML models, while often slightly less accurate, provide more interpretable results and can be applied in settings where computational power or large datasets are not readily available. The trade-off between interpretability and performance is a key consideration in selecting the appropriate model for predicting depression severity.

2.3. Depression Scale Prediction

Depression scales, such as the PHQ-9, are widely used due to their ease of use, low cost, and ability to provide quantitative estimates for both depression diagnosis and severity. Our study used the PHQ-9 scale, similar to many of the studies mentioned. Although these studies may use different versions of the PHQ-9, such as the PHQ-8 or variations adapted for specific populations, they still offer valuable validation for using a regression layer in predicting depression severity. This alignment strengthens the methodological consistency of our model, ensuring that our numerical estimation approach is grounded in well-established clinical practice.

Jin et al. [50] developed a generalized multilevel Poisson regression model to predict depression severity in patients with diabetes, using PHQ-9 scores to assess depression over time. With 29 factors analyzed and a root mean square error (RMSE) of around 4, their model provided both population-level and patient-specific predictions. Although Jin et al. focused on diabetes, limiting the generalizability to broader populations, their use of longitudinal data and PHQ-9 reinforces the validity of our approach. However, the reliance on clinical trial data, which may not reflect real-world conditions, introduces a potential limitation. Syed et al. [51] used a different version of the PHQ-9 scale as part of the audio/visual emotion challenge to predict depression severity based on biomarkers of psychomotor retardation, including audio, video, and motion capture data. Their model achieved an RMSE of 6.34, indicating more errors compared to Jin et al.’s study. The higher error, alongside the complexity of data collection using motion capture, suggests that while multimodal data offer comprehensive insights, it also introduces noise and challenges in practical application. Aharonson et al. [52] used PHQ-8 scores to predict depression severity from speech data, introducing two ML architectures. Their second model, which grouped participants by severity class before applying regression, achieved an RMSE of 4.1, outperforming previous studies with RMSEs between 6.32 and 6.94. However, a limitation of Aharonson et al.’s work is the small dataset size (189 participants), which limits generalizability. Additionally, while speech-based models are scalable, they can suffer from variability in audio quality, potentially reducing prediction accuracy in diverse environments.

Despite the limitations presented in these studies—such as the focus on specific populations in Jin et al.’s study [50], the complexity of multimodal data collection in Syed et al.’s work [51], and the small dataset size in Aharonson et al.’s research [52]—they collectively demonstrate that using the PHQ-9 scale for predicting depression severity is a valid and reliable approach. These studies validate the PHQ-9 and its variants (e.g., PHQ-8) as effective tools in ML models for quantifying depression levels, offering robust performance across various methodologies and data sources. The consistency of results, despite different contexts and datasets, further supports the applicability of the PHQ-9 scale in predictive models for depression diagnosis and severity assessment, underscoring its value in both clinical and ML environments.

2.4. Dynamic Ensemble

Model stability and performance can be significantly improved through the use of ensemble methods, which include techniques like bagging, boosting, and stacking [53,54,55]. These methods typically employ a variety of base models, ranging from decision trees to more advanced classifiers such as support vector machines and neural networks. Recent studies have successfully applied these ensemble techniques to tasks like depression detection and severity prediction, utilizing classifiers such as random forests, gradient-boosting machines, and deep learning models, as discussed earlier. However, most of these studies have relied on static ensemble techniques, where the selection of base classifiers occurs only once during the training phase. A novel and increasingly promising approach to ensemble learning is DES, in which classifiers are dynamically chosen for each new instance to be classified [28]. In DES, the system first evaluates the competence level of each classifier in a pool of available classifiers. Based on this assessment, an ensemble is dynamically formed, selecting the most competent classifiers to predict the label for the specific query sample. The key idea behind DES is that not every classifier is equally capable of classifying all unknown samples; instead, each classifier specializes in a distinct local region of the feature space. Therefore, the challenge lies in dynamically identifying and selecting the most suitable classifiers for each individual sample. Various methods have been developed to address this, including techniques such as KNORA-E, KNORA-U, DES-KNN, and the Frienemy Indecision Region (FIRE) framework [56]. Several studies have explored the use of dynamic ensemble methods in medical applications. For example, KP, Muhammed Niyas, and Thiyagarajan (2021) aimed to improve the classification of healthy individuals, patients with mild cognitive impairment (MCI) and patients with Alzheimer’s disease (AD) at the baseline stage using data from the Alzheimer’s Disease Neuroimaging Initiative-TADPOLE dataset. This dataset includes multimodal features such as medical imaging, cerebrospinal fluid, cognitive tests, and demographic information. The study compared the performance of DES algorithms with traditional ML classifiers, evaluating both based on metrics like balanced classification accuracy, sensitivity, and specificity. Their results showed that the DES algorithms improved the classifier performance, particularly in distinguishing between healthy individuals, patients with MCI, and those with AD [57]. In the context of depression, Janardhan, Naulegari, and Nandhini Kumaresh [58] developed a four-stage ML classification system for the detection of depression using acoustic parameters. Speech recordings were obtained from the DAIC-WOZ dataset, and the eGeMAPS feature set was extracted. To address the class imbalance, adaptive synthetic resampling and data preprocessing were applied. Three feature selection methods were used: Borruta, SVM-RFE, and Fisher score to identify relevant features. In the fourth stage, several classifiers were tested with hyperparameter tuning performed via GridSearchCV during a 10-fold cross-validation. DES classifiers, particularly the KNORAU, were used to improve accuracy. The study found that the KNORAU, using 15 features selected by Fisher’s score, achieved the highest accuracy compared to individual classical ML or static ensemble classifiers on the DAIC-WOZ dataset. Despite the potential effectiveness of dynamic ensemble methods, very few studies in the literature have applied them to depression analysis. To our knowledge, no existing studies have yet utilized dynamic ensemble techniques in a unified framework for both depression detection and severity prediction. This represents a significant gap in current research, highlighting the potential of future work to explore the benefits of dynamic ensemble methods in comprehensive depression analysis.

3. Proposed Framework

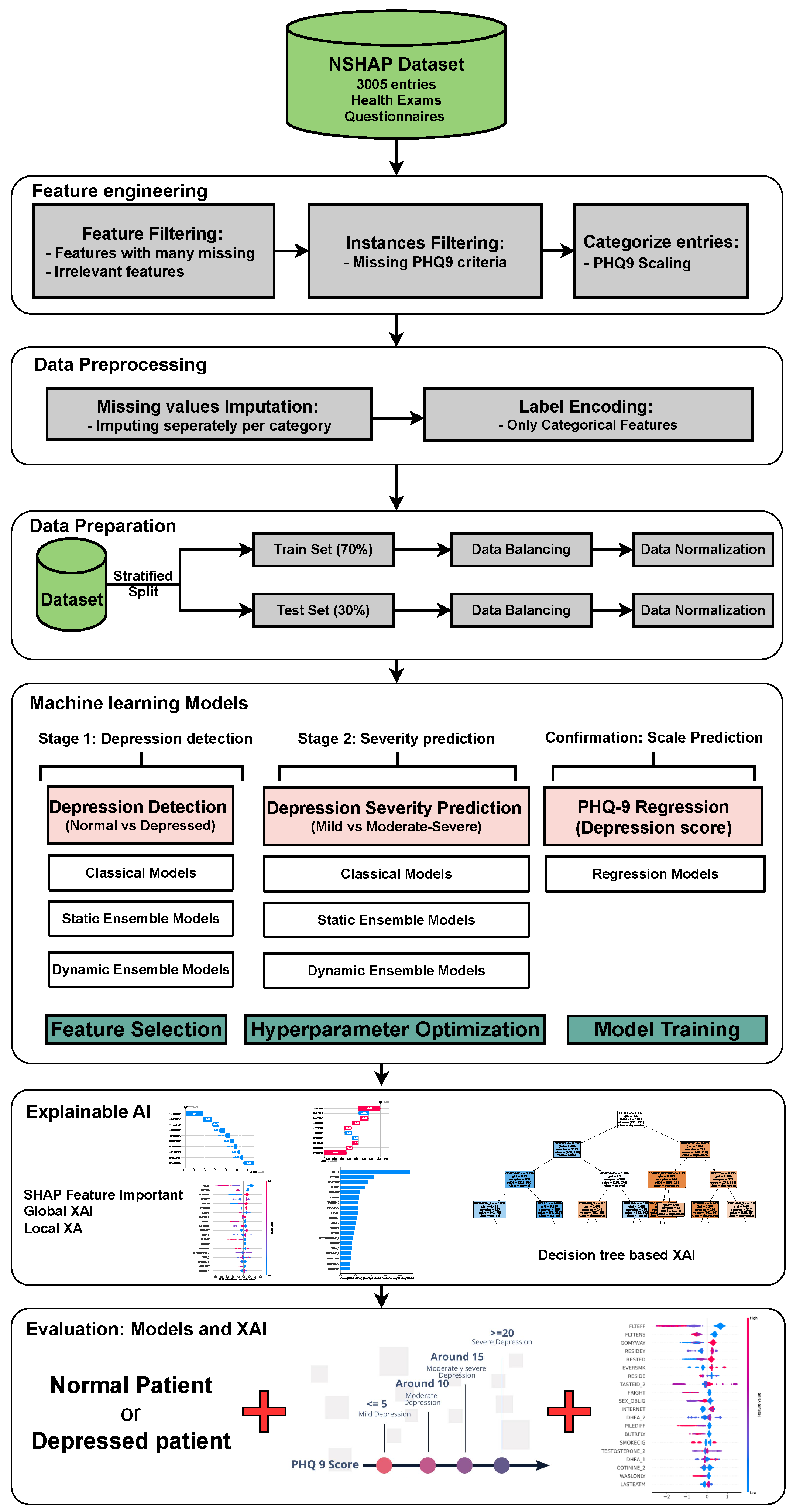

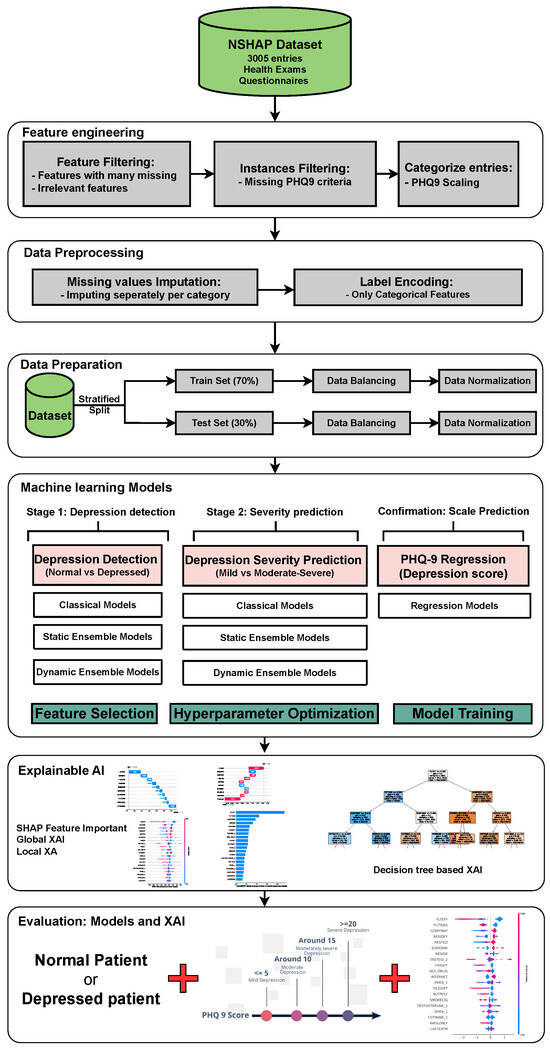

Figure 1 illustrates the proposed model for depression detection, severity prediction, and scale prediction. The primary objective of this model is to deliver accurate and interpretable predictions. The model is structured into two main layers and one confirmation layer. The two main layers include the detection layer, which distinguishes between individuals with MDD and individuals without MDD, as well as the severity prediction layer, which differentiates between mildly depressed and moderately–severely depressed individuals. The third layer, referred to as the “confirmation layer” serves as a regression layer that predicts the PHQ-9 score for each individual. This layer further substantiates the predictions made by the two main layers, providing an additional level of validation and reinforcing the accuracy and reliability of the overall model. Each of the two main layers will utilize a collection of ML models, including classical ML models, static ensemble models, and DES models. The confirmation layer will only use static ensemble regressors. These models are compared using default hyperparameters with no feature selection, default hyperparameters with feature selection, and optimized hyperparameters with feature selection. The model with the best statistical performance is then extended to add XAI capabilities using different techniques. For ML engineers, XAI helps evaluate model stability, identify biases, and ensure that decisions are based on sound, interpretable logic, thereby improving model performance and trustworthiness. In clinical settings, XAI enables healthcare professionals to better understand the key factors driving model predictions, improving confidence in the model’s decision, and facilitating more informed diagnoses and treatments for MDD. This fosters greater trust between clinicians and AI systems, promoting responsible deployment of AI in mental health care.

Figure 1.

The architecture of the proposed framework. Abbreviations: National Social Life, Health, and Aging Project (NSHAP); explainable artificial intelligence (XAI).

3.1. Data Collection

This study uses the 2005–2006 iteration of the NSHAP dataset. Initiated in 2005–2006, NSHAP is a comprehensive longitudinal study aimed at examining the intricate relationships between social connections, health outcomes, and aging among older adults in the United States. Conducted by the National Opinion Research Center in collaboration with principal investigators from the University of Chicago, the study involved more than 3000 face-to-face interviews and the collection of biomeasures within participants’ homes. The sample was nationally representative, consisting of adults aged 57 to 85. The NSHAP dataset offers a wealth of variables that span multiple domains, including social networks, relationships, interviews, physical health, and biomarker data, such as saliva samples and blood pressure readings. In addition, the data set includes a robust set of mental health assessments, providing valuable information on the psychological well-being of the participants. These mental health measures are especially pertinent to study the prevalence and impact of conditions such as depression in the aging population [59]. This dataset is well suited for our research objectives due to its extensive variety of features, which allow for a comprehensive analysis of the factors that contribute to depression. Not only does it allow us to identify key contributors to depression in individuals, but it also provides the opportunity to explore the factors that exacerbate more severe forms of depression. These data are available at https://doi.org/10.3886/ICPSR20541.v10 (accessed on 25 April 2024).

3.2. Feature and Entry Exclusion

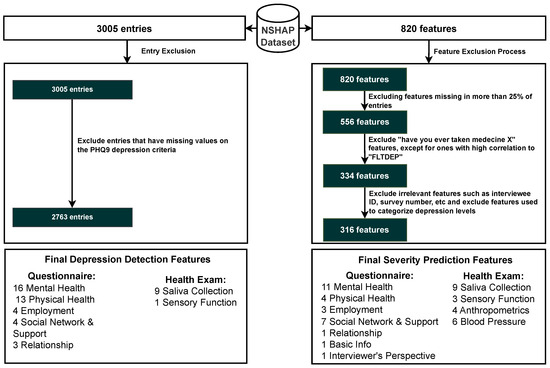

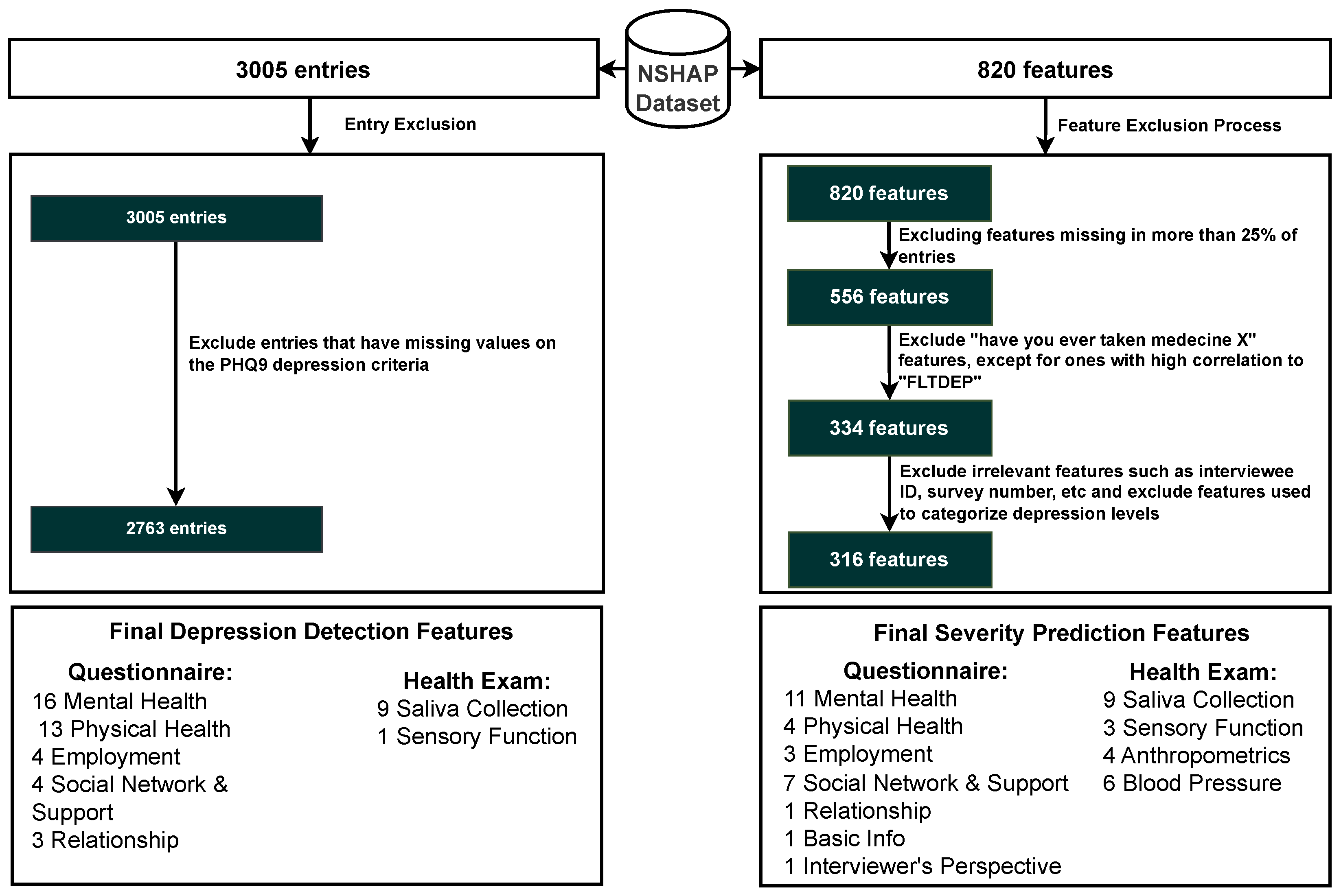

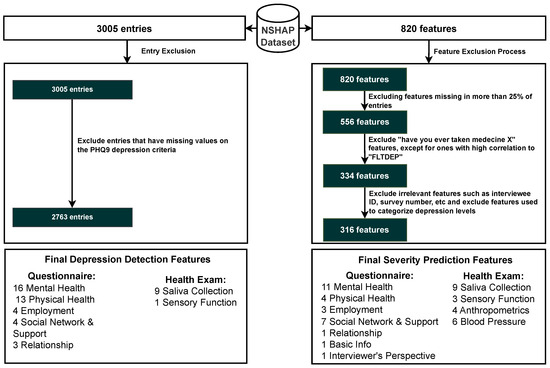

3.2.1. Feature Exclusion

The NSHAP dataset originally comprised 820 features, but only 316 features were selected based on specific criteria. Initially, 264 features were excluded because they were missing in more than 25% of the entries. Subsequently, 222 features related to questions about whether participants had ever taken specific medications were removed, as the majority of responses were negative, indicating low relevance. However, specific medication features that showed a high correlation with the FLTDEP variable (felt depression) were retained to identify possible connections to depression. In addition, nine irrelevant features, such as interview IDs or survey numbers, that do not have analytical value were removed. The other nine features directly used to categorize depression levels were also removed to avoid training the model on the same criteria used for categorization, ensuring unbiased model development. By excluding features with a high percentage of missing data and those with low relevance, the model becomes more robust to noise and better equipped to generalize to new data. The elimination of irrelevant or redundant features reduces the risk of overfitting, where the model might learn specific patterns that do not generalize beyond the training dataset. Furthermore, focusing on the most relevant features allows the model to learn meaningful relationships that contribute to better predictions of depression. The inclusion of medication features with high correlation to depression ensures that the model remains sensitive to factors that might influence mental health, improving its ability to identify at-risk individuals. Overall, this careful feature selection enhances the model’s accuracy, interpretability, and efficiency in both training and deployment scenarios. This selection process resulted in 316 features, which are organized into categories, as shown in Figure 2. These categories are divided by the original researchers of this dataset into questionnaire features and health examination features, each with their subcategories, which facilitates a structured approach to the analysis in Section 6 [59].

3.2.2. Entry Exclusion

The initial NSHAP dataset consisted of 3005 individuals. However, due to some entries containing missing responses to the questions used to calculate PHQ-9 scores, only 2763 entries were deemed suitable for analysis. Although imputation techniques can be applied to other variables within the dataset, imputing values for the PHQ-9 was deemed inappropriate, as this instrument serves as the primary measure for categorizing depression status. Excluding entries with missing PHQ-9 responses ensures that the dataset used for model training and testing is reliable and free from the biases that could arise from imputed values for this variable. Of the remaining 2763 entries, 1308 individuals were classified as having no depression symptoms (normal), while 1455 were classified as depressed.

Figure 2.

Inclusion criteria for entry and features. Abbreviations: Patient Health Questionnaire 9 (PHQ-9).

Figure 2.

Inclusion criteria for entry and features. Abbreviations: Patient Health Questionnaire 9 (PHQ-9).

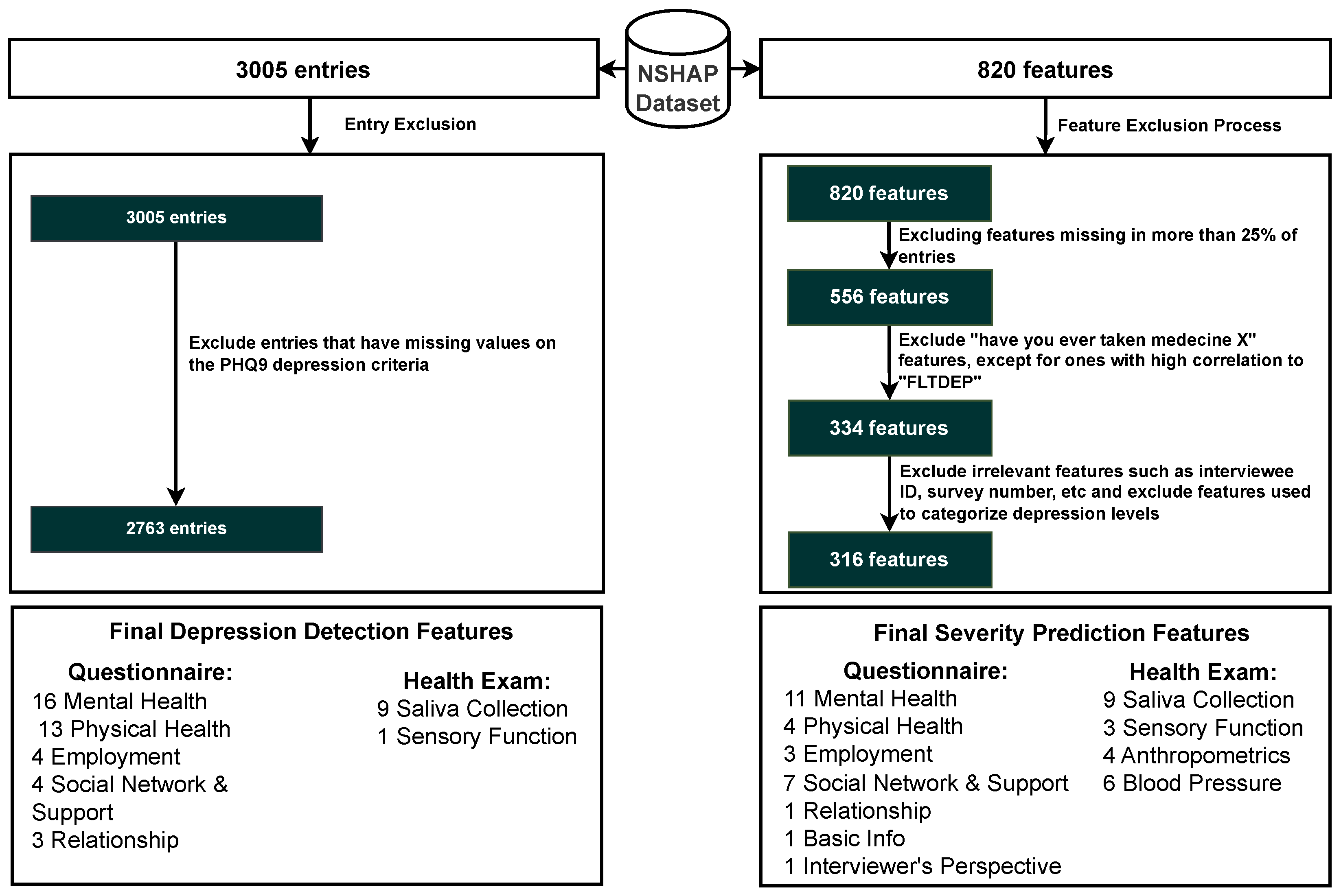

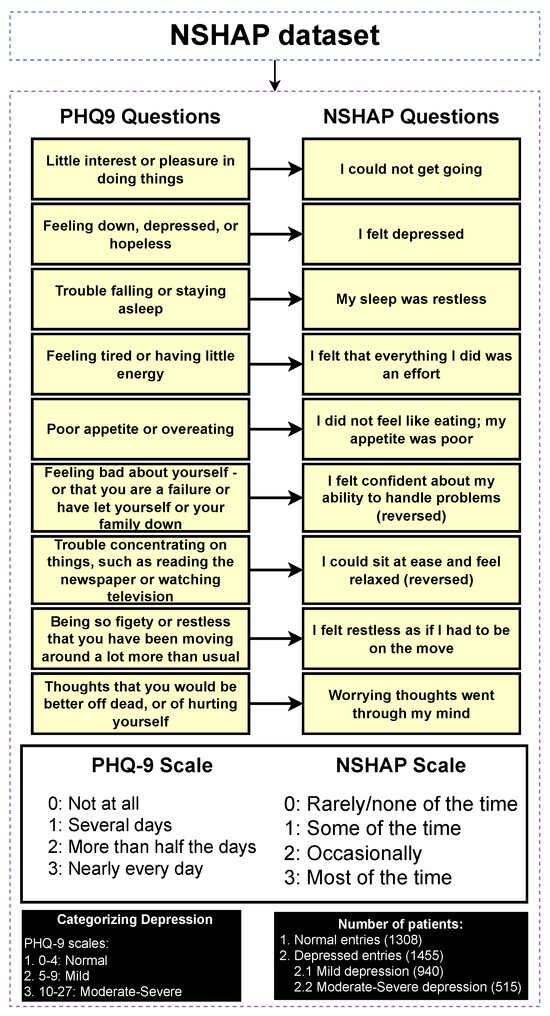

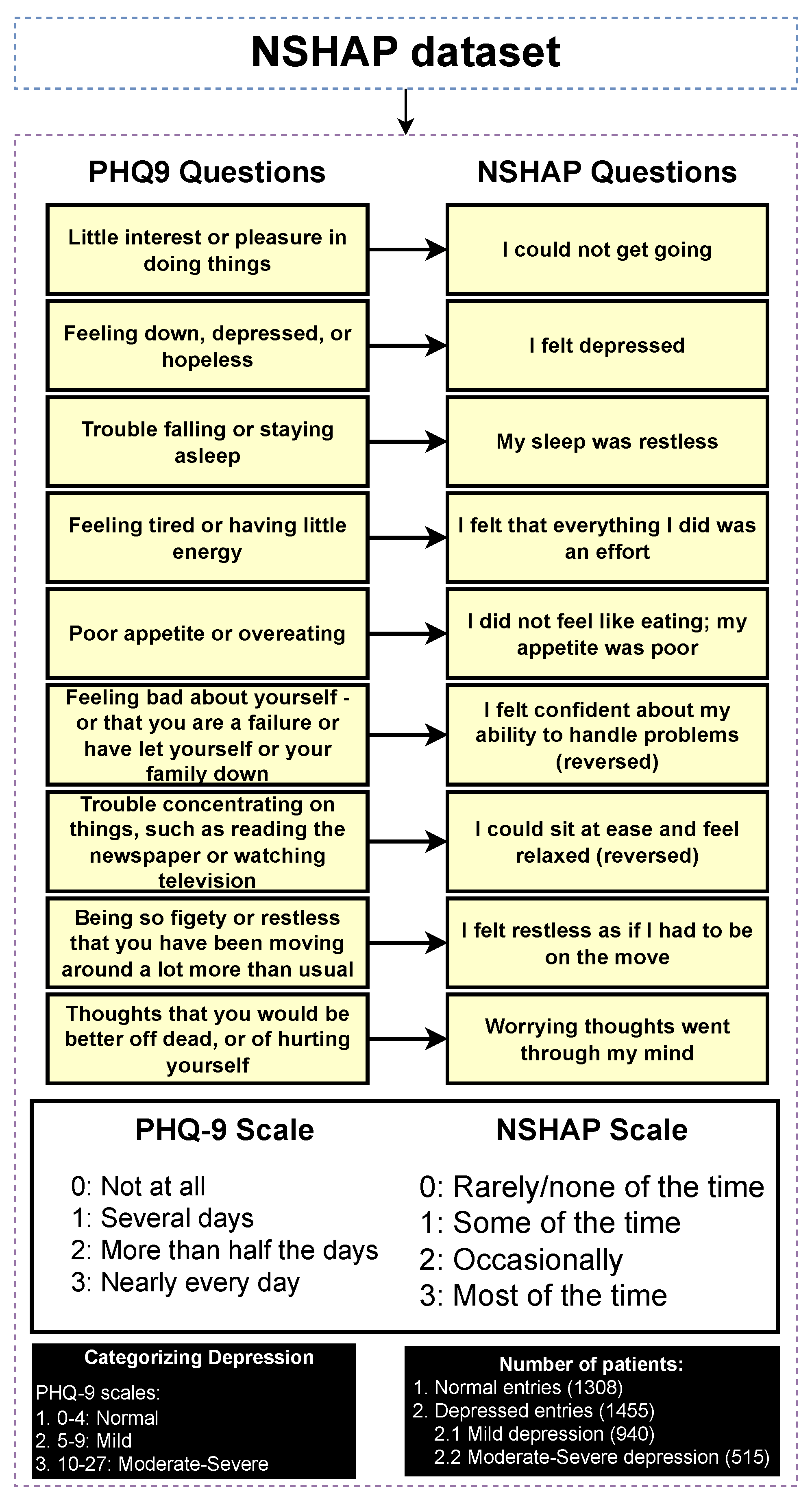

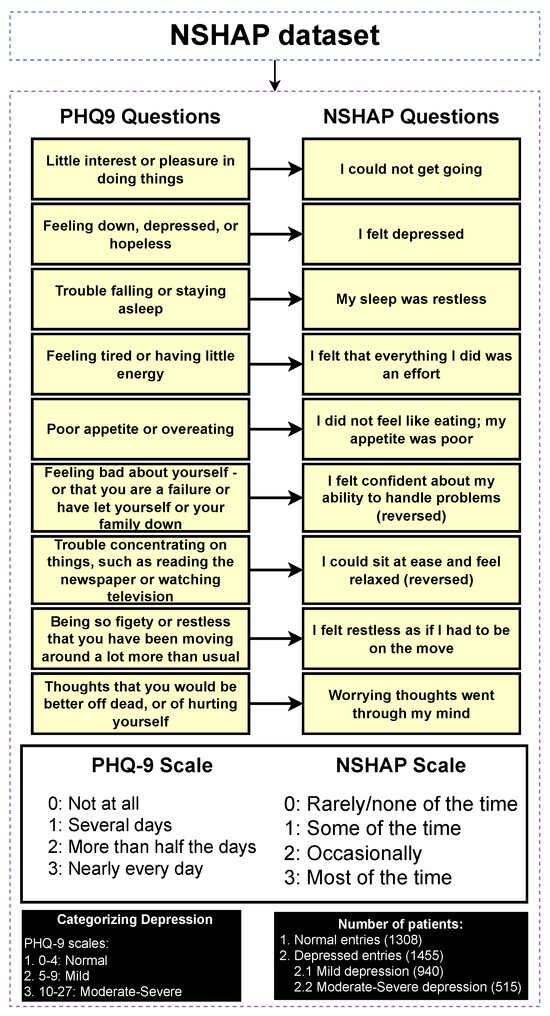

3.3. Classifying Entries Through the PHQ-9 Scale

The NSHAP dataset focuses on the general well-being of older Americans. Given its broad scope, the dataset does not include a specific depression categorization, as it covers a wide range of topics. However, it contains mental health questions that assess the mental health of participants in various categories. To address this, we can take advantage of PHQ-9, a widely recognized tool used to detect, diagnose, and measure the severity of depression. The PHQ-9 consists of nine questions that evaluate the frequency of depressive symptoms over the past two weeks, with responses ranging from “0” (not at all) to “3” (nearly every day). The total score ranges from 0 to 27, with higher scores indicating a higher severity of depression [32]. Figure 3 illustrates the mapping process in which each PHQ-9 question is matched to the corresponding questions of the NSHAP dataset that are as similar as possible. The response options (0–3) are aligned with the PHQ-9 scale, allowing for a coherent categorization of entries based on the PHQ-9 framework. The categorization of the entries was performed using the following mapping: normal (0–4), mild depression (5–9), and moderate to severe depression (10–27), based on the original categorizations of PHQ-9. Subsequently, the detection layer is designed to distinguish between normal and depressed individuals, where the depressed category includes mild and moderate–severe depression. The severity prediction layer, which focuses solely on depressed individuals, differentiates between mild and moderate–severe cases. The regression layer uses the entire dataset to predict the PHQ-9 scores of individuals.

Table 1 presents the results of a chi-square test comparing a selection of categorical features between normal and depressed individuals. The results show that several features have p-values ≤ 0.001, indicating a significant association between these features and the depression categories, thereby supporting the effectiveness of the categorization process. This is consistent with intuitive expectations, as features such as “self-rated general happiness” display very low p-values, suggesting a strong correlation with depression status. Thus, the categorization approach appeared to be valid and aligned with the data. Additionally, as part of further exploratory analysis, a comparison of numerical features between depressed and normal individuals is provided in Supplementary Table S15.

Figure 3.

Categorizing entries into depression categories (normal, mild depression, moderate–severe depression).

Figure 3.

Categorizing entries into depression categories (normal, mild depression, moderate–severe depression).

Table 1.

Chi-square test on a selection of categorical features (normal and depressed).

3.4. Data Pre-Processing

After certain entries and features are excluded, the data must undergo pre-processing to ensure that they are suitable for model processing. This preprocessing includes encoding categorical labels and imputing missing values to create a complete and analyzable dataset.

3.4.1. Label Encode

There are numerical and categorical features in our tabular dataset. Since many models cannot process non-integer values, label encoding is necessary for the categorical features. Label encoding was chosen due to its simplicity and efficiency, particularly given that many of the categorical features are ordinal, such as scales for mental health questions. Additionally, for non-ordinal features, most are binary, making label encoding a suitable and straightforward choice.

3.4.2. Data Imputation

Certain entries in the dataset exhibit missing values in various features. To address these gaps, median imputation within their specific categories has been identified as the most effective method based on empirical trials. This technique ensures that the imputed values accurately reflect their respective categories, thereby enhancing the overall integrity of the dataset. Imputing missing values is important as these features contribute to the model’s robustness and generalizability. Without imputation, the model could be forced to discard incomplete data, potentially leading to reduced sample size and introducing bias. This could negatively impact the model’s performance, especially in capturing key patterns and relationships within the data.

3.4.3. Data Preparation

This section covers data splitting, data balancing, and data normalization. These are essential steps in developing a robust and accurate ML model.

3.4.4. Data Splitting

The dataset is divided into 70% for training and 30% for testing. This separation is conducted prior to balancing and normalization to prevent data leakage. The division process is repeated 10 times, and the average results, along with standard deviations, are reported to ensure the generalization of the model.

3.4.5. Data Balancing

The dataset is relatively balanced, with the detection layer showing a ratio of approximately 1.11:1 (depressed to normal) and the severity prediction layer showing a ratio of approximately 1.83:1 (mild to moderate–severe). However, data balancing was still performed to ensure the model is both robust and fair, preventing bias towards the more prevalent class. To achieve this, the Synthetic Minority Over-sampling Technique (SMOTE), a method that generates synthetic samples for the minority class to balance the dataset, was applied to the training data alone [60]. Additionally, random undersampling was performed on the test data to ensure that accuracy metrics reflect a fair distribution of categories, allowing for an accurate assessment of the model’s performance across both categories.

3.4.6. Data Normalization

After splitting and balancing the dataset, normalization is performed using the min–max scaling technique to transform the data into a range with a mean of zero and a standard deviation of one. The scaler is fitted on the training data and subsequently applied to the testing data without refitting to prevent data leakage issues.

3.5. Depression Detection and Severity Prediction Layers

In this stage, a comparative performance analysis was conducted across various ML models. Initially, classical ML models were employed, including Decision Tree (DT), Logistic Regression (LR), Naive Bayes (NB), K-Neighbors (KN), Multilayer Perceptron (MLP), and Support Vector Classification (SVC). Following this, static ensemble models such as Random Forest (RF), XGBoost (XGB), Gradient Boosting (GB), AdaBoost (AB), CatBoost (CB), LightGBM (LGBM), and Voting Classifier (Vot) were evaluated. Finally, state-of-the-art DES algorithms were evaluated, including KNORAE, KNORAU, KNOP, DESMI, METADES, DESKNN, and DESP, along with their FIRE-enhanced versions: FIRE-KNORA-U, FIRE-KNORA-E, FIRE-METADES, FIRE-DESKNN, FIRE-DESP, and FIRE-KNOP. The FIRE framework enhances DES by focusing on classifiers capable of accurately distinguishing between ambiguous samples of different classes (frienemies) in indecision regions, thus improving overall classification performance [56].

While many classical ML models and static ensemble techniques have been applied to the field of depression detection and severity prediction [16,18,61], there has been limited exploration of DES. Static ensemble models generally outperform individual base classifiers [28], which is why they are often preferred. However, DES differs by selecting the most competent base classifiers for each new test sample in real-time, potentially improving accuracy [28]. Despite the potential of dynamic ensemble models [26,62,63], they remain underexplored in depression detection, particularly within the NSHAP dataset, where they have not, to our knowledge, been applied. Therefore, this study proposes the use of DES to improve the performance of the AI model in this field. We comprehensively evaluate classical, static, and dynamic models using default hyperparameters, optimized hyperparameters, with feature selection, and without feature selection, providing a detailed performance comparison.

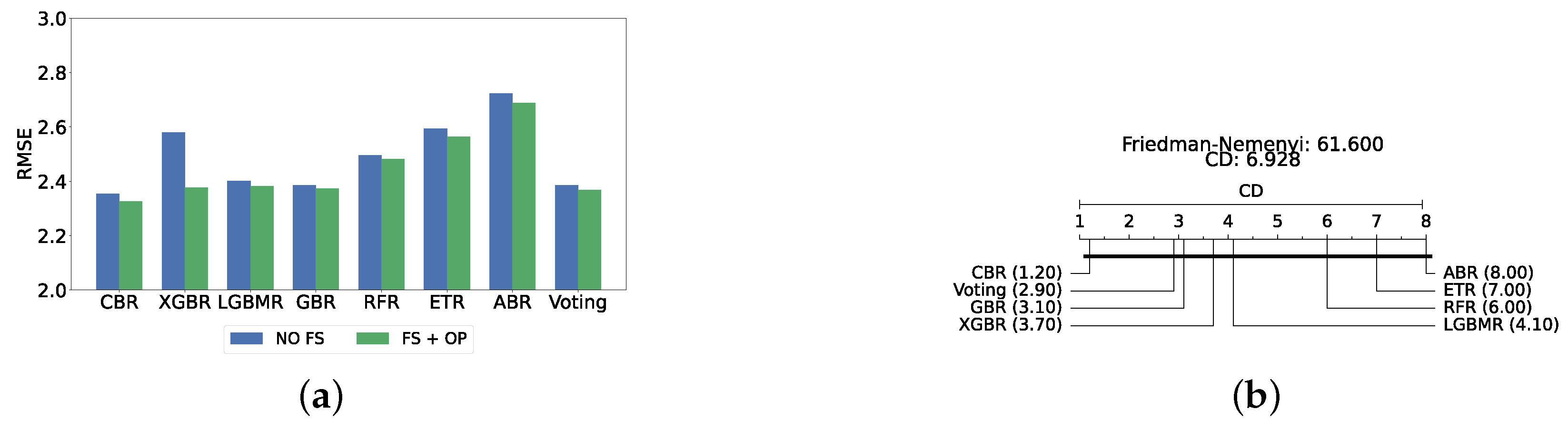

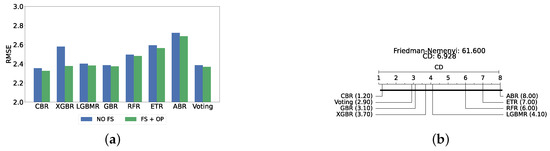

3.6. PHQ-9 Scale Prediction Layer

The confirmation layer in the proposed framework serves as a regression layer aimed at predicting individual PHQ-9 scores. As its primary function is to provide additional validation and robustness to the predictions made by the main layers, only static ensemble regression algorithms are employed at this stage. The regression models utilized in this layer include CatBoost Regressor (CBR), XGBoost Regressor (XGBR), LightGBM Regressor (LGBMR), Gradient Boosting Regressor (GBR), Random Forest Regressor (RFR), Extra Trees Regressor (ETR), and AdaBoost Regressor (ABR).

3.7. Explainable Artificial Intelligence

XAI fosters transparency, trust, and ethical practices in AI systems. Transparent AI is essential for users and stakeholders to understand and trust decision-making processes, particularly in high-stakes domains such as healthcare. XAI addresses this need by providing interpretable insights into how AI models arrive at their predictions, enabling users to comprehend and trust the technology’s outcomes. This transparency is also crucial for ensuring ethical AI practices, such as fairness, accountability, and responsibility. It is challenging to uphold these ethical principles without clear insights into how AI models function. Moreover, XAI facilitates regulatory compliance, with frameworks such as the General Data Protection Regulation mandating explanations for decisions made by automated systems, ensuring the transparency necessary for legal adherence [64]. In the medical domain, XAI is particularly indispensable. According to Chaddad et al. (2023), adopting interpretable and transparent AI systems in healthcare is necessary to gain the trust of medical professionals and patients. XAI techniques such as feature visualization, saliency maps, and DTs have been successfully employed to enhance the interpretability of AI models in diagnostics, treatment planning, and patient management. This transparency is crucial to effectively integrate AI into clinical practice and ensure that clinicians can trust and rely on AI-assisted decision-making [65]. In mental health, XAI is gaining traction as a tool to enhance the precision and accessibility of mental health assessments and interventions. Byeon (2023) highlights how XAI, particularly through methods like SHapley Additive exPlanations (SHAP) and Local Interpretable Model-Agnostic Explanation (LIME), is being used to predict depression and assist in expert decision-making. By improving the interpretability of AI models in psychiatric applications, XAI plays a crucial role in increasing the acceptance of AI in mental health, particularly in identifying high-risk individuals and guiding treatment decisions [66].

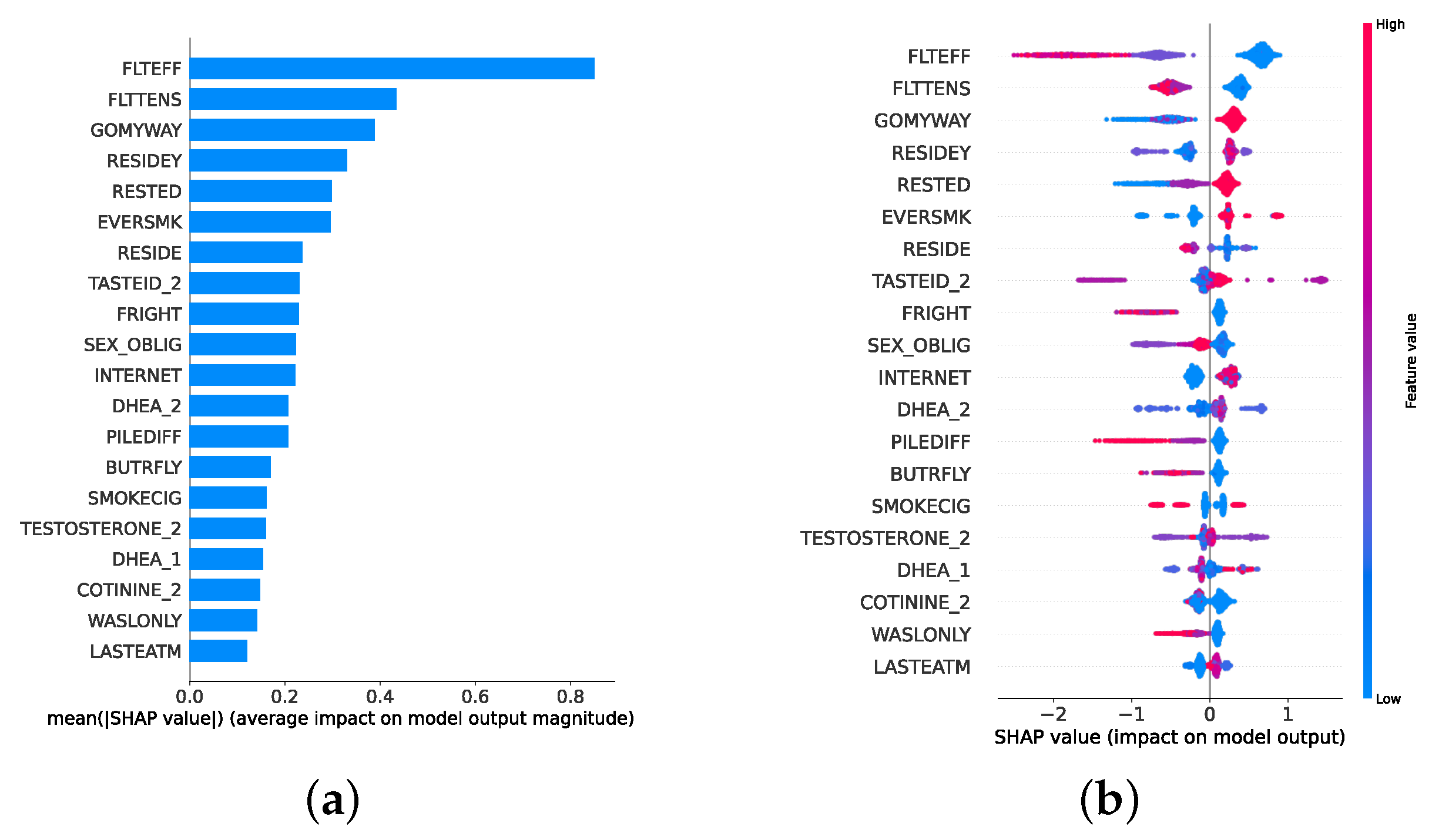

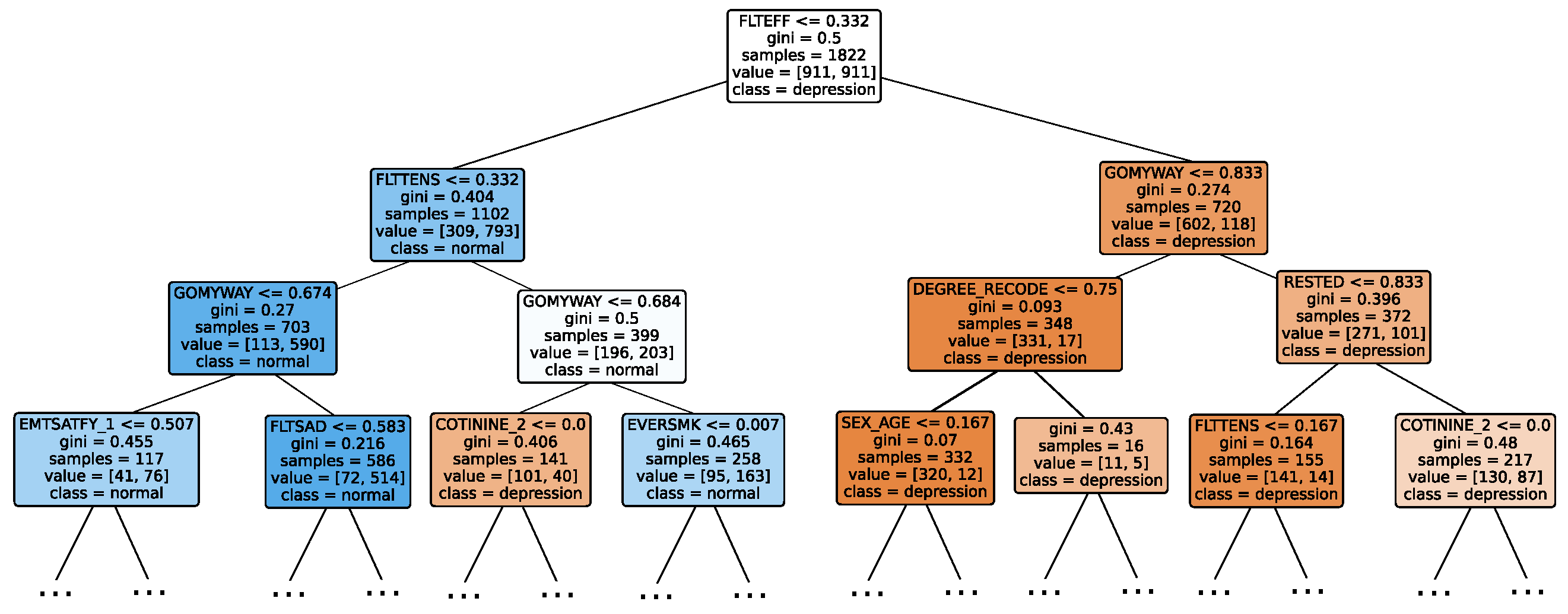

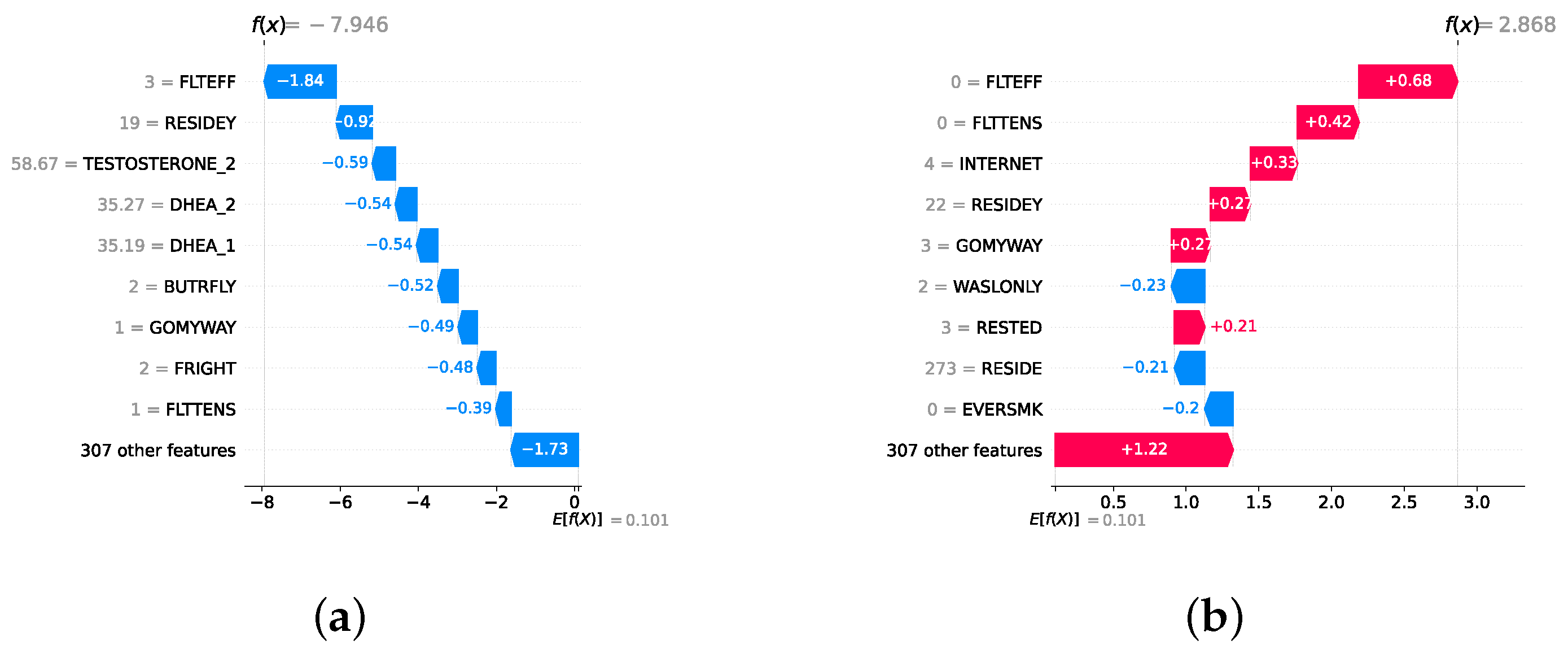

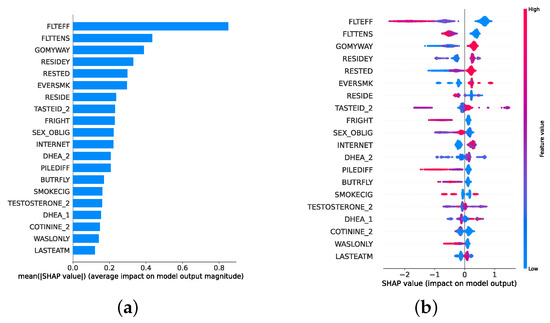

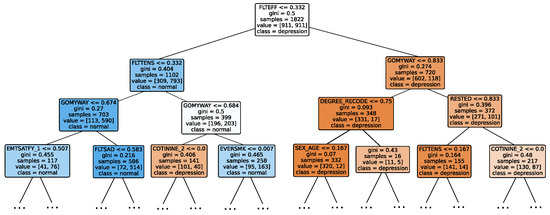

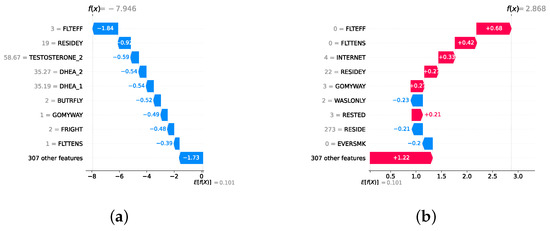

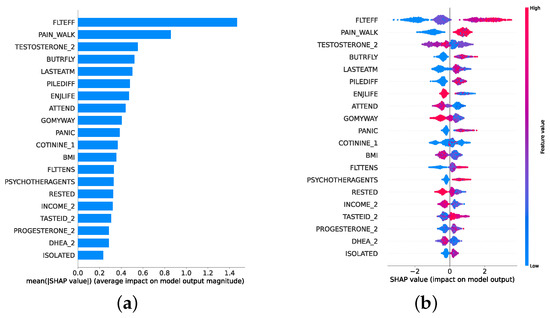

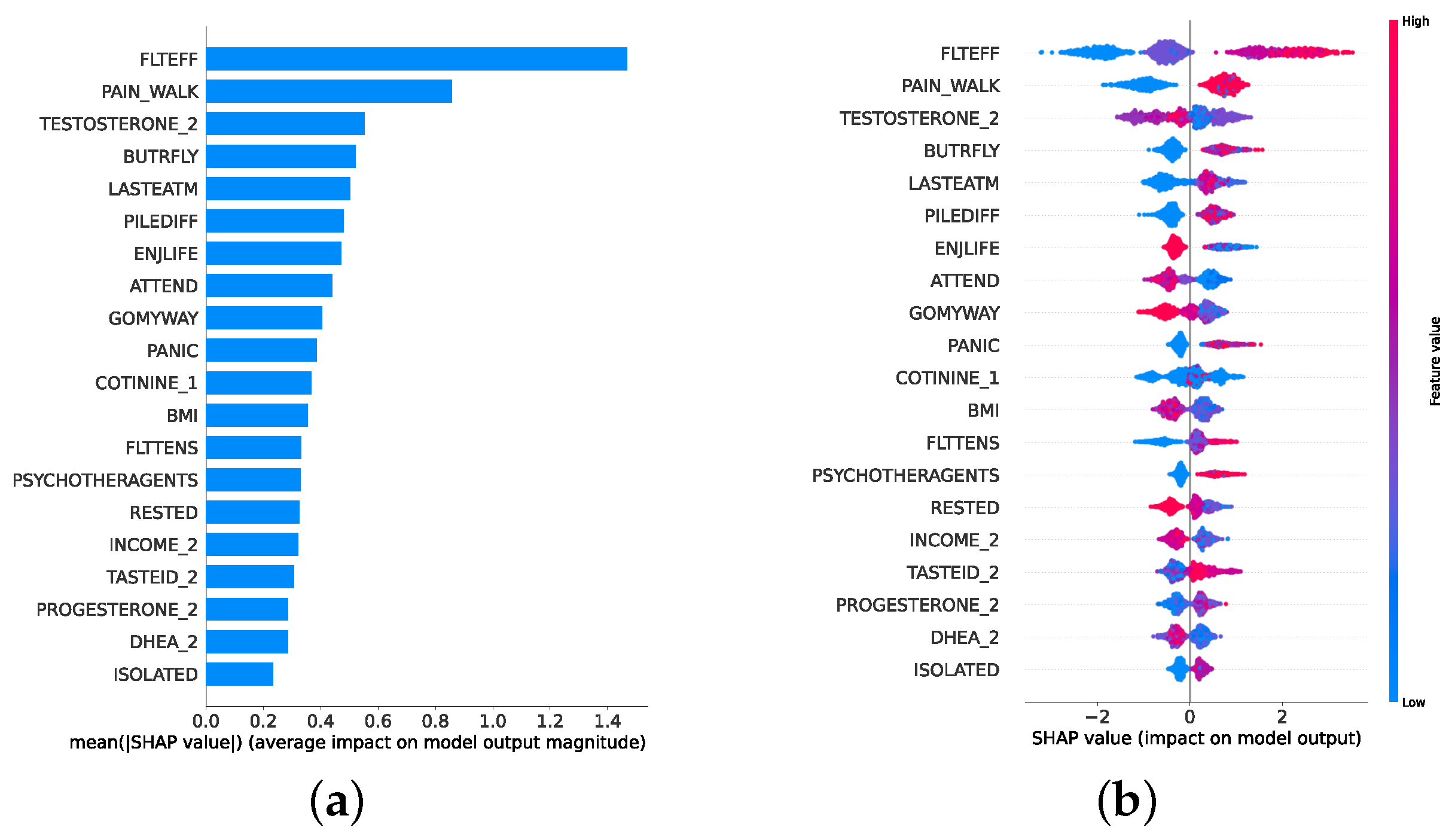

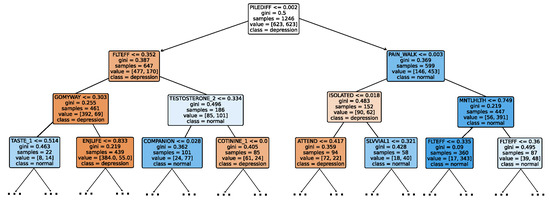

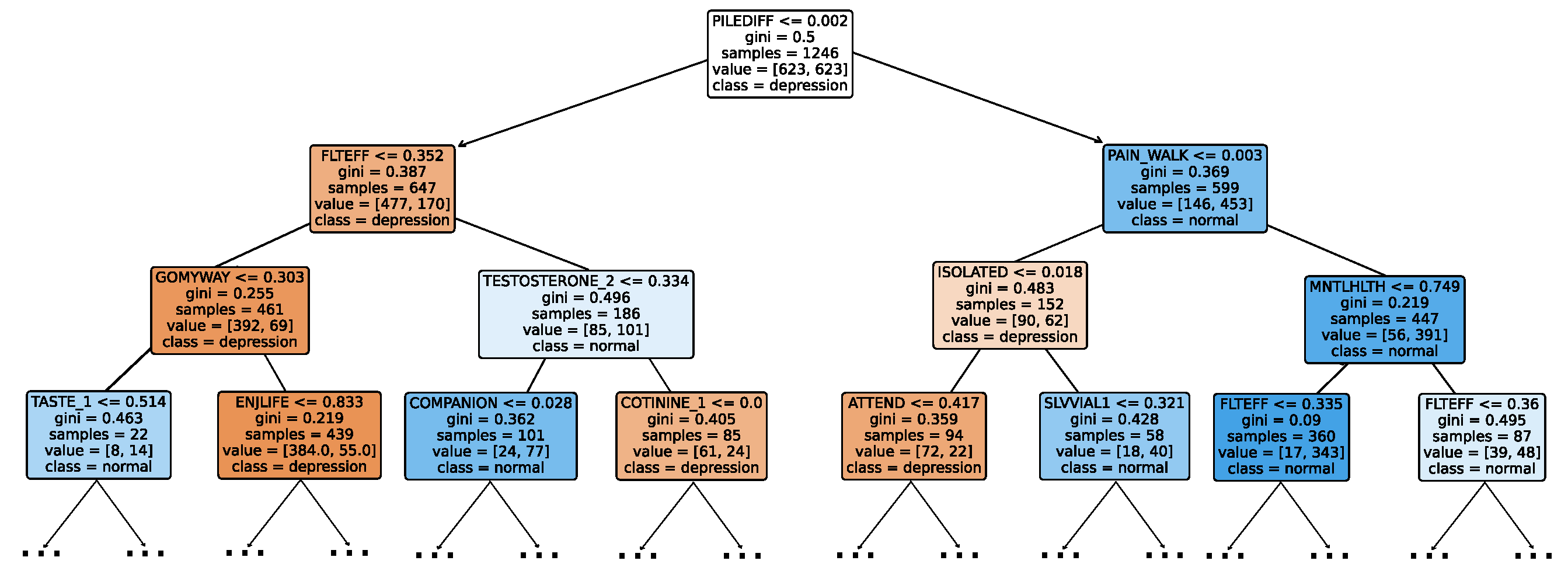

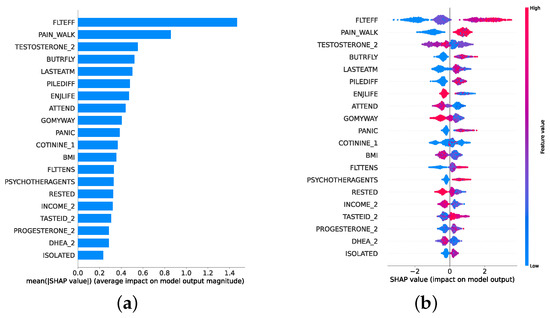

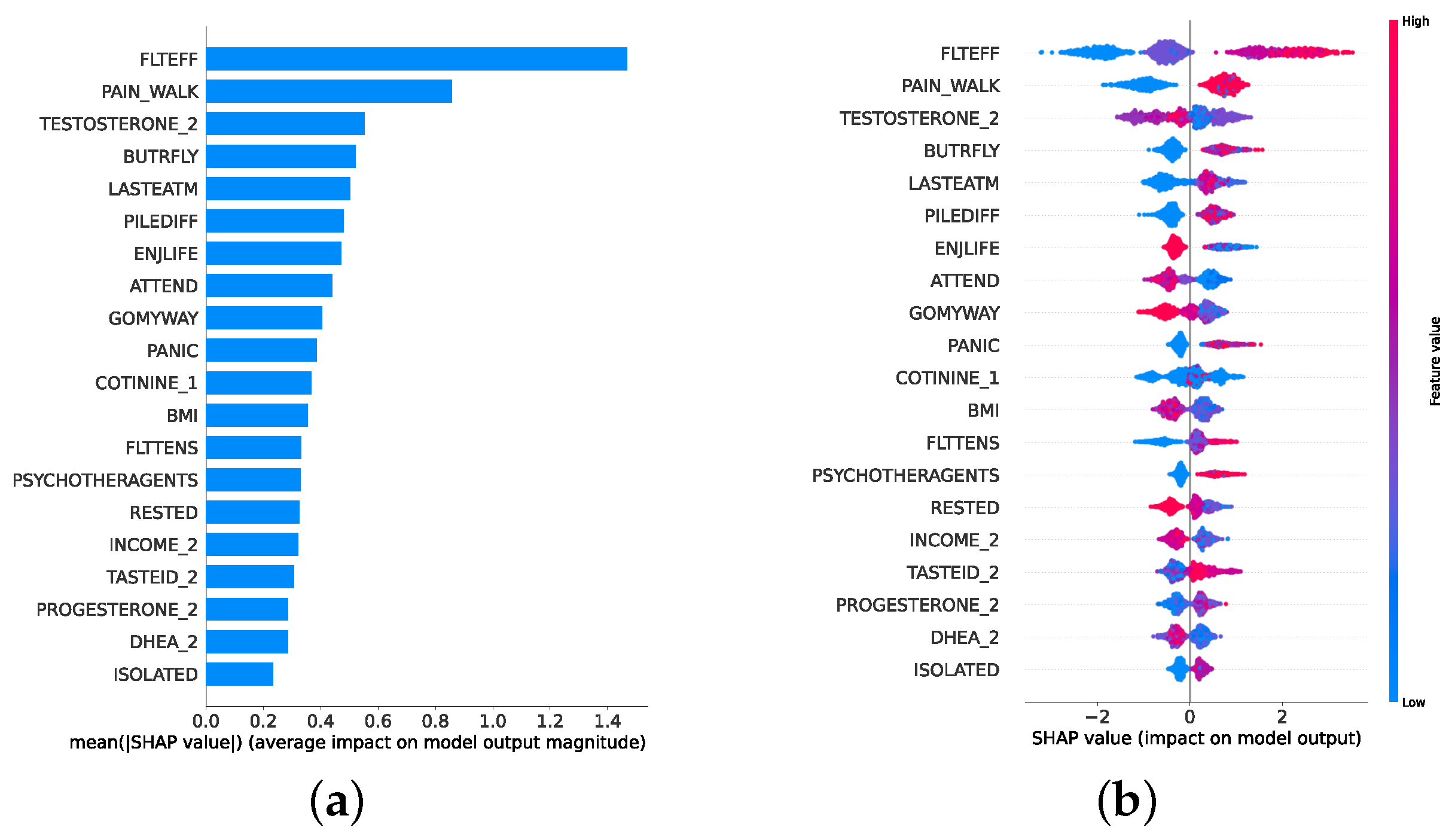

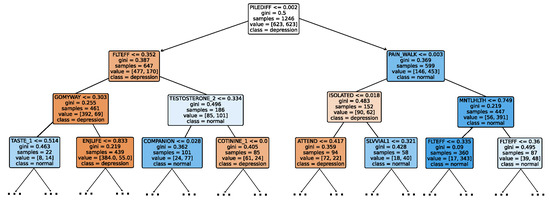

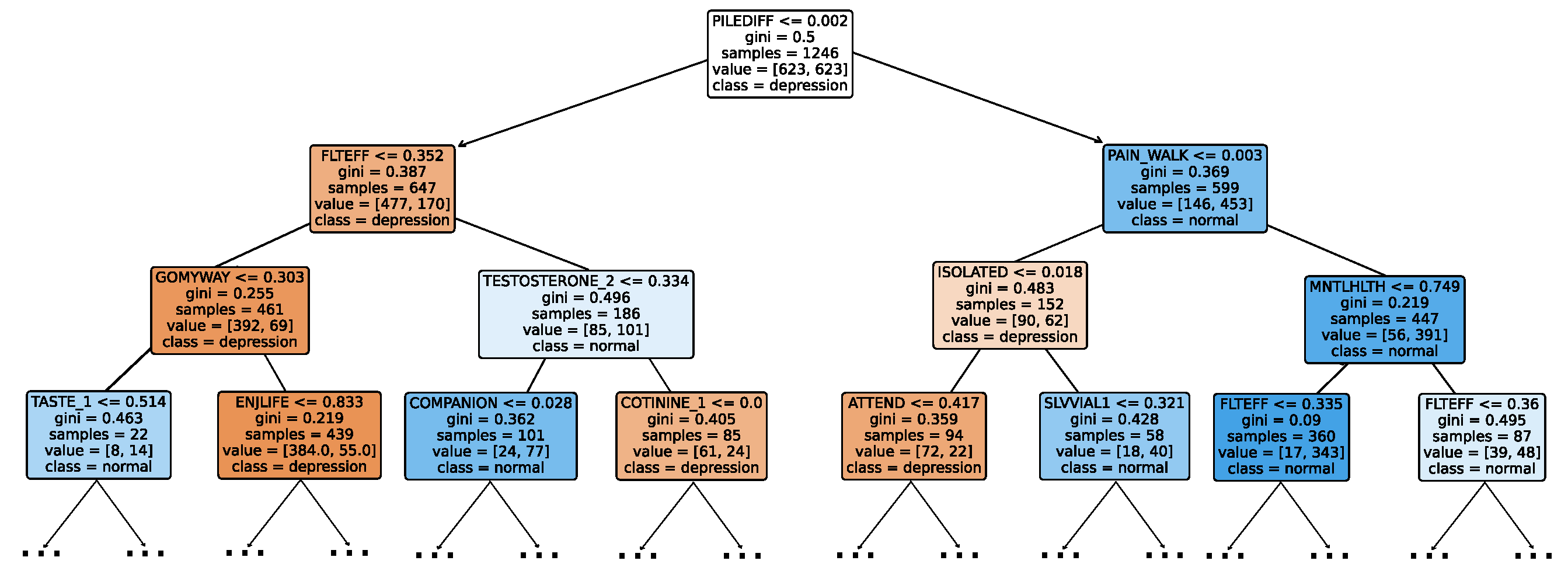

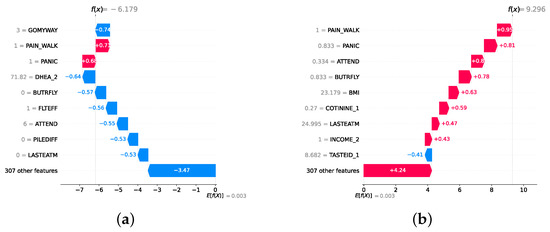

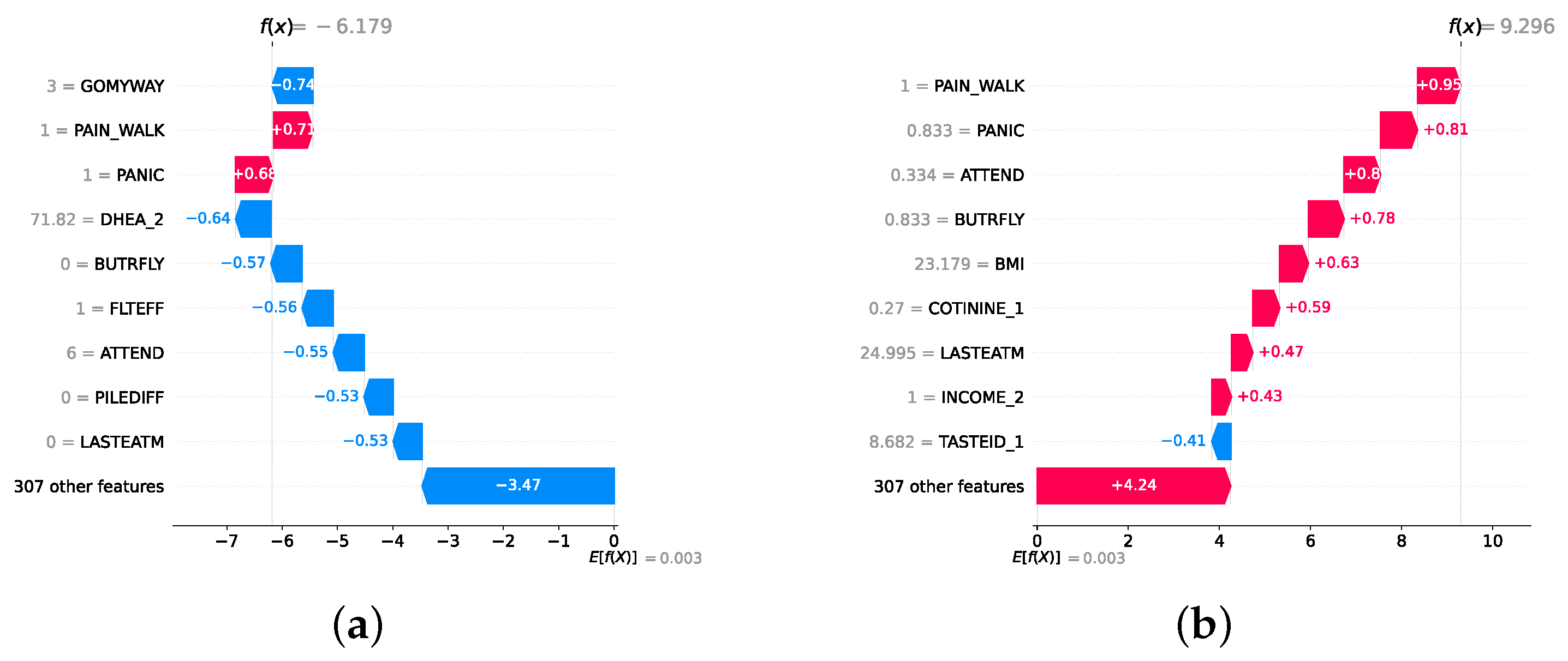

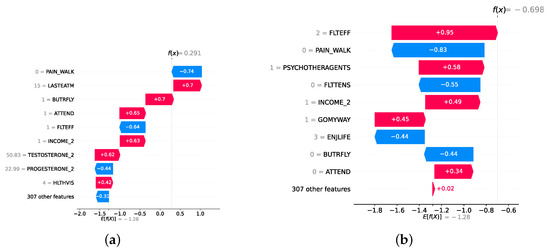

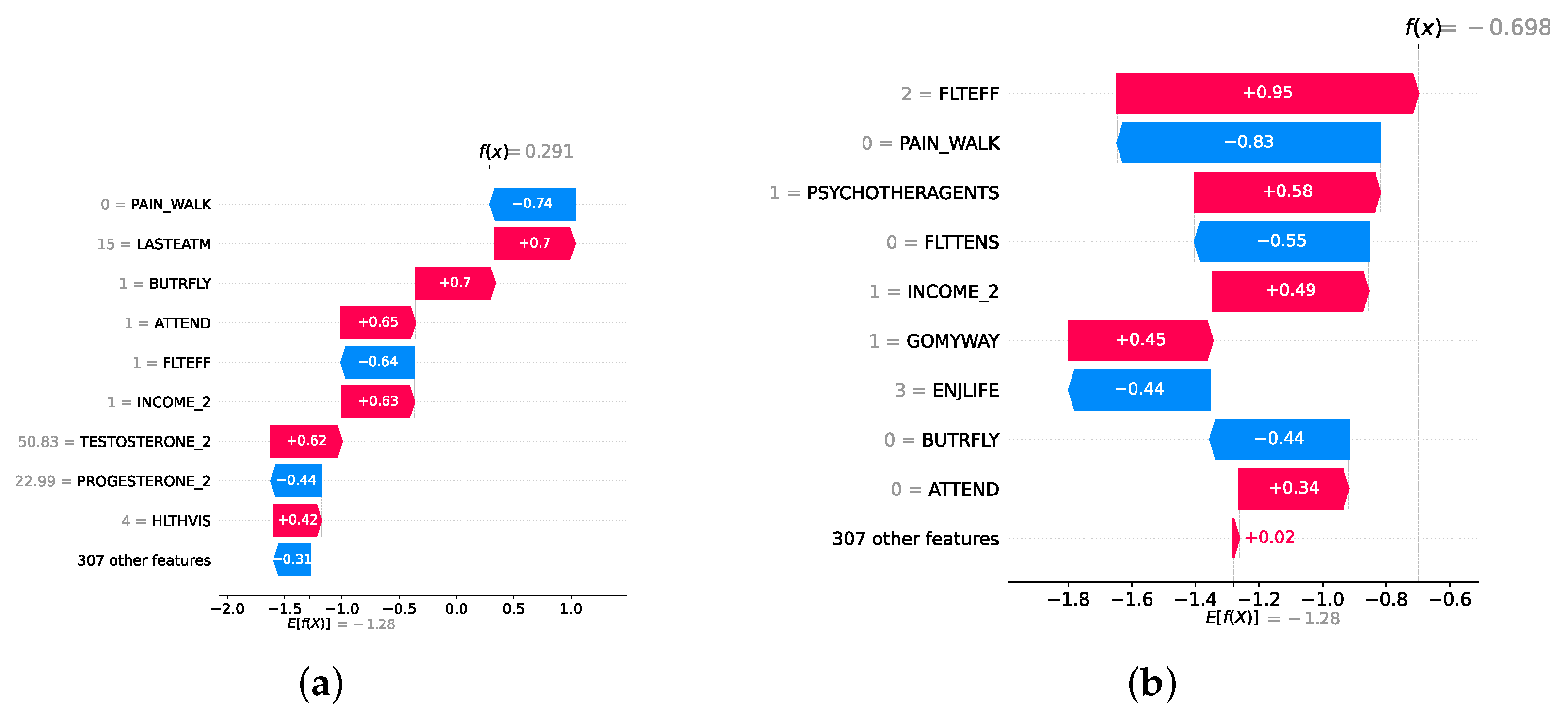

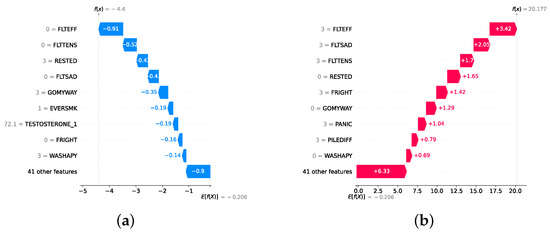

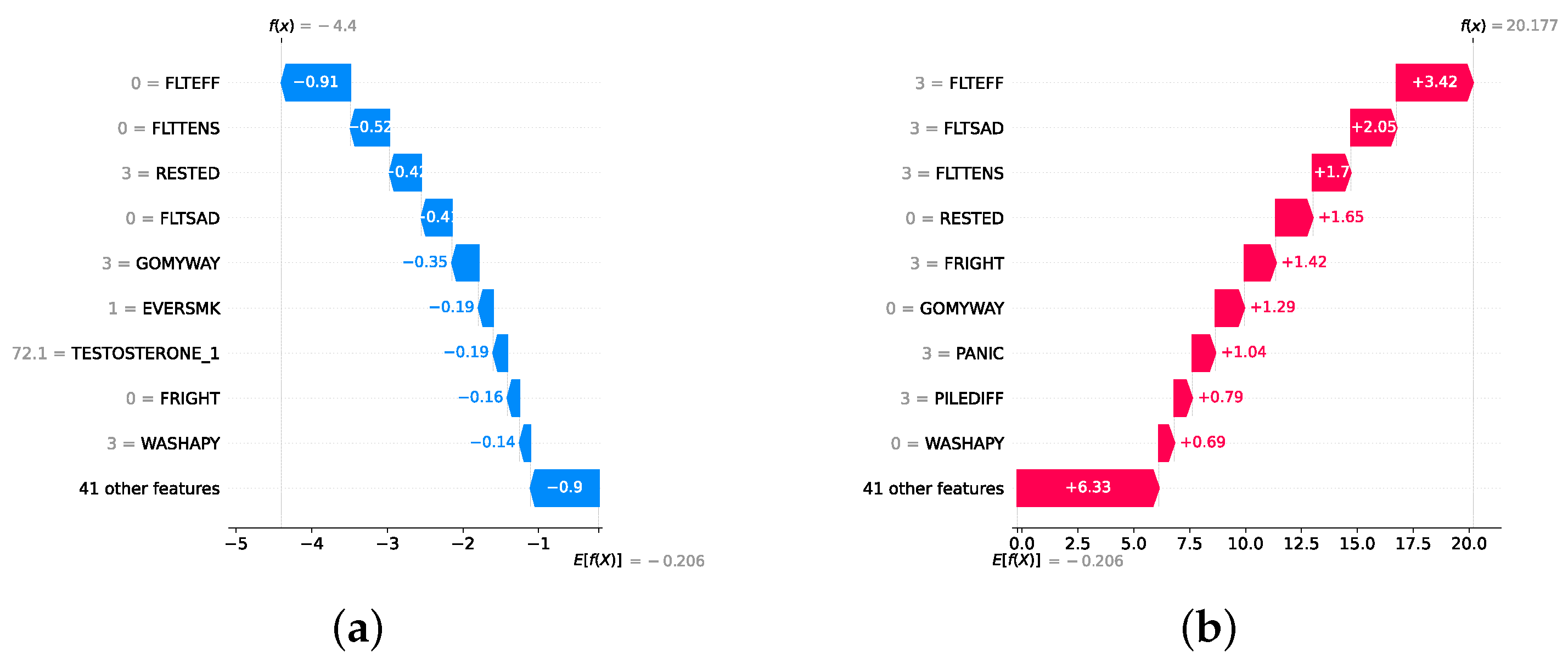

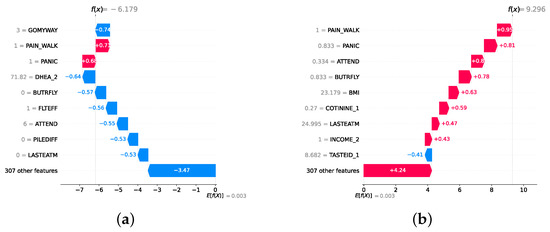

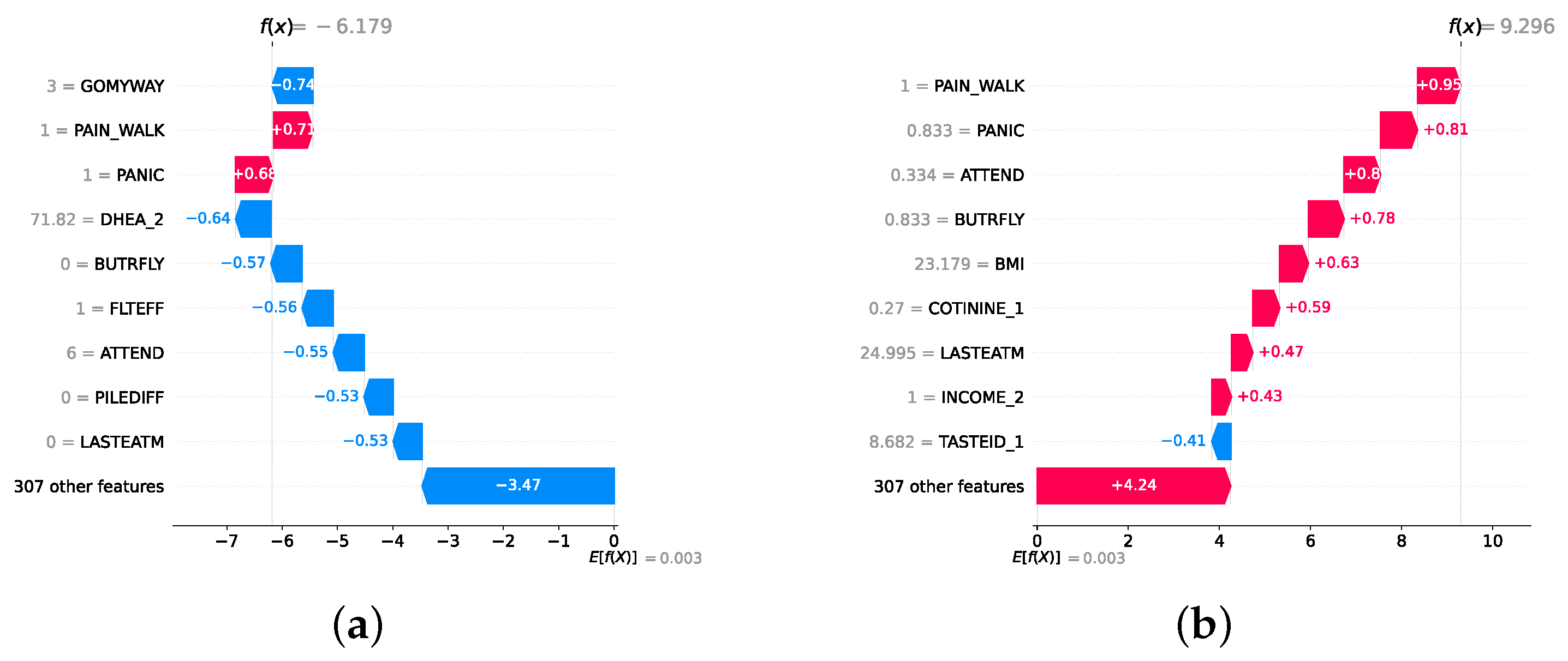

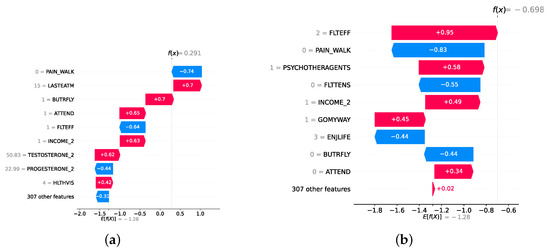

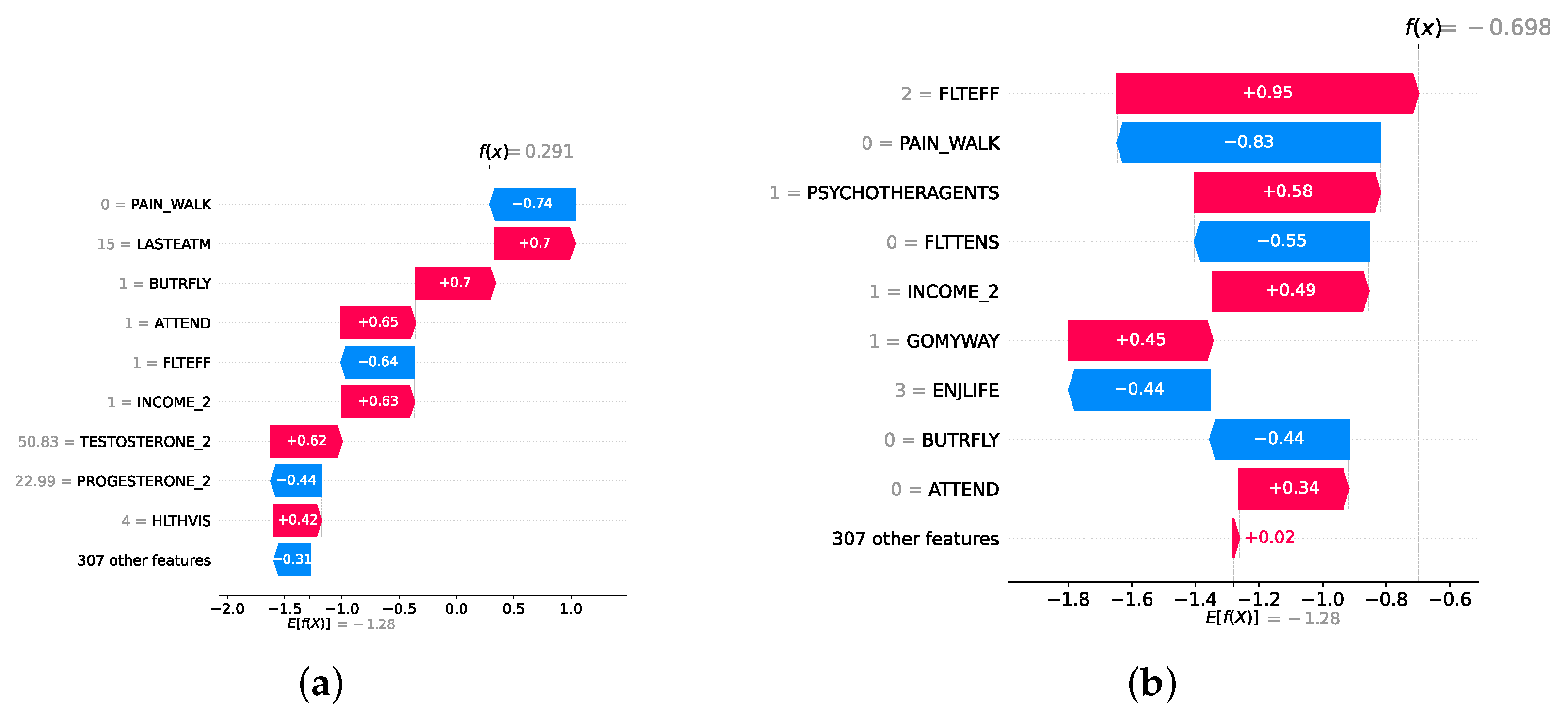

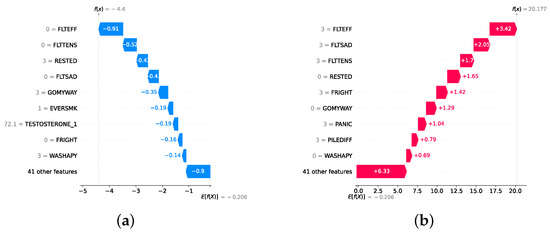

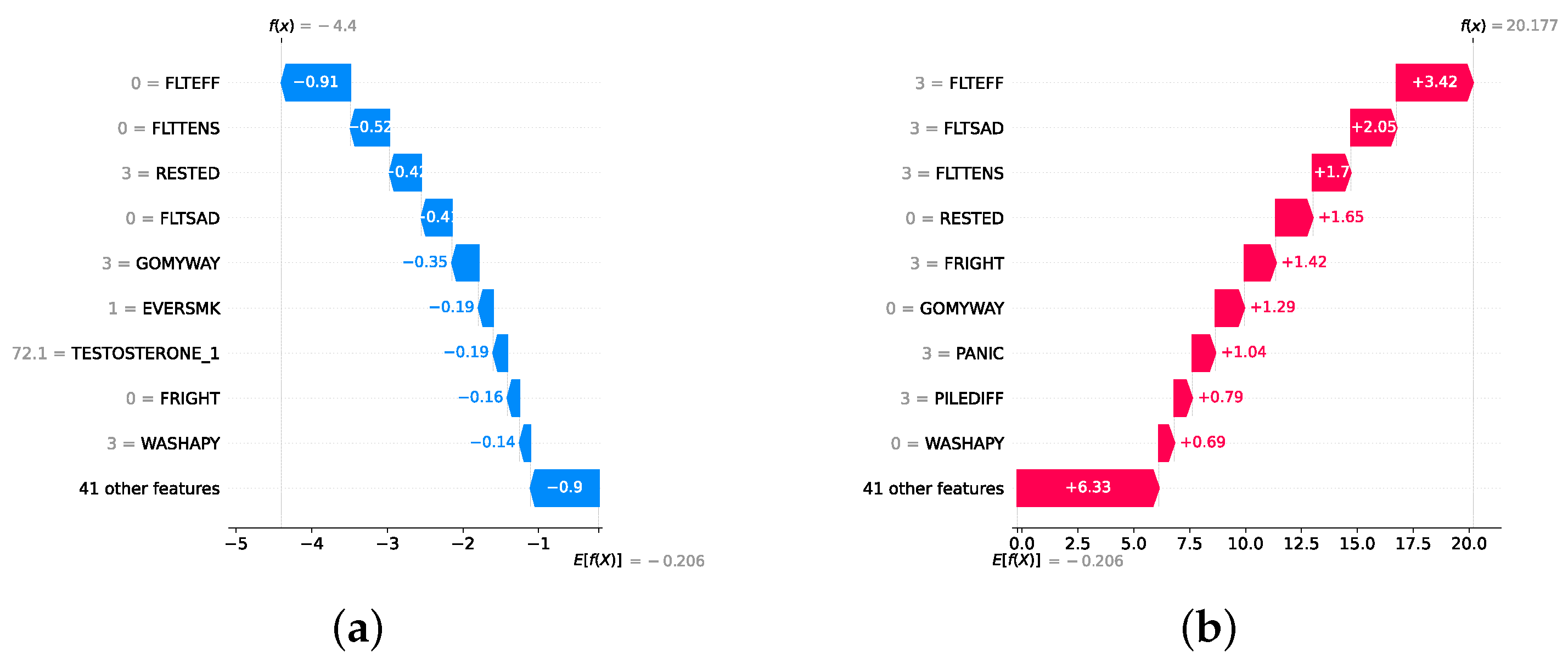

Given the growing importance of XAI in promoting ethical AI development, ensuring regulatory compliance, and fostering trust, its incorporation into ML applications is essential. Explainability techniques provide deeper insights into how AI models generate predictions, building user confidence in the models’ outputs. Specifically, XAI allows for a clear understanding of the factors that drive the model’s predictions, particularly in identifying depression and assessing its severity in different stages of the framework. In the proposed framework for depression detection and severity prediction, various explainability techniques were applied to visualize and interpret the best-performing models at each layer. SHAP summary plots were generated to offer a global view of feature importance, detailing how specific features influence the model’s overall predictions. SHAP beeswarm plots complemented this by showing the distribution of feature impacts across individual instances. In addition, DT visualizations were employed to explain the hierarchical structure of decision-making, with decision-tree rule paths providing step-by-step insight into how individual predictions are made. For more instance-specific analysis, waterfall plots were created to break down feature contributions for both depression detection and severity prediction. These explainability techniques provide a comprehensive understanding of the key factors that influence the predictions of the model, providing critical information on the presence and severity of depression. By improving transparency and trust in the model, XAI facilitates the responsible deployment of AI systems in clinical and real-world settings, ensuring that these tools can be used confidently by healthcare professionals and end users alike.

4. Experimental Setup

The experiments in this study were performed on a system with the following specifications: an AMD Ryzen 9 5900HS CPU running at 3.3 GHz and 16 GB of RAM. The operating system used was Windows 11. The software environment included Python 3.12.3 with key libraries such as Imbalanced-learn 0.12.2, Numpy 1.26.4, Orange3 3.37.0, Pandas 2.2.2, Scikit-learn 1.4.2, and Matplotlib 3.8.4. The code of all experiments is available at https://github.com/InfoLab-SKKU/DES4Depression (accessed on 25 September 2024) and data are available at https://doi.org/10.3886/ICPSR20541.v10 (accessed on 25 April 2024).

4.1. Performance Evaluation Metrics

To assess the performance of the models for the detection and severity prediction layers, a comprehensive evaluation using various performance metrics was conducted, including accuracy, precision, recall, F1-score, and AUC. For regression models, metrics include RMSE, MAE (Mean Absolute Error), and (R-squared) to evaluate regression models.

4.2. Experimental Roadmap for the Detection and Severity Prediction Layers

In both the detection and severity prediction layers, three sublayers were implemented sequentially: classical ML, followed by static ensemble ML, and then dynamic ensemble models. These sublayers were applied in succession, with the best-performing models from each preceding sublayer used for feature selection in the following layer. Initially, classical ML models were employed, utilizing the top 200 features identified through correlation analysis. The most optimal classical ML model was then selected for feature selection for the static ensemble layer, narrowing down to the top 150 features. Subsequently, the best static ensemble model was used to identify the top 50 features of the dynamic ensemble layer. A list of all classical ML models, static ensemble models, and dynamic ensemble models can be found in Section 3.5. The sublayers of the classical models and static ensemble models underwent three testing conditions: without feature selection and without hyperparameter optimization, with feature selection but without hyperparameter optimization, and with both feature selection and hyperparameter optimization. The latter condition was used to determine the “best model” for feature selection in the subsequent layer. For the dynamic ensemble sublayer, only one testing condition was implemented, where all base classifiers were optimized, and feature selection was made. All base classifiers utilized within the dynamic ensemble sublayer correspond to the classifiers implemented in both the classical and static ensemble approaches. These base classifiers have undergone hyperparameter optimization to ensure optimal performance when incorporated into the dynamic ensemble sublayer. For the purpose of a fair comparison, the dynamic ensemble methods themselves are employed with default parameter settings. Hyperparameter optimization of the base classifiers was based on Bayesian optimization (Bayes search). It is important to note that hyperparameter optimization was performed subsequent to the feature selection step. The search spaces for optimizing hyperparameters of classical models can be found in Supplementary Listing S1, while the search spaces for optimizing hyperparameters of static ensemble models can be found in Supplementary Listing S2. Ultimately, the model demonstrating the highest performance was selected and further enhanced with XAI. Note that it is challenging to obtain feature importance with DES methods. Hence, the best classical ML or static ensemble model will be used to generate XAI.

4.3. Experimental Roadmap for the Scale Prediction Layer

A static ensemble approach will be employed utilizing several static ensemble regressors, a list of which is stated in Section 3.6. The study will be conducted in two phases: initially without feature selection and hyperparameter optimization, and subsequently with the implementation of both feature selection and hyperparameter optimization. The best model was then used in XAI. Feature selection was completed by the best model from the detection layer, while hyperparameter optimization was completed through Bayes search. The search spaces for optimizing hyperparameters of regressors can be found in Supplementary Listing S3.

4.4. Experimental Roadmap for XAI

Model explainability is conducted separately for all three layers. For the two primary layers—detection and severity prediction—both global explainability and local-instance explainability methods are employed. In the case of global explainability, the best-performing model from either the classical or static ensemble models is selected to generate SHAP summary plots and SHAP beeswarm plots. These visualizations provide insights into feature importance, illustrating how individual features contribute to the presence of depression in the detection layer or to the severity of depression in the severity prediction layer. Additionally, a DT visualization is created to demonstrate the decision-making process of the DT model. For local-instance explainability, waterfall plots are utilized to illustrate the model’s decision-making process for individual cases. In the detection layer, waterfall plots are generated to compare normal versus depressed individuals, while in the severity prediction layer, they compare mild versus moderate–severe depression cases. Furthermore, several sequential decision-making paths from a DT will be shown for both layers to see how a DT makes its decision when predicting the presence of depression or the severity of depression in an individual. In the confirmation layer (regression), only local-instance explainability is applied, specifically through the use of waterfall plots. These plots are generated for two extreme cases: a PHQ-9 score of 0 (indicating no depression) and a PHQ-9 score of 27 (indicating severe depression), providing detailed insights into the factors influencing the lowest and highest possible depression scores.

5. Results and Discussion

5.1. Results of Depression Detection

This section provides a comprehensive analysis of the outcomes derived from the detection layer experiments (i.e., normal individuals against depressed individuals). The discussion is structured into three subsections: classical ML models, static ensemble models, and dynamic ensemble models. The test results are collected and reported for all models. A 10-fold cross-validation is used for models’ optimization to ensure the robustness and stability of the results, and a holdout test set is employed to measure the generalization performance. The test results are presented as mean ± standard deviation to accurately convey the variability in the performance of the models. Accuracy and F1-score are utilized as primary metrics for the evaluation, providing a consistent baseline for comparison across different models. The findings of these evaluations not only highlight the effectiveness of each model but also inform the selection of features and optimization strategies for subsequent layers in the experimental roadmap.

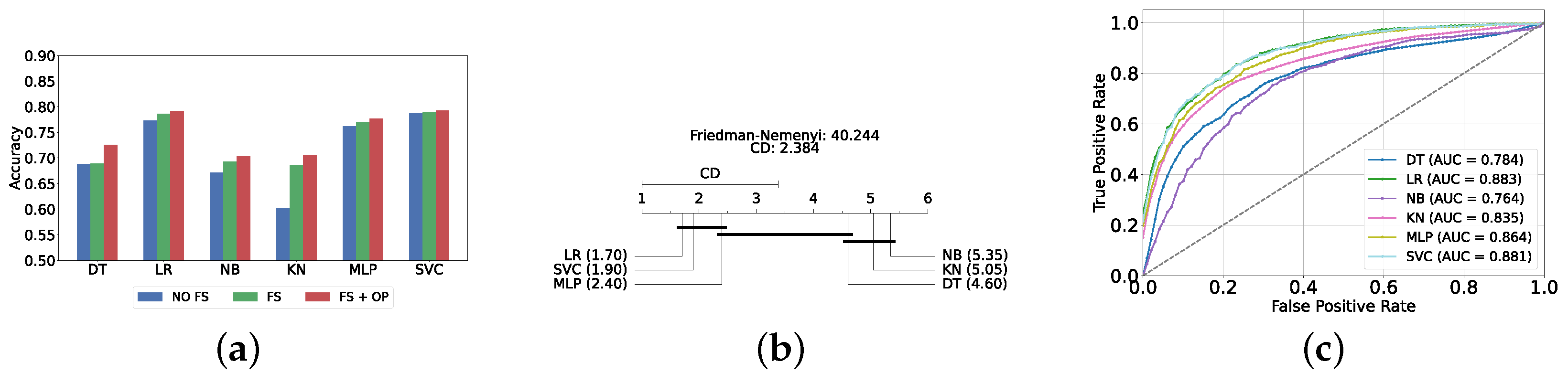

5.1.1. Classical ML Models

The performance of classical ML models is evaluated under three distinct conditions: without feature selection and hyperparameter optimization (refer to Supplementary Table S1), with feature selection only (refer to Supplementary Table S2), and with both feature selection and hyperparameter optimization (refer to Table 2). The feature selection process for this layer utilizes the top 200 features identified via the correlation operation. As anticipated, the performance of all classical ML models improved following the implementation of feature selection and hyperparameter optimization. The SVC model achieved the highest accuracy and F1-score (i.e., 81.47% ± 1.25% and 81.45% ± 1.24%, respectively).

Table 2.

Classical classifier results with feature selection and hyperparameter optimization (detection layer).

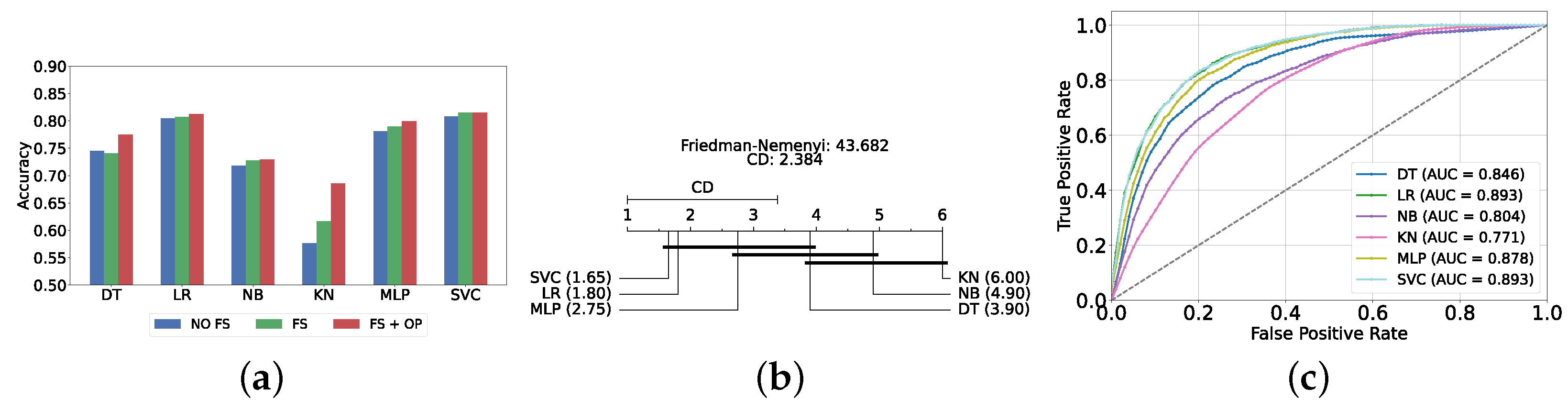

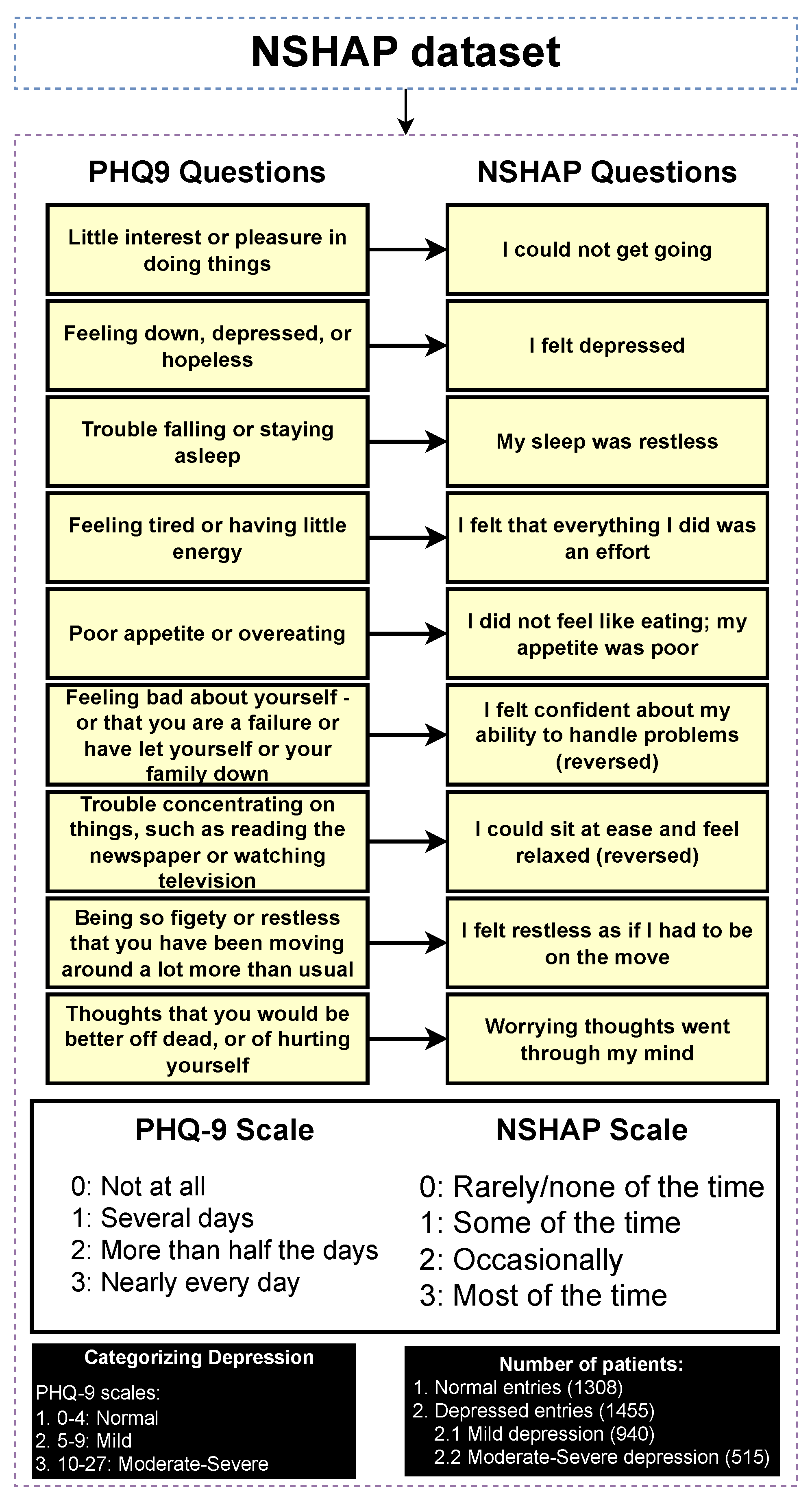

Figure 4a provides a summary of this stage, illustrating the performance of classical ML models with and without the application of feature selection and hyperparameter optimization steps. As observed, the performance of all models, except for DT, improves after feature selection and further increases following hyperparameter optimization (including DT). This demonstrates the significance of these steps in optimizing model performance. Figure 4b shows the Friedman–Nemenyi test, revealing that SVC, LR, and MLP are statistically similar in performance, while these models are distinct from KNN, NB, and DT. Lastly, Figure 4c depicts the Receiver Operating Characteristic (ROC) curves along with their respective AUC scores, further evidencing the classifiers’ effectiveness in differentiating classes.

Figure 4.

Performance comparison of different classical classifiers at the detection layer. Abbreviations: feature selection (fs); hyperparameter optimization (op); critical difference (cd); area under curve (auc). (a) Performance of classical classifiers with and without feature selection and optimization (detection layer). (b) Comparison of classical classifiers based on the Friedman test (detection layer). (c) AUC scores for classical classifiers with feature selection and hyperparameter optimization (detection layer).

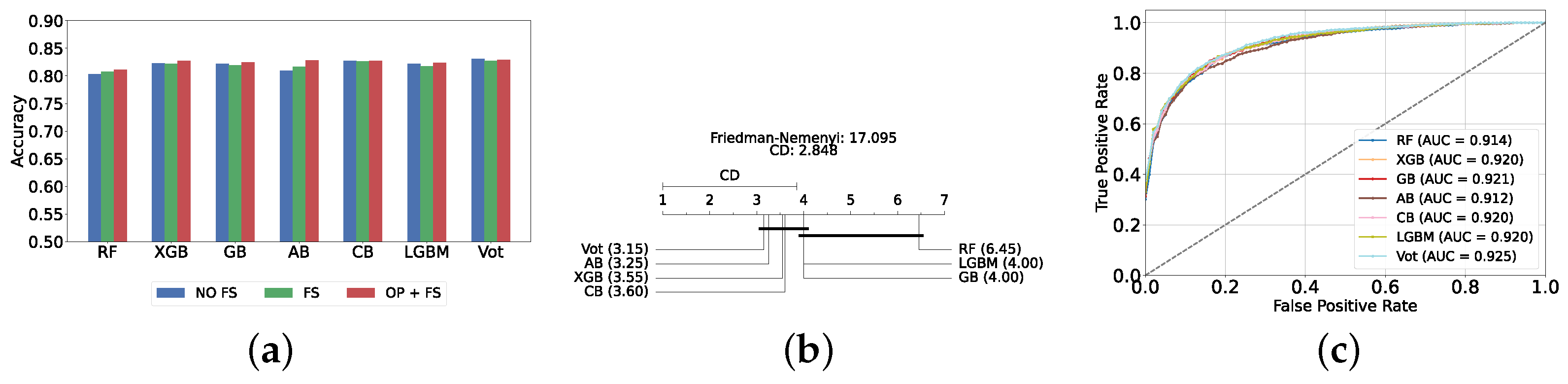

5.1.2. Static Ensemble Models

In this section, we evaluate the performance of static ensemble models. Similar to the previous section, we assessed the performance of static ensemble ML models under three different conditions: without feature selection and hyperparameter optimization (refer to Supplementary Table S3), with feature selection only (refer to Supplementary Table S4), and with both feature selection and hyperparameter optimization (refer to Table 3). For feature selection, the top 150 features were identified using LR, as it is the second-best model evaluated in the previous classic ML models experiment. This choice is due to the difficulty in obtaining feature importance scores for SVC, which does not inherently provide a straightforward method for feature importance extraction.

Table 3.

Static ensemble classifier results with feature selection and hyperparameter optimization (detection layer).

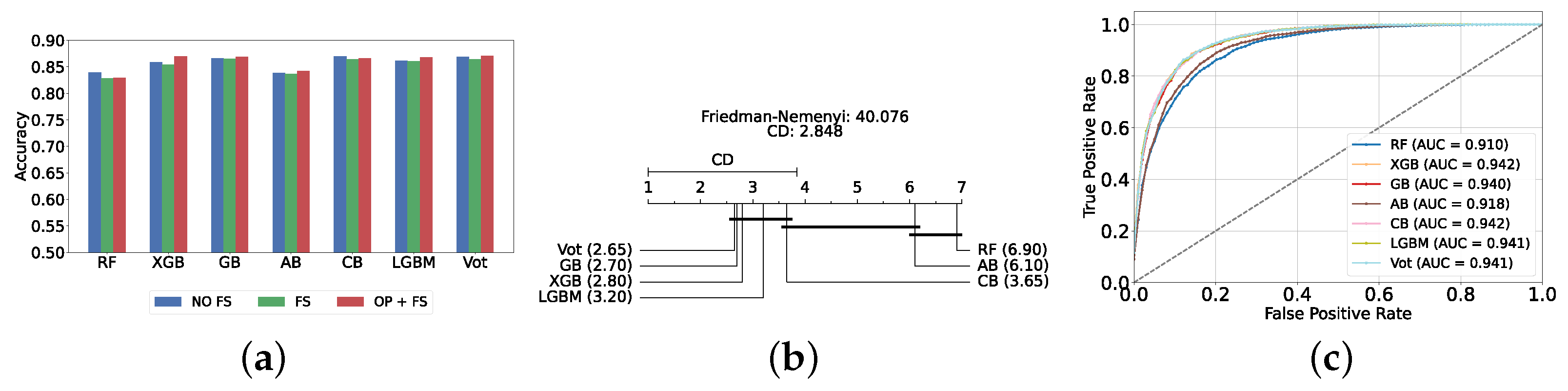

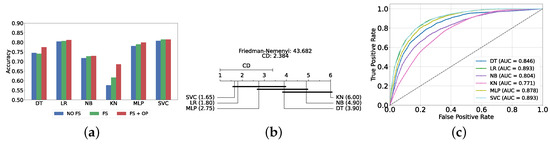

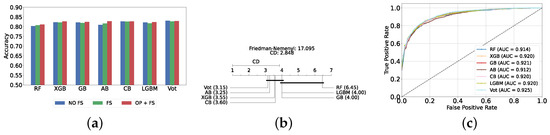

The most effective static ensemble model identified was the Vot classifier, which achieved an accuracy and F1-score of 87.08% ± 1.06% and 87.08% ± 1.06%, respectively. The Vot classifier excels because it leverages the strengths of multiple base models, combining their predictions to produce a more robust and accurate final decision. Moreover, this represents a significant improvement over the SVC model. Figure 5a provides a comparative analysis of model performance with and without the application of feature selection and hyperparameter optimization. It should be noted that some models experienced a decrease in accuracy following feature selection (without hyperparameter optimization), suggesting that a larger feature set may sometimes be beneficial. However, after optimizing hyperparameters, all models showed an increase in accuracy, indicating that optimizing hyperparameters for the new feature set is crucial for improving performance. Furthermore, all static ensemble models outperformed all classical ML models, highlighting the superiority of static ensemble methods over classical approaches. Figure 5b shows that Vot, GB, XGB, LGBM, and CB exhibit similar statistical performance, distinct from AB and RF, with RF being the lowest-ranked model. Finally, Figure 5c depicts the ROC curves alongside their corresponding AUC scores.

Figure 5.

Performance comparison of different static ensemble classifiers at the detection layer. (a) Performance of static ensemble classifiers with and without feature selection and optimization (detection layer). (b) Comparison of static ensemble classifiers based on the Friedman test (detection layer). (c) AUC scores for static ensemble classifiers with feature selection and hyperparameter optimization (detection layer).

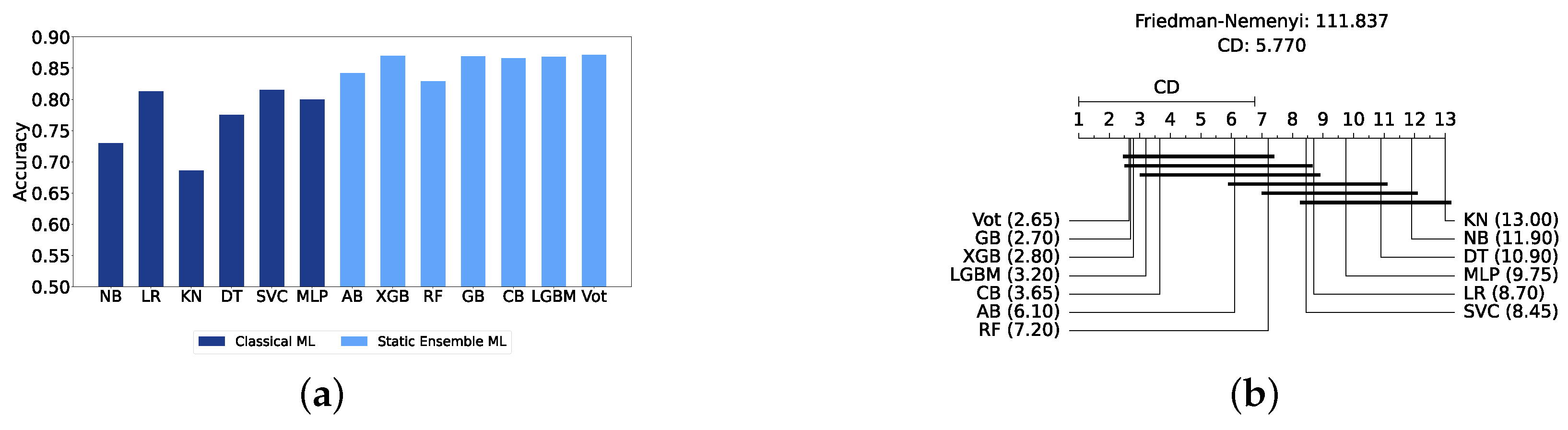

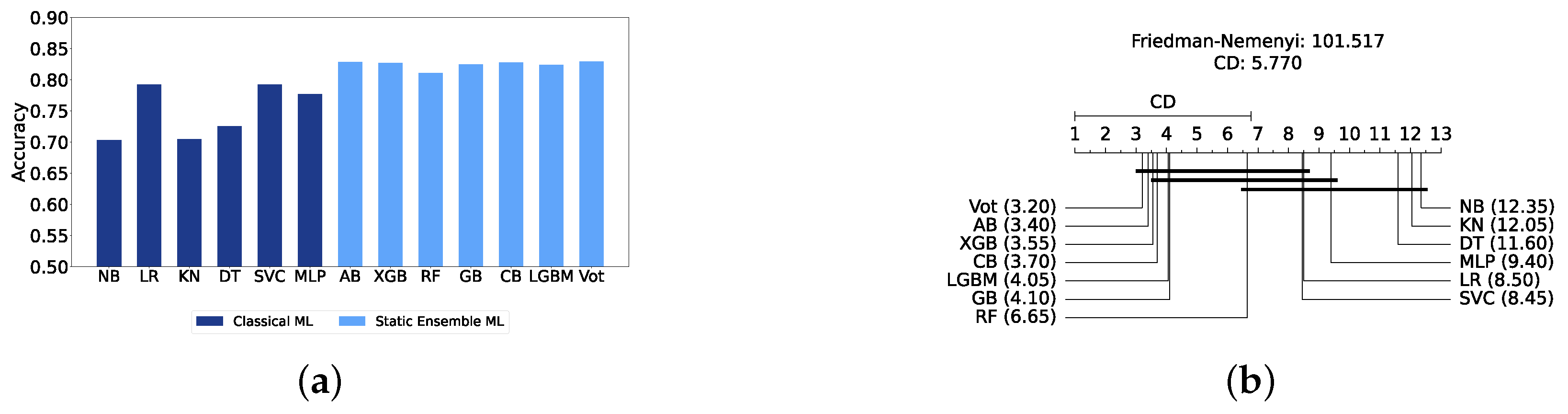

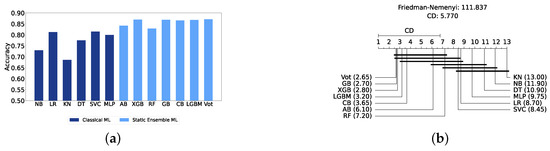

Comparison of Classic and Static Ensemble Classifiers

We present a comparison of classical and static ensemble models based on their accuracy, as illustrated in Figure 6a. It is noteworthy that all static ensemble models outperformed the classical ML models. The KNN model demonstrated the poorest performance among all models. Conversely, several static ensemble models, including Vot, LGBM, CB, GB, and XGB, exhibited similar high performance, with Vot being the highest-performing one. These results are further evidenced by the Friedman–Nemenyi test results illustrated in Figure 6b, which highlight Vot as the top performer with an average rank of 2.65, while KNN ranks the lowest with an average rank of 13.00.

Figure 6.

Performance comparison between different classic and static classifiers at the detection layer. (a) Performance metric comparison between classic and static ensemble classifiers (detection layer). (b) Comparison of classic and static ensemble classifiers based on the Friedman test (detection layer).

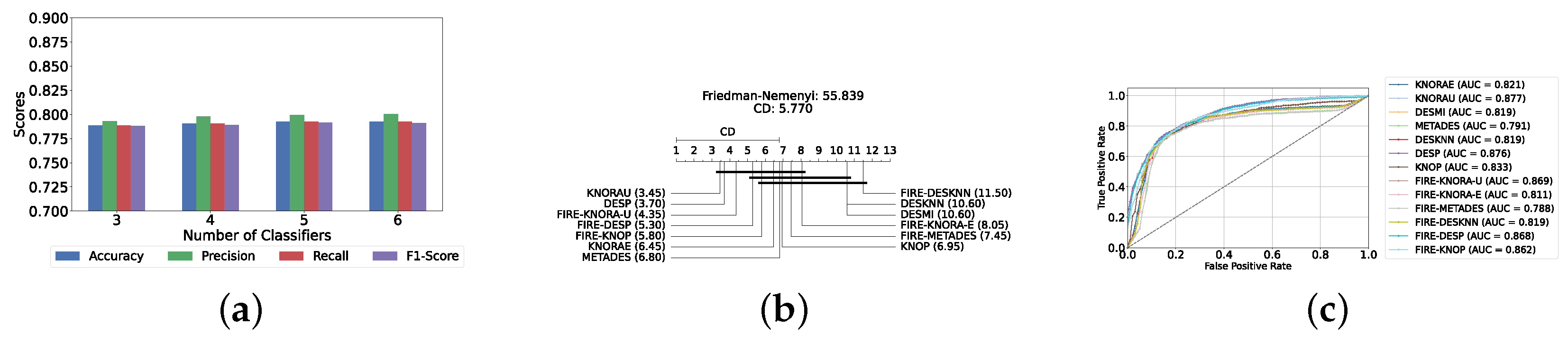

5.1.3. Dynamic Ensemble ML Models

In this section, we explore the performance of dynamic ensemble models toward the detection layer. It is worth mentioning that, in this evaluation, only 50 features were utilized, selected using XGB due to its superior performance in feature selection, except for the Vot classifier, for which feature importance could not be determined easily.

The construction of dynamic ensembles requires the selection of the optimal number and types of base classifiers. In particular, the list of base classifiers remains consistent with those optimized in previous sections. In this section, the performance of these ensembles was evaluated using the top three, four, five, and all six base classifiers, evaluated using twelve DES techniques.

Results of DES with a Pool of Classical Classifiers

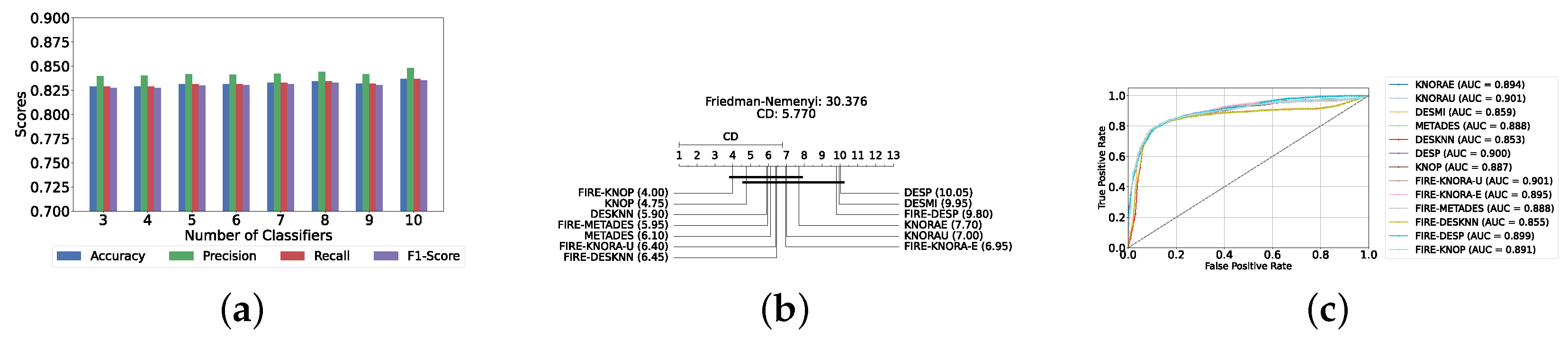

With a pool of optimized classical ML classifiers, the use of the six base classifiers results in the highest precision. The best performance was achieved with the FIRE-KNOP method, which achieved an accuracy and F1-Score of 83.28% ± 1.60% and 83.27% ± 1.60% respectively. Detailed metrics for all DES techniques employing six classical classifiers are presented in Table 4. The enhanced performance of FIRE-KNOP can be attributed to the incorporation of the FIRE capability, which implements dynamic frienemy pruning within the KNOP algorithm [56]. Figure 7a illustrates the performance of the FIRE-KNOP model with varying numbers of base classifiers. The results indicate that the performance metrics remain consistent for configurations with 4, 5, and 6 base classifiers, while a slight decline in performance is observed when the number of base classifiers is reduced to 3. Figure 7b presents the results of the Friedman–Nemenyi test, demonstrating that many classifiers are statistically similar in terms of performance. Notably, FIRE-KNOP emerges as the best-performing model, while FIRE-DESKNN is identified as the worst-performing model in the comparison. Lastly, Figure 7c displays the ROC curve along with the respective AUC scores. Details on the performance of DES models with different numbers of base classical classifiers can be seen in Supplementary Table S5.

Table 4.

DES model results with all six base classical classifiers (detection layer).

Figure 7.

Performance comparison of different DES classifiers with classical classifiers at the detection layer. (a) Comparison of FIRE-KNOP with classical classifiers pool with different numbers of base classifiers (detection layer). (b) Comparison of DES classifiers with a pool of 6 classical classifiers based on the Friedman test (detection layer). (c) AUC scores for DES classifiers with a pool of 6 classical classifiers (detection layer).

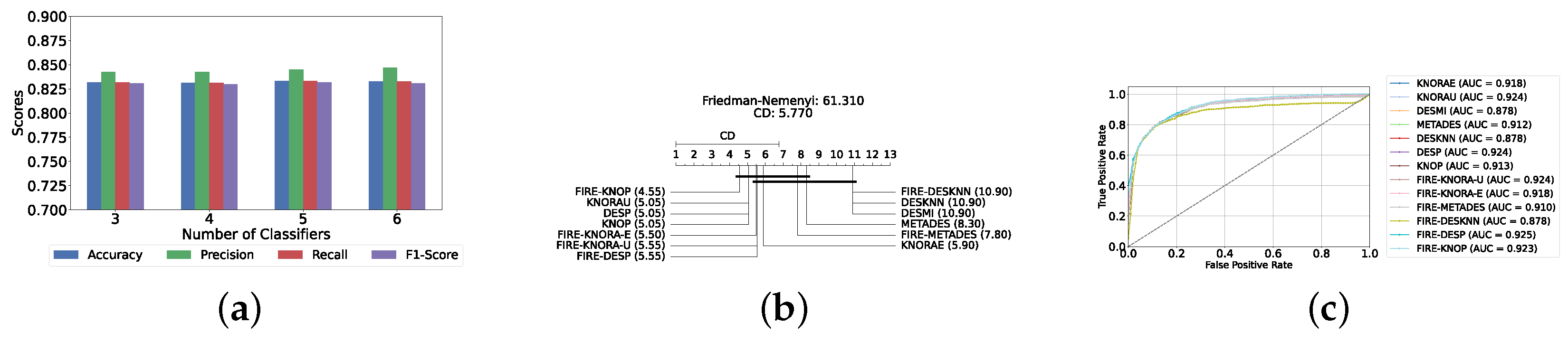

Results of DES with a Pool of Static Ensemble Models

We explored the potential to improve the performance of DES algorithms by using static ensemble models as the classifier pool for DES models. This approach was motivated by the superior performance of the static ensemble models observed in earlier experiments.

Our evaluation indicates that the optimal configuration consists of using five base classifiers: XGB, GB, AB, CB, and LGBM. The most effective configuration involved using the FIRE-KNOP method with the five aforementioned base classifiers. This method consistently demonstrated superior performance, mirroring the results observed with DES using a pool of classical classifiers. Notably, the accuracies and F1-scores achieved with this configuration were significantly higher amongst all DES methods, reflecting the improved performance of using static ensemble models over classical models. Specifically, the FIRE-KNOP method achieved an accuracy and F1-Score of 88.21% ± 1.05% and 88.21% ± 1.05%, respectively. Detailed metrics for all DES models using five static classifiers can be found in Table 5. Figure 8a illustrates the performance of FIRE-KNOP with varying numbers of base classifiers. In this case, using three, four, or five classifiers resulted in similar performance metrics, while using six classifiers led to a decrease in performance. This contrasts with the results for DES using classical classifiers, suggesting that the number of classifiers may be less important than the selection of the classifiers themselves. Figure 8b presents the Friedman–Nemenyi test results, with FIRE-KNOP again emerging as the best-performing model and DESKNN as the worst, mirroring the trends observed with DES using classical classifiers. Finally, Figure 8c shows the ROC curve with the respective AUC scores. Details on the performance of DES models with a different number of base static classifiers can be seen in Supplementary Table S6.

Table 5.

DES model results with five base static classifiers (detection layer).

Figure 8.

Performance comparison of DES classifiers with a static ensemble classifiers pool at the detection layer. (a) Comparison of FIRE-KNOP with a static ensemble classifiers pool with a different number of base classifiers (detection layer). (b) Comparison of DES classifiers with a pool of 5 static ensemble classifiers based on the Friedman test (detection layer). (c) AUC scores for DES classifiers with a pool of 5 static ensemble classifiers (detection layer).

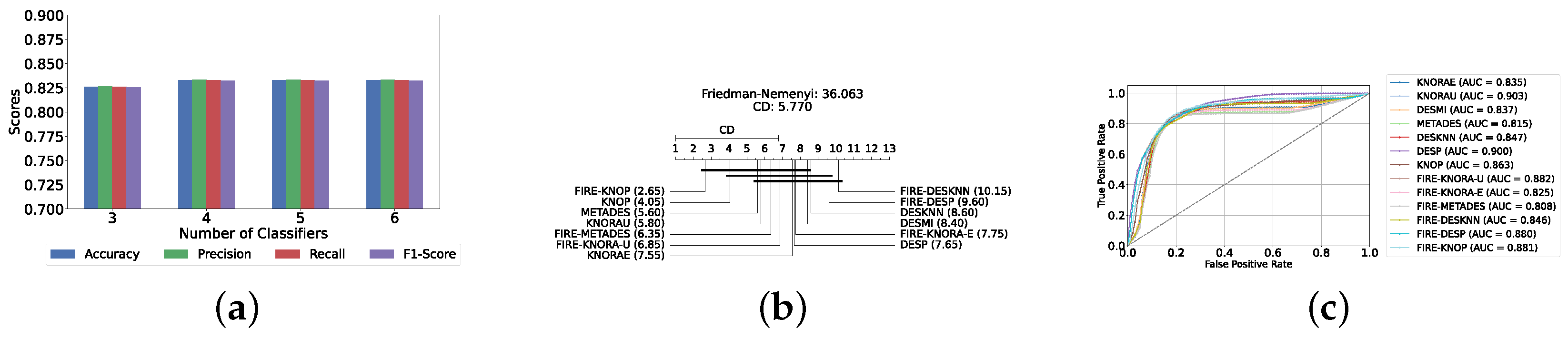

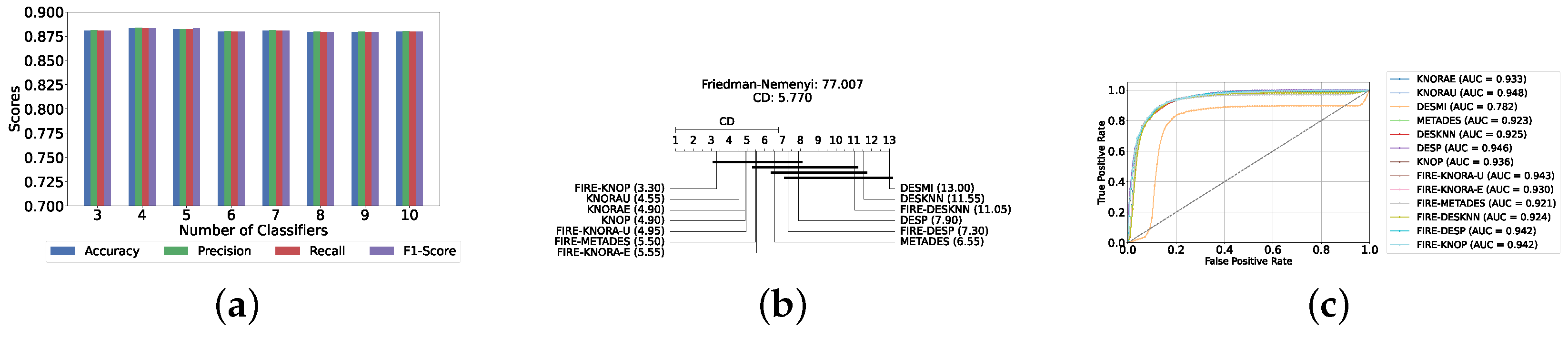

Results of DES with a Mixed Pool of Classical and Static Ensemble Classifiers

A mixed pool of optimized classical and static ensemble classifiers was used to optimize the performance of DES models further. The aim was to enhance performance by leveraging the diversity offered by a combined pool. Various pool sizes were tested, ranging from four to ten base classifiers. The best performance across all experiments was achieved with a combination of three static ensemble classifiers and one classical classifier (XGB, CB, LGBM, SVC). Based on the optimal 3/1 combination of static and classical classifiers, we evaluated the performance of 12 different DES techniques. Consistently, the FIRE-KNOP method emerged as the best performer, achieving an accuracy and F1-Score of 88.33% ± 0.96% and 88.33% ± 0.96%, respectively. Additionally, the best-performing model exhibits the lowest standard deviation, indicating its greater stability and consistency in comparison to the other models. This result not only highlights the effectiveness of FIRE-KNOP but also demonstrates its stability, as evidenced by the lowest standard deviation compared to other DES methods for all metrics. Detailed metrics for all DES techniques using this configuration can be found in Table 6. Figure 9a illustrates the performance of FIRE-KNOP with varying numbers of base classifiers, demonstrating consistent metrics across configurations ranging from 3 to 10 base classifiers. This contrasts with the conclusions drawn from previous experimental stages, where the number of base classifiers did not significantly impact the performance of DES models, suggesting the potential insignificance of classifier quantity in this context. Figure 9b displays the Friedman–Nemenyi test, once again demonstrating that FIRE-KNOP is the best-performing model. However, DESMI is identified as the worst-performing model, differing from previous trends. Finally, Figure 9c displays the ROC curve with the respective AUC scores. Details on the performance of DES models with different numbers of base mixed classifiers can be seen in Supplementary Table S7.

Table 6.

DES model results with a pool of four mixed classifiers (detection layer).

Figure 9.

Performance comparison of DES classifiers with a mixed classifiers pool at the detection layer. (a) Comparison of FIRE-KNOP with a mixed classifiers pool with a different number of base classifiers (detection layer). (b) Comparison of DES classifiers with a pool of 4 mixed classifiers based on the Friedman test (detection layer). (c) AUC scores for DES classifiers with a pool of 4 mixed ensemble classifiers (detection layer).

5.2. Results of Depression Severity Prediction

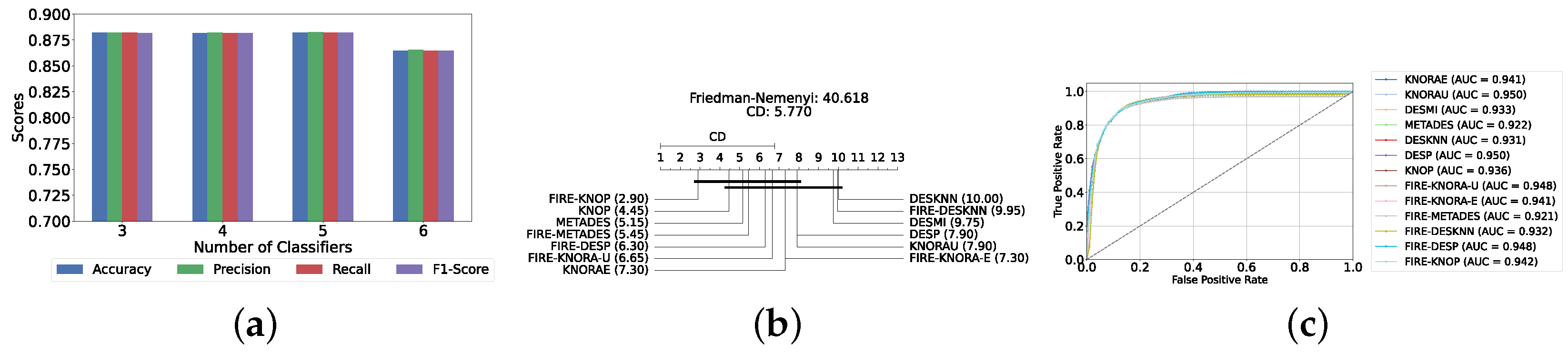

In this section, we delve into the severity prediction layer, specifically focusing on differentiating between mild and moderate–severe depression. This task mirrors the preceding detection section in terms of methodology, involving the use of classical ML models, static ensemble models, and dynamic ensemble models. A 10-fold holdout testing technique was employed to ensure robust validation and accuracy, the F1-Score was chosen as the primary metric for evaluation, and Bayes search was utilized for hyperparameter tuning. All experimental procedures were conducted in alignment with those used in the detection layer, ensuring methodological consistency and reliability.

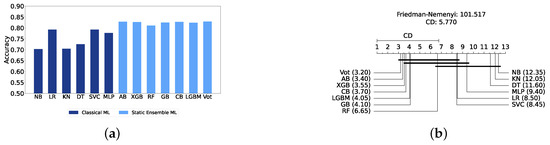

5.2.1. Classical ML Models

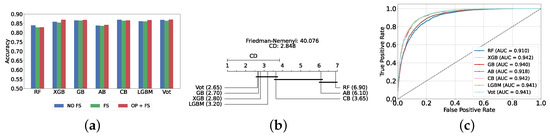

The evaluation of the severity prediction layer follows the same process as the detection layer, using classical ML models under three conditions: without feature selection and hyperparameter optimization (refer to Supplementary Table S8), with feature selection only (refer to Supplementary Table S9), and with both feature selection and hyperparameter optimization (refer to Table 7). The feature selection process used the top 200 features identified by correlation, and the hyperparameters were subsequently optimized. The SVC remains the most effective model for the classical section in the severity prediction layer, achieving an accuracy and F1-Score of 79.26% ± 1.99% and 79.16% ± 2.04%, respectively. This performance is comparable to that of the detection task, although the accuracy is marginally lower by approximately 2%. This slight decrease in accuracy suggests that severity prediction might be more challenging than detection. Figure 10a provides an overview of this stage, illustrating the performance of ML models with and without the application of feature selection and hyperparameter optimization. As demonstrated, all models experienced a notable improvement in performance following feature selection, with an additional increase observed after hyperparameter optimization. The Friedman–Nemenyi test is shown in Figure 10b, indicating that LR, SVC, and MLP exhibit similar performance, which is statistically distinct from that of DT, KNN, and NB. This observation mirrors the results of the detection layer, potentially suggesting a consistent trend across these models. The ROC curves, along with the models’ respective AUC scores, are shown in Figure 10c.

Table 7.

Classical classifier results with feature selection and hyperparameter optimization (severity prediction layer).

Figure 10.

Performance comparison of different classical classifiers at the severity prediction layer. (a) Performance of classical classifiers with and without optimization (severity prediction layer). (b) Comparison of classical classifiers based on the Friedman test (severity prediction layer). (c) AUC scores for classical classifiers with feature selection and hyperparameter optimization (severity prediction layer).

5.2.2. Static Ensemble ML Models

In this section, the performance of static ensemble models was evaluated for the depression severity prediction task, analogous to the depression detection discussed above. We evaluated the performance of static ML models under three conditions: without feature selection and hyperparameter optimization (refer to Supplementary Table S10), with feature selection only (refer to Supplementary Table S11), and with both feature selection and hyperparameter optimization (refer to Table 8). Similarly to the depression detection layer, the top 150 features were identified using LR.

Table 8.

Static ensemble classifier results with feature selection and hyperparameter optimization (severity prediction layer).

Vot, once again, demonstrates superior performance, achieving the highest accuracy and F1-Score of 82.94% ± 1.78% and 82.76% ± 1.84%, respectively. A notable difference in this analysis is that AB emerged as the second highest performing model instead of XGB. In such case, AB will be utilized as the feature selector model for the DES layers, as it is difficult to obtain feature importance with the Vot model.