Explainable AI in Diagnostic Radiology for Neurological Disorders: A Systematic Review, and What Doctors Think About It

Abstract

1. Introduction

1.1. Neurological Disorders—Morbidity and Mortality

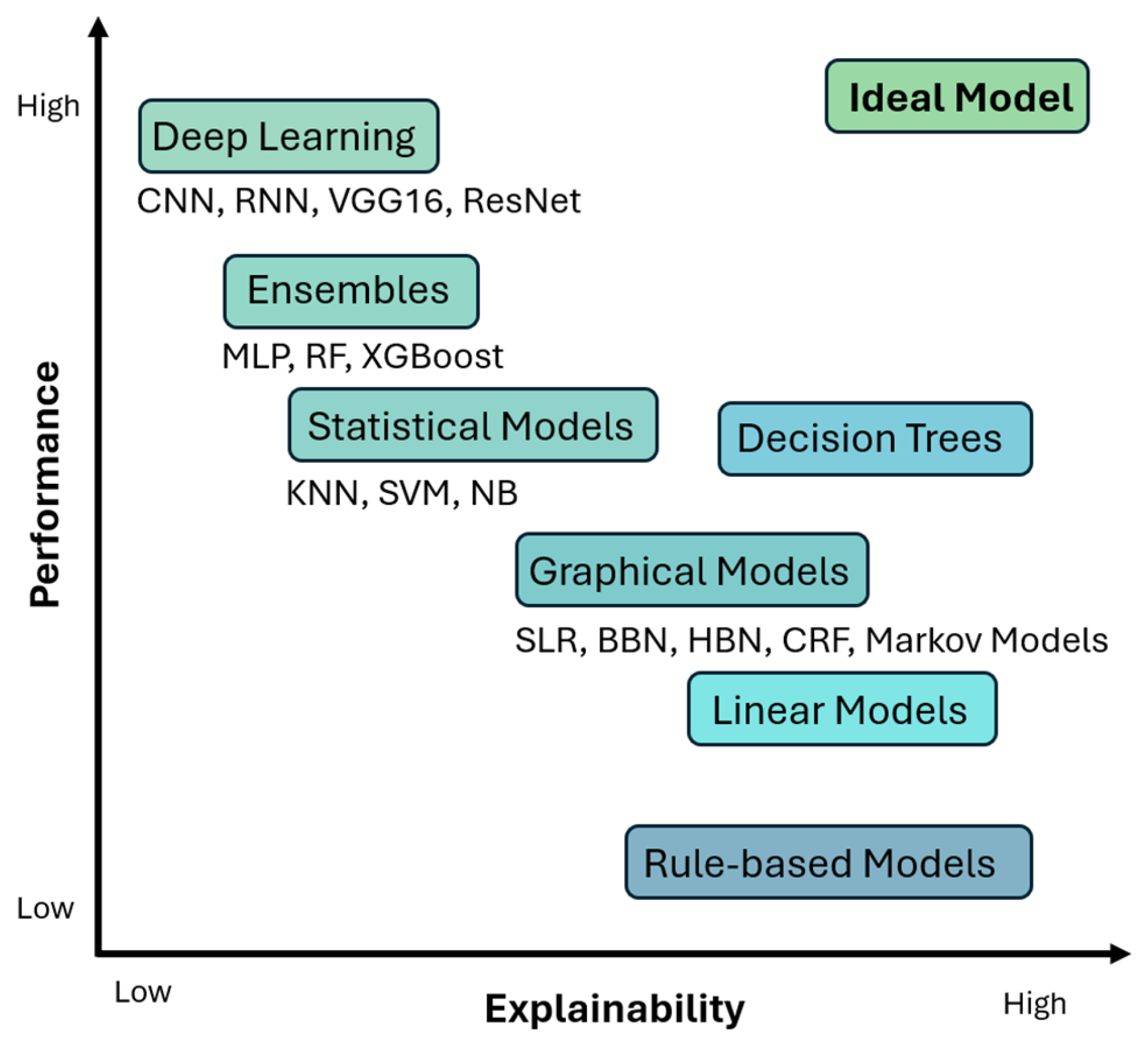

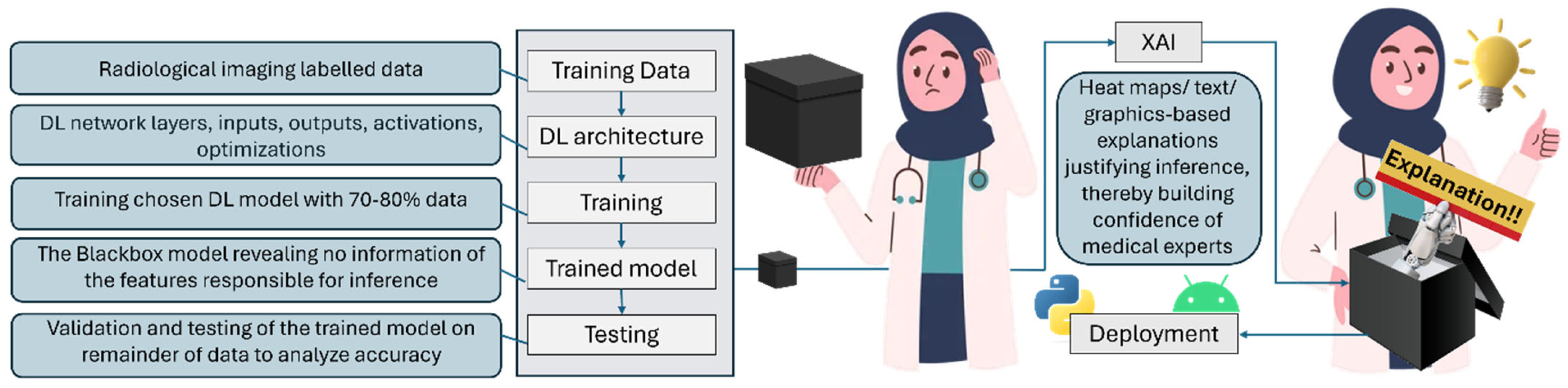

1.2. AI in CAD

- Interpretability: Interpretability refers to the ability to understand the decision-making process of an AI model. The operation of an interpretable model is transparent and provides details about the relationships between inputs and outputs.

- Explainability: Explainability refers to the ability of an AI model to give the end user clear and intuitive explanations of its decisions. In other words, an explainable AI model justifies the decisions it makes.

- Transparency: Transparency refers to the ability of an AI model to provide a view into the inner workings of the system, from inputs to inferences.

- Black box: A black box model is one whose operations are not visible to the user. Such models arrive at decisions without explaining how they were reached. Because they lack transparency, they are distrusted in applications such as diagnostic medicine, where human lives are at stake.
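As an illustration of how an explanation can be obtained from a black box, the following minimal sketch applies occlusion sensitivity, a perturbation-based technique that also appears among the XAI methods in the studies reviewed in Section 3.2.2. Everything in it is an assumption made for illustration: `black_box` is a hypothetical stand-in scoring function rather than any reviewed model, and the 8x8 array stands in for an image.

```python
import numpy as np


def black_box(image: np.ndarray) -> float:
    """Hypothetical opaque classifier: returns a single 'abnormality' score.

    Internally it averages a fixed 2x2 region, which the caller is assumed
    not to know.
    """
    return float(image[2:4, 4:6].mean())


def occlusion_map(model, image: np.ndarray, patch: int = 2, fill: float = 0.0) -> np.ndarray:
    """Return the score drop caused by masking each non-overlapping patch."""
    baseline = model(image)
    heat = np.zeros_like(image, dtype=float)
    rows, cols = image.shape
    for r in range(0, rows, patch):
        for c in range(0, cols, patch):
            occluded = image.copy()
            occluded[r:r + patch, c:c + patch] = fill  # hide one patch
            # A large drop means the model relied on this patch.
            heat[r:r + patch, c:c + patch] = baseline - model(occluded)
    return heat


image = np.ones((8, 8))            # toy stand-in for a scan slice
heat = occlusion_map(black_box, image)
peak = np.unravel_index(np.argmax(heat), heat.shape)
print("most influential pixel:", peak)
```

Patches whose masking lowers the score most are the ones the model relied on. In this toy case the map recovers the hidden 2x2 region without any access to the model's internals.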

1.3. Unraveling the Mystery!

1.4. XAI Methods and Frameworks

1.5. Would XAI Be the Matchmaker?

2. Methods

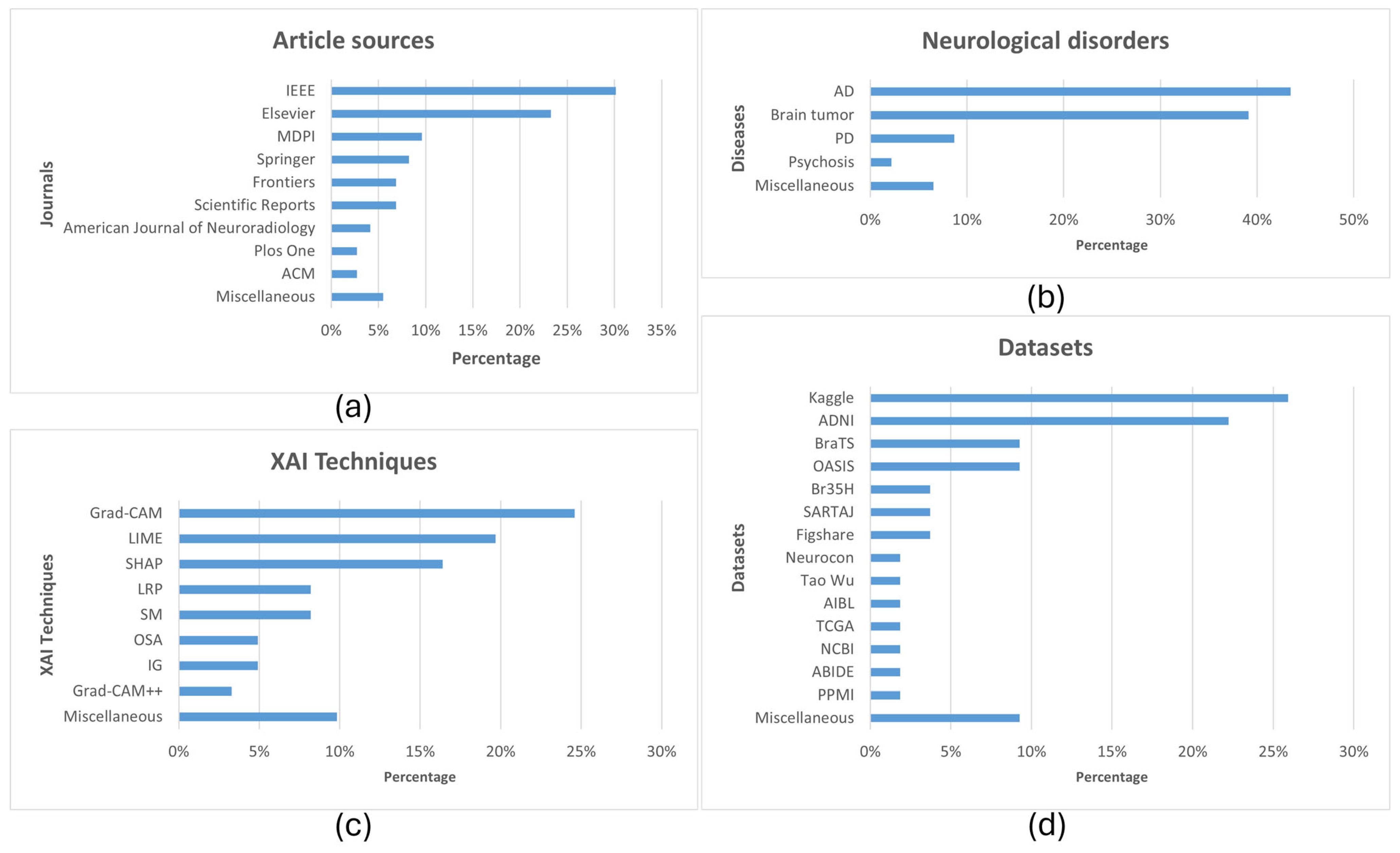

2.1. Study Selection

2.2. Inclusion Criteria

2.3. Exclusion Criteria

- Understanding development with explainable human functional brain challenges

- Studies incorporating electronic health records

- Classification of MRI brain scan orders for quality improvement

- Symmetric diffeomorphic image registration

- Life expectancy prediction, inference of onset times

- Drug, alcohol abuse/addiction classification

- Phobia identification

2.4. Highlights and Information Extracted

- Year of study

- Diseases researched

- Modalities employed

- AI techniques used

- Accuracy of developed systems

- Algorithms used for Explainability

- Datasets used
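The extracted items map naturally onto one record per study. The sketch below is one possible representation, not the authors' actual extraction sheet: the class name `StudyRecord` and its field names are hypothetical, and the three sample records are copied from the summary table in Section 3.2.2.

```python
from collections import Counter
from dataclasses import dataclass, field


@dataclass
class StudyRecord:
    """One reviewed study; field names are illustrative, not the authors' schema."""
    year: int
    disease: str
    modality: str
    ai_technique: str
    accuracy: str  # kept as text because studies report it in differing forms
    xai_methods: list[str] = field(default_factory=list)
    dataset: str = ""


# Three rows taken from the review's summary table (refs [77], [83], [89]).
records = [
    StudyRecord(2024, "Brain tumor", "MRI", "VGG16", "99.4%",
                ["Grad-CAM"], "Kaggle and BraTS 2021"),
    StudyRecord(2024, "Brain tumor", "MRI", "ResNet50", "98.52%",
                ["Grad-CAM"], "Kaggle"),
    StudyRecord(2022, "Brain tumors (meningioma, glioma, and pituitary)", "MRI",
                "CNN", "94.64%", ["LIME", "SHAP"], "2870 images from Kaggle"),
]

# Tally how often each explainability algorithm appears across the records.
xai_counts = Counter(m for r in records for m in r.xai_methods)
print(xai_counts.most_common())
```

Keeping accuracy as free text avoids forcing AUC values, per-class accuracies, and missing entries into a single numeric field.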

3. Results

- AI and XAI in CAD of Neurological Disorders

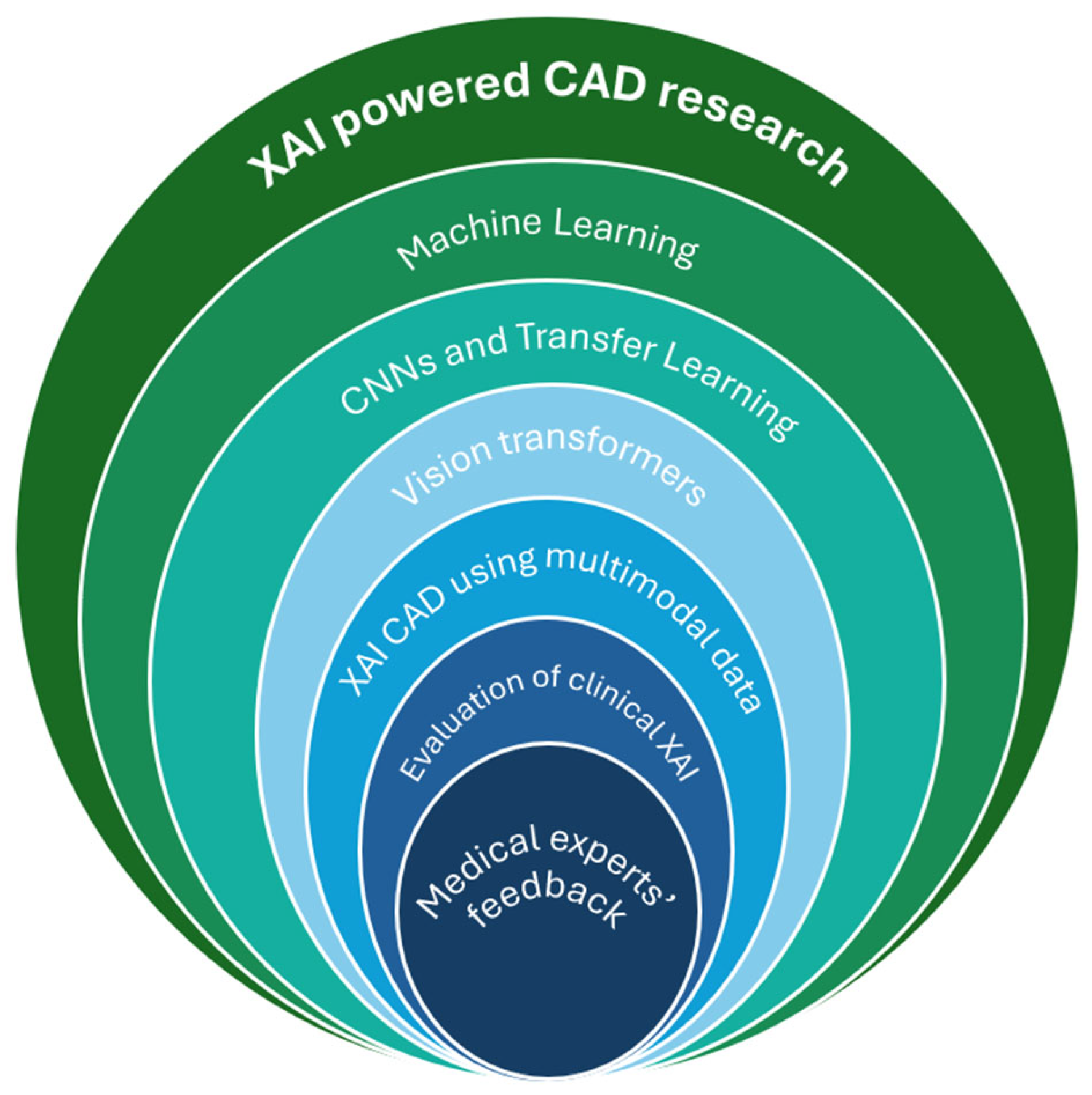

3.1. AI Applications in CAD

3.2. XAI Research in CAD

3.2.1. Machine Learning

3.2.2. CNNs and Transfer Learning

| Study | Pathology | Modality | Technology | Accuracy | XAI | Dataset |
|---|---|---|---|---|---|---|
| [73] 2024 | PD | MRI T1w | 12 pre-trained CNN models | VGG19 best performance | Grad-CAM | PPMI (213 PD, 213 Normal Control (NC)), NEUROCRON (27 PD, 16 NC) and Tao Wu (18 PD, 18 NC) |
| [74] 2024 | AD, progressive Mild Cognitive Impairment (pMCI), stable MCI (sMCI) | MRI | 2D-CNN, TL | AD-CN 86.5%, sMCI-pMCI 72.5% | 3D attention map | ADNI (AD 191, pMCI 121, sMCI 110, NC 204 subjects) |
| [75] 2024 | Very mild dementia, moderate dementia, mild dementia, non-demented | MRI | DenseNet121, MobileNetV2 | MobileNetV2 93%, DenseNet121 88% | LIME | OASIS |
| [76] 2024 | Brain tumor | MRI | Disease and spatial attention model (DaSAM) | Up to 99% | - | Figshare and Kaggle datasets |
| [77] 2024 | Brain tumor | MRI | VGG16 | 99.4% | Grad-CAM | Kaggle and BraTS 2021 dataset |
| [78] 2024 | Brain tumor | MRI FLAIR, T1, T2w | CNN | 98.97% | - | 3300 images from BraTS dataset |
| [79] 2024 | AD | MRI | Ensemble-1 (VGG16 and VGG19) and Ensemble-2 (DenseNet169 and DenseNet201) | Up to 96% | Saliency maps and Grad-CAM | Kaggle and OASIS-2 (896 MRIs for mild dementia, 64 moderate dementia, 3200 non-dementia, and 2240 very mild dementia) |
| [80] 2024 | Glioma, Meningioma, Pituitary tumor | MRI | CNN | 80% | LIME, SHAP, Integrated Gradients (IG), and Grad-CAM | 7043 images from Figshare, SARTAJ, Br35H datasets |
| [81] 2024 | AD | MRI | CNN | Real MRI 88.98%, Real + Synthetic MRIs 97.50% | Grad-CAM | Kaggle: 896 MRIs for Mild Impairment, 64 Moderate Impairment, 3200 No Impairment, 2240 Very Mild Impairment. Synthetic images generated using Wasserstein Generative Adversarial Network with Gradient Penalty (WGAN-GP) |
| [82] 2024 | Brain tumor | MRI T1w | 10 TL frameworks | Up to 98% for EfficientNetB0 | Grad-CAM, Grad-CAM++, IG, and Saliency Mapping | Kaggle: 926 MRI images of glioma tumors, 500 with no tumors, 901 pituitary tumors, and 937 meningioma tumors |
| [83] 2024 | Brain tumor | MRI | ResNet50 | 98.52% | Grad-CAM | Kaggle |
| [84] 2024 | AD, MCI | MRI | CNNs with a multi-feature kernel supervised within-class-similar discriminative dictionary learning (MKSCDDL) | 98.27% | Saliency maps, Grad-CAM, Score-CAM, Grad-CAM++ | ADNI |
| [85] 2024 | Brain tumor | MRI | Physics-informed deep learning (PIDL) | 96% | LIME, Grad-CAM | Kaggle: glioma 1621 images, meningioma 1645, pituitary tumors 1775, and non-tumorous scans 2000 images |
| [86] 2024 | Brain tumors, four classes: glioma, meningioma, no tumor, and pituitary tumors | MRI | VGG19 with inverted pyramid pooling module (iPPM) | 99.3% | LIME | Kaggle: 7023 images |
| [2] 2023 | PD | MRI T1w | CNN | 79.3% | Saliency maps | 1024 PD patients and 1017 age- and sex-matched HC from 13 different studies |
| [87] 2023 | Brain tumor | MRI | VGG16 | 97.33% | LRP | Kaggle: 1500 normal brain MRI images and 1500 tumor brain MRI images |
| [3] 2023 | Non-dementia, very mild, mild, and moderate | MRI | CNN | 94.96% | LIME | ADNI |
| [72] 2023 | AD, MCI | DW-MRI | CNN | 78% for NC-MCI (45 test samples), 91% for NC-AD (45 test samples) and 81% for MCI-AD (49 test samples) | Saliency map visualization | ADNI2 and ADNI-Go: 152 NC, 181 MCI and 147 AD |
| [18] 2023 | AD | MRI | 3D CNN | 87% | Genetic algorithm-based Occlusion Map method with a set of Backpropagation-based explainability methods | ADNI: 145 samples (74 AD and 71 HC) |
| [88] 2023 | Brain tumor | MRI | VGG16, InceptionV3, VGG19, ResNet50, InceptionResNetV2, Xception, and IVX16 | VGG16 95.11%, InceptionV3 93.88%, VGG19 94.19%, ResNet50 93.88%, InceptionResNetV2 93.58%, Xception 94.5%, IVX16 96.94% | LIME | Kaggle: 3264 images |
| [12] 2022 | Brain tumor (classification and segmentation) | MRI | ResNet50 for classification, encoder–decoder neural network for segmentation | - | Vanilla gradient, guided backpropagation, integrated gradients, guided integrated gradients, SmoothGrad, Grad-CAM, and guided Grad-CAM visualizations | BraTS challenges 2019 (259 cases of HGG and 76 cases of LGG) and 2021 (1251 MRI images with ground truth annotations) |
| [89] 2022 | Brain tumors (meningioma, glioma, and pituitary) | MRI | CNN | 94.64% | LIME, SHAP | 2870 images from Kaggle |
| [67] 2022 | Early-stage AD dementia | MRI | EfficientNet-B0 | AUC: 0.82 | Occlusion Sensitivity | 251 from OASIS-3 |
| [68] 2022 | AD | MRI T1w | MAXNet with dual attention module (DAM) and multi-resolution fusion module (MFM) | 95.4% | High-resolution activation mapping (HAM), and a prediction-basis creation and retrieval (PCR) | ADNI: 826 cognitively normal individuals and 422 Alzheimer’s patients |
| [90] 2022 | Brain tumors (survival rate prediction) | MRI T1w, T1ce, T2w, FLAIR | CNN | 71% | SHAP | 235 patients from BraTS 2020 |
| [91] 2022 | Brain tumor | MRI | VGG16 | - | SHAP | Kaggle |
| [69] 2022 | AD: non-demented, very mild demented, mild demented and moderate demented | MRI | VGG16 | 78.12% | LRP | 6400 images with 4 classes |
| [17] 2022 | PD | Dopamine transporter (DAT) SPECT | CNN | 95.8% | LRP | 1296 clinical DAT-SPECT scans labeled “normal” or “reduced” from the PACS of the Department of Nuclear Medicine of the University Medical Center Hamburg-Eppendorf |
| [92] 2022 | Psychosis | MRI | Neural network-based classifier | Above 72% | LRP | 77 first-episode psychosis (FEP) patients, 58 clinical high-risk subjects with no later transition to psychosis (CHR_NT), 15 clinical high-risk subjects with later transition (CHR_T), and 44 HC from the early detection of psychosis project (FePsy) at the Department of Psychiatry, University of Basel, Switzerland |
| [70] 2021 | AD vs. NC and pMCI vs. sMCI | MRI | Three-dimensional residual attention deep neural network (3D ResAttNet) | 91% AD vs. NC, 82% pMCI vs. sMCI | Grad-CAM | 1407 subjects from ADNI-1, ADNI-2 and ADNI-3 datasets |
| [93] 2021 | PD | DAT SPECT | 3D CNN | 97.0% | LRP | 1306 123I-FP-CIT-SPECT scans from the PACS of the Department of Nuclear Medicine of the University Medical Center Hamburg-Eppendorf |
| [94] 2021 | Age prediction | MRI T1w | DNN | - | SHAP and LIME | ABIDE I: 378 T1w MRI |
| [5] 2021 | AD, MCI | EEG | SVM, ANN, CNN | Up to 96% | LIME | 284 AD, 56 MCI, 100 HC |
| [95] 2021 | Age estimation | Structural MRI (sMRI), susceptibility-weighted imaging (SWI) and diffusion MRI (dMRI) | DNN | - | SHAP and LIME | 16394 subjects (7742 male and 8652 female) from the UK Biobank (UKB) |
| [36] 2021 | Brain tumor: lower-grade gliomas and the most aggressive malignancy, glioblastoma (WHO grade IV) | MRI T2w | DenseNet121, GoogLeNet, MobileNet | DenseNet121 92.1%, GoogLeNet 87.3%, MobileNet 88.9% | Grad-CAM | TCGA dataset from The Cancer Imaging Archive repositories: 354 subjects; 19,200 and 14,800 slices of brain images with and without tumor lesions, respectively |
| [71] 2020 | AD | MRI T1w | Variants of AlexNet, VGG16 | - | Swap Test/Occlusion Test | ADNI and Australian Imaging, Biomarker and Lifestyle Flagship Study of Ageing (AIBL): training, validation, and test sets containing 1779, 427, and 575 images, respectively |
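Grad-CAM appears more often than any other XAI method in the table above. A minimal NumPy sketch of its core computation follows, based on the published formulation by Selvaraju et al.: the gradients of the class score are averaged over each feature map to give one weight per map, and the weighted sum of the maps is passed through a ReLU. The toy arrays are assumptions made for illustration; in practice the activations and gradients come from a forward and backward pass through a trained CNN, and the final scaling to [0, 1] is a common display convention rather than part of the formula.

```python
import numpy as np


def grad_cam(activations: np.ndarray, gradients: np.ndarray) -> np.ndarray:
    """Combine feature maps (K, H, W) with class-score gradients (K, H, W)."""
    # One weight per feature map: global average of its gradients.
    weights = gradients.mean(axis=(1, 2))
    # Weighted sum over the K maps gives an (H, W) map.
    cam = np.tensordot(weights, activations, axes=1)
    # ReLU keeps only regions that support the class.
    cam = np.maximum(cam, 0.0)
    peak = cam.max()
    # Scale to [0, 1] for display; leave an all-zero map untouched.
    return cam / peak if peak > 0 else cam


# Toy inputs: two 2x2 feature maps, one supporting and one opposing the class.
activations = np.array([[[1.0, 0.0], [0.0, 0.0]],
                        [[0.0, 0.0], [0.0, 1.0]]])
gradients = np.array([[[1.0, 1.0], [1.0, 1.0]],
                      [[-1.0, -1.0], [-1.0, -1.0]]])

cam = grad_cam(activations, gradients)
print(cam)
```

The location highlighted by the first map survives, while the one highlighted by the negatively weighted map is suppressed by the ReLU.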

3.2.3. Vision Transformers

3.2.4. XAI-Powered CAD Using Multimodal Data

3.2.5. Evaluation of Clinical XAI

3.2.6. Medical Experts’ Feedback

3.3. The Expert Opinion

1. In your opinion, can AI be useful to act as a CAD tool for your assistance in reaching concrete diagnoses using medical imaging data?

2. Would integration of “Explainability” to the AI (black box) models build your confidence and make you feel more comfortable in using such systems?

- Expert 1—Dr. Danesh Kella

- “Artificial intelligence, particularly machine learning algorithms, has shown significant promise in assisting healthcare professionals with the diagnosis of medical conditions using imaging data such as X-rays, MRIs, and CT scans. However, the routine integration of AI into medical imaging diagnosis is in its infancy, and several challenges and limitations exist. For instance, the interpretability of AI-generated diagnoses remains a significant concern, as clinicians need to understand the reasoning—the “black box”—behind the model’s recommendations to routinely incorporate it into clinical practice.

- Yes, the explanation of the AI’s decision into AI models would likely increase confidence and comfort in using such systems. However, it would be even more helpful if the AI explains it in a manner akin to how a professor would explain to their trainees how a certain characteristic of the brain mass on MRI indicates the likelihood of a certain tumor. By providing human-like insights into how AI models arrive at their decisions, they offer transparency and clarity to users, including healthcare professionals.

- Despite these challenges, AI has a definite role in the future of medicine; however, the extent and exact nature remain unclear. Collaborative efforts between AI programmers, healthcare professionals, and regulatory bodies are crucial to ensuring the safe and responsible deployment of AI technology in clinical settings.”

- Expert 2—Dr. Amrat Zakari Usman

- “No, I don’t think AI can be useful to act as a computer aided diagnosis, interpretation by clinician is important so that other differential diagnosis will be considered in the course of patients’ management.

- No because I don’t think AI can give enough reason to back up a diagnosis.”

- Expert 3—Dr. Raheel Imtiaz Memon

- “While new and emerging technologies can certainly be an asset in the medical field, I have my reservations about the use of AI for diagnosis purposes. AI is still quite new and though efficient in many areas, it is not a perfect science. No AI can replicate time spent and experiences had during medical school, residency, and other post graduate training, or the value of peer consultation and appropriate bedside manner—all things which aid in appropriate diagnosis. While AI can be used as a tool in addition to those already being used, it should not be relied upon for diagnostic purposes.”

- Experts 4, 5—Dr. Maria Miandad Jakhro & Dr. Ashraf Niazi

- “As doctors, we believe that AI can be tremendously valuable as a Computer-Aided Diagnosis (CAD) tool in interpreting medical imaging data. The integration of AI into diagnostic processes has the potential to revolutionize how we approach patient care in terms of accuracy, efficiency, and accessibility. AI algorithms excel in analyzing vast amounts of medical imaging data with remarkable speed and precision. This capability can significantly aid healthcare professionals in interpreting complex images, identifying subtle abnormalities, and reaching accurate diagnoses. Moreover, AI-powered CAD systems can serve as a valuable second opinion, offering insights that complement and validate our own diagnostic assessments. One of the most compelling advantages of AI in medical imaging is its ability to enhance diagnostic efficiency. By rapidly processing imaging data, AI can expedite the diagnostic process, leading to quicker treatment decisions and improved patient outcomes, particularly in time-sensitive situations. Furthermore, AI has the potential to address challenges related to access to specialized expertise. In regions where there may be a shortage of experienced radiologists or specialists, AI-powered CAD tools can provide valuable support, ensuring that patients receive timely and accurate diagnoses regardless of geographic location. However, it’s important to recognize that AI should not replace the role of healthcare professionals. Rather, it should be viewed as a valuable tool that augments our diagnostic capabilities. As doctors, our clinical judgment, experience, and empathy are irreplaceable components of patient care that cannot be replicated by AI.

- In conclusion, we believe that AI holds immense promise as a CAD tool in medical imaging, offering significant benefits in terms of accuracy, efficiency, and accessibility. Embracing AI technology alongside our expertise as healthcare professionals can lead to more effective diagnosis and treatment, ultimately enhancing the quality of care we provide to our patients.”

- Expert 6—Prof. Muhammad Tameem Akhtar

- “The set of diseases on which the AI is developed will be different in every part of the world with different sets of diseases with different clinical presentation and radiological pictures, unless a good number of histopathologically proven cases with images which are super imposed to reach a conclusive and definitive diagnosis. Hence, studies must be conducted in different parts of the world with the same set of these diagnosed diseases and the same quality of images to be as close to diagnosis as 95% accuracy.

- The study must be multidisciplinary with multiple caste and religions as there are different pattern of disease in different foods, lifestyle patterns. It needs at least hundred thousand cases of similar characteristics, patterns to reach to consensus and accurate diagnosis in keeping in mind with multiple filters of age, sex, dietary habit living standards as well as environment.

- Yes, AI has a definite role provided a large study must be performed with large number of patients. It cannot replace human integration and involvement can minimise errors. Diseases are classified as per ACR codes. It will take years to be confident about the accuracy of diagnosis and will take time to develop confidence and accuracy.

- The images are self-exclamatory. There is discrepancy in actual area of involvement and adjacent tissue involvement. The extent of the disease is variable in different projections while the disease itself is limited with the effects widespread which may be due to changes in the chemistry in adjacent tissue; where multiple factors like compactness/looseness of cells, and chemistry of intra and extra cellular compartments, natural state and disease state of cells play a vital role. Again, the signals generated from the cells will vary according to human habitat, including diet, ethnicity, environment etc.”

- Expert 7—Dr. Shahabuddin Siddiqui

- “It is a good idea to develop a tool which can read images of the brain and diagnose certain diseases. If properly trained, I certainly believe it can delineate all the abnormal areas of the brain. It may be able to pick up the small lesions which may otherwise be missed by the human eye. This certainly will aid the Radiologists in making an accurate diagnosis of the patient. But practically, I don’t think this tool can itself establish the diagnosis of the patient. Most diseases do not follow typical imaging patterns. The signal characteristics and the location of the disease process can vary greatly from patient to patient. Similarly, many different neurological disorders can have overlapping imaging features. It will therefore require the background knowledge and experience in clinical neurology and radiology to differentiate the disease considering all the key factors including the patient’s epidemiology, clinical presentation (signs and symptoms), lab results and the imaging features, not just the imaging features alone. So all in all, I think it can help neurologists and radiologists with accurately picking up the imaging findings but establishing the accurate diagnosis of the patient per se will still be the job of the primary neurologist and radiologist.

- The integration of explainability will certainly build my confidence and make me feel more comfortable using such systems. But I will always rely on my clinical and radiology expertise to make the diagnosis.”

4. Discussion

4.1. Crux of the Matter and the Way Forward

4.2. The Challenges Ahead

4.2.1. Limited Training Datasets and Generalizability Issues

4.2.2. Current Focus Mostly on Optimizing Performance of CAD Tools

4.2.3. Absence of Ground Truth Data for Explainability

4.2.4. Focus on Single Modality

4.2.5. Are Only Visual Explanations Sufficient?

4.2.6. How to Judge XAI Performance?

4.2.7. More Doctors Onboard, Please!

4.2.8. User Awareness

4.2.9. Security, Safety, Legal, and Ethical Challenges

4.2.10. Let There Be Symbiosis!

5. Conclusions

- The integration of explainability in such CAD tools will surely increase the confidence of medical experts, but the current modes of explanation might not be enough. Healthcare professionals are looking for more thorough explanations, of the kind a professor would give to trainees.

- The quantitative and qualitative evaluation of such XAI schemes needs far more attention. The absence of ground truth data for explainability is another major open concern, along with legal, ethical, safety, and security issues.

- None of this can be achieved without uniting both medical professionals and scientists to work toward this cause in absolute symbiosis.

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Acknowledgments

Conflicts of Interest

References

- World Health Organization. WHO—The Top 10 Causes of Death. 2018. Available online: http://www.who.int/en/news-room/fact-sheets/detail/the-top-10-causes-of-death (accessed on 4 November 2023).

- Camacho, M.; Wilms, M.; Mouches, P.; Almgren, H.; Souza, R.; Camicioli, R.; Ismail, Z.; Monchi, O.; Forkert, N.D. Explainable classification of Parkinson’s disease using deep learning trained on a large multi-center database of T1-weighted MRI datasets. NeuroImage Clin. 2023, 38, 103405. [Google Scholar] [CrossRef] [PubMed]

- Duamwan, L.M.; Bird, J.J. Explainable AI for Medical Image Processing: A Study on MRI in Alzheimer’s Disease. In Proceedings of the 16th International Conference on PErvasive Technologies Related to Assistive Environments, Corfu, Greece, 5–7 July 2023; pp. 480–484. [Google Scholar] [CrossRef]

- Salih, A.; Galazzo, I.B.; Cruciani, F.; Brusini, L.; Radeva, P. Investigating Explainable Artificial Intelligence for MRI-Based Classification of Dementia: A New Stability Criterion for Explainable Methods. In Proceedings of the 2022 IEEE International Conference on Image Processing (ICIP), Bordeaux, France, 16–19 October 2022; pp. 4003–4007. [Google Scholar] [CrossRef]

- Sidulova, M.; Nehme, N.; Park, C.H. Towards Explainable Image Analysis for Alzheimer’s Disease and Mild Cognitive Impairment Diagnosis. In Proceedings of the 2021 IEEE Applied Imagery Pattern Recognition Workshop (AIPR), Washington, DC, USA, 12–14 October 2021; pp. 1–6. [Google Scholar] [CrossRef]

- Tjoa, E.; Guan, C. A Survey on Explainable Artificial Intelligence (XAI): Toward Medical XAI. IEEE Trans. Neural Netw. Learn. Syst. 2021, 32, 4793–4813. [Google Scholar] [CrossRef] [PubMed]

- Tomson, T. Excess mortality in epilepsy in developing countries. Lancet Neurol. 2006, 5, 804–805. [Google Scholar] [CrossRef]

- Avants, B.B.; Epstein, C.L.; Grossman, M.; Gee, J.C. Symmetric diffeomorphic image registration with cross-correlation: Evaluating automated labeling of elderly and neurodegenerative brain. Med. Image Anal. 2008, 12, 26–41. [Google Scholar] [CrossRef]

- Nemoto, K.; Sakaguchi, H.; Kasai, W.; Hotta, M.; Kamei, R.; Noguchi, T.; Minamimoto, R.; Arai, T.; Asada, T. Differentiating Dementia with Lewy Bodies and Alzheimer’s Disease by Deep Learning to Structural MRI. J. Neuroimaging 2021, 31, 579–587. [Google Scholar] [CrossRef]

- Farahani, F.V.; Fiok, K.; Lahijanian, B.; Karwowski, W.; Douglas, P.K. Explainable AI: A review of applications to neuroimaging data. Front. Neurosci. 2022, 16, 906290. [Google Scholar] [CrossRef]

- Yang, G.; Ye, Q.; Xia, J. Unbox the black-box for the medical explainable AI via multi-modal and multi-centre data fusion: A mini-review, two showcases and beyond. Inf. Fusion 2021, 77, 29–52. [Google Scholar] [CrossRef]

- Zeineldin, R.A.; Karar, M.E.; Elshaer, Z.; Coburger, J.; Wirtz, C.R.; Burgert, O.; Mathis-Ullrich, F. Explainability of deep neural networks for MRI analysis of brain tumors. Int. J. Comput. Assist. Radiol. Surg. 2022, 17, 1673–1683. [Google Scholar] [CrossRef]

- Odusami, M.; Maskeliūnas, R.; Damaševičius, R.; Misra, S. Explainable Deep-Learning-Based Diagnosis of Alzheimer’s Disease Using Multimodal Input Fusion of PET and MRI Images. J. Med. Biol. Eng. 2023, 43, 291–302. [Google Scholar] [CrossRef]

- Fellous, J.M.; Sapiro, G.; Rossi, A.; Mayberg, H.; Ferrante, M. Explainable Artificial Intelligence for Neuroscience: Behavioral Neurostimulation. Front. Neurosci. 2019, 13, 1346. [Google Scholar] [CrossRef]

- Herent, P.; Jegou, S.; Wainrib, G.; Clozel, T. Brain age prediction of healthy subjects on anatomic MRI with deep learning: Going beyond with an ‘explainable AI’ mindset. bioRxiv 2018, 413302. [Google Scholar] [CrossRef]

- Qian, J.; Li, H.; Wang, J.; He, L. Recent Advances in Explainable Artificial Intelligence for Magnetic Resonance Imaging. Diagnostics 2023, 13, 1571. [Google Scholar] [CrossRef] [PubMed]

- Nazari, M.; Kluge, A.; Apostolova, I.; Klutmann, S.; Kimiaei, S.; Schroeder, M.; Buchert, R. Explainable AI to improve acceptance of convolutional neural networks for automatic classification of dopamine transporter SPECT in the diagnosis of clinically uncertain parkinsonian syndromes. Eur. J. Nucl. Med. Mol. Imaging 2022, 49, 1176–1186. [Google Scholar] [CrossRef] [PubMed]

- Shojaei, S.; Abadeh, M.S.; Momeni, Z. An evolutionary explainable deep learning approach for Alzheimer’s MRI classification. Expert Syst. Appl. 2023, 220, 119709. [Google Scholar] [CrossRef]

- Nazir, S.; Dickson, D.M.; Akram, M.U. Survey of explainable artificial intelligence techniques for biomedical imaging with deep neural networks. Comput. Biol. Med. 2023, 156, 106668. [Google Scholar] [CrossRef]

- Górriz, J.M.; Álvarez-Illán, I.; Álvarez-Marquina, A.; Arco, J.E.; Atzmueller, M.; Ballarini, F.; Barakova, E.; Bologna, G.; Bonomini, P.; Castellanos-Dominguez, G.; et al. Computational approaches to Explainable Artificial Intelligence: Advances in theory, applications and trends. Inf. Fusion 2023, 100, 101945. [Google Scholar] [CrossRef]

- Taşcı, B. Attention Deep Feature Extraction from Brain MRIs in Explainable Mode: DGXAINet. Diagnostics 2023, 13, 859. [Google Scholar] [CrossRef]

- Galazzo, I.B.; Cruciani, F.; Brusini, L.; Salih, A.; Radeva, P.; Storti, S.F.; Menegaz, G. Explainable Artificial Intelligence for Magnetic Resonance Imaging Aging Brainprints: Grounds and challenges. IEEE Signal Process. Mag. 2022, 39, 99–116. [Google Scholar] [CrossRef]

- Zeineldin, R.A.; Karar, M.E.; Elshaer, Z.; Coburger, J.; Wirtz, C.R.; Burgert, O.; Mathis-Ullrich, F. Explainable hybrid vision transformers and convolutional network for multimodal glioma segmentation in brain MRI. Sci. Rep. 2024, 14, 3713. [Google Scholar] [CrossRef]

- Champendal, M.; Müller, H.; Prior, J.O.; dos Reis, C.S. A scoping review of interpretability and explainability concerning artificial intelligence methods in medical imaging. Eur. J. Radiol. 2023, 169, 111159. [Google Scholar] [CrossRef]

- Borys, K.; Schmitt, Y.A.; Nauta, M.; Seifert, C.; Krämer, N.; Friedrich, C.M.; Nensa, F. Explainable AI in medical imaging: An overview for clinical practitioners—Beyond saliency-based XAI approaches. Eur. J. Radiol. 2023, 162, 110786. [Google Scholar] [CrossRef] [PubMed]

- Jin, W.; Li, X.; Fatehi, M.; Hamarneh, G. Guidelines and evaluation of clinical explainable AI in medical image analysis. Med. Image Anal. 2023, 84, 102684. [Google Scholar] [CrossRef] [PubMed]

- van der Velden, B.H.M.; Kuijf, H.J.; Gilhuijs, K.G.A.; Viergever, M.A. Explainable artificial intelligence (XAI) in deep learning-based medical image analysis. Med. Image Anal. 2022, 79, 102470. [Google Scholar] [CrossRef] [PubMed]

- Viswan, V.; Shaffi, N.; Mahmud, M.; Subramanian, K.; Hajamohideen, F. Explainable Artificial Intelligence in Alzheimer’s Disease Classification: A Systematic Review. Cognit. Comput. 2024, 16, 1–14. [Google Scholar] [CrossRef]

- Borys, K.; Schmitt, Y.A.; Nauta, M.; Seifert, C.; Krämer, N.; Friedrich, C.M.; Nensa, F. Explainable AI in medical imaging: An overview for clinical practitioners—Saliency-based XAI approaches. Eur. J. Radiol. 2023, 162, 110787. [Google Scholar] [CrossRef]

- Jin, W.; Li, X.; Hamarneh, G. Evaluating Explainable AI on a Multi-Modal Medical Imaging Task: Can Existing Algorithms Fulfill Clinical Requirements? Proc. AAAI Conf. Artif. Intell. 2022, 36, 11945–11953. [Google Scholar] [CrossRef]

- Holzinger, A.; Biemann, C.; Pattichis, C.S.; Kell, D.B. What do we need to build explainable AI systems for the medical domain? arXiv 2017, arXiv:1712.09923. [Google Scholar] [CrossRef]

- Jahan, S.; Abu Taher, K.; Kaiser, M.S.; Mahmud, M.; Rahman, M.S.; Hosen, A.S.; Ra, I.H. Explainable AI-based Alzheimer’s prediction and management using multimodal data. PLoS ONE 2023, 18, e0294253. [Google Scholar] [CrossRef]

- Ribeiro, M.T.; Singh, S.; Guestrin, C. ‘ Why should I trust you?’ Explaining the predictions of any classifier. In Proceedings of the 22nd ACM SIGKDD International Conference on Knowledge Discovery and Data Mining, San Francisco, CA, USA, 13–17 August 2016; pp. 1135–1144. [Google Scholar]

- Selvaraju, R.R.; Cogswell, M.; Das, A.; Vedantam, R.; Parikh, D.; Batra, D. Grad-CAM: Visual Explanations from Deep Networks via Gradient-Based Localization. In Proceedings of the 2017 IEEE International Conference on Computer Vision (ICCV), Venice, Italy, 22–29 October 2017; pp. 618–626. [Google Scholar] [CrossRef]

- Chakrabarty, N. Brain MRI Images for Brain Tumor Detection. 2018. Available online: https://www.kaggle.com/datasets/navoneel/brain-mri-images-for-brain-tumor-detection (accessed on 17 November 2024).

- Esmaeili, M.; Vettukattil, R.; Banitalebi, H.; Krogh, N.R.; Geitung, J.T. Explainable artificial intelligence for human-machine interaction in brain tumor localization. J. Pers. Med. 2021, 11, 1213. [Google Scholar] [CrossRef]

- Page, M.J.; McKenzie, J.E.; Bossuyt, P.M.; Boutron, I.; Hoffmann, T.C.; Mulrow, C.D.; Shamseer, L.; Tetzlaff, J.M.; Akl, E.A.; Brennan, S.E.; et al. The PRISMA 2020 statement: An updated guideline for reporting systematic reviews. BMJ 2021, 37, 71. [Google Scholar] [CrossRef]

- Liang, S.; Beaton, D.; Arnott, S.R.; Gee, T.; Zamyadi, M.; Bartha, R.; Symons, S.; MacQueen, G.M.; Hassel, S.; Lerch, J.P.; et al. Magnetic resonance imaging sequence identification using a metadata learning approach. Front. Neuroinform. 2021, 15, 622951. [Google Scholar] [CrossRef] [PubMed]

- Hoopes, A.; Mora, J.S.; Dalca, A.V.; Fischl, B.; Hoffmann, M. SynthStrip: Skull-stripping for any brain image. Neuroimage 2022, 260, 119474. [Google Scholar] [CrossRef] [PubMed]

- Hashemi, M.; Akhbari, M.; Jutten, C. Delve into multiple sclerosis (MS) lesion exploration: A modified attention U-net for MS lesion segmentation in brain MRI. Comput. Biol. Med. 2022, 145, 105402. [Google Scholar] [CrossRef] [PubMed]

- Liu, Z.; Tong, L.; Chen, L.; Jiang, Z.; Zhou, F.; Zhang, Q.; Zhang, X.; Jin, Y.; Zhou, H. Deep learning based brain tumor segmentation: A survey. Complex Intell. Syst. 2023, 9, 1001–1026. [Google Scholar] [CrossRef]

- Ali, S.S.A.; Memon, K.; Yahya, N.; Sattar, K.A.; El Ferik, S. Deep Learning Framework-Based Automated Multi-class Diagnosis for Neurological Disorders. In Proceedings of the 2023 7th International Conference on Automation, Control and Robots (ICACR), Kuala Lumpur, Malaysia, 4–6 August 2023; pp. 87–91. [Google Scholar]

- Alzubaidi, L.; Zhang, J.; Humaidi, A.J.; Al-Dujaili, A.; Duan, Y.; Al-Shamma, O.; Santamaría, J.; Fadhel, M.A.; Al-Amidie, M.; Farhan, L. Review of deep learning: Concepts, CNN architectures, challenges, applications, future directions. J. Big Data 2021, 8, 1–74. [Google Scholar] [CrossRef]

- ElSebely, R.; Abdullah, B.; Salem, A.A.; Yousef, A.H. Multiple Sclerosis Lesion Segmentation Using Ensemble Machine Learning. Saudi J. Eng. Technol. 2020, 5, 134. [Google Scholar] [CrossRef]

- Vang, Y.S.; Cao, Y.; Chang, P.D.; Chow, D.S.; Brandt, A.U.; Paul, F.; Scheel, M.; Xie, X. SynergyNet: A fusion framework for multiple sclerosis brain MRI segmentation with local refinement. In Proceedings of the 2020 IEEE 17th International Symposium on Biomedical Imaging (ISBI), Iowa City, IA, USA, 3–7 April 2020; pp. 131–135. [Google Scholar]

- Zeng, C.; Gu, L.; Liu, Z.; Zhao, S. Review of deep learning approaches for the segmentation of multiple sclerosis lesions on brain MRI. Front. Neuroinform. 2020, 14, 610967. [Google Scholar] [CrossRef]

- van der Voort, S.R.; Smits, M.; Klein, S.; Initiative, A.D.N. DeepDicomSort: An automatic sorting algorithm for brain magnetic resonance imaging data. Neuroinformatics 2021, 19, 159–184. [Google Scholar] [CrossRef]

- Pizarro, R.; Assemlal, H.E.; De Nigris, D.; Elliott, C.; Antel, S.; Arnold, D.; Shmuel, A. Using Deep Learning Algorithms to Automatically Identify the Brain MRI Contrast: Implications for Managing Large Databases. Neuroinformatics 2019, 17, 115–130.

- Gao, R.; Luo, G.; Ding, R.; Yang, B.; Sun, H. A Lightweight Deep Learning Framework for Automatic MRI Data Sorting and Artifacts Detection. J. Med. Syst. 2023, 47, 124.

- Ranjbar, S.; Singleton, K.W.; Jackson, P.R.; Rickertsen, C.R.; Whitmire, S.A.; Clark-Swanson, K.R.; Mitchell, J.R.; Swanson, K.R.; Hu, L.S. A Deep Convolutional Neural Network for Annotation of Magnetic Resonance Imaging Sequence Type. J. Digit. Imaging 2020, 33, 439–446.

- de Mello, J.P.V.; Paixão, T.M.; Berriel, R.; Reyes, M.; Badue, C.; De Souza, A.F.; Oliveira-Santos, T. Deep learning-based type identification of volumetric MRI sequences. In Proceedings of the 2020 25th International Conference on Pattern Recognition (ICPR), Milan, Italy, 10–15 January 2021; pp. 1–8.

- Memon, K.; Yahya, N.; Siddiqui, S.; Hashim, H.; Yusoff, M.Z.; Ali, S.S.A. NIVE: NeuroImaging Volumetric Extractor, a High-Performance Skull-Stripping Tool. J. Adv. Res. Appl. Sci. Eng. Technol. 2025, 50, 228–245.

- Bruscolini, A.; Sacchetti, M.; La Cava, M.; Gharbiya, M.; Ralli, M.; Lambiase, A.; De Virgilio, A.; Greco, A. Diagnosis and management of neuromyelitis optica spectrum disorders - An update. Autoimmun. Rev. 2018, 17, 195–200.

- Baghbanian, S.M.; Asgari, N.; Sahraian, M.A.; Moghadasi, A.N. A comparison of pediatric and adult neuromyelitis optica spectrum disorders: A review of clinical manifestation, diagnosis, and treatment. J. Neurol. Sci. 2018, 388, 222–231.

- Thouvenot, E. Multiple sclerosis biomarkers: Helping the diagnosis? Rev. Neurol. 2018, 174, 364–371.

- Xin, B.; Zhang, L.; Huang, J.; Lu, J.; Wang, X. Multi-level Topological Analysis Framework for Multifocal Diseases. In Proceedings of the 2020 16th International Conference on Control, Automation, Robotics and Vision (ICARCV), Shenzhen, China, 13–15 December 2020; pp. 666–671.

- Nayak, D.R.; Dash, R.; Majhi, B. Automated diagnosis of multi-class brain abnormalities using MRI images: A deep convolutional neural network based method. Pattern Recognit. Lett. 2020, 138, 385–391.

- Rudie, J.D.; Rauschecker, A.M.; Xie, L.; Wang, J.; Duong, M.T.; Botzolakis, E.J.; Kovalovich, A.; Egan, J.M.; Cook, T.; Bryan, R.N.; et al. Subspecialty-level deep gray matter differential diagnoses with deep learning and Bayesian networks on clinical brain MRI: A pilot study. Radiol. Artif. Intell. 2020, 2, e190146.

- Krishnammal, P.M.; Raja, S.S. Convolutional neural network based image classification and detection of abnormalities in MRI brain images. In Proceedings of the 2019 International Conference on Communication and Signal Processing (ICCSP), Chennai, India, 4–6 April 2019; pp. 548–553.

- Raja, S.S. Deep learning based image classification and abnormalities analysis of MRI brain images. In Proceedings of the 2019 TEQIP III Sponsored International Conference on Microwave Integrated Circuits, Photonics and Wireless Networks (IMICPW), Tiruchirappalli, India, 22–24 May 2019; pp. 427–431.

- Mangeat, G.; Ouellette, R.; Wabartha, M.; De Leener, B.; Plattén, M.; Danylaité Karrenbauer, V.; Warntjes, M.; Stikov, N.; Mainero, C.; Cohen-Adad, J.; et al. Machine learning and multiparametric brain MRI to differentiate hereditary diffuse leukodystrophy with spheroids from multiple sclerosis. J. Neuroimaging 2020, 30, 674–682.

- Khan, H.A.; Jue, W.; Mushtaq, M.; Mushtaq, M.U. Brain tumor classification in MRI image using convolutional neural network. Math. Biosci. Eng. 2021, 17, 6203–6216.

- Singh, G.; Vadera, M.; Samavedham, L.; Lim, E.C.-H. Machine learning-based framework for multi-class diagnosis of neurodegenerative diseases: A study on Parkinson’s disease. IFAC PapersOnLine 2016, 49, 990–995.

- Kalbkhani, H.; Shayesteh, M.G.; Zali-Vargahan, B. Robust algorithm for brain magnetic resonance image (MRI) classification based on GARCH variances series. Biomed. Signal Process. Control 2013, 8, 909–919.

- Palkar, A.; Dias, C.C.; Chadaga, K.; Sampathila, N. Empowering Glioma Prognosis With Transparent Machine Learning and Interpretative Insights Using Explainable AI. IEEE Access 2024, 12, 31697–31718.

- Achilleos, K.G.; Leandrou, S.; Prentzas, N.; Kyriacou, P.A.; Kakas, A.C.; Pattichis, C.S. Extracting Explainable Assessments of Alzheimer’s disease via Machine Learning on brain MRI imaging data. In Proceedings of the 2020 IEEE 20th International Conference on Bioinformatics and Bioengineering (BIBE), Cincinnati, OH, USA, 26–28 October 2020; pp. 1036–1041.

- Bordin, V.; Coluzzi, D.; Rivolta, M.W.; Baselli, G. Explainable AI Points to White Matter Hyperintensities for Alzheimer’s Disease Identification: A Preliminary Study. In Proceedings of the 2022 44th Annual International Conference of the IEEE Engineering in Medicine & Biology Society (EMBC), Glasgow, Scotland, UK, 11–15 July 2022; pp. 484–487.

- Yu, L.; Xiang, W.; Fang, J.; Phoebe Chen, Y.P.; Zhu, R. A novel explainable neural network for Alzheimer’s disease diagnosis. Pattern Recognit. 2022, 131, 108876.

- Sudar, K.M.; Nagaraj, P.; Nithisaa, S.; Aishwarya, R.; Aakash, M.; Lakshmi, S.I. Alzheimer’s Disease Analysis using Explainable Artificial Intelligence (XAI). In Proceedings of the 2022 International Conference on Sustainable Computing and Data Communication Systems (ICSCDS), Erode, India, 7–9 April 2022; pp. 419–423.

- Zhang, X.; Han, L.; Zhu, W.; Sun, L.; Zhang, D. An Explainable 3D Residual Self-Attention Deep Neural Network for Joint Atrophy Localization and Alzheimer’s Disease Diagnosis Using Structural MRI. IEEE J. Biomed. Health Inform. 2022, 26, 5289–5297.

- Nigri, E.; Ziviani, N.; Cappabianco, F.; Antunes, A.; Veloso, A. Explainable Deep CNNs for MRI-Based Diagnosis of Alzheimer’s Disease. In Proceedings of the 2020 International Joint Conference on Neural Networks (IJCNN), Glasgow, UK, 19–24 July 2020.

- Essemlali, A.; St-Onge, E.; Descoteaux, M.; Jodoin, P.M. Understanding Alzheimer disease’s structural connectivity through explainable AI. Proc. Mach. Learn. Res. 2020, 121, 217–229.

- Veetil, I.K.; Chowdary, D.E.; Chowdary, P.N.; Sowmya, V.; Gopalakrishnan, E.A. An analysis of data leakage and generalizability in MRI based classification of Parkinson’s Disease using explainable 2D Convolutional Neural Networks. Digit. Signal Process. Rev. J. 2024, 147, 104407.

- Lozupone, G.; Bria, A.; Fontanella, F.; De Stefano, C. AXIAL: Attention-based eXplainability for Interpretable Alzheimer’s Localized Diagnosis using 2D CNNs on 3D MRI brain scans. arXiv 2024, arXiv:2407.02418.

- Deshmukh, A.; Kallivalappil, N.; D’Souza, K.; Kadam, C. AL-XAI-MERS: Unveiling Alzheimer’s Mysteries with Explainable AI. In Proceedings of the 2024 Second International Conference on Emerging Trends in Information Technology and Engineering (ICETITE), Vellore, India, 22–23 February 2024; pp. 1–7.

- Tehsin, S.; Nasir, I.M.; Damaševičius, R.; Maskeliūnas, R. DaSAM: Disease and Spatial Attention Module-Based Explainable Model for Brain Tumor Detection. Big Data Cogn. Comput. 2024, 8, 97.

- Padmapriya, S.T.; Devi, M.S.G. Computer-Aided Diagnostic System for Brain Tumor Classification using Explainable AI. In Proceedings of the 2024 IEEE International Conference on Interdisciplinary Approaches in Technology and Management for Social Innovation (IATMSI), Gwalior, India, 14–16 March 2024; Volume 2, pp. 1–6.

- Thiruvenkadam, K.; Ravindran, V.; Thiyagarajan, A. Deep Learning with XAI based Multi-Modal MRI Brain Tumor Image Analysis using Image Fusion Techniques. In Proceedings of the 2024 International Conference on Trends in Quantum Computing and Emerging Business Technologies, Pune, India, 22–23 March 2024; pp. 1–5.

- Mahmud, T.; Barua, K.; Habiba, S.U.; Sharmen, N.; Hossain, M.S.; Andersson, K. An Explainable AI Paradigm for Alzheimer’s Diagnosis Using Deep Transfer Learning. Diagnostics 2024, 14, 345.

- Narayankar, P.; Baligar, V.P. Explainability of Brain Tumor Classification Based on Region. In Proceedings of the 2024 International Conference on Emerging Technologies in Computer Science for Interdisciplinary Applications (ICETCS), Bengaluru, India, 22–23 April 2024; pp. 1–6.

- Mansouri, D.; Echtioui, A.; Khemakhem, R.; Ben Hamida, A. Explainable AI Framework for Alzheimer’s Diagnosis Using Convolutional Neural Networks. In Proceedings of the 2024 IEEE 7th International Conference on Advanced Technologies, Signal and Image Processing (ATSIP), Sousse, Tunisia, 11–13 July 2024; Volume 1, pp. 93–98.

- Nhlapho, W.; Atemkeng, M.; Brima, Y.; Ndogmo, J.C. Bridging the Gap: Exploring Interpretability in Deep Learning Models for Brain Tumor Detection and Diagnosis from MRI Images. Information 2024, 15, 182.

- Mohamed Musthafa, M.; Mahesh, T.R.; Vinoth Kumar, V.; Guluwadi, S. Enhancing brain tumor detection in MRI images through explainable AI using Grad-CAM with Resnet 50. BMC Med. Imaging 2024, 24, 107.

- Adarsh, V.; Gangadharan, G.R.; Fiore, U.; Zanetti, P. Multimodal classification of Alzheimer’s disease and mild cognitive impairment using custom MKSCDDL kernel over CNN with transparent decision-making for explainable diagnosis. Sci. Rep. 2024, 14, 1774.

- Amin, A.; Hasan, K.; Hossain, M.S. XAI-Empowered MRI Analysis for Consumer Electronic Health. IEEE Trans. Consum. Electron. 2024, 70, 1.

- Haque, R.; Hassan, M.M.; Bairagi, A.K.; Shariful Islam, S.M. NeuroNet19: An explainable deep neural network model for the classification of brain tumors using magnetic resonance imaging data. Sci. Rep. 2024, 14, 1524.

- Ahmed, F.; Asif, M.; Saleem, M.; Mushtaq, U.F.; Imran, M. Identification and Prediction of Brain Tumor Using VGG-16 Empowered with Explainable Artificial Intelligence. Int. J. Comput. Innov. Sci. 2023, 2, 24–33.

- Hossain, S.; Chakrabarty, A.; Gadekallu, T.R.; Alazab, M.; Piran, M.J. Vision Transformers, Ensemble Model, and Transfer Learning Leveraging Explainable AI for Brain Tumor Detection and Classification. IEEE J. Biomed. Health Inform. 2023, 28, 1261–1272.

- Gaur, L.; Bhandari, M.; Razdan, T.; Mallik, S.; Zhao, Z. Explanation-Driven Deep Learning Model for Prediction of Brain Tumour Status Using MRI Image Data. Front. Genet. 2022, 13, 822666.

- Eder, M.; Moser, E.; Holzinger, A.; Jean-Quartier, C.; Jeanquartier, F. Interpretable Machine Learning with Brain Image and Survival Data. BioMedInformatics 2022, 2, 492–510.

- Benyamina, H.; Mubarak, A.S.; Al-Turjman, F. Explainable Convolutional Neural Network for Brain Tumor Classification via MRI Images. In Proceedings of the 2022 International Conference on Artificial Intelligence of Things and Crowdsensing (AIoTCs), Nicosia, Cyprus, 26–28 October 2022; pp. 266–272.

- Korda, A.I.; Andreou, C.; Rogg, H.V.; Avram, M.; Ruef, A.; Davatzikos, C.; Koutsouleris, N.; Borgwardt, S. Identification of texture MRI brain abnormalities on first-episode psychosis and clinical high-risk subjects using explainable artificial intelligence. Transl. Psychiatry 2022, 12, 481.

- Nazari, M.; Kluge, A.; Apostolova, I.; Klutmann, S.; Kimiaei, S.; Schroeder, M.; Buchert, R. Data-driven identification of diagnostically useful extrastriatal signal in dopamine transporter SPECT using explainable AI. Sci. Rep. 2021, 11, 22932.

- Lombardi, A.; Diacono, D.; Amoroso, N.; Monaco, A.; Tavares, J.M.R.; Bellotti, R.; Tangaro, S. Explainable Deep Learning for Personalized Age Prediction With Brain Morphology. Front. Neurosci. 2021, 15, 22932.

- Salih, A.; Galazzo, I.B.; Raisi-Estabragh, Z.; Petersen, S.E.; Gkontra, P.; Lekadir, K.; Menegaz, G.; Radeva, P. A new scheme for the assessment of the robustness of explainable methods applied to brain age estimation. In Proceedings of the 2021 IEEE 34th International Symposium on Computer-Based Medical Systems (CBMS), Aveiro, Portugal, 7–9 June 2021; pp. 492–497.

- Dosovitskiy, A.; Beyer, L.; Kolesnikov, A.; Weissenborn, D.; Zhai, X.; Unterthiner, T.; Dehghani, M.; Minderer, M.; Heigold, G.; Gelly, S.; et al. An Image Is Worth 16x16 Words: Transformers for Image Recognition at Scale. In Proceedings of the 9th International Conference on Learning Representations (ICLR 2021), Virtual Event, Austria, 3–7 May 2021.

- Dehghani, M.; Djolonga, J.; Mustafa, B.; Padlewski, P.; Heek, J.; Gilmer, J.; Steiner, A.P.; Caron, M.; Geirhos, R.; Alabdulmohsin, I.; et al. Scaling Vision Transformers to 22 Billion Parameters. Proc. Mach. Learn. Res. 2023, 202, 7480–7512.

- Poonia, R.C.; Al-Alshaikh, H.A. Ensemble approach of transfer learning and vision transformer leveraging explainable AI for disease diagnosis: An advancement towards smart healthcare 5.0. Comput. Biol. Med. 2024, 179, 108874.

- Kamal, M.S.; Northcote, A.; Chowdhury, L.; Dey, N.; Crespo, R.G.; Herrera-Viedma, E. Alzheimer’s Patient Analysis Using Image and Gene Expression Data and Explainable-AI to Present Associated Genes. IEEE Trans. Instrum. Meas. 2021, 70, 2513107.

- El-Sappagh, S.; Alonso, J.M.; Islam, S.M.R.; Sultan, A.M.; Kwak, K.S. A multilayer multimodal detection and prediction model based on explainable artificial intelligence for Alzheimer’s disease. Sci. Rep. 2021, 11, 2660.

- Kumar, A.; Manikandan, R.; Kose, U.; Gupta, D.; Satapathy, S.C. Doctor’s dilemma: Evaluating an explainable subtractive spatial lightweight convolutional neural network for brain tumor diagnosis. ACM Trans. Multimed. Comput. Commun. Appl. 2021, 17, 1–26.

- Zaharchuk, G.; Gong, E.; Wintermark, M.; Rubin, D.; Langlotz, C.P. Deep learning in neuroradiology. Am. J. Neuroradiol. 2018, 39, 1776–1784.

- Korfiatis, P.; Erickson, B. Deep learning can see the unseeable: Predicting molecular markers from MRI of brain gliomas. Clin. Radiol. 2019, 74, 367–373.

- Bakator, M.; Radosav, D. Deep learning and medical diagnosis: A review of literature. Multimodal Technol. Interact. 2018, 2, 47.

- Zhang, Z.; Sejdić, E. Radiological images and machine learning: Trends, perspectives, and prospects. Comput. Biol. Med. 2019, 108, 354–370.

- Chauhan, N.; Choi, B.-J. Performance analysis of classification techniques of human brain MRI images. Int. J. Fuzzy Log. Intell. Syst. 2019, 19, 315–322.

- Mazurowski, M.A.; Buda, M.; Saha, A.; Bashir, M.R. Deep learning in radiology: An overview of the concepts and a survey of the state of the art with focus on MRI. J. Magn. Reson. Imaging 2019, 49, 939–954.

- Yao, A.D.; Cheng, D.L.; Pan, I.; Kitamura, F. Deep learning in neuroradiology: A systematic review of current algorithms and approaches for the new wave of imaging technology. Radiol. Artif. Intell. 2020, 2, e190026.

- Lima, A.A.; Mridha, M.F.; Das, S.C.; Kabir, M.M.; Islam, M.R.; Watanobe, Y. A comprehensive survey on the detection, classification, and challenges of neurological disorders. Biology 2022, 11, 469.

- Kim, J.; Hong, J.; Park, H. Prospects of deep learning for medical imaging. Precis. Futur. Med. 2018, 2, 37–52.

- Zhang, L.; Wang, M.; Liu, M.; Zhang, D. A survey on deep learning for neuroimaging-based brain disorder analysis. Front. Neurosci. 2020, 14, 560709.

- Tatekawa, H.; Sakamoto, S.; Hori, M.; Kaichi, Y.; Kunimatsu, A.; Akazawa, K.; Miyasaka, T.; Oba, H.; Okubo, T.; Hasuo, K.; et al. Imaging differences between neuromyelitis optica spectrum disorders and multiple sclerosis: A multi-institutional study in Japan. Am. J. Neuroradiol. 2018, 39, 1239–1247.

- Miki, Y. Magnetic resonance imaging diagnosis of demyelinating diseases: An update. Clin. Exp. Neuroimmunol. 2019, 10, 32–48.

- Fiala, C.; Rotstein, D.; Pasic, M.D. Pathobiology, diagnosis, and current biomarkers in neuromyelitis optica spectrum disorders. J. Appl. Lab. Med. 2022, 7, 305–310.

- Etemadifar, M.; Norouzi, M.; Alaei, S.-A.; Karimi, R.; Salari, M. The diagnostic performance of AI-based algorithms to discriminate between NMOSD and MS using MRI features: A systematic review and meta-analysis. Mult. Scler. Relat. Disord. 2024, 105682.

- Tatli, S.; Macin, G.; Tasci, I.; Tasci, B.; Barua, P.D.; Baygin, M.; Tuncer, T.; Dogan, S.; Ciaccio, E.J.; Acharya, U.R. Transfer-transfer model with MSNet: An automated accurate multiple sclerosis and myelitis detection system. Expert Syst. Appl. 2024, 236, 121314.

- Kuchling, J.; Paul, F. Visualizing the central nervous system: Imaging tools for multiple sclerosis and neuromyelitis optica spectrum disorders. Front. Neurol. 2020, 11, 450.

- Duong, M.T.; Rudie, J.D.; Wang, J.; Xie, L.; Mohan, S.; Gee, J.C.; Rauschecker, A.M. Convolutional neural network for automated FLAIR lesion segmentation on clinical brain MR imaging. Am. J. Neuroradiol. 2019, 40, 1282–1290.

- Lee, S.-U.; Kim, H.-J.; Choi, J.-H.; Choi, J.-Y.; Kim, J.-S. Comparison of ocular motor findings between neuromyelitis optica spectrum disorder and multiple sclerosis involving the brainstem and cerebellum. Cerebellum 2019, 18, 511–518.

- Majumder, A.; Veilleux, C.B. Smart Health and Cybersecurity in the Era of Artificial Intelligence; IntechOpen: London, UK, 2021.

- Chakraborty, C.; Nagarajan, S.M.; Devarajan, G.G.; Ramana, T.V.; Mohanty, R. Intelligent AI-based healthcare cyber security system using multi-source transfer learning method. ACM Trans. Sens. Netw. 2023.

- Saura, J.R.; Ribeiro-Soriano, D.; Palacios-Marqués, D. Setting privacy ‘by default’ in social IoT: Theorizing the challenges and directions in Big Data Research. Big Data Res. 2021, 25, 100245.

- Chen, P.Y.; Cheng, Y.C.; Zhong, Z.H.; Zhang, F.Z.; Pai, N.S.; Li, C.M.; Lin, C.H. Information Security and Artificial Intelligence-Assisted Diagnosis in an Internet of Medical Thing System (IoMTS). IEEE Access 2024, 12, 9757–9775.

- Damar, M.; Özen, A.; Yılmaz, A. Cybersecurity in The Health Sector in The Reality of Artificial Intelligence, And Information Security Conceptually. J. AI 2024, 8, 61–82.

- Yanase, J.; Triantaphyllou, E. The seven key challenges for the future of computer-aided diagnosis in medicine. Int. J. Med. Inform. 2019, 129, 413–422.

- Biasin, E.; Kamenjašević, E.; Ludvigsen, K.R. Cybersecurity of AI medical devices: Risks, legislation, and challenges. In Research Handbook on Health, AI and the Law; Edward Elgar Publishing: Cheltenham, UK, 2024; pp. 57–74.

- Rai, H.M.; Tsoy, D.; Daineko, Y. MetaHospital: Implementing robust data security measures for an AI-driven medical diagnosis system. Procedia Comput. Sci. 2024, 241, 476–481.

| Study | Pathology | Modality | Technology | Accuracy | XAI | Dataset |
|---|---|---|---|---|---|---|
| [65] 2024 | Glioma | 23 clinical and molecular/mutation factors | RF, decision trees (DT), logistic regression (LR), K-nearest neighbors (KNN), AdaBoost, support vector machine (SVM), CatBoost, Light Gradient-Boosting Machine (LGBM) classifier, XGBoost, CNN | 88% for XGBoost | SHAP, ELI5, LIME, and QLattice | Glioma Grading Clinical and Mutation Features Dataset: 352 glioblastoma multiforme (GBM) and 487 LGG patients |
| [32] 2023 | AD, cognitively normal, non-Alzheimer’s dementia, uncertain dementia, and others | Clinical, psychological, and MRI segmentation data | RF, LR, DT, MLP, KNN, GB, AdaB, SVM, and Naïve Bayes (NB) | 98.81% | SHAP | OASIS-3 and ADRC clinical data: 4476 NC, 1058 AD, 142 other dementia/non-AD, 505 uncertain, and 43 others |
| [21] 2023 | Brain tumor | MRI | DenseNet201, iterative neighborhood component (INCA) feature selector, SVM | 98.65% and 99.97% for Datasets I and II, respectively | Grad-CAM | Four-class Kaggle brain tumor dataset and three-class Figshare brain tumor dataset |
| [4] 2022 | AD, EMCI, MCI, LMCI | T1w MRI | DT, LGBM, LR, RF, and support vector classifier (SVC) | - | SHAP | ADNI3: 475 subjects, including 300 healthy controls (HC; 254 cognitively normal and 46 significant memory concern) and 175 patients with dementia (70 early MCI, 55 MCI, 34 late MCI, and 16 AD) |
| [66] 2020 | AD | T1w volumetric 3D sagittal magnetization-prepared rapid gradient-echo (MPRAGE) scans | DT and RF | Average 91% | Argumentation-based reasoning framework | ADNI: 144 NC and 69 AD |

| Study | Pathology | Modality | Technology | Accuracy | XAI | Dataset |
|---|---|---|---|---|---|---|
| [98] 2024 | AD | MRI | Transfer learning (TL), vision transformer (ViT) | TL 58%, TL ViT Ensemble 96% | - | ADNI |
| [23] 2024 | Glioma segmentation | Three-dimensional pre-operative multimodal MRI scans including T1w, T1Gd, T2w, and FLAIR | Hybrid vision transformers and CNNs | Dice up to 0.88 | Grad-CAM (TransXAI), post hoc surgeon-understandable heatmaps | BraTS 2019 challenge dataset including 335 training and 125 validation subjects |

| Study | Pathology | Modality | Technology | Accuracy | XAI | Dataset |
|---|---|---|---|---|---|---|
| [13] 2023 | AD | PET and MRI | Modified ResNet18 | 73.90% | - | ADNI: 412 MRIs and 412 PETs |
| [99] 2021 | AD | MRI and gene expression data | CNN, KNN, SVC, XGBoost | 97.6% | LIME | Kaggle: 6400 MRI images; gene data from OASIS-3 and the NCBI database, which contains 104 gene expression data entries from patients |
| [100] 2021 | AD, MCI | 11 modalities: PET, MRI, cognitive scores, genetic, CSF, lab test data, etc. | RF | 93.95% for AD detection and 87.08% for progression prediction | SHAP, with explanations presented in natural language to help physicians understand the predictions | ADNI: 294 cognitively normal, 254 stable MCI, 232 progressive MCI, and 268 AD |


© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Hafeez, Y.; Memon, K.; AL-Quraishi, M.S.; Yahya, N.; Elferik, S.; Ali, S.S.A. Explainable AI in Diagnostic Radiology for Neurological Disorders: A Systematic Review, and What Doctors Think About It. Diagnostics 2025, 15, 168. https://doi.org/10.3390/diagnostics15020168