Artificial Intelligence-Empowered Radiology—Current Status and Critical Review

Abstract

1. Introduction

2. Historical Background

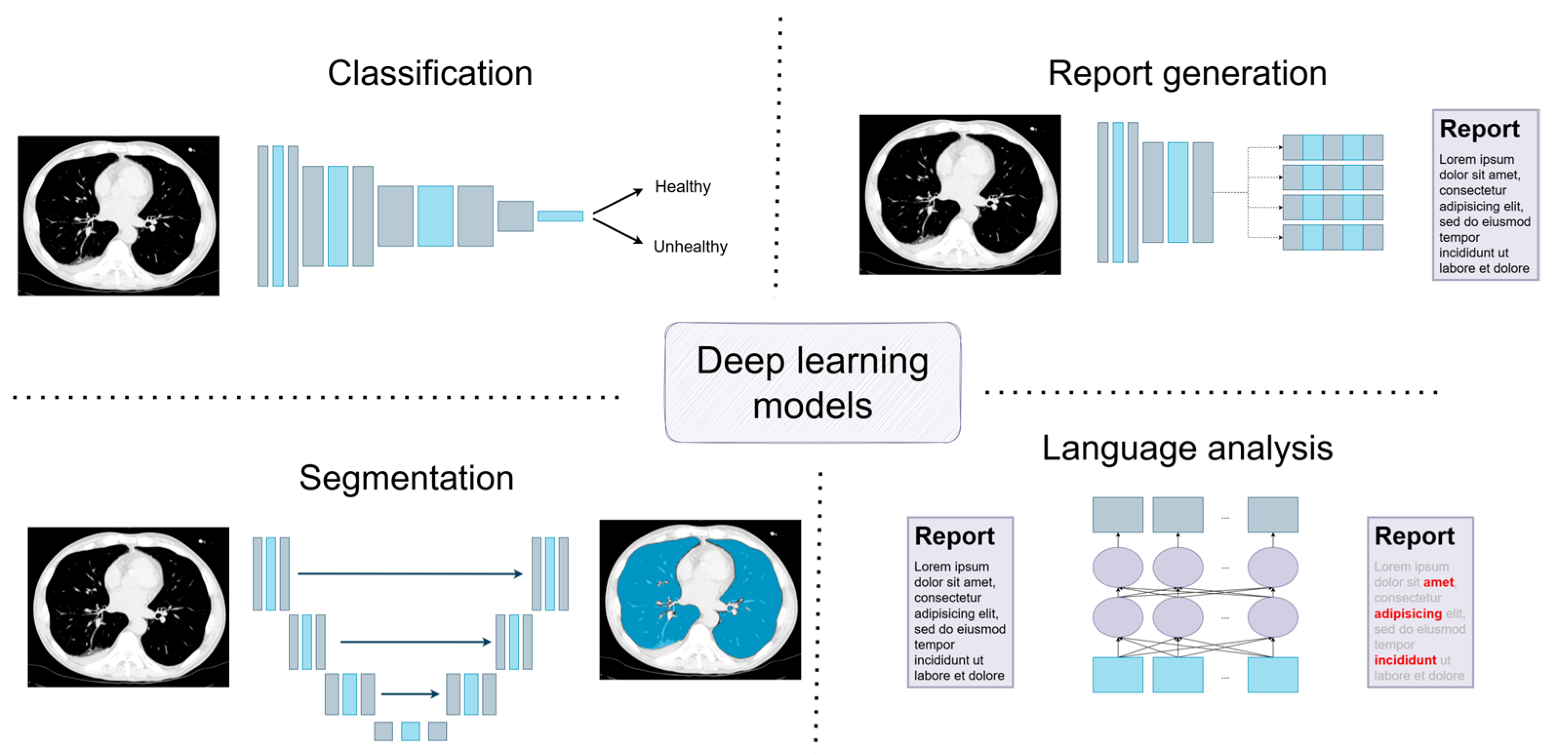

3. Deep Learning Models: A Short Introduction to Current Solutions

3.1. Classification

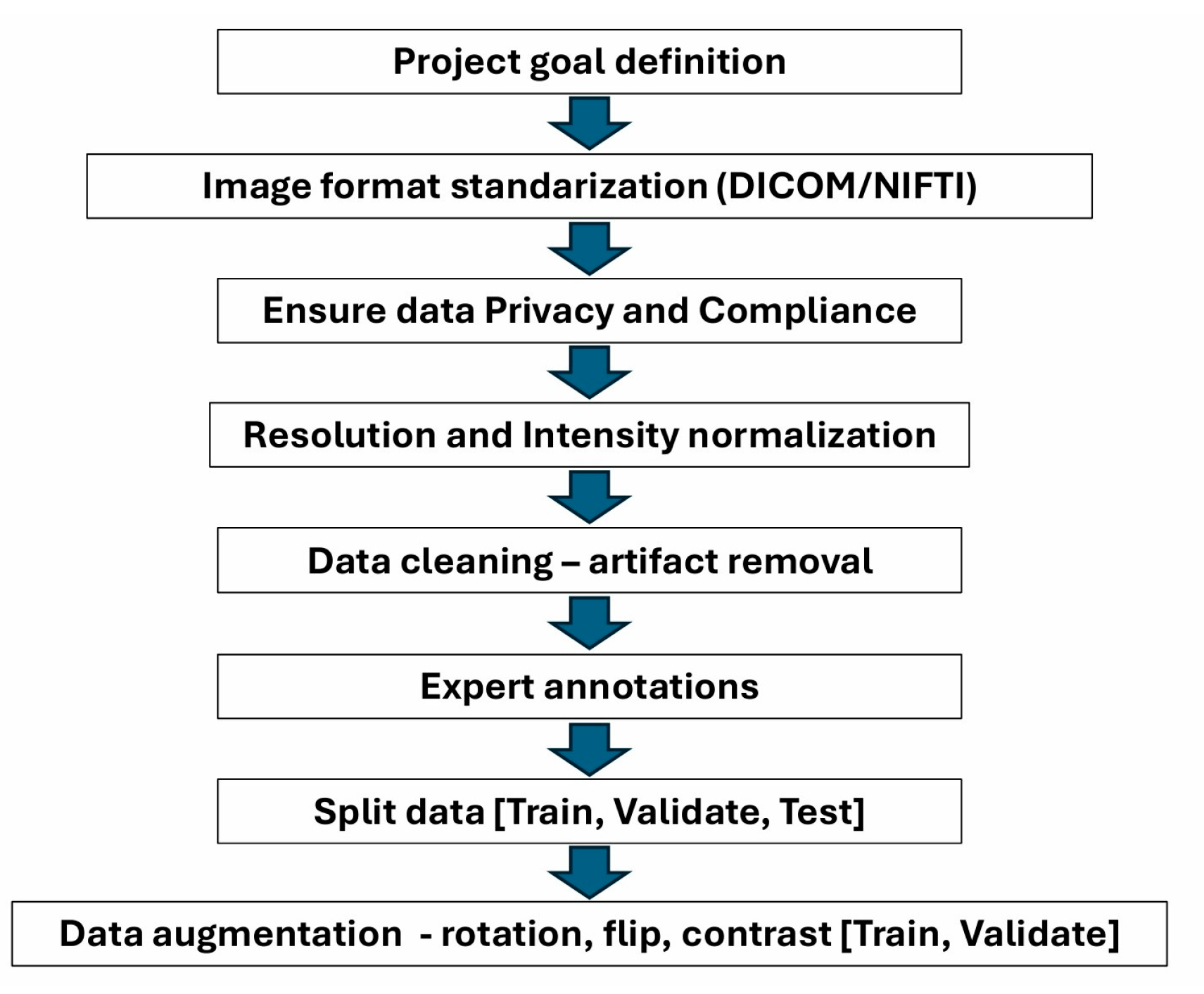

3.2. Segmentation

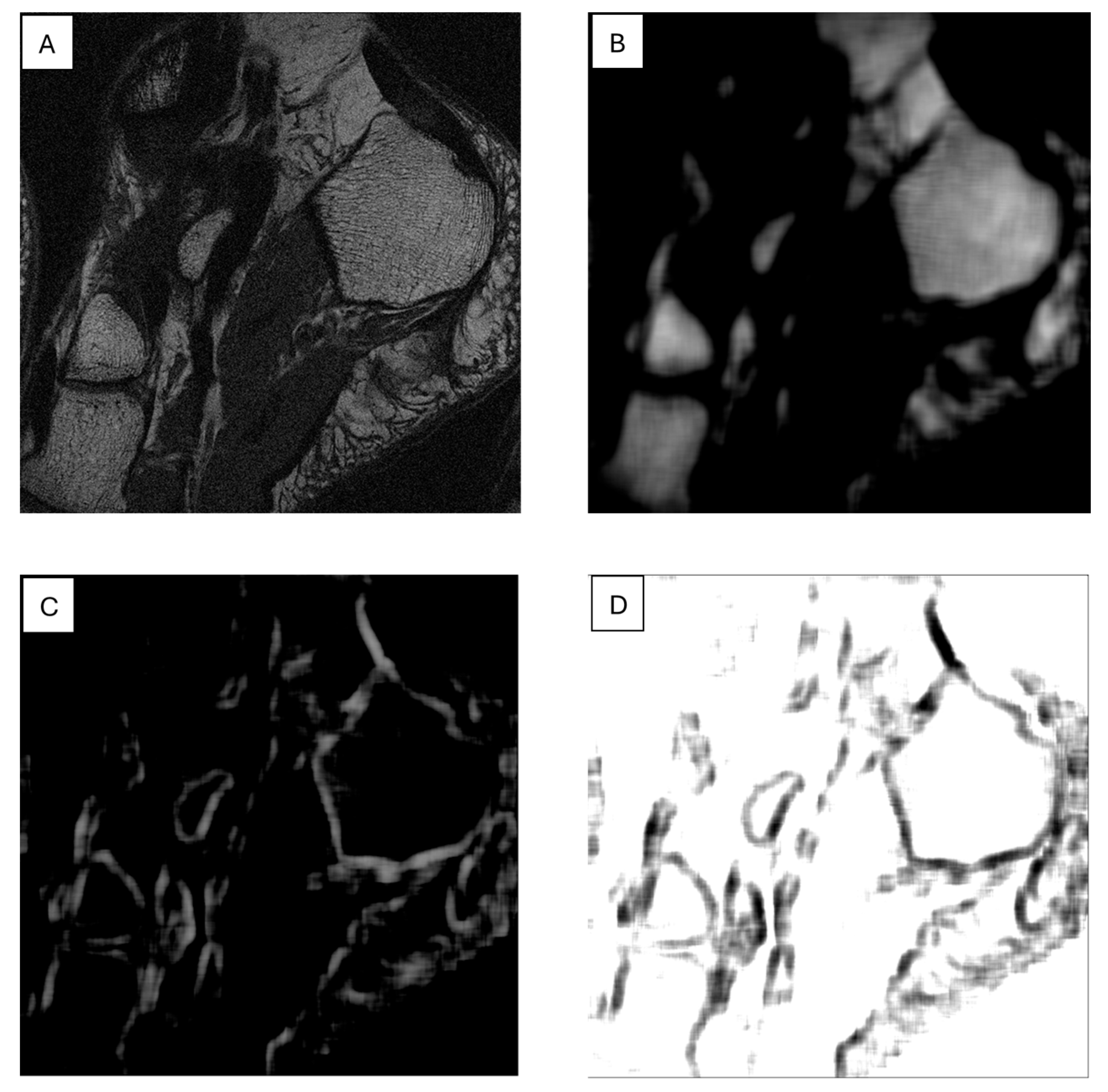

3.3. Report Generation

3.4. Language Analysis

4. Are Machines More Efficient than Human Doctors?

5. Is the Job of a Radiologist at Risk?

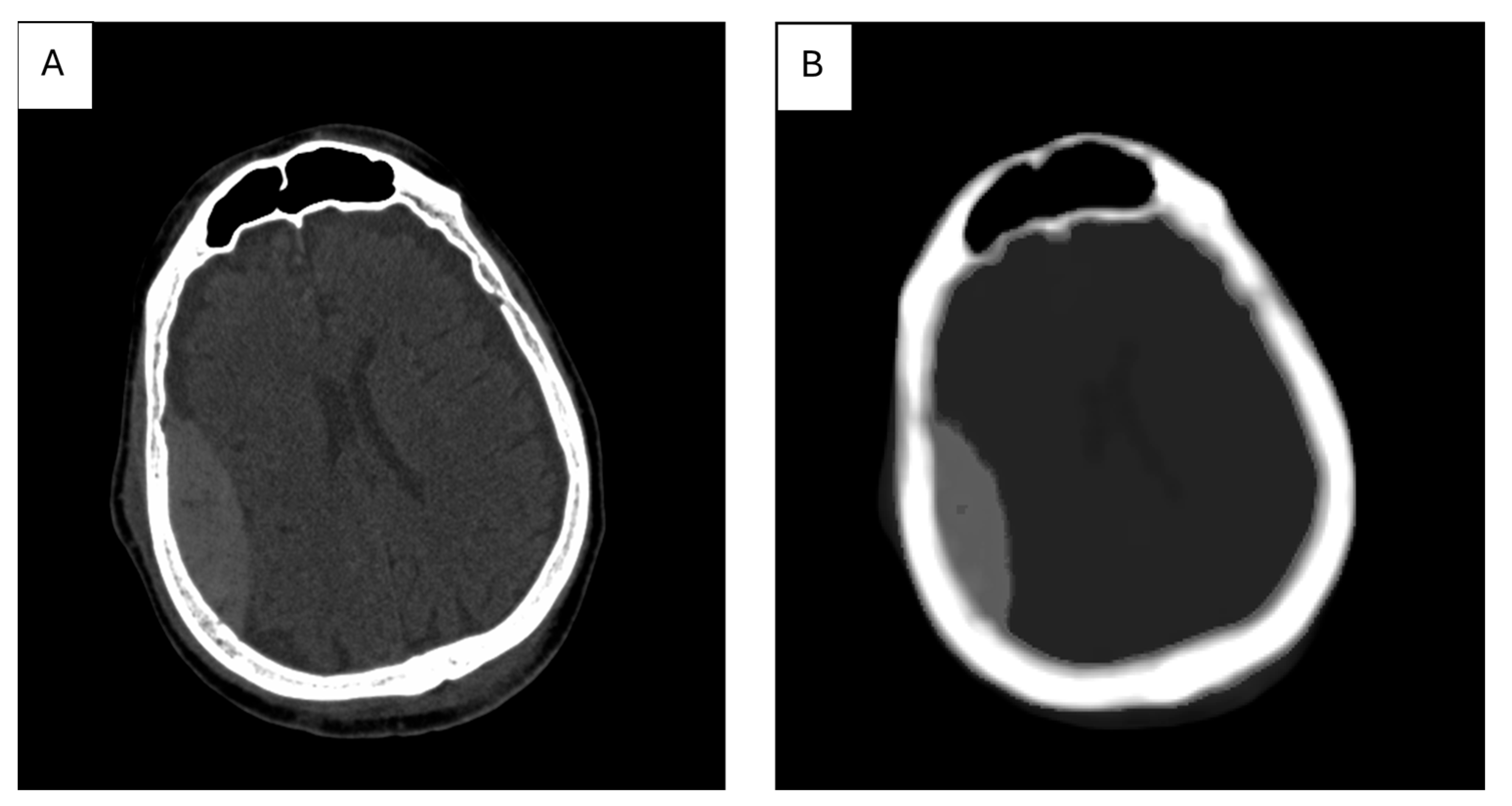

6. Importance of Data Preparation for Processing with AI

7. The Role of Textural Analysis in Image Preprocessing

8. AI Is Supportive but Must Be Used with Caution

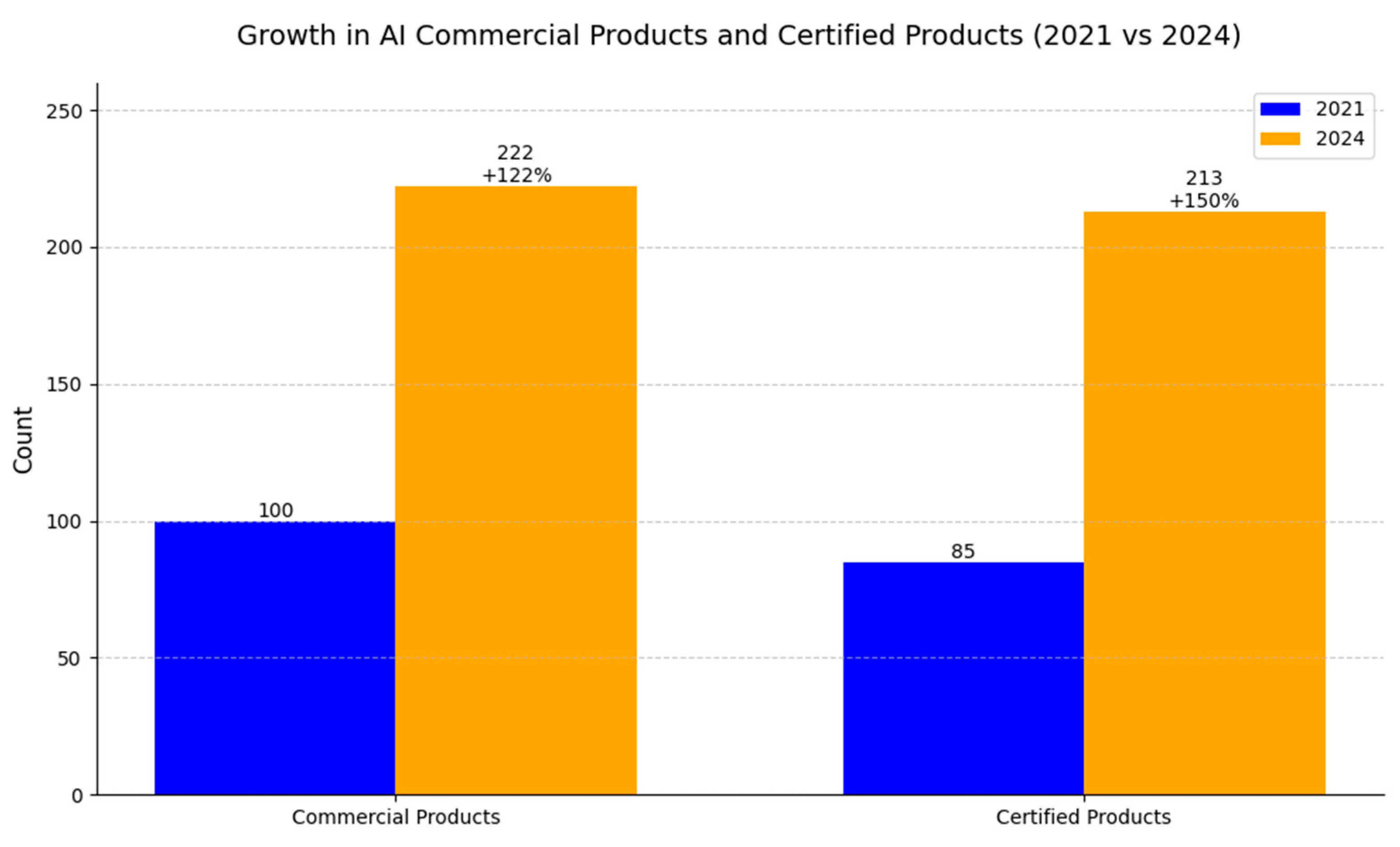

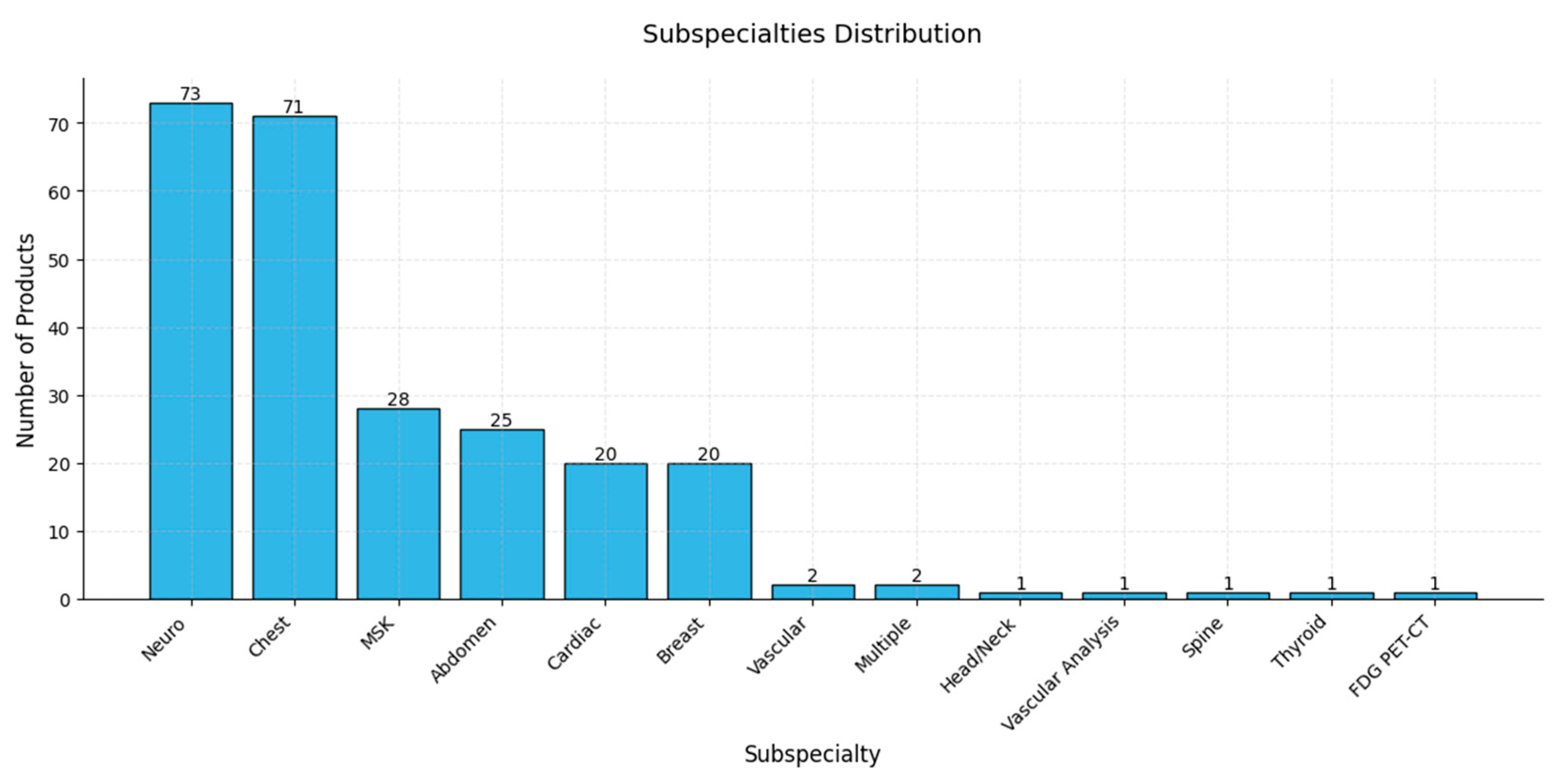

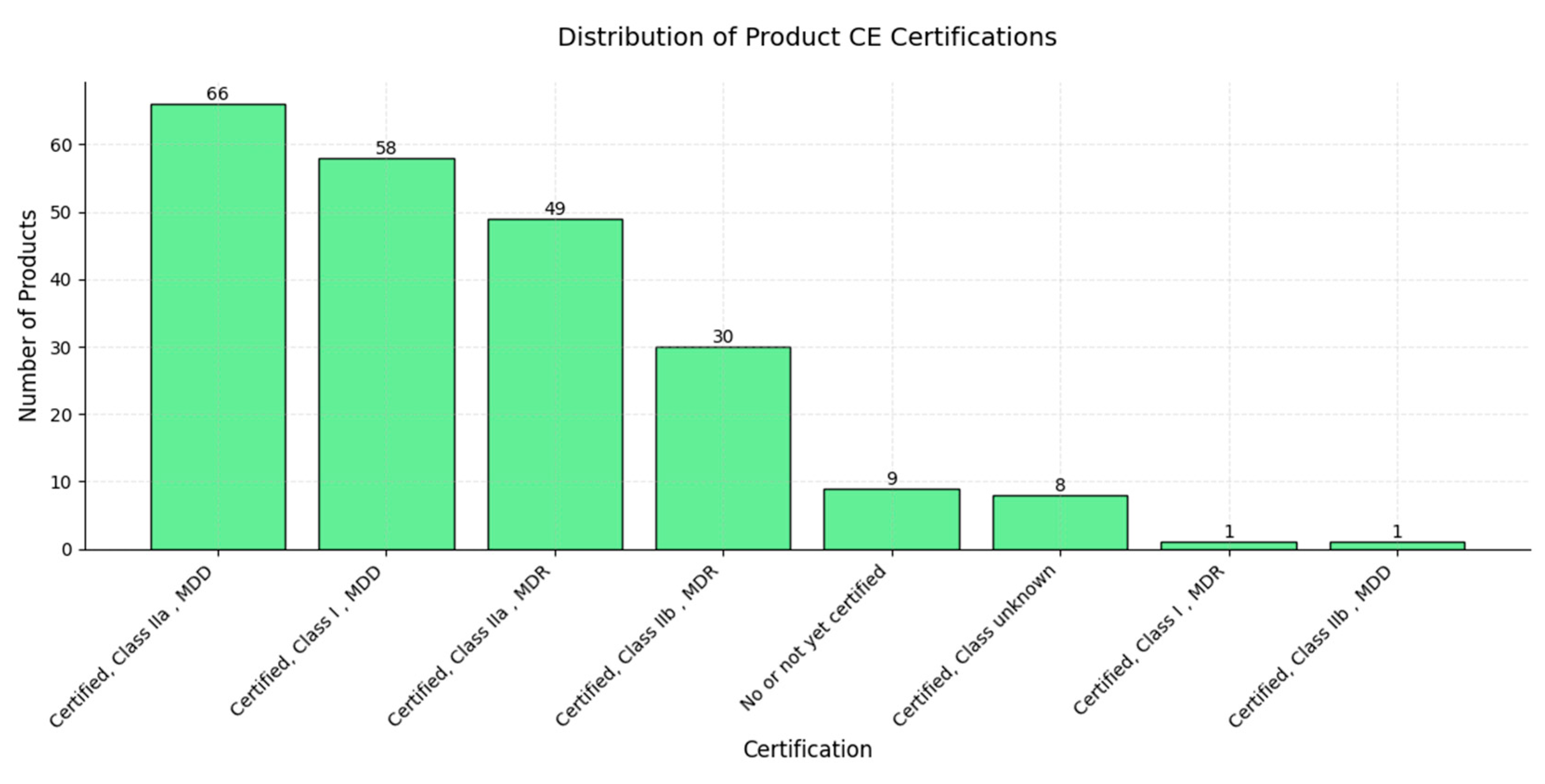

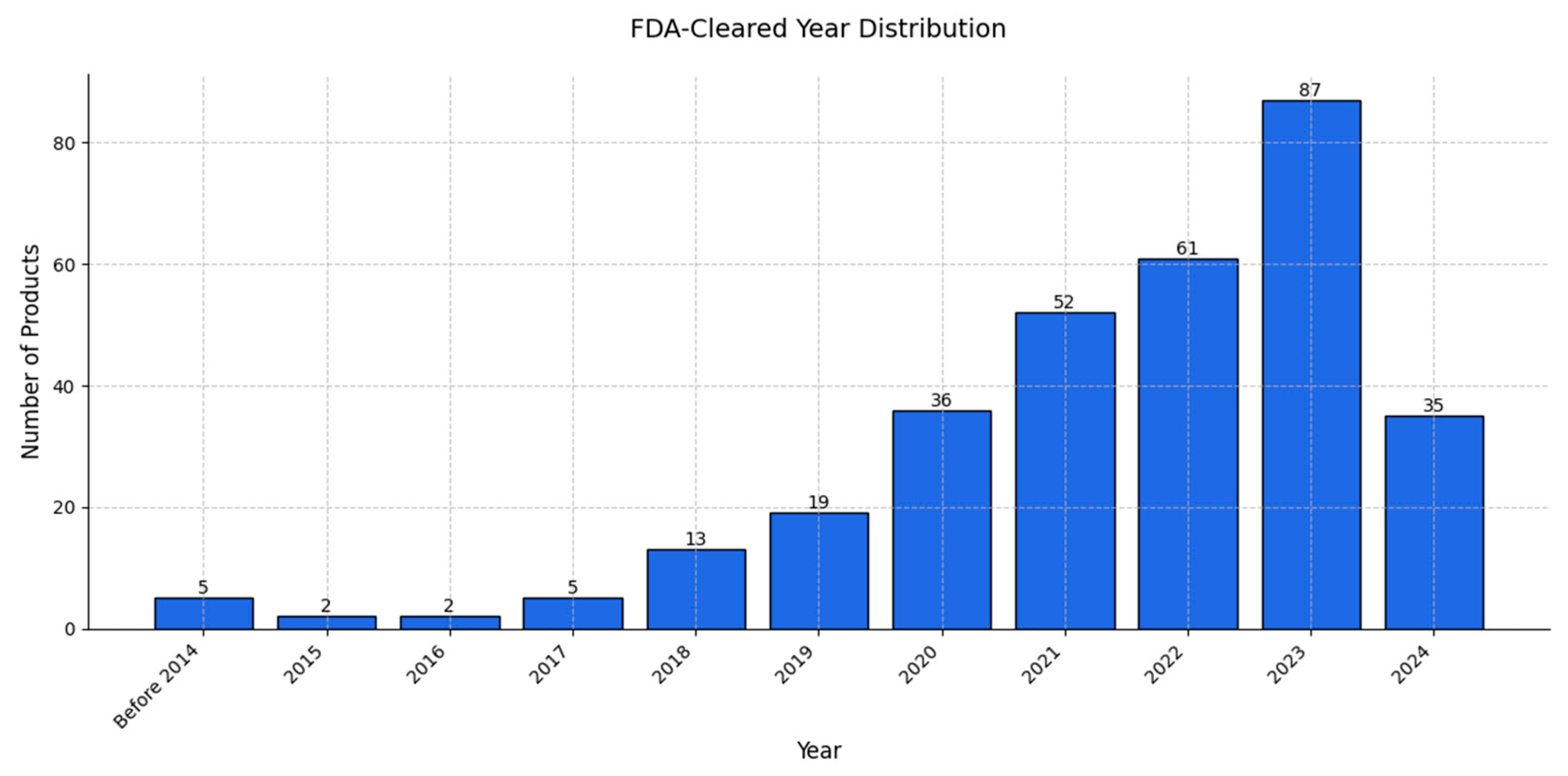

9. Review of AI Products Used in Radiology: Status in 2024

10. Examples of Practical Implementation of AI Models

11. Summary

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest


- Adams, S.J.; Madtes, D.K.; Burbridge, B.; Johnston, J.; Goldberg, I.G.; Siegel, E.L.; Babyn, P.; Nair, V.S.; Calhoun, M.E. Clinical Impact and Generalizability of a Computer-Assisted Diagnostic Tool to Risk-Stratify Lung Nodules With CT. J. Am. Coll. Radiol. 2023, 20, 232–242. [Google Scholar] [CrossRef] [PubMed]

- Asif, A.; Charters, P.F.P.; Thompson, C.A.S.; Komber, H.M.E.I.; Hudson, B.J.; Rodrigues, J.C.L. Artificial Intelligence Can Detect Left Ventricular Dilatation on Contrast-Enhanced Thoracic Computer Tomography Relative to Cardiac Magnetic Resonance Imaging. Br. J. Radiol. 2022, 95, 20210852. [Google Scholar] [CrossRef] [PubMed]

- Arasu, V.A.; Habel, L.A.; Achacoso, N.S.; Buist, D.S.M.; Cord, J.B.; Esserman, L.J.; Hylton, N.M.; Glymour, M.M.; Kornak, J.; Kushi, L.H.; et al. Comparison of Mammography AI Algorithms with a Clinical Risk Model for 5-Year Breast Cancer Risk Prediction: An Observational Study. Radiology 2023, 307, e222733. [Google Scholar] [CrossRef] [PubMed]

- Gräfe, D.; Beeskow, A.B.; Pfäffle, R.; Rosolowski, M.; Chung, T.S.; DiFranco, M.D. Automated Bone Age Assessment in a German Pediatric Cohort: Agreement between an Artificial Intelligence Software and the Manual Greulich and Pyle Method. Eur. Radiol. 2023, 34, 4407–4413. [Google Scholar] [CrossRef]

- Eom, H.J.; Cha, J.H.; Choi, W.J.; Cho, S.M.; Jin, K.; Kim, H.H. Mammographic Density Assessment: Comparison of Radiologists, Automated Volumetric Measurement, and Artificial Intelligence-Based Computer-Assisted Diagnosis. Acta Radiol. 2024, 65, 708–715. [Google Scholar] [CrossRef] [PubMed]

- Habuza, T.; Navaz, A.N.; Hashim, F.; Alnajjar, F.; Zaki, N.; Serhani, M.A.; Statsenko, Y. AI Applications in Robotics, Diagnostic Image Analysis and Precision Medicine: Current Limitations, Future Trends, Guidelines on CAD Systems for Medicine. Inform. Med. Unlocked 2021, 24, 100596. [Google Scholar] [CrossRef]

- Tadavarthi, Y.; Vey, B.; Krupinski, E.; Prater, A.; Gichoya, J.; Safdar, N.; Trivedi, H. The State of Radiology AI: Considerations for Purchase Decisions and Current Market Offerings. Radiol. Artif. Intell. 2020, 2, e200004. [Google Scholar] [CrossRef]

- Wenderott, K.; Krups, J.; Luetkens, J.A.; Gambashidze, N.; Weigl, M. Prospective Effects of an Artificial Intelligence-Based Computer-Aided Detection System for Prostate Imaging on Routine Workflow and Radiologists’ Outcomes. Eur. J. Radiol. 2024, 170, 111252. [Google Scholar] [CrossRef]

- Jacobs, C.; Schreuder, A.; Van Riel, S.J.; Scholten, E.T.; Wittenberg, R.; Wille, M.M.W.; De Hoop, B.; Sprengers, R.; Mets, O.M.; Geurts, B.; et al. Assisted versus Manual Interpretation of Low-Dose CT Scans for Lung Cancer Screening: Impact on Lung-RADS Agreement. Radiol. Imaging Cancer 2021, 3, e200160. [Google Scholar] [CrossRef] [PubMed]

- Jimenez-Pastor, A.; Lopez-Gonzalez, R.; Fos-Guarinos, B.; Garcia-Castro, F.; Wittenberg, M.; Torregrosa-Andrés, A.; Marti-Bonmati, L.; Garcia-Fontes, M.; Duarte, P.; Gambini, J.P.; et al. Automated Prostate Multi-Regional Segmentation in Magnetic Resonance Using Fully Convolutional Neural Networks. Eur. Radiol. 2023, 33, 5087–5096. [Google Scholar] [CrossRef]

- Nampewo, I.; Ariana, P.; Vijayan, S. Benefits of Artificial Intelligence versus Human-Reader in Chest X-Ray Screening for Tuberculosis in the Philippines. Int. J. Health Sci. Res. 2024, 14, 277–287. [Google Scholar] [CrossRef]

- Pemberton, H.G.; Goodkin, O.; Prados, F.; Das, R.K.; Vos, S.B.; Moggridge, J.; Coath, W.; Gordon, E.; Barrett, R.; Schmitt, A.; et al. Automated Quantitative MRI Volumetry Reports Support Diagnostic Interpretation in Dementia: A Multi-Rater, Clinical Accuracy Study. Eur. Radiol. 2021, 31, 5312–5323. [Google Scholar] [CrossRef]

- Van Leeuwen, K.G.; Schalekamp, S.; Rutten, M.J.C.M.; Huisman, M.; Schaefer-Prokop, C.M.; De Rooij, M.; Van Ginneken, B.; Maresch, B.; Geurts, B.H.J.; Van Dijke, C.F.; et al. Comparison of Commercial AI Software Performance for Radiograph Lung Nodule Detection and Bone Age Prediction. Radiology 2024, 310, e230981. [Google Scholar] [CrossRef] [PubMed]

- Cooper, R.G. The AI Transformation of Product Innovation. Ind. Mark. Manag. 2024, 119, 62–74. [Google Scholar] [CrossRef]

- Gao, S.; Xu, Z.; Kang, W.; Lv, X.; Chu, N.; Xu, S.; Hou, D. Artificial Intelligence-Driven Computer Aided Diagnosis System Provides Similar Diagnosis Value Compared with Doctors’ Evaluation in Lung Cancer Screening. BMC Med. Imaging 2024, 24, 141. [Google Scholar] [CrossRef] [PubMed]

- Savage, C.H.; Elkassem, A.A.; Hamki, O.; Sturdivant, A.; Benson, D.; Grumley, S.; Tzabari, J.; Junck, K.; Li, Y.; Li, M.; et al. Prospective Evaluation of Artificial Intelligence Triage of Incidental Pulmonary Emboli on Contrast-Enhanced CT Examinations of the Chest or Abdomen. Am. J. Roentgenol. 2024, 223, e2431067. [Google Scholar] [CrossRef]

- Rothenberg, S.A.; Savage, C.H.; Abou Elkassem, A.; Singh, S.; Abozeed, M.; Hamki, O.; Junck, K.; Tridandapani, S.; Li, M.; Li, Y.; et al. Prospective Evaluation of AI Triage of Pulmonary Emboli on CT Pulmonary Angiograms. Radiology 2023, 309, e230702. [Google Scholar] [CrossRef]

- Chien, H.-W.C.; Yang, T.-L.; Juang, W.-C.; Chen, Y.-Y.A.; Li, Y.-C.J.; Chen, C.-Y. Pilot Report for Intracranial Hemorrhage Detection with Deep Learning Implanted Head Computed Tomography Images at Emergency Department. J. Med. Syst. 2022, 46, 49. [Google Scholar] [CrossRef] [PubMed]

- Schaffter, T.; Buist, D.S.M.; Lee, C.I.; Nikulin, Y.; Ribli, D.; Guan, Y.; Lotter, W.; Jie, Z.; Du, H.; Wang, S.; et al. Evaluation of Combined Artificial Intelligence and Radiologist Assessment to Interpret Screening Mammograms. JAMA Netw. Open 2020, 3, e200265. [Google Scholar] [CrossRef] [PubMed]

- Lauri, C.; Shimpo, F.; Sokołowski, M.M. Artificial Intelligence and Robotics on the Frontlines of the Pandemic Response: The Regulatory Models for Technology Adoption and the Development of Resilient Organisations in Smart Cities. J. Ambient. Intell. Human. Comput. 2023, 14, 14753–14764. [Google Scholar] [CrossRef] [PubMed]

- Justo-Hanani, R. The Politics of Artificial Intelligence Regulation and Governance Reform in the European Union. Policy Sci. 2022, 55, 137–159. [Google Scholar] [CrossRef]

- Smuha, N.A. From a ‘Race to AI’ to a ‘Race to AI Regulation’: Regulatory Competition for Artificial Intelligence. Law Innov. Technol. 2021, 13, 57–84. [Google Scholar] [CrossRef]

- Tanguay, W.; Acar, P.; Fine, B.; Abdolell, M.; Gong, B.; Cadrin-Chênevert, A.; Chartrand-Lefebvre, C.; Chalaoui, J.; Gorgos, A.; Chin, A.S.-L.; et al. Assessment of Radiology Artificial Intelligence Software: A Validation and Evaluation Framework. Can. Assoc. Radiol. J. 2023, 74, 326–333. [Google Scholar] [CrossRef] [PubMed]

- Wimpfheimer, O.; Kimmel, Y. Artificial Intelligence in Medical Imaging: An Overview of a Decade of Experience. Isr. Med. Assoc. J. 2024, 26, 122–125. [Google Scholar]

- Mehta, S.S. Commercializing Successful Biomedical Technologies: Basic Principles for the Development of Drugs, Diagnostics and Devices; Cambridge University Press: Cambridge, NY, USA, 2008; ISBN 978-0-521-87098-6. [Google Scholar]

- Wu, K.; Wu, E.; Theodorou, B.; Liang, W.; Mack, C.; Glass, L.; Sun, J.; Zou, J. Characterizing the Clinical Adoption of Medical AI through U.S. Insurance Claims. NEJM AI 2023, 1, AIoa2300030. [Google Scholar] [CrossRef]

- Fornell, D. FDA Has Now Cleared 700 AI Healthcare Algorithms, More Than 76% in Radiology. Health Imaging. 2023. Available online: https://healthimaging.com/topics/artificial-intelligence/fda-has-now-cleared-700-ai-healthcare-algorithms-more-76-radiology (accessed on 1 December 2024).

- MarketsandMarkets Artificial Intelligence (AI) in Healthcare Market by Offering (Hardware, Software, Services), Technology (Machine Learning, NLP, Context-Aware Computing, Computer Vision), Application, End User and Region—Global Forecast to 2028. 2023. Available online: https://www.marketsandmarkets.com/Market-Reports/ai-toolkit-market-252755052.html (accessed on 30 November 2024).

- Varghese, J. Artificial Intelligence in Medicine: Chances and Challenges for Wide Clinical Adoption. Visc. Med. 2020, 36, 443–449. [Google Scholar] [CrossRef]

- Chau, M. Ethical, Legal, and Regulatory Landscape of Artificial Intelligence in Australian Healthcare and Ethical Integration in Radiography: A Narrative Review. J. Med. Imaging Radiat. Sci. 2024, 55, 101733. [Google Scholar] [CrossRef] [PubMed]

- Zhu, S.; Gilbert, M.; Chetty, I.; Siddiqui, F. The 2021 Landscape of FDA-Approved Artificial Intelligence/Machine Learning-Enabled Medical Devices: An Analysis of the Characteristics and Intended Use. Int. J. Med. Inform. 2022, 165, 104828. [Google Scholar] [CrossRef] [PubMed]

- Ibragimov, B.; Arzamasov, K.; Maksudov, B.; Kiselev, S.; Mongolin, A.; Mustafaev, T.; Ibragimova, D.; Evteeva, K.; Andreychenko, A.; Morozov, S. A 178-Clinical-Center Experiment of Integrating AI Solutions for Lung Pathology Diagnosis. Sci. Rep. 2023, 13, 1135. [Google Scholar] [CrossRef]

- Robert, D.; Sathyamurthy, S.; Singh, A.K.; Matta, S.A.; Tadepalli, M.; Tanamala, S.; Bosemani, V.; Mammarappallil, J.; Kundnani, B. Effect of Artificial Intelligence as a Second Reader on the Lung Nodule Detection and Localization Accuracy of Radiologists and Non-Radiology Physicians in Chest Radiographs: A Multicenter Reader Study. Acad. Radiol. 2024, in press. [Google Scholar] [CrossRef]

- Saha, A.; Bosma, J.S.; Twilt, J.J.; van Ginneken, B.; Bjartell, A.; Padhani, A.R.; Bonekamp, D.; Villeirs, G.; Salomon, G.; Giannarini, G.; et al. Artificial Intelligence and Radiologists in Prostate Cancer Detection on MRI (PI-CAI): An International, Paired, Non-Inferiority, Confirmatory Study. Lancet Oncol. 2024, 25, 879–887. [Google Scholar] [CrossRef]

- Wang, T.-W.; Hsu, M.-S.; Lee, W.-K.; Pan, H.-C.; Yang, H.-C.; Lee, C.-C.; Wu, Y.-T. Brain Metastasis Tumor Segmentation and Detection Using Deep Learning Algorithms: A Systematic Review and Meta-Analysis. Radiother. Oncol. 2024, 190, 110007. [Google Scholar] [CrossRef]

- Lu, S.-L.; Xiao, F.-R.; Cheng, J.C.-H.; Yang, W.-C.; Cheng, Y.-H.; Chang, Y.-C.; Lin, J.-Y.; Liang, C.-H.; Lu, J.-T.; Chen, Y.-F.; et al. Randomized Multi-Reader Evaluation of Automated Detection and Segmentation of Brain Tumors in Stereotactic Radiosurgery with Deep Neural Networks. Neuro Oncol. 2021, 23, 1560–1568. [Google Scholar] [CrossRef] [PubMed]

- Salehi, M.A.; Mohammadi, S.; Harandi, H.; Zakavi, S.S.; Jahanshahi, A.; Shahrabi Farahani, M.; Wu, J.S. Diagnostic Performance of Artificial Intelligence in Detection of Primary Malignant Bone Tumors: A Meta-Analysis. J. Imaging Inform. Med. 2024, 37, 766–777. [Google Scholar] [CrossRef]

- Bachmann, R.; Gunes, G.; Hangaard, S.; Nexmann, A.; Lisouski, P.; Boesen, M.; Lundemann, M.; Baginski, S.G. Improving Traumatic Fracture Detection on Radiographs with Artificial Intelligence Support: A Multi-Reader Study. BJR|Open 2023, 6, tzae011. [Google Scholar] [CrossRef]

- Jalal, S.; Parker, W.; Ferguson, D.; Nicolaou, S. Exploring the Role of Artificial Intelligence in an Emergency and Trauma Radiology Department. Can. Assoc. Radiol. J. 2021, 72, 167–174. [Google Scholar] [CrossRef]

- Ketola, J.H.J.; Inkinen, S.I.; Mäkelä, T.; Syväranta, S.; Peltonen, J.; Kaasalainen, T.; Kortesniemi, M. Testing Process for Artificial Intelligence Applications in Radiology Practice. Phys. Med. 2024, 128, 104842. [Google Scholar] [CrossRef]

| Factor | Radiologists | AI Models |
|---|---|---|
| Data Processing Volume | Moderate | High |
| Connections (Trillions) | 80 | 3 |
| Adaptability | High | Low |
| Perception of Patterns | High | Moderate |
| Consistency | Moderate | High |
| Speed of Analysis | Moderate | High |
| Fatigue Resistance | No | Yes |
| Bias Resistance | No | Yes |
| Training Techniques Required | No | Yes |

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Obuchowicz, R.; Lasek, J.; Wodziński, M.; Piórkowski, A.; Strzelecki, M.; Nurzynska, K. Artificial Intelligence-Empowered Radiology—Current Status and Critical Review. Diagnostics 2025, 15, 282. https://doi.org/10.3390/diagnostics15030282
