Integrating Machine Learning and Deep Learning for Predicting Non-Surgical Root Canal Treatment Outcomes Using Two-Dimensional Periapical Radiographs

Abstract

1. Introduction

2. Study Objectives

3. Materials and Methods

3.1. Sample Selection

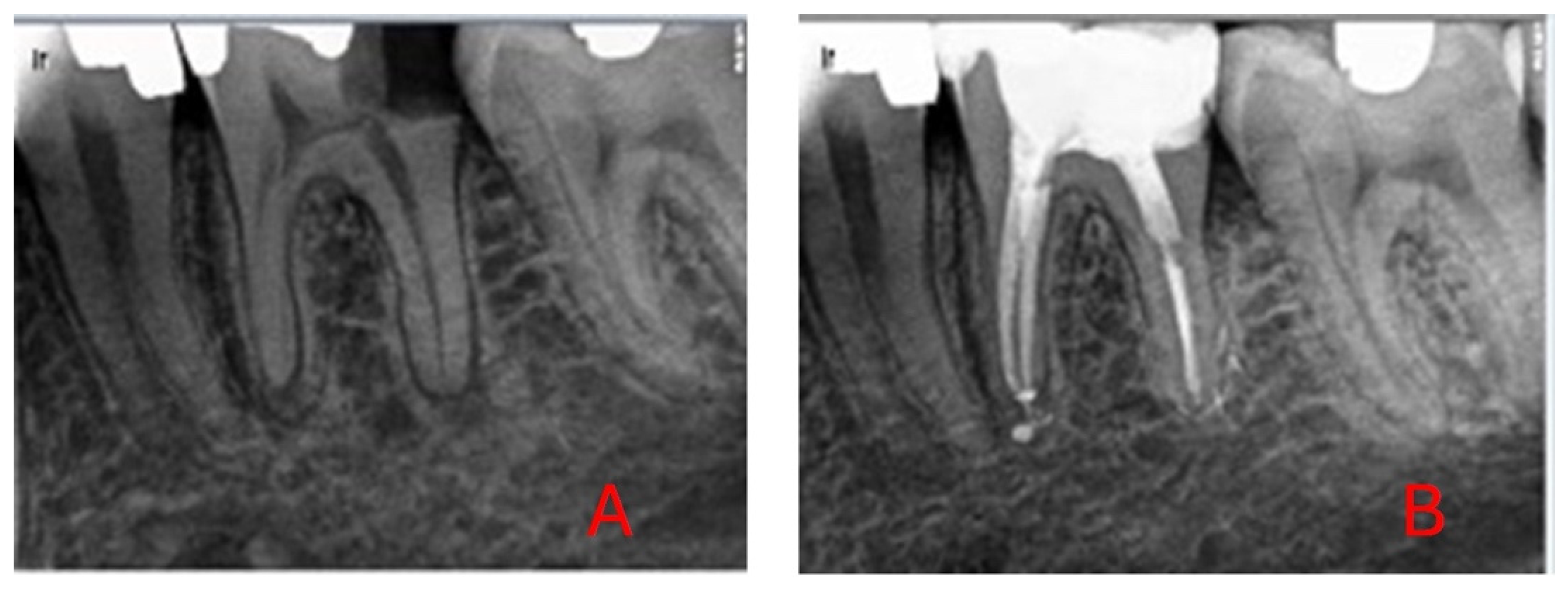

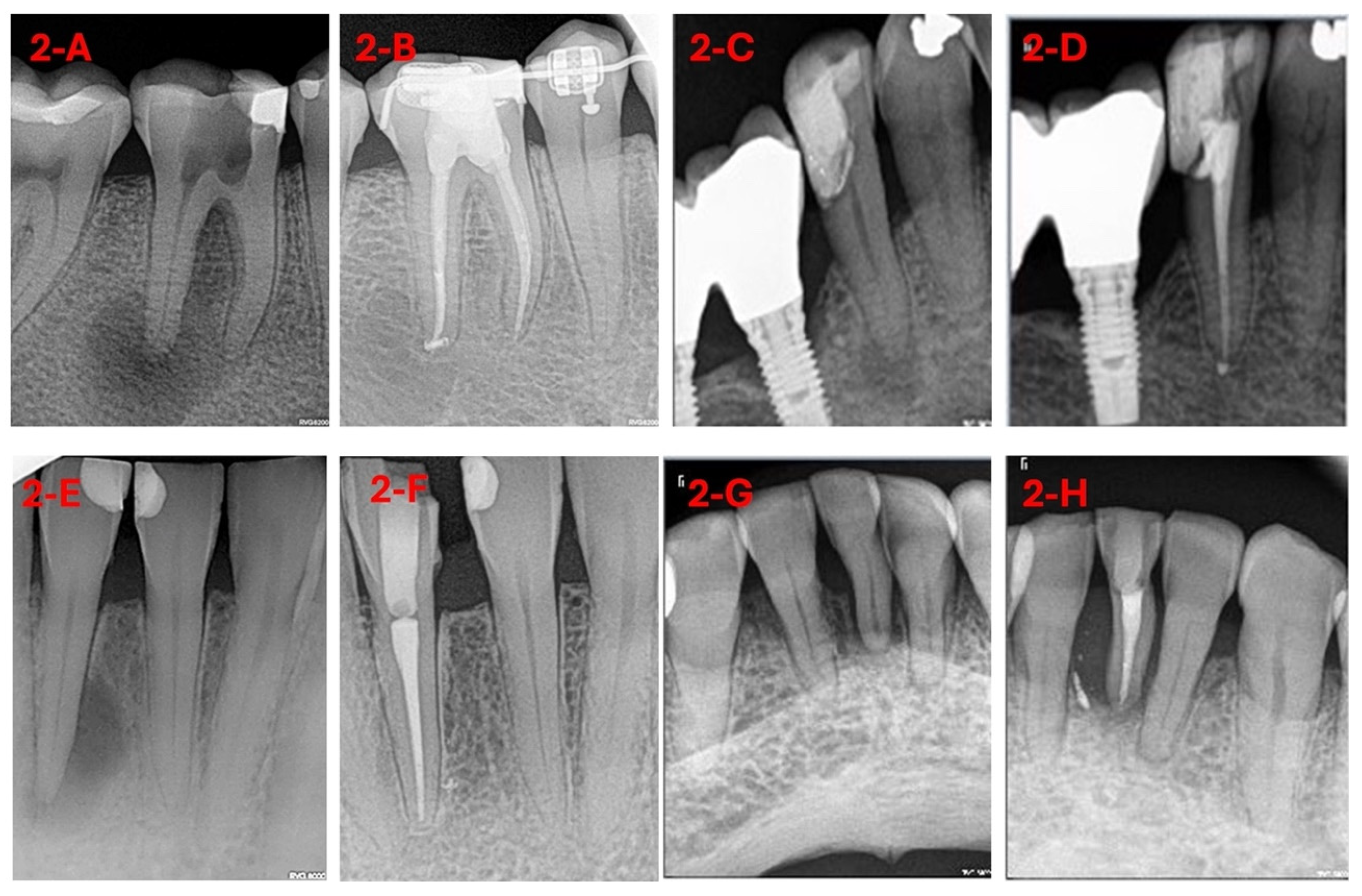

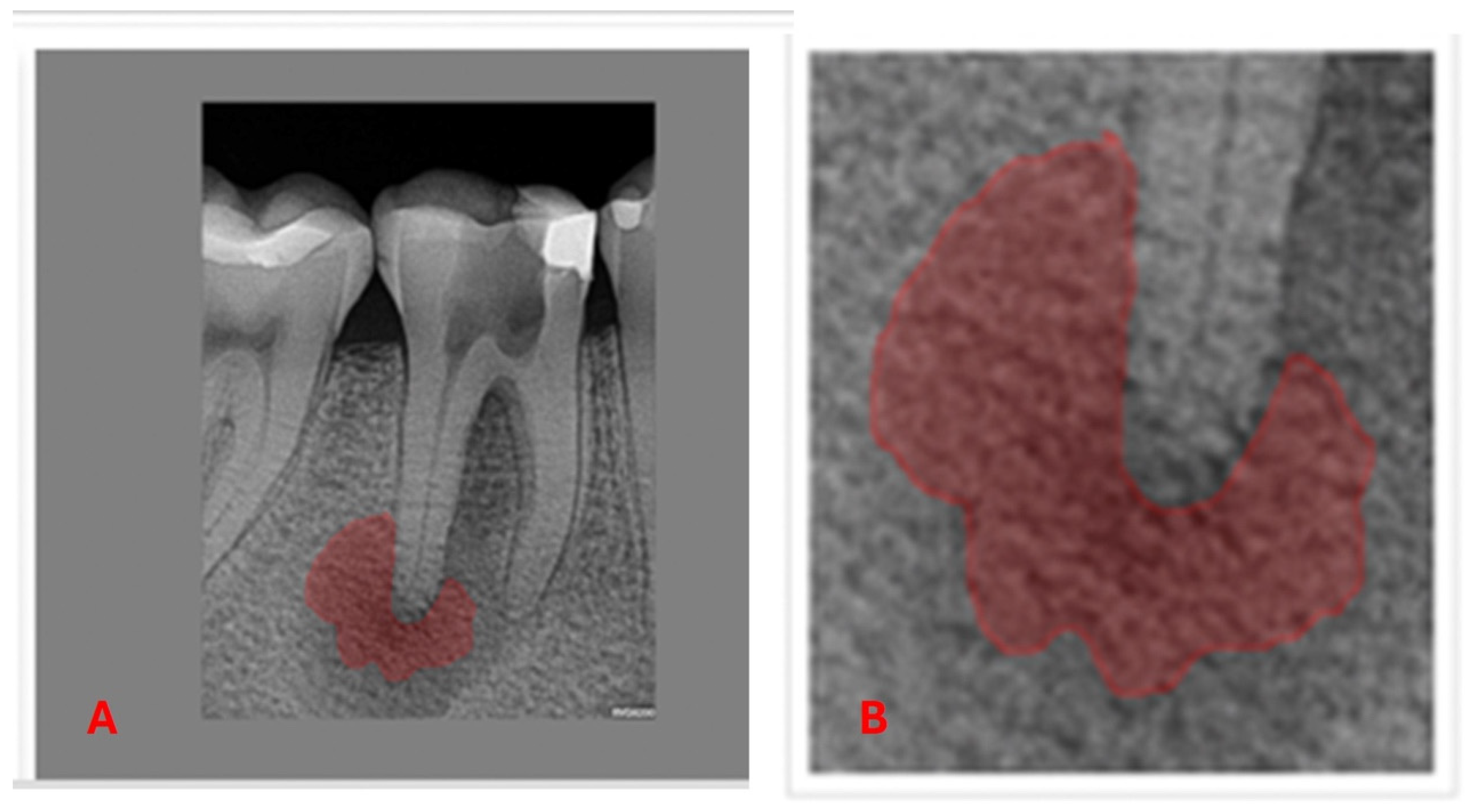

3.2. Intervention Procedure

3.3. Machine Learning and Deep Learning Analysis

3.4. Statistical Analysis

- Comparison between the best ML model from the previous study [26], random forest (RF), and the combined DL and best-performing ML model (RF vs. DL-LR).

- Comparison between random forest and DL in general (RF vs. DL).

- Comparison between the clinical professional’s prediction (DP) and the combined DL and best-performing ML model (DP vs. DL-LR).

- Comparison between the clinical professional’s prediction (DP) and the DL model by itself (DP vs. DL).

- Comparison between the DL model by itself and the combined DL and best-performing ML model (DL vs. DL-LR).

4. Results

4.1. Comparison Between Random Forest (RF) and the Deep Learning–Logistic Regression Model (DL-LR)

4.2. Comparison Between Random Forest and Deep Learning in General (RF vs. DL)

4.3. Comparison Between the Clinical Professional’s Prediction (DP) and Deep Learning–Logistic Regression (DP vs. DL-LR)

4.4. Comparison Between the Clinical Professional’s Prediction (DP) and Deep Learning (DL) (DP vs. DL)

4.5. Comparison Between Deep Learning and the Combined Logistic Regression–Deep Learning Model (DL vs. DL-LR)

4.6. Interpretation of Statistical Comparisons

5. Discussion

6. Conclusions

7. Limitations

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest

References

- Samuel, A.L. Some Studies in Machine Learning Using the Game of Checkers. IBM J. Res. Dev. 1959, 44, 206–226.

- Hwang, J.-J.; Jung, Y.-H.; Cho, B.-H.; Heo, M.-S. An overview of deep learning in the field of dentistry. Imaging Sci. Dent. 2019, 49, 1–7.

- Litjens, G.; Kooi, T.; Bejnordi, B.E.; Setio, A.A.A.; Ciompi, F.; Ghafoorian, M.; van der Laak, J.A.W.M.; van Ginneken, B.; Sánchez, C.I. A survey on deep learning in medical image analysis. Med. Image Anal. 2017, 42, 60–88.

- Angermueller, C.; Pärnamaa, T.; Parts, L.; Stegle, O. Deep learning for computational biology. Mol. Syst. Biol. 2016, 12, 878.

- Anwar, S.M.; Majid, M.; Qayyum, A.; Awais, M.; Alnowami, M.; Khan, M.K. Medical Image Analysis using Convolutional Neural Networks: A Review. J. Med. Syst. 2018, 42, 226.

- Lin, P.L.; Huang, P.Y.; Huang, P.W. Automatic methods for alveolar bone loss degree measurement in periodontitis periapical radiographs. Comput. Methods Programs Biomed. 2017, 148, 1–11.

- Ekert, T.; Krois, J.; Meinhold, L.; Elhennawy, K.; Emara, R.; Golla, T.; Schwendicke, F. Deep Learning for the Radiographic Detection of Apical Lesions. J. Endod. 2019, 45, 917–922.e5.

- Wang, X.; Cai, B.; Cao, Y.; Zhou, C.; Yang, L.; Liu, R.; Long, X.; Wang, W.; Gao, D.; Bao, B. Objective method for evaluating orthodontic treatment from the lay perspective: An eye-tracking study. Am. J. Orthod. Dentofac. Orthop. 2016, 150, 601–610.

- Alarifi, A.; AlZubi, A.A. Memetic Search Optimization Along with Genetic Scale Recurrent Neural Network for Predictive Rate of Implant Treatment. J. Med. Syst. 2018, 42, 202.

- Gehlot, P.M.; Sudeep, P.; Murali, B.; Mariswamy, A.B. Artificial intelligence in endodontics: A narrative review. J. Int. Oral Health 2023, 15, 134–141.

- Aminoshariae, A.; Kulild, J.; Nagendrababu, V. Artificial Intelligence in Endodontics: Current Applications and Future Directions. J. Endod. 2021, 47, 1352–1357.

- Ourang, S.A.; Sohrabniya, F.; Mohammad-Rahimi, H.; Dianat, O.; Aminoshariae, A.; Nagendrababu, V.; Dummer, P.M.H.; Duncan, H.F.; Nosrat, A. Artificial intelligence in endodontics: Fundamental principles, workflow, and tasks. Int. Endod. J. 2024, 57, 1546–1565.

- Khanagar, S.B.; Alfadley, A.; Alfouzan, K.; Awawdeh, M.; Alaqla, A.; Jamleh, A. Developments and Performance of Artificial Intelligence Models Designed for Application in Endodontics: A Systematic Review. Diagnostics 2023, 13, 414.

- Asiri, A.F.; Altuwalah, A.S. The role of neural artificial intelligence for diagnosis and treatment planning in endodontics: A qualitative review. Saudi Dent. J. 2022, 34, 270–281.

- Karobari, M.I.; Adil, A.H.; Basheer, S.N.; Murugesan, S.; Savadamoorthi, K.S.; Mustafa, M.; Abdulwahed, A.; Almokhatieb, A.A. Evaluation of the Diagnostic and Prognostic Accuracy of Artificial Intelligence in Endodontic Dentistry: A Comprehensive Review of Literature. Comput. Math. Methods Med. 2023, 2023, 7049360.

- Khanagar, S.B.; Al-ehaideb, A.; Maganur, P.C.; Vishwanathaiah, S.; Patil, S.; Baeshen, H.A.; Sarode, S.C.; Bhandi, S. Developments, application, and performance of artificial intelligence in dentistry—A systematic review. J. Dent. Sci. 2021, 16, 508–522.

- Machoy, M.E.; Szyszka-Sommerfeld, L.; Vegh, A.; Gedrange, T.; Woźniak, K. The ways of using machine learning in dentistry. Adv. Clin. Exp. Med. 2020, 29, 375–384.

- Zhang, Y.; Weng, Y.; Lund, J. Applications of Explainable Artificial Intelligence in Diagnosis and Surgery. Diagnostics 2022, 12, 237.

- Pethani, F. Promises and perils of artificial intelligence in dentistry. Aust. Dent. J. 2021, 66, 124–135.

- Schwendicke, F.; Samek, W.; Krois, J. Artificial Intelligence in Dentistry: Chances and Challenges. J. Dent. Res. 2020, 99, 769–774.

- Alasqah, M.; Alotaibi, F.D.; Gufran, K. The Radiographic Assessment of Furcation Area in Maxillary and Mandibular First Molars while Considering the New Classification of Periodontal Disease. Healthcare 2022, 10, 1464.

- Basrani, B. Endodontic Radiology, 2nd ed.; Wiley-Blackwell: Hoboken, NJ, USA, 2012; pp. 36–38.

- Azarpazhooh, A.; Khazaei, S.; Jafarzadeh, H.; Malkhassian, G.; Sgro, A.; Elbarbary, M.; Cardoso, E.; Oren, A.; Kishen, A.; Shah, P.S. A Scoping Review of Four Decades of Outcomes in Nonsurgical Root Canal Treatment, Nonsurgical Retreatment, and Apexification Studies: Part 3—A Proposed Framework for Standardized Data Collection and Reporting of Endodontic Outcome Studies. J. Endod. 2022, 48, 40–54.

- Azarpazhooh, A.; Sgro, A.; Cardoso, E.; Elbarbary, M.; Lighvan, N.L.; Badewy, R.; Malkhassian, G.; Jafarzadeh, H.; Bakhtiar, H.; Khazaei, S.; et al. A Scoping Review of 4 Decades of Outcomes in Nonsurgical Root Canal Treatment, Nonsurgical Retreatment, and Apexification Studies—Part 2: Outcome Measures. J. Endod. 2022, 48, 29–39.

- Azarpazhooh, A.; Cardoso, E.; Sgro, A.; Elbarbary, M.; Lighvan, N.L.; Badewy, R.; Malkhassian, G.; Jafarzadeh, H.; Bakhtiar, H.; Khazaei, S.; et al. A Scoping Review of 4 Decades of Outcomes in Nonsurgical Root Canal Treatment, Nonsurgical Retreatment, and Apexification Studies—Part 1: Process and General Results. J. Endod. 2022, 48, 15–28.

- Bennasar, C.; García, I.; Gonzalez-Cid, Y.; Pérez, F.; Jiménez, J. Second Opinion for Non-Surgical Root Canal Treatment Prognosis Using Machine Learning Models. Diagnostics 2023, 13, 2742.

- Lee, J.; Seo, H.; Choi, Y.J.; Lee, C.; Kim, S.; Lee, Y.S.; Lee, S.; Kim, E. An Endodontic Forecasting Model Based on the Analysis of Preoperative Dental Radiographs: A Pilot Study on an Endodontic Predictive Deep Neural Network. J. Endod. 2023, 49, 710–719.

- Li, S.; Liu, J.; Zhou, Z.; Zhou, Z.; Wu, X.; Li, Y.; Wang, S.; Liao, W.; Ying, S.; Zhao, Z. Artificial intelligence for caries and periapical periodontitis detection. J. Dent. 2022, 122, 104107.

- Panyarak, W.; Wantanajittikul, K.; Suttapak, W.; Charuakkra, A.; Prapayasatok, S. Feasibility of deep learning for dental caries classification in bitewing radiographs based on the ICCMS™ radiographic scoring system. Oral Surg. Oral Med. Oral Pathol. Oral Radiol. 2023, 135, 272–281.

- Mohammad-Rahimi, H.; Dianat, O.; Abbasi, R.; Zahedrozegar, S.; Ashkan, A.; Motamedian, S.R.; Rohban, M.H.; Nosrat, A. Artificial Intelligence for Detection of External Cervical Resorption Using Label-Efficient Self-Supervised Learning Method. J. Endod. 2024, 50, 144–153.e2.

- Kim, B.S.; Yeom, H.G.; Lee, J.H.; Shin, W.S.; Yun, J.P.; Jeong, S.H.; Kang, J.H.; Kim, S.W.; Kim, B.C. Deep Learning-Based Prediction of Paresthesia after Third Molar Extraction: A Preliminary Study. Diagnostics 2021, 11, 1572.

- Vilkomir, K.; Phen, C.; Baldwin, F.; Cole, J.; Herndon, N.; Zhang, W. Classification of mandibular molar furcation involvement in periapical radiographs by deep learning. Imaging Sci. Dent. 2024, 54, 257–263.

- Kim, Y.-H.; Park, J.-B.; Chang, M.-S.; Ryu, J.-J.; Lim, W.H.; Jung, S.-K. Influence of the depth of the convolutional neural networks on an artificial intelligence model for diagnosis of orthognathic surgery. J. Pers. Med. 2021, 11, 356.

- James, G.; Witten, D.; Hastie, T.; Tibshirani, R. An Introduction to Statistical Learning, 2nd ed.; Springer: New York, NY, USA, 2021; pp. 229–232.

- El Adoui, M.; Drisis, S.; Benjelloun, M. Multi-input deep learning architecture for predicting breast tumor response to chemotherapy using quantitative MR images. Int. J. Comput. Assist. Radiol. Surg. 2020, 15, 1491–1500.

- Mukherjee, P.; Zhou, M.; Lee, E.; Schicht, A.; Balagurunathan, Y.; Napel, S.; Gillies, R.; Wong, S.; Thieme, A.; Leung, A.; et al. A shallow convolutional neural network predicts prognosis of lung cancer patients in multi-institutional computed tomography image datasets. Nat. Mach. Intell. 2020, 2, 274–282.

- Xie, H.; Zhang, T.; Song, W.; Wang, S.; Zhu, H.; Zhang, R.; Zhang, W.; Yu, Y.; Zhao, Y. Super-resolution of Pneumocystis carinii pneumonia CT via self-attention GAN. Comput. Methods Programs Biomed. 2021, 212, 106467.

- Ricucci, D.; Siqueira, J.F., Jr. Biofilms and Apical Periodontitis: Study of Prevalence and Association with Clinical and Histopathologic Findings. J. Endod. 2010, 36, 1277–1288.

- Lee, J.-H.; Kim, D.-H.; Jeong, S.-N.; Choi, S.-H. Detection and diagnosis of dental caries using a deep learning-based convolutional neural network algorithm. J. Dent. 2018, 77, 106–111.

- Shan, T.; Tay, F.R.; Gu, L. Application of Artificial Intelligence in Dentistry. J. Dent. Res. 2021, 100, 232–244.

- Kim, J.-E.; Nam, N.-E.; Shim, J.-S.; Jung, Y.-H.; Cho, B.-H.; Hwang, J.J. Transfer Learning via Deep Neural Networks for Implant Fixture System Classification Using Periapical Radiographs. J. Clin. Med. 2020, 9, 1117.

- Krois, J.; Ekert, T.; Meinhold, L.; Golla, T.; Kharbot, B.; Wittemeier, A.; Dörfer, C.; Schwendicke, F. Deep Learning for the Radiographic Detection of Periodontal Bone Loss. Sci. Rep. 2019, 9, 8495.

- Hung, K.; Montalvao, C.; Tanaka, R.; Kawai, T.; Bornstein, M.M. The use and performance of artificial intelligence applications in dental and maxillofacial radiology: A systematic review. Dentomaxillofacial Radiol. 2019, 49, 20190107.

- Yang, S.; Lee, H.; Jang, B.; Kim, K.-D.; Kim, J.; Kim, H.; Park, W. Development and Validation of a Visually Explainable Deep Learning Model for Classification of C-shaped Canals of the Mandibular Second Molars in Periapical and Panoramic Dental Radiographs. J. Endod. 2022, 48, 914–921.

- Umer, F.; Habib, S. Critical Analysis of Artificial Intelligence in Endodontics: A Scoping Review. J. Endod. 2021, 48, 152–160.

- Chugal, N.M.; Clive, J.M.; Spångberg, L.S. A prognostic model for assessment of the outcome of endodontic treatment: Effect of biologic and diagnostic variables. Oral Surg. Oral Med. Oral Pathol. Oral Radiol. Endodontol. 2001, 91, 342–352.

- Friedman, S. Prognosis of initial endodontic therapy. Endod. Top. 2002, 2, 59–88.

- Moidu, N.P.; Sharma, S.; Chawla, A.; Kumar, V.; Logani, A. Deep learning for categorization of endodontic lesion based on radiographic periapical index scoring system. Clin. Oral Investig. 2021, 26, 651–658.

- Jiménez Pinzón, A.; Segura Egea, J.J. Valoración clínica y radiológica del estado periapical: Registros e índices periapicales. Endodoncia 2003, 21, 220–228.

- Chugal, N.M.; Clive, J.M.; Spångberg, L.S. Endodontic infection: Some biologic and treatment factors associated with outcome. Oral Surg. Oral Med. Oral Pathol. Oral Radiol. Endodontol. 2003, 96, 81–90.

- Friedman, S.; Abitbol, S.; Lawrence, H. Treatment Outcome in Endodontics: The Toronto Study. Phase 1: Initial Treatment. J. Endod. 2003, 29, 787–793.

- Farzaneh, M.; Abitbol, S.; Lawrence, H.; Friedman, S. Treatment Outcome in Endodontics—The Toronto Study. Phase II: Initial Treatment. J. Endod. 2004, 30, 302–309.

- Li, Y.; Zeng, G.; Zhang, Y.; Wang, J.; Jin, Q.; Sun, L.; Zhang, Q.; Lian, Q.; Qian, G.; Xia, N.; et al. AGMB-Transformer: Anatomy-Guided Multi-Branch Transformer Network for Automated Evaluation of Root Canal Therapy. IEEE J. Biomed. Health Inform. 2022, 26, 1684–1695.

- Herbst, C.S.; Schwendicke, F.; Krois, J.; Herbst, S.R. Association between patient-, tooth- and treatment-level factors and root canal treatment failure: A retrospective longitudinal and machine learning study. J. Dent. 2022, 117, 103937.

- Campo, L.; Aliaga, I.J.; De Paz, J.F.; García, A.E.; Bajo, J.; Villarubia, G.; Corchado, J.M. Retreatment Predictions in Odontology by means of CBR Systems. Comput. Intell. Neurosci. 2016, 2016, 7485250.

- Ramezanzade, S.; Laurentiu, T.; Bakhshandah, A.; Ibragimov, B.; Kvist, T.; EndoReCo, E.; Bjorndal, L. The efficiency of artificial intelligence methods for finding radiographic features in different endodontic treatments—A systematic review. Acta Odontol. Scand. 2023, 81, 422–435.

- Sunnetci, K.M.; Kaba, E.; Beyazal Çeliker, F.; Alkan, A. Comparative parotid gland segmentation by using ResNet-18 and MobileNetV2 based DeepLab v3+ architectures from magnetic resonance images. Concurr. Comput. 2023, 35, e7405.

- Boreak, N. Effectiveness of Artificial Intelligence Applications Designed for Endodontic Diagnosis, Decision-making, and Prediction of Prognosis: A Systematic Review. J. Contemp. Dent. Pract. 2020, 21, 926–934.

- Pauwels, R.; Brasil, D.M.; Yamasaki, M.C.; Jacobs, R.; Bosmans, H.; Freitas, D.Q.; Haiter-Neto, F. Artificial intelligence for detection of periapical lesions on intraoral radiographs: Comparison between convolutional neural networks and human observers. Oral Surg. Oral Med. Oral Pathol. Oral Radiol. 2021, 131, 610–616.

- Sadr, S.; Mohammad-Rahimi, H.; Motamedian, S.R.; Zahedrozegar, S.; Motie, P.; Vinayahalingam, S.; Dianat, O.; Nosrat, A. Deep Learning for Detection of Periapical Radiolucent Lesions: A Systematic Review and Meta-analysis of Diagnostic Test Accuracy. J. Endod. 2023, 49, 248–261.e3. Available online: https://linkinghub.elsevier.com/retrieve/pii/S0099239922008457 (accessed on 17 January 2023).

- Sherwood, A.A.; Setzer, F.C.; K, S.D.; Shamili, J.V.; John, C.; Schwendicke, F. A Deep Learning Approach to Segment and Classify C-Shaped Canal Morphologies in Mandibular Second Molars Using Cone-beam Computed Tomography. J. Endod. 2021, 47, 1907–1916.

| Variable | Levels | p-Value | Effect Size |
|---|---|---|---|
| Age | 15–24; 25–34; 35–44; 45–54; 55–64; ≥65 | 0.0056 | 0.372 |
| Highest level of education | Primary; Secondary; Post-secondary | 0.0016 | 0.33 |
| Arch | Mandible; Maxilla | 0.02 | 0.21 |
| Smoking | No; Every day; Some days; Former | 0.046 | 0.26 |
| Patient co-operation | No; Yes | 0.028 | 0.21 |
| Pain relieved by | None; Cold; Medication | 0.003 | 0.31 |
| Duration of the pain | Sec; Min; Continuous | 0.027 | 0.245 |
| Periapical | Asymptomatic AP; Symptomatic AP; Chronic Apical Abscess; Acute Apical Abscess | 0.01 | 0.31 |
| Estimated prognosis by clinician | Hopeless; Questionable; Fair; Good; Excellent | 0.034 | 0.29 |
| Prediction by DL | Success; Failure | 1.27 × 10⁻⁷ | 0.53 |

| Metric | DP | RF | DL-LR | DL |
|---|---|---|---|---|
| TP | 42 | 57 | 57 | 59 |
| FN | 27 | 12 | 8 | 6 |
| FP | 21 | 15 | 15 | 18 |
| TN | 29 | 35 | 28 | 25 |
| Sensitivity | 0.61 (0.48, 0.72) | 0.83 (0.72, 0.91) | 0.87 (0.77, 0.94) | 0.90 (0.80, 0.90) |
| Specificity | 0.58 (0.43, 0.72) | 0.70 (0.55, 0.82) | 0.65 (0.49, 0.78) | 0.58 (0.42, 0.72) |
| PPV | 0.67 (0.54, 0.78) | 0.79 (0.68, 0.88) | 0.79 (0.67, 0.87) | 0.76 (0.65, 0.85) |
| NPV | 0.52 (0.38, 0.65) | 0.74 (0.60, 0.86) | 0.77 (0.60, 0.89) | 0.80 (0.62, 0.92) |
| Accuracy | 0.60 (0.50, 0.69) | 0.77 (0.69, 0.84) | 0.78 (0.69, 0.86) | 0.77 (0.68, 0.85) |
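
The sensitivity, specificity, PPV, NPV, and accuracy rows in the table above follow from the four counts (TP, FN, FP, TN) reported for each predictor. The following is a minimal Python sketch of that arithmetic, not the authors' code: the `Confusion` class and the `COUNTS` dictionary are hypothetical names introduced here, and only the counts themselves come from the table. Confidence intervals are left out because the interval method is not stated, and recomputed point estimates may differ from the tabulated ones in the last digit depending on how the values were rounded.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Confusion:
    """Counts of a 2x2 confusion matrix (hypothetical helper, not from the paper)."""
    tp: int  # true positives
    fn: int  # false negatives
    fp: int  # false positives
    tn: int  # true negatives

    def metrics(self) -> dict[str, float]:
        """Return the standard point estimates derived from the four counts."""
        total = self.tp + self.fn + self.fp + self.tn
        return {
            "sensitivity": self.tp / (self.tp + self.fn),
            "specificity": self.tn / (self.tn + self.fp),
            "ppv": self.tp / (self.tp + self.fp),
            "npv": self.tn / (self.tn + self.fn),
            "accuracy": (self.tp + self.tn) / total,
        }


# Counts copied from the TP/FN/FP/TN rows of the table above.
COUNTS = {
    "DP": Confusion(tp=42, fn=27, fp=21, tn=29),
    "RF": Confusion(tp=57, fn=12, fp=15, tn=35),
    "DL-LR": Confusion(tp=57, fn=8, fp=15, tn=28),
    "DL": Confusion(tp=59, fn=6, fp=18, tn=25),
}

if __name__ == "__main__":
    for name, confusion in COUNTS.items():
        # Format each metric to three decimals for a compact one-line summary.
        summary = ", ".join(f"{k}={v:.3f}" for k, v in confusion.metrics().items())
        print(f"{name}: {summary}")
```

Keeping the counts and the formulas together makes it easy to check any single cell: for example, `COUNTS["RF"].metrics()["specificity"]` is 35 / (35 + 15).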

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Share and Cite

Bennasar, C.; Nadal-Martínez, A.; Arroyo, S.; Gonzalez-Cid, Y.; López-González, Á.A.; Tárraga, P.J. Integrating Machine Learning and Deep Learning for Predicting Non-Surgical Root Canal Treatment Outcomes Using Two-Dimensional Periapical Radiographs. Diagnostics 2025, 15, 1009. https://doi.org/10.3390/diagnostics15081009
