Abstract

Background: To understand whether binocular vision disorders are associated with Digital Eye Strain Syndrome (DESS), a study protocol is needed to ensure consistency across observational studies. This study aims to test the feasibility of a protocol to assess DESS, screen time, binocular vision, and dry eye. Methods: DESIROUS is an observational cross-sectional study among Polytechnic students at the Lisbon School of Health Technology, Portugal. The protocol includes three questionnaires (Computer Vision Syndrome Questionnaire [CVS-Q], Convergence Insufficiency Symptom Survey [CISS], and Dry Eye Questionnaire version 5 [DEQ-5]), an assessment of visual acuity and binocular vision (cover test for near and distance, stereopsis, near point of convergence [NPC], near point of accommodation [NPA], accommodative facility, and vergence), and the tear film break-up time (BUT) test of the ocular surface. The internal consistency of the questionnaires was assessed using Cronbach’s alpha. Interobserver variability for BUT was assessed using Cohen’s Kappa, the Intraclass Correlation Coefficient (ICC), and Bland–Altman analysis involving three observers (A, B, and C), each compared against an expert as the gold standard. Results: A total of 18 students were included in the validation phase (mean age: 21.50 ± 0.62 years; females: 77.8%). The internal consistency of the CVS-Q (α = 0.773) and the CISS (α = 0.756) was considered good, while the DEQ-5 showed moderate internal consistency (α = 0.594). Observer A had the highest agreement with the gold standard (Cohen’s Kappa = 0.710, p < 0.001; ICC = 0.924, p < 0.001). Conclusions: We provide a protocol to assess binocular vision and the ocular surface, with an emphasis on objective measures while integrating other assessment approaches. Further studies are necessary to validate this protocol, potentially incorporating new measures to enhance its validity across different populations.

1. Introduction

The widespread use of social media has had a significant impact on day-to-day living, increasing screen time and the risk of Digital Eye Strain Syndrome (DESS) [1]. DESS is a significant public health concern [2,3,4]. With the surge in online education prompted by the COVID-19 pandemic, both educators and students have substantially increased their screen exposure [5,6]. Research conducted from 2020 to 2023 across multiple countries showed that the prevalence of DESS among university students ranges from 50.8% to 94.5% [7,8,9,10,11,12,13,14]. This high prevalence may be attributed to the simultaneous use of multiple digital devices, often two or three at a time, for extended periods. Such usage increases the visual and cognitive load, making students particularly susceptible to DESS.

DESS is associated with a range of symptoms, including headaches, blurred or double vision, ocular dryness, difficulty focusing, neck and back discomfort, light sensitivity, and color distortion [2,15,16]. Although these symptoms are often transient, they can become persistent, with significant economic consequences for professionals who depend on digital devices [1,3,17]. Before DESS is diagnosed, a comprehensive ocular evaluation, including refractive assessment, binocular vision evaluation, and ocular surface evaluation, is essential [18]. Because uncorrected refractive error acts as a confounding factor, a refractive assessment should always be performed [19].

A previous systematic review highlighted the need for further research in frequent users, such as adolescents, as well as in patients with dry eye or accommodative/binocular vision anomalies [20]. These groups may be at higher risk of experiencing ocular discomfort symptoms that may be diagnosed as DESS [20]. Although workplace ergonomics and dry eye syndrome have been associated with DESS [21,22,23,24], further research is warranted to elucidate the mechanisms underlying its potential link with binocular vision anomalies. Previous studies have suggested a potential association between DESS and binocular vision, specifically anomalies of convergence and accommodation, including increased accommodative lag, reduced accommodative amplitude, and a potential shift in the near point of convergence [20]. Nevertheless, further research is necessary to ascertain the origin of these complaints, because headaches, double vision, and blurred vision mirror the complaints linked with accommodative and convergence dysfunctions. A recent study conducted in a clinical population found that visual symptoms were influenced not by the number of hours spent using digital devices but by the presence of visual dysfunction, including refractive, accommodative, and/or binocular issues [25]. While some studies, such as Cacho et al. (2024), emphasize the role of pre-existing visual dysfunction, others suggest that extended device use may contribute to DESS symptoms through mechanisms such as prolonged near work or reduced blink rates [25].

Previous studies have assessed the presence of DESS with the Computer Vision Syndrome Questionnaire (CVS-Q) without assessing binocular vision [11,12,14,26,27], introducing bias due to the potential overlap with symptoms caused by binocular vision disorders or ocular surface conditions. Thus, scientific evidence on the link between binocular vision and DESS is limited [20]. In addition, previous studies were mostly cross-sectional and lacked well-defined protocols [28,29,30,31,32,33,34]. Furthermore, previous studies have evaluated a wide range of variables without assessing binocular vision [28,29,34,35,36,37]. Moreover, numerous studies failed to adjust their models for potential confounding variables. Hence, it is imperative for vision professionals to understand the origins of visual symptoms related to prolonged screen time [1,38,39]. Future research should therefore incorporate objective evaluations of binocular vision and the ocular surface, alongside objective measurements of screen time. Accordingly, our study is guided by two main questions: (1) Can objective assessment of binocular vision and ocular surface parameters detect functional changes associated with DESS more reliably than subjective reports alone? (2) Do binocular vision anomalies, particularly those affecting accommodation and convergence, precede or contribute to the onset of DESS, especially in individuals with excessive screen exposure? To explore whether DESS represents a distinct condition or a manifestation of underlying binocular vision anomalies, refractive errors, or dry eye disease, we developed a structured study protocol. This protocol, DESIROUS, integrates both objective clinical assessments and subjective symptom evaluations to improve the understanding, diagnosis, and management of DESS.
The primary aim of this pilot study is to assess the feasibility of implementing this standardized protocol, with a particular focus on objective measures related to binocular vision and ocular surface evaluation.

2. Materials and Methods

2.1. Study Design and Population

The DESIROUS study is an observational cross-sectional study to be implemented among Polytechnic students of health technologies from the Lisbon School of Health Technology (ESTeSL) in Portugal. The study was approved by the ethical committees of the University of Évora (reference number 22090) and ESTeSL (reference number 96-2022). The DESIROUS study was registered on ClinicalTrials.gov under the following identifier: NCT05675475.

Cluster sampling was selected because of data protection limitations imposed by the ethics committee of ESTeSL. ESTeSL offers 9 undergraduate (bachelor’s degree) programs in the field of healthcare. Each program spans four academic years, and each year group is defined as a class. The final year of each program is dedicated to research and internship. Three programs were selected for inclusion in the study: Clinical Physiology, Medical Imaging and Radiotherapy, and Orthoptics and Vision Sciences. These programs were chosen because of their perceived higher use of digital devices.

2.2. Inclusion and Exclusion Criteria

The DESIROUS study inclusion criteria encompass first-, second-, and third-year students of healthcare technology programs at ESTeSL, as these years are characterized by a heavier curriculum and a continuous study load. Additionally, only students aged between 18 and 35 years will be considered eligible, to mitigate the confounding effect of presbyopia. Students with a history of ocular surgery, strabismus, nystagmus, or amblyopia will be excluded.

2.3. Informed Consent

Prior to participation, all subjects will be required to sign an informed consent form developed in accordance with the principles set forth in the Declaration of Helsinki and the Oviedo Convention. The informed consent document provides comprehensive information about the study, including its circumstances, precise nature, and background. It also explains the methods employed and how the confidentiality and anonymity of the data are ensured.

2.4. Sample Size Calculation

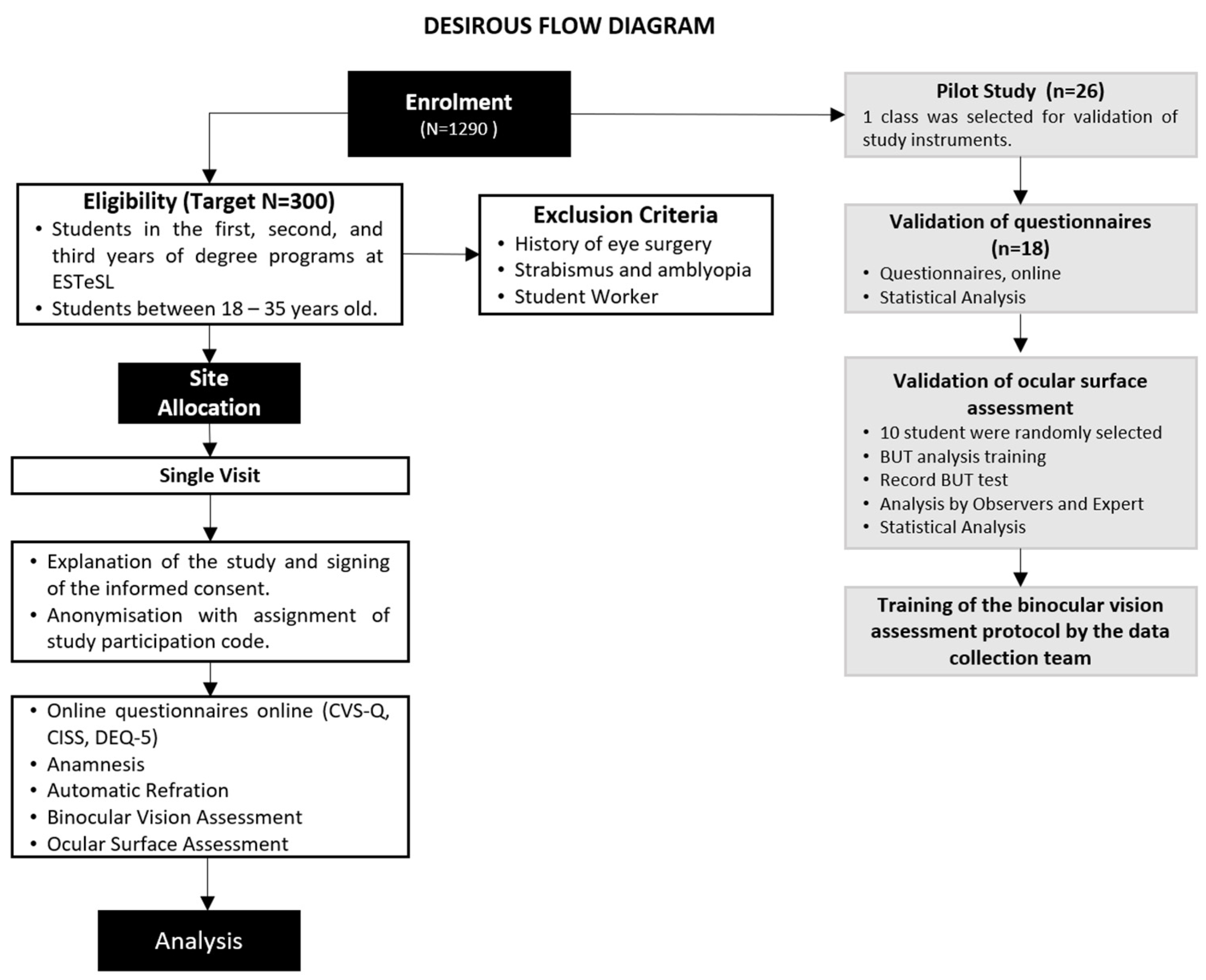

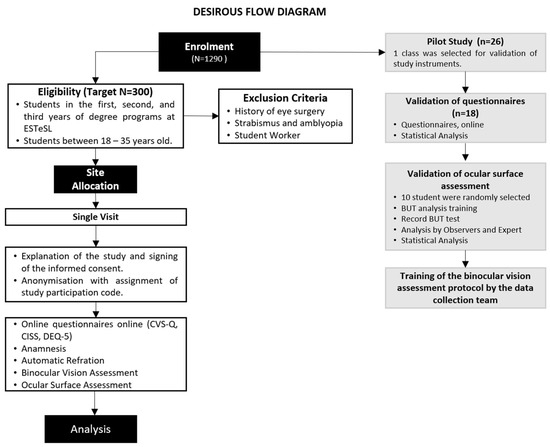

The sample size for the DESIROUS study was estimated from the study by Cantó-Sancho et al. on university students [40], which reported a DESS prevalence of 76.6% among Spanish university students. No published studies were identified regarding the prevalence of DESS among university students in Portugal. For a 95% confidence level, 288 individuals need to be recruited to estimate the population prevalence with a precision of ±5 percentage points, which corresponds to a range of 70% to 80% [41]. A total of 300 subjects will be enrolled in the main study to account for potential withdrawals (Figure 1). Here, we present the validation of the instruments, which was conducted on a sample of one class from the Orthoprosthetics degree program.
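The sample-size figure is consistent with the usual normal-approximation formula for estimating a single proportion, n = z²·p(1 − p)/d², where p is the anticipated prevalence, d the absolute precision, and z the standard normal quantile (1.96 for 95% confidence). The exact inputs entered into [41] are not reported, so the sketch below is only an illustration under stated assumptions; the function name is hypothetical.

```python
import math


def sample_size_proportion(p: float, d: float, z: float = 1.96) -> float:
    """Normal-approximation sample size for estimating a single proportion.

    p: anticipated prevalence (0 < p < 1)
    d: absolute precision, i.e. half-width of the confidence interval
    z: standard normal quantile (1.96 for a 95% confidence level)
    Returns the unrounded n; round up to get the number to recruit.
    """
    if not 0 < p < 1 or d <= 0:
        raise ValueError("need 0 < p < 1 and d > 0")
    return z ** 2 * p * (1 - p) / d ** 2


# Assumed inputs: 75% (midpoint of the 70-80% range) vs. 76.6% reported in [40]
n_mid = sample_size_proportion(0.75, 0.05)
n_prev = sample_size_proportion(0.766, 0.05)
print(f"p = 0.75: n = {n_mid:.1f}; p = 0.766: n = {math.ceil(n_prev)}")
```

With p taken as 0.75 the formula gives about 288; with the 76.6% prevalence from [40] it gives about 275 (276 after rounding up). In both cases, enrolling 300 subjects leaves a margin for withdrawals.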

Figure 1.

DESIROUS flow diagram. Legends: ESTeSL: Lisbon School of Health Technology; CVS-Q: Computer Vision Syndrome Questionnaire; CISS: Convergence Insufficiency Symptom Survey; DEQ-5: Dry Eye Questionnaire version 5; BUT: tear film break-up time.

2.5. Study Procedures and Schedule of Assessments

The main study visit comprises two distinct methodological approaches. Firstly, three questionnaires will be administered online in Google Forms. Secondly, participants will undergo an orthoptic assessment and ocular surface assessment using the study instruments outlined below (Figure 1).

All students from the selected class (n = 26) were invited to participate in the pilot study (Figure 1). Data collection was carried out between 2 February and 3 March 2023. Overall, 18 of the 26 third-year Orthoprosthetics students (69.2%) responded to the first phase of the pilot study (questionnaire validation). The non-responders (n = 8; 30.8%) were working students or students who did not attend classes regularly. Subsequently, 10 students were randomly selected to participate in the second phase of the pilot (ocular surface evaluation and binocular vision assessment). The mean age of the included students was 21.5 ± 0.62 years (77.8% were female).

2.6. Study Instruments

The present study sought to validate the Portuguese-language questionnaires specifically in university health technology students and to evaluate the inter-operator consistency of tests that rely on operator judgment within the ocular surface assessment, thereby strengthening the robustness of future study protocols.

2.7. Questionnaires

Three questionnaires, translated and validated for the Portuguese language and culture, were selected through a comprehensive literature review [42,43,44,45]. The Computer Vision Syndrome Questionnaire (CVS-Q) was designed to assess the presence of visual symptoms associated with prolonged computer use, enabling the diagnosis of DESS and the quantification of its symptoms. The Convergence Insufficiency Symptom Survey (CISS) was designed to identify the frequency and severity of visual discomfort symptoms in individuals with convergence insufficiency. The Dry Eye Questionnaire version 5 (DEQ-5), an abbreviated version of the Dry Eye Questionnaire, evaluates signs and symptoms of dry eye. As previously mentioned, the symptoms of DESS can overlap with those of binocular vision dysfunctions and ocular surface abnormalities. Therefore, the results of the three questionnaires will be compared with an objective assessment of binocular vision and the ocular surface to understand the origin of the symptoms.

2.8. Computer Vision Syndrome Questionnaire

The CVS-Q is an instrument that assesses and quantifies symptoms associated with Computer Vision Syndrome (CVS) [4]. The instrument employs a Rasch rating scale model to analyze 16 symptoms. The frequency of each symptom is rated on a scale of 0 to 3, with 0 representing never and 3 representing nearly daily. The intensity of each symptom is rated on a scale of 0 to 3, with 0 representing moderate and 3 representing very intense. The frequency and intensity ratings are recoded to calculate the severity of each symptom, and the severities are summed to give a total score. A total score of 6 or higher indicates that the individual exhibits symptoms of CVS [4] (Table 1). This questionnaire was translated and validated for the Portuguese language and culture in 2020 in a population of workers using computers for more than six hours per day [42].
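The scoring logic (multiply frequency by intensity, recode the product into a per-symptom severity, sum over the 16 symptoms, and compare against the cut-off of 6) can be sketched as below. The recoding table is an assumption. It uses the scheme commonly reported for the original Spanish CVS-Q: frequency 0 to 2, intensity 1 to 2 (or 0 when the symptom is absent), with products 0, 1 to 2, and 4 recoded to 0, 1, and 2. This does not match the 0 to 3 ranges quoted above, so it should be replaced with the table specified for the Portuguese version [42]. Function names are hypothetical.

```python
# ASSUMPTION: severity recoding of the original Spanish CVS-Q
# (frequency 0-2, intensity 1-2 or 0 if the symptom is absent);
# replace with the table specified for the Portuguese version [42].
ASSUMED_RECODE = {0: 0, 1: 1, 2: 1, 4: 2}
N_SYMPTOMS = 16
CVS_CUTOFF = 6  # total score >= 6 indicates CVS [4]


def cvsq_total(frequency, intensity, recode=ASSUMED_RECODE):
    """Sum of per-symptom severities, severity = recode[frequency * intensity]."""
    if len(frequency) != N_SYMPTOMS or len(intensity) != N_SYMPTOMS:
        raise ValueError("expected 16 frequency and 16 intensity ratings")
    total = 0
    for f, i in zip(frequency, intensity):
        product = f * i
        if product not in recode:
            raise ValueError(f"no severity defined for product {product}")
        total += recode[product]
    return total


def has_cvs(total, cutoff=CVS_CUTOFF):
    return total >= cutoff


# Example: three symptoms rated "often/always" (2) and "intense" (2), the rest absent
freq = [2, 2, 2] + [0] * 13
inten = [2, 2, 2] + [0] * 13
score = cvsq_total(freq, inten)
print(score, has_cvs(score))
```

Keeping the recoding table as a parameter makes it easy to swap in the Portuguese version’s scheme without touching the summation or the cut-off.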

Table 1.

Summary of the characteristics and scores of the questionnaires applied, CVS-Q, CISS, and DEQ-5.

2.9. Convergence Insufficiency Symptom Survey

The CISS was developed by the Convergence Insufficiency Treatment Trial (CITT) group to evaluate the impact of treatment on symptoms associated with convergence insufficiency (CI). In a previous study, this questionnaire was validated and considered a reliable tool suitable for clinical use or as an outcome measure in research studies involving adults with CI [46]. The CISS evaluates the presence and severity of symptoms related to CI. It comprises fifteen Likert-scale questions covering typical symptoms of this binocular vision anomaly, with response options ranging from “never” to “always”, scored from 0 to 4. The sum of all responses gives a total symptom score for each individual, ranging from 0 (no symptoms) to 60 (severely symptomatic). In adults, a score below 21 is classified as absence of CI symptoms, whereas a score of 21 or higher indicates CI [46] (Table 1). This questionnaire was selected because of its extensive clinical use, which facilitates the diagnosis of CI and the monitoring of treatment outcomes [47,48,49,50]. Additionally, this questionnaire was translated and validated for the Portuguese language and culture in 2013 in university students in the health sciences and in science and engineering fields, with a mean age of 21.79 ± 2.42 years [45].
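Because the CISS total is a simple sum, scoring and the adult cut-off can be expressed in a few lines. This is a minimal sketch assuming responses are already coded 0 to 4 in item order; item wording is not reproduced, and the function names are hypothetical.

```python
CISS_ITEMS = 15
CISS_ADULT_CUTOFF = 21  # adults: total >= 21 suggests symptomatic CI [46]


def ciss_total(responses):
    """Total CISS score: sum of 15 items coded 0 (never) to 4 (always); range 0-60."""
    if len(responses) != CISS_ITEMS:
        raise ValueError("the CISS has 15 items")
    if any(r not in (0, 1, 2, 3, 4) for r in responses):
        raise ValueError("each item must be coded 0 to 4")
    return sum(responses)


def ciss_suggests_ci(total, cutoff=CISS_ADULT_CUTOFF):
    return total >= cutoff


print(ciss_total([1] * 15), ciss_suggests_ci(ciss_total([2] * 10 + [1] * 5)))
```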

2.10. Dry Eye Questionnaire

The DEQ-5 is a condensed version of the original DEQ comprising five questions designed to assess ocular discomfort, dryness, and tearing, all of which are indicative of dry eye. It is one of the two instruments recommended by the Tear Film and Ocular Surface Society Dry Eye Workshop II Diagnostic Methodology Report [50]. Two questions assess the intensity of the symptoms experienced, with 0 indicating that the symptom has never been experienced and 1 to 5 indicating increasing severity, from mild to very intense. Three questions evaluate the frequency of signs and symptoms, with responses ranging from 0 to 4, corresponding to “Never”, “Rarely”, “Sometimes”, “Often”, and “Constantly”. The responses are summed to give a total score ranging from 0 to 22 points. A score of 6 points or less indicates no alterations, a score greater than 6 points suggests dry eye disease without Sjögren’s syndrome, and a score greater than 12 points suggests dry eye due to Sjögren’s syndrome [51] (Table 1).
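A minimal sketch of DEQ-5 scoring follows. It assumes the two intensity items (0 to 5) and the three frequency items (0 to 4) are passed separately, and it applies the cut-offs exactly as stated above (more than 6 and more than 12 points). The function names and category labels are hypothetical.

```python
NO_ALTERATIONS = "no alterations"
SUSPECTED_DED = "suspected dry eye disease (non-Sjögren)"
SUSPECTED_SS = "suspected Sjögren's-related dry eye"


def deq5_total(intensity, frequency):
    """DEQ-5 total: 2 intensity items (0-5) + 3 frequency items (0-4); range 0-22."""
    if len(intensity) != 2 or len(frequency) != 3:
        raise ValueError("expected 2 intensity and 3 frequency items")
    if not all(0 <= x <= 5 for x in intensity) or not all(0 <= x <= 4 for x in frequency):
        raise ValueError("item score out of range")
    return sum(intensity) + sum(frequency)


def deq5_category(total):
    """Cut-offs as stated in this protocol [51]: <= 6, > 6, and > 12 points."""
    if total > 12:
        return SUSPECTED_SS
    if total > 6:
        return SUSPECTED_DED
    return NO_ALTERATIONS


total = deq5_total([3, 2], [2, 1, 2])
print(total, deq5_category(total))
```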

The full version of the DEQ comprises 21 items with 58 questions and assesses symptoms by determining their frequency, daytime severity, and intensity on a typical day over a one-week recall period. This questionnaire also includes questions about the time of day at which symptoms worsen, impact on daily life activities, medications, allergies, dryness of the mouth, nose, or vagina, treatments, and overall patient assessment [52]. This comprehensive questionnaire has been used in epidemiological and clinical studies, and the full version of the DEQ was initially chosen for its broader scope [42,43,44,45]. However, its length would have made participation impractical for students and would have unnecessarily prolonged the study. The DEQ-5 was considered more applicable in clinical settings because of its brevity and the availability of a cross-culturally translated and validated version. Its questions refer to symptoms experienced during the previous month, assessing both their frequency and their intensity [7]. A recent study conducted in an African population applied Rasch analysis to the DEQ-5 and found satisfactory psychometric properties for clinical use [53].

2.11. Binocular Vision and Screen Time Assessment

The protocol includes the following assessments of screen time and binocular vision:

- Subjective data will be collected by asking the amount of time (in hours) spent using various digital devices, including desktops, laptops, tablets, and smartphones. Additionally, data regarding the number of hours of sleep per day, averaged over the past week (7 days), will also be gathered.

- Objective data on screen time will be collected by examining the participant’s mobile phone usage (average daily time over the last 7 days), phone model, and screen size. This information is available in the Digital Wellbeing settings on Android devices and in the Screen Time settings on iOS devices. In addition, participants will be asked which electronic device (desktop, laptop, tablet, or smartphone) they use most frequently per day and whether they own a smartwatch.

- Evaluation of objective refraction without cycloplegia, using the automatic refractometer GR-21 GRAND SEIKO (Japan).

- Distance visual acuity assessment with refractive correction using the CSV-1000 ETDRS chart, which provides a full range of LogMAR testing (1.0 to −0.3) at a test distance of 8 feet (2.44 m) under standardized luminance (85 cd/m²).

- Identification of oculomotor deviations using the cover test for near and distance vision, with the Lang fixation cube (LANG-STEREOTEST AG) at 40 cm for near and a distant fixation point for far. An opaque cover spoon will be employed at both distances.

- Evaluation of near stereopsis using the Random Dot Butterfly stereotest (Stereo Optical Co., Chicago, IL, USA) graded circle test at 40 cm, 800 to 40 s of arc.

- Assessment of near point of convergence (NPC) and near point of accommodation (NPA) using the RAF (Royal Air Force) ruler (Haag-Streit, UK). For NPC measurement, the target is the fixation point on the near point card. The card will be moved from a distance of 50 cm along the facial midline in free space, at approximately 2 cm/s, towards the participant’s nasal bridge. The card will be stopped when the participant reports seeing double or when the examiner observes an eye deviation. This measurement will be repeated three times. The NPA is measured in the same way as the NPC, except that the target is the N5 horizontal line and the card is stopped when the participant reports that the letters are blurred.

- Assessment of accommodative facility using ±2.00 D flipper lenses and near visual acuity chart “1” in LogMAR sizes for testing at 16 inches (40 cm). The participant focuses on the 0.1 visual acuity line of the near vision chart, and the examiner counts how many cycles the participant completes in one minute. Each cycle consists of one positive lens and one negative lens. The examiner places the flipper, and as soon as the line becomes clear, the participant says “now”, after which the examiner flips the lens.

- Assessment of fusional amplitudes (convergence and divergence) in free space at near and distance using a horizontal prism bar, with the Lang fixation cube (LANG-STEREOTEST AG) as the near target and a distant fixation point as the far target.
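Several of the measures above yield raw readings that must be reduced before comparison with the norms in Table 2. The sketch below makes three assumptions. The three NPC readings are averaged. NPA distances in centimetres are converted to amplitude in diopters as 100/distance. Accommodative facility counts completed cycles, that is, pairs of “now” reports (one through each lens), within 60 s. These reduction rules are assumptions, as is the reference point on the RAF ruler. Hofstetter’s minimum expected amplitude (15 − 0.25 × age) is included only as a familiar reference and is not necessarily the norm used in Table 2. Function names are hypothetical.

```python
from statistics import mean


def near_point_cm(readings_cm):
    """ASSUMPTION: near point taken as the mean of repeated RAF-ruler readings."""
    if not readings_cm:
        raise ValueError("at least one reading is required")
    return mean(readings_cm)


def amplitude_diopters(npa_cm):
    """Accommodative amplitude (D) = 100 / near point of accommodation (cm)."""
    if npa_cm <= 0:
        raise ValueError("near point must be a positive distance")
    return 100.0 / npa_cm


def hofstetter_minimum_d(age_years):
    """Hofstetter's minimum expected amplitude (D): 15 - 0.25 x age (reference only)."""
    return 15.0 - 0.25 * age_years


def facility_cpm(now_reports_in_60s):
    """One cycle = target cleared through the +2.00 D and then the -2.00 D lens,
    so cycles per minute = number of 'now' reports in 60 s // 2."""
    return now_reports_in_60s // 2


npc = near_point_cm([7, 8, 9])
npa_d = amplitude_diopters(8.0)
print(npc, npa_d, hofstetter_minimum_d(21.5), facility_cpm(13))
```

For example, NPC readings of 7, 8, and 9 cm average to 8 cm, and an NPA of 8 cm corresponds to an amplitude of 12.5 D.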

The normative values for each variable of the binocular vision assessment are detailed in Table 2.

Table 2.

Summary of normative values for the binocular vision in the DESIROUS protocol.

Data collection for the main study will be conducted by three operators. All operators were trained in performing the binocular vision assessment to ensure consistency across measurements. Potential environmental confounding factors, including lighting and posture, were controlled throughout the study: all data collection took place in the same examination room under consistent lighting conditions, and both participants and examiners adhered to standardized postures throughout the procedure.

Binocular vision will be classified as either normal or abnormal. A student whose values all fall within the ranges shown in Table 2 will be classified as normal, whereas a student with at least one value outside the normal range will be classified as abnormal.
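The classification rule (abnormal if any variable falls outside its normal range) can be sketched as follows. The normative limits are not reproduced in this section, so the ranges in the example are placeholders to be replaced with the Table 2 values. Variable names and the function name are hypothetical.

```python
import math


def classify_binocular_vision(measurements, norms):
    """Return ('normal' | 'abnormal', list of out-of-range variables).

    measurements: {variable: value}
    norms: {variable: (lower, upper)}, inclusive; use -math.inf / math.inf
           for one-sided limits. Every measured variable must have a norm.
    """
    out_of_range = [
        name for name, value in measurements.items()
        if not norms[name][0] <= value <= norms[name][1]
    ]
    return ("abnormal" if out_of_range else "normal"), out_of_range


# PLACEHOLDER limits for illustration only; substitute the Table 2 values.
placeholder_norms = {"npc_cm": (-math.inf, 10.0), "stereo_arcsec": (-math.inf, 100.0)}
print(classify_binocular_vision({"npc_cm": 7.5, "stereo_arcsec": 40}, placeholder_norms))
print(classify_binocular_vision({"npc_cm": 12.0, "stereo_arcsec": 40}, placeholder_norms))
```

Returning the list of failing variables, not just the label, keeps track of which test drove an "abnormal" classification (for example, convergence insufficiency versus a stereopsis deficit).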

2.12. Ocular Surface Assessment

The tear film break-up time (BUT) test uses a slit lamp and sodium fluorescein strips to measure tear film stability. Measurements are performed with diffuse illumination and the cobalt blue filter. In general, a BUT longer than 10 s is considered normal, and a BUT shorter than 10 s is considered indicative of dry eye [61]. A short BUT indicates an unstable tear film; the longer the break-up time, the more stable the tear film.

Because data collection involves three operators, it was crucial to minimize inter-operator variability. To establish interobserver agreement, an expert in the ocular surface served as the gold standard.

Initially, training in performing BUT measurements was conducted, followed by the pre-validation stage. The BUT test was recorded on video using a camera attached to a slit lamp. These videos were then anonymized and sent to the three members of the data collection team (A, B, and C) and to an expert in the ocular surface with 20 years of experience, who served as the gold standard. The results of the video assessments were compared and statistically analyzed to evaluate the level of agreement.

2.13. Statistical Analysis

Questionnaire validation was assessed with Cronbach’s alpha coefficient, which estimates the internal consistency (reliability) of a scale. Cronbach’s alpha [62] reflects the average correlation among the items of an instrument, and its values typically range from 0 to 1: values closer to 1 indicate higher internal consistency, 0.70 is the minimum acceptable value, and values below 0.70 indicate low internal consistency [63]. A value exceeding 0.90 may indicate redundancy, with several similar questions assessing the same construct. Thus, alpha values between 0.80 and 0.90 are considered ideal [63].

For the BUT measurements, Cohen’s Kappa coefficient was used to measure the level of agreement between the expert and each member of the data collection team. In addition, Fleiss’ Kappa was used to obtain an overall measure of agreement among the three observers. Fleiss’ Kappa extends the kappa statistic to more than two raters and is considered the most suitable coefficient for evaluating agreement among three or more examiners, particularly when the rating scale has many categories. Kappa values range from −1 to +1: −1 indicates total disagreement, 0 indicates agreement equivalent to chance, and values above 0 represent increasing agreement, up to +1, which indicates perfect agreement. Each observer was then compared with the expert in three stages of statistical analysis: Cohen’s Kappa, the Intraclass Correlation Coefficient (ICC), and Bland–Altman analysis. The ICC ranges from 0 to 1, with values below 0.5 indicating low reliability, values between 0.5 and 0.75 indicating moderate reliability, values between 0.75 and 0.9 indicating good reliability, and values above 0.9 indicating excellent reliability.
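Both kappa statistics can be computed directly from category labels: Cohen’s κ = (pₒ − pₑ)/(1 − pₑ) for two raters, and Fleiss’ κ from the per-subject proportion of agreeing rater pairs. This is a minimal sketch that assumes the BUT readings have first been grouped into categories (the category boundaries are not stated here). Function names are hypothetical, and no p-values are computed.

```python
from collections import Counter


def cohen_kappa(rater1, rater2):
    """Cohen's kappa for two raters labelling the same items."""
    if len(rater1) != len(rater2) or not rater1:
        raise ValueError("ratings must be non-empty and paired")
    n = len(rater1)
    p_obs = sum(a == b for a, b in zip(rater1, rater2)) / n
    c1, c2 = Counter(rater1), Counter(rater2)
    # Chance agreement from each rater's marginal category frequencies
    p_exp = sum(c1[c] * c2[c] for c in c1.keys() | c2.keys()) / n ** 2
    if p_exp == 1:
        raise ZeroDivisionError("kappa undefined: both raters used one identical category")
    return (p_obs - p_exp) / (1 - p_exp)


def fleiss_kappa(ratings):
    """Fleiss' kappa. ratings: one list per subject holding each rater's category."""
    n_raters = len(ratings[0])
    if n_raters < 2 or any(len(r) != n_raters for r in ratings):
        raise ValueError("every subject needs the same number (>= 2) of ratings")
    n_subj = len(ratings)
    counts = [Counter(r) for r in ratings]
    categories = set().union(*counts)
    # Share of all assignments that fall in each category
    p_j = {c: sum(cnt[c] for cnt in counts) / (n_subj * n_raters) for c in categories}
    # Per-subject agreement: proportion of rater pairs that agree
    p_i = [(sum(v * v for v in cnt.values()) - n_raters) / (n_raters * (n_raters - 1))
           for cnt in counts]
    p_bar = sum(p_i) / n_subj
    p_e = sum(p ** 2 for p in p_j.values())
    return (p_bar - p_e) / (1 - p_e)


print(cohen_kappa([0, 0, 1, 1], [0, 0, 1, 1]), cohen_kappa([0, 0, 1, 1], [0, 1, 0, 1]))
```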

Bland–Altman analysis was used to assess the agreement between each of the three observers and the expert. The limits of agreement were calculated from the mean and standard deviation of the differences between each observer and the expert, and were used to construct the Bland–Altman scatter plot. In this plot, a high degree of agreement is indicated by low dispersion of the points and their proximity to the line representing the mean bias. Conversely, a low degree of agreement is indicated by high dispersion of the points and their distance from the mean bias line. Data analysis was conducted using IBM® SPSS Statistics®, version 27, with a significance level of 0.05.
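The limits of agreement described above are the mean difference ± 1.96 SD of the differences. The sketch below assumes differences are taken as observer minus expert (the direction is not stated) and uses the sample SD. The function names are hypothetical.

```python
from statistics import mean, stdev


def bland_altman(observer, expert, z=1.96):
    """Mean bias, SD of differences, and 95% limits of agreement for paired data.

    Differences are observer - expert; SD is the sample SD (n - 1 denominator).
    """
    if len(observer) != len(expert) or len(observer) < 2:
        raise ValueError("need at least two paired measurements")
    diffs = [o - e for o, e in zip(observer, expert)]
    bias, sd = mean(diffs), stdev(diffs)
    return bias, sd, bias - z * sd, bias + z * sd


def limits_from_summary(bias, sd, z=1.96):
    """Limits of agreement from an already reported bias and SD."""
    return bias - z * sd, bias + z * sd


# Observer A's bias and SD as reported in the Results section
low_a, high_a = limits_from_summary(-0.059, 0.429)
print(round(low_a, 3), round(high_a, 3))
```

Applying the summary version to Observer A’s reported bias and SD (−0.059 ± 0.429) gives limits of approximately −0.900 and 0.782, matching the reported −0.899 and 0.782 to within rounding.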

3. Results

The most frequently used digital device was the smartphone (5.33 ± 2.40 h per day), followed by the laptop (2.06 ± 1.78 h per day or week). Additionally, the students’ subjective screen time was compared to the objective screen time, revealing only a slight overestimation (5.33 ± 2.40 versus 4.89 ± 1.91 h).

The results of the questionnaires showed that 77.8% (n = 14) of participants exhibited symptoms of DESS, and 27.8% (n = 5) showed responses indicative of convergence insufficiency. In contrast, the analysis of the DEQ-5 revealed that only 38.9% (n = 7) of participants exhibited symptoms of dry eye disease (DED).

3.1. Validation of the Questionnaires

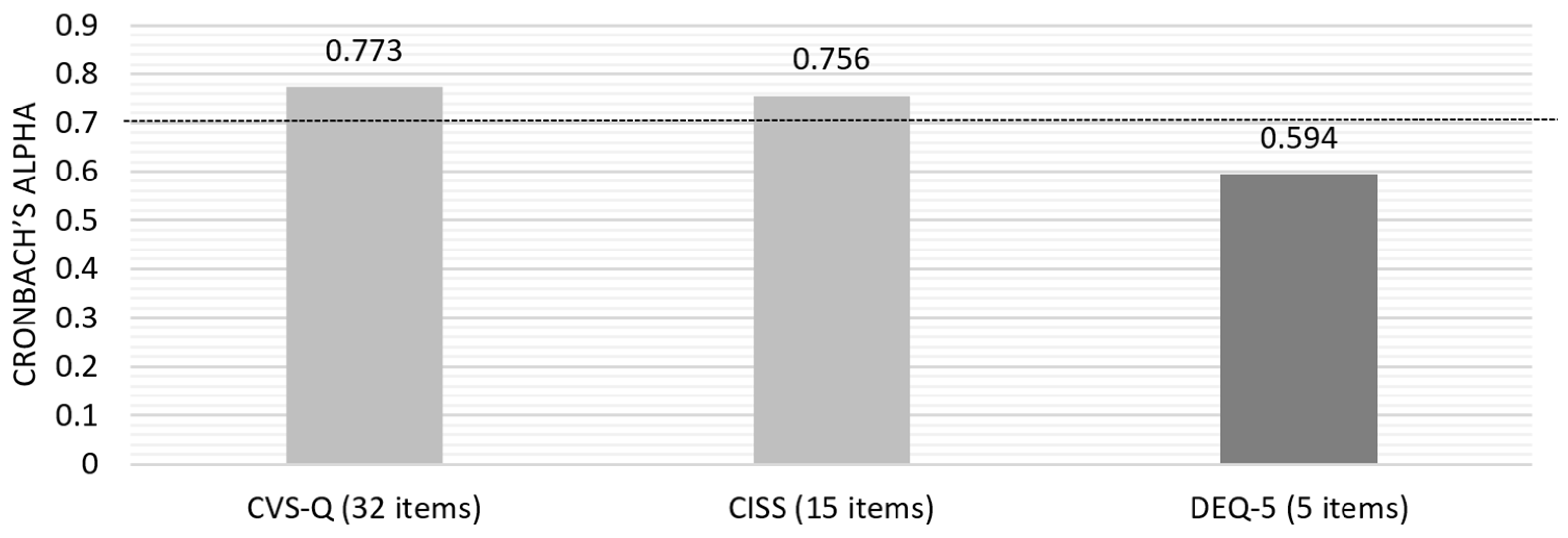

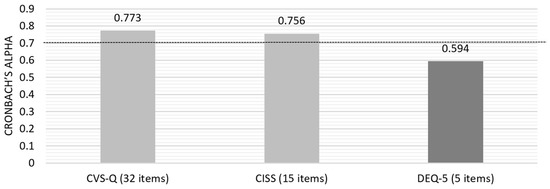

Initially, Cronbach’s alpha based on standardized items was calculated for all questionnaire items combined, yielding a value of 0.869. Each questionnaire was then analyzed individually to assess its overall internal consistency (Figure 2) and the consistency of each item.

Figure 2.

Cronbach’s alpha for the three questionnaires included in the pilot study.

Because of the lower consistency of the DEQ-5, its “Item-Total Statistics” were examined, which showed that removing question number 5 (“In the last month, on a normal day, how often did you feel or have your eyes watery?”) would increase the alpha value to 0.710, at the threshold of acceptable internal consistency.

Additionally, participants were queried regarding the duration and difficulty of the three questionnaires. All respondents indicated that the duration and difficulty were appropriate. No missing values were identified during the analysis of the questionnaire data.

The digital format provided better control over response validity and was more secure than the paper version. In the paper-based version of the CVS-Q, there were two instances of missing data, in which the intensity of symptoms reported in the frequency section was not rated, resulting in only 80% valid answers. This issue did not occur in the online version, which required mandatory responses (100% valid answers).

3.2. Binocular Vision and Ocular Surface Assessment

Of the ten students randomly selected for the pilot study, only nine consented to be assessed. Six students exhibited symptoms of DESS, three had symptoms of CI, and three exhibited symptoms of dry eye disease (DED). Mean visual acuity (0.13 ± 0.14 LogMAR), near stereopsis (44.44 ± 7.27 s of arc), and NPC (7.56 ± 3.25 cm) were only slightly reduced, while NPA (11.67 ± 2.83 D), binocular accommodative facility (6.11 ± 4.60 cpm), and fusional amplitudes (positive fusional vergence (PFV) for far = 21.00 ± 10.42 prism diopters (Δ); PFV for near = 31.33 ± 10.94 Δ; negative fusional vergence (NFV) for near = 12.56 ± 6.23 Δ; NFV for far = 8.50 ± 2.07 Δ) were within normal ranges (Table 3). In the objective assessment of binocular vision, seven participants were identified as having binocular vision anomalies, five of whom presented convergence insufficiency. Among those with binocular vision anomalies, five tested positive on the CVS-Q, whereas only two were positive on the CISS.

Table 3.

Results from the objective assessment of refractive error, visual acuity, binocular vision, and screen time.

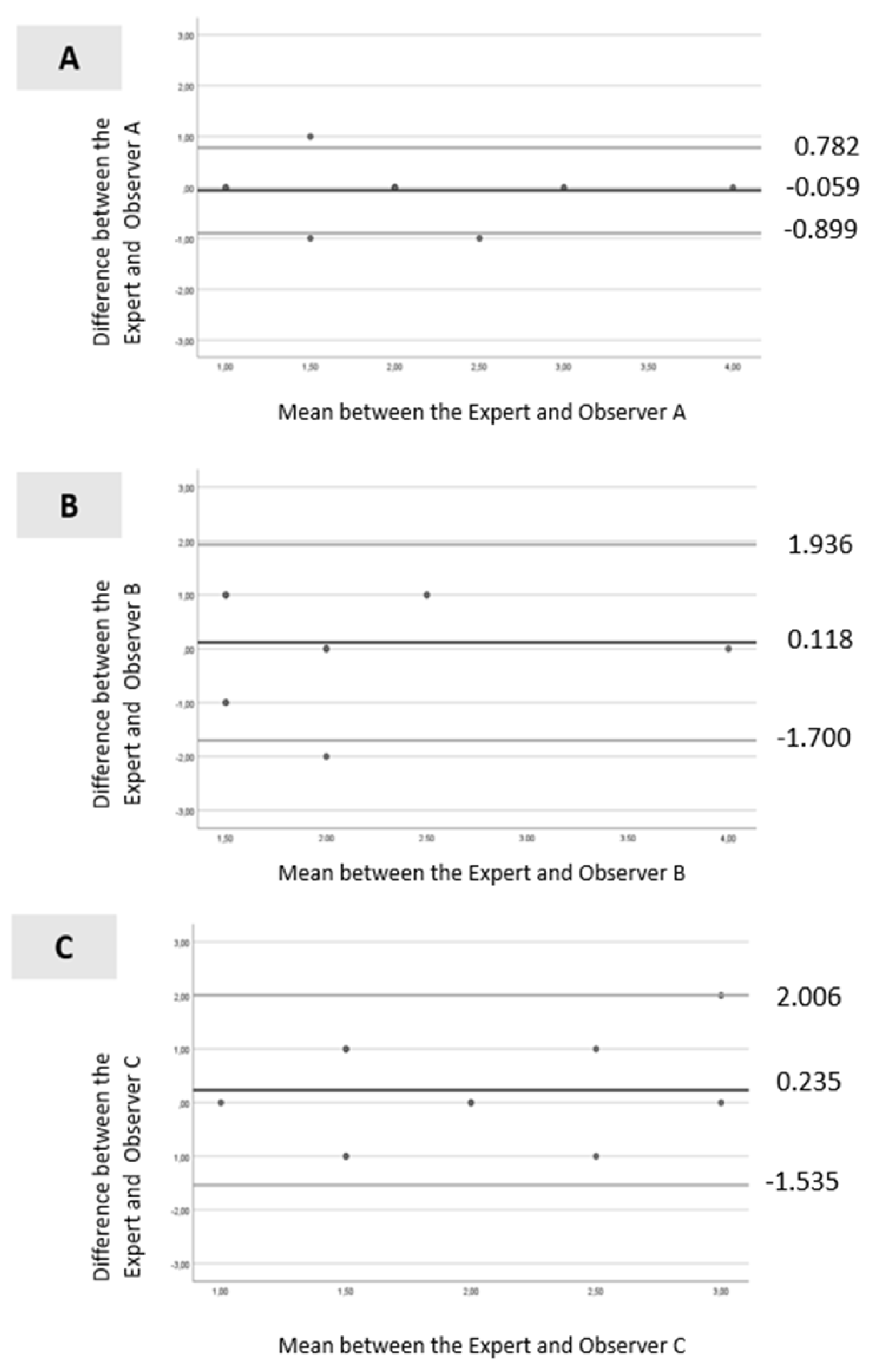

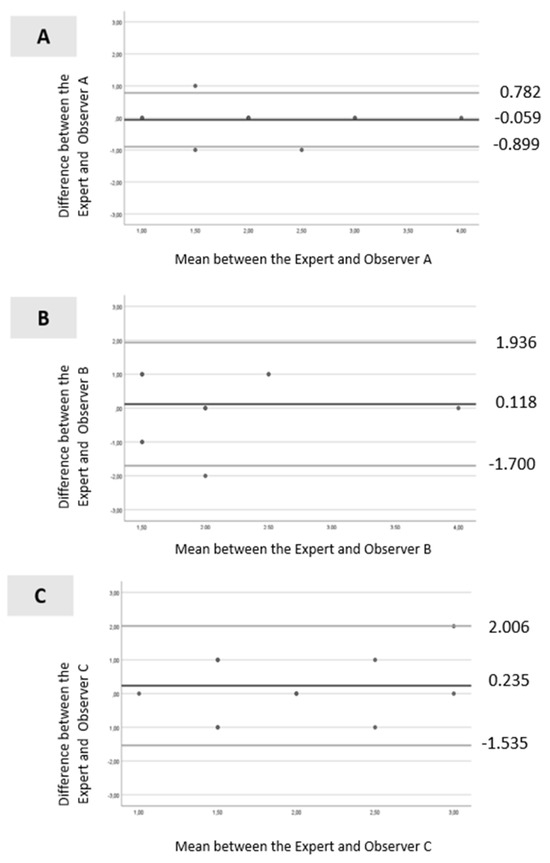

In the BUT measurements, only Observer A showed substantial agreement with the expert (Cohen’s Kappa = 0.710, p < 0.001; Kappa values between 0.61 and 0.80 denote substantial agreement). For Observer B (Cohen’s Kappa = −0.127; p = 0.46) and Observer C (Cohen’s Kappa = −0.094; p = 0.59), agreement with the expert was negative and not statistically significant. The interobserver reliability assessed by the ICC was excellent for Observer A (ICC = 0.924, p < 0.001). Conversely, Observers B and C showed low agreement with the expert, with ICC values of 0.466 and 0.380, respectively (both p < 0.001).

Bland–Altman analysis is presented in Figure 3A–C. For Observer A (Figure 3A), all values except one were within the limits of agreement, and the dispersion of the points around the mean bias line was not considerable (mean bias ± standard deviation [SD] = −0.059 ± 0.429; limits of agreement from −0.899 to 0.782). For Observer B (Figure 3B), all values were within the limits of agreement, and the dispersion of the points around the mean bias line was not considerable (mean bias ± SD = 0.118 ± 0.928; limits of agreement from −1.700 to 1.936). Similar results were observed for Observer C (Figure 3C), with all values within the limits of agreement and minimal dispersion of the points around the mean bias line (mean bias ± SD = 0.235 ± 0.903; limits of agreement from −1.535 to 2.006).

Figure 3.

Bland–Altman scatter plot: (A) expert versus Observer A; (B) expert versus Observer B; (C) expert versus Observer C. Legend: black dashed line: mean difference; upper gray dashed line: mean difference + 1.96 × SD of the differences; lower gray dashed line: mean difference − 1.96 × SD of the differences.

4. Discussion

The literature review indicated the need to develop a protocol for the assessment of binocular vision and the ocular surface to ascertain their role in DESS. We conducted a feasibility study to test the implementation of such a protocol, which may facilitate comparison across observational studies and ensure applicability in both clinical and scientific research contexts. We found notable inconsistencies in the subjective assessment of visual symptoms across studies. While most studies used only a single questionnaire [28,29,31,34,37,39], two studies employed two questionnaires, both including the CVS-Q [35,64]. The CVS-Q emerged as the most frequently used tool; however, the overall variability in the selection and application of questionnaires limits comparability between studies. Key strengths of the present study include the use of three questionnaires, an objective assessment of binocular vision, and objective metrics of smartphone screen time collected directly from each participant’s device. The DESIROUS protocol integrates a comprehensive set of instruments, including questionnaires, binocular vision assessment methods, and ocular surface evaluation, all supported by validated data collection tools.

The present study revealed that two of the three questionnaires, the CVS-Q and the CISS, exhibited good internal consistency, whereas the DEQ-5 showed only moderate internal consistency. The original Spanish CVS-Q demonstrated sensitivity and specificity above 70% and a Cronbach’s alpha of 0.78 [4]. A 2024 literature review on DESS and binocular vision changes revealed that the CVS-Q was the most frequently used tool [28,31,33,40] because it allows a straightforward assessment of syndrome prevalence. Accordingly, this questionnaire was selected for inclusion in the DESIROUS study. Another contributing factor was its translation and adaptation into Portuguese [42,65], which demonstrated good internal consistency, with Cronbach’s alpha values of 0.87 for both the frequency and the intensity assessments [42].

Rouse et al. (2004) demonstrated that the CISS had excellent internal consistency (Cronbach’s alpha = 0.96), as well as high sensitivity (97.8%) and specificity (87%) for detecting CI in young adults aged 19 to 30 years [46]. After its translation and cultural adaptation to Portuguese in 2014, the CISS showed good internal consistency (Cronbach’s alpha = 0.89) and high reproducibility as a tool for measuring visual discomfort associated with near-vision tasks, in line with other studies [45]. A recent study applying Rasch analysis to the structure of the CISS found that certain categories within the questionnaire require refinement to improve its performance; nonetheless, the authors concluded that the CISS remains a valid and reliable tool for measuring the symptoms it assesses [66]. We also performed a Rasch analysis of the CISS, but it failed to produce a stable solution regarding item quality and the resulting measurement model: the Rasch analysis software excluded 87% (13 of 15) of the items. This outcome is likely due to the small sample size and the limited variability in item responses. The small sample resulted from selecting a class of 26 students, of whom only 18 agreed to participate in this study. Given these limitations, we relied on Cronbach’s alpha alone, which remains a valid approach for assessing internal consistency in this context. Rasch analysis may nevertheless prove useful, and we plan to conduct it in the main study, where a larger and more diverse sample will allow a more robust psychometric analysis.

The CISS was specifically designed for CI and therefore cannot identify other binocular vision anomalies; the symptoms of accommodative and non-strabismic binocular dysfunctions are varied and often overlap, and there is no clear consensus on which symptoms should be considered for the diagnosis of each condition [67]. The Symptom Questionnaire for Visual Dysfunctions (SQVD) was recently developed to identify symptoms associated with various binocular vision disorders [68], but it has not yet been translated into Portuguese. Because the SQVD was validated in a clinical population, it would be valuable in future work to translate it and culturally adapt it for Portuguese-speaking university students.

In the Portuguese translation of the DEQ, internal consistency was not evaluated with Cronbach’s alpha [43]. Instead, content validity was assessed by calculating the percentage agreement for each item across three translations, yielding a high agreement rate of 81.5% among members of the first and second evaluation committees. Gross et al. reported that the interobserver reliability of the translated DEQ-5 ranged from 0.584 to 0.813, with question 2b (“When your eyes were dry, how intense was this dryness at the end of the day, within two hours of going to bed?”) showing the lowest reliability [44]. The same questionnaire had a Cronbach’s alpha of 0.89, indicating high internal consistency [44]. The lower alpha observed in this pilot study may be partly attributable to the small sample size. All items were retained for testing in the main study to maintain comparability with the existing literature. However, future studies with larger and more diverse populations will be needed to reassess internal consistency; these studies may also include item-level analyses to identify and refine potentially problematic items, thereby improving the questionnaire’s reliability in the context of DESS.
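One common item-level diagnostic of the kind mentioned above is “alpha if item deleted”: Cronbach’s alpha is recomputed with each item left out in turn, and an item whose removal raises alpha may be measuring something different from the rest of the scale. The Python sketch below is offered as an assumption about how such an analysis could be run, not as the analysis planned for the main study; the function names are hypothetical, and the score matrix is synthetic, not DEQ-5 data.

```python
from statistics import variance


def cronbach_alpha(scores):
    """Cronbach's alpha; rows = participants, columns = items (complete data)."""
    k = len(scores[0])
    sum_item_var = sum(variance(col) for col in zip(*scores))
    total_var = variance([sum(row) for row in scores])
    if total_var == 0:
        raise ValueError("total scores do not vary; alpha is undefined")
    return (k / (k - 1)) * (1 - sum_item_var / total_var)


def alpha_if_item_deleted(scores):
    """Alpha recomputed with each item removed in turn (needs >= 3 items)."""
    k = len(scores[0])
    if k < 3:
        raise ValueError("need at least three items so that two remain")
    results = []
    for drop in range(k):
        # Rebuild every participant's row without the dropped item.
        reduced = [[v for j, v in enumerate(row) if j != drop] for row in scores]
        results.append(cronbach_alpha(reduced))
    return results


# Synthetic data: items 1 and 2 agree perfectly, item 3 varies independently.
scores = [[1, 1, 1], [2, 2, 1], [1, 1, 2], [2, 2, 2]]
print("alpha (all items):", round(cronbach_alpha(scores), 3))
for i, a in enumerate(alpha_if_item_deleted(scores), start=1):
    print(f"alpha without item {i}: {a:.3f}")
```

In this synthetic case, alpha for all three items is 0.6; dropping item 3 raises it to 1.0, flagging that item for review, whereas dropping either of the other items lowers it to 0. With samples as small as the present one, such item-level estimates are unstable and would need confirmation in a larger sample.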

Comparing the internal consistency values obtained in the present study with those reported for the original questionnaires and their Portuguese translations showed that, although internal consistency was good for the CVS-Q and CISS, all three values were lower than those previously reported. This divergence may reflect differences in sample characteristics: the earlier validation studies included older individuals, who were likely less exposed to electronic devices, so certain items may be less well suited to the younger participants in the present study. The limited sample size may also have contributed.

It is important to note that questionnaires have disadvantages, particularly recall bias and the subjectivity of responses. These instruments assess the frequency and intensity of symptoms, yet not all individuals recall past events with the same accuracy. Moreover, responses on the measurement scale are shaped by each respondent’s subjective perception. Therefore, although questionnaires provide valuable indicators for assessing certain conditions, the information they yield should be complemented with objective measurements to ensure a more precise and robust evaluation.

Previous studies evaluating binocular vision have focused on accommodation, although the tests used varied. Most studies evaluated BAF, NPA [28,29,31,35,37,64], and accommodative posture. Fewer studies assessed fusional vergence [30,33] and the NPC [28,30,37]. A strength of our protocol is its comprehensive approach, which includes all of these assessments (accommodation, fusional vergence, and NPC) for a more complete evaluation of binocular vision. Although the cover test was performed to detect heterophoria, a limitation of the current study is the lack of prism cover test measurements, which would allow the magnitude of the heterophoria to be quantified. Future studies should evaluate whether adding prism cover test measurements to the protocol improves its accuracy.

The analysis of interobserver variability for the BUT test showed that Observer A had the highest agreement with the expert, and this agreement was statistically significant (Cohen’s Kappa = 0.710; ICC = 0.924; both p < 0.001), with the ICC indicating excellent agreement. Given the considerable discrepancies observed between operators for the BUT test, measurements from non-expert operators should be interpreted with caution. A low ICC value may reflect not only poor agreement among assessors but also a lack of variability among individuals in the sample, a small sample size, or a small number of observers [69]. The Bland–Altman analysis showed a good level of agreement for all observers, as the points showed limited dispersion and lay relatively close to the line representing the mean bias. However, Observer A showed the closest agreement with the gold standard, with the narrowest limits of agreement, suggesting more accurate and reliable measurements. Based on these findings, in the main study the BUT test will be video-recorded and the recordings will be evaluated by Observer A to ensure the reliability of all measurements. Because variability was found in the administration of the BUT test, future studies should incorporate rigorous examiner training protocols and consider video-assisted documentation to ensure greater consistency and reliability in objective testing.
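The interobserver analysis relied on three agreement statistics that answer different questions: Cohen’s Kappa measures chance-corrected agreement on categories; Bland–Altman analysis gives the mean bias and 95% limits of agreement (mean difference ± 1.96 SD of the differences) in seconds; and the ICC compares between-participant variance with total variance, which is why a homogeneous sample can yield a low ICC even when examiners disagree little [69]. The Python sketch below illustrates all three using only the standard library. It rests on assumptions not stated in the protocol: Kappa is computed on BUT values dichotomised at a hypothetical 10 s cut-off, and the ICC shown is the two-way random-effects, absolute-agreement, single-rater form ICC(2,1) described by Koo and Li [69], which may not be the form used in this study. All function names and example values are hypothetical.

```python
from collections import Counter
from statistics import mean, stdev


def classify_but(seconds, cutoff=10.0):
    """Dichotomise a BUT reading (s); the 10 s cut-off is an assumption."""
    return "short" if seconds < cutoff else "normal"


def cohens_kappa(labels_a, labels_b):
    """Unweighted Cohen's kappa for two raters labelling the same cases."""
    n = len(labels_a)
    if n == 0 or n != len(labels_b):
        raise ValueError("need two non-empty label lists of equal length")
    p_observed = sum(a == b for a, b in zip(labels_a, labels_b)) / n
    count_a, count_b = Counter(labels_a), Counter(labels_b)
    # Chance agreement: product of the raters' marginal proportions, summed over categories.
    p_chance = sum(count_a[c] * count_b[c] for c in count_a) / n ** 2
    if p_chance == 1:
        raise ValueError("kappa is undefined when both raters use a single category")
    return (p_observed - p_chance) / (1 - p_chance)


def bland_altman(observer, reference):
    """Mean bias and 95% limits of agreement (bias +/- 1.96 SD of the differences)."""
    diffs = [o - r for o, r in zip(observer, reference)]
    bias, sd = mean(diffs), stdev(diffs)
    return bias, bias - 1.96 * sd, bias + 1.96 * sd


def icc_2_1(ratings):
    """ICC(2,1): two-way random effects, absolute agreement, single rater.

    ratings: one row per participant, one column per rater (complete data).
    """
    n, k = len(ratings), len(ratings[0])
    if n < 2 or k < 2:
        raise ValueError("need at least two participants and two raters")
    grand = mean(v for row in ratings for v in row)
    row_means = [mean(row) for row in ratings]
    col_means = [mean(col) for col in zip(*ratings)]
    ss_rows = k * sum((m - grand) ** 2 for m in row_means)  # between participants
    ss_cols = n * sum((m - grand) ** 2 for m in col_means)  # between raters
    ss_total = sum((v - grand) ** 2 for row in ratings for v in row)
    ms_rows = ss_rows / (n - 1)
    ms_cols = ss_cols / (k - 1)
    ms_error = (ss_total - ss_rows - ss_cols) / ((n - 1) * (k - 1))
    return (ms_rows - ms_error) / (
        ms_rows + (k - 1) * ms_error + (k / n) * (ms_cols - ms_error)
    )


# Hypothetical BUT readings (s) for four eyes: expert versus one trainee observer.
expert = [12.0, 8.0, 6.5, 11.0]
trainee = [11.0, 9.0, 7.0, 10.5]
print("kappa:", cohens_kappa([classify_but(s) for s in trainee],
                             [classify_but(s) for s in expert]))
print("bias and 95% LoA:", bland_altman(trainee, expert))

# Same +/-1 s examiner discrepancies, different spread of BUT across participants.
wide = [[2, 3], [6, 5], [10, 11], [14, 13]]
narrow = [[7, 8], [8, 7], [9, 10], [10, 9]]
print("ICC, wide sample:  ", round(icc_2_1(wide), 3))
print("ICC, narrow sample:", round(icc_2_1(narrow), 3))
```

With these synthetic data, the ICC is roughly 0.98 in the wide sample and 0.67 in the narrow one, although the examiner differences (and hence the Bland–Altman limits) are identical in both. This illustrates why the ICC is best read alongside the limits of agreement rather than on its own.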

Because symptom assessment relies on subjective questionnaires, this study highlights the need for further studies using more objective measures to improve the accuracy of research and the reliability of study protocols. In the future, this protocol could be optimized by removing instruments or incorporating new ones to improve its validity and accuracy. In particular, efforts will be made to improve standardization in the main study, including (1) structured examiner training sessions using standardized video demonstrations and competency-based checklists; (2) calibration procedures across examiners to ensure consistent adherence to the examination protocols; and (3) an evaluation of the feasibility of video-assisted documentation to allow post hoc quality control and improved reproducibility.

Limitations of the Study

This study has several limitations that should be considered for future protocol improvements. The sample size was relatively small; however, as this was a pilot study, its primary aim was to test the feasibility and robustness of the study protocol. Future studies involving larger and more diverse populations will be essential to increase statistical power and assess the protocol’s applicability across broader demographic and clinical settings. In addition, although the CISS, chosen to assess convergence insufficiency, is widely used internationally, some studies have raised concerns about its suitability as a screening or diagnostic tool for CI [70,71], and a recent Rasch analysis identified aspects of the questionnaire that require refinement to improve its performance [66].

In this pilot study, validation of the questionnaires was limited to internal consistency because the sample was too small for a full psychometric analysis. Future studies with larger and more diverse samples are needed to enable comprehensive psychometric validation, including assessments of construct validity, test–retest reliability, and factor structure. To broaden symptom profiling in future phases, additional validated tools, such as the SQVD, may be incorporated. Such instruments could provide a more nuanced understanding of the range and severity of visual symptoms associated with DESS, particularly those related to binocular vision. Although the SQVD is not yet available in Portuguese, its inclusion in the protocol could add significant value, pending appropriate translation and cultural adaptation.

Future phases of the study should consider adding a longitudinal follow-up to monitor DESS symptoms and changes in visual and binocular function, particularly in relation to sustained screen exposure. This would help clarify whether certain functional markers, such as accommodation or tear film instability, may serve as early indicators of symptom development.

It is therefore recommended that the protocol be refined in line with ongoing scientific research. Despite these limitations, the results contribute to the validation of the study instruments, providing a more robust foundation for future studies using this methodology and for further research in the field.

5. Conclusions

The DESIROUS protocol is designed to ensure consistent data collection by combining objective and subjective measures. This pilot study supports the feasibility of implementing the DESIROUS protocol to assess binocular vision and ocular surface parameters. These preliminary findings provide a foundation for a larger study that will explore these associations in greater depth. The overarching goal is to establish clinical guidelines that help eye care professionals incorporate objective assessments into clinical practice, thereby enabling more personalized and effective management of visual complaints. By integrating subjective and objective measures to better characterize the individual visual profiles associated with DESS, this study contributes to the advancement of personalized medicine. The DESIROUS protocol offers a structured framework for identifying potential functional biomarkers (e.g., binocular vision or ocular surface anomalies) that may help distinguish true DESS from coexisting or underlying conditions requiring clinical intervention, such as convergence insufficiency, uncorrected refractive errors, or dry eye disease. By facilitating earlier and more accurate diagnosis, this approach may enable tailored treatment strategies, reduce misdiagnosis, and support targeted patient management.

Author Contributions

M.J.B. conceived the idea, developed the protocol, reviewed the literature, participated in the data collection for the pilot study, and wrote the initial draft of the manuscript. C.L. and A.M.-R. supervised the design of the data collection tools and contributed to the manuscript writing. P.A. was responsible for the sampling process and the development of the statistical approach. C.L., A.M.-R., P.A., and A.G. critically reviewed the manuscript for intellectual content. All authors have read and agreed to the published version of the manuscript.

Funding

This research received no external funding.

Institutional Review Board Statement

This study was publicly discussed as part of a doctoral thesis project in July 2022 in Évora, Portugal, and was conducted in accordance with the Declaration of Helsinki and approved by the Ethics Committee of the University of Évora (reference number 22090-29/11/2022) and the Lisbon School of Health Technology (reference number 96-2022-10/01/2023).

Informed Consent Statement

Informed consent was obtained from all subjects involved in this study.

Data Availability Statement

The datasets used and/or analyzed during the current study are available from the corresponding author on reasonable request.

Acknowledgments

The authors thank Susana Plácido from the School of Health Technology, Polytechnic Institute of Lisbon, and the final-year students of the bachelor’s program in Orthoptics and Vision Sciences at the Lisbon School of Health Technology: Patricia Barreira, Raquel Pereira, and Sofia Lourenço.

Conflicts of Interest

C.L. reports personal fees from Eyerising International outside the submitted work. A.G. reports personal fees from Thea, Nevakar, Zeiss, and Eyerising and grants from Essilor and CooperVision outside the submitted work. M.J.B., P.A., and A.M.-R. declare that they do not have competing interests.

Abbreviations

The following abbreviations are used in this manuscript:

| Abbreviation | Definition |
|---|---|
| MDPI | Multidisciplinary Digital Publishing Institute |
| BAF | Binocular accommodative facility |
| BUT | Tear film break-up time |
| CI | Convergence insufficiency |
| CISS | Convergence Insufficiency Symptom Survey |
| CITT | Convergence Insufficiency Treatment Trial |
| cm | Centimeters |
| cpm | Cycles per minute |
| CVS | Computer Vision Syndrome |
| CVS-Q | Computer Vision Syndrome Questionnaire |
| DED | Dry eye disease |
| DEQ-5 | 5-Item Dry Eye Questionnaire |
| DESS | Digital Eye Strain Syndrome |
| ESTeSL | Lisbon School of Health Technology |
| ICC | Intraclass Correlation Coefficient |
| LE | Left eye |
| NFV | Negative fusional vergence |
| NPA | Near point of accommodation |
| NPC | Near point of convergence |
| OSDI | Ocular Surface Disease Index |
| PD | Prism diopter |
| PFV | Positive fusional vergence |
| QOL | Quality of life |
| RAF | Royal Air Force ruler |
| RE | Right eye |
| SD | Standard deviation |
| SQVD | Symptom Questionnaire for Visual Dysfunctions |
| SS | Sjögren’s syndrome |
| VA | Visual acuity |

References

1. Sheppard, A.L.; Wolffsohn, J.S. Digital eye strain: Prevalence, measurement and amelioration. BMJ Open Ophthalmol. 2018, 3, e000146.

2. Blehm, C.; Vishnu, S.; Khattak, A.; Mitra, S.; Yee, R.W. Computer vision syndrome: A review. Surv. Ophthalmol. 2005, 50, 253–262.

3. Rosenfield, M. Computer vision syndrome: A review of ocular causes and potential treatments. Ophthalmic Physiol. Opt. 2011, 31, 502–515.

4. Seguí, M.; Cabrero-García, J.; Crespo, A.; Verdú, J.; Ronda, E. A reliable and valid questionnaire was developed to measure Computer Vision Syndrome at the workplace. J. Clin. Epidemiol. 2015, 68, 662–673.

5. Seresirikachorn, K.; Thiamthat, W.; Sriyuttagrai, W.; Soonthornworasiri, N.; Singhanetr, P.; Yudtanahiran, N.; Theeramunkong, T. Effects of digital devices and online learning on computer vision syndrome in students during the COVID-19 era: An online questionnaire study. BMJ Paediatr. Open 2022, 6, e001429.

6. Abusamak, M.; Jaber, H.M.; Alrawashdeh, H.M. The Effect of Lockdown Due to the COVID-19 Pandemic on Digital Eye Strain Symptoms Among the General Population: A Cross-Sectional Survey. Front. Public Health 2022, 10, 895517.

7. Akowuah, P.K.; Nti, A.N.; Ankamah-Lomotey, S.; Frimpong, A.A.; Fummey, J.; Boadi, P.; Osei-Poku, K.; Adjei-Anang, J. Digital Device Use, Computer Vision Syndrome, and Sleep Quality among an African Undergraduate Population. Adv. Public Health 2021, 2021, 6611348.

8. Almudhaiyan, T.M.; Aldebasi, T.; Alakel, R.; Marghlani, L.; Aljebreen, A.; Moazin, O.M. The Prevalence and Knowledge of Digital Eye Strain Among the Undergraduates in Riyadh, Saudi Arabia. Cureus J. Med. Sci. 2023, 15, e37081.

9. Gammoh, Y. Digital Eye Strain and Its Risk Factors Among a University Student Population in Jordan: A Cross-Sectional Study. Cureus 2021, 13, 6–13.

10. Iqbal, M.; Soliman, A.; Ibrahim, O.; Gad, A. Analysis of the Outcomes of the Screen-Time Reduction in Computer Vision Syndrome: A Cohort Comparative Study. Clin. Ophthalmol. 2023, 17, 123–134.

11. Peter, R.G.; Giloyan, A.; Harutyunyan, T.; Petrosyan, V. Computer vision syndrome (CVS): The assessment of prevalence and associated risk factors among the students of the American University of Armenia. J. Public Health 2023, 2023, 1–10.

12. Sharma, A.; Satija, J.; Antil, P.; Dahiya, R.; Shekhawat, S. Determinants of digital eye strain among university students in a district of India: A cross-sectional study. J. Public Health 2023, 32, 1571–1576.

13. Wang, L.; Wei, X.; Deng, Y. Computer Vision Syndrome During SARS-CoV-2 Outbreak in University Students: A Comparison Between Online Courses and Classroom Lectures. Front. Public Health 2021, 9, 696036.

14. Wangsan, K.; Upaphong, P.; Assavanopakun, P.; Sapbamrer, R.; Sirikul, W.; Kitro, A.; Sirimaharaj, N.; Kuanprasert, S.; Saenpo, M.; Saetiao, S.; et al. Self-Reported Computer Vision Syndrome among Thai University Students in Virtual Classrooms during the COVID-19 Pandemic: Prevalence and Associated Factors. Int. J. Environ. Res. Public Health 2022, 19, 3996.

15. Anshel, J. The Eyes and Visual System. In Visual Ergonomics Handbook, 1st ed.; CRC Press: Boca Raton, FL, USA, 2005; p. 232.

16. Shrestha, G.S.; Mohamed, F.N.; Shah, D.N. Visual problems among video display terminal (VDT) users in Nepal. J. Optom. 2011, 4, 56–62.

17. Yang, S.N.; Tai, Y.C.; Hayes, J.R.; Doherty, R.; Corriveau, P.; Sheedy, J.E. Effects of font size and display quality on reading performance and visual discomfort of developmental readers. Optom. Vis. Sci. 2010, 87, 105–244.

18. Rosenfield, M.; Li, R.T.; Kirsch, N.T. A double-blind test of blue-blocking filters on symptoms of digital eye strain. Work 2020, 65, 343–348.

19. Cacho-Martínez, P.; Cantó-Cerdán, M.; Carbonell-Bonete, S.; García-Muñoz, Á. Characterization of visual symptomatology associated with refractive, accommodative, and binocular anomalies. J. Ophthalmol. 2015, 2015, 31–42.

20. Jaiswal, S.; Asper, L.; Long, J.; Lee, A.; Harrison, K.; Golebiowski, B. Ocular and visual discomfort associated with smartphones, tablets and computers: What we do and do not know. Clin. Exp. Optom. 2019, 102, 463–477.

21. Gowrisankaran, S.; Sheedy, J.E. Computer vision syndrome: A review. Work 2015, 52, 303–314.

22. Sánchez-Brau, M.; Domenech-Amigot, B.; Brocal-Fernández, F.; Quesada-Rico, J.A.; Seguí-Crespo, M. Prevalence of Computer Vision Syndrome and Its Relationship with Ergonomic and Individual Factors in Presbyopic VDT Workers Using Progressive Addition Lenses. Int. J. Environ. Res. Public Health 2020, 17, 1003.

23. Soria-Oliver, M.; López, J.S.; Torrano, F.; García-González, G.; Lara, Á. New patterns of information and communication technologies usage at work and their relationships with visual discomfort and musculoskeletal diseases: Results of a cross-sectional study of Spanish organizations. Int. J. Environ. Res. Public Health 2019, 16, 3166.

24. Mowatt, L.; Gordon, C.; Santosh, A.B.R.; Jones, T. Computer vision syndrome and ergonomic practices among undergraduate university students. Int. J. Clin. Pract. 2018, 72, e13035.

25. Cacho-Martínez, P.; Cantó-Cerdán, M.; Lara-Lacárcel, F.; García-Muñoz, Á. Assessing the role of visual dysfunctions in the association between visual symptomatology and the use of digital devices. J. Optom. 2024, 17, 100510.

26. Alqarni, A.M.; Alabdulkader, A.M.; Alghamdi, A.N.; Altayeb, J.; Jabaan, R.; Assaf, L.; Alanazi, R.A. Prevalence of Digital Eye Strain Among University Students and Its Association with Virtual Learning During the COVID-19 Pandemic. Clin. Ophthalmol. 2023, 17, 1755–1768.

27. Artime-Ríos, E.; Suárez-Sánchez, A.; Sánchez-Lasheras, F.; Seguí-Crespo, M. Computer vision syndrome in healthcare workers using video display terminals: An exploration of the risk factors. J. Adv. Nurs. 2022, 78, 2095–2110.

28. De-Hita-Cantalejo, C.; García-Pérez, Á.; Capote-Puente, R.; Sánchez-González, M.C. Accommodative and binocular disorders in preteens with computer vision syndrome: A cross-sectional study. Ann. N. Y. Acad. Sci. 2021, 1492, 73–81.

29. De-Hita-Cantalejo, C.; Sanchez-Gonzalez, J.M.; Silva-Viguera, C.; Sanchez-Gonzalez, M.C. Tweenager Computer Visual Syndrome Due to Tablets and Laptops during the Postlockdown COVID-19 Pandemic and the Influence on the Binocular and Accommodative System. J. Clin. Med. 2022, 11, 5317.

30. Maharjan, U.; Rijal, S.; Jnawali, A.; Sitaula, S.; Bhattarai, S.; Shrestha, G.B. Binocular vision findings in normally-sighted school aged children who used digital devices. PLoS ONE 2022, 17, e0266068.

31. Liu, Z.Y.; Zhang, K.Y.; Gao, S.A.; Yang, J.; Qiu, W. Correlation between Eye Movements and Asthenopia: A Prospective Observational Study. J. Clin. Med. 2022, 11, 7043.

32. Auffret, E.; Mielcarek, M.; Bourcier, T.; Delhommais, A.; Speeg-Schatz, C.; Sauer, A. Digital eye strain. Functional symptoms and binocular balance analysis in intensive digital users. J. Fr. Ophtalmol. 2022, 45, 438–445.

33. Yammouni, R.; Evans, B.J.W. Is reading rate in digital eyestrain influenced by binocular and accommodative anomalies? J. Optom. 2021, 14, 229–239.

34. Golebiowski, B.; Long, J.; Harrison, K.; Lee, A.; Chidi-Egboka, N.; Asper, L. Smartphone Use and Effects on Tear Film, Blinking and Binocular Vision. Curr. Eye Res. 2020, 45, 428–434.

35. Yammouni, R.; Evans, B.J. An investigation of low power convex lenses (adds) for eyestrain in the digital age (CLEDA). J. Optom. 2020, 13, 198–209.

36. Sánchez-Brau, M.; García González, G. Prevalencia del síndrome visual informático (SVI) en trabajadores présbitas. Arch. Prev. Riesgos Laborales 2021, 24, 200–203.

37. Auffret, E.; Mielcarek, M.; Bourcier, T.; Delhommais, A.; Speeg-Schatz, C.; Sauer, A. Stress oculaire induit par les écrans. Analyses des symptômes fonctionnels et de l’équilibre binoculaire chez des utilisateurs intensifs. J. Fr. Ophtalmol. 2022, 45, 438–445.

38. Wang, J.; Zeng, P.; Deng, X.-W.; Liang, J.-Q.; Liao, Y.-R.; Fan, S.-X.; Xiao, J.-H. Eye Habits Affect the Prevalence of Asthenopia in Patients with Myopia. J. Ophthalmol. 2022, 2022, 8669217.

39. Sánchez-Brau, M.; Domenech-Amigot, B.; Brocal-Fernández, F.; Seguí-Crespo, M. Computer vision syndrome in presbyopic digital device workers and progressive lens design. Ophthalmic Physiol. Opt. 2021, 41, 922–931.

40. Cantó-Sancho, N.; Sánchez-Brau, M.; Ivorra-Soler, B.; Seguí-Crespo, M. Computer vision syndrome prevalence according to individual and video display terminal exposure characteristics in Spanish university students. Int. J. Clin. Pract. 2021, 75, e13681.

41. Lwanga, S.K.; Lemeshow, S. Sample Size Determination in Health Studies: A Practical Manual; World Health Organization: Geneva, Switzerland, 1991.

42. Rodrigues, M.A.; Mateus, C. Adaptação cultural e confiabilidade da versão portuguesa do Computer Vision Syndrome Questionnaire (CVS-Q). Resumos Ciênc. Visão 2020, 261.

43. Alves, M.; Brardo, F. Tradução e validação para a Língua Portuguesa do Questionário Dry Eye Questionnaire. Universidade da Beira Interior, Covilhã, Portugal, 2012.

44. Gross, L.G.; Pozzebon, M.E.; Favarato, A.P.; Ayub, G.; Alves, M. Translation and validation of the 5-Item Dry Eye Questionnaire into Portuguese. Arq. Bras. Oftalmol. 2024, 87, e2022-0197.

45. Tavares, C.; Nunes, A.M.M.F.; Nunes, A.J.S.; Pato, M.V.; Monteiro, P.M.L. Translation and validation of Convergence Insufficiency Symptom Survey (CISS) to Portuguese-psychometric results. Arq. Bras. Oftalmol. 2014, 77, 21–24.

46. Rouse, M.W.; Borsting, E.J.; Mitchell, G.L.; Scheiman, M.; Cotter, S.A.; Cooper, J.; Kulp, M.T.; London, R.; Wensveen, J.; Convergence Insufficiency Treatment Trial Group. Validity and reliability of the revised convergence insufficiency symptom survey in adults. Ophthalmic Physiol. Opt. 2004, 24, 384–390.

47. Darko-Takyi, C.; Owusu-Ansah, A.; Boampong, F.; Morny, E.K.; Hammond, F.; Ocansey, S. Convergence insufficiency symptom survey (CISS) scores are predictive of severity and number of clinical signs of convergence insufficiency in young adult Africans. J. Optom. 2022, 15, 228–237.

48. Boccardo, L.; Di Vizio, A.; Galli, G.; Naroo, S.A.; Fratini, A.; Tavazzi, S.; Gurioli, M.; Zeri, F. Translation and validation of convergence insufficiency symptom survey to Italian: Psychometric results. J. Optom. 2023, 16, 189–198.

49. Marran, L.F. Validity and reliability of the revised Convergence Insufficiency Symptom Survey in children aged 9 to 18 years (multiple letters). Optom. Vis. Sci. 2004, 81, 489–490.

50. Wolffsohn, J.S.; Arita, R.; Chalmers, R.; Djalilian, A.; Dogru, M.; Dumbleton, K.; Gupta, P.K.; Karpecki, P.; Lazreg, S.; Pult, H.; et al. TFOS DEWS II Diagnostic Methodology report. Ocul. Surf. 2017, 15, 539–574.

51. Chalmers, R.L.; Begley, C.G.; Caffery, B. Validation of the 5-Item Dry Eye Questionnaire (DEQ-5): Discrimination across self-assessed severity and aqueous tear deficient dry eye diagnoses. Contact Lens Anterior Eye 2010, 33, 55–60.

52. Stapleton, F.; Alves, M.; Bunya, V.Y.; Jalbert, I.; Lekhanont, K.; Malet, F.; Na, K.-S.; Schaumberg, D.; Uchino, M.; Vehof, J.; et al. TFOS DEWS II Epidemiology Report. Ocul. Surf. 2017, 15, 334–365.

53. Ilechie, A.; Mensah, T.; Abraham, C.H.; Addo, N.A.; Ntodie, M.; Ocansey, S.; Boadi-Kusi, S.B.; Owusu-Ansah, A.; Ezinne, N. Assessment of four validated questionnaires for screening of dry eye disease in an African cohort. Contact Lens Anterior Eye 2022, 45, 101468.

54. von Noorden, G.K.; Campos, E.C. Binocular Vision and Ocular Motility: Theory and Management of Strabismus, 6th ed.; Mosby: St. Louis, MO, USA, 2002.

55. Bicas, H.E.A. Fisiologia da visão binocular. Arq. Bras. Oftalmol. 2004, 67, 172–180.

56. Daiber, H.F.; Gnugnoli, D.M. Visual Acuity; StatPearls: Treasure Island, FL, USA, 2024.

57. Hussaindeen, J.R.; Murali, A. Accommodative insufficiency: Prevalence, impact and treatment options. Clin. Optom. 2020, 12, 135–149.

58. Franco, S.; Moreira, A.; Fernandes, A.; Baptista, A. Accommodative and binocular vision dysfunctions in a Portuguese clinical population. J. Optom. 2022, 15, 271–277.

59. Nunes, A.F.; Monteiro, P.M.L.; Ferreira, F.B.P.; Nunes, A.S. Convergence insufficiency and accommodative insufficiency in children. BMC Ophthalmol. 2019, 19, 58.

60. García-Muñoz, Á.; Carbonell-Bonete, S.; Cantó-Cerdán, M.; Cacho-Martínez, P. Accommodative and binocular dysfunctions: Prevalence in a randomised sample of university students. Clin. Exp. Optom. 2016, 99, 313–321.

61. Abelson, M.B.; Ousler, G.W.; Nally, L.A.; Welch, D.; Krenzer, K. Alternative reference values for tear film break up time in normal and dry eye populations. Adv. Exp. Med. Biol. 2002, 506, 1121–1125.

62. Cronbach, L.J. Coefficient alpha and the internal structure of tests. Psychometrika 1951, 16, 297–334.

63. Streiner, D.L. Being Inconsistent About Consistency: When Coefficient Alpha Does and Doesn’t Matter. J. Personal. Assess. 2003, 80, 217–222.

64. Vaz, F.T.; Henriques, S.P.; Silva, D.S.; Roque, J.; Lopes, A.S.; Mota, M. Digital asthenopia: Portuguese group of ergophthalmology survey. Acta Med. Port. 2019, 32, 260–265.

65. Pina, A. Síndrome Visual de Computador: Influência de Fatores Individuais e da Ergonomia do Posto de Trabalho nas Alterações Visuais. Dissertação, Instituto Politécnico do Porto, Porto, Portugal, 2018.

66. González-Pérez, M.; Pérez-Garmendia, C.; Barrio, A.R.; García-Montero, M.; Antona, B. Spanish cross-cultural adaptation and Rasch analysis of the Convergence Insufficiency Symptom Survey (CISS). Transl. Vis. Sci. Technol. 2020, 9, 23.

67. García-Muñoz, Á.; Carbonell-Bonete, S.; Cacho-Martínez, P. Symptomatology associated with accommodative and binocular vision anomalies. J. Optom. 2014, 7, 178–192.

68. Cantó-Cerdán, M.; Cacho-Martínez, P.; Lara-Lacárcel, F.; García-Muñoz, Á. Rasch analysis for development and reduction of Symptom Questionnaire for Visual Dysfunctions (SQVD). Sci. Rep. 2021, 11, 14855.

69. Koo, T.K.; Li, M.Y. A Guideline of Selecting and Reporting Intraclass Correlation Coefficients for Reliability Research. J. Chiropr. Med. 2016, 15, 155–163.

70. Horwood, A.M.; Toor, S.; Riddell, P.M. Screening for convergence insufficiency using the CISS is not indicated in young adults. Br. J. Ophthalmol. 2014, 98, 679–683.

71. Clark, T.Y.; Clark, R.A. Convergence Insufficiency Symptom Survey Scores for Reading Versus Other Near Visual Activities in School-Age Children. Am. J. Ophthalmol. 2015, 160, 905–912.e2.

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).