A Three-Dimensional Triangle Mesh Integration Method for Oblique Photography Model Data

Abstract

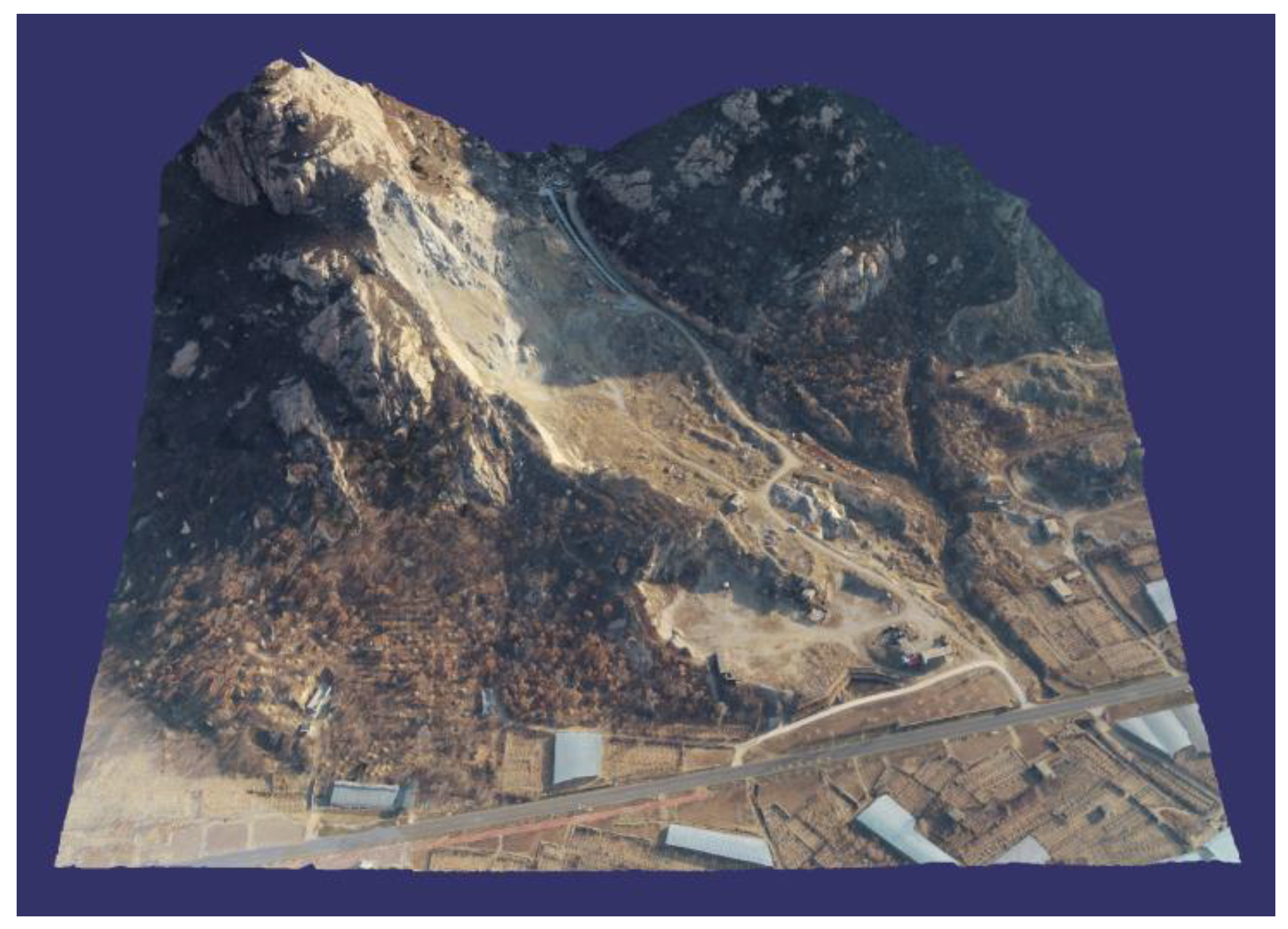

1. Introduction

2. Data and Methods

2.1. Data Acquisition

2.2. Methods

2.2.1. Drawing 3D Model Boundary Line

2.2.2. 3D Triangle Mesh Cutting and Reconstruction

2.2.3. 3D Model Integration

3. Experimental Results and Discussion

4. Conclusions

Author Contributions

Funding

Data Availability Statement

Conflicts of Interest

References


| Experimental 3D Models | Coordinate Range (X) | Coordinate Range (Y) | Coordinate Range (Z) |
|---|---|---|---|
| Building | 15.4097~127.8028 | −244.6184~−166.8273 | 40.7358~110.0085 |
| Circular Garden | 14.9737~29.4751 | −339.6959~−324.4265 | 41.1104~47.5641 |
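The coordinate ranges in the table amount to each model's axis-aligned bounding box (AABB). The minimal Python sketch below, which is not the authors' implementation, assumes a model's vertices are available as (x, y, z) tuples in one shared coordinate system (the table does not state units). It computes such ranges and tests whether two boxes overlap, a standard coarse pre-check before any finer triangle-level intersection work. The names `coordinate_ranges`, `ranges_overlap`, `BUILDING` and `CIRCULAR_GARDEN` are hypothetical.

```python
from typing import Iterable

# Hypothetical type aliases: a vertex is (x, y, z); a range is (min, max); a box is three ranges.
Vertex = tuple[float, float, float]
Range = tuple[float, float]
Box = tuple[Range, Range, Range]


def coordinate_ranges(vertices: Iterable[Vertex]) -> Box:
    """Per-axis (min, max) coordinate ranges of a vertex set, i.e. its AABB."""
    it = iter(vertices)
    try:
        first = next(it)
    except StopIteration:
        raise ValueError("model has no vertices") from None
    lo = list(first)
    hi = list(first)
    for v in it:
        for axis in range(3):
            lo[axis] = min(lo[axis], v[axis])
            hi[axis] = max(hi[axis], v[axis])
    return ((lo[0], hi[0]), (lo[1], hi[1]), (lo[2], hi[2]))


def ranges_overlap(a: Box, b: Box) -> bool:
    """Two boxes intersect only if their intervals overlap on all three axes."""
    return all(a_lo <= b_hi and b_lo <= a_hi
               for (a_lo, a_hi), (b_lo, b_hi) in zip(a, b))


# Coordinate ranges copied from the table (X, Y, Z).
BUILDING: Box = ((15.4097, 127.8028), (-244.6184, -166.8273), (40.7358, 110.0085))
CIRCULAR_GARDEN: Box = ((14.9737, 29.4751), (-339.6959, -324.4265), (41.1104, 47.5641))

if __name__ == "__main__":
    print(ranges_overlap(BUILDING, CIRCULAR_GARDEN))  # False: the Y ranges are disjoint
```

The X ranges of the two models overlap, but their Y ranges do not, so under this sketch the boxes are disjoint. A positive result would only mark candidate regions for more precise triangle-level tests, since overlapping boxes do not imply intersecting meshes.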

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Che, D.; Su, M.; Ma, B.; Chen, F.; Liu, Y.; Wang, D.; Sun, Y. A Three-Dimensional Triangle Mesh Integration Method for Oblique Photography Model Data. Buildings 2023, 13, 2266. https://doi.org/10.3390/buildings13092266

Che D, Su M, Ma B, Chen F, Liu Y, Wang D, Sun Y. A Three-Dimensional Triangle Mesh Integration Method for Oblique Photography Model Data. Buildings. 2023; 13(9):2266. https://doi.org/10.3390/buildings13092266

Chicago/Turabian Style: Che, Defu, Min Su, Baodong Ma, Feng Chen, Yining Liu, Duo Wang, and Yanen Sun. 2023. "A Three-Dimensional Triangle Mesh Integration Method for Oblique Photography Model Data" Buildings 13, no. 9: 2266. https://doi.org/10.3390/buildings13092266

APA Style: Che, D., Su, M., Ma, B., Chen, F., Liu, Y., Wang, D., & Sun, Y. (2023). A Three-Dimensional Triangle Mesh Integration Method for Oblique Photography Model Data. Buildings, 13(9), 2266. https://doi.org/10.3390/buildings13092266