Integrating Machine Learning and Remote Sensing in Disaster Management: A Decadal Review of Post-Disaster Building Damage Assessment

Abstract

1. Introduction

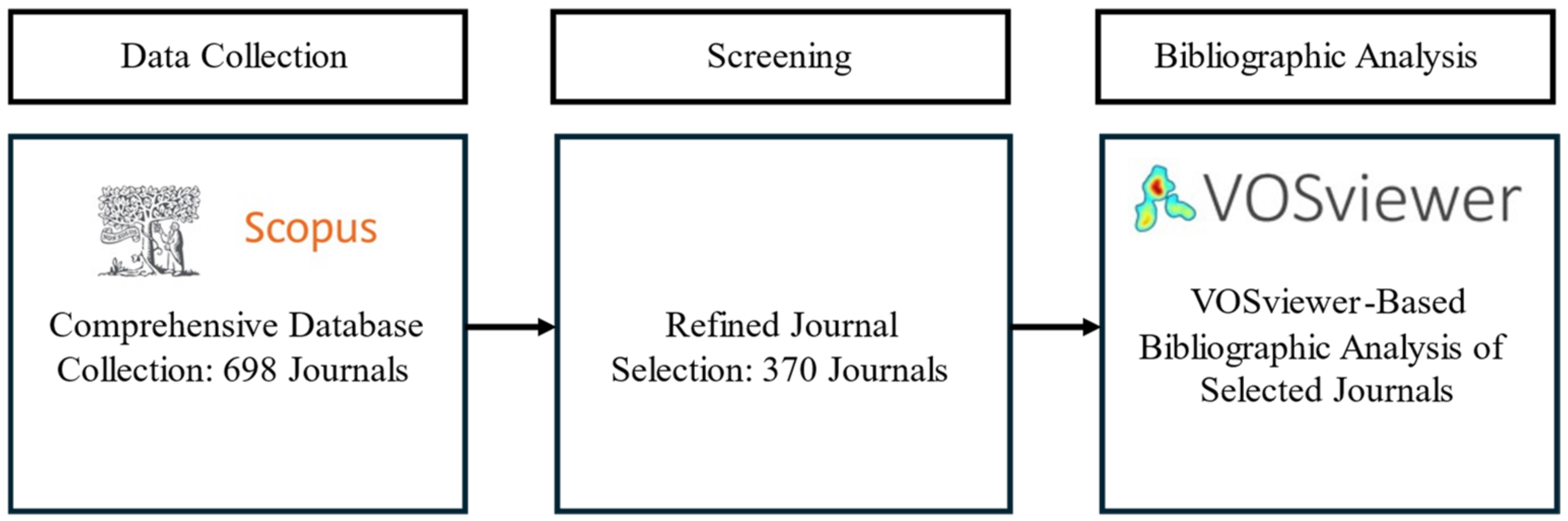

2. Methodology of the Research

2.1. Article Collection from Sources

- What are the comparative strengths and limitations of remote sensing (satellite, UAV) versus ground-based sensing technologies in the detection and assessment of building damage following various types of disasters (e.g., earthquakes, floods, hurricanes)?

- How can artificial intelligence and deep learning techniques (e.g., CNNs) improve the accuracy and efficiency of building damage assessment from diverse data sources?

- Considering the challenges in real-time data collection and analysis in post-disaster scenarios, what are the most effective AI-driven strategies for rapidly assessing building damage to support immediate response and recovery efforts?

- How do machine learning models compare in their ability to detect, segment, and classify different types of building damage in disaster-affected areas?
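
The last question concerns how models compare. Comparisons of detection, segmentation, and classification models usually rest on per-class precision, recall, and F1 score computed against reference labels. The following is a minimal sketch of those metrics over flattened label arrays. The integer coding of damage classes (for example 0 = no damage, 1 = damaged, 2 = destroyed) and the function name are hypothetical conventions, not ones taken from the reviewed studies.

```python
import numpy as np


def per_class_scores(y_true, y_pred, num_classes):
    """Per-class precision, recall and F1 from integer label arrays."""
    y_true = np.asarray(y_true).ravel()
    y_pred = np.asarray(y_pred).ravel()
    precision = np.zeros(num_classes)
    recall = np.zeros(num_classes)
    f1 = np.zeros(num_classes)
    for c in range(num_classes):
        tp = np.sum((y_pred == c) & (y_true == c))  # correctly labelled as c
        fp = np.sum((y_pred == c) & (y_true != c))  # wrongly labelled as c
        fn = np.sum((y_pred != c) & (y_true == c))  # class c missed
        precision[c] = tp / (tp + fp) if tp + fp > 0 else 0.0
        recall[c] = tp / (tp + fn) if tp + fn > 0 else 0.0
        total = precision[c] + recall[c]
        f1[c] = 2 * precision[c] * recall[c] / total if total > 0 else 0.0
    return precision, recall, f1


# Hypothetical labels: 0 = no damage, 1 = damaged, 2 = destroyed
precision, recall, f1 = per_class_scores([0, 0, 1, 1, 2, 2], [0, 1, 1, 1, 2, 0], 3)
print(f1, f1.mean())  # per-class F1 and macro-averaged F1
```

Reporting per-class F1 values, rather than overall accuracy alone, keeps performance on rare classes such as destroyed buildings visible; their unweighted mean gives a macro-averaged F1.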

2.2. Data Sorting/Article Selection

2.3. Bibliometric Analysis Using VOSviewer
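
VOSviewer constructs its maps from co-occurrence counts that are normalized into similarities. As described by van Eck and Waltman (2010), the association strength between items i and j is s_ij = c_ij / (w_i w_j), where c_ij is the number of co-occurrences of i and j, and w_i and w_j are either the total occurrences or the total co-occurrences of the two items. The sketch below applies this formula to a small symmetric co-occurrence matrix, taking w as the row sums (total co-occurrences). It illustrates the measure and is not VOSviewer's implementation; the function name is hypothetical.

```python
import numpy as np


def association_strength(cooccurrence):
    """Normalize a symmetric co-occurrence matrix: s_ij = c_ij / (w_i * w_j)."""
    c = np.array(cooccurrence, dtype=float)  # copy, so the input is not modified
    np.fill_diagonal(c, 0.0)  # an item's co-occurrence with itself is ignored
    w = c.sum(axis=1)  # total co-occurrences of each item
    denom = np.outer(w, w)
    with np.errstate(divide="ignore", invalid="ignore"):
        s = np.where(denom > 0, c / denom, 0.0)  # isolated items get 0
    return s


# Hypothetical counts for three keywords
print(association_strength([[0, 2, 1], [2, 0, 0], [1, 0, 0]]))
```

Dividing by the product of the totals keeps frequent items from dominating the map: in the example, keyword 0 co-occurs twice with keyword 1 and only once with keyword 2, yet both links receive the same similarity (1/3) because keyword 2 is rarer.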

3. Results of Bibliometric Analysis

3.1. Bibliometric Performance Trends

3.1.1. Yearly Publication Metrics

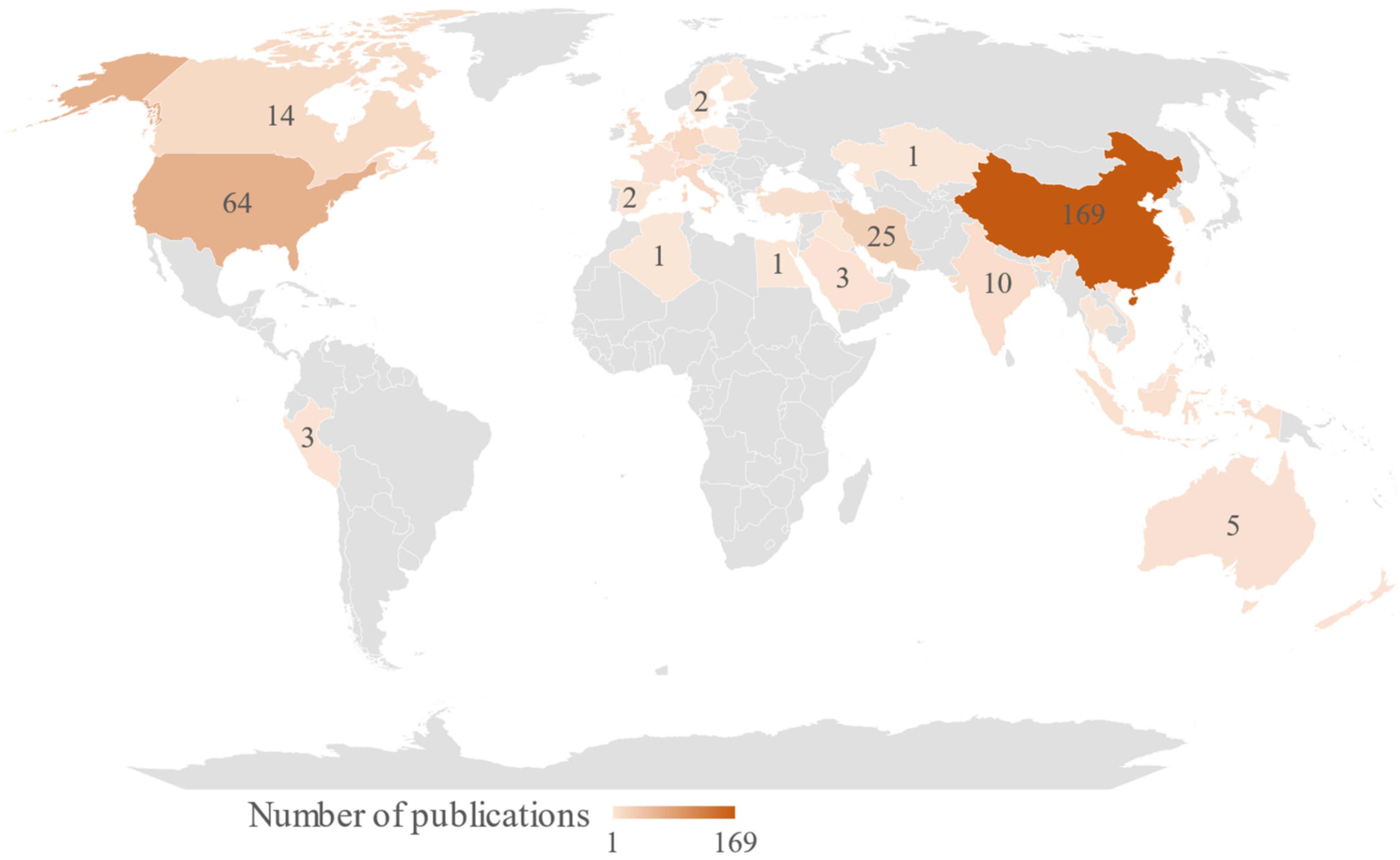
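
Yearly publication trends can be summarized by the year-on-year change and by the compound annual growth rate, CAGR = (N_last / N_first)^(1/n) - 1, where n is the number of years between the first and last counts. The sketch below computes both. The function name is hypothetical, and the publication counts in the example are invented for illustration; they do not reproduce the values in the figure.

```python
def yearly_growth(counts):
    """Year-on-year growth and CAGR from a {year: publications} mapping."""
    years = sorted(counts)
    yoy = {}
    for prev, cur in zip(years, years[1:]):
        if counts[prev] > 0:  # growth is undefined after a zero year
            yoy[cur] = (counts[cur] - counts[prev]) / counts[prev]
    span = years[-1] - years[0]
    cagr = None
    if span > 0 and counts[years[0]] > 0:
        cagr = (counts[years[-1]] / counts[years[0]]) ** (1 / span) - 1
    return yoy, cagr


# Hypothetical counts, not the review's data
print(yearly_growth({2021: 10, 2022: 20, 2023: 40}))  # ({2022: 1.0, 2023: 1.0}, 1.0)
```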

3.1.2. Geographical Distribution of Publications

3.2. Bibliometric Mapping

3.2.1. Co-Occurrence of Keywords
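
Keyword co-occurrence maps rest on two counts: how often each keyword occurs, and how often each pair of keywords appears in the same article. The minimal sketch below uses full counting (each shared article adds 1 to a link) and reports each keyword's total link strength, the sum of its link weights. Lower-casing as the only keyword cleaning step is a simplifying assumption; in practice, synonyms and spelling variants are usually merged beforehand (VOSviewer supports this through thesaurus files). The function name and example keywords are hypothetical.

```python
from collections import Counter
from itertools import combinations


def keyword_cooccurrence(articles):
    """Occurrences, pairwise links and total link strength (full counting)."""
    occurrences = Counter()
    links = Counter()
    for keywords in articles:
        # Normalize case and count each keyword once per article
        kws = sorted({k.strip().lower() for k in keywords})
        occurrences.update(kws)
        links.update(combinations(kws, 2))  # every pair in the article adds 1
    total_link_strength = Counter()
    for (a, b), weight in links.items():
        total_link_strength[a] += weight
        total_link_strength[b] += weight
    return occurrences, links, total_link_strength


# Hypothetical author keywords from three articles
articles = [
    ["Deep Learning", "Remote Sensing", "Earthquake"],
    ["deep learning", "UAV"],
    ["Remote sensing", "Deep learning"],
]
occ, links, tls = keyword_cooccurrence(articles)
print(links[("deep learning", "remote sensing")], tls["deep learning"])  # 2 4
```

Passing each article's list of author countries (or authors) instead of keywords yields the corresponding co-authorship links and link strengths in the same way.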

3.2.2. Co-Authorship Network

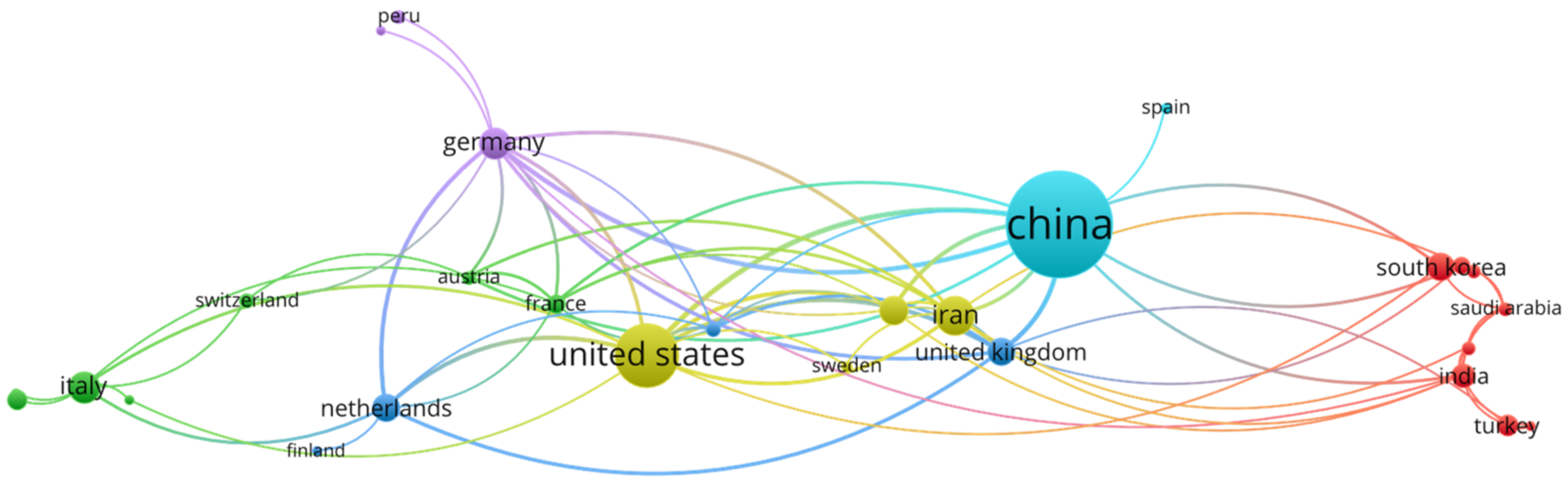

- Co-Authorship Network of Countries

- Co-Authorship Network of Authors

3.2.3. Citation Analysis

- Citation Analysis by Countries

- Citation Analysis of Sources

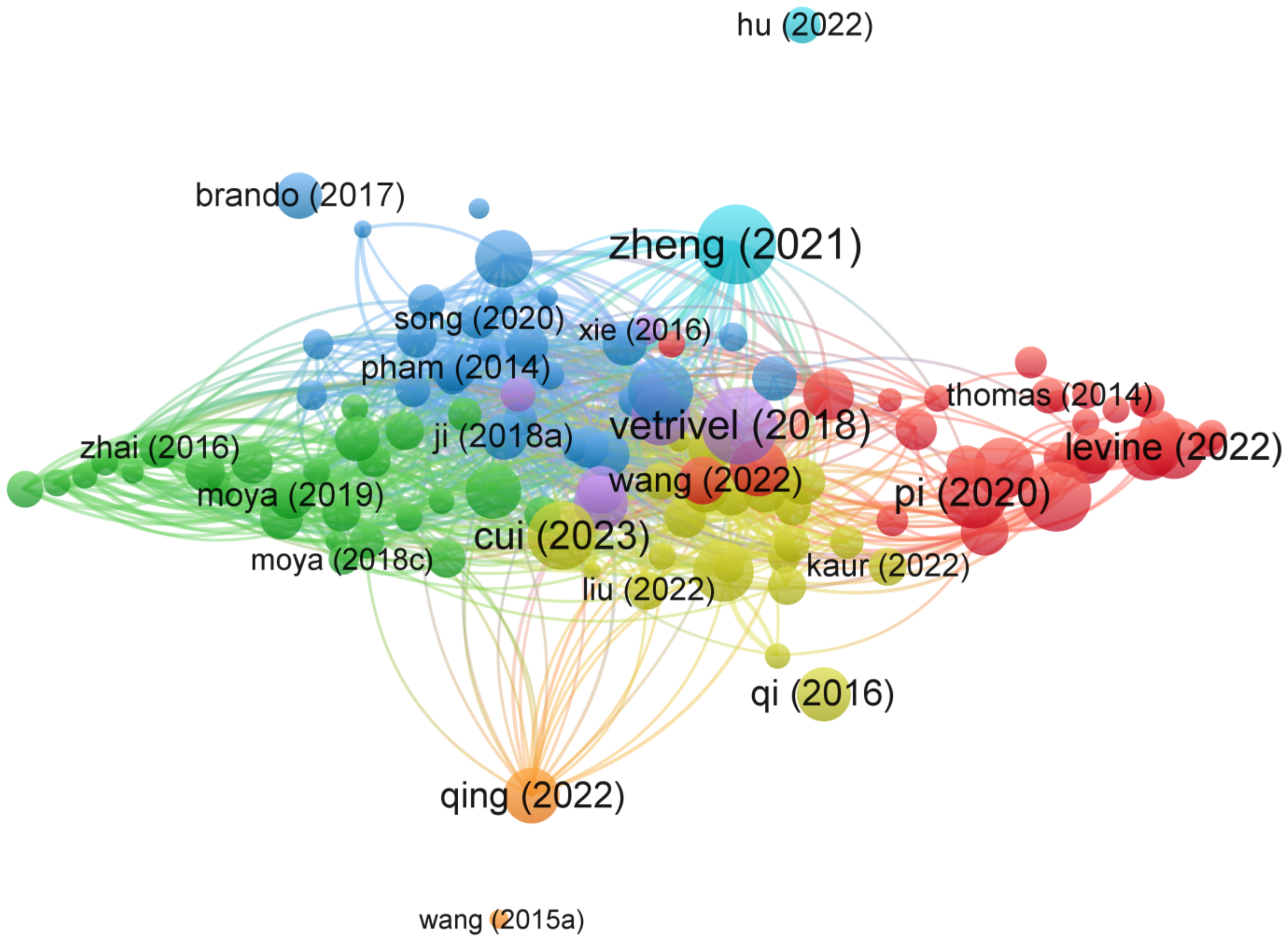

- Citation Analysis of Journal Articles
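
Citation analysis by country, by source, and by article all reduce to grouping documents and summing their citation counts. A minimal sketch, assuming records of (group, citations) pairs; the function name, journal names, and citation counts in the example are placeholders, not data from this review.

```python
from collections import defaultdict


def citations_by_group(records):
    """Documents, citations and citations per document for each group."""
    docs = defaultdict(int)
    cites = defaultdict(int)
    for group, n_citations in records:
        docs[group] += 1
        cites[group] += n_citations
    return {
        g: {"documents": docs[g], "citations": cites[g],
            "avg_citations": cites[g] / docs[g]}
        for g in docs
    }


# Placeholder records: (source journal, citations of one article)
print(citations_by_group([("Journal A", 10), ("Journal A", 30), ("Journal B", 50)]))
```

The same function groups by country or by article when the first field of each record is a country name or an article identifier.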

3.2.4. Bibliographic Coupling
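
Two articles are bibliographically coupled when they cite at least one common reference, and their coupling strength is the number of references they share. A minimal sketch of that definition follows; the function name and the article and reference identifiers are hypothetical.

```python
from itertools import combinations


def bibliographic_coupling(reference_lists):
    """Coupling strength (number of shared references) for each pair of articles."""
    refs = {article: set(cited) for article, cited in reference_lists.items()}
    strength = {}
    for a, b in combinations(sorted(refs), 2):
        shared = len(refs[a] & refs[b])
        if shared:  # keep only coupled pairs
            strength[(a, b)] = shared
    return strength


# Hypothetical articles and their cited references
print(bibliographic_coupling({
    "P1": ["R1", "R2", "R3"],
    "P2": ["R2", "R3", "R4"],
    "P3": ["R5"],
}))  # {('P1', 'P2'): 2}
```

Unlike co-citation, which links documents that are later cited together, coupling is fixed at publication time, so it can group recent articles that have not yet accumulated citations.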

4. Discussion

4.1. Techniques for Data Collection in Building Damage Assessment

4.1.1. Satellite-Based Data Collection

4.1.2. UAV-Based Data Collection
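
A key planning parameter for UAV image acquisition is the ground sampling distance (GSD), the ground size of one image pixel. For a nadir-pointing pinhole camera over flat terrain, GSD = (sensor width × flight altitude) / (focal length × image width in pixels). The sketch below evaluates this relation, the image footprint, and the inverse relation for altitude. The function names are hypothetical, and the camera parameters in the example are invented for illustration.

```python
def ground_sampling_distance(sensor_width_mm, focal_length_mm, image_width_px, altitude_m):
    """Ground size of one pixel in metres (nadir view, flat terrain)."""
    return (sensor_width_mm * altitude_m) / (focal_length_mm * image_width_px)


def footprint_width(sensor_width_mm, focal_length_mm, altitude_m):
    """Across-track ground width covered by one image, in metres."""
    return sensor_width_mm * altitude_m / focal_length_mm


def altitude_for_gsd(target_gsd_m, sensor_width_mm, focal_length_mm, image_width_px):
    """Flight altitude in metres needed to reach a target GSD."""
    return target_gsd_m * focal_length_mm * image_width_px / sensor_width_mm


# Hypothetical camera: 13.2 mm sensor width, 8.8 mm focal length, 5472 px wide
gsd = ground_sampling_distance(13.2, 8.8, 5472, 100.0)
print(round(gsd * 100, 2), "cm/px")  # 2.74 cm/px
print(round(footprint_width(13.2, 8.8, 100.0), 1), "m")
```

With these values, a flight at 100 m gives a GSD of about 2.7 cm per pixel and an across-track footprint of 150 m; halving the altitude halves both, at the cost of more images per unit area.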

4.1.3. Ground-Based Data Collection

4.2. Analytical Techniques for Post-Disaster Building Assessment

4.2.1. Image-Based Analysis
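
A simple baseline for bi-temporal image-based analysis is image differencing: subtract co-registered pre- and post-event images and flag pixels whose change is unusually large. The sketch below applies a mean plus k standard deviations threshold to the absolute difference. It assumes single-band images that are already co-registered and radiometrically comparable; the function name, the threshold rule, and k = 2 are illustrative choices rather than a method from the reviewed studies.

```python
import numpy as np


def change_mask(pre, post, k=2.0):
    """Flag pixels whose absolute pre/post difference exceeds mean + k * std."""
    pre = np.asarray(pre, dtype=float)
    post = np.asarray(post, dtype=float)
    diff = np.abs(post - pre)  # assumes co-registered, radiometrically comparable bands
    threshold = diff.mean() + k * diff.std()
    return diff > threshold


# Synthetic example: a single changed pixel in a 10 x 10 scene
pre = np.zeros((10, 10))
post = pre.copy()
post[3, 4] = 100.0
print(np.argwhere(change_mask(pre, post)))  # [[3 4]]
```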

4.2.2. Point Cloud Data Analysis
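
For point clouds rasterized into normalized digital surface models (nDSMs, heights above ground), one straightforward collapse indicator is the loss of building height between pre- and post-event data. The sketch below averages nDSM heights within each building footprint and flags a building when its relative height loss exceeds a threshold. The footprint raster, the 0.5 threshold, and the function name are hypothetical choices for illustration.

```python
import numpy as np


def flag_collapsed(ndsm_pre, ndsm_post, footprints, drop_ratio=0.5):
    """Flag buildings whose mean height above ground drops by more than drop_ratio."""
    pre = np.asarray(ndsm_pre, dtype=float)
    post = np.asarray(ndsm_post, dtype=float)
    ids = np.asarray(footprints)
    flags = {}
    for b in np.unique(ids):
        if b == 0:  # 0 marks non-building pixels
            continue
        inside = ids == b
        h_pre = pre[inside].mean()
        h_post = post[inside].mean()
        if h_pre <= 0:  # no valid pre-event height, skip
            continue
        flags[int(b)] = bool((h_pre - h_post) / h_pre > drop_ratio)
    return flags


# Synthetic 4 x 4 scene with two 10 m buildings
ids = np.zeros((4, 4), dtype=int)
ids[:2, :2] = 1
ids[2:, 2:] = 2
pre = np.where(ids > 0, 10.0, 0.0)
post = pre.copy()
post[ids == 1] = 2.0  # building 1 loses 80 % of its height
post[ids == 2] = 9.0  # building 2 loses 10 %
print(flag_collapsed(pre, post, ids))  # {1: True, 2: False}
```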

4.2.3. Radar Remote Sensing Data Analysis
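
Interferometric coherence measures how similar the complex SAR signals of two acquisitions are within a local window, γ = |Σ s1 s2*| / sqrt(Σ|s1|² Σ|s2|²), with values near 1 for stable scatterers and near 0 where the surface has changed. Damage mapping often compares a pre-event pair with a co-event pair, since a drop in coherence can indicate structural change. The sketch below assumes co-registered single-look complex (SLC) arrays and a 5 × 5 boxcar window; the window size and function names are illustrative assumptions.

```python
import numpy as np
from numpy.lib.stride_tricks import sliding_window_view


def coherence(s1, s2, win=5):
    """Windowed coherence magnitude of two co-registered complex SAR images."""
    s1 = np.asarray(s1, dtype=complex)
    s2 = np.asarray(s2, dtype=complex)

    def window_sum(x):
        # Sum over every win x win window ("valid" positions only)
        return sliding_window_view(x, (win, win)).sum(axis=(-2, -1))

    num = np.abs(window_sum(s1 * np.conj(s2)))
    den = np.sqrt(window_sum(np.abs(s1) ** 2) * window_sum(np.abs(s2) ** 2))
    return np.where(den > 0, num / np.maximum(den, 1e-12), 0.0)


def coherence_drop(pre_a, pre_b, post, win=5):
    """Pre-event coherence minus co-event coherence; large values suggest change."""
    return coherence(pre_a, pre_b, win) - coherence(pre_b, post, win)


# Synthetic check: a phase-shifted copy stays coherent, independent noise does not
rng = np.random.default_rng(0)
shape = (20, 20)
s1 = rng.normal(size=shape) + 1j * rng.normal(size=shape)
s2 = s1 * np.exp(1j * 0.3)
s3 = rng.normal(size=shape) + 1j * rng.normal(size=shape)
print(coherence(s1, s2).mean(), coherence(s1, s3).mean())
```

Coherence also falls with vegetation change and long temporal baselines, so a coherence-loss map is usually interpreted together with other evidence rather than as a damage map on its own.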

5. Challenges and Future Direction

5.1. Challenges to Be Solved

- Data Quality and Availability

- Integration of Multiple Data Sources

- Processing and Analysis Complexity
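
One recurring data-quality issue is class imbalance: in post-disaster datasets, intact or slightly damaged buildings typically far outnumber collapsed ones (one of the cited studies addresses imbalanced buildings explicitly), which biases training toward the majority class. A common mitigation is to weight the loss by inverse class frequency, w_c = N / (K · n_c) for N samples, K classes, and n_c samples in class c; this is the same formula scikit-learn documents for class_weight="balanced". The sketch below computes these weights. It is one illustrative remedy among several (resampling is another), and the function name is hypothetical.

```python
import numpy as np


def inverse_frequency_weights(labels, num_classes):
    """Class weights N / (K * n_c); classes absent from labels get weight 0."""
    labels = np.asarray(labels)
    counts = np.bincount(labels, minlength=num_classes).astype(float)
    weights = np.zeros(num_classes)
    present = counts > 0
    weights[present] = labels.size / (num_classes * counts[present])
    return weights


# Hypothetical training labels: 8 intact (0) and 2 collapsed (1) buildings
print(inverse_frequency_weights([0] * 8 + [1] * 2, 2))  # [0.625 2.5  ]
```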

5.2. Future Directions

- Advanced Data Fusion Techniques

- Real-Time Data Processing

- Improving Model Generalization

- Enhancing UAV Capabilities

- Training for Rescue and Disaster Management Teams
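
Among data fusion techniques, Dempster-Shafer evidence theory offers one classical rule for decision-level fusion: each source assigns belief mass to "damaged", "intact", or the whole frame (ignorance), and Dempster's rule combines the sources while renormalizing away their conflict. The sketch below implements the rule for that two-hypothesis frame. The function name and the mass values attributed to a SAR and an optical classifier are hypothetical.

```python
def dempster_combine(m1, m2):
    """Dempster's rule over {D (damaged), I (intact)}; "T" is mass on the whole frame."""
    # Conflict: one source supports D while the other supports I
    conflict = m1["D"] * m2["I"] + m1["I"] * m2["D"]
    if conflict >= 1.0:
        raise ValueError("sources are in total conflict")
    norm = 1.0 - conflict
    return {
        "D": (m1["D"] * m2["D"] + m1["D"] * m2["T"] + m1["T"] * m2["D"]) / norm,
        "I": (m1["I"] * m2["I"] + m1["I"] * m2["T"] + m1["T"] * m2["I"]) / norm,
        "T": (m1["T"] * m2["T"]) / norm,
    }


# Hypothetical masses from a SAR-based and an optical-based classifier
sar = {"D": 0.6, "I": 0.1, "T": 0.3}
optical = {"D": 0.5, "I": 0.2, "T": 0.3}
print(dempster_combine(sar, optical))
```

Because the two sources agree, the fused mass on "damaged" (about 0.76) exceeds either input (0.6 and 0.5), while the remaining ignorance shrinks to about 0.11.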

6. Conclusions

Author Contributions

Funding

Data Availability Statement

Acknowledgments

Conflicts of Interest

References

- EM-DAT: Human Cost of Disasters (2000–2019). 2020. Available online: https://www.preventionweb.net/publication/cred-crunch-issue-no-61-december-2020-human-cost-disasters-2000-2019 (accessed on 28 June 2024).

- Jones, R.L.; Guha-Sapir, D.; Tubeuf, S. Human and Economic Impacts of Natural Disasters: Can We Trust the Global Data? Sci. Data 2022, 9, 572.

- Alexander, D. Principles of Emergency Planning and Management; Oxford University Press: Oxford, UK; New York, NY, USA, 2002; ISBN 978-0-19-521838-1.

- Hu, D.; Chen, J.; Li, S. Reconstructing Unseen Spaces in Collapsed Structures for Search and Rescue via Deep Learning Based Radargram Inversion. Autom. Constr. 2022, 140, 104380.

- Hu, D.; Chen, L.; Du, J.; Cai, J.; Li, S. Seeing through Disaster Rubble in 3D with Ground-Penetrating Radar and Interactive Augmented Reality for Urban Search and Rescue. J. Comput. Civ. Eng. 2022, 36, 04022021.

- Hu, D.; Li, S.; Chen, J.; Kamat, V.R. Detecting, Locating, and Characterizing Voids in Disaster Rubble for Search and Rescue. Adv. Eng. Inform. 2019, 42, 100974.

- Deng, L.; Wang, Y. Post-Disaster Building Damage Assessment Based on Improved U-Net. Sci. Rep. 2022, 12, 15862.

- Jiang, X.; He, Y.; Li, G.; Liu, Y.; Zhang, X. Building Damage Detection via Superpixel-Based Belief Fusion of Space-Borne SAR and Optical Images. IEEE Sens. J. 2020, 20, 2008–2022.

- Malmgren-Hansen, D.; Sohnesen, T.; Fisker, P.; Baez, J. Sentinel-1 Change Detection Analysis for Cyclone Damage Assessment in Urban Environments. Remote Sens. 2020, 12, 2409.

- Liu, C.; Sui, H.; Huang, L. Identification of Building Damage from UAV-Based Photogrammetric Point Clouds Using Supervoxel Segmentation and Latent Dirichlet Allocation Model. Sensors 2020, 20, 6499.

- Pi, Y.; Nath, N.; Behzadan, A. Convolutional Neural Networks for Object Detection in Aerial Imagery for Disaster Response and Recovery. Adv. Eng. Inform. 2020, 43, 101009.

- Agbehadji, I.E.; Schütte, S.; Masinde, M.; Botai, J.; Mabhaudhi, T. Climate Risks Resilience Development: A Bibliometric Analysis of Climate-Related Early Warning Systems in Southern Africa. Climate 2023, 12, 3.

- Van Eck, N.J.; Waltman, L. Software Survey: VOSviewer, a Computer Program for Bibliometric Mapping. Scientometrics 2010, 84, 523–538.

- Su, H.-N.; Lee, P.-C. Mapping Knowledge Structure by Keyword Co-Occurrence: A First Look at Journal Papers in Technology Foresight. Scientometrics 2010, 85, 65–79.

- Lee, P.-C.; Su, H.-N. Investigating the Structure of Regional Innovation System Research through Keyword Co-Occurrence and Social Network Analysis. Innovation 2010, 12, 26–40.

- Bornmann, L.; Daniel, H. What Do Citation Counts Measure? A Review of Studies on Citing Behavior. J. Doc. 2008, 64, 45–80.

- Vetrivel, A.; Gerke, M.; Kerle, N.; Nex, F.; Vosselman, G. Disaster Damage Detection through Synergistic Use of Deep Learning and 3D Point Cloud Features Derived from Very High Resolution Oblique Aerial Images, and Multiple-Kernel-Learning. ISPRS J. Photogramm. Remote Sens. 2018, 140, 45–59.

- Fernandez Galarreta, J.; Kerle, N.; Gerke, M. UAV-Based Urban Structural Damage Assessment Using Object-Based Image Analysis and Semantic Reasoning. Nat. Hazards Earth Syst. Sci. 2015, 15, 1087–1101.

- Cooner, A.; Shao, Y.; Campbell, J. Detection of Urban Damage Using Remote Sensing and Machine Learning Algorithms: Revisiting the 2010 Haiti Earthquake. Remote Sens. 2016, 8, 868.

- Nex, F.; Duarte, D.; Tonolo, F.G.; Kerle, N. Structural Building Damage Detection with Deep Learning: Assessment of a State-of-the-Art CNN in Operational Conditions. Remote Sens. 2019, 11, 2765.

- Ji, M.; Liu, L.; Buchroithner, M. Identifying Collapsed Buildings Using Post-Earthquake Satellite Imagery and Convolutional Neural Networks: A Case Study of the 2010 Haiti Earthquake. Remote Sens. 2018, 10, 1689.

- Janalipour, M.; Mohammadzadeh, A. Building Damage Detection Using Object-Based Image Analysis and ANFIS From High-Resolution Image (Case Study: BAM Earthquake, Iran). IEEE J. Sel. Top. Appl. Earth Obs. Remote Sens. 2016, 9, 1937–1945.

- Gong, L.; Wang, C.; Wu, F.; Zhang, J.; Zhang, H.; Li, Q. Earthquake-Induced Building Damage Detection with Post-Event Sub-Meter VHR TerraSAR-X Staring Spotlight Imagery. Remote Sens. 2016, 8, 887.

- Pan, X.; Yang, T.Y. Postdisaster Image-based Damage Detection and Repair Cost Estimation of Reinforced Concrete Buildings Using Dual Convolutional Neural Networks. Comput. Aided Civ. Infrastruct. Eng. 2020, 35, 495–510.

- Ji, M.; Liu, L.; Du, R.; Buchroithner, M.F. A Comparative Study of Texture and Convolutional Neural Network Features for Detecting Collapsed Buildings After Earthquakes Using Pre- and Post-Event Satellite Imagery. Remote Sens. 2019, 11, 1202.

- Moya, L.; Zakeri, H.; Yamazaki, F.; Liu, W.; Mas, E.; Koshimura, S. 3D Gray Level Co-Occurrence Matrix and Its Application to Identifying Collapsed Buildings. ISPRS J. Photogramm. Remote Sens. 2019, 149, 14–28.

- Adriano, B.; Yokoya, N.; Xia, J.; Miura, H.; Liu, W.; Matsuoka, M.; Koshimura, S. Learning from Multimodal and Multitemporal Earth Observation Data for Building Damage Mapping. ISPRS J. Photogramm. Remote Sens. 2021, 175, 132–143.

- Anniballe, R.; Noto, F.; Scalia, T.; Bignami, C.; Stramondo, S.; Chini, M.; Pierdicca, N. Earthquake Damage Mapping: An Overall Assessment of Ground Surveys and VHR Image Change Detection after L’Aquila 2009 Earthquake. Remote Sens. Environ. 2018, 210, 166–178.

- Shaodan, L.; Hong, T.; Shi, H.; Yang, S.; Ting, M.; Jing, L.; Zhihua, X. Unsupervised Detection of Earthquake-Triggered Roof-Holes From UAV Images Using Joint Color and Shape Features. IEEE Geosci. Remote Sens. Lett. 2015, 12, 1823–1827.

- Shen, Y.; Zhu, S.; Yang, T.; Chen, C.; Pan, D.; Chen, J.; Xiao, L.; Du, Q. BDANet: Multiscale Convolutional Neural Network with Cross-Directional Attention for Building Damage Assessment From Satellite Images. IEEE Trans. Geosci. Remote Sens. 2022, 60, 5402114.

- Moya, L.; Yamazaki, F.; Liu, W.; Yamada, M. Detection of Collapsed Buildings from Lidar Data Due to the 2016 Kumamoto Earthquake in Japan. Nat. Hazards Earth Syst. Sci. 2018, 18, 65–78.

- Sun, W.; Shi, L.; Yang, J.; Li, P. Building Collapse Assessment in Urban Areas Using Texture Information From Postevent SAR Data. IEEE J. Sel. Top. Appl. Earth Obs. Remote Sens. 2016, 9, 3792–3808.

- Song, D.; Tan, X.; Wang, B.; Zhang, L.; Shan, X.; Cui, J. Integration of Super-Pixel Segmentation and Deep-Learning Methods for Evaluating Earthquake-Damaged Buildings Using Single-Phase Remote Sensing Imagery. Int. J. Remote Sens. 2020, 41, 1040–1066.

- Qing, Y.; Ming, D.; Wen, Q.; Weng, Q.; Xu, L.; Chen, Y.; Zhang, Y.; Zeng, B. Operational Earthquake-Induced Building Damage Assessment Using CNN-Based Direct Remote Sensing Change Detection on Superpixel Level. Int. J. Appl. Earth Obs. Geoinf. 2022, 112, 102899.

- Sharma, R.; Tateishi, R.; Hara, K.; Nguyen, H.; Gharechelou, S.; Nguyen, L. Earthquake Damage Visualization (EDV) Technique for the Rapid Detection of Earthquake-Induced Damages Using SAR Data. Sensors 2017, 17, 235.

- Tu, J.; Li, D.; Feng, W.; Han, Q.; Sui, H. Detecting Damaged Building Regions Based on Semantic Scene Change from Multi-Temporal High-Resolution Remote Sensing Images. ISPRS Int. J. Geo-Inf. 2017, 6, 131.

- Li, Y.; Ye, S.; Bartoli, I. Semisupervised Classification of Hurricane Damage from Postevent Aerial Imagery Using Deep Learning. J. Appl. Remote Sens. 2018, 12, 045008.

- Qi, J.; Song, D.; Shang, H.; Wang, N.; Hua, C.; Wu, C.; Qi, X.; Han, J. Search and Rescue Rotary-Wing UAV and Its Application to the Lushan Ms 7.0 Earthquake. J. Field Robot. 2016, 33, 290–321.

- Moya, L.; Marval Perez, L.; Mas, E.; Adriano, B.; Koshimura, S.; Yamazaki, F. Novel Unsupervised Classification of Collapsed Buildings Using Satellite Imagery, Hazard Scenarios and Fragility Functions. Remote Sens. 2018, 10, 296.

- Zheng, Z.; Zhong, Y.; Wang, J.; Ma, A.; Zhang, L. Building Damage Assessment for Rapid Disaster Response with a Deep Object-Based Semantic Change Detection Framework: From Natural Disasters to Man-Made Disasters. Remote Sens. Environ. 2021, 265, 112636.

- Brando, G.; Rapone, D.; Spacone, E.; O’Banion, M.S.; Olsen, M.J.; Barbosa, A.R.; Faggella, M.; Gigliotti, R.; Liberatore, D.; Russo, S.; et al. Damage Reconnaissance of Unreinforced Masonry Bearing Wall Buildings after the 2015 Gorkha, Nepal, Earthquake. Earthq. Spectra 2017, 33, 243–273.

- Zhai, W.; Shen, H.; Huang, C.; Pei, W. Building Earthquake Damage Information Extraction from a Single Post-Earthquake PolSAR Image. Remote Sens. 2016, 8, 171.

- Levine, N.; Spencer, B. Post-Earthquake Building Evaluation Using UAVs: A BIM-Based Digital Twin Framework. Sensors 2022, 22, 873.

- Pham, T.-T.-H.; Apparicio, P.; Gomez, C.; Weber, C.; Mathon, D. Towards a Rapid Automatic Detection of Building Damage Using Remote Sensing for Disaster Management: The 2010 Haiti Earthquake. Disaster Prev. Manag. Int. J. 2014, 23, 53–66.

- Xie, S.; Duan, J.; Liu, S.; Dai, Q.; Liu, W.; Ma, Y.; Guo, R.; Ma, C. Crowdsourcing Rapid Assessment of Collapsed Buildings Early after the Earthquake Based on Aerial Remote Sensing Image: A Case Study of Yushu Earthquake. Remote Sens. 2016, 8, 759.

- Wang, Y.; Cui, L.; Zhang, C.; Chen, W.; Xu, Y.; Zhang, Q. A Two-Stage Seismic Damage Assessment Method for Small, Dense, and Imbalanced Buildings in Remote Sensing Images. Remote Sens. 2022, 14, 1012.

- Cui, L.; Jing, X.; Wang, Y.; Huan, Y.; Xu, Y.; Zhang, Q. Improved Swin Transformer-Based Semantic Segmentation of Postearthquake Dense Buildings in Urban Areas Using Remote Sensing Images. IEEE J. Sel. Top. Appl. Earth Obs. Remote Sens. 2023, 16, 369–385.

- Thomas, J.; Kareem, A.; Bowyer, K.W. Automated Poststorm Damage Classification of Low-Rise Building Roofing Systems Using High-Resolution Aerial Imagery. IEEE Trans. Geosci. Remote Sens. 2014, 52, 3851–3861.

- Wang, X.Q.; Dou, A.X.; Wang, L.; Yuan, X.X.; Ding, X.; Zhang, W. RS-based assessment of seismic intensity of the 2013 Lushan, Sichuan, China MS7.0 earthquake. Chin. J. Geophys. 2015, 58, 163–171. (In Chinese)

- Kaur, S.; Gupta, S.; Singh, S.; Koundal, D.; Zaguia, A. Convolutional Neural Network Based Hurricane Damage Detection Using Satellite Images. Soft Comput. 2022, 26, 7831–7845.

- Liu, C.; Sui, H.; Wang, J.; Ni, Z.; Ge, L. Real-Time Ground-Level Building Damage Detection Based on Lightweight and Accurate YOLOv5 Using Terrestrial Images. Remote Sens. 2022, 14, 2763.

- Chen, Q.; Yang, H.; Li, L.; Liu, X. A Novel Statistical Texture Feature for SAR Building Damage Assessment in Different Polarization Modes. IEEE J. Sel. Top. Appl. Earth Obs. Remote Sens. 2020, 13, 154–165.

- ESA Sentinel-2 User Handbook. Available online: https://sentinel.esa.int/documents/247904/685211/Sentinel-2_User_Handbook (accessed on 28 June 2024).

- Tamkuan, N.; Nagai, M. Fusion of Multi-Temporal Interferometric Coherence and Optical Image Data for the 2016 Kumamoto Earthquake Damage Assessment. ISPRS Int. J. Geo-Inf. 2017, 6, 188.

- Zhu, X.X.; Wang, Y.; Montazeri, S.; Ge, N. A Review of Ten-Year Advances of Multi-Baseline SAR Interferometry Using TerraSAR-X Data. Remote Sens. 2018, 10, 1374.

- Endo, Y.; Adriano, B.; Mas, E.; Koshimura, S. New Insights into Multiclass Damage Classification of Tsunami-Induced Building Damage from SAR Images. Remote Sens. 2018, 10, 2059.

- Zink, M.; Moreira, A.; Hajnsek, I.; Rizzoli, P.; Bachmann, M.; Kahle, R.; Fritz, T.; Huber, M.; Krieger, G.; Lachaise, M.; et al. TanDEM-X: 10 Years of Formation Flying Bistatic SAR Interferometry. IEEE J. Sel. Top. Appl. Earth Obs. Remote Sens. 2021, 14, 3546–3565.

- Eineder, M.; Minet, C.; Steigenberger, P.; Cong, X.; Fritz, T. Imaging Geodesy—Toward Centimeter-Level Ranging Accuracy With TerraSAR-X. IEEE Trans. Geosci. Remote Sens. 2011, 49, 661–671.

- Rao, P.; Zhou, W.; Bhattarai, N.; Srivastava, A.K.; Singh, B.; Poonia, S.; Lobell, D.B.; Jain, M. Using Sentinel-1, Sentinel-2, and Planet Imagery to Map Crop Type of Smallholder Farms. Remote Sens. 2021, 13, 1870.

- Tiampo, K.F.; Huang, L.; Simmons, C.; Woods, C.; Glasscoe, M.T. Detection of Flood Extent Using Sentinel-1A/B Synthetic Aperture Radar: An Application for Hurricane Harvey, Houston, TX. Remote Sens. 2022, 14, 2261.

- Nur, A.S.; Lee, C.-W. Damage Proxy Map (DPM) of the 2016 Gyeongju and 2017 Pohang Earthquakes Using Sentinel-1 Imagery. Korean J. Remote Sens. 2021, 37, 13–22.

- Liu, H.; Song, C.; Li, Z.; Liu, Z.; Ta, L.; Zhang, X.; Chen, B.; Han, B.; Peng, J. A New Method for the Identification of Earthquake-Damaged Buildings Using Sentinel-1 Multitemporal Coherence Optimized by Homogeneous SAR Pixels and Histogram Matching. IEEE J. Sel. Top. Appl. Earth Obs. Remote Sens. 2024, 17, 7124–7143.

- Sandhini Putri, A.F.; Widyatmanti, W.; Umarhadi, D.A. Sentinel-1 and Sentinel-2 Data Fusion to Distinguish Building Damage Level of the 2018 Lombok Earthquake. Remote Sens. Appl. Soc. Environ. 2022, 26, 100724.

- Li, X.; Guo, H.; Zhang, L.; Chen, X.; Liang, L. A New Approach to Collapsed Building Extraction Using RADARSAT-2 Polarimetric SAR Imagery. IEEE Geosci. Remote Sens. Lett. 2012, 9, 677–681.

- Watanabe, M.; Thapa, R.B.; Ohsumi, T.; Fujiwara, H.; Yonezawa, C.; Tomii, N.; Suzuki, S. Detection of Damaged Urban Areas Using Interferometric SAR Coherence Change with PALSAR-2. Earth Planets Space 2016, 68, 131.

- Noda, A.; Suzuki, S.; Shimada, M.; Toda, K.; Miyagi, Y. COSMO-SkyMed and ALOS-1/2 X and L Band Multi-Frequency Results in Satellite Disaster Monitoring. In Proceedings of the 2015 IEEE International Geoscience and Remote Sensing Symposium (IGARSS), Milan, Italy, 26–31 July 2015; IEEE: Piscataway, NJ, USA, 2015; pp. 211–214.

- Zhao, L.; Zhang, Q.; Li, Y.; Qi, Y.; Yuan, X.; Liu, J.; Li, H. China’s Gaofen-3 Satellite System and Its Application and Prospect. IEEE J. Sel. Top. Appl. Earth Obs. Remote Sens. 2021, 14, 11019–11028.

- Bai, Y.; Gao, C.; Singh, S.; Koch, M.; Adriano, B.; Mas, E.; Koshimura, S. A Framework of Rapid Regional Tsunami Damage Recognition From Post-Event TerraSAR-X Imagery Using Deep Neural Networks. IEEE Geosci. Remote Sens. Lett. 2018, 15, 43–47.

- Dong, L.; Shan, J. A Comprehensive Review of Earthquake-Induced Building Damage Detection with Remote Sensing Techniques. ISPRS J. Photogramm. Remote Sens. 2013, 84, 85–99.

- Zhou, Z.; Gong, J.; Hu, X. Community-Scale Multi-Level Post-Hurricane Damage Assessment of Residential Buildings Using Multi-Temporal Airborne LiDAR Data. Autom. Constr. 2019, 98, 30–45.

- He, M.; Zhu, Q.; Du, Z.; Hu, H.; Ding, Y.; Chen, M. A 3D Shape Descriptor Based on Contour Clusters for Damaged Roof Detection Using Airborne LiDAR Point Clouds. Remote Sens. 2016, 8, 189.

- Axel, C.; Van Aardt, J. Building Damage Assessment Using Airborne Lidar. J. Appl. Remote Sens. 2017, 11, 046024.

- Foroughnia, F.; Macchiarulo, V.; Berg, L.; DeJong, M.; Milillo, P.; Hudnut, K.W.; Gavin, K.; Giardina, G. Quantitative Assessment of Earthquake-Induced Building Damage at Regional Scale Using LiDAR Data. Int. J. Disaster Risk Reduct. 2024, 106, 104403.

- Hauptman, L.; Mitsova, D.; Briggs, T.R. Hurricane Ian Damage Assessment Using Aerial Imagery and LiDAR: A Case Study of Estero Island, Florida. J. Mar. Sci. Eng. 2024, 12, 668.

- Hensley, S.; Jones, C.; Lou, Y. Prospects for Operational Use of Airborne Polarimetric SAR for Disaster Response and Management. In Proceedings of the 2012 IEEE International Geoscience and Remote Sensing Symposium, Munich, Germany, 22–27 July 2012; IEEE: Piscataway, NJ, USA, 2012; pp. 103–106.

- Hildmann, H.; Kovacs, E. Review: Using Unmanned Aerial Vehicles (UAVs) as Mobile Sensing Platforms (MSPs) for Disaster Response, Civil Security and Public Safety. Drones 2019, 3, 59.

- Dong, J.; Ota, K.; Dong, M. UAV-Based Real-Time Survivor Detection System in Post-Disaster Search and Rescue Operations. IEEE J. Miniaturization Air Space Syst. 2021, 2, 209–219.

- Wang, Y.; Jing, X.; Cui, L.; Zhang, C.; Xu, Y.; Yuan, J.; Zhang, Q. Geometric Consistency Enhanced Deep Convolutional Encoder-Decoder for Urban Seismic Damage Assessment by UAV Images. Eng. Struct. 2023, 286, 116132.

- Berke, P.R.; Kartez, J.; Wenger, D. Recovery after Disaster: Achieving Sustainable Development, Mitigation and Equity. Disasters 1993, 17, 93–109.

- Meyer, M.A.; Hendricks, M.D. Using Photography to Assess Housing Damage and Rebuilding Progress for Disaster Recovery Planning. J. Am. Plann. Assoc. 2018, 84, 127–144.

- Kashani, A.; Graettinger, A. Cluster-Based Roof Covering Damage Detection in Ground-Based Lidar Data. Autom. Constr. 2015, 58, 19–27.

- Yang, F.; Wen, X.; Wang, X.; Li, X.; Li, Z. A Model Study of Building Seismic Damage Information Extraction and Analysis on Ground-Based LiDAR Data. Adv. Civ. Eng. 2021, 2021, 5542012.

- Jiang, H.; Li, Q.; Jiao, Q.; Wang, X.; Wu, L. Extraction of Wall Cracks on Earthquake-Damaged Buildings Based on TLS Point Clouds. IEEE J. Sel. Top. Appl. Earth Obs. Remote Sens. 2018, 11, 3088–3096.

- Bodoque, J.; Guardiola-Albert, C.; Aroca-Jiménez, E.; Eguibar, M.; Martínez-Chenoll, M. Flood Damage Analysis: First Floor Elevation Uncertainty Resulting from LiDAR-Derived Digital Surface Models. Remote Sens. 2016, 8, 604.

- Huang, M.-S.; Gül, M.; Zhu, H.-P. Vibration-Based Structural Damage Identification under Varying Temperature Effects. J. Aerosp. Eng. 2018, 31, 04018014.

- Chiabrando, F.; Giulio Tonolo, F.; Lingua, A. UAV Direct Georeferencing Approach in an Emergency Mapping Context. The 2016 Central Italy Earthquake Case Study. Int. Arch. Photogramm. Remote Sens. Spat. Inf. Sci. 2019, XLII-2/W13, 247–253.

- Umemura, R.; Samura, T.; Tadamura, K. An Efficient Orthorectification of a Satellite SAR Image Used for Monitoring Occurrence of Disaster. In Proceedings of the 2018 International Workshop on Advanced Image Technology (IWAIT), Chiang Mai, Thailand, 7–9 January 2018; IEEE: Piscataway, NJ, USA, 2018; pp. 1–4.

- Yahyanejad, S.; Wischounig-Strucl, D.; Quaritsch, M.; Rinner, B. Incremental Mosaicking of Images from Autonomous, Small-Scale UAVs. In Proceedings of the 2010 7th IEEE International Conference on Advanced Video and Signal Based Surveillance, Boston, MA, USA, 29 August–1 September 2010; IEEE: Piscataway, NJ, USA, 2010; pp. 329–336.

- Joshi, A.R.; Tarte, I.; Suresh, S.; Koolagudi, S.G. Damage Identification and Assessment Using Image Processing on Post-Disaster Satellite Imagery. In Proceedings of the 2017 IEEE Global Humanitarian Technology Conference (GHTC), San Jose, CA, USA, 19–22 October 2017; IEEE: Piscataway, NJ, USA, 2017; pp. 1–7.

- Yeum, C.M.; Dyke, S.J.; Ramirez, J. Visual Data Classification in Post-Event Building Reconnaissance. Eng. Struct. 2018, 155, 16–24.

- Coulter, L.L.; Stow, D.A.; Lippitt, C.D.; Fraley, G.W. Repeat Station Imaging for Rapid Airborne Change Detection. In Time-Sensitive Remote Sensing; Lippitt, C.D., Stow, D.A., Coulter, L.L., Eds.; Springer New York: New York, NY, USA, 2015; pp. 29–43. ISBN 978-1-4939-2601-5.

- Hong, Z.; Zhong, H.; Pan, H.; Liu, J.; Zhou, R.; Zhang, Y.; Han, Y.; Wang, J.; Yang, S.; Zhong, C. Classification of Building Damage Using a Novel Convolutional Neural Network Based on Post-Disaster Aerial Images. Sensors 2022, 22, 5920.

- Ghosh Mondal, T.; Jahanshahi, M.R.; Wu, R.; Wu, Z.Y. Deep Learning-based Multi-class Damage Detection for Autonomous Post-disaster Reconnaissance. Struct. Control Health Monit. 2020, 27, e2507.

- Al Shafian, S.; Hu, D.; Yu, W. Deep Learning Enhanced Crack Detection for Tunnel Inspection. In Proceedings of the International Conference on Transportation and Development 2024, Atlanta, GA, USA, 13 June 2024; American Society of Civil Engineers: Reston, VA, USA, 2024; pp. 732–741.

- Kazemi, F.; Asgarkhani, N.; Jankowski, R. Machine Learning-Based Seismic Fragility and Seismic Vulnerability Assessment of Reinforced Concrete Structures. Soil Dyn. Earthq. Eng. 2023, 166, 107761.

- Kazemi, F.; Asgarkhani, N.; Jankowski, R. Machine Learning-Based Seismic Response and Performance Assessment of Reinforced Concrete Buildings. Arch. Civ. Mech. Eng. 2023, 23, 94.

- Bai, Y.; Sezen, H.; Yilmaz, A. Detecting Cracks and Spalling Automatically in Extreme Events by End-To-End Deep Learning Frameworks. ISPRS Ann. Photogramm. Remote Sens. Spat. Inf. Sci. 2021, 2, 161–168.

- Zhang, J.; Huang, M.; Wan, N.; Deng, Z.; He, Z.; Luo, J. Missing Measurement Data Recovery Methods in Structural Health Monitoring: The State, Challenges and Case Study. Measurement 2024, 231, 114528.

- Huang, M.; Zhang, J.; Hu, J.; Ye, Z.; Deng, Z.; Wan, N. Nonlinear Modeling of Temperature-Induced Bearing Displacement of Long-Span Single-Pier Rigid Frame Bridge Based on DCNN-LSTM. Case Stud. Therm. Eng. 2024, 53, 103897.

- Hu, D.; Li, S.; Du, J.; Cai, J. Automating Building Damage Reconnaissance to Optimize Drone Mission Planning for Disaster Response. J. Comput. Civ. Eng. 2023, 37, 04023006.

- Wang, Y.; Feng, W.; Jiang, K.; Li, Q.; Lv, R.; Tu, J. Real-Time Damaged Building Region Detection Based on Improved YOLOv5s and Embedded System From UAV Images. IEEE J. Sel. Top. Appl. Earth Obs. Remote Sens. 2023, 16, 4205–4217.

- Ding, J.; Zhang, J.; Zhan, Z.; Tang, X.; Wang, X. A Precision Efficient Method for Collapsed Building Detection in Post-Earthquake UAV Images Based on the Improved NMS Algorithm and Faster R-CNN. Remote Sens. 2022, 14, 663.

- Wen, H.; Hu, J.; Xiong, F.; Zhang, C.; Song, C.; Zhou, X. A Random Forest Model for Seismic-Damage Buildings Identification Based on UAV Images Coupled with RFE and Object-Oriented Methods. Nat. Hazards 2023, 119, 1751–1769.

- Takhtkeshha, N.; Mohammadzadeh, A.; Salehi, B. A Rapid Self-Supervised Deep-Learning-Based Method for Post-Earthquake Damage Detection Using UAV Data (Case Study: Sarpol-e Zahab, Iran). Remote Sens. 2022, 15, 123.

- Hristidis, V.; Chen, S.-C.; Li, T.; Luis, S.; Deng, Y. Survey of Data Management and Analysis in Disaster Situations. J. Syst. Softw. 2010, 83, 1701–1714.

- Shah, S.A.; Seker, D.Z.; Hameed, S.; Draheim, D. The Rising Role of Big Data Analytics and IoT in Disaster Management: Recent Advances, Taxonomy and Prospects. IEEE Access 2019, 7, 54595–54614.

- Mohammadi, M.E.; Watson, D.P.; Wood, R.L. Deep Learning-Based Damage Detection from Aerial SfM Point Clouds. Drones 2019, 3, 68.

- Lohani, B.; Ghosh, S. Airborne LiDAR Technology: A Review of Data Collection and Processing Systems. Proc. Natl. Acad. Sci. India Sect. Phys. Sci. 2017, 87, 567–579.

- Sultan, V.; Sarksyan, T.; Yadav, S. Wildfires Damage Assessment Via LiDAR. Int. J. Adv. Appl. Sci. 2022, 9, 34–43.

- Yoo, H.T.; Lee, H.; Chi, S.; Hwang, B.-G.; Kim, J. A Preliminary Study on Disaster Waste Detection and Volume Estimation Based on 3D Spatial Information. In Proceedings of the Computing in Civil Engineering 2017, Seattle, WA, USA, 22 June 2017; American Society of Civil Engineers: Reston, VA, USA, 2017; pp. 428–435.

- Yu, D.; He, Z. Digital Twin-Driven Intelligence Disaster Prevention and Mitigation for Infrastructure: Advances, Challenges, and Opportunities. Nat. Hazards 2022, 112, 1–36.

- Yu, R.; Li, P.; Shan, J.; Zhang, Y.; Dong, Y. Multi-Feature Driven Rapid Inspection of Earthquake-Induced Damage on Building Facades Using UAV-Derived Point Cloud. Measurement 2024, 232, 114679.

- Ji, H.; Luo, X. 3D Scene Reconstruction of Landslide Topography Based on Data Fusion between Laser Point Cloud and UAV Image. Environ. Earth Sci. 2019, 78, 534.

- Han, X.-F.; Jin, J.S.; Wang, M.-J.; Jiang, W.; Gao, L.; Xiao, L. A Review of Algorithms for Filtering the 3D Point Cloud. Signal Process. Image Commun. 2017, 57, 103–112.

- Frulla, L.A.; Milovich, J.A.; Karszenbaum, H.; Gagliardini, D.A. Radiometric Corrections and Calibration of SAR Images. In Proceedings of the IGARSS ’98. Sensing and Managing the Environment. 1998 IEEE International Geoscience and Remote Sensing, Seattle, WA, USA, 6–10 July 1998; IEEE: Piscataway, NJ, USA, 1998; pp. 1147–1149.

- El-Darymli, K.; McGuire, P.; Gill, E.; Power, D.; Moloney, C. Understanding the Significance of Radiometric Calibration for Synthetic Aperture Radar Imagery. In Proceedings of the 2014 IEEE 27th Canadian Conference on Electrical and Computer Engineering (CCECE), Toronto, ON, Canada, 4–7 May 2014; IEEE: Piscataway, NJ, USA, 2014; pp. 1–6.

- Garg, R.; Kumar, A.; Prateek, M.; Pandey, K.; Kumar, S. Land Cover Classification of Spaceborne Multifrequency SAR and Optical Multispectral Data Using Machine Learning. Adv. Space Res. 2022, 69, 1726–1742.

- Choo, A.L.; Chan, Y.K.; Koo, V.C.; Lim, T.S. Study on Geometric Correction Algorithms for SAR Images. Int. J. Microw. Opt. Technol. 2014, 9, 68–72.

- Choi, H.; Jeong, J. Speckle Noise Reduction Technique for SAR Images Using Statistical Characteristics of Speckle Noise and Discrete Wavelet Transform. Remote Sens. 2019, 11, 1184.

- Liu, S.; Wu, G.; Zhang, X.; Zhang, K.; Wang, P.; Li, Y. SAR Despeckling via Classification-Based Nonlocal and Local Sparse Representation. Neurocomputing 2017, 219, 174–185.

- Ma, Q. Improving SAR Target Recognition Performance Using Multiple Preprocessing Techniques. Comput. Intell. Neurosci. 2021, 2021, 6572362.

- Ge, P.; Gokon, H.; Meguro, K. A Review on Synthetic Aperture Radar-Based Building Damage Assessment in Disasters. Remote Sens. Environ. 2020, 240, 111693.

- Kim, M.; Park, S.-E.; Lee, S.-J. Detection of Damaged Buildings Using Temporal SAR Data with Different Observation Modes. Remote Sens. 2023, 15, 308.

- Akhmadiya, A.; Nabiyev, N.; Moldamurat, K.; Dyussekeyev, K.; Atanov, S. Use of Sentinel-1 Dual Polarization Multi-Temporal Data with Gray Level Co-Occurrence Matrix Textural Parameters for Building Damage Assessment. Pattern Recognit. Image Anal. 2021, 31, 240–250.

- James, J.; Heddallikar, A.; Choudhari, P.; Chopde, S. Analysis of Features in SAR Imagery Using GLCM Segmentation Algorithm. In Data Science; Verma, G.K., Soni, B., Bourennane, S., Ramos, A.C.B., Eds.; Transactions on Computer Systems and Networks; Springer Singapore: Singapore, 2021; pp. 253–266. ISBN 9789811616808.

- Raucoules, D.; Ristori, B.; De Michele, M.; Briole, P. Surface Displacement of the Mw 7 Machaze Earthquake (Mozambique): Complementary Use of Multiband InSAR and Radar Amplitude Image Correlation with Elastic Modelling. Remote Sens. Environ. 2010, 114, 2211–2218.

- Sun, X.; Chen, X.; Yang, L.; Wang, W.; Zhou, X.; Wang, L.; Yao, Y. Using InSAR and PolSAR to Assess Ground Displacement and Building Damage after a Seismic Event: Case Study of the 2021 Baicheng Earthquake. Remote Sens. 2022, 14, 3009.

- Xu, Z.; Wang, R.; Zhang, H.; Li, N.; Zhang, L. Building Extraction from High-Resolution SAR Imagery Based on Deep Neural Networks. Remote Sens. Lett. 2017, 8, 888–896.

- Shahzad, M.; Maurer, M.; Fraundorfer, F.; Wang, Y.; Zhu, X.X. Buildings Detection in VHR SAR Images Using Fully Convolution Neural Networks. IEEE Trans. Geosci. Remote Sens. 2019, 57, 1100–1116.

- Stephenson, O.L.; Kohne, T.; Zhan, E.; Cahill, B.E.; Yun, S.-H.; Ross, Z.E.; Simons, M. Deep Learning-Based Damage Mapping With InSAR Coherence Time Series. IEEE Trans. Geosci. Remote Sens. 2022, 60, 5207917.

- Deng, Z.; Huang, M.; Wan, N.; Zhang, J. The Current Development of Structural Health Monitoring for Bridges: A Review. Buildings 2023, 13, 1360.

- Afrin, A.; Rahman, M.M.; Chowdhury, A.H.; Eshraq, M.; Ukasha, M.R. Fire and Disaster Detection with Multimodal Quadcopter by Machine Learning; BRAC University: Dhaka, Bangladesh, 2023.

- Wang, T.; Tao, Y.; Chen, S.-C.; Shyu, M.-L. Multi-Task Multimodal Learning for Disaster Situation Assessment. In Proceedings of the 2020 IEEE Conference on Multimedia Information Processing and Retrieval (MIPR), Shenzhen, China, 6–8 August 2020; IEEE: Piscataway, NJ, USA, 2020; pp. 209–212.

- Qin, W.; Tang, J.; Lu, C.; Lao, S. A Typhoon Trajectory Prediction Model Based on Multimodal and Multitask Learning. Appl. Soft Comput. 2022, 122, 108804.

- Morales, J.; Vázquez-Martín, R.; Mandow, A.; Morilla-Cabello, D.; García-Cerezo, A. The UMA-SAR Dataset: Multimodal Data Collection from a Ground Vehicle during Outdoor Disaster Response Training Exercises. Int. J. Robot. Res. 2021, 40, 835–847. [Google Scholar] [CrossRef]

- Mohanty, S.D.; Biggers, B.; Sayedahmed, S.; Pourebrahim, N.; Goldstein, E.B.; Bunch, R.; Chi, G.; Sadri, F.; McCoy, T.P.; Cosby, A. A Multi-Modal Approach towards Mining Social Media Data during Natural Disasters—A Case Study of Hurricane Irma. Int. J. Disaster Risk Reduct. 2021, 54, 102032. [Google Scholar] [CrossRef]

- Bultmann, S.; Quenzel, J.; Behnke, S. Real-Time Multi-Modal Semantic Fusion on Unmanned Aerial Vehicles. In Proceedings of the 2021 European Conference on Mobile Robots (ECMR), Bonn, Germany, 31 August–1 September 2021; IEEE: Piscataway, NJ, USA, 2021; pp. 1–8. [Google Scholar]

- Yang, W.; Liu, X.; Zhang, L.; Yang, L.T. Big Data Real-Time Processing Based on Storm. In Proceedings of the 2013 12th IEEE International Conference on Trust, Security and Privacy in Computing and Communications, Melbourne, Australia, 16–18 July 2013; IEEE: Piscataway, NJ, USA, 2013; pp. 1784–1787. [Google Scholar]

- Zeng, F.; Pang, C.; Tang, H. Sensors on the Internet of Things Systems for Urban Disaster Management: A Systematic Literature Review. Sensors 2023, 23, 7475. [Google Scholar] [CrossRef] [PubMed]

- Bouchard, I.; Rancourt, M.-È.; Aloise, D.; Kalaitzis, F. On Transfer Learning for Building Damage Assessment from Satellite Imagery in Emergency Contexts. Remote Sens. 2022, 14, 2532. [Google Scholar] [CrossRef]

- Sousa, M.J.; Moutinho, A.; Almeida, M. Wildfire Detection Using Transfer Learning on Augmented Datasets. Expert Syst. Appl. 2020, 142, 112975. [Google Scholar] [CrossRef]

- Kyrkou, C.; Theocharides, T. Deep-Learning-Based Aerial Image Classification for Emergency Response Applications Using Unmanned Aerial Vehicles. In Proceedings of the 2019 IEEE/CVF Conference on Computer Vision and Pattern Recognition Workshops (CVPRW), Long Beach, CA, USA, 16–17 June 2019; IEEE: Piscataway, NJ, USA, 2019; pp. 517–525. [Google Scholar]

- Alshowair, A.; Bail, J.; AlSuwailem, F.; Mostafa, A.; Abdel-Azeem, A. Use of Virtual Reality Exercises in Disaster Preparedness Training: A Scoping Review. SAGE Open Med. 2024, 12, 20503121241241936. [Google Scholar] [CrossRef] [PubMed]

- Shafian, S.A.; Hu, D.; Li, Y.; Adhikari, S. Improving Construction Site Safety by Incident Reporting Through Utilizing Virtual Reality. In Proceedings of the 2024 South East Section Meeting, Marietta, GA, USA, 10–12 March 2024. [Google Scholar]

- Sharma, S.; Jerripothula, S.; Mackey, S.; Soumare, O. Immersive Virtual Reality Environment of a Subway Evacuation on a Cloud for Disaster Preparedness and Response Training. In Proceedings of the 2014 IEEE Symposium on Computational Intelligence for Human-like Intelligence (CIHLI), Orlando, FL, USA, 9–12 December 2014; IEEE: Piscataway, NJ, USA, 2014; pp. 1–6. [Google Scholar]

- Jane Lamb, K.; Davies, J.; Bowley, R.; Williams, J.-P. Incident Command Training: The Introspect Model. Int. J. Emerg. Serv. 2014, 3, 131–143. [Google Scholar] [CrossRef]

- Renganayagalu, S.K.; Mallam, S.C.; Nazir, S. Effectiveness of VR Head Mounted Displays in Professional Training: A Systematic Review. Technol. Knowl. Learn. 2021, 26, 999–1041. [Google Scholar] [CrossRef]

- Zhu, Y.; Li, N. Virtual and Augmented Reality Technologies for Emergency Management in the Built Environments: A State-of-the-Art Review. J. Saf. Sci. Resil. 2021, 2, 1–10. [Google Scholar] [CrossRef]

- Xie, B.; Liu, H.; Alghofaili, R.; Zhang, Y.; Jiang, Y.; Lobo, F.D.; Li, C.; Li, W.; Huang, H.; Akdere, M.; et al. A Review on Virtual Reality Skill Training Applications. Front. Virtual Real. 2021, 2, 645153. [Google Scholar] [CrossRef]

- Grassini, S.; Laumann, K.; Rasmussen Skogstad, M. The Use of Virtual Reality Alone Does Not Promote Training Performance (but Sense of Presence Does). Front. Psychol. 2020, 11, 1743. [Google Scholar] [CrossRef]

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2024 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Al Shafian, S.; Hu, D. Integrating Machine Learning and Remote Sensing in Disaster Management: A Decadal Review of Post-Disaster Building Damage Assessment. Buildings 2024, 14, 2344. https://doi.org/10.3390/buildings14082344

Al Shafian S, Hu D. Integrating Machine Learning and Remote Sensing in Disaster Management: A Decadal Review of Post-Disaster Building Damage Assessment. Buildings. 2024; 14(8):2344. https://doi.org/10.3390/buildings14082344

Chicago/Turabian Style: Al Shafian, Sultan, and Da Hu. 2024. "Integrating Machine Learning and Remote Sensing in Disaster Management: A Decadal Review of Post-Disaster Building Damage Assessment." Buildings 14, no. 8: 2344. https://doi.org/10.3390/buildings14082344

APA Style: Al Shafian, S., & Hu, D. (2024). Integrating Machine Learning and Remote Sensing in Disaster Management: A Decadal Review of Post-Disaster Building Damage Assessment. Buildings, 14(8), 2344. https://doi.org/10.3390/buildings14082344