Enhancing Building Services in Higher Education Campuses through Participatory Science

Abstract

1. Introduction

2. Methodology

3. Application to University Campus

3.1. Overview

3.2. Facilities Mapping and Parameters Assessment

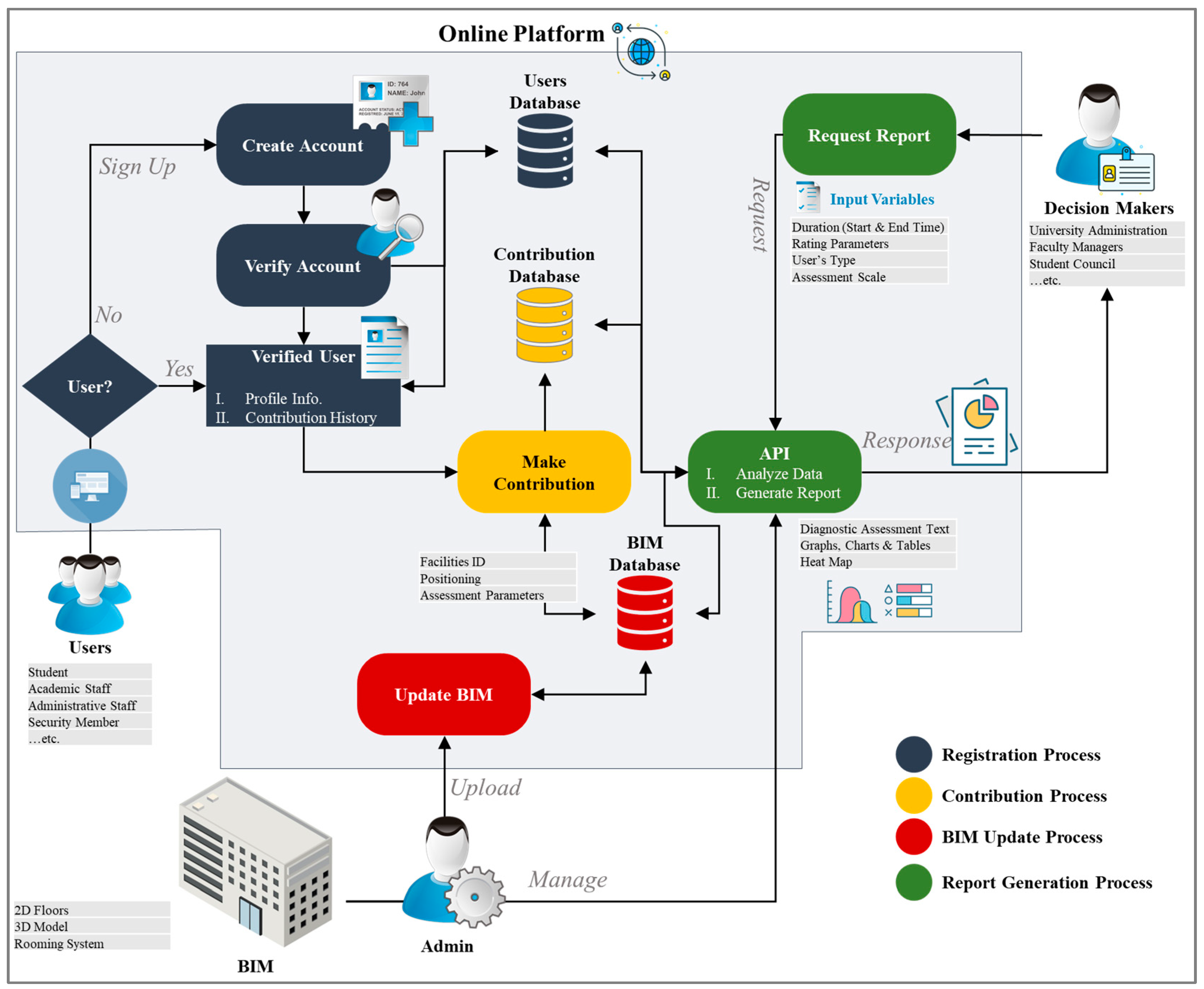

3.3. Online Participatory Platform

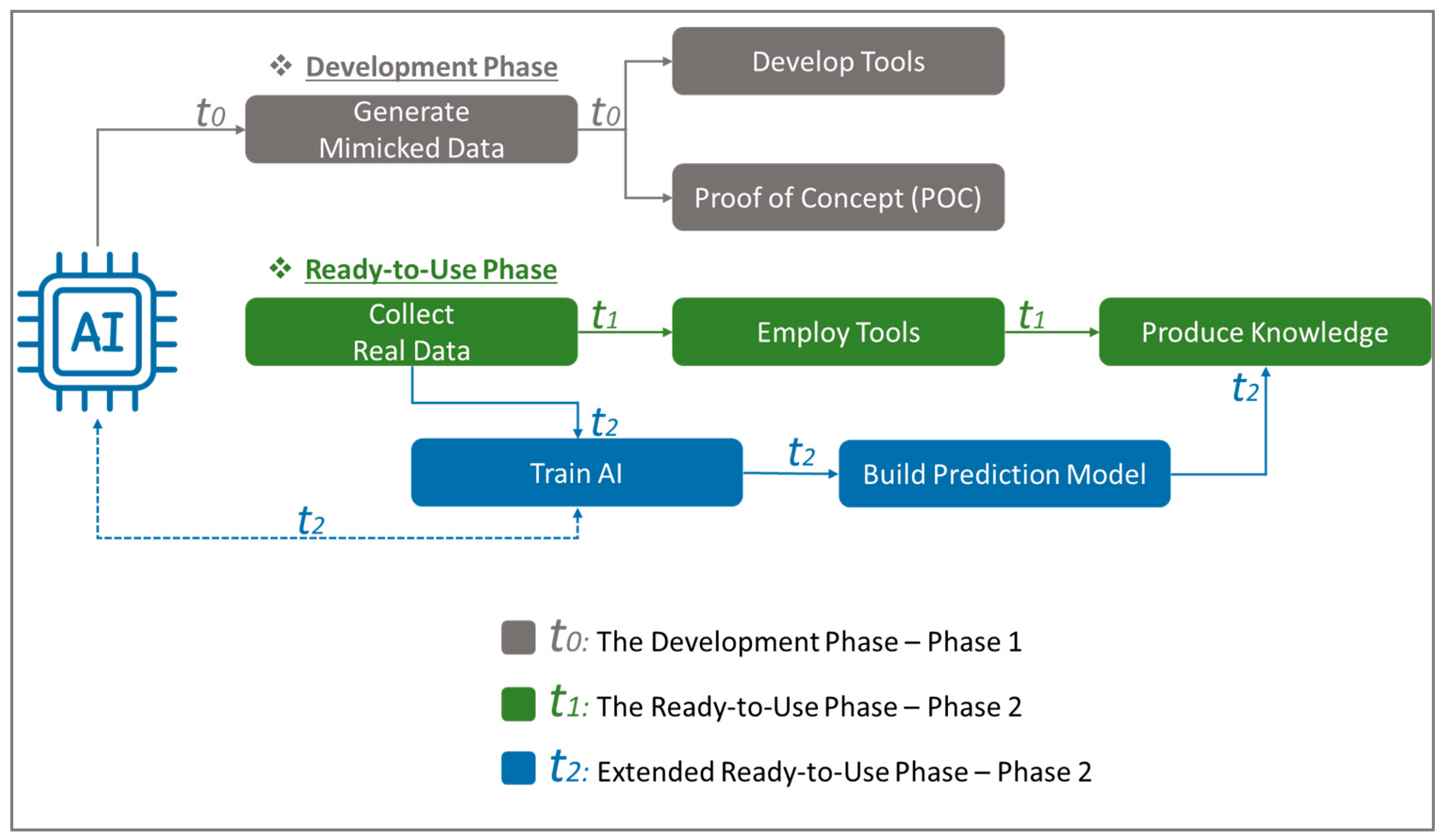

3.4. AI-Driven Data Generation

4. Results

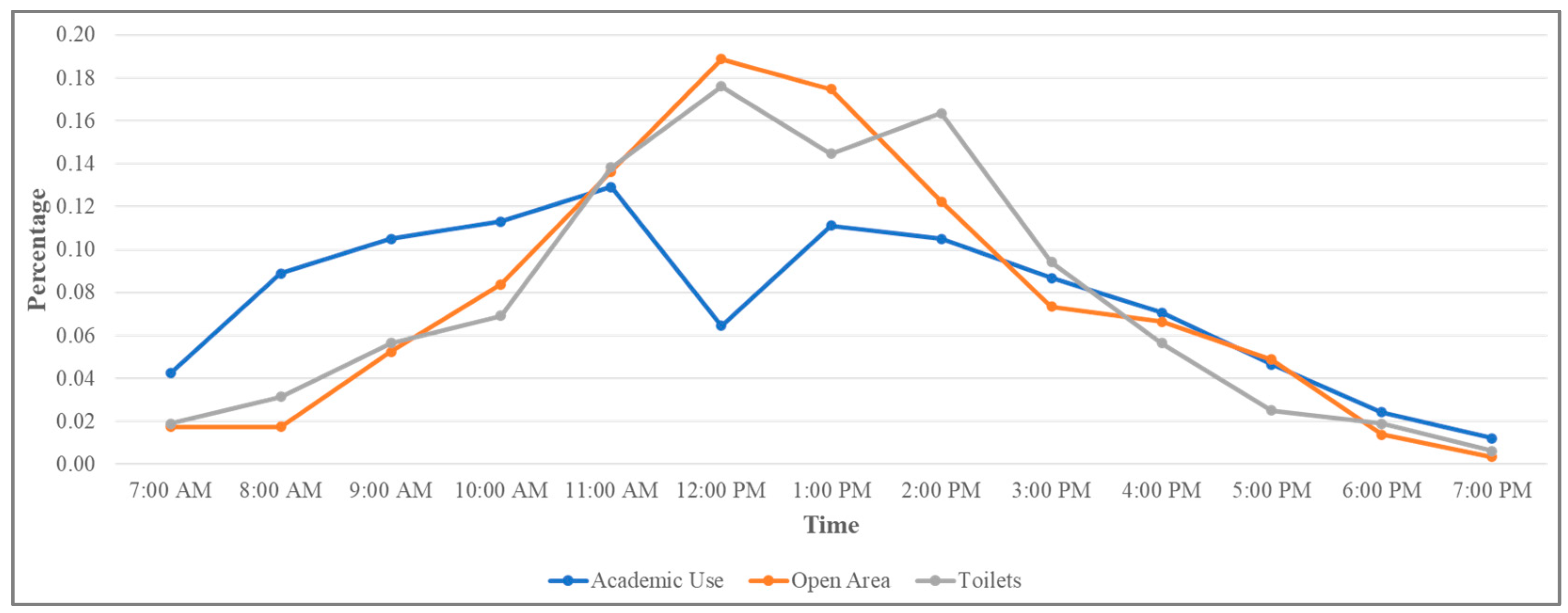

4.1. Overview of the AI-Generated Data

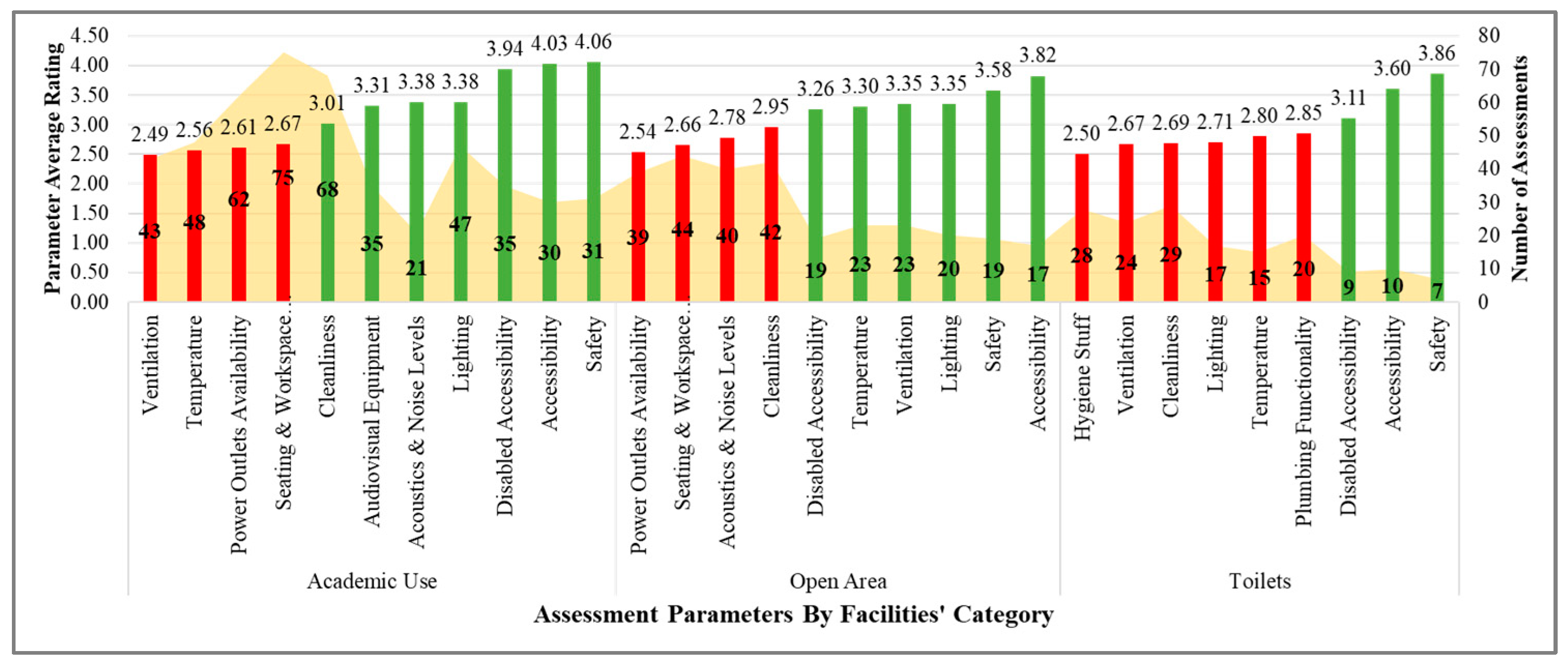

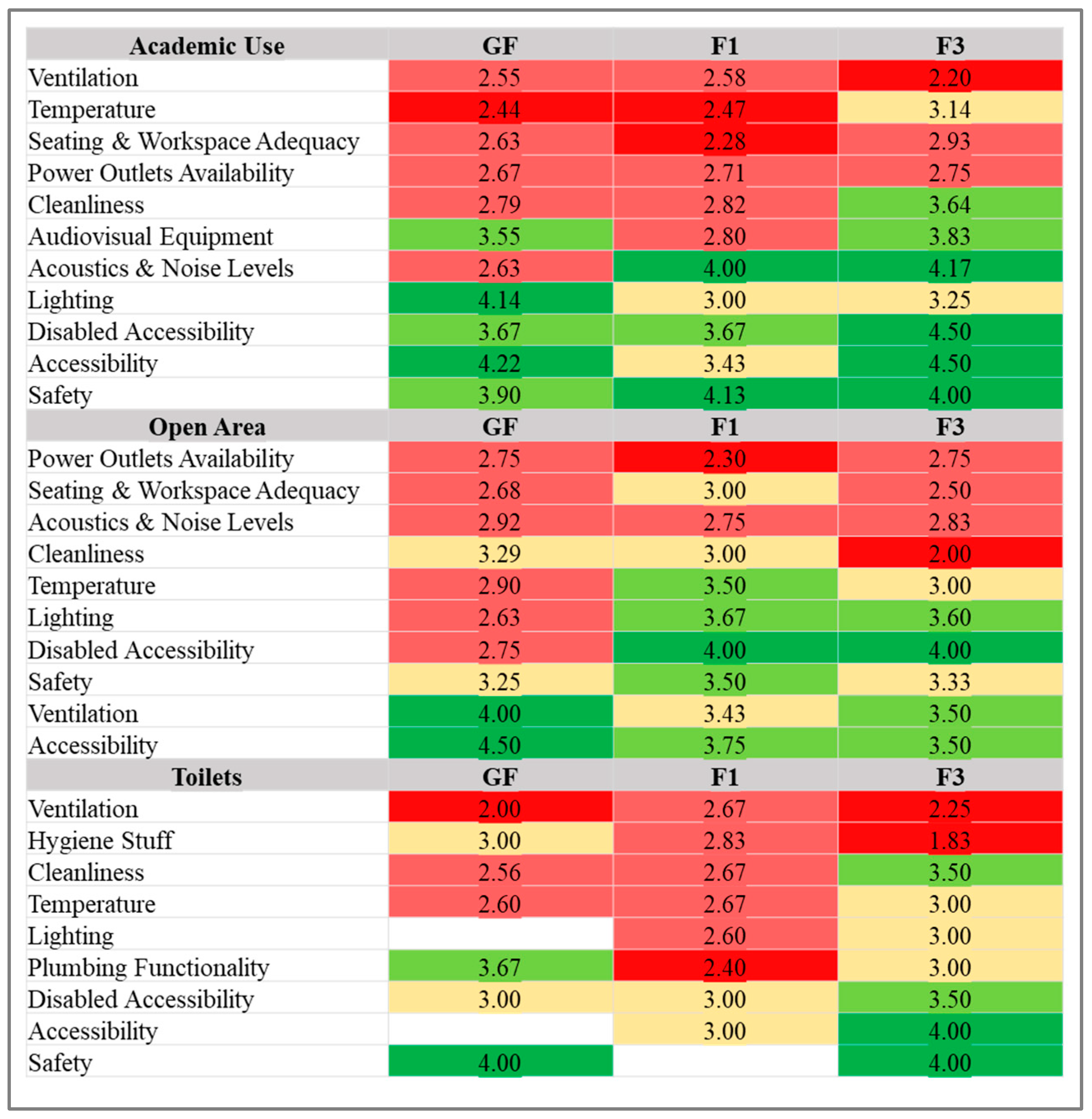

4.2. User Satisfaction

5. Conclusions

Author Contributions

Funding

Data Availability Statement

Conflicts of Interest

| User Type | Number of Participants | Percentage of Participants | Number of Assessments | Percentage of Assessments | Assessment Frequency |
|---|---|---|---|---|---|
| Student | 261 | 80.3% | 821 | 82.1% | 3.1 |
| Academic Member | 37 | 11.4% | 108 | 10.8% | 2.9 |
| Administrative Staff | 15 | 4.6% | 36 | 3.6% | 2.4 |
| Security Member | 12 | 3.7% | 35 | 3.5% | 2.9 |
| Total | 325 | 100.0% | 1000 | 100.0% | 3.1 |
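The derived columns of the participant table above can be recomputed from the raw counts. The following is a minimal sketch, assuming that each percentage is a share of the column total and that "Assessment Frequency" means assessments per participant; both readings are inferred from the numbers and are not stated in the table itself. The function name `derive_columns` is hypothetical.

```python
# Raw counts copied from the participant table:
# user type -> (number of participants, number of assessments)
counts = {
    "Student": (261, 821),
    "Academic Member": (37, 108),
    "Administrative Staff": (15, 36),
    "Security Member": (12, 35),
}


def derive_columns(counts):
    """Return (participant %, assessment %, assessments per participant) per user type.

    Assumption: percentages are shares of the column totals, and the
    assessment frequency is assessments divided by participants.
    """
    total_participants = sum(p for p, _ in counts.values())
    total_assessments = sum(a for _, a in counts.values())

    rows = {}
    for user_type, (participants, assessments) in counts.items():
        rows[user_type] = (
            round(100 * participants / total_participants, 1),
            round(100 * assessments / total_assessments, 1),
            round(assessments / participants, 1),
        )
    # The "Total" row uses the overall ratio, not an average of the row ratios.
    rows["Total"] = (100.0, 100.0, round(total_assessments / total_participants, 1))
    return rows


for user_type, derived in derive_columns(counts).items():
    print(user_type, derived)
```

Under these assumptions the recomputed values agree with the table to one decimal place, which suggests the reported frequency of 3.1 in the "Total" row is 1000 assessments divided by 325 participants.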

| Floor ID/Facilities’ Category | Academic Use | Open Area | Toilets | Emergency | Vertical Circulation | % By Floor |
|---|---|---|---|---|---|---|
| B2 | 1.6% | 0.5% | 0.5% | 0.3% | | 2.9% |
| B1 | 6.2% | 2.4% | 1.5% | 0.3% | | 10.4% |
| GF | 18.0% | 10.3% | 3.1% | 1.3% | | 32.7% |
| F1 | 12.3% | 5.8% | 3.5% | 0.9% | | 22.5% |
| F2 | 0.2% | 4.4% | 3.4% | 0.5% | | 8.5% |
| F3 | 9.3% | 4.1% | 3.0% | 0.8% | | 17.2% |
| F4 | 1.9% | 1.1% | 0.9% | 0.2% | | 4.1% |
| Vertical Circulation | | | | | 1.7% | 1.7% |
| % By Facilities’ Category | 49.5% | 28.6% | 15.9% | 4.3% | 1.7% | 100.0% |
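The row and column totals of the floor-by-category table above can be cross-checked with a few lines of code. This is a sketch under stated assumptions: blank cells are treated as 0.0, the last value in each floor row is read as that row's "% By Floor" total, and the 1.7% in the "Vertical Circulation" row is assigned to the "Vertical Circulation" category. The helper name `margins` is hypothetical.

```python
categories = ["Academic Use", "Open Area", "Toilets", "Emergency", "Vertical Circulation"]

# Share of assessments (%) per floor and facility category, copied from the table.
# Assumption: blank cells are entered as 0.0.
shares = {
    "B2": [1.6, 0.5, 0.5, 0.3, 0.0],
    "B1": [6.2, 2.4, 1.5, 0.3, 0.0],
    "GF": [18.0, 10.3, 3.1, 1.3, 0.0],
    "F1": [12.3, 5.8, 3.5, 0.9, 0.0],
    "F2": [0.2, 4.4, 3.4, 0.5, 0.0],
    "F3": [9.3, 4.1, 3.0, 0.8, 0.0],
    "F4": [1.9, 1.1, 0.9, 0.2, 0.0],
    "Vertical Circulation": [0.0, 0.0, 0.0, 0.0, 1.7],
}


def margins(shares):
    """Return (row totals per floor, column totals per category), rounded to 0.1."""
    by_floor = {floor: round(sum(row), 1) for floor, row in shares.items()}
    by_category = [round(sum(column), 1) for column in zip(*shares.values())]
    return by_floor, by_category


by_floor, by_category = margins(shares)
for floor, total in by_floor.items():
    print(f"{floor}: {total}%")
for name, total in zip(categories, by_category):
    print(f"{name}: {total}%")
```

With this reading, the recomputed totals match the reported "% By Floor" and "% By Facilities’ Category" values, and both sets of totals sum to 100.0%.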

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2024 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Share and Cite

Itair, M.; Shahrour, I.; El Meouche, R.; Hattab, N. Enhancing Building Services in Higher Education Campuses through Participatory Science. Buildings 2024, 14, 2784. https://doi.org/10.3390/buildings14092784
