Abstract

Despite the benefits of learning an instrument, many students drop out early because it can be frustrating for the student, expensive for the caregiver, and loud for the household. Virtual Reality (VR) and Extended Reality (XR) offer the potential to address these challenges by simulating multiple instruments in an engaging and motivating environment through headphones. To assess the potential for commercial VR to augment musical experiences, we used standard VR implementation processes to design four virtual trumpet interfaces: camera-tracking with tracked register selection (two ways), camera-tracking with voice activation, and a controller plus a force-feedback haptic glove. To evaluate these implementations, we created a virtual music classroom that produces audio, notes, and finger pattern guides loaded from a selected Musical Instrument Digital Interface (MIDI) file. We analytically compared these implementations against physical trumpets (both acoustic and MIDI), considering features of ease of use, familiarity, playability, noise, and versatility. The physical trumpets produced the most reliable and familiar experience, and some XR benefits were considered. The camera-based methods were easy to use but lacked tactile feedback. The haptic glove provided improved tracking accuracy and haptic feedback over camera-based methods. Each method was also considered as a proof-of-concept for other instruments, real or imaginary.

1. Introduction

While learning musical instruments has long been associated with numerous physical and cognitive benefits, many students, especially young learners, drop out before they attain these advantages (Cremaschi et al. 2015). Students or parents may be deterred by the up-front cost of acquiring an instrument and by the difference in quality between performance instruments and rentals, especially when there is no guarantee that the student will enjoy playing. The instrument itself may be too loud for their living environment, or students may simply lose motivation while struggling with the demands of practicing alone, especially if they cannot observe any results or progress.

Extended reality (XR) is a general term used to describe immersive technologies that simulate or augment reality through computer-generated sensory replacement or enhancement experiences, such as Virtual Reality (VR), where sensations from the real world are obscured and replaced by sensations from a different virtual world, and Augmented Reality (AR), where sensations from the physical world are overlaid with additional sensations or information. XR has the potential to offer solutions to these and other problems with musical skill acquisition. Because XR can simulate anything that can be modeled in a virtual world, it is possible to expose the student to expensive or hard-to-acquire instruments, simplified or smaller-sized introductory instruments, bespoke personalized instruments, or even physically impossible instruments. A simulated environment provides the ability to digitally control audio output, and the creation of an immersive environment can augment the playing experience to apply prompts and other learning tools, as well as performance optimizations or creativity tools, leading to a higher degree of interactivity and engagement over traditional instruments in traditional practice environments.

Commercially available VR systems today (such as the Meta Quest or the Valve Index) usually come with a location-tracked optical headset with a microphone and speakers, as well as a pair of location-tracked handheld controllers. While this is sufficient for replicating instruments that rely on motion or position of the hand, such as percussion, many musical instruments require small and precise finger movements that cannot be replicated on the general button configuration provided by standard controllers. For example, the Meta Quest controller features a joystick, two round buttons on the front face, and two buttons on the back known as the grip and trigger. Playing a musical instrument often requires finger movements that are not aligned with these controllers, and there are no consumer-level VR controllers available that approximate the shape or feeling of any traditional musical instrument.

Hand tracking is an attractive solution due to its versatility and availability, with modern VR headsets such as the Meta Quest 2 offering built-in hand tracking with their front-facing cameras. These cameras have suitable accuracy for simple tasks, such as grabbing or user interface (UI) selection, but can struggle with occlusion, where the hand or fingers obscure each other; environmental factors such as background noise or poor lighting; and a limited window in which the tracking can be reasonably maintained (Parger et al. 2022). Most importantly, camera tracking does not provide tactile feedback, the sense of touch while manipulating objects, such as shape or texture; nor does it offer the user a sense of resistance or pressure, known as force-feedback. While the virtual hand can appear in the user’s view to be associated with the user’s physical hand, the tangible feeling of interacting with objects (such as a musical instrument) is more difficult to achieve, especially with the dexterous manipulations required for musical interactions.

Among the wide variety of traditional musical instruments, some are easier to simulate than others. Simulating piano or percussion is relatively straightforward, and some string instruments such as guitars can be simulated with reasonable acuity. Simulating wind instruments, however, presents unique technical and human interaction challenges. A significant portion of the instrument’s behaviour is determined by the performer’s interactions at the mouthpiece. For example, the embouchure and breath pressure of a trumpet player simultaneously determine whether a note is sounding or not, as well as the pitch, the volume, and the timbre. Because VR technology usually incorporates a head-mounted visor, adding hardware to track or analyse the position or activation of the mouth or lips is particularly difficult. Creating a virtual music environment for a simulated wind instrument without specialized equipment to simulate a mouthpiece requires a design that deviates from how the instrument typically functions, but still needs to be accurate enough to be a usable practice experience.

In this work, we explore how commercially available VR technology can address these concerns. First, we created a virtual environment designed to simulate a high school music classroom. In this scene, we enlarged a music stand and used it to display dynamically generated notes and associated finger patterns, based on information in a Musical Instrument Digital Interface (MIDI) file. The notes scroll past the user (in a style similar to rhythm games) towards a target line, which conveys the timing. We chose to tailor this environment to trumpets, as they are played with three fingers on one hand, simplifying tracking and enabling more virtual interface prototypes. Next, we designed four virtual trumpets controlled by either hand tracking provided by the VR headset or a force-feedback haptic glove, and analytically compared them with a physical acoustic trumpet and a Morrison Digital Trumpet based on user experience factors such as ease of use, familiarity, playability, noise, and versatility. Lastly, we use these scenarios to discuss the limitations of current VR hardware and design practices, identify potential sources of simulation sickness, and present a set of areas to be considered for future development.

Based on our evaluations, physical trumpets provided the best simulation experience due to their realistic and timely audio, haptic feedback, and freedom from tracking errors. While playing an acoustic trumpet in virtual reality does not affect its physical properties such as noise or familiarity, the environment may improve engagement and accessibility (Makarova et al. 2023; Stark et al. 2023). The Morrison Digital Trumpet we used does not measure embouchure directly, but rather splits the collection of this embouchure information into breath pressure (which controls note dynamics) and register selection (the set of available notes, determined on traditional instruments by the input frequency), which is controlled by selecting one of a set of buttons on the side of the instrument. This MIDI trumpet can produce a variety of synthesized sounds and effects while maintaining the familiar shape and operation of a trumpet; however, the instrument requires training to develop familiarity with selecting a register button using the left hand while pressing valves with the right.

Virtual trumpets with no physical counterpart allow for the abstraction of the instrument and provide a high degree of versatility, but like the aforementioned digital physical instruments, virtual trumpets require the user to learn a new interface. While hand tracking to control a virtual trumpet may suffer from tracking errors and lack of timely feedback, continuous selection via world space still provided reliable and intuitive register selection. Force-feedback and tactile gloves may provide a higher degree of accuracy and more timely feedback compared to camera-tracking, but current gloves are too expensive or difficult to assemble for an average consumer, and lack the degrees of freedom (specifically the ability to measure “finger splay,” or how far apart the fingers are) to simulate subtle interactions with a range of instruments. Although the virtual trumpets did not perform as well as the physical instruments, we present these prototypes as building blocks towards future VR instrument design, and discuss the advantages and disadvantages of each implementation.

2. Background

2.1. Extended Reality Environments

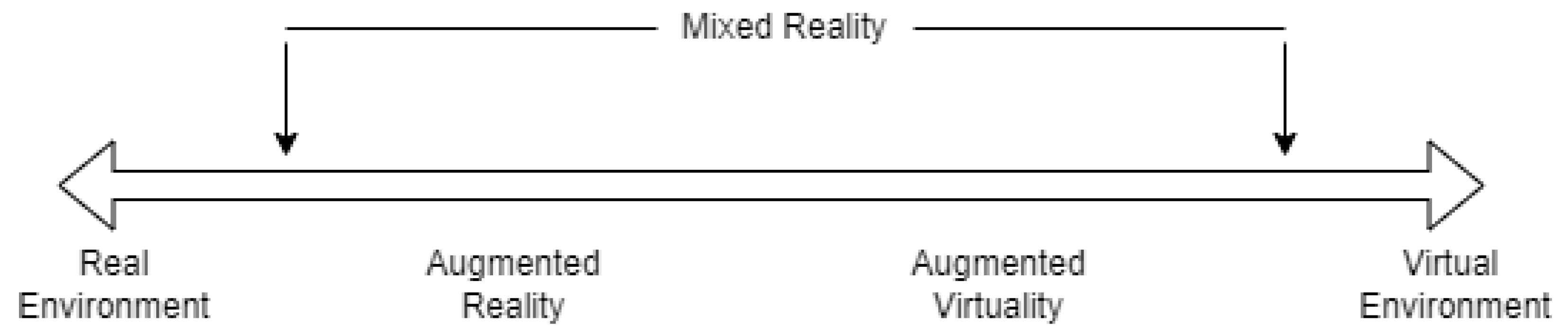

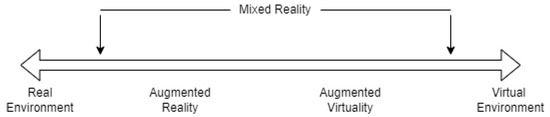

Extended Reality (XR) is an abstract term used to describe technologies that may augment or replace reality with digital overlays and interactions (Alnagrat et al. 2022). While the definition and components of XR are contested in academic literature (Rauschnabel et al. 2022; Speicher et al. 2019), XR experiences are frequently categorized based on Milgram’s reality-virtuality continuum (Milgram and Kishino 1994), shown in Figure 1, with two prominent categories: Virtual Reality (VR), which refers to scenarios with computer-generated environments and interactions, and Augmented Reality (AR), which integrates visual, auditory, or other sensory information with the real world (Farshid et al. 2018; Speicher et al. 2019). A further distinction among XR technologies is between non-immersive methods, which present a virtual environment on a two-dimensional (2D) screen that is controlled by input methods such as a mouse and keyboard, and immersive methods, which provide the user with a sense of presence within the environment, such as a Cave Automatic Virtual Environment (CAVE) or, more commonly, a head-mounted display (HMD) (Freina and Ott 2015; Ventura et al. 2019).

Figure 1. The reality-virtuality continuum (Milgram and Kishino 1994).

Virtual environments can provide many benefits beyond the instrument simulation itself. VR can simulate interesting environments that students might not normally be able to visit or perform in, such as a theatre or concert hall, including varying acoustical reverberation responses (Ppali et al. 2022). These environments can be manipulated to induce different levels of comfort in the user through adding factors such as an active virtual audience, thereby creating an accessible system for treating performance anxiety through virtual exposure therapy (Bissonnette et al. 2015). The novelty of VR increases student interest, with studies on virtual training demonstrating an increase in voluntary repetition (Makarova et al. 2023; Stark et al. 2023), as well as similar or better performance and retention over traditional training (Cabrera et al. 2019). Virtual reality environments also foster creativity through inspirational environments (Li et al. 2022; Ppali et al. 2022) and normally impossible interfaces (Desnoyers-Stewart et al. 2018).

VR Design Practices

Care needs to be taken when designing virtual environments and interactions in order to maintain an appropriate level of placement and immersion (Ryan-Bengtsson and Van Couvering 2022). Even simple actions such as movement or menu selection may cause eye strain, vection, and simulation sickness (Chang et al. 2020; Malone and Brünken 2021), which may last multiple hours in some users (Dużmańska et al. 2018). Traditional 2D interactions, such as UI popup instructions, may not translate favourably into a virtual world, creating intrusive and frustrating menus. Users who struggle to comfortably use a simulation will not be able to stay in the environment, reducing the benefits of sustained practice (Stauffert et al. 2020).

To help guide developers in creating usable and enjoyable VR experiences, Meta has made VR guidelines and best practices available on their website1. Although these guidelines are for the general case, there are three points particularly pertinent to instrument simulation, which relate to eye strain, interaction and model design, and avatars.

VR HMDs create a conflict between accommodative demand, in which a user’s eyes must change the shape of the lens to focus at a given depth, and vergence demand, which refers to the degree to which a user’s eyes must rotate to converge on an item. Virtual objects presented on the screen are always at a set focal distance, but the user’s eyes must still rotate to converge on the item of interest (Yadin et al. 2018). To avoid simulation sickness generated by this conflict, items the user will be required to focus on should be displayed at a comfortable viewing distance, which Meta suggests is between 0.5 and 1 m. Many real-world instruments, especially wind instruments, are held directly in front of a player’s face. Presenting a virtual instrument this close to the user’s view will violate this design principle. The trombone is a particularly problematic example: it not only presents an object close to the user’s view, but also has a moving slide that players may visually align with landmarks on the instrument, requiring them to focus on the close-up object.
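The geometry behind this conflict can be illustrated with a short calculation. The following Python sketch is not taken from Meta's guidelines: it assumes a simple symmetric-eye model, an interpupillary distance of 63 mm, and a placeholder focal distance of 1.0 m for the display optics, all of which are illustrative assumptions rather than measured values. It shows how vergence demand grows as a virtual object approaches the face, while the focal (accommodative) distance of the display stays fixed.

```python
import math


def vergence_angle_deg(distance_m, ipd_m=0.063):
    """Angle between the two lines of sight when both eyes converge on a
    point straight ahead at distance_m. ipd_m is an assumed interpupillary
    distance, not a value from the source text."""
    if distance_m <= 0:
        raise ValueError("distance must be positive")
    return math.degrees(2 * math.atan((ipd_m / 2) / distance_m))


def focus_mismatch_diopters(object_distance_m, focal_distance_m):
    """Difference between where the eyes converge (the virtual object) and
    where they must focus (the fixed focal distance of the display optics),
    expressed in diopters (1 / metres)."""
    return abs(1.0 / object_distance_m - 1.0 / focal_distance_m)


if __name__ == "__main__":
    ASSUMED_FOCAL_DISTANCE_M = 1.0  # placeholder, not a headset specification
    for d in (0.2, 0.5, 1.0):
        angle = vergence_angle_deg(d)
        mismatch = focus_mismatch_diopters(d, ASSUMED_FOCAL_DISTANCE_M)
        print(f"{d:.1f} m: vergence {angle:.1f} deg, mismatch {mismatch:.1f} D")
```

Under these assumptions, an instrument rendered at roughly 0.2 m (about where a trumpet's valves sit relative to the eyes) demands a much larger vergence angle and focus mismatch than an object placed within the suggested 0.5 to 1 m range.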

Although VR controllers can produce virtual hands to simulate simple interactions, they cannot simulate torque or resistance. Because of this, users may lose immersion when interacting with heavy or complex objects, such as a tuba (Stellmacher et al. 2022). Two-handed interactions, such as a flute, are also problematic, as there is no sense of a rigid structure between the player’s hands. Unfortunately, Meta does not offer a direct solution to this problem, and simply encourages developers to find a way to make the lack of weight believable, and to use caution with two-handed objects.

Lastly, Meta warns against the use of virtual arms due to the difficulty of tracking and representing them without additional devices or sensors. Humans are capable of estimating the position, orientation, and motions of their body and limbs through a sense called proprioception (Hillier et al. 2015). While it may be tempting to provide the user an avatar holding a simulated instrument with proper posture, this may result in inconsistencies between the player’s virtual and real arms, specifically with the angle of the elbow, which may negatively affect their immersion. To avoid this, Meta suggests not rendering anything above the wrist.

2.2. Hand Tracking

Hand tracking refers to techniques designed to measure properties of a user’s hand, such as its position, movement, and rotation. The data from these measurements is provided to the app, and can be used to generate a three-dimensional (3D) hand model to provide an alternative input source to standard controllers. Hand tracking is being adopted by modern headsets such as the Meta Quest 2 or HTC Vive Focus 3 due to its potential to increase immersion (Buckingham 2021), provide different interaction modalities (Voigt-Antons et al. 2020), and improve user experience by providing more realistic haptic feedback (Desnoyers-Stewart et al. 2018; Hwang et al. 2017; Moon et al. 2022).

Hand recognition techniques can be divided into wearable or sensor-based methods. Wearable methods employ a device on the hand that combines tracking the overall hand position in space, such as attaching a standard VR controller to the wrist, with a glove or finger cap to measure finger position or flexion relative to the hand. Several methods have been employed for tracking finger movement, with tradeoffs between cost, accuracy, comfort, and degrees of freedom. A cheap but effective method is to measure finger curl through flex sensors or potentiometers, as employed by the Lucidglove (LucasVRTech 2023), but this only tracks downward grabbing motions. Another method, utilized by companies such as Sensoglove2, is to attach electronic measuring tools including IMUs, accelerometers, or gyroscopes to key positions on the fingers to transmit location data. This achieves good results at medium cost, but it suffers accuracy problems due to poor calibration or drift (Perret and Vander Poorten 2018). Magnetic tracking, such as that used in the Quantum Metaglove by Manus3, shows promising results in terms of accuracy, but has yet to be thoroughly tested against interference from external objects, and is well outside consumer price range. Although some tracking gloves may be purchased or assembled by an average consumer, many are still in development or only sold at enterprise level, making glove-based hand tracking still outside the common experience of most users.

Sensor-based tracking combines non-invasive equipment such as cameras or radar with recognition algorithms such as neural networks (Liu et al. 2022) or hidden Markov models (Talukdar and Bhuyan 2022) to detect and represent a user’s hand and finger positions and motion. Previously, gesture tracking required complex external equipment, such as arrays of sensors pointing at the hands (Zheng et al. 2013). Innovations such as the Xbox Kinect and Leap Motion, now marketed as Ultraleap, made gesture control available on a consumer level through infrared technology and cameras, but still required external equipment and additional setup (Brown et al. 2016; Bundasak and Prachyapattanapong 2019), and limited the range of detection to the infrared broadcast region of the device. Modern commercial VR headsets such as the Meta Quest 2 have enabled hand tracking using built-in cameras, providing reasonably accurate and inexpensive gesture recognition without additional equipment. Because headset-based hand recognition allows a view from only one direction, occlusion problems can be significant, as discussed below.

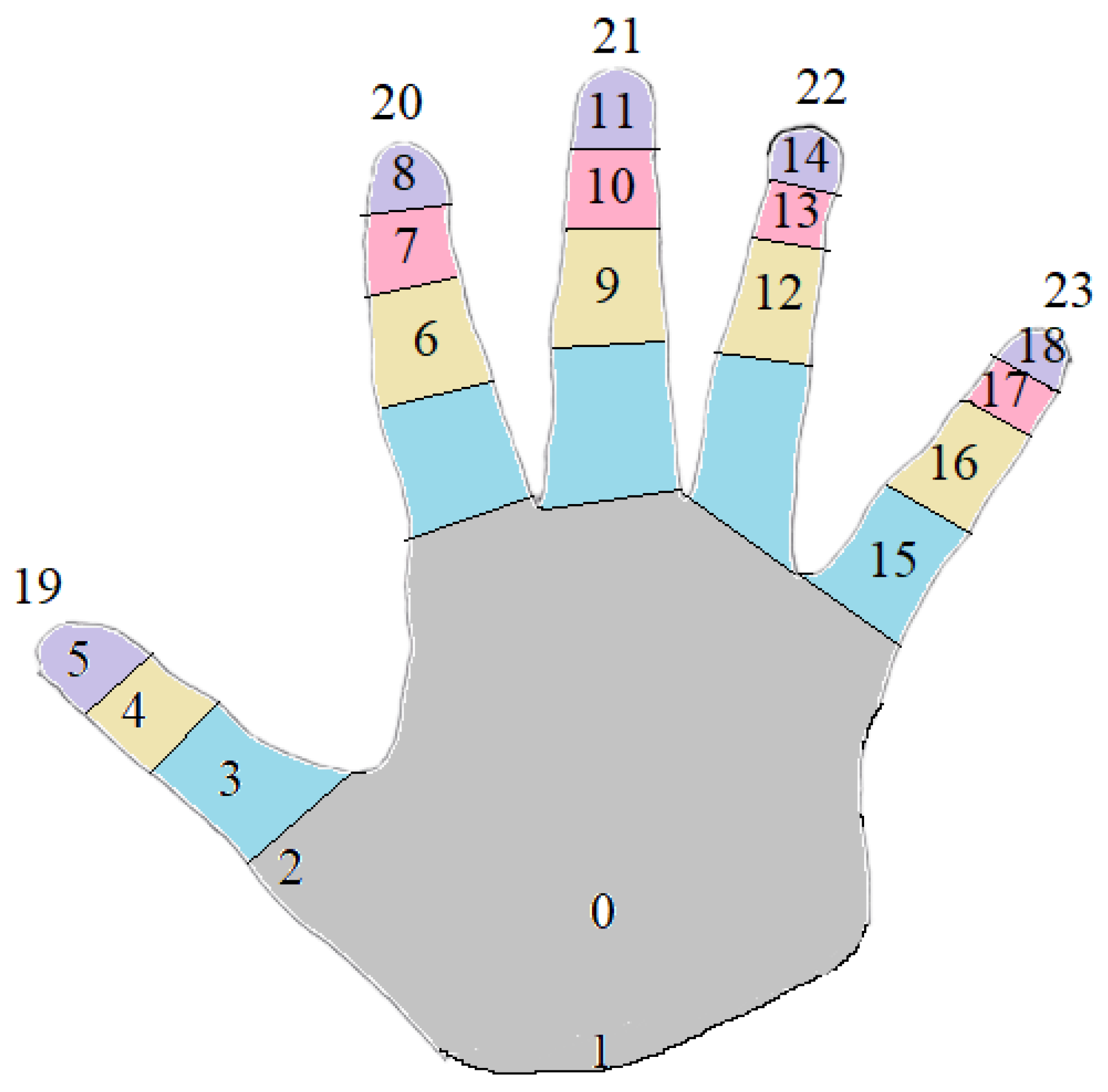

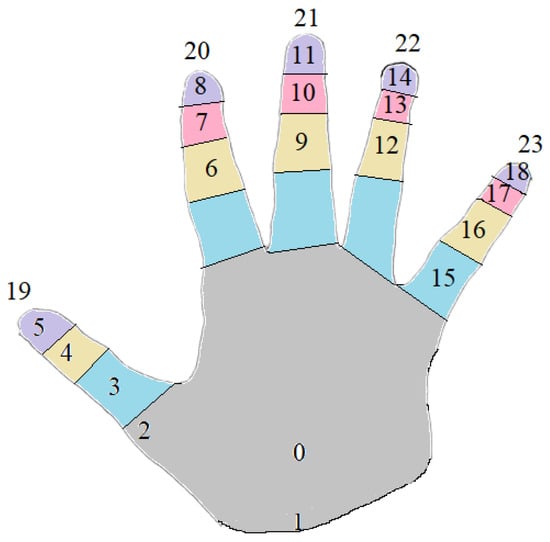

In order to track and represent hands in VR through the two front-facing cameras of the Meta Quest, a snapshot from the video feed is captured, then undergoes a multi-step process to isolate hand data and produce a virtual model (Song et al. 2022). First, the hands are isolated through segmentation and background removal, which uses machine learning to place a bounding box estimating the boundaries of the hands in the video feed. Important landmarks, such as the palm, joints, and fingertips, are mapped, and the spatial data is provided to the app. Through the Oculus Integration Package, the data is rendered as a 3D hand model, with pose and bone information provided by the OVRHand library4, as shown in Figure 2.
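To illustrate how an application might use the landmark data described above, the following Python sketch estimates finger flexion from three 3D landmark positions and converts it into a pressed or released valve state. It does not use the OVRHand API: the landmark tuples, the `ValveState` class, and the angle thresholds are hypothetical and chosen only for illustration. The two thresholds form a hysteresis band, so that small tracking jitter near a single cut-off does not cause the valve to flicker.

```python
import math


def joint_angle_deg(a, b, c):
    """Angle in degrees at landmark b, formed by the segments b->a and b->c.
    Each landmark is an (x, y, z) tuple. A straight finger gives a value
    near 180; a curled finger gives a smaller value."""
    ba = [a[i] - b[i] for i in range(3)]
    bc = [c[i] - b[i] for i in range(3)]
    norm = math.sqrt(sum(x * x for x in ba)) * math.sqrt(sum(x * x for x in bc))
    if norm == 0:
        raise ValueError("landmarks must not coincide")
    cos_angle = sum(x * y for x, y in zip(ba, bc)) / norm
    # Clamp to guard against floating-point values slightly outside [-1, 1].
    cos_angle = max(-1.0, min(1.0, cos_angle))
    return math.degrees(math.acos(cos_angle))


class ValveState:
    """Tracks one virtual valve with hysteresis (thresholds are assumptions)."""

    def __init__(self, press_below_deg=130.0, release_above_deg=150.0):
        self.press_below_deg = press_below_deg
        self.release_above_deg = release_above_deg
        self.pressed = False

    def update(self, angle_deg):
        """Update the state from the latest joint angle and return it."""
        if self.pressed and angle_deg > self.release_above_deg:
            self.pressed = False
        elif not self.pressed and angle_deg < self.press_below_deg:
            self.pressed = True
        return self.pressed
```

A caller would compute `joint_angle_deg` once per tracking frame for each of the three valve fingers and feed the result to that finger's `ValveState`.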

Figure 2. The numeric bone ids used by the OVRHand library.

While this method is suitable for general positioning and distinct gestures such as pinching or grabbing, it suffers several drawbacks. The fingers will be blocked from view by the cameras from most angles an instrument would be held at; and if a physical instrument were held, hand tracking would be severely affected (Hamer et al. 2009), since most hand tracking computational models assume the hand is empty. The viewing angle of the cameras on a VR headset is optimized for inside-out headset tracking, and many musical instruments require the user to hold their hands outside of that window (Parger et al. 2022). For example, a clarinet requires the user to hold their hands below the viewing window of the headset.

Further limitations of this process are related to the hardware of the headset itself. The tracking frequency of the hand model is limited to the frame rate of the camera, and the computational power allocated to the classification is restricted based on operational requirements of the headset (Ren et al. 2018). These two features combine to reduce the accuracy of small movements, especially those required to play a musical instrument. Poor lighting or background noise will also contribute to reduced-quality tracking results. Developers must also be aware of platform-specific hardware restrictions when designing interactions, such as the reserved gestures on the Meta Quest 2; for example pinching with the index finger and thumb with the palm facing the headset will bring up a system menu, even if the developer would like to use that gesture for something else.

Most problematically, hand tracking alone struggles to provide haptic (touch- or motion- based) responses to guide the user, which reduces the user’s ability to perceive, align, and correct their action (Hwang et al. 2017). Playing a musical instrument is a tactile experience, and not being able to feel the instrument, especially during learning activities, can frustrate the user if they are unable to identify problems (Gibbs et al. 2022).

Hand Tracking Guidelines

Although hand tracking is relatively new in VR development and needs further exploration, Meta offers two primary interaction methods: poking and pinching5. Poking describes touching objects directly with the fingertip, such as pressing a button. Pinching refers to pressing the index finger and thumb together, and can either directly interact with objects, such as picking up an item, or draw a line outwards to use as a laser pointer for UI selection, with the pinch representing selection (Ppali et al. 2022). Pinching is well-suited to VR experiences, and is used as the default interaction method in the Meta menu when using hand tracking. Pinching is a natural gesture, distinct and easy to track from multiple angles, and provides haptic feedback when the user’s fingertips touch (Ban et al. 2021; Pfeuffer et al. 2017).

2.3. Haptic Feedback

Haptic feedback is the use of touch, force, or vibrations, to help inform users about the success or failure of their actions. Haptic feedback is typically divided into tactile feedback, which refers to sensations such as pressure, temperature, or texture, and kinesthetic feedback, more commonly known as force-feedback, which provides the sense of force, resistance, or position (Park et al. 2022). Inclusion of haptic feedback in applications is important for improving user experience (Gibbs et al. 2022; Hamam et al. 2013; Moon et al. 2022) and performance (Hoggan et al. 2008). Haptic feedback in XR applications is especially valuable as a tool to increase user immersion through more accurate simulated interactions, such as providing feedback when a user grabs objects in virtual space (Zamani et al. 2021).

To provide beneficial haptic feedback, one method is to introduce physical objects in the real world to interact with. For example, a physical piano keyboard could be used which is aligned with a virtual copy of that piano keyboard (Desnoyers-Stewart et al. 2018). Another technique is to use specialized physical controllers that communicate directly with the virtual world, such as a simulated haptic mouthpiece (Smyth et al. 2006). These techniques are less feasible if the physical object required for the simulation is difficult to obtain, or there are no available or accurate controllers; also, this technique lacks flexibility in terms of simulating other experiences. In order to provide accurate but generalized feedback, general-purpose wearable haptic devices are a more extensible and scalable option.

Wearable haptics have previously been divided into three categories: gloves, thimbles, and exoskeletons (Caeiro-Rodríguez et al. 2021; Perret and Vander Poorten 2018). Gloves cover the majority of the hand, with actuators or motors sewn into or mounted on the fabric of the glove substrate to provide feedback to the wearer. Thimbles clamp onto the user’s fingertips, typically the thumb, index, and middle fingers, with the goal of providing tactile feedback to each finger during grasping activities or other interactions. Exoskeletons are external structures running parallel to the user’s bones (in this case, along the user’s fingers), with connections to the individual phalanges of the fingers. While these approaches are mechanically different, they share the same goals and design limitations. The materials need to be lightweight and flexible so as not to impede a user’s movement, but durable enough not to deform when users move, and resistant to permanent deformation over time. The electronics need to be accurate and resistant to drift, while remaining compact enough to avoid collisions during use. Lastly, wearable haptics must be customized for different sizes and shapes to suit a variety of users’ hands, often requiring customized parts and personalized calibration, which elevates the cost and complexity of the device.

2.4. Embouchure Detection

Embouchure describes the positioning of a user’s lips, tongue, teeth, and other relevant facial muscles when playing a breath-controlled instrument (Woldendorp et al. 2016). Analyzing a player’s embouchure is useful both in helping correct their playing, and as an independent performance medium, and has been approached in several ways. Facial feature recognition has been used as a MIDI controller, such as measuring mouth position with a camera (Lyons et al. 2017), or using ultrasound to track tongue position (Vogt et al. 2002). These methods have been used to track large-scale facial movements unrelated to a particular instrument, and the micro-scale differences in the embouchure of wind or brass instruments would be difficult to consistently measure using these techniques.

Depending on the instrument, forming an embouchure may require specific interactions with the instrument itself. For example, the embouchure of a trumpet player is formed by pressing the lips against the mouthpiece of the trumpet with a specific pressure (Woldendorp et al. 2016). Forming an embouchure without the resistance of a physical mouthpiece present may be difficult or impossible, since part of setting the lips in the proper orientation involves pressing them against the instrument itself.

An alternative method is to alter an existing mouthpiece, to create a specialized mouthpiece for the instrument, or to create a new physical device that simulates a mouthpiece. Some designs have made use of air flow to estimate embouchure, by attaching two stagnation probes to a flute mouthpiece (Silva et al. 2005), or by creating a generalized reed model to simulate a variety of sounds and haptic effects (Smyth et al. 2006). Some instruments create sound primarily through vibrations, and may not be suitably measured with air flow alone. In these cases, embedding a pressure sensor in a trumpet or cornet mouthpiece may be effective (Großhauser et al. 2015), allowing real time detection of the user’s lips.

2.5. Pitch Detection

As an alternative to embouchure detection, a player may be asked to hum or sing a note, allowing the system to detect the intended note relative to other control information such as fingers selecting a valve combination. This interaction process is intuitive and simple to implement, but lacks verisimilitude against the intended simulation. One does not hum into a trumpet mouthpiece, and the length of the trumpet tube combined with features of the embouchure is what establishes the note, not the sung pitch of the player. Even given these limitations, however, the possibilities of pitch as an input method should not be rejected. Singing or humming notes is a technique already used in musical instrument practice (Steenstrup et al. 2021), and voice input would more accurately represent mouthpiece input compared to alternatives such as register selection with the player’s hands.

To measure an incoming pitch, a sound’s frequency is read through a microphone and given a human-readable value, such as a letter from A to G or a numeric MIDI value from 0 to 127. Although it is possible with training for a human to identify errors in pitch and adjust their play or tune their instruments themselves, casual musicians, such as karaoke singers, rely on pitch detection tools (Eldridge et al. 2010). Different pitch detection algorithms present tradeoffs between accuracy, cost, complexity, and latency (Drugman et al. 2018). The Robust Algorithm for Pitch Tracking (RAPT) is useful for musical applications as it provides near-instantaneous feedback through a candidate generating function, performs well in noisy conditions, and is well known and tested (Azarov et al. 2012).
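Once a pitch detector has produced a frequency estimate, converting it into a MIDI value or note name is a short calculation. The sketch below is a minimal illustration of that final step only, not of RAPT or any other detection algorithm. It assumes twelve-tone equal temperament with A4 tuned to 440 Hz (MIDI note 69) and the common convention that MIDI note 60 is named C4; the function names are illustrative.

```python
import math

NOTE_NAMES = ["C", "C#", "D", "D#", "E", "F", "F#", "G", "G#", "A", "A#", "B"]


def frequency_to_midi(freq_hz, a4_hz=440.0):
    """Return (nearest MIDI note number, deviation in cents).

    Assumes equal temperament with A4 = a4_hz mapped to MIDI note 69.
    The result is only a valid MIDI note if it falls within 0..127."""
    if freq_hz <= 0:
        raise ValueError("frequency must be positive")
    exact = 69 + 12 * math.log2(freq_hz / a4_hz)
    nearest = round(exact)
    cents = (exact - nearest) * 100
    return nearest, cents


def midi_to_name(note):
    """Name a MIDI note using the convention that note 60 is C4."""
    octave = note // 12 - 1
    return f"{NOTE_NAMES[note % 12]}{octave}"
```

The cents value indicates how far the detected pitch is from the nearest note, which is the kind of information a tuning aid would display to a player.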

While instruments have a typical range of frequencies depending on their construction, such as a trumpet playing between 164.8 and 932.3 Hz, human voices have a voice frequency band of 300 to 3400 Hz (Hwang et al. 2017), with a person’s comfortable range varying by age, gender, and ethnicity. This uncertain range, combined with the difficulty of holding a tone without practice, makes it difficult to capture and relate human output to an instrument in an inclusive manner. One solution is to capture the human pitch, then process and convert it to the desired range, but this will suffer from inherent delay (Azarov et al. 2012), and users may find the discrepancy between the real and virtual audio unsettling (Ppali et al. 2022).
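One simple way to convert a captured pitch to the desired range is to transpose it by whole octaves, which preserves the note name while moving it into the instrument's range. The Python sketch below illustrates this idea using the 164.8 to 932.3 Hz trumpet range given above as default bounds. It is an assumed approach for illustration, not the method used in this work, and it ignores the processing delay discussed in the text.

```python
def fold_into_range(freq_hz, low_hz=164.8, high_hz=932.3):
    """Transpose freq_hz by whole octaves until it lies in [low_hz, high_hz].

    Doubling or halving a frequency moves it by one octave, so the note
    name is preserved. The range must span at least one octave, otherwise
    some pitches could never land inside it."""
    if freq_hz <= 0:
        raise ValueError("frequency must be positive")
    if high_hz < 2 * low_hz:
        raise ValueError("target range must span at least one octave")
    while freq_hz < low_hz:
        freq_hz *= 2.0
    while freq_hz > high_hz:
        freq_hz /= 2.0
    return freq_hz
```

For example, a 100 Hz hum would be raised one octave to 200 Hz, while a 2000 Hz whistle would be lowered two octaves to 500 Hz.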

Since VR headsets contain a microphone and speakers in close proximity, it is necessary for manufacturers to implement noise cancelling to prevent an audio feedback loop (Nakagawa et al. 2015). Currently, the Quest 2 headset does not offer options to adjust or disable noise filtering. This imposes an extra barrier to pitch tracking in VR, as humming or playing an instrument may be filtered, affecting the read pitch, or disregarded outright as background noise.

2.6. Instrument Simulation

While electronic instrument prototypes have existed since the design of the Theremin in 1920, digital instruments became popular with the development of the Musical Instrument Digital Interface (MIDI) during the 1980s. MIDI files contain instructions on how a piece should be played, such as the instrument, note on or off, a numerical value between 0 and 127 for the pitch, and a velocity for the volume (Ghatas et al. 2022). MIDI wind controllers, such as the Akai Electric Wind Instrument (EWI)6 or Yamaha WX7, use breath sensors that detect the speed of the air passing through the mouthpiece, generating a “note on” signal, as well as a note velocity, which then combines with the user’s finger pattern to create a numeric value used to generate a tone via a MIDI library (Rideout 2007). Alternatively, some digital instruments, such as Yamaha’s EZ-TP8, measure the incoming pitch from a user singing or humming, and simply play the corresponding note using the selected MIDI instrument. Digital instruments allow for a variety of customization and effects, but often behave differently from acoustic instruments: for example, the Akai EWI’s capacitive sensors prevent users from resting their fingers, and some instruments require the user to select a musical register via buttons or rollers. They are also restricted to the physical shape and feeling of their design.
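The way a wind controller combines breath and fingering into a MIDI event can be sketched as follows. This Python example is a simplified illustration and does not describe the firmware of any of the instruments named above. The three-byte note-on layout (status byte `0x90` plus channel, then note and velocity, each 0 to 127) follows the MIDI 1.0 message format. The register table of open written pitches, the normalized breath value, and all function names are assumptions made for this sketch; the valve offsets reflect standard trumpet fingering, where the first, second, and third valves lower the pitch by two, one, and three semitones respectively.

```python
# Semitones by which each trumpet valve lowers the pitch.
VALVE_DROP = {1: 2, 2: 1, 3: 3}

# Assumed register table: written pitches of the open (no valves) notes
# C4, G4, C5, E5, G5, C6 as MIDI note numbers. Hypothetical mapping.
OPEN_NOTES = (60, 67, 72, 76, 79, 84)


def trumpet_note(register, valves_pressed):
    """Combine a register index with the pressed valves into a MIDI note."""
    drop = sum(VALVE_DROP[v] for v in set(valves_pressed))
    return OPEN_NOTES[register] - drop


def breath_to_velocity(pressure):
    """Map a normalized breath reading (0.0 to 1.0, assumed) to 0..127."""
    pressure = min(max(pressure, 0.0), 1.0)
    return round(pressure * 127)


def note_on(note, velocity, channel=0):
    """Build a raw three-byte MIDI note-on message."""
    if not (0 <= note <= 127 and 0 <= velocity <= 127 and 0 <= channel <= 15):
        raise ValueError("note and velocity must be 0..127, channel 0..15")
    return bytes([0x90 | channel, note, velocity])


def note_off(note, channel=0):
    """Build a raw three-byte MIDI note-off message with zero velocity."""
    if not (0 <= note <= 127 and 0 <= channel <= 15):
        raise ValueError("note must be 0..127, channel 0..15")
    return bytes([0x80 | channel, note, 0])
```

For instance, `note_on(trumpet_note(1, [1, 2]), breath_to_velocity(0.8))` would produce a note-on for written E4, the note fingered with the first and second valves in the second register.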

Conceptually, the easiest instruments to simulate in XR are pitched percussion, such as a marimba or xylophone, since they can be implemented by simply attaching mallets to the user’s hand models and triggering a note when a mallet collides with a tile, and they are well-suited for controllers, which track well and can vibrate for haptic feedback. In practice, accurate collision detection is difficult, resulting in incorrect or multiple notes, and the mallet passing through what appears to be a solid object can be unsettling for players (Karjalainen and Maki-Patola 2004). While sensor-based tracking allows a wider variety of instruments by removing the need for controllers, it amplifies the problems of collision detection, due to the difficulty in accurately tracking small finger movements and the lack of haptic feedback to inform the user’s actions. One solution is to create abstract interfaces and remove the need for accurate instrument representation, such as controlling music with body movements or dancing (Bundasak and Prachyapattanapong 2019). Although these new music interfaces can be engaging and effective, they are not always useful for training simulations, because such interactions do not translate directly to learning traditional instruments.

2.6.1. Pianos Three Ways

Piano keyboards have become a popular target for XR simulation, because they employ simple collision interactions between the fingers and the piano keys, and use a one-to-one ratio between a given user interaction and an expected note.

There have been three general approaches to improving tracking and haptic feedback for XR pianos. The first is a subset of XR called augmented virtuality, which introduces real-world items into the simulation and aligns them with virtual models of the same object, combining real and virtual interactions in one interface. Researchers demonstrated the potential of this method by overlaying a virtual piano keyboard interface on top of a modified physical MIDI keyboard (Desnoyers-Stewart et al. 2018), which were both connected through the Unity game engine. Due to reflection issues with the optical tracking method used, the keys of the MIDI keyboard were modified by adding matte black tape. Despite participants reporting problems with alignment, and some participants identifying themselves as piano experts, each of eleven participants in this study agreed that the augmented keyboard was more enjoyable than a standard piano.

The second method is force-feedback, where pressure is applied to the user’s fingers to replicate the resistance of interacting with the instrument. Force-feedback hardware is often complex and awkward, requiring external power connections as well as articulated activators or mechatronics, making it particularly challenging for an XR scenario where the user should be able to move freely (Perret and Vander Poorten 2018). Force-feedback haptic gloves for VR are becoming more common, but are still not generally available, making this method less popular with musical instrument simulation due to the bulkiness and lack of availability; however, adaptive resistance has been used, for example, in the Virtual Piano Trainer (Adamovich et al. 2009), which combined a CyberGrasp force-feedback glove and a piano simulation to treat stroke patients with impaired finger functionality.

The third method is tactile feedback. While force-feedback simulates the pressure or motion of the fingers, tactile feedback simulates the sense of touch through vibration, temperature, or variations in pressure. While this is typically done through the use of actuators or other devices embedded in a glove or thimble (Caeiro-Rodríguez et al. 2021; Perret and Vander Poorten 2018), a mid-air piano interface has been developed (Hwang et al. 2017) which provided tactile feedback through ultrasonic vibrations, theoretically providing increased clarity and satisfaction compared to having no such feedback.

2.6.2. Challenges of Wind Instruments

Simulating wind instruments in XR, such as brass or woodwinds, poses additional design constraints due to the importance of the mouthpiece and mouth interactions with the instrument (Woldendorp et al. 2016). Interactions at the mouth determine when a note should sound or not, as well as the volume, pitch, and timbre. Simulating a wind instrument therefore requires some form of apparatus at the mouth, or an alternative method for the user to communicate these features to the simulation system.

Wind instruments typically make use of keys or valves activated by the player’s fingers to change the length of the tube, which determines the note being played. Vibrations caused by interactions with the mouthpiece set up resonances in the tube, creating the note, but because tubes resonate at different frequencies depending on the energy of the vibration, a single pattern of finger interactions can activate several different notes (Woldendorp et al. 2016). The set of notes available with a certain resonance energy is referred to as a register within the range of an instrument (McAdams et al. 2017), and each register contains a set of unique key or valve selections corresponding to a range of notes. A trumpet, for example, with three valves, has a total of 8 possible valve combinations in each register, but in practice, most registers contain fewer than 8 notes; in the extreme case, a bugle is a brass horn with no valves, and all note selection is done by altering the mouthpiece interactions to activate one of the available resonance frequencies of the horn.
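One contributing reason a register can hold fewer than 8 notes can be sketched by enumerating the valve combinations. The sketch below assumes the standard trumpet valve tuning, in which valve 1 lowers the pitch by two semitones, valve 2 by one, and valve 3 by three; the names are illustrative and not taken from any implementation described in this paper.

```python
from itertools import product

# Semitones by which each valve lowers the pitch (assumed standard tuning):
# valve 1 = whole step, valve 2 = half step, valve 3 = minor third.
VALVE_SEMITONES = (2, 1, 3)


def semitones_lowered(valves):
    """Total pitch drop in semitones for a (v1, v2, v3) tuple of booleans."""
    return sum(s for pressed, s in zip(valves, VALVE_SEMITONES) if pressed)


# All 2^3 = 8 valve combinations, grouped by the pitch drop they produce.
combos = list(product((False, True), repeat=3))
by_offset = {}
for combo in combos:
    by_offset.setdefault(semitones_lowered(combo), []).append(combo)

if __name__ == "__main__":
    for offset in sorted(by_offset):
        print(offset, by_offset[offset])
```

Under this assumption the 8 combinations yield only 7 distinct pitch drops (0 to 6 semitones), because valves 1 and 2 together lower the pitch by the same amount as valve 3 alone.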

Tracking the motion of the fingers to select a register is made more difficult with wind instruments because the fingers are in contact with the instrument while playing, so the instrument itself is likely to occlude them. Camera-based methods will also struggle to track the hand’s position if it is outside the tracking window (Parger et al. 2022), such as the far hand on a flute. Finger pose can also affect tracking, such as when the fingers are close together in a line, or when one finger blocks the others from view. Finger motions on keys or valves are small and subtle, and can suffer from latency inherent to virtual systems (Karjalainen and Maki-Patola 2004; Ppali et al. 2022).

While there has been some work in creating specialized controllers for mouthpieces, such as the virtual reed mouthpiece (Smyth et al. 2006), equipment to simulate the feeling of playing an instrument, such as vibrations or resistance, while also providing the tracking accuracy and degrees of freedom needed for finger patterns, does not exist on a consumer level.

3. Materials and Methods

For this work, we created a simulation environment to provide notes and finger prompts dynamically generated from a MIDI file. We tailored these prompts for either the standard finger pattern of an acoustic trumpet or the modified finger pattern of the Morrison Digital Trumpet. We then attempted to combine current hand tracking best practices outlined by Meta with the register selection methods of existing MIDI instruments. Specifically, we attempted to recreate the sequential button selection of the Morrison Digital Trumpet, the continuous selection of the Akai Electric Wind Instrument, and the pitch detection of the Yamaha EZ-TP. For valve selection, we designed two interfaces: a simple collision detection model and a pose recognition model based on saved poses of the virtual hand. Lastly, we assembled a rapid prototype of version #4 of the Lucidglove. We used our virtual environment to internally evaluate each trumpet model based on ease of use, familiarity, playability, noise, versatility, and other user experience factors we observed.

Due to the COVID-19 pandemic and the nature of sharing breath-based instruments, we were not able to conduct a formal user study of these implementations. Instead, we engaged in a theoretical feature-based analysis of the different implementation models, considering a set of factors selected as likely to have high impact on which implementation model is better for a given set of circumstances. These models are based on our assessment against theoretical and analytical factors, as well as our personal experiences with the methods and objective analysis of the simulations. We plan to perform a user study in the future, with novice and expert trumpet players.

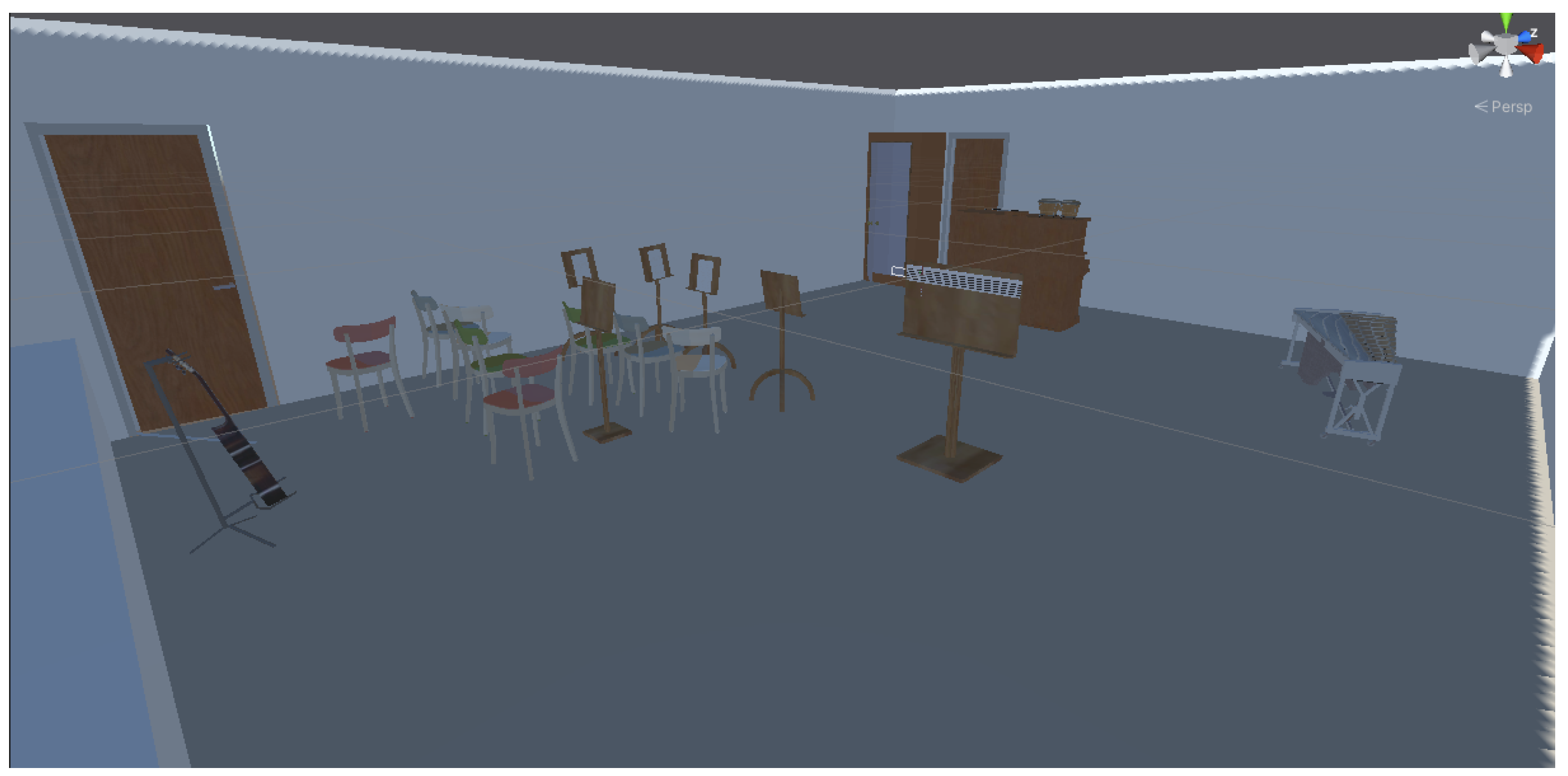

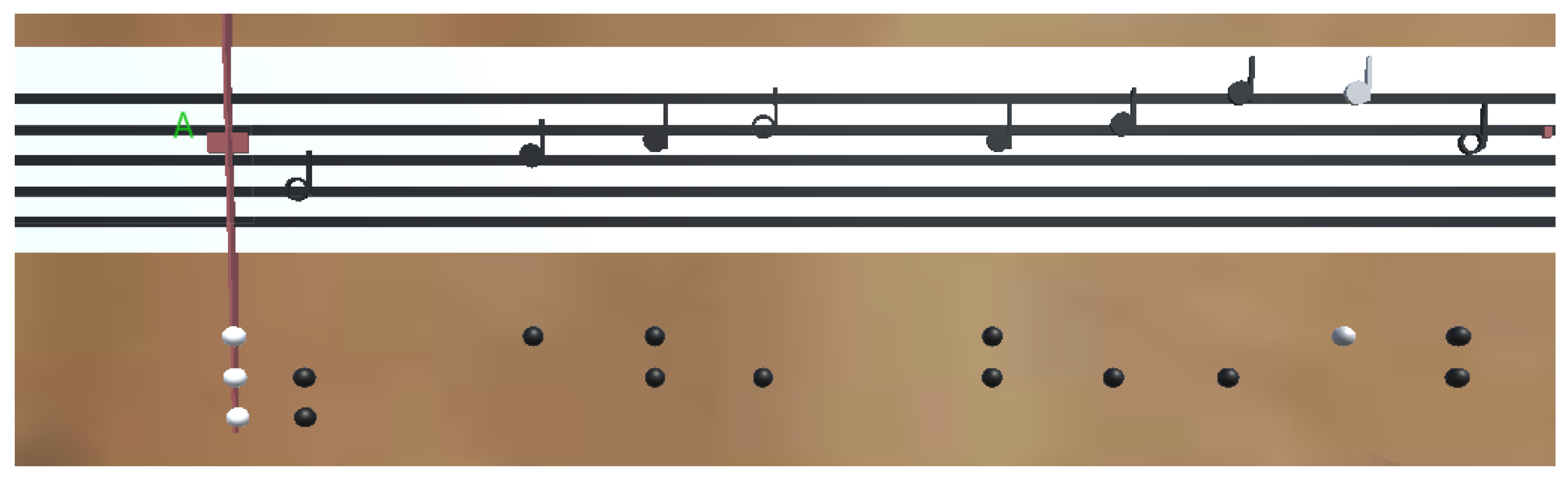

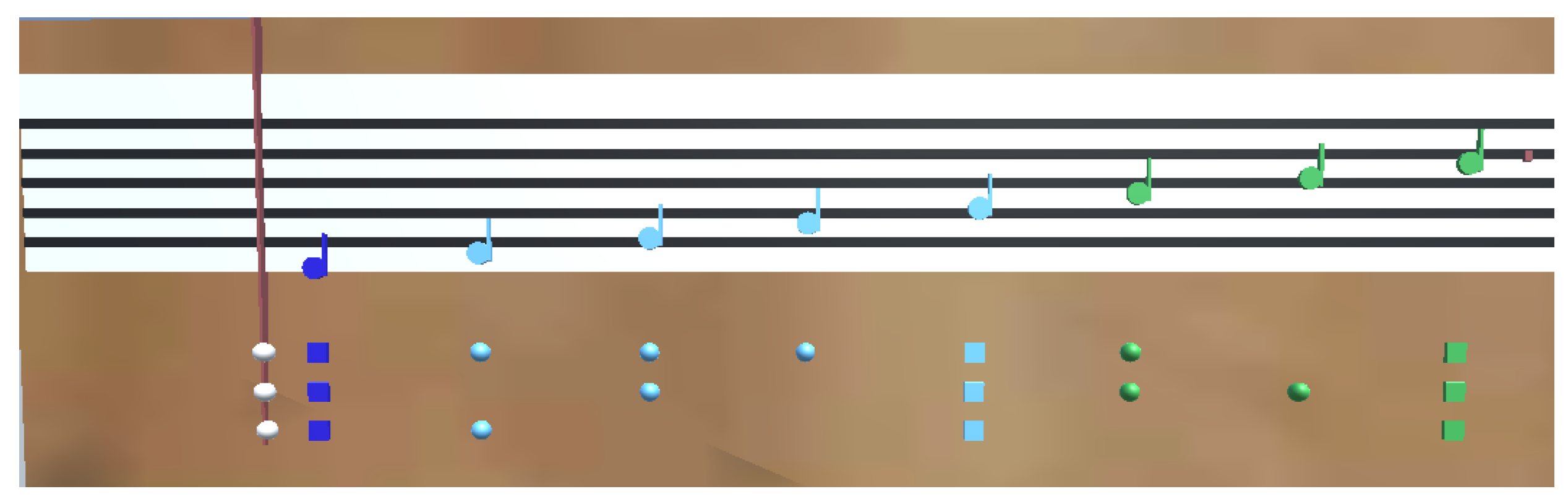

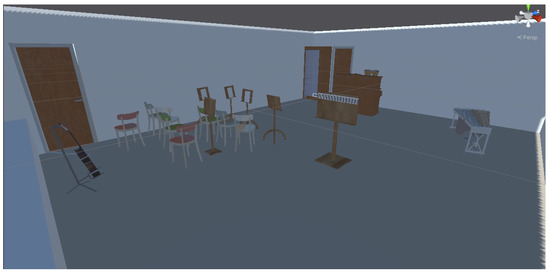

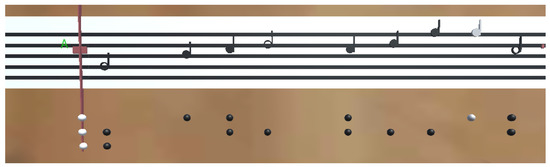

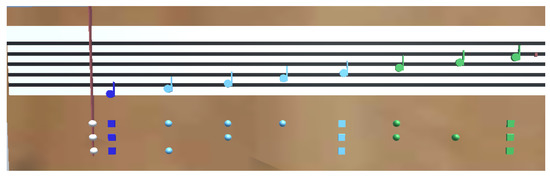

To create our environment, we used Unity 2020.3.12f1 and a music classroom base model uploaded to 3DWarehouse by Cara E.9, which included several music stands and other props. We arranged these props to simulate the feeling of finding time alone at school to practice, with no additional pressure from an audience. We placed a music stand in the center to display the musical prompts, and enlarged it to improve visibility and give the user time to process and react to prompts, as shown in Figure 3. We synchronized two Maestro Midi Player Toolkits, which are free on the Asset Store10: one generates notes based on the integer note values from one selected track of a MIDI file made with MuseScore, and the other plays the song on a delay. The models representing quarter, half, and other notes on the staff were made in Paint3D. For the finger patterns, we used flattened spheres to indicate which valves to press, or three squares if no valves should be pressed. For the acoustic trumpet, the notes are coloured white for naturals and black for sharps and flats, as shown in Figure 4, while the digital and virtual trumpets use a colour theme of dark blue, light blue, green, orange, and red to show the user which register to select, as seen in Figure 5. Incoming pitch from a physical instrument or from the user (to select registers) is handled by Pitch Detector11, which makes use of the RAPT algorithm.
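The white and black colouring for the acoustic trumpet prompts can be derived directly from the integer note values. The sketch below is a language-agnostic illustration in Python rather than code from our Unity project; it assumes the MIDI note is treated as the written pitch, and the function name is hypothetical.

```python
# Pitch classes (note number modulo 12) that fall on sharps or flats:
# C#, D#, F#, G#, A#. Pitch class 0 is C.
ACCIDENTAL_PITCH_CLASSES = {1, 3, 6, 8, 10}


def note_colour(midi_note: int) -> str:
    """Return 'black' for sharps and flats, 'white' for natural notes."""
    if midi_note % 12 in ACCIDENTAL_PITCH_CLASSES:
        return "black"
    return "white"


if __name__ == "__main__":
    for note in (60, 61, 62, 70, 71):
        print(note, note_colour(note))
```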

Figure 3. Virtual classroom environment for virtual trumpet testing.

Figure 4. Example of the white and black notes and finger patterns produced for the acoustic trumpet.

Figure 5. Example of the Musical Instrument Digital Interface (MIDI) trumpet notes and finger patterns, colourized to represent the register selection required.

For VR hardware, we chose to use a Meta Quest 2, since it is affordable and available on a consumer level, and provides built-in hand tracking. For physical trumpets, we used our own standard acoustic trumpets, and a Morrison Digital Trumpet using a Garritan sound library, which, in addition to using breath and valves, requires the user to select the register using buttons on the side, as shown in Figure 6.

Figure 6. The button interface used to select registers on the Morrison Digital Trumpet.

For the virtual trumpets, we tested three methods for register selection:

- Voice activation, which reads the incoming pitch from the user, and rounds it to the nearest value available with the current valve state. Since we found that singing or humming into the microphone was physically straining and socially uncomfortable, we used a kazoo for pitch detection. The kazoo produces a buzz at the lips that recalls a trumpet mouthpiece, improving immersion in the simulation. We chose a kazoo because it is cheap and easy to acquire, can be tongued like a normal instrument, and vibrates to provide mouth haptics.

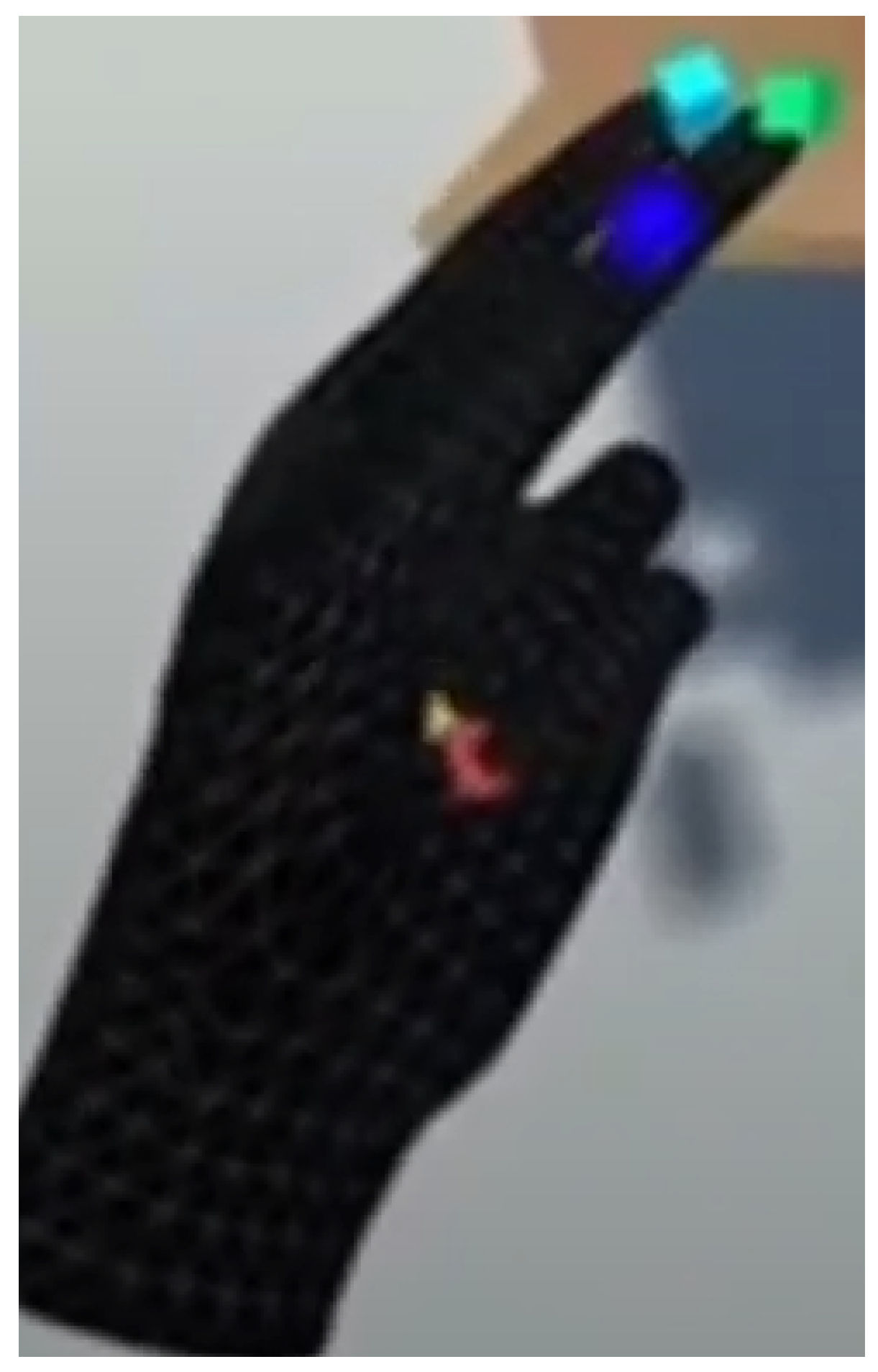

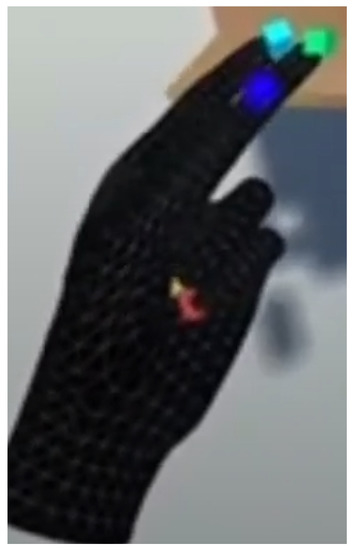

- Discrete selection of the register with the left hand, as a method to replicate the button presses on the MIDI trumpet. We chose the counting up metaphor as it intuitively represents increasing the register by one step, is easy to track, and can be performed quickly. We attached cubes to the left hand’s fingertips using the bone IDs provided by the OVRHand library, with a trigger zone above the hand that changes the register based on which fingertips are in the collision zone. Note on/off is determined by whether the thumb cube is triggered. Each cube is coloured to match the register it plays, based on the MIDI finger patterns, with a dark shade for off and a lighter shade for on, as shown in Figure 7.

Figure 7. Discrete register selection and note activation via coloured cubes on the left hand.

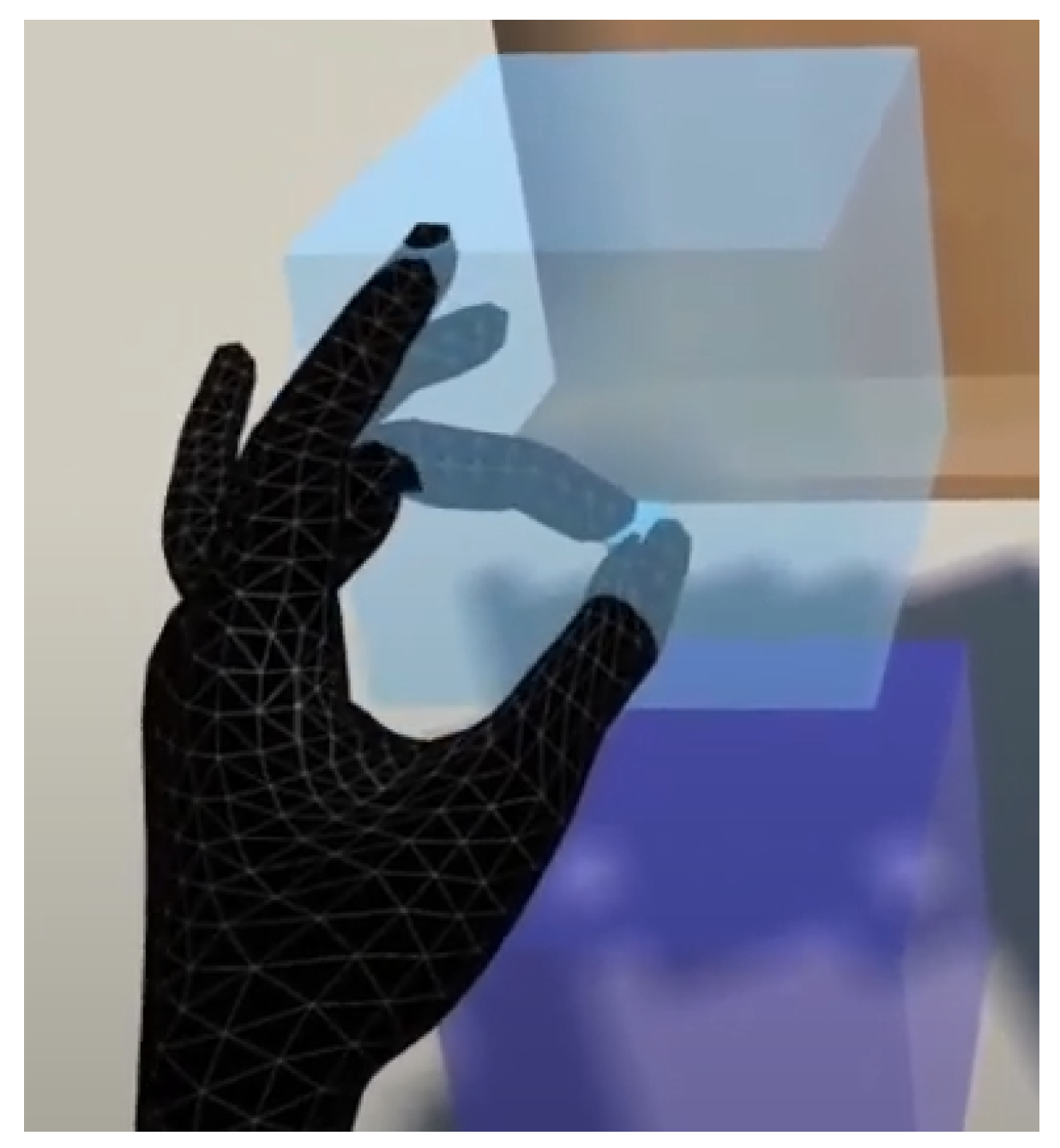

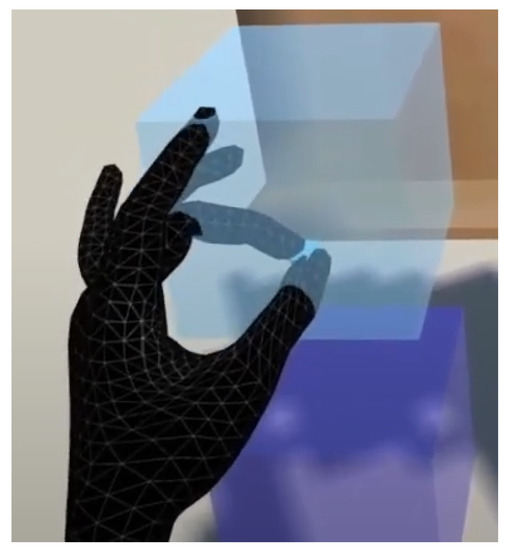

- Continuous selection of the register with the left hand, via a virtual “slider” activated by pinching coloured boxes with the thumb and middle finger, designed to replicate the thumb slider on digital instruments such as the Akai EWI. We chose to pinch with the middle finger, which is a built-in function in the OVRHand library, since pinching with the index finger is a reserved system-level gesture on the Meta Quest headset, which opens the Oculus menu. To communicate this requirement, we added a coloured box to the middle finger that changes colour based on the active register. The boxes also match the colouring of the MIDI finger pattern, providing matching hints to the user. Figure 8 shows an example activation of the trumpet.

Figure 8. Continuous register selection and note activation via pinching the virtual slider indicator.
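The rounding step used by the voice-activated method can be sketched as follows. This is an illustration under stated assumptions, not our Unity implementation: the table of open partials is a simplified, hypothetical set of written-pitch MIDI numbers (C4, G4, C5, E5, G5, C6), transposition of the B-flat trumpet is ignored, and the valve tuning of 2, 1, and 3 semitones is the standard one.

```python
import math

# Hypothetical written-pitch MIDI numbers for the open (no valves) partials.
OPEN_PARTIALS = (60, 67, 72, 76, 79, 84)
# Semitones lowered by valves 1, 2 and 3 (assumed standard tuning).
VALVE_SEMITONES = (2, 1, 3)


def available_notes(valves):
    """MIDI notes reachable with the given (v1, v2, v3) valve state."""
    drop = sum(s for pressed, s in zip(valves, VALVE_SEMITONES) if pressed)
    return [partial - drop for partial in OPEN_PARTIALS]


def snap_to_valve_state(freq_hz, valves):
    """Round a detected pitch to the nearest note playable with these valves."""
    detected = 69 + 12 * math.log2(freq_hz / 440.0)  # fractional MIDI number
    return min(available_notes(valves), key=lambda note: abs(note - detected))


if __name__ == "__main__":
    # A detected 440 Hz (MIDI 69) snaps to different notes per valve state.
    print(snap_to_valve_state(440.0, (False, False, False)))
    print(snap_to_valve_state(440.0, (True, True, False)))
```

Snapping in the logarithmic (semitone) domain rather than in Hz keeps the rounding symmetric across the range, which matters because a user's hummed or kazooed pitch is rarely exact.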


For virtual valve activation, we used two methods to determine the tracked finger positions:

- Correlating the local positions of all the bones in the virtual hand and using a nearest neighbour algorithm to classify the valve state based on known gesture templates.

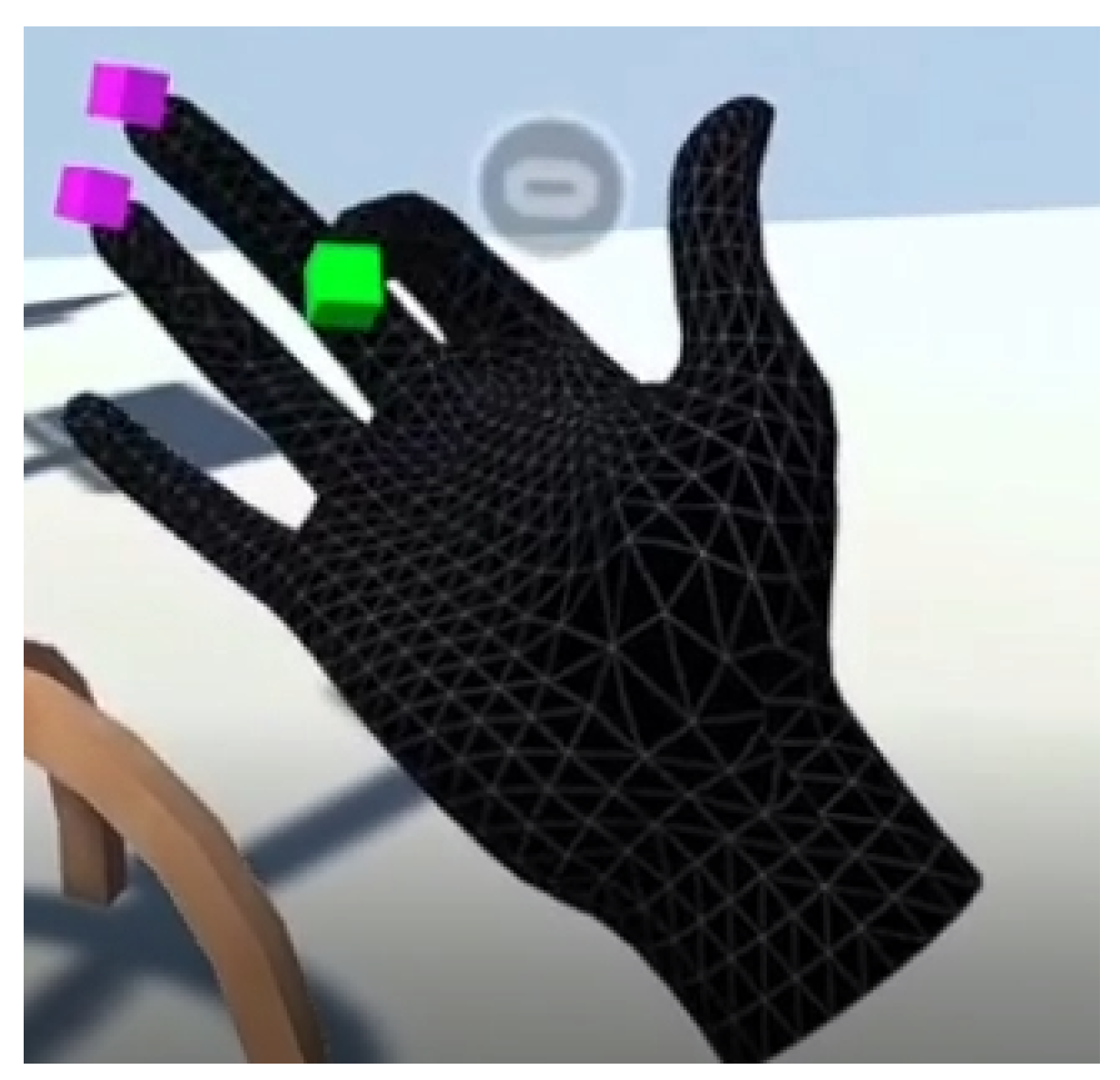

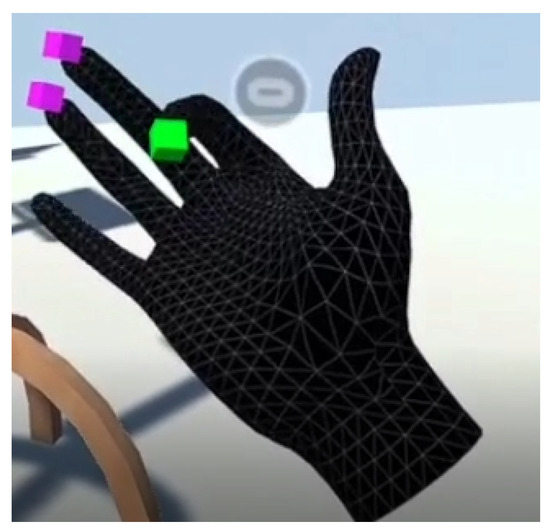

- Measuring the location of the fingertips relative to the palm, for the index, middle, and ring fingers. While our first prototype attempted to use a pinching interface, we found it difficult to reliably track all three fingers pinching simultaneously. Pinching also risked opening the Oculus system menu, since the index finger is frequently required in normal trumpet play. Since real valves are pressed down, counting up as described previously would be counter-intuitive. Thus, we implemented curling the fingertips into the palm, as it provides a similar motion to pinching, and haptic feedback from the fingertip and palm colliding. We indicated the fingertip and palm locations with coloured cubes on the virtual hand’s fingertips, which change colour between green and magenta to represent on or off, as shown in Figure 9.

Figure 9. Valve selection using coloured cubes on the right hand.
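The fingertip-to-palm method can be approximated with a simple distance test. In the sketch below a distance threshold stands in for the collider overlap used in the simulation; the threshold value, the coordinate units, and the function name are all hypothetical and would need tuning per user.

```python
import math

# Hypothetical press threshold in metres; stands in for a trigger collider.
PRESS_DISTANCE_M = 0.04


def valve_state(palm, fingertips, threshold=PRESS_DISTANCE_M):
    """Map index, middle and ring fingertip positions to three valve flags.

    palm and each fingertip are (x, y, z) positions in the same local frame.
    A valve counts as pressed when its fingertip is close enough to the palm.
    """
    return tuple(math.dist(palm, tip) < threshold for tip in fingertips)


if __name__ == "__main__":
    palm = (0.0, 0.0, 0.0)
    tips = [(0.02, 0.0, 0.0), (0.10, 0.0, 0.0), (0.0, 0.03, 0.0)]
    print(valve_state(palm, tips))  # index and ring curled, middle extended
```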


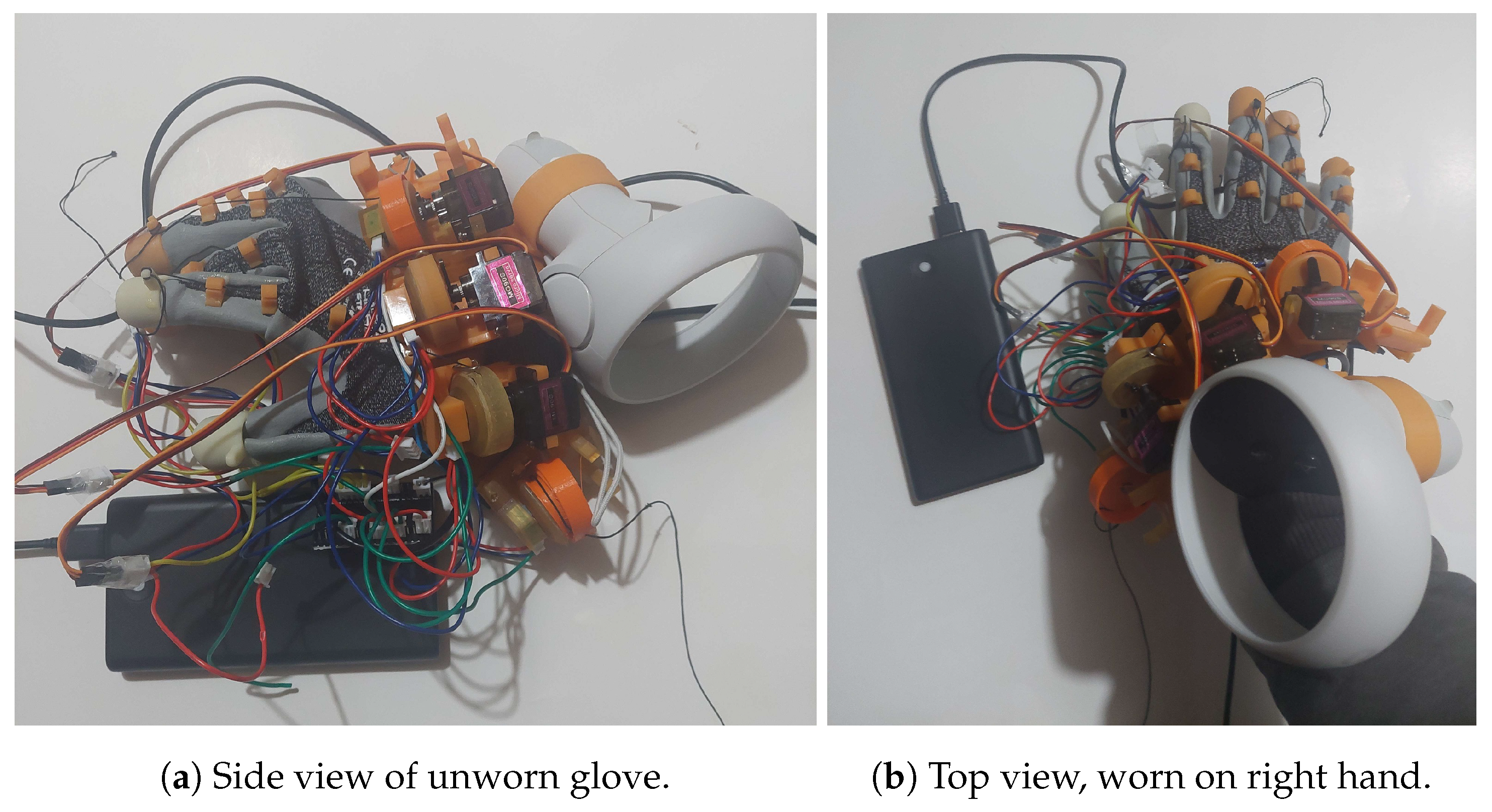

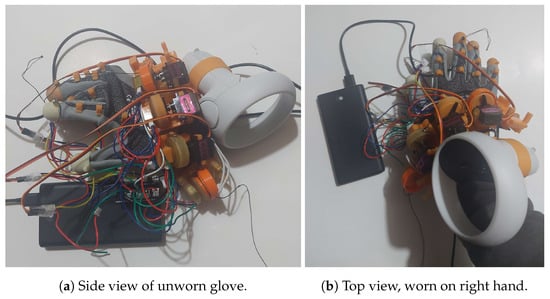

Lastly, we constructed a prototype Lucidglove, based on version #4 of an open-source force-feedback haptic glove project (LucasVRTech 2023). The Lucidglove measures hand position by mounting a VR controller on the wrist, and measures finger flexion using potentiometers mounted to the hand, which are pulled by strings attached to finger caps. Haptic feedback is provided by DC motors powered by a USB battery bank, which restrict the motion of the strings and thereby hold the user’s fingers in position when engaged. The result is that users feel like they are pushing against a physical object, when in fact their finger is being pulled backward by the string. The sensors and actuators on the glove are controlled by an Arduino board, which communicates with the virtual reality simulation through drivers via the SteamVR framework. STL files, firmware, and instructions for building Lucidgloves can be found on the Lucidglove GitHub12.

The glove was used to measure the position of the three fingers for playing trumpet: index, middle, and ring fingers on the right hand. The haptic motor interactions were set up to approximate a trumpet valve press. For valve selection, we used the same collision model as the camera-based trumpets. Since this method was constructed to compare against camera-tracking methods, camera tracking was not used to select a register or to activate notes. Instead, to play notes, we used the joystick on the left controller to select a register, and the left grip button to start and stop each note. Figure 10 shows our Lucidglove prototype.

Figure 10. Prototype Lucidglove with potentiometers and haptic motors connected to Arduino board. Note the Virtual Reality (VR) controller connected to the glove, used for location tracking.
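The relationship between a potentiometer reading and a valve press can be sketched as a normalisation followed by a threshold. This is not the Lucidglove firmware or driver API; the calibration values, the 12-bit reading range in the example, and the press threshold are hypothetical.

```python
def finger_to_valve(raw, raw_open, raw_closed, press_at=0.6):
    """Normalise a potentiometer reading and decide whether the valve is down.

    raw_open and raw_closed are calibration readings for a fully extended and
    a fully curled finger. Returns (flexion in 0..1, pressed flag). The pressed
    flag is also the point at which force feedback would be engaged.
    """
    flexion = (raw - raw_open) / (raw_closed - raw_open)
    flexion = max(0.0, min(1.0, flexion))  # clamp readings outside calibration
    return flexion, flexion >= press_at


if __name__ == "__main__":
    for reading in (0, 2048, 3000, 4095):
        print(reading, finger_to_valve(reading, raw_open=0, raw_closed=4095))
```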

The source code for note generation and virtual trumpet prototypes is available on the Brass Haptics GitHub.13

4. Results

After constructing and experiencing each of the simulation scenarios, we evaluated them based on comparisons of each of the trumpet interfaces against the following features:

- Ease of use: how frustrating is it to play the instrument?

- Familiarity: how much does it feel like playing a real trumpet?

- Playability: can the user comfortably play for extended sessions?

- Noise: could the user play in a household environment without disrupting family or neighbours?

- Versatility: can the instrument be used to simulate other real or imagined instruments?

4.1. Acoustic Trumpet

The acoustic trumpet was used as a baseline experience to test the environment and to compare other implementations. While new players will struggle with finding the correct embouchure and with facial strain, especially at high notes, ease of use and playability increase with experience. Although noise could be mitigated with a mute, a new player with no equipment will be too loud for crowded living environments. The volume of the trumpet also makes it difficult to process the audio through the headset microphone and add effects in the virtual environment, as the user will struggle to hear the digital output over the sound of the acoustic trumpet. The physical shape and operation of the trumpet also prevent simulation of other instruments. While a physical trumpet is the baseline comparator for simulation of trumpet practice, virtual reality cannot alter the physical properties or limitations of the instrument, and so playing the physical trumpet in virtual reality adds some limitations.

4.2. Morrison Digital Trumpet

This MIDI trumpet uses the same mouthpiece and valves as an acoustic trumpet, allowing it to approximate the shape and feeling of the instrument. Unlike acoustic instruments however, the mouthpiece measures whether air is passing through, not the vibration of the user’s embouchure. Combined with the fact that the mouthpiece is not connected to a resonant tube, normal embouchure is difficult with a MIDI trumpet like this, and, based on our experiences playing the instrument, the user will likely just blow through the instrument, reducing the opportunity for practicing tonguing or buzzing their lips. The MIDI trumpet also uses buttons on the side to select the register, which allows players to access a wider range of notes without strain, but it takes time to learn the skill of selecting the proper register, and we found it difficult to rapidly change registers in complex songs. The registers also use non-standard finger patterns for some notes that do not match the physical trumpet14. The MIDI trumpet is a functional replacement for an experienced trumpet player experimenting with new interfaces, but is not an effective training tool for a novice player.

While the instrument is restricted by its physical structure, the MIDI trumpet is also able to provide additional buttons and features to change the output, and the audio can be further processed to change instruments or add acoustic effects through software. Thus, the MIDI trumpet acts as a middle ground of physical familiarity and digital creativity, with the difficulty transferred from the mouthpiece to register selection with the fingers.

4.3. Virtual Trumpets

With the removal of the physical instrument, the virtual trumpets lack familiarity and feedback, with the only similarity being the three-valve input. Similar to the MIDI trumpet, the difficulty of playing manifests primarily in the method of register selection. While virtual methods are comfortable to play for long periods, the lack of haptic feedback and errors in camera tracking make it difficult to detect and correct mistakes, which may frustrate the user. Since audio is generated within the VR program, the noise and audio can be adapted to provide a variety of creative effects. Note selection with camera tracking is well suited to brass instruments, such as the positioning of a trombone slide or finger curls for valves, but will struggle to accurately measure rapid small finger movements needed for a clarinet or flute.

An additional challenge with instruments other than trumpets is finger splay. Trumpets do not need to measure finger splay, since each finger interacts with a single valve, and does not need to switch between different actuation points. On a clarinet or flute, an individual finger may need to be able to press 3 or 4 different keys depending on the note being played, and some keys are pressed with the side of the finger. This would necessitate measuring the lateral (back-and-forth) movement of the finger (i.e., the splay), in addition to detecting if a key is being pressed. Camera detection techniques will be challenging because it is likely one or both hands are out of frame, and most current haptic gloves do not detect finger splay, instead only measuring how much a finger is contracted or extended.

4.3.1. Register Selection

When interacting with one of the virtual trumpet implementations, without the use of some kind of lip controller, note on/off events and note register selection are accomplished either using the hand models discussed earlier (and below) or using a voice-activated pitch recognition model, requiring the user to hum or sing to select a note. A simple modification of this method which adds lip haptics (improving fidelity and immersion) is to have the user select the note using a kazoo. While the kazoo does not perfectly match the feeling of a trumpet mouthpiece, it helps improve immersion and familiarity through haptic feedback (lip buzz) as well as establishing the desired pitch of a note through microphone input and pitch detection. The kazoo additionally makes voice activation playable over long sessions.

Although the voice activated trumpet works as a proof of concept, we did not find this scenario an enjoyable experience. Despite the RAPT algorithm being near instantaneous, there is still a minor but noticeable delay between the user input and note generation. Between detection errors from unprocessed audio, input filtering from the microphone, and the inconsistency in human voices, measuring pitch on a VR headset using built-in functionality is unreliable, and incorrect note detection further contributed to a frustrating experience. The microphone system built in to commercial VR headsets is optimized for the spoken word, and often assumes other sounds are noise which should be filtered out. In-stream filtering is also implemented to reduce or remove feedback between the VR headset’s microphone and internal headphone speakers. These system-level manipulations of sound make it difficult to apply standard pre- or post-processing of audio signals, including pitch following, pitch alignment or pre-filtering.

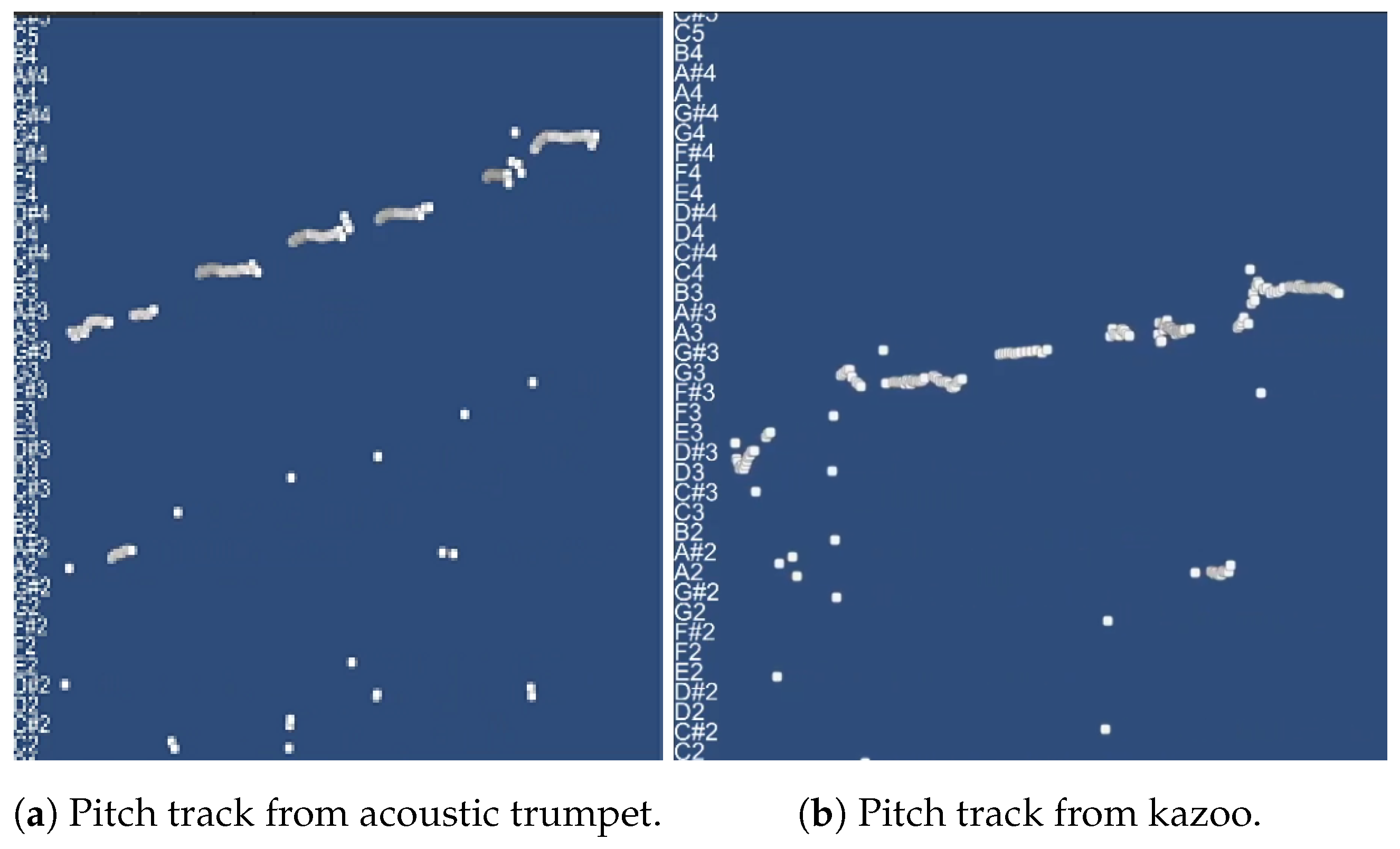

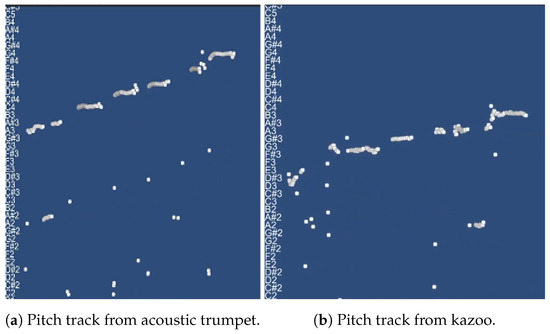

Using the acoustic trumpet as an acoustic input provides a much louder and more consistently pitched signal, generating a more reliable (but still imperfect) pitch trace, as shown in Figure 11. While the kazoo is not as loud as the acoustic trumpet, kazooing loudly enough to be reliably detected still produces a noticeable amount of noise. Making use of a player’s natural vocal pitch range as instrument input improves familiarity, but poses concerns regarding the frequencies an individual is able to produce, which vary depending on factors such as gender, ethnicity, or age. It is possible to increase versatility by processing and transposing voice input, but users may find the delay and mismatch with their voice unsettling (Ppali et al. 2022).

Figure 11.

A comparison of mapped input from an acoustic trumpet and kazoo, on an ascending 5-note scale.

Similar to the MIDI trumpet, sequentially counting registers using specific finger gestures held in virtual space offers a comfortable interface, which is reliable and easy to implement, but it can take some time to learn. In particular, we found that the height and range of motion of the thumb compared to the other fingers could make it difficult to place in the activation zone until the boundaries were learned. The cognitive load of posing both hands and engaging the thumb to play a note, combined with accuracy issues from tracking, can also become problematic. This method lacks versatility, since the number of registers is limited to the five fingers, although this is enough for simple songs, and excludes users with fine motor concerns or missing fingers.
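The counting-up logic reduces to a small mapping from the set of fingertips inside the trigger zone to a register and a note on/off flag. The sketch below assumes that the four non-thumb fingers count the register from 0 to 4 and that the thumb only gates the note; this reading of the scheme, and the finger labels, are our illustrative assumptions rather than the exact Unity logic.

```python
# Non-thumb fingers of the left hand that count the register upward.
COUNTING_FINGERS = ("index", "middle", "ring", "pinky")


def discrete_selection(tips_in_zone):
    """Return (register, note_on) from the fingertips inside the trigger zone.

    tips_in_zone is a set of finger labels currently colliding with the zone.
    The register is the number of raised counting fingers (0 to 4); the note
    sounds only while the thumb is also inside the zone.
    """
    register = sum(1 for finger in COUNTING_FINGERS if finger in tips_in_zone)
    note_on = "thumb" in tips_in_zone
    return register, note_on


if __name__ == "__main__":
    print(discrete_selection({"thumb", "index", "middle"}))
    print(discrete_selection({"index", "middle", "ring", "pinky"}))
```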

The slider method allows the user to visually relate the colour and physically relate the height of the indicators to register selection. Pinching with the index finger would be more intuitive, but this is a reserved system action invoking the Oculus menu on the Meta Quest 2, and so pinching with the middle finger was implemented. We tested register selection by measuring collision with the indicators, distance from the indicators, and vertical world height, and found that collision and distance were prone to errors, while the world height selected accurately. As with the MIDI trumpet, we tended to just hold the pinch, and other users will likely be tempted to do so as well, since this is easier than pinching each note. This method offers a simple and scalable approach to register selection, since more zones can be added, but it has several drawbacks. Tracking is unreliable at some angles or heights: holding the hand high up may take it out of camera tracking range, and the pinch gesture may be occluded by the player’s thumb. Holding the left hand in the air while playing may also become tiring, and the method may be unusable for users with poor shoulder mobility.
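Selecting the register from vertical world height amounts to dividing the height range into equal zones and clamping to the first and last. The following sketch shows that bucketing; the parameter names, zone height, and default of five registers are hypothetical values chosen for illustration.

```python
def register_from_height(hand_y, base_y, zone_height, n_registers=5):
    """Map the pinching hand's world height to a register index.

    base_y is the height of the bottom of the lowest zone and zone_height the
    height of each zone, both in metres. Heights below or above the slider are
    clamped to the lowest or highest register.
    """
    index = int((hand_y - base_y) // zone_height)
    return max(0, min(n_registers - 1, index))


if __name__ == "__main__":
    for y in (0.90, 1.02, 1.12, 1.40):
        print(y, register_from_height(y, base_y=1.0, zone_height=0.05))
```

Adding registers only requires raising `n_registers`, which reflects the scalability noted above, although taller sliders make the tracking range and fatigue concerns more pressing.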

4.3.2. Valve Selection Algorithms

We implemented and tested two methods of detecting hand pose for virtual valve selection. In these cases, the user is not holding the trumpet, and the system detects the user’s hand pose and infers the intended valve selection based on that pose gesture. We tested the nearest neighbour algorithm for generic gesture recognition, as well as a collision detection method implemented specifically to detect when fingers are close to the palm of the hand.

While the nearest neighbour algorithm was functional for generic gesture classification, it is not well suited for a three-valve input system like the trumpet. Since all the bones in all of the fingers are tracked, it suffers misclassification when the user isn’t paying sufficient attention to their overall hand pose or a tracking error occurs, and does not have any haptic feedback to notify the user about their mistake. Despite these limitations, pose estimation with machine learning has potential for greater versatility than collision detection methods, as it can detect splay.
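A minimal nearest neighbour classifier over saved pose templates can be sketched as below. It uses plain Euclidean distance over a flattened list of bone coordinates; the template format and labels are hypothetical, and our Unity implementation may differ in how poses are stored and compared.

```python
import math


def classify_pose(bones, templates):
    """Return the label of the saved template closest to the current pose.

    bones is a flat sequence of local bone coordinates; templates maps a
    valve-state label to a flat sequence of the same length.
    """
    def distance(a, b):
        return math.sqrt(sum((x - y) ** 2 for x, y in zip(a, b)))

    return min(templates, key=lambda label: distance(bones, templates[label]))


if __name__ == "__main__":
    # Toy templates with three coordinates each; real poses have many more.
    templates = {"000": [0.0, 0.0, 0.0], "100": [1.0, 0.0, 0.0], "010": [0.0, 1.0, 0.0]}
    print(classify_pose([0.9, 0.1, 0.0], templates))
```

Because every coordinate contributes to the distance, movement in bones that are irrelevant to the valves, such as the little finger, can shift the nearest template, which is consistent with the misclassifications described above.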

The collision detection method for valve selection was both reliable and comfortable, provided the user played with their palm facing the camera, and was easier to implement and test. The visual feedback from the colourized cubes was useful in determining the collision boundaries, but could not be relied on while playing, since the user is focused on the notes. Haptic feedback could theoretically be achieved by tapping one’s fingers across their thumb, but we found this risked triggering the Oculus menu. Some haptic feedback can be achieved by the player tapping their palm with their fingertip, but this requires more exertion than stopping before the palm, and we found this action uncomfortable over time, and since pulling one’s middle finger down will also lower their ring finger, it is prone to error (Noort et al. 2016). This method also would not be able to detect lateral or angular motions, such as splay, and is thus not suitable for instruments that require lateral finger movements.

4.4. Force-Feedback Glove

The Lucidglove implementation we tested provides a different hand tracking method than camera-based tracking. Because finger position is detected using a mechanical sensor on the glove itself, finger position is not subject to occlusion and can be reliably detected in any orientation. The glove could be worn to play a physical trumpet as well, although this configuration was not tested in the current study.

Detecting finger position with the Lucidglove further improves upon camera-based collision detection for valve selection by providing force-feedback resistance when the user has sufficiently flexed their finger to activate the valve. This results in the user perceiving the motion as if they were touching the valve, rather than just mimicking the actions with camera tracking. The improved accuracy of hand tracking allows a natural pose without occlusion problems, creating a greater sense of familiarity. Despite its bulky appearance, the Lucidglove is not particularly heavy on the wrist, and the tension mechanism can be pulled without strain, providing high playability. While the audio produced by this method is digitally controllable in the same way as it is for camera-based hand tracking methods, it is possible that the glove itself could generate a small amount of noise from the motors engaging. Although this glove has the same creative freedoms as other digitally controlled instruments in terms of modifying MIDI output, it translates any direction of string pull into a downward finger curl. Thus, although the glove could be adapted to instruments that use such a motion, it is not suitable for simulating instruments that require splay or lateral movements, as shown in Table 1.

Table 1. Instruments suitable for simulation via the prototype version #4 Lucidglove.

4.5. Summary of Results

To summarize the results of our experiences and objective comparisons of the designs, we rated each interface on a scale of 1 to 5, based on our individual interactions with the interfaces:

- 1: performance failing to meet requirements

- 2: performance beginning to address requirements

- 3: performance approaching requirements

- 4: performance meeting requirements

- 5: performance exceeding requirements

Table 2 shows the results of each of our proposed interfaces:

Table 2. Analysis of virtual trumpet implementations.

The analysis shows that although the virtual implementations perform better in some aspects such as noise (excluding the voice-activated model) and versatility, all suffer in terms of ease of use and familiarity due to tracking or user errors associated with learning a new interface. When a student is learning a new instrument, the allure of new technologies can contribute to increasing interest and reducing frustration (Makarova et al. 2023; Stark et al. 2023), but if the new implementation itself is frustrating to use, the end result will still be a student who is not motivated to practice. Virtual implementations hold promise for extensible performance systems, but if a player is used to an instrument and a new virtual version of the instrument is not sufficiently familiar, they will likely prefer the physical instrument, as learning the new interface can be frustrating.

4.6. Virtual Environment

Our environment was designed to replicate an empty practice room, in which we placed a music stand displaying dynamically generated notes and finger pattern prompts, which we enlarged to improve visibility. The white and black colouring of the notes and prompts for the acoustic trumpet is comparable to piano keys, making it easy to understand the intended note without accidentals. The colourized notes and prompts were especially helpful for the MIDI trumpet and the virtual trumpets, as they utilized the same register and finger pattern scheme. Since each register of the MIDI trumpet uses a set of finger patterns containing a different number of notes, and the register must be selected by the player, it can be difficult to recall which register a note belongs to while playing. The ability to identify the correct register based on the note colour removes this cognitive load from the user, although they will still need to learn the colour scheme. While performing in the environment, however, we noticed that it was easy to become reliant on the finger pattern indicators and forgo reading the notes on the staff. It may be beneficial to disable either the finger patterns or the staff notes depending on the desired learning outcome.

During our experiences in this environment, we observed two primary sources of simulation sickness. The first and most severe occurred when we attempted to place instrument models close to the player’s eyes, which is consistent with Meta’s comfortable viewing distance guideline. Although some musicians may prefer to have their instrument represented in the virtual world (Ppali et al. 2022), especially percussionists, instruments held in front of the face, such as trumpets, will likely cause eye strain for the player. Since the virtual trumpets can be held at a distance instead of directly in front of the player’s face, a model of a trumpet could be placed in the player’s hand, but this would disregard Meta’s warning not to place heavy objects in the player’s hand without a means to justify the weightlessness (Stellmacher et al. 2022).

The second source of simulation sickness we observed during testing was viewing the stand from certain perspectives, such as sitting and looking up. In this implementation, notes and prompts are presented scrolling right to left at a constant speed. We believe the difficulty in tracking the lateral movement of these notes from unintended angles may be the source of this sickness (Golding and Gresty 2005). Additional context around the stand can reduce the effect, and users who are more susceptible to simulation sickness can employ standard methods such as motion reduction or peripheral blocking to mitigate these effects.

5. Discussion

Of all the trumpets, we found the physical trumpets the most enjoyable to play. The physical structure of both the acoustic and MIDI trumpet provides a haptic experience that allows them to be played with vision of the instrument completely blocked. While a virtual environment does not change the physical features of the acoustic trumpet, nor the skill level of the player, having prompts in the style of a rhythm game may help engage and motivate the player. Initially, the MIDI trumpet interface can be confusing due to the requirement to memorize registers and alternative finger patterns, but the colourized prompts remove this requirement, and it becomes more enjoyable with practice.

For the camera-tracked virtual trumpets, we found that pinching at different heights, which approximates selection via a slider, provided the simplest and most reliable interface, and best adhered to the hand tracking guidelines established by Meta. While an index pinch would likely track better than a middle finger pinch, which was liable to fail at extreme high or low heights, pinching with the index finger would often open the system menu, a reserved gesture on the Meta Quest 2 that cannot be disabled. Despite this limitation, the slider method is scalable in that more zones could be added, and it may be possible to add additional MIDI control through the x and z axes.
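The slider-style selection can be sketched as a mapping from pinch height to a zone index. The following Python example is illustrative only: the function name `zone_for_height`, the height bounds, and the number of zones are hypothetical values chosen for the sketch, not parameters from our prototype.

```python
def zone_for_height(height_m: float,
                    low_m: float = 0.9,
                    high_m: float = 1.5,
                    zones: int = 5) -> int:
    """Map a pinch height (metres) to a zone index in 0..zones-1.

    Heights outside [low_m, high_m] are clamped to the nearest zone, so a
    pinch slightly above or below the slider still selects a register.
    All default values are assumptions made for illustration.
    """
    if zones < 1 or high_m <= low_m:
        raise ValueError("need at least one zone and high_m > low_m")
    fraction = (height_m - low_m) / (high_m - low_m)
    index = int(fraction * zones)  # truncation is fine; clamping follows
    return max(0, min(zones - 1, index))
```

Adding a zone only requires changing `zones`, which reflects the scalability noted above; the same mapping could be applied to the x or z coordinate to drive an additional MIDI control.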

While the finger counting method provides an intuitive selection that tracks the four fingers well, it has some noticeable limitations. Like the MIDI trumpet, it takes time to associate each pose with its corresponding register. Unlike the MIDI trumpet, however, the player must also manage the note-on signal with their thumb and the valve selection with their right hand, which can become overwhelming. This is further complicated by potential detection problems from the position, size, and range of motion of the thumb compared to the fingers. Lastly, this method is less scalable than the slider method, as it is limited to the player’s five fingers.

The voice-activated trumpet scored the lowest of our prototypes. The player must hum loudly enough for the pitch to be detected, which does not address the noise concern. While the RAPT algorithm works quickly, there can still be a slight delay in activation, which is sufficient to miss a note or frustrate a user (Ppali et al. 2022). These problems are further exacerbated by potential pitch detection errors, which the user may falsely attribute to errors in their own pitch or hand position, and by the potential need to transpose the player’s pitch to fit a trumpet’s note range. While voice activation has potential for better control, this method will require a more specialized approach than our general prototype, such as an implementation of pitch detection systems that can compensate for the internal noise cancellation and speech-centric filtering performed at the system level on commercially available VR headsets.
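The transposition step mentioned above can be illustrated with a short Python sketch that converts a detected fundamental frequency to a MIDI note number and shifts it by octaves into a target range. The pitch detector itself is not shown. The function names are hypothetical, and the default range (sounding E3 to B♭5, MIDI 52 to 82) is an assumed approximation of a B♭ trumpet's range rather than a value from our prototype.

```python
import math


def frequency_to_midi(frequency_hz: float) -> int:
    """Convert a frequency to the nearest MIDI note number (A4 = 440 Hz = 69)."""
    if frequency_hz <= 0:
        raise ValueError("frequency must be positive")
    return round(69 + 12 * math.log2(frequency_hz / 440.0))


def fold_into_range(midi_note: int, low: int = 52, high: int = 82) -> int:
    """Shift a note by whole octaves until it lies within [low, high].

    The default bounds are an assumed trumpet range, for illustration only.
    """
    if high - low < 11:
        raise ValueError("range must span at least one octave")
    while midi_note < low:
        midi_note += 12
    while midi_note > high:
        midi_note -= 12
    return midi_note
```

For example, a hummed A2 (110 Hz) maps to MIDI note 45, which is below the assumed range and is raised one octave to 57.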

The Lucidglove provided more accurate hand tracking and a haptic response to valve selection, which helps reduce both tracking and user errors when playing. While we used joystick and button input on the left-hand controller to select registers and activate notes, the Lucidglove could be combined with camera-tracked register selection, voice activation, or other lip-based sensors to track embouchure to improve immersion.

It may be possible to play a physical trumpet using a haptic glove, but the Lucidglove implementation includes plastic caps on the fingers that make it difficult and uncomfortable to play physical instruments, as they have no traction or tactile feedback. It would be more practical to adapt a physical instrument with sensors on the valves themselves to detect activation by the player.

In this article, we built a three-finger version of the prototype #4 Lucidglove, which, while suitable for trumpet valves, lacks the degrees of freedom or haptic response for many other instruments. Recently, prototype #5 has been announced, which offers a more compact design as well as tracking of finger splay. Once the build guide for this prototype is made available, we plan to upgrade, and compare prototype #5 with other tactile and force-feedback gloves currently on the market.

5.1. Hand Tracking Interactions for Instruments

Current VR best practices suggest poking or pinching as default interaction techniques. Unfortunately, both of these interaction methods have problems in terms of instrument simulation with a standard VR headset. While using the fingertip to activate notes on collision could work for some instrument interactions, such as pressing a single key on a piano, differentiating between the small finger movements used in splay poses a significant design problem for collision-based systems. Pinching is well suited for controlling UI in the musical environment (Ppali et al. 2022), but hardware restrictions make it difficult to use in instrument simulation. It is possible, although not reliable, to detect multiple fingers pinching simultaneously, but accurately tracking all four pinch gestures would require the user to turn their palm towards the camera. As previously discussed, pinching with the index finger and thumb while the palm is facing the camera will open the system menu, which frequently occurred in our early, pinch-based prototypes, making this interaction difficult to use effectively.

In this work, we utilized two alternative gestures: finger counting, which we used to replicate the action of selecting buttons on the side of the MIDI trumpet, and touching the fingertip to the palm as an alternative to pinching with the thumb, which simulates the motion of pressing valves. Although finger counting utilizes the opposite motion to pinching, it is still a quick and intuitive motion. While this method does not provide any form of haptic feedback, the player’s proprioception is likely sufficient to help them understand their selection. Although the index, middle, ring, and pinky fingers can be tracked reliably, the thumb’s position, size, and extra range of motion may pose some tracking concerns in a collision-based system.

Pinching to the palm presents a distinct and easily tracked interface, provided the user keeps their palm facing the headset’s camera. Unfortunately, unlike pinching to the thumb, pinching to the palm is not a built-in feature of the OVRHand library and may not automatically scale, and thus may require some adjustment based on the user. It is possible to achieve a small level of haptic feedback as the fingertips make contact with the palm, but due to human physiology, it is difficult to move the middle finger independently (Noort et al. 2016), which may result in accidental activation, especially of the ring finger. Force-feedback gloves may improve upon this interaction by providing mid-air feedback, reducing the exertion of the user’s fingers, and potentially reducing accidental activation of a valve. In the future, we plan to do a user study to evaluate whether a force-feedback glove provides a sufficiently improved experience in terms of tracking accuracy and haptic feedback to justify the difficulty a user might have in acquiring or assembling a glove.
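One way the per-user adjustment for palm pinching might be handled is to normalize the fingertip-to-palm distance by a measure of hand size and to use separate press and release thresholds. The Python sketch below is a generic illustration and does not use the OVRHand API; the class name `PalmTouchDetector`, the threshold ratios, and the choice of hand length as the scale are all assumptions.

```python
import math
from dataclasses import dataclass


@dataclass
class PalmTouchDetector:
    """Detects a fingertip touching the palm, scaled to the user's hand.

    Thresholds are ratios of fingertip-to-palm distance to hand length.
    The release threshold is larger than the press threshold (hysteresis),
    so tracking jitter near the boundary does not retrigger the valve.
    Both ratios are hypothetical values chosen for illustration.
    """
    press_ratio: float = 0.35
    release_ratio: float = 0.45
    pressed: bool = False

    def update(self, fingertip, palm, hand_length: float) -> bool:
        """Update with 3D positions (x, y, z) and return the pressed state."""
        if hand_length <= 0:
            raise ValueError("hand_length must be positive")
        ratio = math.dist(fingertip, palm) / hand_length
        if self.pressed:
            if ratio > self.release_ratio:
                self.pressed = False
        elif ratio < self.press_ratio:
            self.pressed = True
        return self.pressed
```

One detector instance per finger would be needed. A stricter press threshold for the ring finger might reduce the accidental activations described above, although that would need to be evaluated with users.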

5.2. Representing Instruments in VR

Trumpets, like many wind instruments, can be played from haptic feedback or muscle memory, but some musicians may prefer to have some visual representation of their instrument in the virtual world (Ppali et al. 2022). Percussionists in particular may find it necessary to have their instrument accurately presented in the virtual space in order to initially align themselves with the instrument. Unfortunately, based on Meta’s guidelines and our observations, placing a wind instrument model directly in the user’s vision while it is played produces eye strain and simulation sickness due to the conflict between accommodative and vergence demand (Yadin et al. 2018). Heavy instruments or two-handed instruments pose additional problems due to the lack of weight and rigid structure between the hands (Stellmacher et al. 2022).

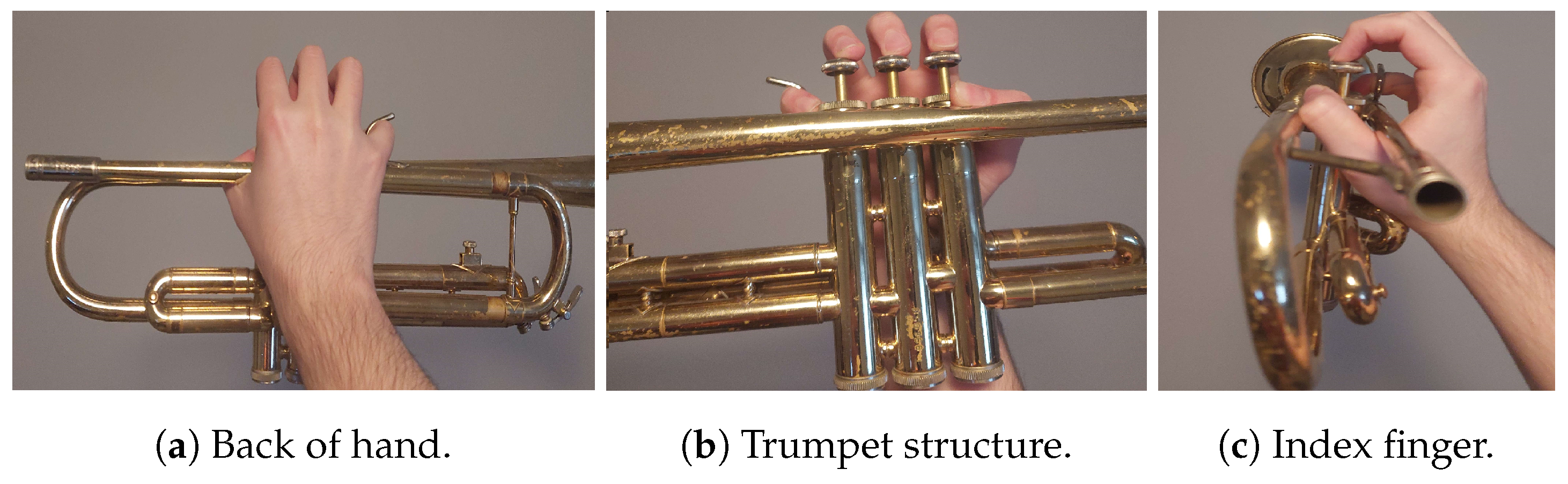

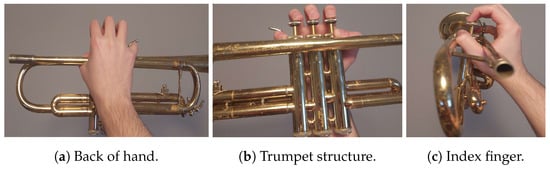

One solution could be to reduce the instrument model to its essential components, such as the valves of a trumpet or the slide of a trombone. Removing the majority of the instrument from a user’s view also improves visibility for reading a musical score, which helps with accessibility. Unfortunately, it would still be difficult to represent the player’s finger or valve position for the acoustic trumpet. If the player is holding a trumpet, current onboard camera-based hand tracking methods will suffer occlusion from the hand, the fingers, or the trumpet itself in any comfortable position in which the trumpet can be held, as shown in Figure 12. It is possible to use pitch detection to estimate which valves are pressed, but it could be disorientating if the estimate were incorrect or rapidly alternated between two notes. Still, hand tracking for a physical trumpet could be achieved by adding a button or sensor that detects when a valve is pressed, provided the method does not interfere with the user’s ability to play the instrument. Rendering valve interactions for the virtual trumpets could be done by attaching a valve model to the palm with the valves positioned under the fingers.

Figure 12.

The fingers of the right hand are occluded from view when holding the trumpet, regardless of view angle.

A key problem with this approach is that it is not always clear what the “core components” of more complex instruments that require finger splay, such as a flute or clarinet, might be. Transparent or partial models may also be a simpler and more feasible approach. Another future direction of this work will be a user study on how to best represent virtual instrument models without incurring eye strain.

5.3. Displaying Notes and Prompts

In this work, we developed a system to dynamically generate notes and prompts, which scrolled across the stand towards a target bar. While this type of interface is used in 2D rhythm games or musical notation software, we noticed increased simulation sickness when viewing this display from unintended angles, which may be attributed to tracking the movement of the notes (Golding and Gresty 2005). Moreover, some musicians may find moving notes distracting or uncomfortable. Such a scrolling interface is also susceptible to desynchronization due to different frame or processing rates.
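One common way to limit the desynchronization described above is to derive each note's position from a shared clock, such as the audio playback time, rather than moving it by a fixed step every frame. The Python sketch below illustrates the idea; the function name `note_x`, the units, and the default speed are hypothetical and do not describe our implementation.

```python
def note_x(note_time_s: float,
           now_s: float,
           target_x: float = 0.0,
           speed: float = 0.25) -> float:
    """Return the horizontal position of a note scrolling right to left.

    The note sits exactly on the target bar (target_x) when the shared
    clock reaches note_time_s. Because the position depends only on the
    clock, dropped or uneven frames cannot accumulate into drift.
    Units (metres, metres per second) are assumed for illustration.
    """
    return target_x + (note_time_s - now_s) * speed
```

A note due two seconds in the future is drawn 0.5 units to the right of the target bar at the default speed, regardless of how many frames have been rendered so far.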

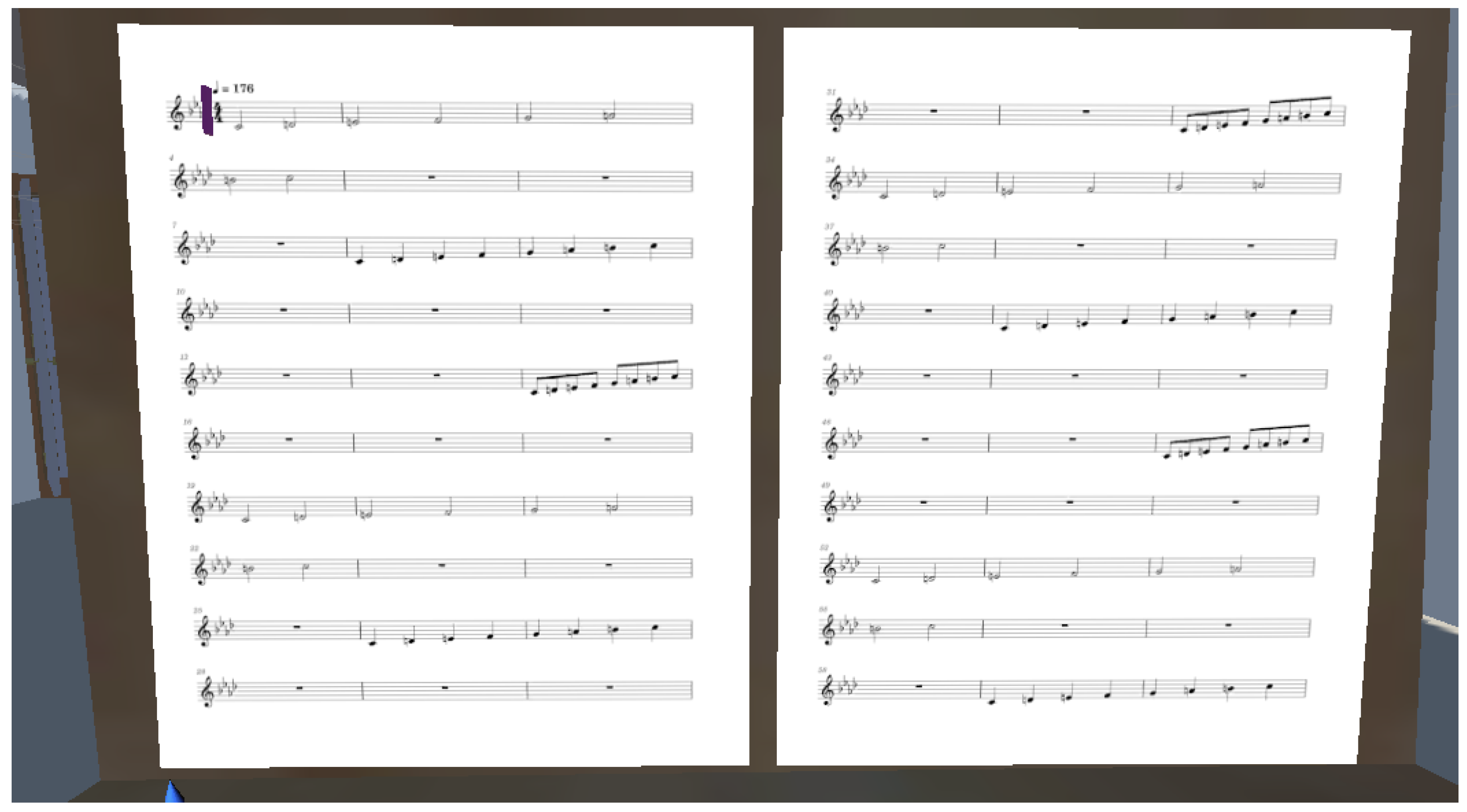

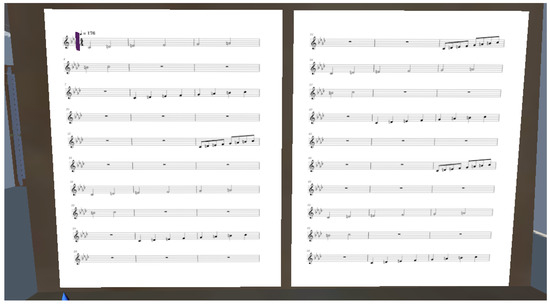

Notes could equally be presented in a static score, with features such as a moving playhead similar to 2D displays such as MuseScore. One solution is to add sheet music images to the scene, as shown in Figure 13, which could be edited to provide additional guides, but this interface would require careful formatting in terms of number of measures per line and lines per page, and it would be difficult to synchronize a playhead with a background image if there are tempo changes in the song. In the future, we plan to explore other MIDI libraries and compare different methods of displaying notes and prompts.

Figure 13.

An alternative music interface displaying engraved sheet music via images.
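Part of the difficulty in synchronizing a playhead across tempo changes is converting MIDI ticks to elapsed time. In a Standard MIDI File, tempo is stored as microseconds per quarter note and durations as ticks, so the conversion has to be accumulated segment by segment. The Python sketch below shows this under stated assumptions: the function name and the tempo map layout are hypothetical, and mapping the resulting time to a position on a sheet music image would still require the location of each measure.

```python
def tick_to_seconds(tick: int,
                    tempo_changes: list[tuple[int, int]],
                    ticks_per_beat: int = 480) -> float:
    """Convert an absolute MIDI tick to seconds using a tempo map.

    tempo_changes is a list of (start_tick, microseconds_per_beat) pairs,
    sorted by start_tick, with the first entry at tick 0. The layout of
    this list is an assumption made for the sketch.
    """
    seconds = 0.0
    for i, (start, tempo_us) in enumerate(tempo_changes):
        # Each tempo segment ends at the next change, or at the target tick.
        if i + 1 < len(tempo_changes):
            end = min(tempo_changes[i + 1][0], tick)
        else:
            end = tick
        if end <= start:
            break
        seconds += (end - start) * tempo_us / (ticks_per_beat * 1_000_000)
    return seconds
```

For a file that starts at 120 beats per minute (500,000 microseconds per beat) and slows to 60 beats per minute at tick 960, tick 1440 falls two seconds into the piece.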

5.4. Future Instruments

The primary target of our future work is the creation of other instrument simulations. Since the camera-tracked models are not posed how a user would typically hold an instrument, other three-valve brass instruments could be “simulated” by simply changing the MIDI instrument. By expanding this method to all fingers of both hands, it would be possible to simulate other instruments with one simple interaction per finger, such as a tin flute. Instruments that require multiple interactions from one finger, however, such as partially covering a hole or finger splay, cannot be simulated with our implementation. Machine learning methods may be able to differentiate between these subtle movements, but the player would likely find it difficult to reliably replicate the appropriate poses without haptic guidance, such as force-feedback.
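Changing the MIDI instrument, as described above, corresponds to sending a Program Change message. The Python sketch below builds the raw two-byte message for several brass instruments using zero-based General MIDI program numbers. The function and dictionary names are hypothetical, and how the bytes are delivered to a synthesizer depends on the MIDI library in use, which is not shown.

```python
# Zero-based General MIDI program numbers for the brass family.
GM_BRASS_PROGRAMS = {
    "trumpet": 56,
    "trombone": 57,
    "tuba": 58,
    "muted trumpet": 59,
    "french horn": 60,
}


def program_change(instrument: str, channel: int = 0) -> bytes:
    """Build a MIDI Program Change message (status 0xC0 | channel, program)."""
    if not 0 <= channel <= 15:
        raise ValueError("MIDI channels are numbered 0 to 15")
    return bytes([0xC0 | channel, GM_BRASS_PROGRAMS[instrument]])
```

Because the valve and register inputs stay the same, switching from trumpet to tuba in this sketch changes only the program byte; any octave offset needed to suit the new instrument's range would be handled separately.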

A force-feedback glove capable of detecting splay, which the prototype #5 Lucidglove promises, would provide this feedback while also allowing the user to position their hands in a way that more closely matches how an instrument would properly be held. Going forward, our primary research goal is to combine an embouchure detection method, such as a specialized mouthpiece or pressure sensor, with higher quality haptic gloves, and compare the experiences with other real and modified instruments through a user study.

6. Conclusions

In this article, we created a set of Extended Reality scenarios to simulate playing a trumpet, each focusing on a different trumpet interaction modality, as prototype interfaces for future wind instrument simulation. Camera-based and glove-based scenarios tested interactions with and without a trumpet, with different mechanisms for selecting playback registers, selecting valves, and indicating note on/off.