Design and Evaluation of a Multisensory Concert for Cochlear Implant Users

Abstract

1. Introduction

2. Related Work

2.1. Accessible and Inclusive Design in Music

2.2. CI Music

2.3. Multisensory Integration

2.4. Vibrotactile Augmentation of Music

3. Materials and Methods

3.1. Multisensory Integration Design Workshop

3.1.1. Workshop Format

3.1.2. Participants

3.1.3. Workshop Experiences

Installation 1

Installation 2

Installation 3

3.1.4. Experiences and Results

First Participant

Second Participant

Third Participant

3.1.5. Workshop Discussion

3.2. Concert

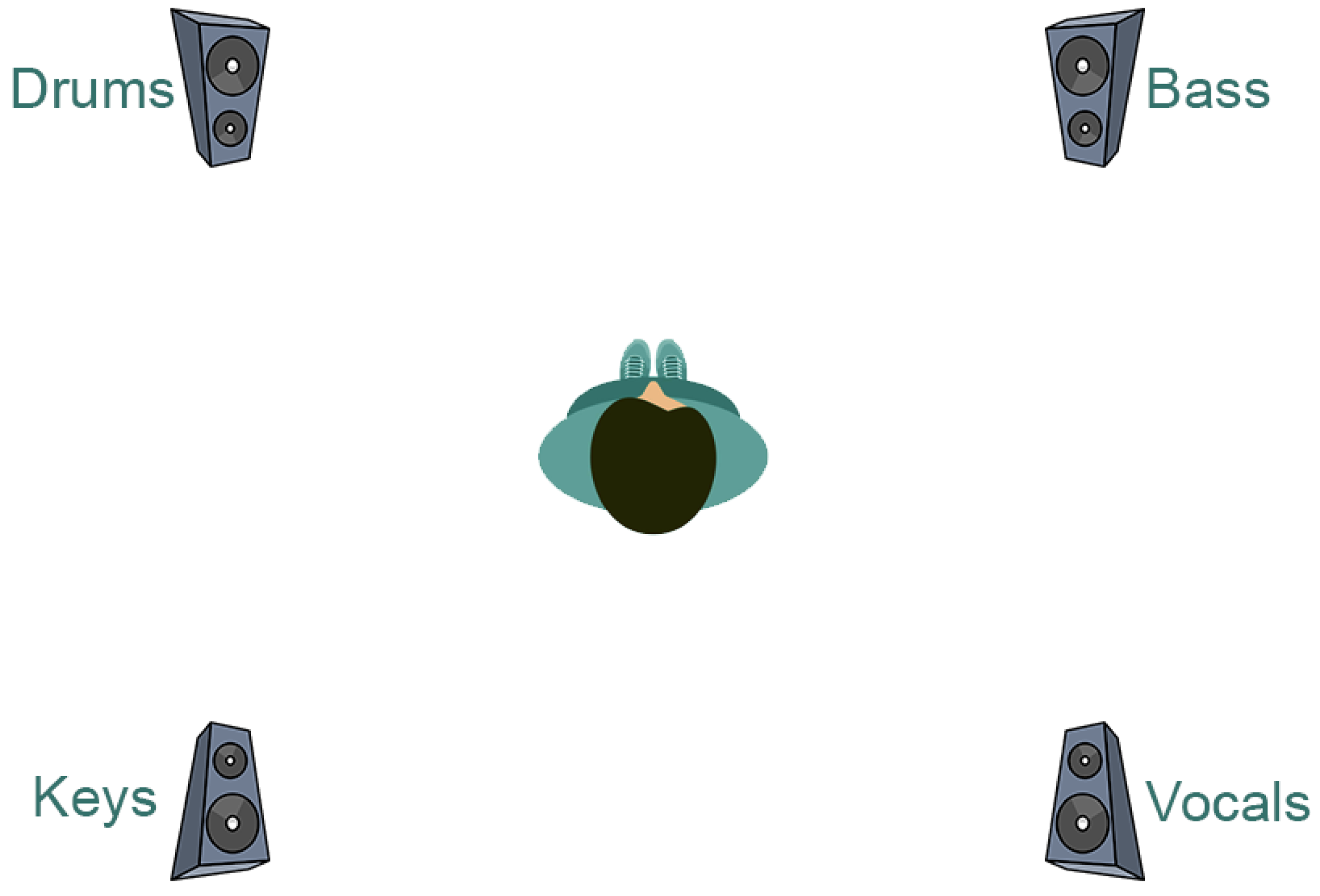

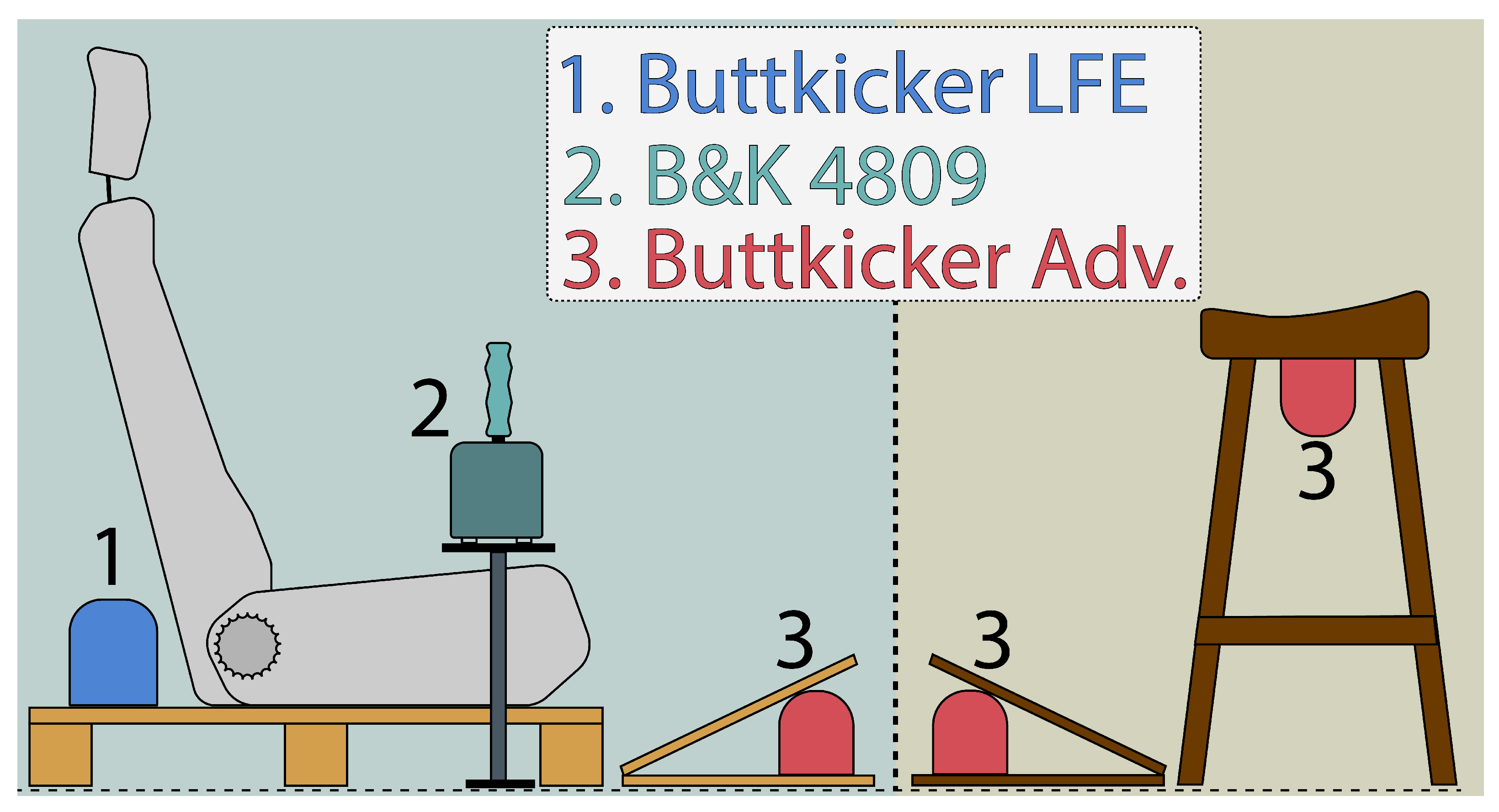

3.2.1. Tactile Displays

- Enhance the concert experience by providing congruent vibrotactile feedback.

- Afford multiple interaction modes and postures to accommodate the varied needs of CI users.

- Present as furniture rather than medical apparatus.

- Encourage a social experience.

- Be usable by CI users and normal-hearing participants alike.

Vibrotactile Furniture

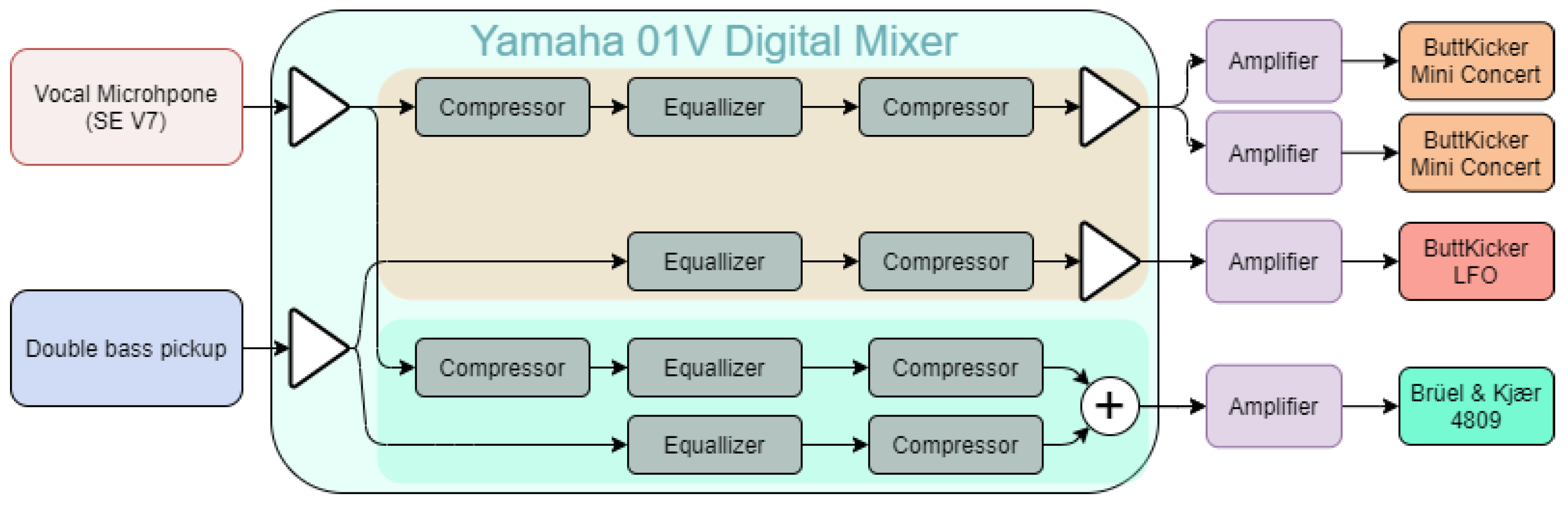

Audio and Tactile Signals

3.2.2. Concert Setup

Music

- The music should be popular, to increase the chance that the audience recognizes it;

- The music should include lyrics and should be focused on the vocal line;

- The music should revolve around simple, repetitive riffs;

- The melody should be easily represented by a single instrument;

- The music should be easily transposable to the vocalist’s lowest register;

- The setlist should contain songs from multiple genres.

- Peggy Lee—Fever;

- The Civil Wars—Billie Jean;

- Louis Armstrong—What a Wonderful World;

- Postmodern Jukebox—Seven Nation Army;

- J. Knight, D. Farrant, and H. Sanderson—Don’t Give Up On Love (improvisation);

- Ruth Brown—5-10-15 Hours (improvisation);

- Etta James—Something’s got a hold on me (backup song—not used).

3.3. Concert Experience Evaluation

3.3.1. Methodology

3.3.2. Concert Results

3.3.2.1. Focus Group

3.3.2.2. Video-Based Observations

3.3.2.3. Online Interview

- What was it like attending the concert with the vibrating furniture?

- How did it feel to sit on the furniture during the concert?

- How did you experience the connection between music and vibrations?

- Which instrument did you prefer and why?

- Would you recommend this type of concert furniture to other CI users?

- Do you have any other comments?

3.3.2.4. Limitations

4. Discussion

5. Conclusions

Author Contributions

Funding

Informed Consent Statement

Data Availability Statement

Acknowledgments

Conflicts of Interest

| 1 | https://subpac.com/ (accessed on 22 February 2023). |

| 2 | Facebook CI Group (accessed on 1 October 2021). |

| 3 | https://thebuttkicker.com/ (accessed on 22 February 2023). |

| 4 | http://tactilelabs.com/ (accessed on 22 February 2023). |

| 5 | https://melcph.create.aau.dk/coolhear-workshop/ (accessed on 20 April 2022). |

| 6 | https://zoomcorp.com/ (accessed on 22 February 2023). |

© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Paisa, R.; Cavdir, D.; Ganis, F.; Williams, P.; Percy-Smith, L.M.; Serafin, S. Design and Evaluation of a Multisensory Concert for Cochlear Implant Users. Arts 2023, 12, 149. https://doi.org/10.3390/arts12040149
