Visual Perception of Photographs of Rotated 3D Objects in Goldfish (Carassius auratus)

Simple Summary

Abstract

1. Introduction

2. Materials and Methods

2.1. Subjects

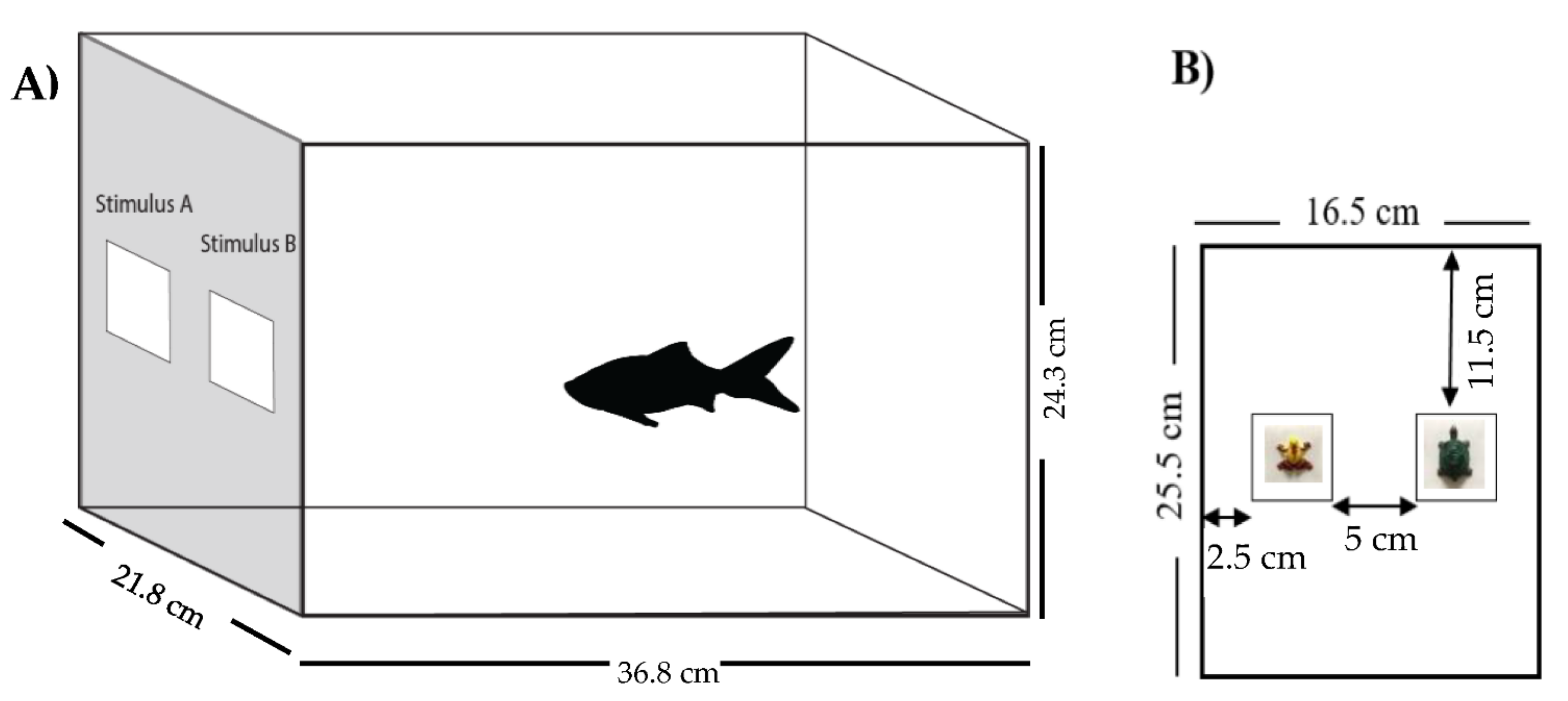

2.2. Stimuli

2.3. Experimental Setup

2.4. Procedure

2.4.1. Training

2.4.2. Testing

2.5. Data Analyses

3. Results

3.1. Training

3.2. Testing

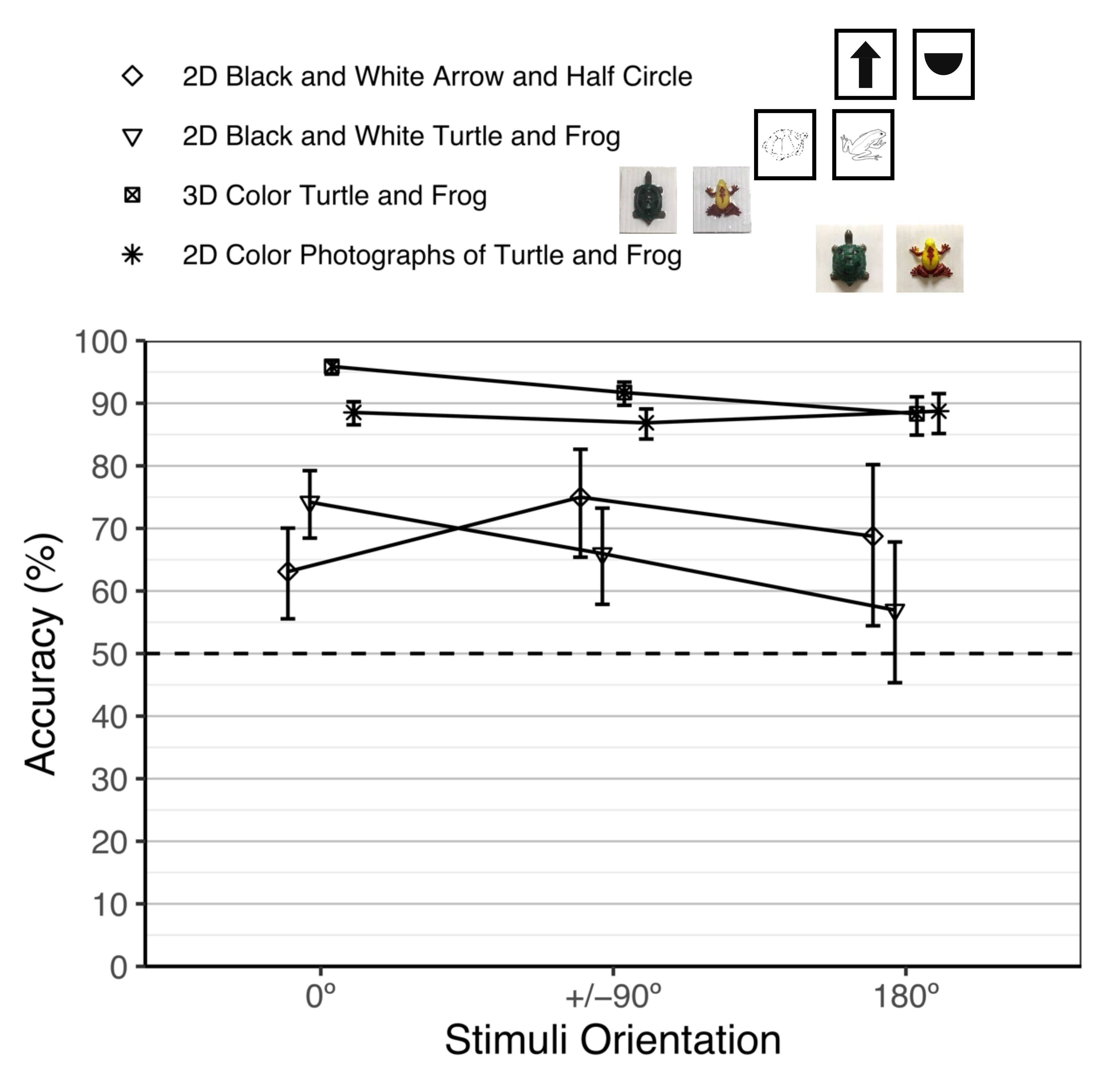

3.2.1. Performance Accuracy

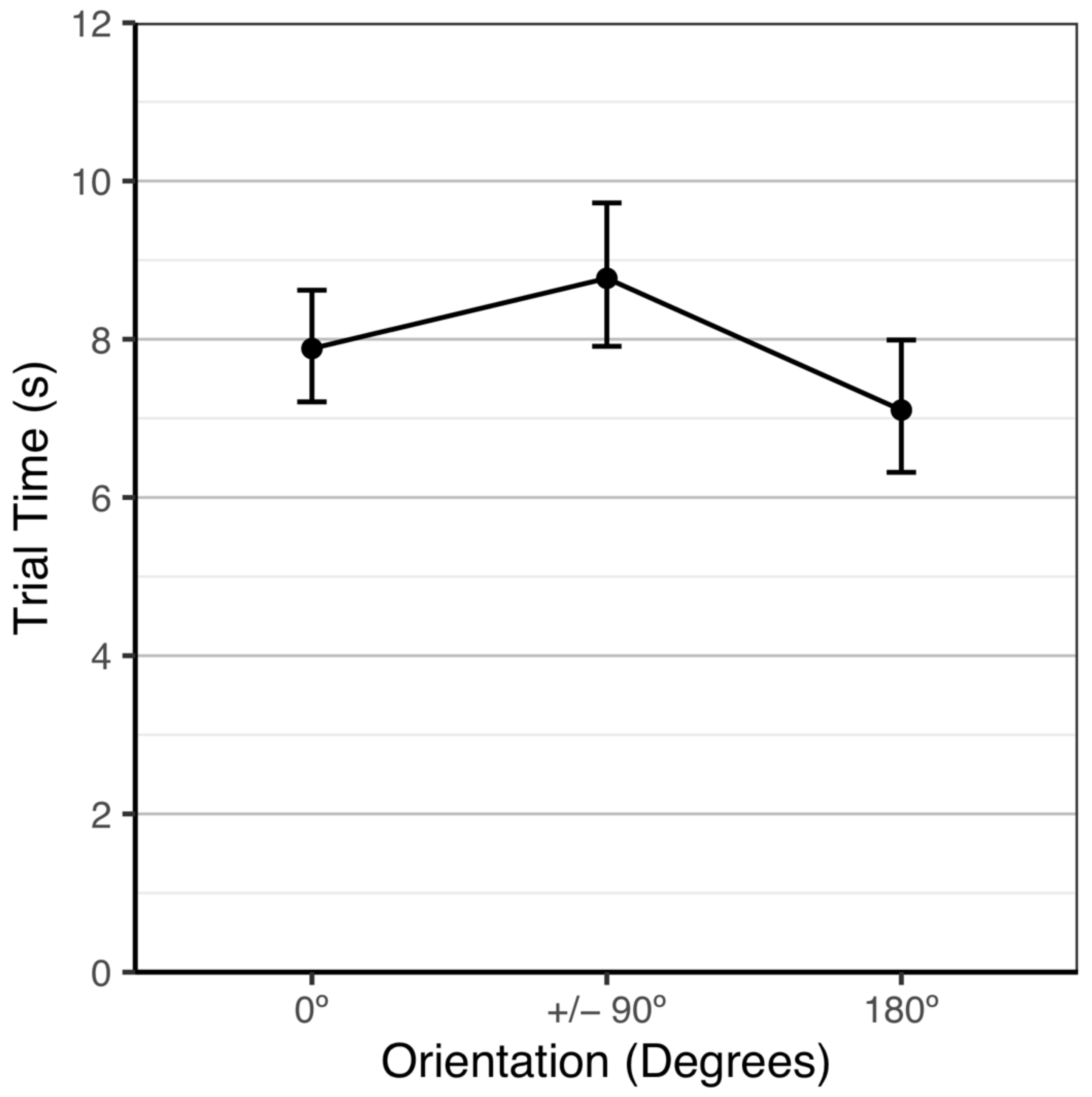

3.2.2. Trial Time

3.2.3. Performance Comparison of the Previous and Current Object Constancy Studies

4. Discussion

5. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Acknowledgments

Conflicts of Interest


| Angle of Rotation | Picture Plane | Depth Plane (y-Axis) | Depth Plane (x-Axis) |
|---|---|---|---|
| 0° * | | | |
| 90° | | | |
| 180° | | | |
| 270° | | | |

| Fish | Start of Training: M (%) | Start of Training: 95% CI (%) | End of Training: M (%) | End of Training: 95% CI (%) |
|---|---|---|---|---|
| 1 | 83.0 | [56.7, 94.8] | 85.1 | [60.7, 95.5] |
| 2 | 90.6 | [69.2, 97.6] | 75.1 | [53.2, 88.9] |
| 3 | 81.3 | [56.7, 93.5] | 88.2 | [64.0, 96.9] |
| 4 | 97.2 | [70.4, 99.8] | 99.9 | - |
| 5 | 100.0 | - | 100.0 | - |
| 6 | 99.4 | [43.1, 100.0] | 96.9 | [75.5, 99.7] |
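The accuracy intervals in the table above are asymmetric around their means (for example, Fish 1 at the start of training: 83.0% with [56.7, 94.8]). That pattern is what one would expect if the intervals were computed on the logit (log-odds) scale and then back-transformed to percentages, as is common for mixed logistic models. The Data Analyses text is not reproduced here, so this is an inference from the numbers rather than the authors' stated procedure. The minimal Python sketch below checks that reading for the Fish 1 start-of-training interval; the helper names (`logit`, `inv_logit`, `implied_logit_se`, `logit_wald_ci`) and the 1.96 multiplier for a 95% Wald interval are assumptions made for illustration.

```python
import math


def logit(p: float) -> float:
    """Log-odds of a proportion p, defined only for 0 < p < 1."""
    if not 0 < p < 1:
        raise ValueError("logit is undefined at 0 and 1")
    return math.log(p / (1 - p))


def inv_logit(x: float) -> float:
    """Map a log-odds value back to a proportion."""
    return 1 / (1 + math.exp(-x))


def implied_logit_se(lower: float, upper: float, z: float = 1.96) -> float:
    """Hypothetical helper: the standard error implied by a reported interval,
    ASSUMING it is a Wald interval that is symmetric on the logit scale."""
    return (logit(upper) - logit(lower)) / (2 * z)


def logit_wald_ci(p: float, se: float, z: float = 1.96) -> tuple[float, float]:
    """Wald interval built on the logit scale, then back-transformed.
    It is symmetric in log-odds, hence asymmetric around p as a percentage."""
    centre = logit(p)
    return inv_logit(centre - z * se), inv_logit(centre + z * se)


# Fish 1, start of training (copied from the table): M = 83.0%, CI [56.7, 94.8]
lower, upper = 0.567, 0.948
se = implied_logit_se(lower, upper)
logit_midpoint = 100 * inv_logit((logit(lower) + logit(upper)) / 2)
rebuilt = logit_wald_ci(0.830, se)

print(f"implied logit-scale SE: {se:.3f}")
print(f"back-transformed logit midpoint: {logit_midpoint:.1f}%")
print(f"arithmetic midpoint: {100 * (lower + upper) / 2:.1f}%")
print(f"rebuilt 95% CI: [{100 * rebuilt[0]:.1f}, {100 * rebuilt[1]:.1f}]")
```

Back-transforming the midpoint of the logit-scale bounds recovers the reported 83.0%, whereas the plain arithmetic midpoint of [56.7, 94.8] falls near 76%. Because the logit is undefined at exactly 0% and 100%, an interval of this kind cannot be formed for a proportion at the boundary, which may explain why Fish 5 has none.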

| Stimulus Orientation | Accuracy: M (%) | Accuracy: 95% CI (%) | Trial Time: M (s) | Trial Time: 95% CI (s) |
|---|---|---|---|---|
| 0° | 89.9 | [85.9, 92.9] | 7.9 | [7.3, 8.5] |
| 90° | 89.5 | [83.8, 93.3] | 9.0 | [8.1, 10.0] |
| 180° | 90.2 | [84.7, 93.8] | 7.1 | [6.5, 7.8] |
| 270° | 87.4 | [81.1, 91.8] | 8.1 | [7.3, 9.0] |

| Fish | Accuracy: M (%) | Accuracy: 95% CI (%) | Accuracy: Group | Trial Time: M (s) | Trial Time: 95% CI (s) | Trial Time: Group |
|---|---|---|---|---|---|---|
| 1 | 76.2 | [69.6, 82.9] | A | 5.5 | [5.0, 6.1] | B |
| 2 | 81.0 | [75.0, 86.9] | AB | 11.9 | [10.1, 14.1] | D |
| 3 | 90.2 | [86.4, 93.9] | CD | 4.2 | [3.8, 4.7] | A |
| 4 | 92.2 | [88.8, 95.6] | D | 11.3 | [9.8, 13.0] | D |
| 5 | 99.4 | [98.4, 99.9] | E | 8.9 | [7.8, 10.2] | C |
| 6 | 84.5 | [80.4, 89.3] | BC | 10.0 | [8.9, 11.3] | CD |

| Fish | Rotation Plane | Trial Time: M (s) | 95% CI (s) | Group |
|---|---|---|---|---|
| 1 | Picture | 4.2 | [3.6, 5.0] | A |
| | Depth (y-axis) | 7.2 | [6.1, 8.3] | B |
| | Depth (x-axis) | - | - | - |
| 2 | Picture | 9.4 | [7.2, 12.4] | A |
| | Depth (y-axis) | 11.1 | [8.9, 13.9] | A |
| | Depth (x-axis) | 16.1 | [12.5, 20.7] | B |
| 3 | Picture | 4.0 | [3.5, 4.6] | A |
| | Depth (y-axis) | 4.0 | [3.5, 4.5] | A |
| | Depth (x-axis) | 4.8 | [4.2, 5.5] | B |
| 4 | Picture | 12.4 | [9.9, 15.6] | B |
| | Depth (y-axis) | 9.0 | [7.6, 10.6] | A |
| | Depth (x-axis) | 12.9 | [10.5, 16.0] | B |
| 5 | Picture | 7.8 | [6.6, 9.4] | A |
| | Depth (y-axis) | 9.2 | [7.6, 11.1] | A |
| | Depth (x-axis) | 9.8 | [8.1, 11.7] | A |
| 6 | Picture | 8.5 | [7.2, 10.0] | A |
| | Depth (y-axis) | 10.5 | [8.7, 12.6] | AB |
| | Depth (x-axis) | 11.3 | [9.5, 13.5] | B |
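The trial-time intervals in the table above are also asymmetric, with the upper bound farther from the mean than the lower bound (for example, Fish 2, depth x-axis: 16.1 s with [12.5, 20.7]). That pattern is consistent with intervals computed on a log scale and exponentiated, as happens when response times are modeled with a log link. As with accuracy, this is an inference from the reported numbers, not a description of the authors' model. The Python sketch below compares the geometric midpoint of each interval (the back-transformed centre of a log-symmetric interval) with its arithmetic midpoint for three rows copied from the table; the helper names are hypothetical.

```python
import math


def log_scale_midpoint(lower: float, upper: float) -> float:
    """Back-transformed centre of an interval that is symmetric on the log
    scale, i.e. the geometric mean of its bounds (hypothetical helper)."""
    return math.exp((math.log(lower) + math.log(upper)) / 2)


def arithmetic_midpoint(lower: float, upper: float) -> float:
    """Centre of an interval that is symmetric on the original seconds scale."""
    return (lower + upper) / 2


# Reported trial times as (mean, lower, upper) in seconds, copied from the table.
rows = {
    "Fish 2, depth (x-axis)": (16.1, 12.5, 20.7),
    "Fish 4, picture": (12.4, 9.9, 15.6),
    "Fish 6, depth (x-axis)": (11.3, 9.5, 13.5),
}

for label, (mean, lower, upper) in rows.items():
    print(
        f"{label}: reported {mean}, "
        f"log-scale midpoint {log_scale_midpoint(lower, upper):.1f}, "
        f"arithmetic midpoint {arithmetic_midpoint(lower, upper):.2f}"
    )
```

For these rows the geometric midpoint reproduces the reported mean to one decimal place, while the arithmetic midpoint overshoots it by 0.2 to 0.5 s, which is what a log-scale interval would produce.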

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2022 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Wegman, J.J.; Morrison, E.; Wilcox, K.T.; DeLong, C.M. Visual Perception of Photographs of Rotated 3D Objects in Goldfish (Carassius auratus). Animals 2022, 12(14), 1797. https://doi.org/10.3390/ani12141797