A Touchscreen-Based, Multiple-Choice Approach to Cognitive Enrichment of Captive Rhesus Macaques (Macaca mulatta)

Simple Summary

Abstract

1. Introduction

2. Materials and Methods

2.1. Animals and Housing

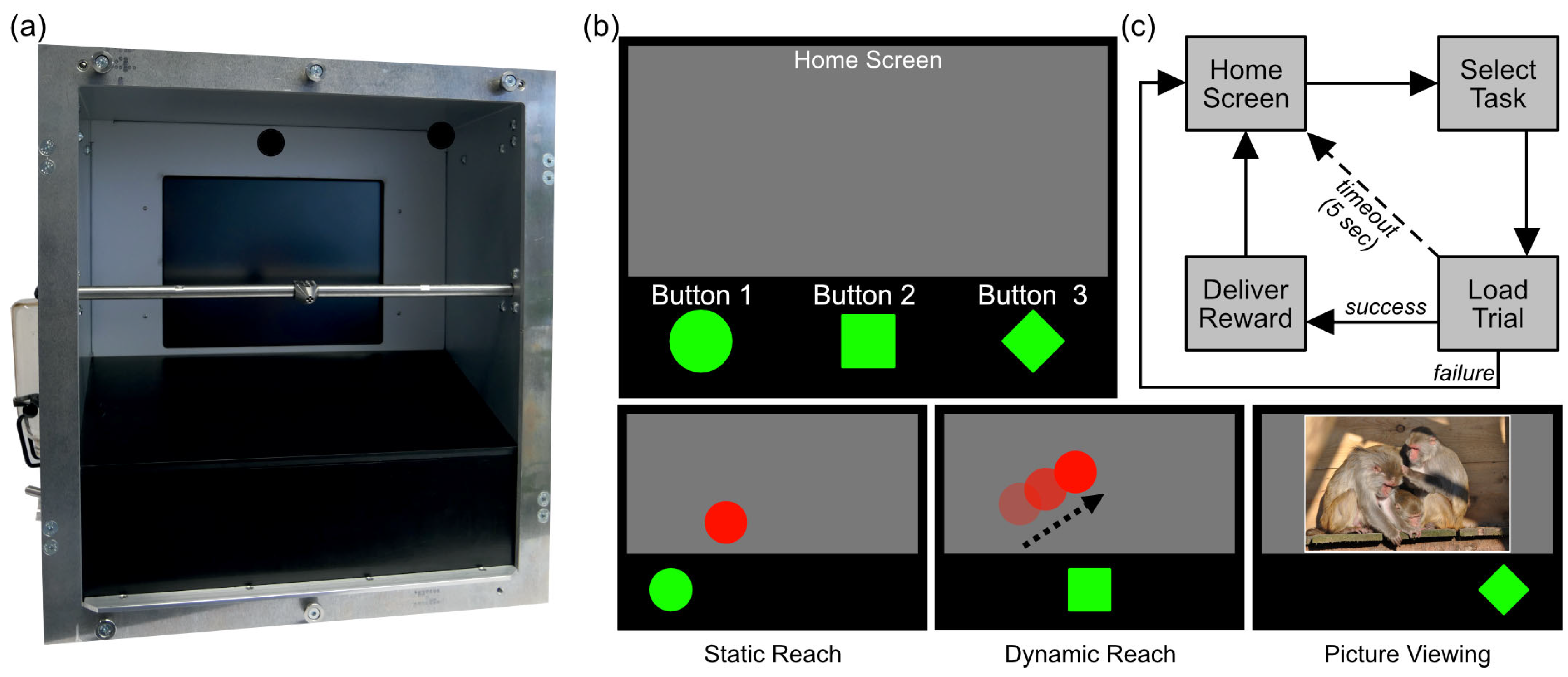

2.2. Apparatus

2.3. Experimental Paradigm

2.4. Experimental Sessions

2.5. Manual Animal Identification for Data Curation

2.6. Data Analysis

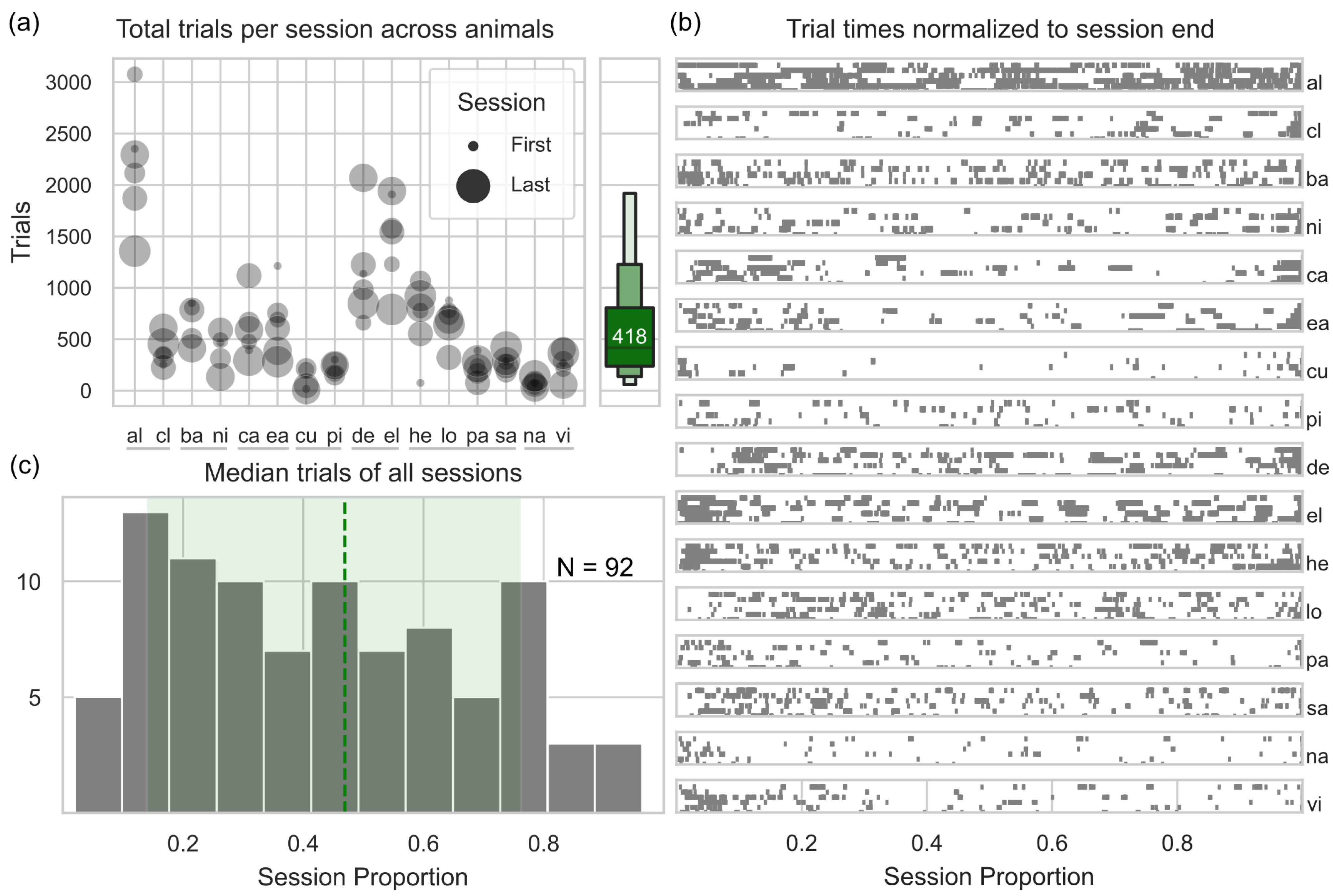

2.6.1. General Engagement Analysis

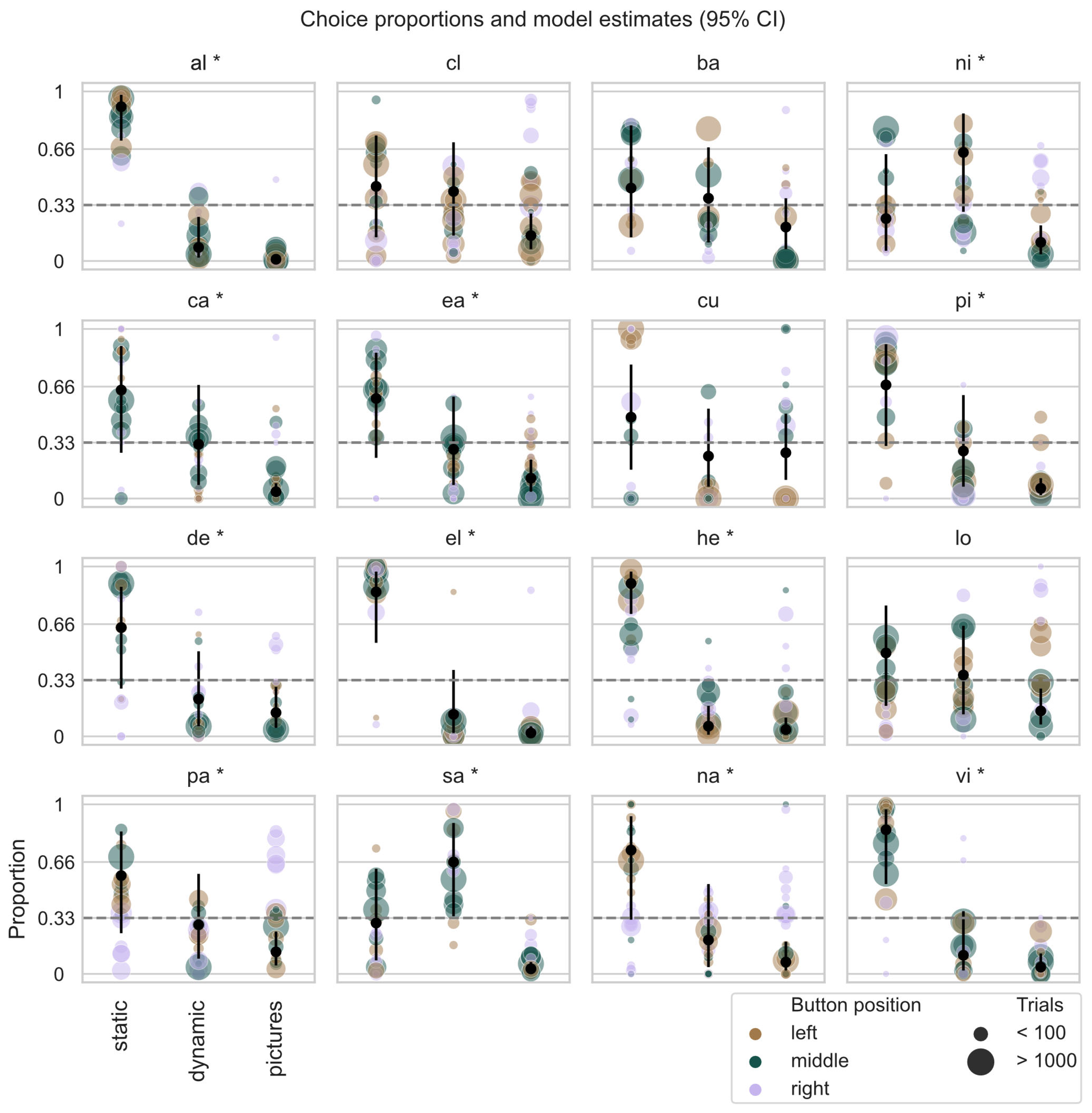

2.6.2. Task Preference Analysis

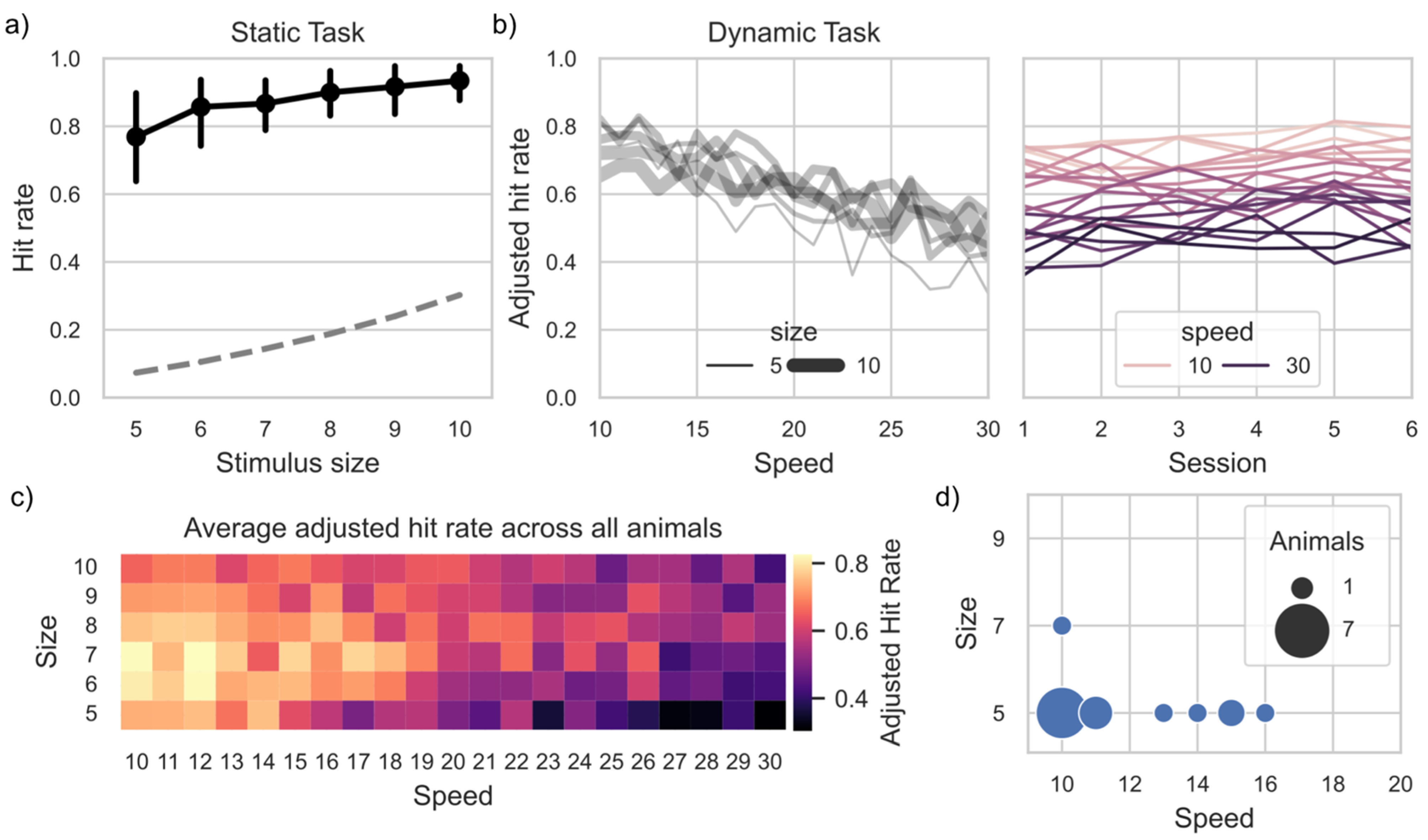

2.6.3. Task Proficiency Analysis

3. Results

3.1. General Engagement

3.2. Task Preference

3.3. Task Proficiency

The first eight columns (Group through Total Trials) fall under General Engagement (Figure 1a); the last five columns fall under Correlation between Speed and Adjusted Hitrate (dynamic task only, Figure 4b).

| Group | Animal | Age | Avg. Trials per Session | Sessions | Pearson Corr. | p-Value (α = 0.003) | Total Trials | Pearson Corr. | Confidence Intervals (95%) | Number of Speed Values | Trials Performed | p-Value (α = 0.003) |
|---|---|---|---|---|---|---|---|---|---|---|---|---|
| 1 | al | 13 | 2206 | 6 | −0.65 | 0.22 | 13,068 | −0.91 | −0.97, −0.8 | 21 | 1622 | >0.001 |
| | cl | 7 | 342 | 6 | 0.60 | 0.27 | 2229 | −0.71 | −0.87, −0.4 | 21 | 1179 | 0.0141 |
| 2 | ba | 17 | 785 | 5 | −0.69 | 0.3 | 3364 | −0.89 | −0.95, −0.74 | 21 | 1025 | 0.0003 |
| | ni | 7 | 468 | 5 | −0.38 | 0.61 | 1997 | −0.53 | −0.78, −0.12 | 21 | 426 | 0.0097 |
| 3 | ca | 12 | 532 | 6 | 0.14 | 0.8 | 3538 | −0.27 | −0.65, 0.22 | 18 | 46 | >0.001 |
| | ea | 11 | 649 | 6 | −0.93 | 0.01 | 3937 | −0.67 | −0.85, −0.33 | 21 | 630 | 0.0085 |
| 4 | cu | 16 | 50 | 5 | −0.20 | 0.77 | 491 | −0.56 | −0.8, −0.17 | 21 | 963 | 0.2771 |
| | pi | 12 | 247 | 5 | −0.32 | 0.69 | 1119 | −0.66 | −0.85, −0.32 | 21 | 379 | 0.5265 |
| 5 | de | 11 | 1059 | 6 | −0.005 | 0.99 | 6922 | −0.62 | −0.83, −0.25 | 21 | 499 | 0.0009 |
| | el | 11 | 1561 | 6 | −0.39 | 0.5 | 8992 | −0.78 | −0.91, −0.52 | 21 | 1169 | 0.0011 |
| 6 | he | 6 | 792 | 6 | 0.5 | 0.39 | 4203 | −0.59 | −0.82, −0.18 | 19 | 76 | 0.0030 |
| | lo | 5 | 717 | 6 | −0.39 | 0.51 | 4057 | −0.55 | −0.79, −0.16 | 21 | 663 | >0.001 |
| | pa | 9 | 228 | 6 | −0.15 | 0.8 | 1380 | −0.56 | −0.8, −0.18 | 21 | 208 | 0.0077 |
| | sa | 9 | 283 | 6 | 0.49 | 0.4 | 1697 | −0.15 | −0.54, 0.3 | 21 | 148 | 0.0001 |
| 7 | na | 6 | 66 | 6 | 0.05 | 0.93 | 436 | −0.74 | −0.89, −0.46 | 21 | 984 | 0.0079 |
| | vi | 9 | 277 | 6 | −0.37 | 0.53 | 1588 | −0.13 | −0.54, 0.33 | 20 | 130 | 0.5895 |
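As a reading aid for the table above, the following minimal sketch shows one common way to obtain a 95% confidence interval for a Pearson correlation, the Fisher z-transform. Whether the authors used this method is an assumption; the table does not state how the intervals were computed. The function name `pearson_ci` is hypothetical, and the Bonferroni-style threshold (0.05 divided by the 16 animals listed) is likewise only an assumed reading of the reported α = 0.003.

```python
import math


def pearson_ci(r, n, z_crit=1.959964):
    """Approximate 95% CI for a Pearson r using the Fisher z-transform.

    r: sample correlation, n: number of paired observations (n > 3).
    z_crit: standard normal quantile for a two-sided 95% interval.
    """
    z = math.atanh(r)                 # Fisher z-transform of r
    se = 1.0 / math.sqrt(n - 3)       # standard error on the z scale
    lower = math.tanh(z - z_crit * se)  # back-transform the bounds
    upper = math.tanh(z + z_crit * se)
    return lower, upper


# Values taken from the table row for animal "cl": r = -0.71, 21 speed values.
lower, upper = pearson_ci(-0.71, 21)
print(round(lower, 2), round(upper, 2))

# Assumption: alpha = 0.003 may reflect 0.05 corrected for 16 animals.
alpha_corrected = 0.05 / 16
print(round(alpha_corrected, 3))
```

For the row used here, the sketch gives bounds that round to the tabulated −0.87 and −0.4, which is consistent with, but does not prove, a Fisher-type interval.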

4. Discussion

4.1. Engagement and Sustainability of Interaction

4.2. Task Preference and Individual Differences

4.3. Proficiency and Mastery Development

4.4. Practical Implications and Future Directions

Supplementary Materials

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Acknowledgments

Conflicts of Interest

© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Calapai, A.; Pfefferle, D.; Cassidy, L.C.; Nazari, A.; Yurt, P.; Brockhausen, R.R.; Treue, S. A Touchscreen-Based, Multiple-Choice Approach to Cognitive Enrichment of Captive Rhesus Macaques (Macaca mulatta). Animals 2023, 13, 2702. https://doi.org/10.3390/ani13172702