Unsupervised Domain Adaptation for Mitigating Sensor Variability and Interspecies Heterogeneity in Animal Activity Recognition

Simple Summary

Abstract

1. Introduction

- Unsupervised domain adaptation (UDA) techniques can improve animal activity recognition (AAR) performance by mitigating data heterogeneity that arises from differences in individual behavior and sensor placement.

- UDA techniques apply across mammal species (here, dogs and horses), which demonstrates their versatility in AAR.

- We present empirical evidence that the effect of divergence-minimization-based (DAN), adversarial-based (DANN), and reconstruction-based (DRCN) UDA varies with the domain under consideration.
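Divergence-based UDA such as DAN (Long et al., 2015) adds a penalty on the discrepancy between source and target feature distributions, measured with the maximum mean discrepancy (MMD). The sketch below computes the biased squared-MMD estimate with a single Gaussian kernel on plain lists of feature vectors. It is an illustration under stated assumptions: DAN itself uses a multi-kernel MMD applied to several layers, and the bandwidth `sigma` and the example features are hypothetical, not values from this study.

```python
import math
from typing import List, Sequence

Vector = Sequence[float]


def gaussian_kernel(x: Vector, y: Vector, sigma: float = 1.0) -> float:
    """Gaussian (RBF) kernel k(x, y) = exp(-||x - y||^2 / (2 * sigma^2))."""
    sq_dist = sum((a - b) ** 2 for a, b in zip(x, y))
    return math.exp(-sq_dist / (2.0 * sigma ** 2))


def mean_kernel(xs: List[Vector], ys: List[Vector], sigma: float) -> float:
    """Average kernel value over all pairs (x, y) with x in xs and y in ys."""
    total = sum(gaussian_kernel(x, y, sigma) for x in xs for y in ys)
    return total / (len(xs) * len(ys))


def mmd2_biased(source: List[Vector], target: List[Vector], sigma: float = 1.0) -> float:
    """Biased estimate of squared MMD between source and target feature samples:
    MMD^2 = E[k(s, s')] + E[k(t, t')] - 2 * E[k(s, t)]."""
    return (mean_kernel(source, source, sigma)
            + mean_kernel(target, target, sigma)
            - 2.0 * mean_kernel(source, target, sigma))


if __name__ == "__main__":
    # Hypothetical 2-D latent features (not data from this study):
    # back-mounted windows as source, neck-mounted windows as target.
    back = [[0.0, 0.1], [0.2, -0.1], [0.1, 0.0]]
    neck_similar = [[0.1, 0.0], [0.0, 0.1], [0.2, 0.1]]
    neck_shifted = [[2.0, 2.1], [2.2, 1.9], [1.9, 2.0]]
    print(mmd2_biased(back, neck_similar))  # close to 0
    print(mmd2_biased(back, neck_shifted))  # clearly larger
```

In a DAN-style setup, this quantity (or its multi-kernel version) is added with a weighting factor to the source classification loss, so that training pulls the two feature distributions together.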

2. Materials and Methods

2.1. Datasets and Preprocessing

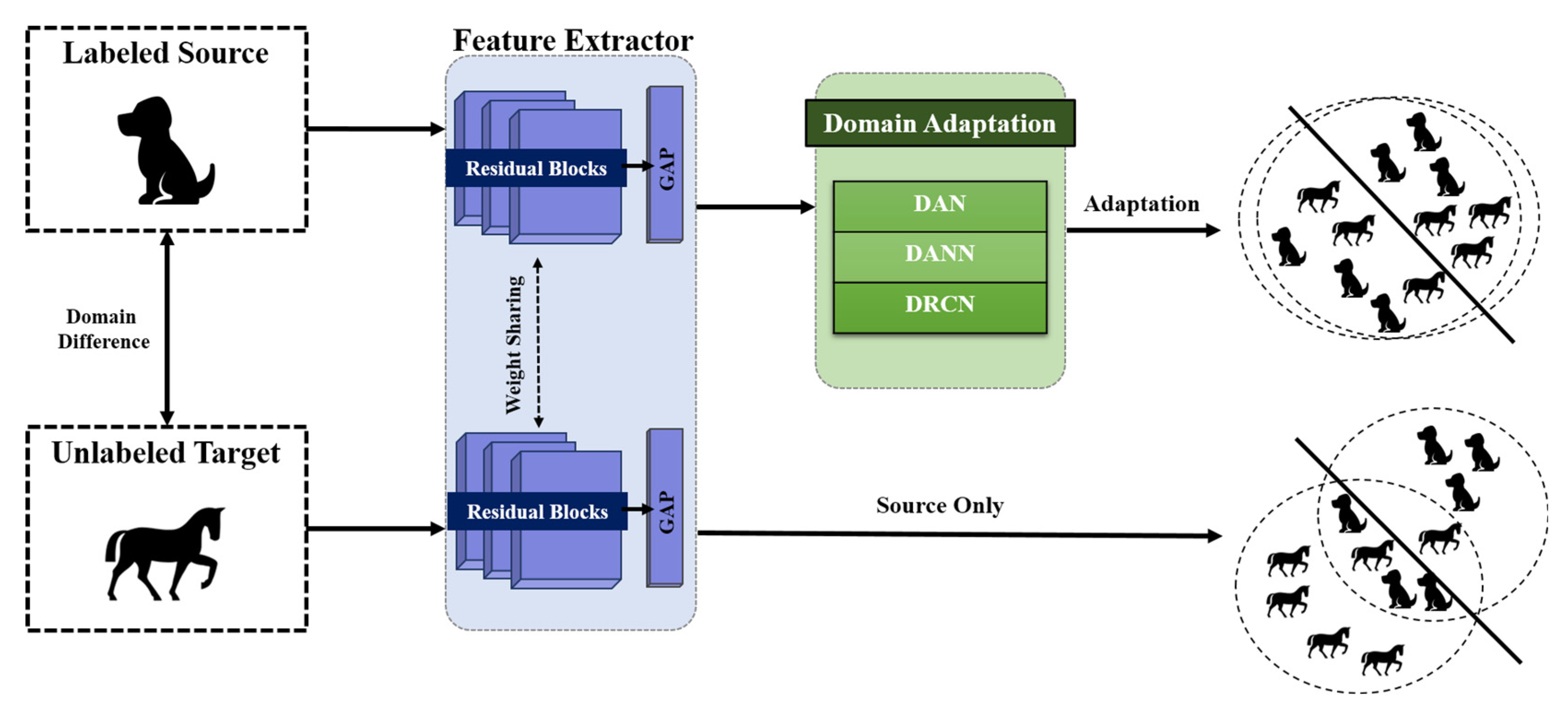

2.2. Proposed Framework

2.2.1. Overview

2.2.2. Unsupervised Domain Adaptation
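Adversarial-based UDA such as DANN (Ganin et al., 2016) attaches a domain classifier to the shared feature extractor through a gradient reversal layer (GRL). The GRL is the identity in the forward pass and multiplies the incoming gradient by -λ in the backward pass, so the feature extractor is pushed to confuse the domain classifier. The dependency-free sketch below shows the GRL as a forward/backward pair together with the λ schedule proposed in the DANN paper, λ_p = 2 / (1 + exp(-γp)) - 1 with γ = 10 and p the fraction of training completed. Using this schedule here is an assumption for illustration, and the helper names are hypothetical.

```python
import math
from typing import List


def grl_forward(features: List[float]) -> List[float]:
    """Gradient reversal layer, forward pass: features pass through unchanged."""
    return list(features)


def grl_backward(upstream_grad: List[float], lam: float) -> List[float]:
    """Gradient reversal layer, backward pass: the gradient coming from the
    domain classifier is multiplied by -lam before reaching the feature extractor."""
    return [-lam * g for g in upstream_grad]


def dann_lambda(progress: float, gamma: float = 10.0) -> float:
    """Schedule 2 / (1 + exp(-gamma * p)) - 1 for training progress p in [0, 1].
    Starts at 0 (the domain classifier is still noisy) and rises towards 1."""
    return 2.0 / (1.0 + math.exp(-gamma * progress)) - 1.0


def feature_extractor_grad(label_grad: List[float], domain_grad: List[float],
                           lam: float) -> List[float]:
    """Total gradient w.r.t. the shared features: the label-loss gradient plus the
    reversed domain-loss gradient, i.e. dL_y/df - lam * dL_d/df."""
    reversed_domain = grl_backward(domain_grad, lam)
    return [gy + gd for gy, gd in zip(label_grad, reversed_domain)]


if __name__ == "__main__":
    for p in (0.0, 0.1, 0.5, 1.0):
        print(f"progress={p:.1f} lambda={dann_lambda(p):.4f}")
```

Only the sign flip distinguishes this from ordinary multi-task training; in a deep-learning framework, the same behavior is usually written as a custom autograd function.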

2.2.3. Residual Neural Network (ResNet)
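A residual block (He et al., 2016) learns a correction F(x) that is added back to its input, y = ReLU(F(x) + x), which eases the optimization of deep networks. The time-series ResNet baseline of Wang et al. (2017) stacks such blocks of 1-D convolutions. The pure-Python sketch below shows one single-channel block with two zero-padded ("same") 1-D convolutions and an identity shortcut. The single channel, the kernel values, and the omission of batch normalization are simplifications for illustration, not the configuration used in this study.

```python
from typing import List


def conv1d_same(x: List[float], kernel: List[float]) -> List[float]:
    """1-D cross-correlation with zero 'same' padding (output length == input length)."""
    k = len(kernel)
    left = (k - 1) // 2
    padded = [0.0] * left + list(x) + [0.0] * (k - 1 - left)
    return [sum(padded[i + j] * kernel[j] for j in range(k)) for i in range(len(x))]


def relu(x: List[float]) -> List[float]:
    """Element-wise max(0, v)."""
    return [max(0.0, v) for v in x]


def residual_block(x: List[float], k1: List[float], k2: List[float]) -> List[float]:
    """y = ReLU(F(x) + x), where F = conv(k1) -> ReLU -> conv(k2) and the
    shortcut is the identity (input and output have the same length)."""
    f = conv1d_same(relu(conv1d_same(x, k1)), k2)
    return relu([fi + xi for fi, xi in zip(f, x)])


if __name__ == "__main__":
    signal = [1.0, -2.0, 3.0]  # hypothetical accelerometer snippet
    identity = [0.0, 1.0, 0.0]
    print(residual_block(signal, identity, identity))
```

With all-zero kernels the block reduces to ReLU(x), which illustrates why residual blocks can fall back to passing their input through when extra depth does not help.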

2.3. Experimental Setting

3. Results

3.1. Classification Results

3.2. Latent Space Analysis

4. Discussion

5. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Acknowledgments

Conflicts of Interest

Appendix A

Within-Domain Classification

| Domain | Sensor Position | Source | Accuracy | Precision | Recall | F1 Score |
|---|---|---|---|---|---|---|
| Sensors | Back | – | 0.9794 | 0.9670 | 0.9663 | 0.9666 |
| Sensors | Neck | – | 0.9272 | 0.8767 | 0.8709 | 0.8734 |
| Size | Back | Middle-sized | 0.9778 | 0.9653 | 0.9671 | 0.9661 |
| Size | Back | Large-sized | 0.9785 | 0.9613 | 0.9573 | 0.9592 |
| Size | Neck | Middle-sized | 0.9346 | 0.9031 | 0.9058 | 0.9037 |
| Size | Neck | Large-sized | 0.9537 | 0.8644 | 0.8304 | 0.8374 |
| Gender | Back | Male | 0.9787 | 0.9678 | 0.9688 | 0.9677 |
| Gender | Back | Female | 0.9834 | 0.9731 | 0.9702 | 0.9716 |
| Gender | Neck | Male | 0.9459 | 0.9165 | 0.9125 | 0.9140 |
| Gender | Neck | Female | 0.9452 | 0.8979 | 0.8967 | 0.8972 |
| Species | Neck | Dog | 0.9951 | 0.9960 | 0.9918 | 0.9939 |
| Species | Neck | Horse | 0.9982 | 0.9950 | 0.9950 | 0.9950 |
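The within-domain table above reports accuracy, precision, recall, and F1 score. Assuming these are macro-averaged over the activity classes (the averaging scheme is an assumption, not stated in this section), they can be derived from a confusion matrix as in the sketch below, where `confusion[i][j]` counts windows of true class i predicted as class j. Macro F1 is taken as the unweighted mean of per-class F1 scores; the example matrix is hypothetical.

```python
from typing import Dict, List


def macro_metrics(confusion: List[List[int]]) -> Dict[str, float]:
    """Accuracy plus macro-averaged precision, recall and F1 from a square
    confusion matrix (rows = true classes, columns = predicted classes).
    Classes with a zero denominator contribute 0 to the average."""
    n = len(confusion)
    total = sum(sum(row) for row in confusion)
    correct = sum(confusion[i][i] for i in range(n))
    precisions, recalls, f1s = [], [], []
    for c in range(n):
        tp = confusion[c][c]
        predicted = sum(confusion[r][c] for r in range(n))  # column sum
        actual = sum(confusion[c])                           # row sum
        p = tp / predicted if predicted else 0.0
        r = tp / actual if actual else 0.0
        f1 = 2 * p * r / (p + r) if (p + r) else 0.0
        precisions.append(p)
        recalls.append(r)
        f1s.append(f1)
    return {
        "accuracy": correct / total,
        "precision": sum(precisions) / n,
        "recall": sum(recalls) / n,
        "f1": sum(f1s) / n,
    }


if __name__ == "__main__":
    # Hypothetical 3-class confusion matrix.
    print(macro_metrics([[8, 2, 0], [1, 9, 0], [0, 0, 10]]))
```

Macro averaging weights every activity equally, which matters here because the activity classes are imbalanced (for example, far more sniffing than standing samples).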


| Category | Item | Size: Middle-Sized | Size: Large-Sized | Gender: Male | Gender: Female | Total |
|---|---|---|---|---|---|---|
| Demographics of Dogs | Number of Dogs (Male) | 23 (10) | 22 (12) | 22 | 23 | 45 (22) |
| | Age (Months) | 61.3 | 54.6 | 55.64 | 60.35 | 58 |
| | Weight (kg) | 18.7 | 30.0 | 25 | 23.48 | 24.0 |
| Number of Samples | Lying on chest | 2327 | 1023 | 1418 | 1932 | 3350 |
| | Sitting | 2273 | 255 | 1426 | 1102 | 2528 |
| | Standing | 1443 | 638 | 1245 | 836 | 2081 |
| | Walking | 2953 | 1931 | 2316 | 2568 | 4884 |
| | Trotting | 3651 | 1737 | 2534 | 2854 | 5388 |
| | Sniffing | 4926 | 3483 | 4190 | 4219 | 8409 |
| | Total | 17,573 | 9067 | 13,129 | 13,511 | 26,640 |

| Adapted Domain | Sensor Position | S→T | Source Only Accuracy | Source Only F1 Score | DAN Accuracy | DAN F1 Score | DANN Accuracy | DANN F1 Score | DRCN Accuracy | DRCN F1 Score |
|---|---|---|---|---|---|---|---|---|---|---|
| Sensors | – | B→N | 0.6088 | 0.4585 | 0.7750 | 0.6295 | 0.6961 | 0.5420 | 0.6947 | 0.5269 |
| Sensors | – | N→B | 0.6574 | 0.4644 | 0.8015 | 0.6955 | 0.7640 | 0.6353 | 0.7616 | 0.6562 |
| Size | Back | MS→LS | 0.8771 | 0.7251 | 0.8532 | 0.7364 | 0.8788 | 0.7559 | 0.8890 | 0.7826 |
| Size | Back | LS→MS | 0.8392 | 0.7874 | 0.8032 | 0.7267 | 0.8433 | 0.7891 | 0.8387 | 0.7800 |
| Size | Neck | MS→LS | 0.8496 | 0.6435 | 0.8352 | 0.6487 | 0.8476 | 0.6476 | 0.8592 | 0.6741 |
| Size | Neck | LS→MS | 0.7655 | 0.6465 | 0.7621 | 0.6291 | 0.7627 | 0.6244 | 0.7531 | 0.6027 |
| Gender | Back | M→F | 0.8866 | 0.8122 | 0.9269 | 0.8805 | 0.9297 | 0.8887 | 0.9274 | 0.8845 |
| Gender | Back | F→M | 0.8407 | 0.7547 | 0.8832 | 0.8271 | 0.9007 | 0.8570 | 0.8881 | 0.8376 |
| Gender | Neck | M→F | 0.7968 | 0.6419 | 0.8073 | 0.6623 | 0.8015 | 0.6438 | 0.8015 | 0.6505 |
| Gender | Neck | F→M | 0.7705 | 0.6219 | 0.8174 | 0.7012 | 0.8282 | 0.7190 | 0.8104 | 0.6828 |
| Species | Neck | D→H | 0.6386 | 0.5033 | 0.7339 | 0.6234 | 0.7371 | 0.6578 | 0.6854 | 0.6467 |
| Species | Neck | H→D | 0.7915 | 0.7613 | 0.7797 | 0.7951 | 0.8600 | 0.8558 | 0.8338 | 0.8260 |

S→T: source → target domain; B: back; N: neck; MS: middle-sized; LS: large-sized; M: male; F: female; D: dog; H: horse.
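To read the cross-domain results table at a glance, the short sketch below encodes its target-domain F1 scores and reports, for each source→target task, the best-performing UDA method and its F1 gain over the source-only baseline. The numbers are copied from that table; the dictionary keys and helper name are illustrative.

```python
from typing import Dict, Tuple

# Target-domain F1 scores copied from the cross-domain results table.
F1: Dict[str, Dict[str, float]] = {
    "Sensors B->N":      {"Source Only": 0.4585, "DAN": 0.6295, "DANN": 0.5420, "DRCN": 0.5269},
    "Sensors N->B":      {"Source Only": 0.4644, "DAN": 0.6955, "DANN": 0.6353, "DRCN": 0.6562},
    "Size/Back MS->LS":  {"Source Only": 0.7251, "DAN": 0.7364, "DANN": 0.7559, "DRCN": 0.7826},
    "Size/Back LS->MS":  {"Source Only": 0.7874, "DAN": 0.7267, "DANN": 0.7891, "DRCN": 0.7800},
    "Size/Neck MS->LS":  {"Source Only": 0.6435, "DAN": 0.6487, "DANN": 0.6476, "DRCN": 0.6741},
    "Size/Neck LS->MS":  {"Source Only": 0.6465, "DAN": 0.6291, "DANN": 0.6244, "DRCN": 0.6027},
    "Gender/Back M->F":  {"Source Only": 0.8122, "DAN": 0.8805, "DANN": 0.8887, "DRCN": 0.8845},
    "Gender/Back F->M":  {"Source Only": 0.7547, "DAN": 0.8271, "DANN": 0.8570, "DRCN": 0.8376},
    "Gender/Neck M->F":  {"Source Only": 0.6419, "DAN": 0.6623, "DANN": 0.6438, "DRCN": 0.6505},
    "Gender/Neck F->M":  {"Source Only": 0.6219, "DAN": 0.7012, "DANN": 0.7190, "DRCN": 0.6828},
    "Species/Neck D->H": {"Source Only": 0.5033, "DAN": 0.6234, "DANN": 0.6578, "DRCN": 0.6467},
    "Species/Neck H->D": {"Source Only": 0.7613, "DAN": 0.7951, "DANN": 0.8558, "DRCN": 0.8260},
}


def best_uda(scores: Dict[str, float]) -> Tuple[str, float]:
    """Return (method, F1 gain over 'Source Only') for the best UDA method on one task.
    A negative gain means no UDA method beat the source-only baseline."""
    uda = {m: s for m, s in scores.items() if m != "Source Only"}
    method = max(uda, key=uda.get)
    return method, uda[method] - scores["Source Only"]


if __name__ == "__main__":
    for task, scores in F1.items():
        method, gain = best_uda(scores)
        print(f"{task:18s} best={method:5s} gain={gain:+.4f}")
```

On these values, DAN leads on both sensor-position tasks, DANN on five of the six gender and species tasks, and DRCN on both middle-to-large-size tasks. No method improves on the source-only F1 for the neck-mounted large-to-middle-size transfer, which is consistent with the observation that the benefit of each UDA family depends on the domain.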

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Ahn, S.-H.; Kim, S.; Jeong, D.-H. Unsupervised Domain Adaptation for Mitigating Sensor Variability and Interspecies Heterogeneity in Animal Activity Recognition. Animals 2023, 13, 3276. https://doi.org/10.3390/ani13203276