Automated Measurement of Cattle Dimensions Using Improved Keypoint Detection Combined with Unilateral Depth Imaging

Simple Summary

Abstract

1. Introduction

2. Materials and Methods

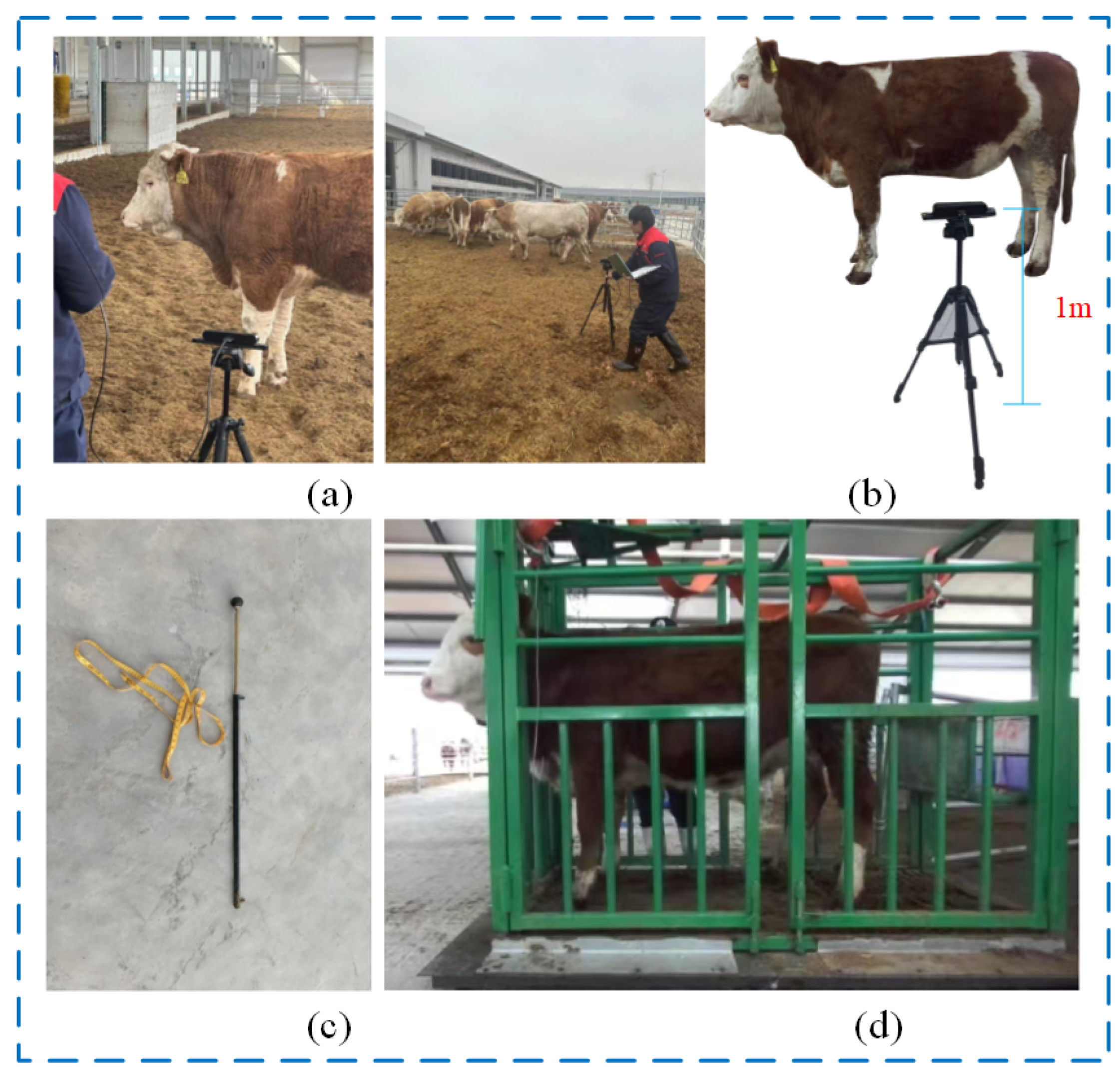

2.1. Data Collection

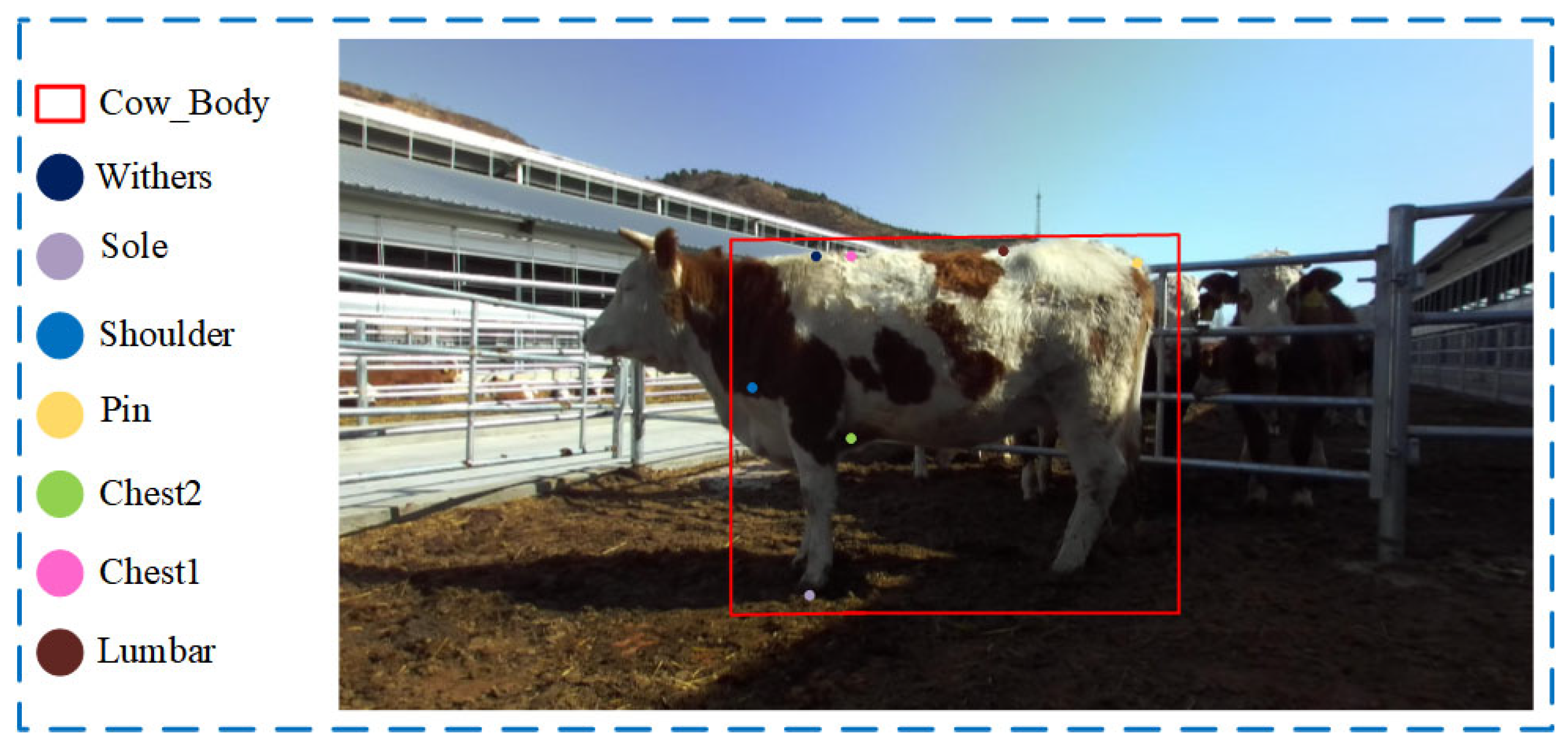

2.2. Dataset Construction

2.3. Technical Workflow

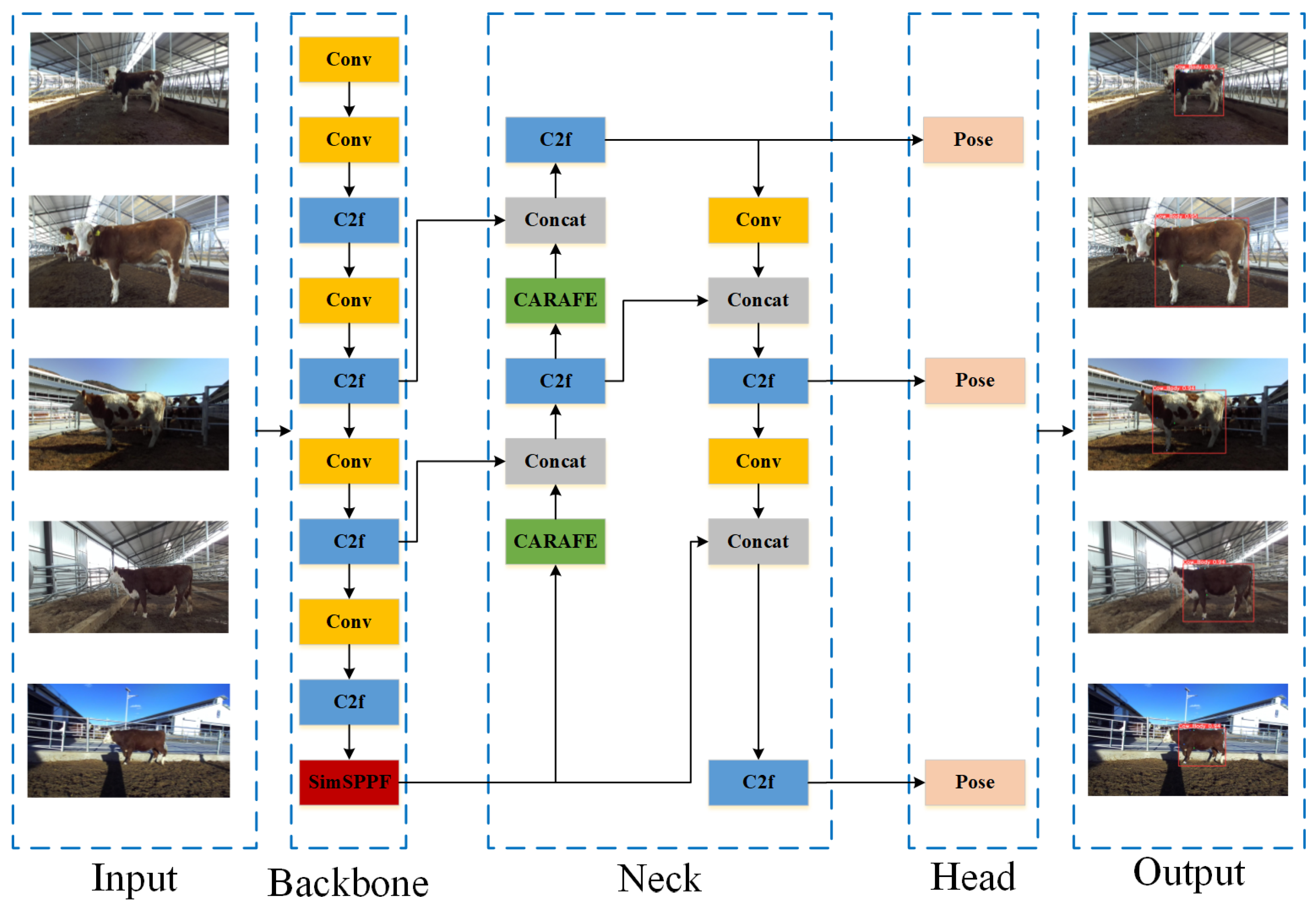

2.4. Improvements to Keypoint Detection Models

2.4.1. Keypoint Detection Based on YOLOv8-Pose

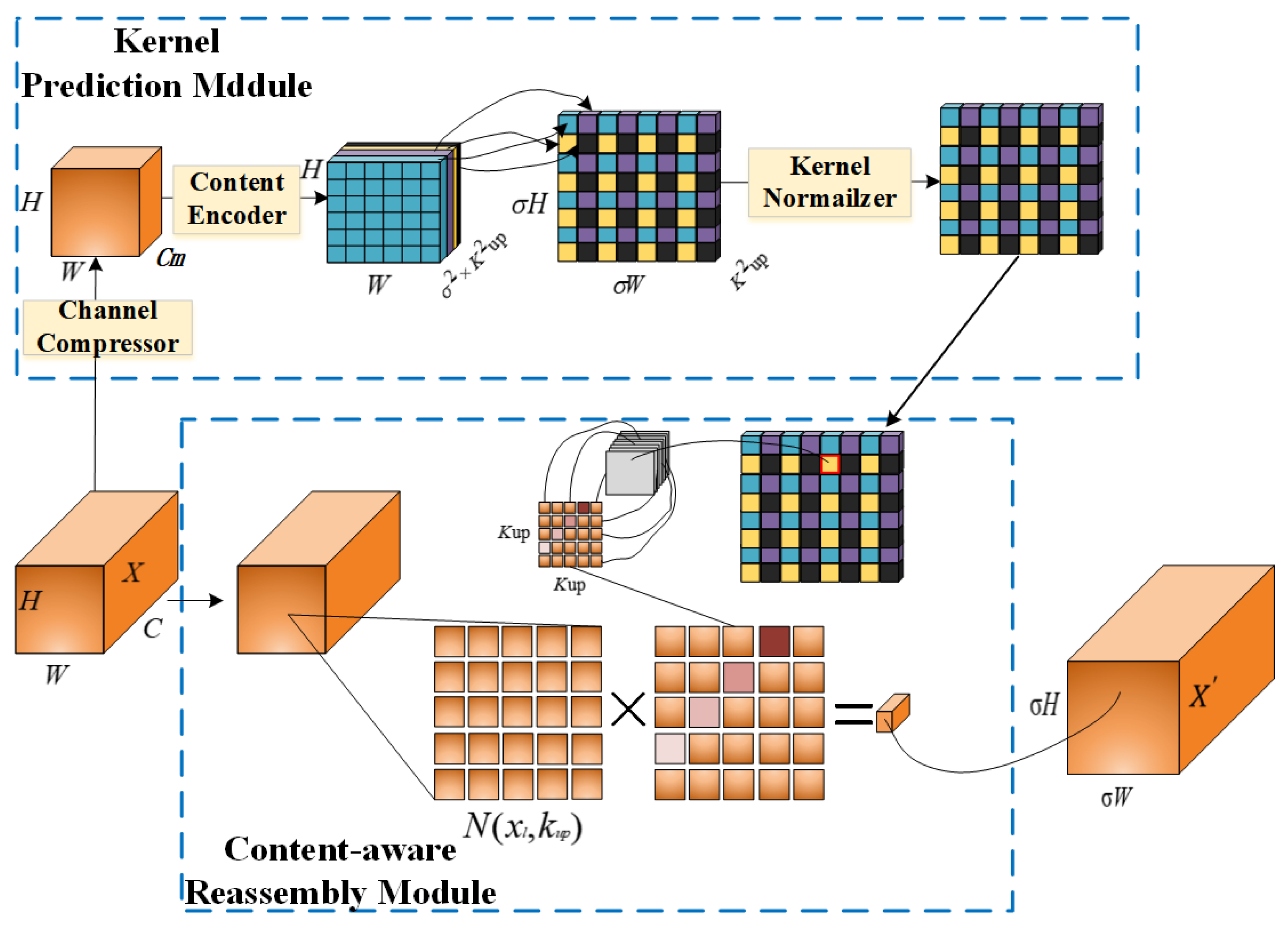

2.4.2. Content-Aware Reassembly of Features: CARAFE

2.4.3. SimSPPF Network

2.4.4. Structure of the Improved YOLOv8-Pose Model

2.5. Methods for Measuring Beef Cattle Body Size

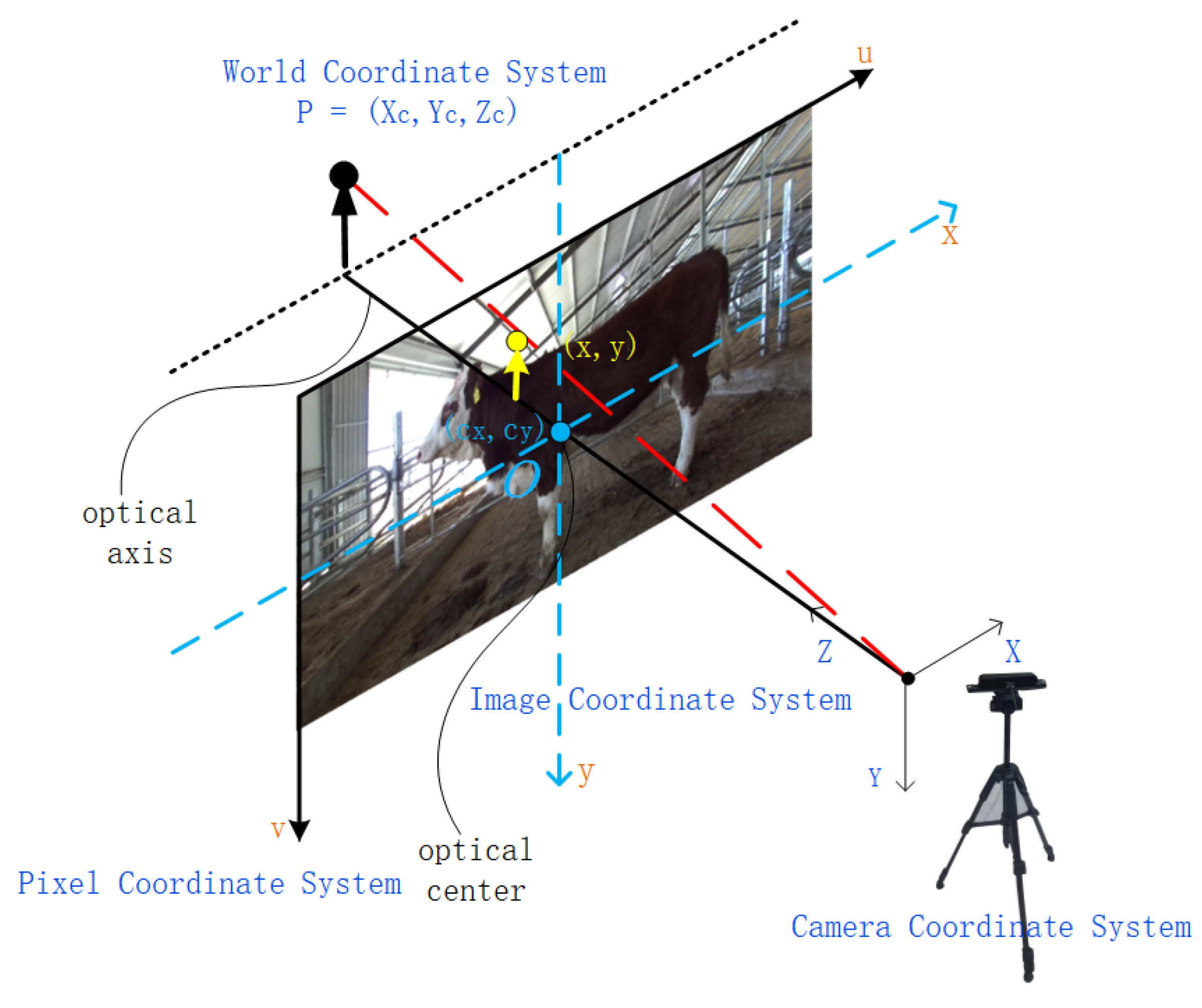

2.5.1. Keypoint Processing of Cattle Body Depth Images

Algorithm 1: Depth Image Processing for Keypoint Localization

Inputs and variables:
- path: input file path
- shoulder: coordinates of the shoulder endpoint
- pin: coordinates of the tuber ischium (pin bone)
- avg_depth: average depth value near the midpoint between shoulder and pin
- max_radius: maximum search radius
- K: camera intrinsic parameter matrix
- coords: stores the converted world coordinates

Procedure:
1. Function LoadDepthImage(path):
   - image ← cv2.imread(path, cv2.IMREAD_UNCHANGED)
   - if image is None then exit(1)
   - else return image[:, :, 0]
2. Function GetAverageDepth(image, shoulder, pin):
   - mid ← (shoulder + pin) / 2
   - return the mean depth over the 3 × 3 grid around mid
3. Function FindNearestValidDepth(image, x, y, avg_depth, max_radius):
   - for dx ∈ [−max_radius, max_radius] and dy ∈ [−max_radius, max_radius]:
     - depth ← image[y + dy][x + dx]
     - if 0 < depth ≤ 7000 and |depth − avg_depth| ≤ 500 then return depth
   - return None (no valid depth within the search window)
4. Function GetWorldCoordinates(image, keypoints, K):
   - coords ← {}
   - for each key, (x, y) ∈ keypoints:
     - depth ← image[y][x]
     - if depth is valid then coords[key] ← depth · K⁻¹ · [x, y, 1]ᵀ
   - return coords
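The steps of Algorithm 1 can be sketched in plain Python as follows. This is a minimal sketch, not the authors' code. It assumes a zero-skew pinhole intrinsic matrix K = [[fx, 0, cx], [0, fy, cy], [0, 0, 1]], so that depth · K⁻¹ · [x, y, 1]ᵀ reduces to the closed form in `back_project`. It also assumes the depth image is given as a list of rows in which 0 marks a missing reading. The 7000 and 500 thresholds come from Algorithm 1. The following choices belong to this sketch: all function names, the ring-by-ring search (so the returned depth really is the nearest valid one), skipping zero pixels in the 3 × 3 mean, and the default `max_radius=5`. Loading with `cv2.imread` is omitted so the code runs with the standard library only. A NumPy depth array can be converted first with `.tolist()`.

```python
from typing import Dict, List, Optional, Sequence, Tuple

# Depth image as rows of pixel values; 0 marks a pixel with no depth reading.
DepthImage = List[List[float]]


def average_depth(depth: DepthImage, shoulder: Tuple[int, int], pin: Tuple[int, int]) -> float:
    """Mean of the non-zero depths in the 3x3 window around the shoulder-pin midpoint."""
    mx = (shoulder[0] + pin[0]) // 2
    my = (shoulder[1] + pin[1]) // 2
    values = [
        depth[y][x]
        for y in range(my - 1, my + 2)
        for x in range(mx - 1, mx + 2)
        if 0 <= y < len(depth) and 0 <= x < len(depth[0]) and depth[y][x] > 0
    ]
    return sum(values) / len(values) if values else 0.0


def find_nearest_valid_depth(
    depth: DepthImage, x: int, y: int, avg_depth: float, max_radius: int,
    max_depth: float = 7000, tolerance: float = 500,
) -> Optional[float]:
    """Scan square rings of growing radius around (x, y) and return the first depth
    that is positive, at most max_depth, and within tolerance of avg_depth."""
    height, width = len(depth), len(depth[0])
    for r in range(max_radius + 1):
        for dy in range(-r, r + 1):
            for dx in range(-r, r + 1):
                if max(abs(dx), abs(dy)) != r:
                    continue  # interior pixels were already checked at a smaller radius
                xx, yy = x + dx, y + dy
                if 0 <= xx < width and 0 <= yy < height:
                    d = depth[yy][xx]
                    if 0 < d <= max_depth and abs(d - avg_depth) <= tolerance:
                        return d
    return None  # no valid depth inside the search window


def back_project(u: float, v: float, z: float,
                 fx: float, fy: float, cx: float, cy: float) -> Tuple[float, float, float]:
    """Pinhole back-projection z * K^-1 [u, v, 1]^T for K = [[fx, 0, cx], [0, fy, cy], [0, 0, 1]]."""
    return ((u - cx) * z / fx, (v - cy) * z / fy, float(z))


def get_world_coordinates(
    depth: DepthImage, keypoints: Dict[str, Tuple[int, int]],
    intrinsics: Sequence[float], avg_depth: float, max_radius: int = 5,
) -> Dict[str, Tuple[float, float, float]]:
    """Convert pixel keypoints to camera-frame 3D points; keypoints without valid depth are skipped."""
    fx, fy, cx, cy = intrinsics
    coords = {}
    for name, (x, y) in keypoints.items():
        z = find_nearest_valid_depth(depth, x, y, avg_depth, max_radius)
        if z is not None:
            coords[name] = back_project(x, y, z, fx, fy, cx, cy)
    return coords
```

Searching ring by ring means the first accepted pixel is the closest one in the Chebyshev sense. A plain double loop over dx and dy, as written in the pseudocode, returns the first valid pixel in scan order instead.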

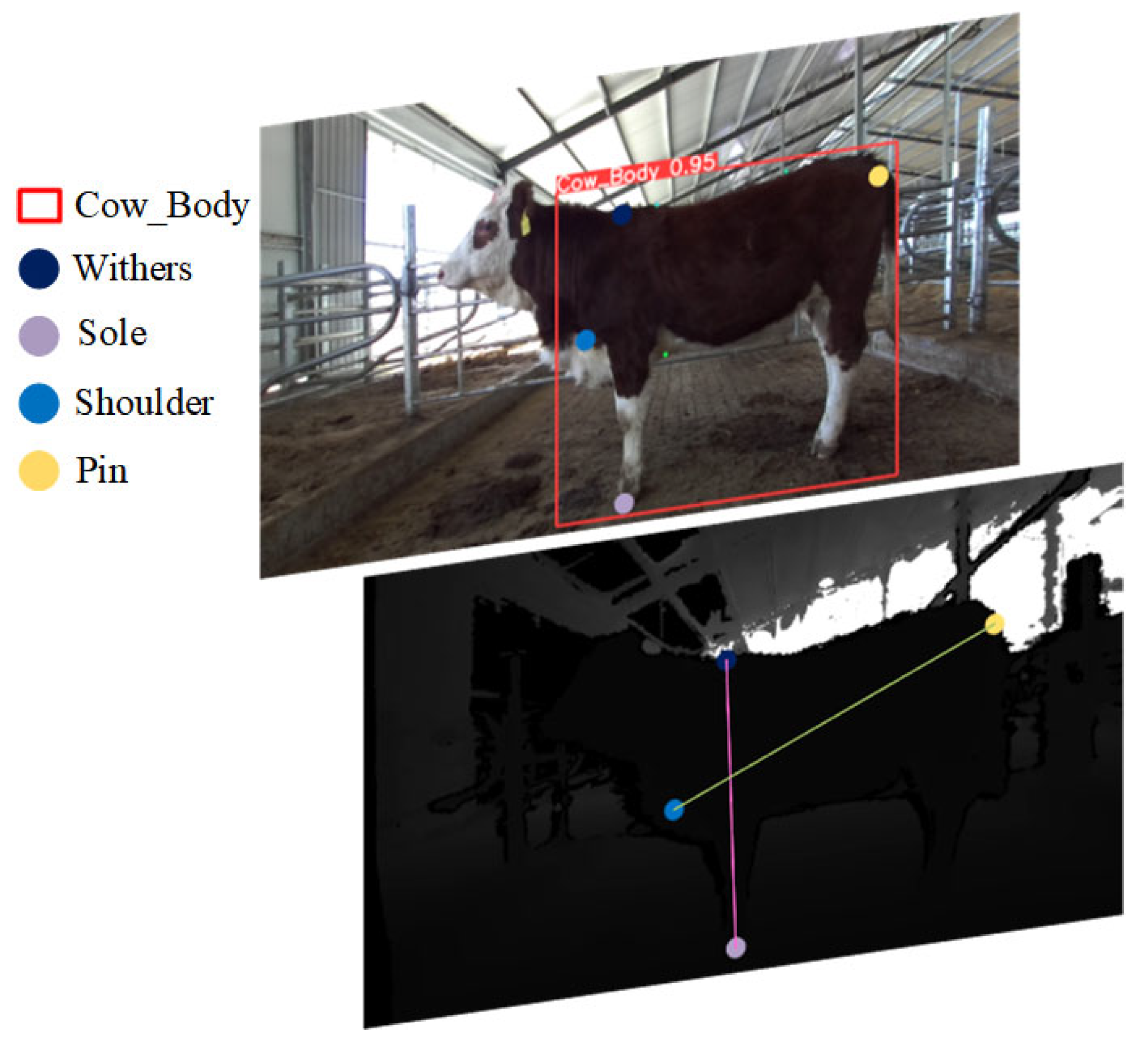

2.5.2. Body Height and Body Length Calculation

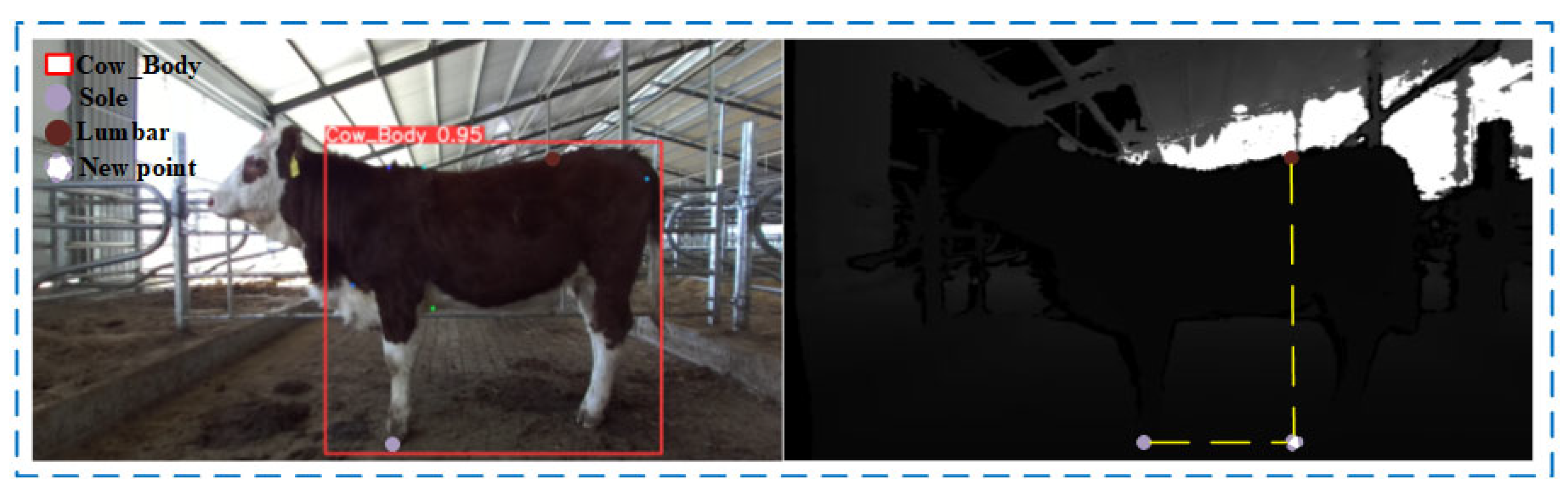

2.5.3. Lumbar Height Calculation

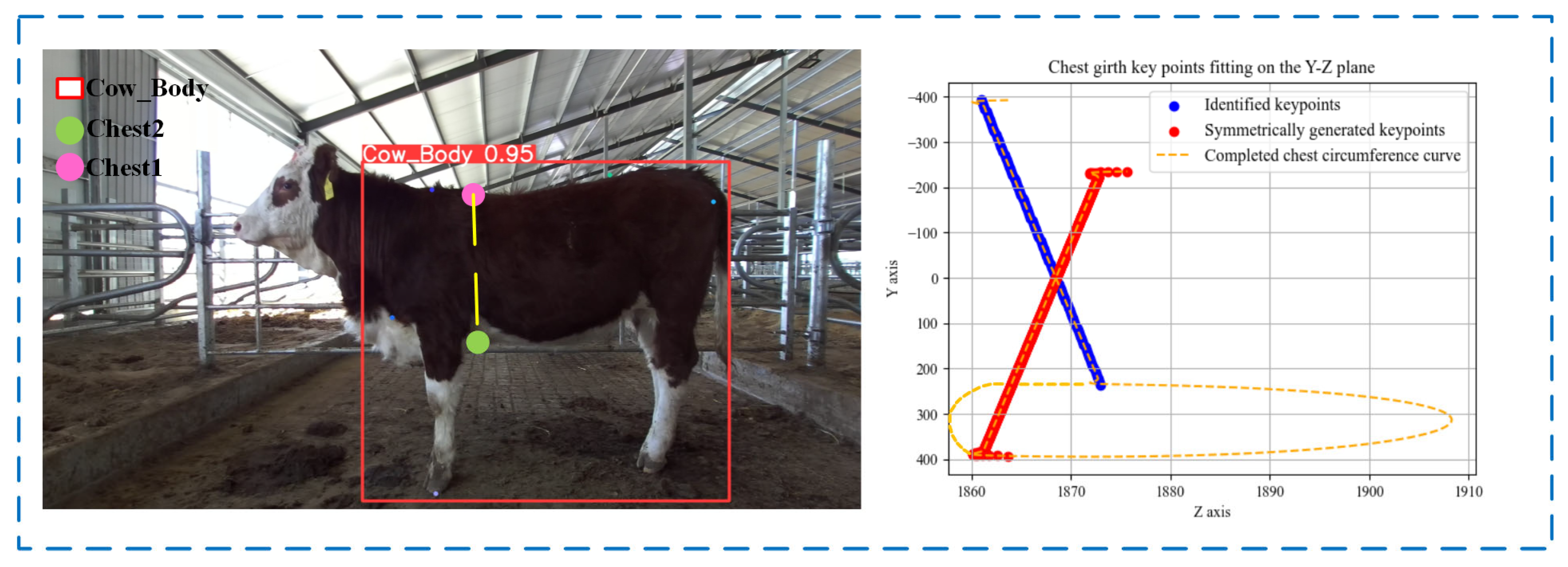

2.5.4. Chest Girth Calculation

Algorithm 2: Calculating Chest Circumference from a Depth Image

Inputs and variables:
- Chest1: coordinates of the rear edge of the withers
- Chest2: coordinates of the vertical point at the chest base
- t: evenly spaced interpolation parameters in [0, 1]
- smooth_factor: smoothing factor
- rbf_x, rbf_y, and rbf_z: radial basis function interpolation objects
- t_new: resampled parameter values
- tck: parameters of the B-spline interpolation (knots, coefficients, degree)
- x_fine, y_fine, and z_fine: interpolated x, y, and z coordinates

Procedure:
1. Function GenerateIntermediatePoints(world_coords, num_points):
   - point1 ← world_coords["Chest1"]
   - point2 ← world_coords["Chest2"]
   - t ← np.linspace(0, 1, num_points)
   - return point1 + t[:, None] · (point2 − point1)
2. Function MlsFitting(points, smooth_factor):
   - x, y, z ← points[:, 0], points[:, 1], points[:, 2]
   - rbf_x ← Rbf(np.arange(len(x)), x, function='multiquadric', smooth=smooth_factor)
   - rbf_y ← Rbf(np.arange(len(y)), y, function='multiquadric', smooth=smooth_factor)
   - rbf_z ← Rbf(np.arange(len(z)), z, function='multiquadric', smooth=smooth_factor)
   - t_new ← np.linspace(0, len(x) − 1, len(x) × 10)
   - return np.vstack((rbf_x(t_new), rbf_y(t_new), rbf_z(t_new))).T
3. Function CompleteEllipse(upper_points):
   - mirrored_points ← np.copy(upper_points)
   - mirrored_points[:, 1] ← −mirrored_points[:, 1]
   - all_points ← np.vstack((upper_points, mirrored_points))
   - (tck, u) ← splprep([all_points[:, 0], all_points[:, 1], all_points[:, 2]], s=0)
   - u_fine ← np.linspace(0, 1, len(all_points) × 10)
   - (x_fine, y_fine, z_fine) ← splev(u_fine, tck)
   - return np.vstack((x_fine, y_fine, z_fine)).T
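The idea behind Algorithm 2 can be illustrated with a minimal, standard-library-only sketch. The visible half of the chest contour is reflected to stand in for the occluded side, and the girth is taken as the perimeter of the resulting closed curve.

To stay dependency-free, this sketch swaps the RBF smoothing (MlsFitting) and the B-spline fit (CompleteEllipse) for a straight polyline through the points. On sparse or noisy points it will therefore differ from the spline-based result. As in CompleteEllipse, it reflects coordinate 1 (y) across y = 0 by default.

Two further choices are assumptions of this sketch, not the authors' method: the reflected points are appended in reverse order so that the two halves join into one loop, and all function names are hypothetical. The result is in the same units as the input coordinates.

```python
import math
from typing import List, Sequence, Tuple

Point3D = Tuple[float, float, float]


def interpolate_points(p1: Sequence[float], p2: Sequence[float], num_points: int) -> List[Point3D]:
    """Evenly spaced points from p1 to p2, like point1 + t[:, None] * (point2 - point1)."""
    if num_points < 2:
        raise ValueError("num_points must be at least 2")
    points = []
    for i in range(num_points):
        t = i / (num_points - 1)
        points.append(tuple(a + t * (b - a) for a, b in zip(p1, p2)))
    return points


def mirror_and_close(upper: Sequence[Point3D], axis: int = 1, plane: float = 0.0) -> List[Point3D]:
    """Reflect the visible half-contour across coord[axis] == plane and append the
    reflected points in reverse order, so the two halves form a single loop."""
    mirrored = []
    for p in reversed(upper):
        q = list(p)
        q[axis] = 2.0 * plane - q[axis]  # with plane = 0 this is the negation used in CompleteEllipse
        mirrored.append(tuple(q))
    return list(upper) + mirrored


def closed_perimeter(points: Sequence[Point3D]) -> float:
    """Length of the closed polyline through the points (last point joined to the first)."""
    n = len(points)
    return sum(math.dist(points[i], points[(i + 1) % n]) for i in range(n))


def chest_girth(upper: Sequence[Point3D], axis: int = 1, plane: float = 0.0) -> float:
    """Girth estimate: perimeter of the mirrored, closed chest contour."""
    return closed_perimeter(mirror_and_close(upper, axis, plane))
```

As a sanity check, densely sampling a unit semicircle with y ≥ 0 and passing it to `chest_girth` gives a value very close to 2π. Because an inscribed polyline always slightly underestimates a smooth curve's length, a spline fit such as CompleteEllipse is preferable when only a few contour points are available.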

2.6. Evaluation Indicators

2.6.1. Keypoint Detection Model Evaluation Indicators

2.6.2. Body Size Measurement Evaluation Indicators

3. Results and Analysis

3.1. Results of the Improved YOLOv8-Pose

3.1.1. Comparison of Keypoint Detection Using Different Algorithms

3.1.2. Model Performance Ablation Experiment

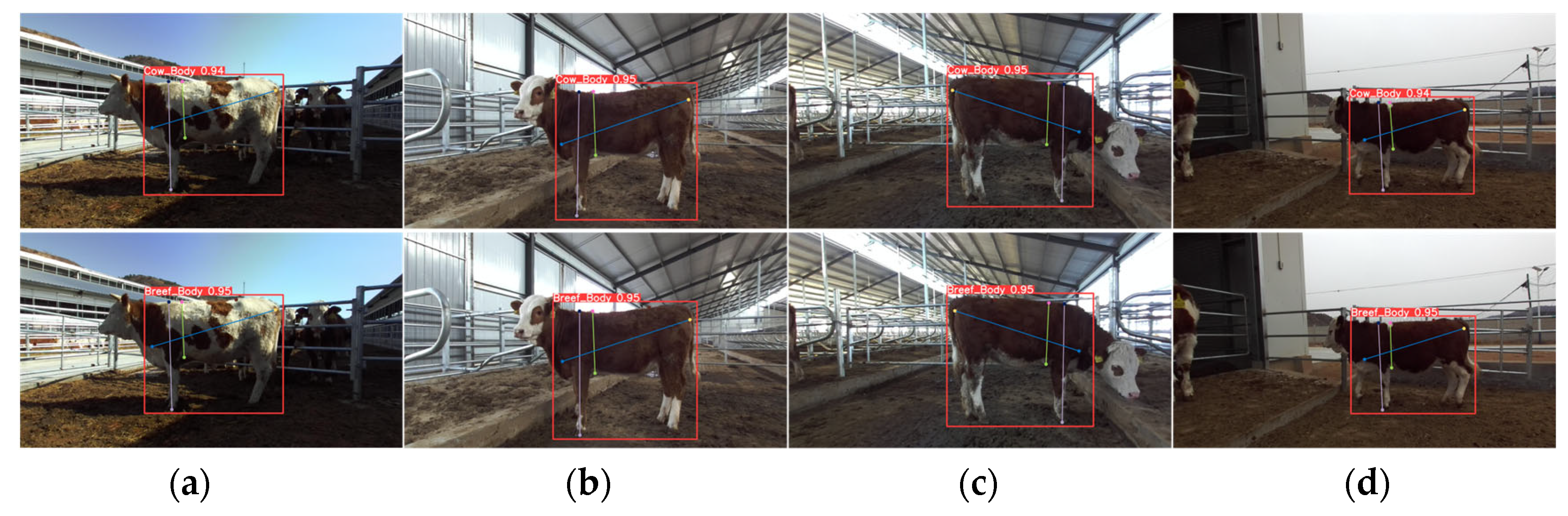

3.1.3. Improved Keypoint Detection Results

3.2. Body Size Measurement Results

3.2.1. Normality Test

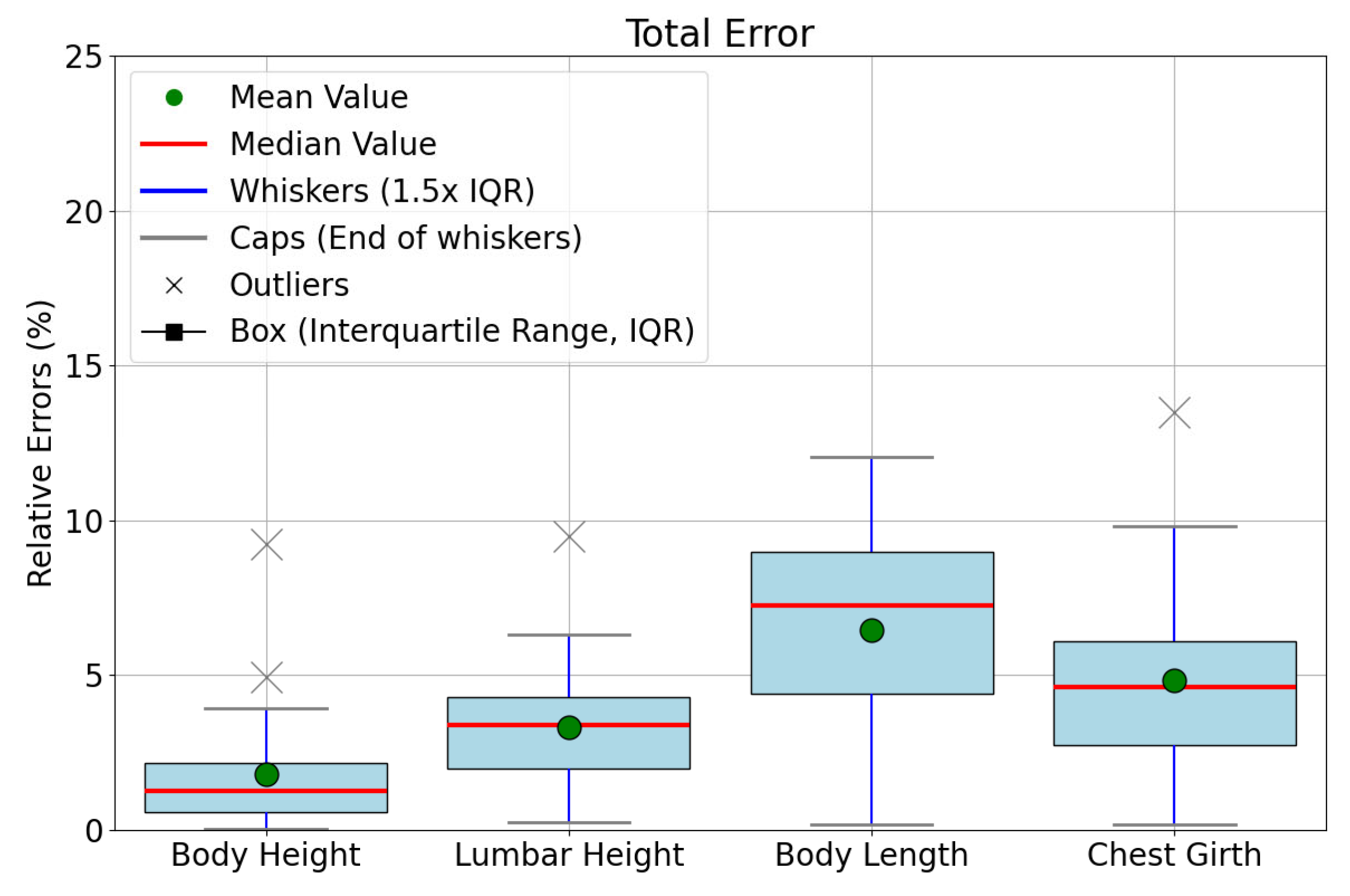

3.2.2. Measurement Results and Analysis

4. Discussion

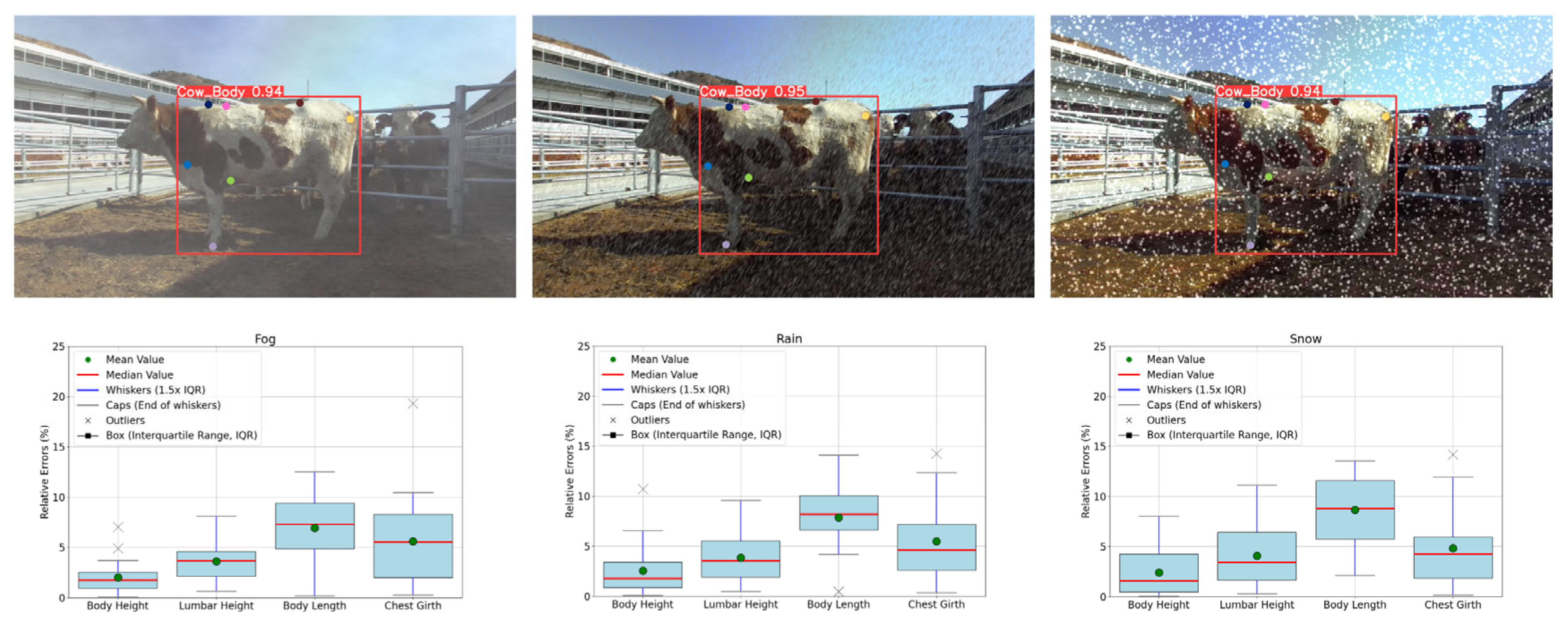

4.1. Effects of Different Types of Noise on the Measurement of Beef Cattle Body Dimensions

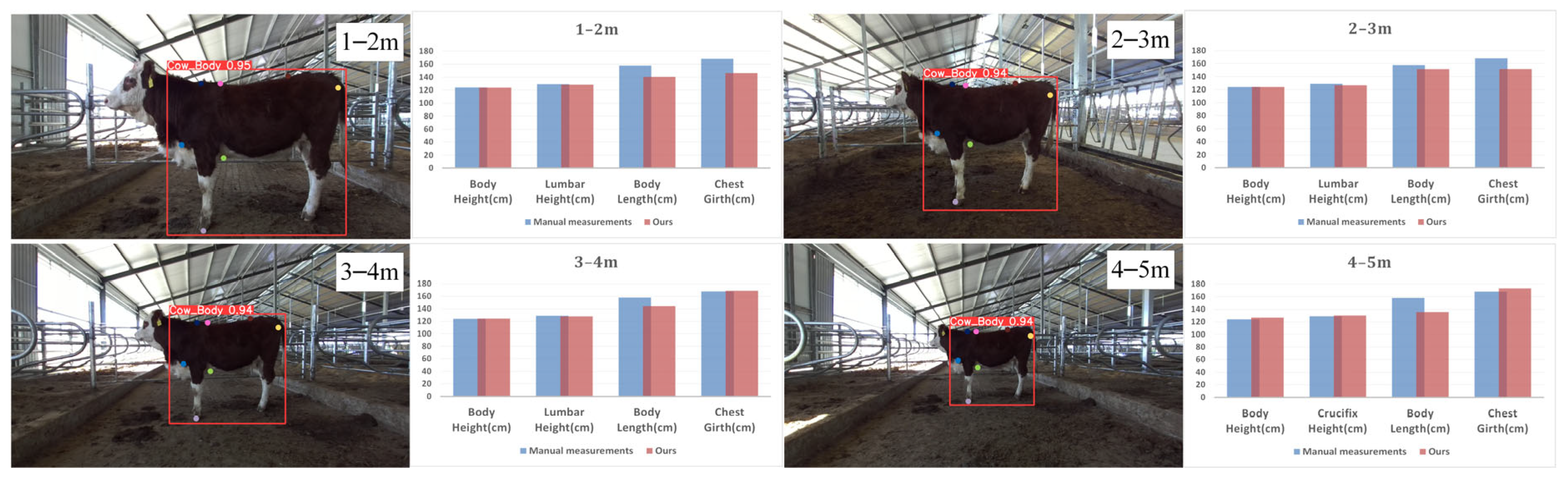

4.2. Effects of Different Distances on the Measurement of Beef Cattle Body Size

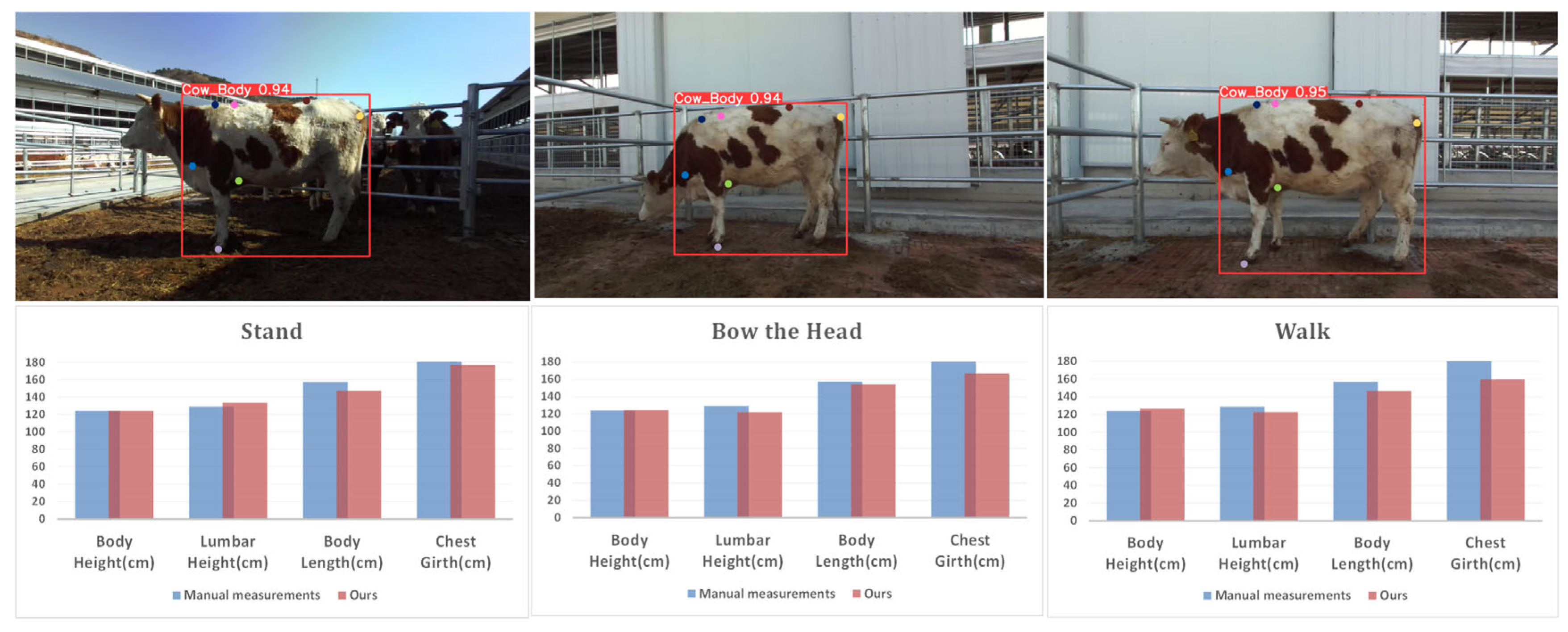

4.3. Effects of Different Postures on the Measurement of Beef Cattle Body Size

4.4. Model Application and Outlook

5. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest


| Model | Parameters (M) | GFLOPs (G) | mAP (%) | Model-Size (MB) |
|---|---|---|---|---|
| YOLOv5-pose | 7.12 | 16.7 | 89.7 | 54.83 |
| YOLOv7-pose | 9.61 | 20.1 | 91.2 | 19.6 |
| RTMPose-t | 3.34 | 0.3 | 91.0 | 50.3 |
| YOLOv8-pose | 3.10 | 8.4 | 92.4 | 6.17 |
| Improved YOLOv8-pose | 3.24 | 8.7 | 94.4 | 6.45 |

| YOLOv8-Pose | CARAFE | SimSPPF | Precision (%) | Recall (%) | mAP (%) | Model-Size (MB) |
|---|---|---|---|---|---|---|
| √ | | | 95.7 | 87 | 92.4 | 6.17 |
| √ | √ | | 96.3 | 86.6 | 93.5 | 6.47 |
| √ | | √ | 93.5 | 88.4 | 93.8 | 6.17 |
| √ | √ | √ | 96.7 | 87.4 | 94.4 | 6.45 |

| Indicator | Body Height | Lumbar Height | Body Length | Chest Girth |
|---|---|---|---|---|
| S-W Test (W) | 0.978 | 0.948 | 0.941 | 0.941 |
| S-W Test (p-value) | 0.885 | 0.275 | 0.196 | 0.197 |
| A-D Test | 0.261 | 0.491 | 0.608 | 0.598 |
| Skewness | 0.064 | 0.661 | 0.720 | 0.560 |
| Kurtosis | −0.163 | 0.273 | 0.032 | −0.403 |
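Raw kurtosis is never negative, so the negative entries in the kurtosis row show that the table reports excess kurtosis (0 for a normal distribution). Below is a minimal sketch of the moment-based definitions. It assumes these were used rather than the bias-corrected estimators that some statistics packages report by default, and the function name is hypothetical. The Shapiro-Wilk and Anderson-Darling statistics require a statistics library, for example `scipy.stats.shapiro` and `scipy.stats.anderson`, and are not reproduced here.

```python
from typing import Sequence, Tuple


def skewness_excess_kurtosis(values: Sequence[float]) -> Tuple[float, float]:
    """Moment-based sample skewness g1 and excess kurtosis g2 (0 for a normal distribution)."""
    n = len(values)
    if n < 2:
        raise ValueError("need at least two values")
    mean = sum(values) / n
    m2 = sum((v - mean) ** 2 for v in values) / n  # second central moment
    if m2 == 0:
        raise ValueError("values must not all be equal")
    m3 = sum((v - mean) ** 3 for v in values) / n
    m4 = sum((v - mean) ** 4 for v in values) / n
    return m3 / m2 ** 1.5, m4 / m2 ** 2 - 3.0
```

Values of both statistics close to 0, as for body height, are consistent with approximate normality. A positive skewness, as for lumbar height and body length, indicates a longer right tail.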

| ID | BH/cm | LH/cm | BL/cm | CG/cm | | | | |
|---|---|---|---|---|---|---|---|---|
| 1 | 126 | 124 | 126 | 129 | 146 | 157 | 187 | 187 |
| 2 | 132 | 131 | 133 | 140 | 154 | 169 | 180 | 187 |
| 3 | 128 | 127 | 128 | 136 | 145 | 163 | 188 | 181 |
| 4 | 127 | 127 | 124 | 137 | 148 | 162 | 181 | 180 |
| 5 | 124 | 122 | 126 | 128 | 146 | 146 | 171 | 166 |
| 6 | 124 | 124 | 128 | 129 | 144 | 158 | 169 | 168 |
| 7 | 111 | 109 | 113 | 117 | 127 | 138 | 167 | 154 |
| 8 | 123 | 123 | 129 | 130 | 153 | 153 | 158 | 167 |
| 9 | 119 | 118 | 120 | 123 | 132 | 146 | 176 | 169 |
| 10 | 121 | 118 | 127 | 127 | 150 | 156 | 162 | 173 |
| 11 | 118 | 114 | 118 | 120 | 128 | 145 | 151 | 159 |
| 12 | 113 | 114 | 116 | 121 | 134 | 145 | 171 | 167 |
| 13 | 129 | 118 | 124 | 129 | 136 | 147 | 170 | 166 |
| 14 | 124 | 121 | 126 | 130 | 137 | 151 | 182 | 172 |
| 15 | 115 | 114 | 115 | 123 | 147 | 146 | 165 | 160 |
| 16 | 123 | 119 | 123 | 126 | 133 | 141 | 156 | 164 |
| 17 | 118 | 114 | 118 | 122 | 139 | 144 | 170 | 167 |
| 18 | 132 | 123 | 132 | 127 | 145 | 153 | 153 | 177 |
| 19 | 124 | 119 | 124 | 124 | 134 | 148 | 186 | 178 |
| 20 | 118 | 118 | 118 | 124 | 134 | 141 | 147 | 163 |
| 21 | 123 | 122 | 123 | 127 | 139 | 147 | 151 | 166 |
| 22 | 118 | 117 | 118 | 123 | 140 | 146 | 149 | 163 |
| 23 | 123 | 121 | 123 | 126 | 139 | 150 | 154 | 162 |

| Index | Body Height | Lumbar Height | Body Length | Chest Girth |
|---|---|---|---|---|
| MAE (cm) | 1.52 | 3.83 | 9.77 | 7.80 |
| MRE (%) | 1.28 | 3.02 | 6.57 | 4.65 |
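The table reports the mean absolute error (MAE, in cm) and the mean relative error (MRE, in %) of the automated measurements. The following is a minimal sketch assuming the standard definitions, with the manual measurement y taken as ground truth: MAE = (1/n) Σ |ŷ − y| and MRE = (100/n) Σ |ŷ − y| / y. The function name is hypothetical, and the exact formulas of Section 2.6.2 are not reproduced here.

```python
from typing import Sequence, Tuple


def mae_mre(measured: Sequence[float], manual: Sequence[float]) -> Tuple[float, float]:
    """Return (MAE, MRE in %) of automated measurements against manual reference values."""
    if len(measured) != len(manual) or len(manual) == 0:
        raise ValueError("need two non-empty sequences of equal length")
    abs_errors = [abs(m - t) for m, t in zip(measured, manual)]
    mae = sum(abs_errors) / len(abs_errors)
    # Relative error per animal, averaged and expressed as a percentage.
    mre = 100.0 * sum(e / t for e, t in zip(abs_errors, manual)) / len(abs_errors)
    return mae, mre
```

Because MRE divides each error by that animal's own reference value, a fixed absolute error weighs less on a large dimension such as chest girth than on a smaller one such as body height.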

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2024 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Peng, C.; Cao, S.; Li, S.; Bai, T.; Zhao, Z.; Sun, W. Automated Measurement of Cattle Dimensions Using Improved Keypoint Detection Combined with Unilateral Depth Imaging. Animals 2024, 14, 2453. https://doi.org/10.3390/ani14172453
