Improved YOLOv8-Based Method for the Carapace Keypoint Detection and Size Measurement of Chinese Mitten Crabs

Simple Summary

Abstract

1. Introduction

2. Materials and Methods

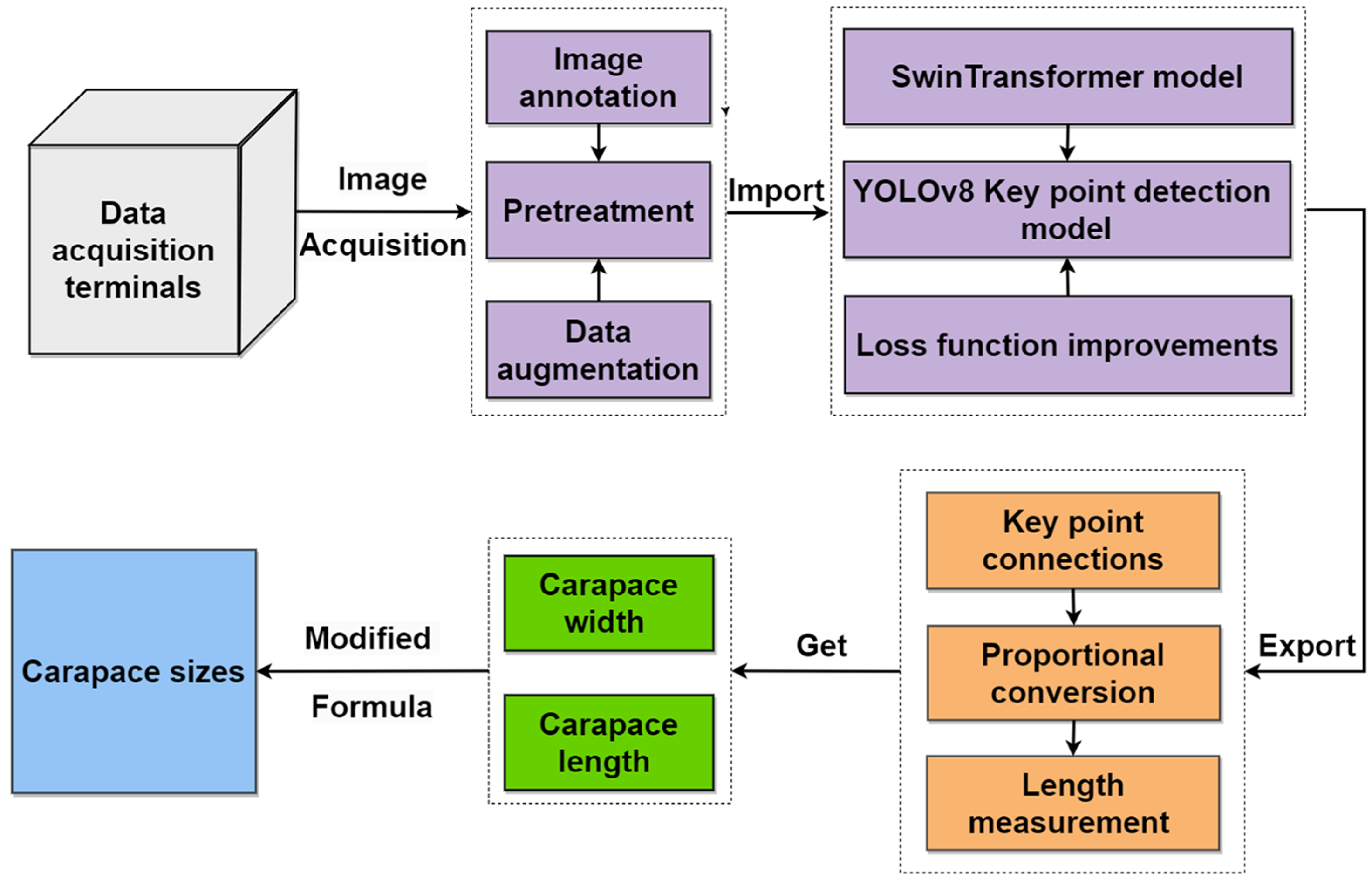

2.1. Overall Flow of the Proposed Method

2.2. Image Acquisition

2.3. Preprocessing

2.3.1. Image Enhancements

2.3.2. Dataset Labeling

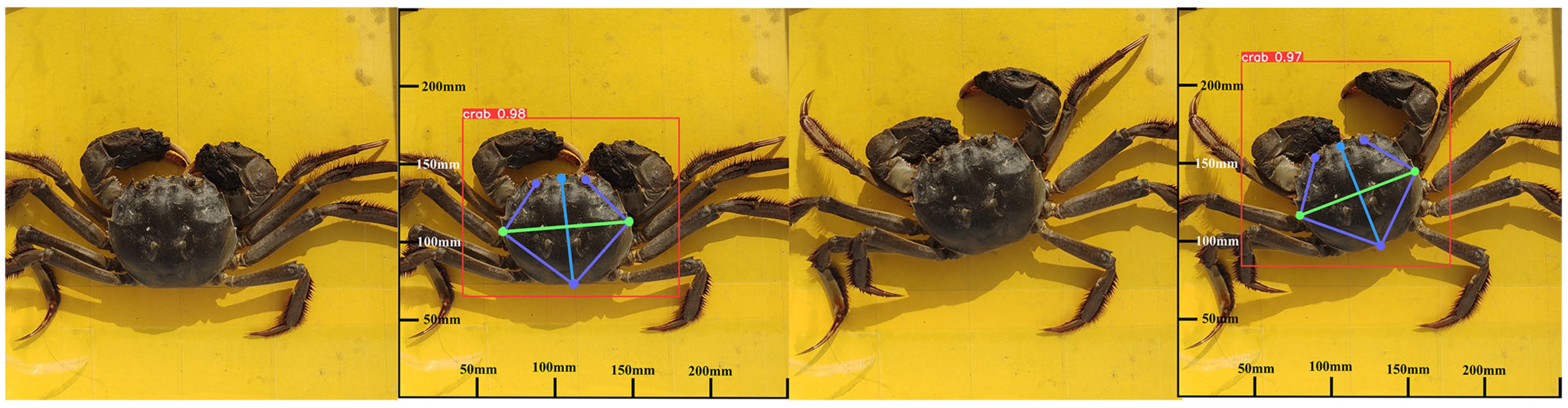

- A rectangular bounding box labeled as the carapace was used to frame the river crab, including both chelae and the dorsal armor.

- Six keypoints were used to label the left eye, the right eye, and the front, back, left, and right sides of the river crab’s dorsal armor. Their English names were used as the labeling tags, as shown in Table 1.

- After labeling, the automatically generated JSON files were converted into the TXT label format required by YOLOv8l.

- The dataset was divided into training and validation sets at a ratio of 9:1, yielding 4522 training images and 502 validation images.
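The labeling steps above can be sketched as follows. This is a minimal illustration, not the authors’ script: it assumes a labelme-style JSON schema (`imageWidth`, `imageHeight`, `shapes` with `label`, `points`, `shape_type`), and the keypoint order in the hypothetical `KEYPOINTS` list is an assumption that would have to match the dataset configuration. Each output line follows the YOLOv8 pose format: class index, normalized box center and size, then normalized `x y visibility` per keypoint (COCO convention: 0 = not labeled, 2 = labeled and visible). The `split_dataset` helper reproduces the 9:1 split with a fixed random seed.

```python
import json
import random
from pathlib import Path

# Hypothetical keypoint order; it must match the keypoint order used for training.
KEYPOINTS = ["left_eye", "right_eye", "left_side", "right_side", "front_side", "behind_side"]


def labelme_to_yolo_pose(ann: dict, class_id: int = 0) -> str:
    """Convert one labelme-style annotation dict into a YOLOv8 pose label line.

    Assumed schema: {"imageWidth": W, "imageHeight": H, "shapes": [...]}, where the
    carapace box is a "rectangle" shape labeled "carapace" and each keypoint is a
    "point" shape labeled with its tag from Table 1.
    """
    w, h = ann["imageWidth"], ann["imageHeight"]
    box, points = None, {}
    for shape in ann["shapes"]:
        if shape["shape_type"] == "rectangle" and shape["label"] == "carapace":
            (xa, ya), (xb, yb) = shape["points"]
            box = (min(xa, xb), min(ya, yb), max(xa, xb), max(ya, yb))
        elif shape["shape_type"] == "point":
            points[shape["label"]] = shape["points"][0]
    if box is None:
        raise ValueError("annotation has no 'carapace' rectangle")

    x1, y1, x2, y2 = box
    # Normalized box center (x, y) and size (width, height).
    values = [(x1 + x2) / 2 / w, (y1 + y2) / 2 / h, (x2 - x1) / w, (y2 - y1) / h]
    fields = [str(class_id)] + [f"{v:.6f}" for v in values]
    for name in KEYPOINTS:
        if name in points:
            px, py = points[name]
            fields += [f"{px / w:.6f}", f"{py / h:.6f}", "2"]  # 2 = labeled, visible
        else:
            fields += ["0.000000", "0.000000", "0"]  # 0 = not labeled
    return " ".join(fields)


def convert_file(json_path, txt_dir) -> Path:
    """Read one JSON annotation and write the matching .txt label file."""
    ann = json.loads(Path(json_path).read_text(encoding="utf-8"))
    txt_path = Path(txt_dir) / (Path(json_path).stem + ".txt")
    txt_path.write_text(labelme_to_yolo_pose(ann) + "\n", encoding="utf-8")
    return txt_path


def split_dataset(items, train_ratio=0.9, seed=0):
    """Shuffle reproducibly and split into training and validation lists."""
    items = list(items)
    random.Random(seed).shuffle(items)
    n_train = round(len(items) * train_ratio)
    return items[:n_train], items[n_train:]
```

With 5024 images in total, `split_dataset(range(5024))` returns 4522 training and 502 validation items, matching the counts reported above.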

2.4. YOLOv8 Improvements

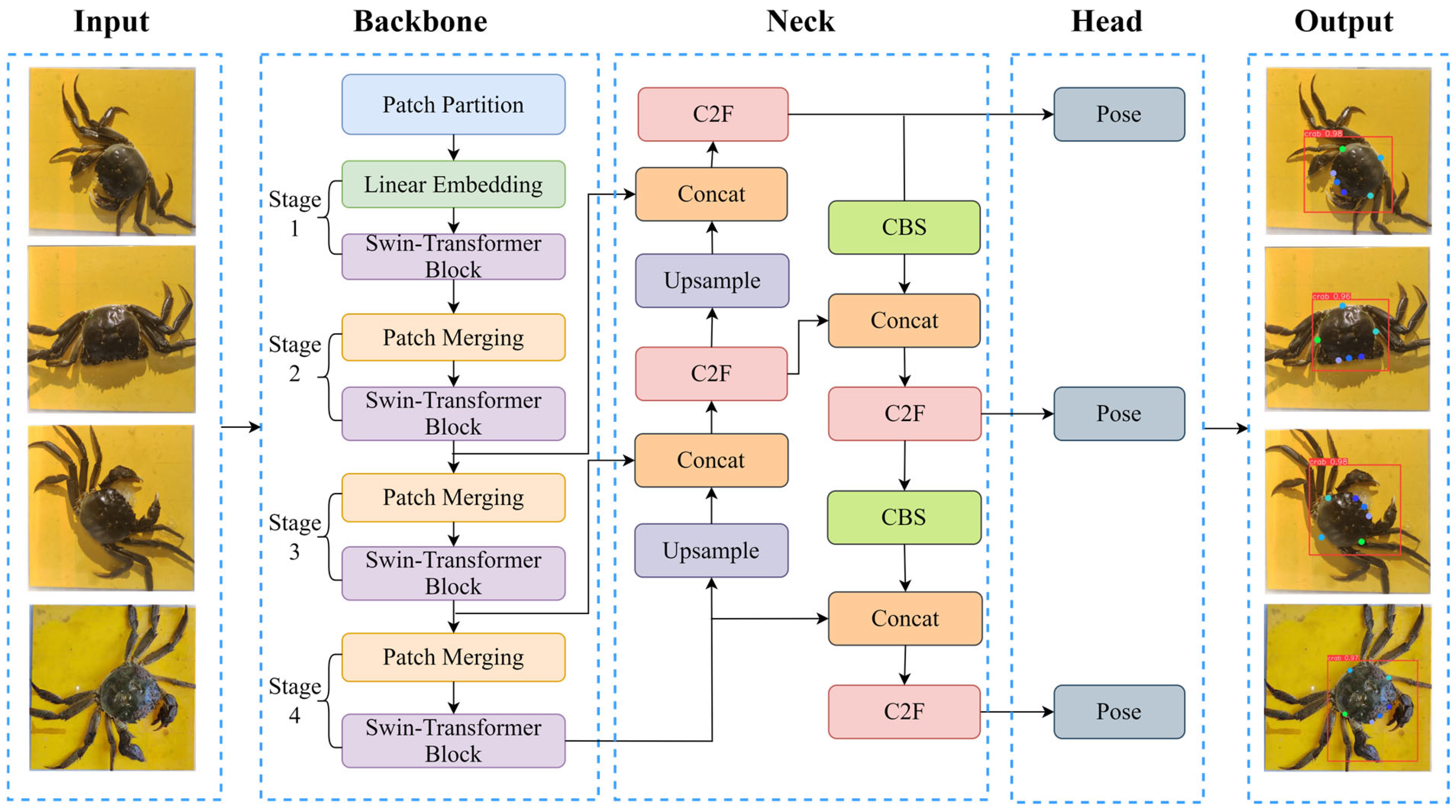

2.4.1. Model Structure

2.4.2. Backbone Network Improvement

2.4.3. Loss Function Improvement
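YOLOv8 uses CIoU as its default bounding-box regression loss, and the abbreviation list also defines EIoU. As an illustration only, the sketch below implements the standard CIoU formulation and one widely used EIoU formulation, which replaces the CIoU aspect-ratio term with separate width and height penalties normalized by the enclosing box. Whether this EIoU form is exactly the modified loss used in this study is an assumption. Boxes are `(x1, y1, x2, y2)` tuples.

```python
import math


def _box_terms(pred, gt):
    """Shared geometry for two (x1, y1, x2, y2) boxes."""
    px1, py1, px2, py2 = pred
    gx1, gy1, gx2, gy2 = gt
    pw, ph = px2 - px1, py2 - py1
    gw, gh = gx2 - gx1, gy2 - gy1
    iw = max(0.0, min(px2, gx2) - max(px1, gx1))
    ih = max(0.0, min(py2, gy2) - max(py1, gy1))
    inter = iw * ih
    iou = inter / (pw * ph + gw * gh - inter)
    # Width and height of the smallest box enclosing both boxes.
    cw = max(px2, gx2) - min(px1, gx1)
    ch = max(py2, gy2) - min(py1, gy1)
    # Squared distance between the two box centers.
    rho2 = ((px1 + px2 - gx1 - gx2) ** 2 + (py1 + py2 - gy1 - gy2) ** 2) / 4
    return iou, pw, ph, gw, gh, cw, ch, rho2


def ciou_loss(pred, gt, eps=1e-9):
    """CIoU loss: 1 - IoU + rho^2 / c^2 + alpha * v."""
    iou, pw, ph, gw, gh, cw, ch, rho2 = _box_terms(pred, gt)
    c2 = cw ** 2 + ch ** 2 + eps
    v = (4 / math.pi ** 2) * (math.atan(gw / gh) - math.atan(pw / ph)) ** 2
    alpha = v / (1 - iou + v + eps)
    return 1 - iou + rho2 / c2 + alpha * v


def eiou_loss(pred, gt, eps=1e-9):
    """EIoU loss (assumed form): center term plus separate width and height terms."""
    iou, pw, ph, gw, gh, cw, ch, rho2 = _box_terms(pred, gt)
    c2 = cw ** 2 + ch ** 2 + eps
    return (1 - iou + rho2 / c2
            + (pw - gw) ** 2 / (cw ** 2 + eps)
            + (ph - gh) ** 2 / (ch ** 2 + eps))
```

For two boxes with the same aspect ratio the CIoU penalty term `alpha * v` vanishes, while the EIoU width and height terms still penalize a size mismatch directly.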

2.5. Prediction of Crab Carapace Size Based on Keypoint Detection

2.5.1. Keypoint Detection

2.5.2. Prediction of Crab Carapace Size

3. Results and Discussion

3.1. Experimental Environment

3.2. Evaluation Indicators

3.2.1. Precision and Recall

3.2.2. mAP
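Precision, recall, and AP are standard detection metrics; a minimal sketch of how they are typically computed is given below. Detections are assumed to have already been matched to ground-truth boxes (for example at an IoU threshold of 0.5, which is an assumption here), and AP uses all-point interpolation of the precision-recall curve. With a single "carapace" class, mAP reduces to the AP of that class.

```python
import numpy as np


def precision_recall(tp: int, fp: int, fn: int):
    """Precision = TP / (TP + FP), recall = TP / (TP + FN)."""
    precision = tp / (tp + fp) if tp + fp else 0.0
    recall = tp / (tp + fn) if tp + fn else 0.0
    return precision, recall


def average_precision(scores, is_tp, n_gt: int) -> float:
    """All-point interpolated AP for one class.

    scores: confidence of each detection; is_tp: whether each detection matched
    a ground-truth object; n_gt: number of ground-truth objects (must be > 0).
    """
    order = np.argsort(-np.asarray(scores, dtype=float))
    tp = np.asarray(is_tp, dtype=float)[order]
    cum_tp = np.cumsum(tp)
    cum_fp = np.cumsum(1.0 - tp)
    recall = cum_tp / n_gt
    precision = cum_tp / (cum_tp + cum_fp)
    # Add sentinel points, then make precision non-increasing in recall.
    r = np.concatenate(([0.0], recall, [1.0]))
    p = np.concatenate(([0.0], precision, [0.0]))
    p = np.maximum.accumulate(p[::-1])[::-1]
    # Sum rectangle areas wherever recall increases.
    idx = np.where(r[1:] != r[:-1])[0]
    return float(np.sum((r[idx + 1] - r[idx]) * p[idx + 1]))
```

For example, three detections with scores 0.9, 0.8, and 0.7, of which the first and third are correct, against two ground-truth objects give AP = 0.5 × 1 + 0.5 × 2/3 = 5/6.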

3.2.3. OKS
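OKS is assumed here to follow the standard COCO keypoint-evaluation definition, OKS = Σᵢ exp(−dᵢ² / (2s²kᵢ²))·δ(vᵢ > 0) / Σᵢ δ(vᵢ > 0), where dᵢ is the distance between predicted and labeled keypoint i, s² is the object scale (commonly the object area), kᵢ = 2σᵢ is a per-keypoint constant, and vᵢ is the visibility flag. The per-keypoint σ values for crab keypoints are not given in this section, so the uniform values in the test are hypothetical placeholders. The per-keypoint term is what a per-keypoint OKS breakdown averages over samples.

```python
import numpy as np


def keypoint_similarity(pred, gt, area, sigmas):
    """Per-keypoint similarity exp(-d_i^2 / (2 * s^2 * k_i^2)) with s^2 = area, k_i = 2 * sigma_i."""
    pred = np.asarray(pred, dtype=float)
    gt = np.asarray(gt, dtype=float)
    d2 = np.sum((pred - gt) ** 2, axis=1)      # squared pixel distances
    k2 = (2 * np.asarray(sigmas, dtype=float)) ** 2
    return np.exp(-d2 / (2 * area * k2 + np.finfo(float).eps))


def oks(pred, gt, visible, area, sigmas) -> float:
    """Average keypoint similarity over labeled (visible) keypoints only."""
    ks = keypoint_similarity(pred, gt, area, sigmas)
    vis = np.asarray(visible, dtype=bool)
    if not vis.any():
        return 0.0
    return float(ks[vis].mean())
```

A perfect prediction gives OKS = 1, and the score decays smoothly toward 0 as keypoint errors grow relative to the object scale.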

3.3. Results Showcase

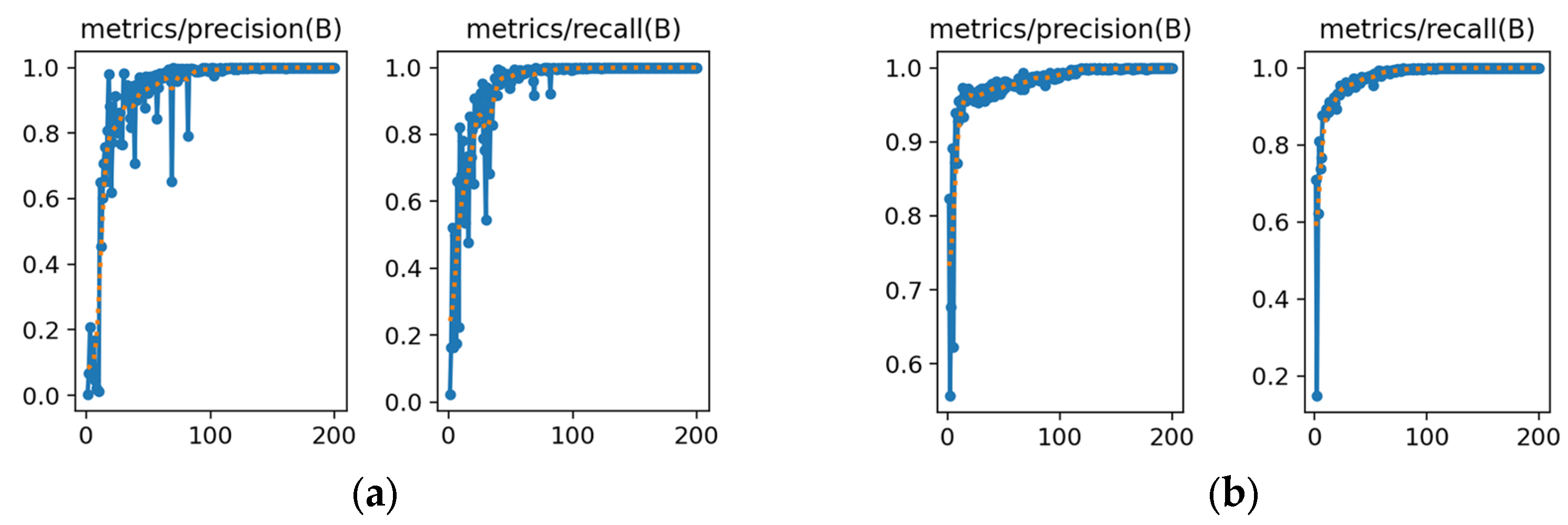

3.3.1. Precision and Recall Evaluation

3.3.2. mAP Evaluation

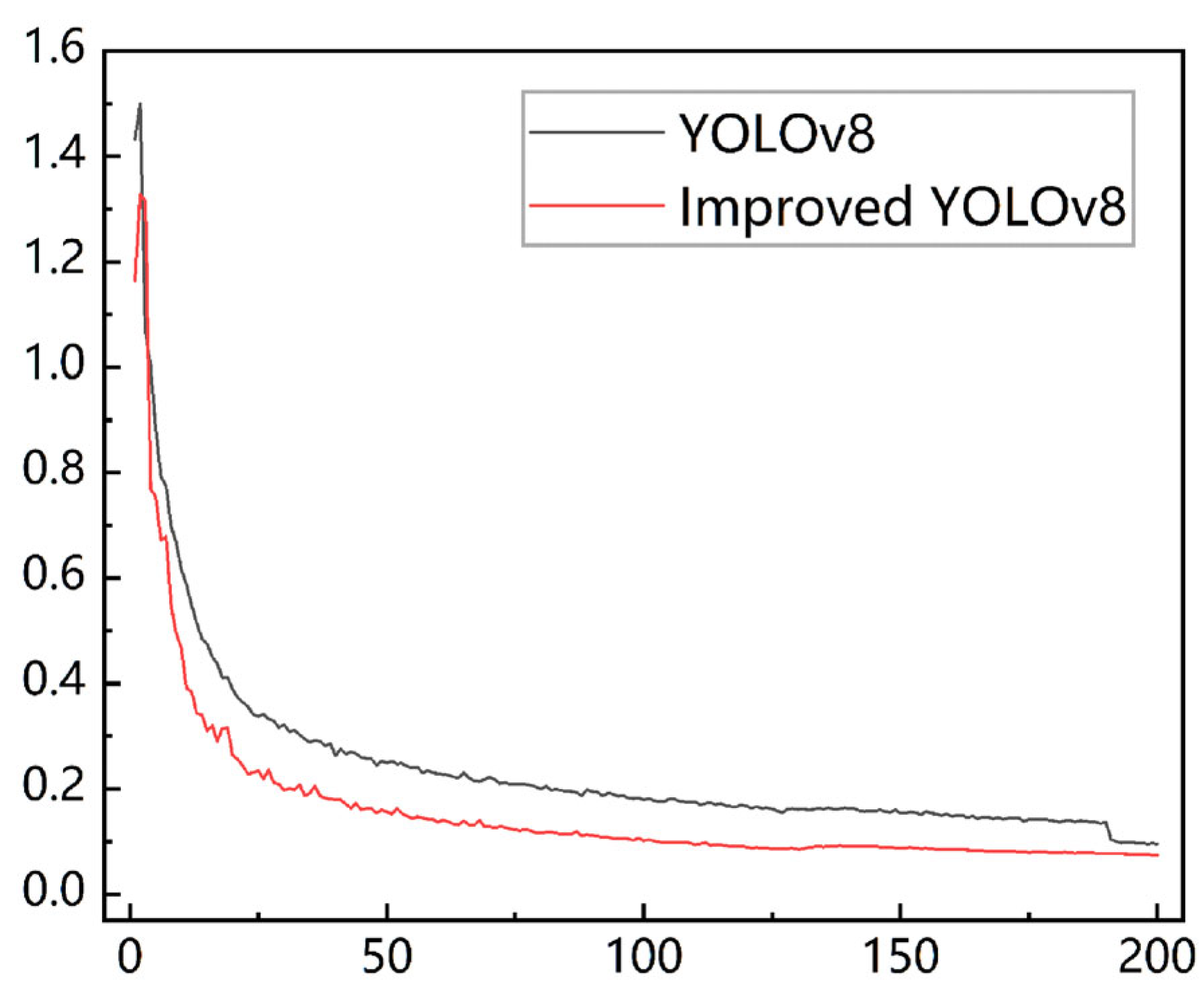

3.3.3. Training Loss

3.3.4. Keypoint Detection Results

3.4. Size Prediction Experiment

4. Conclusions

- (1) In consideration of the unique growth characteristics of river crabs, a data acquisition terminal was built to collect river crab images. A large number of river crab carapace images were collected and labeled, and data augmentation was applied to expand the dataset.

- (2) YOLOv8l was improved by introducing a Swin Transformer module into the backbone network and by modifying its loss function. Compared with the original model, the improved YOLOv8l model achieved higher precision, recall, and AP and converged faster. Specifically, the AP for object detection was 95.88%, and the overall average OKS for keypoint detection was 91.32%.

- (3) The improved YOLOv8l model was used for keypoint detection, and the actual carapace size of each river crab was determined from the proportion between the known length of the background plate and the distances between the detected keypoints. The final test showed that the average absolute error of the size measurements was 2.34%, which met the test requirements.

- (4) The proposed method for measuring river crab shell size enables fast, accurate, and nondestructive monitoring of river crab growth. It can collect key growth information, provide accurate data support for intelligent farming, and assist farmers in making farming decisions. However, the proposed model is limited as a basis for standalone application software: it must be used together with a data acquisition terminal configured for the specific image-capturing conditions described in this study.
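The size-measurement step in conclusion (3) can be sketched as a simple scale conversion. This is a minimal illustration under stated assumptions, not the authors’ code: it assumes the background plate and the carapace lie approximately in the same image plane, that carapace length is the distance from `front_side` to `behind_side` and carapace width the distance from `left_side` to `right_side` (tags from Table 1), and that the reported error is a mean relative error in percent. The plate dimensions and pixel coordinates in the example are hypothetical.

```python
import math


def mm_per_pixel(plate_length_mm: float, plate_length_px: float) -> float:
    """Scale factor from a background-plate feature of known physical length."""
    return plate_length_mm / plate_length_px


def carapace_size_mm(kpts: dict, scale: float) -> tuple:
    """Return (length, width) in mm from pixel keypoints keyed by Table 1 tags.

    Assumption: length = front_side to behind_side, width = left_side to right_side.
    """
    length_px = math.dist(kpts["front_side"], kpts["behind_side"])
    width_px = math.dist(kpts["left_side"], kpts["right_side"])
    return length_px * scale, width_px * scale


def mean_relative_error_pct(predicted, measured) -> float:
    """Mean of |predicted - measured| / measured, expressed in percent."""
    errors = [abs(p - m) / m for p, m in zip(predicted, measured)]
    return 100 * sum(errors) / len(errors)


# Hypothetical example: a 200 mm plate edge spans 1000 px in the image.
scale = mm_per_pixel(200.0, 1000.0)
kpts = {"front_side": (250, 150), "behind_side": (250, 400),
        "left_side": (100, 300), "right_side": (400, 300)}
length, width = carapace_size_mm(kpts, scale)
print(round(length, 3), round(width, 3))  # 50.0 60.0
```

Because only a single scale factor is used, any perspective difference between the plate plane and the carapace plane would appear directly as measurement error, which is one reason the method depends on fixed image-capturing conditions.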

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Acknowledgments

Conflicts of Interest

Abbreviations

| Abbreviation | Definition |
|---|---|
| mAP | Mean average precision |
| OKS | Object keypoint similarity |
| EIoU | Expected intersection over union |
| CIoU | Complete intersection over union |

References

- FFA. 2024 China Fisheries Statistics Yearbook; China Agricultural Publishing House: Beijing, China, 2024.

- Sun, Y.; Chen, Z.; Zhao, D.; Zhan, T.; Zhou, W.; Ruan, C. Design and Experiment of Precise Feeding System for Pond Crab Culture. Trans. Chin. Soc. Agric. Mach. 2022, 53, 291–301.

- Wang, Y.; Zhang, Y.; Ma, F.; Tian, X.; Ge, S.; Man, C.; Xiao, M. Research on Precise Feeding Strategies for Large-Scale Marine Aquafarms. J. Mar. Sci. Eng. 2024, 12, 1671.

- Cai, K.; Miao, X.; Wang, W.; Pang, H.; Liu, Y.; Song, J. A modified YOLOv3 model for fish detection based on MobileNetv1 as backbone. Aquac. Eng. 2020, 91, 102117.

- Chen, X.; Zhang, Y.; Li, D.; Duan, Q. Chinese mitten crab detection and gender classification method based on GMNet-YOLOv4. Comput. Electron. Agric. 2023, 214, 108318.

- Kakehi, S.; Sekiuchi, T.; Ito, H.; Ueno, S.; Takeuchi, Y.; Suzuki, K.; Togawa, M. Identification and counting of Pacific oyster Crassostrea gigas larvae by object detection using deep learning. Aquac. Eng. 2021, 95, 102197.

- Xu, X.; Li, W.; Duan, Q. Transfer learning and SE-ResNet152 networks-based for small-scale unbalanced fish species identification. Comput. Electron. Agric. 2021, 180, 105878.

- Zhang, L.; Li, B.; Sun, X.; Hong, Q.; Duan, Q. Intelligent fish feeding based on machine vision: A review. Biosyst. Eng. 2023, 231, 133–164.

- Liu, C.; Wang, Z.; Li, Y.; Zhang, Z.; Li, J.; Xu, C.; Du, R.; Li, D.; Duan, Q. Research progress of computer vision technology in abnormal fish detection. Aquac. Eng. 2023, 103, 102350.

- Gu, H.; Gan, D.; Chen, M.; Feng, G. One-Year-Old Precocious Chinese Mitten Crab Identification Algorithm Based on Task Alignment. Animals 2024, 14, 2128.

- Sun, Y.; Yuan, B.; Zhan, T.; Sun, J.; Fang, Z.; Zhao, D. Detecting river crab and bait using improved YOLOv5s. Trans. Chin. Soc. Agric. Eng. 2023, 39, 178–187.

- Zhao, D.; Liu, X.; Sun, Y.; Wu, R.; Hong, J.; Ruan, C. Detection of Underwater Crabs Based on Machine Vision. Trans. Chin. Soc. Agric. Mach. 2019, 50, 151–158.

- Zhao, D.; Cao, S.; Sun, Y.; Qi, H.; Ruan, C. Small-sized Efficient Detector for Underwater Freely Live Crabs Based on Compound Scaling Neural Network. Trans. Chin. Soc. Agric. Mach. 2020, 51, 163–174.

- Zhang, Z.; Lu, X.; Hu, Q. Lightweight Model for River Crab Detection Based on Image Enhancement and Improved YOLO v5s. Trans. Chin. Soc. Agric. Mach. 2024, 55, 124–131+374.

- Fang, G.; Zhao, Y. Detection of Critical Parts of River Crab Based on Lightweight YOLOv7-SPSD. Sensors 2024, 24, 7593.

- Ji, W.; Peng, J.; Xu, B.; Zhang, T. Real-time detection of underwater river crab based on multi-scale pyramid fusion image enhancement and MobileCenterNet model. Comput. Electron. Agric. 2023, 204, 107522.

- Li, R.; Wen, Y.; Zhang, S.; Xu, X.; Ma, B.; Song, H. Automated measurement of beef cattle body size via key point detection and monocular depth estimation. Expert Syst. Appl. 2024, 244, 123042.

- Zhang, A.; Wu, B.; Jiang, C.; Xuan, D.C.Z.; Ma, E.Y.H.; Zhang, F.Y.A. Development and validation of a visual image analysis for monitoring the body size of sheep. J. Appl. Anim. Res. 2018, 46, 1004–1015.

- Shi, C.; Wang, Q.; He, X.; Zhang, X.; Li, D. An automatic method of fish length estimation using underwater stereo system based on LabVIEW. Comput. Electron. Agric. 2020, 173, 105419.

- Lai, P.-C.; Lin, H.-Y.; Lin, J.-Y.; Hsu, H.-C.; Chu, Y.-N.; Liou, C.-H.; Kuo, Y.-F. Automatic measuring shrimp body length using CNN and an underwater imaging system. Biosyst. Eng. 2022, 221, 224–235.

- Liu, Z.; Lin, Y.; Cao, Y.; Hu, H.; Wei, Y.; Zhang, Z.; Lin, S.; Guo, B. Swin Transformer: Hierarchical Vision Transformer using Shifted Windows. In Proceedings of the 2021 IEEE/CVF International Conference on Computer Vision (ICCV), Montreal, QC, Canada, 10–17 October 2021; pp. 9992–10002.

- S, P.; Denny J, C.M. An efficient approach to detect and segment underwater images using Swin Transformer. Results Eng. 2024, 23, 102460.

- Baek, J.-W.; Chung, K. Swin Transformer-Based Object Detection Model Using Explainable Meta-Learning Mining. Appl. Sci. 2023, 13, 3213.

- Zhang, Q.; Li, Y.; Zhang, Z.; Yin, S.; Ma, L. Marine target detection for PPI images based on YOLO-SWFormer. Alex. Eng. J. 2023, 82, 396–403.

- Li, C.; Shen, C.; Feng, G.; Huang, X.; Li, X. Preference for Shelters at Different Developmental Stages of Chinese Mitten Crab (Eriocheir sinensis). Animals 2022, 12, 918.

- Jocher, G.; Qiu, J.; Chaurasia, A. Ultralytics YOLO, Version 8.0.0; [Computer software]. 2023.

Table 1. Keypoint labeling tags and locations.

| Keypoint | Labeling Tag | Specific Labeling Location |
|---|---|---|
| Left eye | left_eye | Crab’s left eyeball |
| Right eye | right_eye | Crab’s right eyeball |
| Left side | left_side | Last (most posterior) spine on the left side of the dorsal armor |
| Right side | right_side | Last (most posterior) spine on the right side of the dorsal armor |
| Front side | front_side | Center point between the two spines located between the eyes |
| Behind side | behind_side | Corresponding point on the posterior edge of the dorsal armor, on the axis passing through the front-side point |

| OKS statistic | Anterior Side | Posterior Side | Left Eye | Right Eye | Left Side | Right Side |
|---|---|---|---|---|---|---|
| Mean | 0.932 | 0.937 | 0.896 | 0.912 | 0.883 | 0.919 |
| Standard deviation | 0.030 | 0.014 | 0.046 | 0.039 | 0.049 | 0.032 |
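As a quick arithmetic check, the overall average OKS of 91.32% reported in the Conclusions agrees with the unweighted mean of the six per-keypoint means in the table above (equal weighting of keypoints is assumed):

```python
# Per-keypoint mean OKS values copied from the table above.
mean_oks = {
    "anterior_side": 0.932, "posterior_side": 0.937, "left_eye": 0.896,
    "right_eye": 0.912, "left_side": 0.883, "right_side": 0.919,
}

# Unweighted mean over keypoints: 5.479 / 6 = 0.91317 (rounded).
overall = sum(mean_oks.values()) / len(mean_oks)
print(f"{overall:.2%}")  # 91.32%
```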

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Share and Cite

Chen, K.; Chen, Z.; Wang, C.; Zhou, Z.; Xiao, M.; Zhu, H.; Li, D.; Liu, W. Improved YOLOv8-Based Method for the Carapace Keypoint Detection and Size Measurement of Chinese Mitten Crabs. Animals 2025, 15, 941. https://doi.org/10.3390/ani15070941

Chen K, Chen Z, Wang C, Zhou Z, Xiao M, Zhu H, Li D, Liu W. Improved YOLOv8-Based Method for the Carapace Keypoint Detection and Size Measurement of Chinese Mitten Crabs. Animals. 2025; 15(7):941. https://doi.org/10.3390/ani15070941

Chicago/Turabian Style: Chen, Ke, Zhuquan Chen, Changbo Wang, Zhifan Zhou, Maohua Xiao, Hong Zhu, Dongfang Li, and Weimin Liu. 2025. "Improved YOLOv8-Based Method for the Carapace Keypoint Detection and Size Measurement of Chinese Mitten Crabs" Animals 15, no. 7: 941. https://doi.org/10.3390/ani15070941

APA Style: Chen, K., Chen, Z., Wang, C., Zhou, Z., Xiao, M., Zhu, H., Li, D., & Liu, W. (2025). Improved YOLOv8-Based Method for the Carapace Keypoint Detection and Size Measurement of Chinese Mitten Crabs. Animals, 15(7), 941. https://doi.org/10.3390/ani15070941