Artificial Intelligence Decision-Making Transparency and Employees’ Trust: The Parallel Multiple Mediating Effect of Effectiveness and Discomfort

Abstract

1. Introduction

2. Background Literature and Research Hypotheses

2.1. AI in Enterprises Requires Trust

2.2. SOR Model

2.3. Algorithmic Reductionism

2.4. Social Identity Theory

2.5. AI Decision-Making Transparency and Employees’ Perceived Transparency

2.6. Mediating Role of Employees’ Perceived Transparency between AI Decision-Making Transparency and Employees’ Trust in AI

2.7. Chain Mediating Role of Employees’ Perceived Transparency and Employees’ Perceived Effectiveness of AI

2.8. Chain Mediating Role of Employees’ Perceived Transparency and Employees’ Discomfort with AI

3. Methods

3.1. Sample and Data Collection

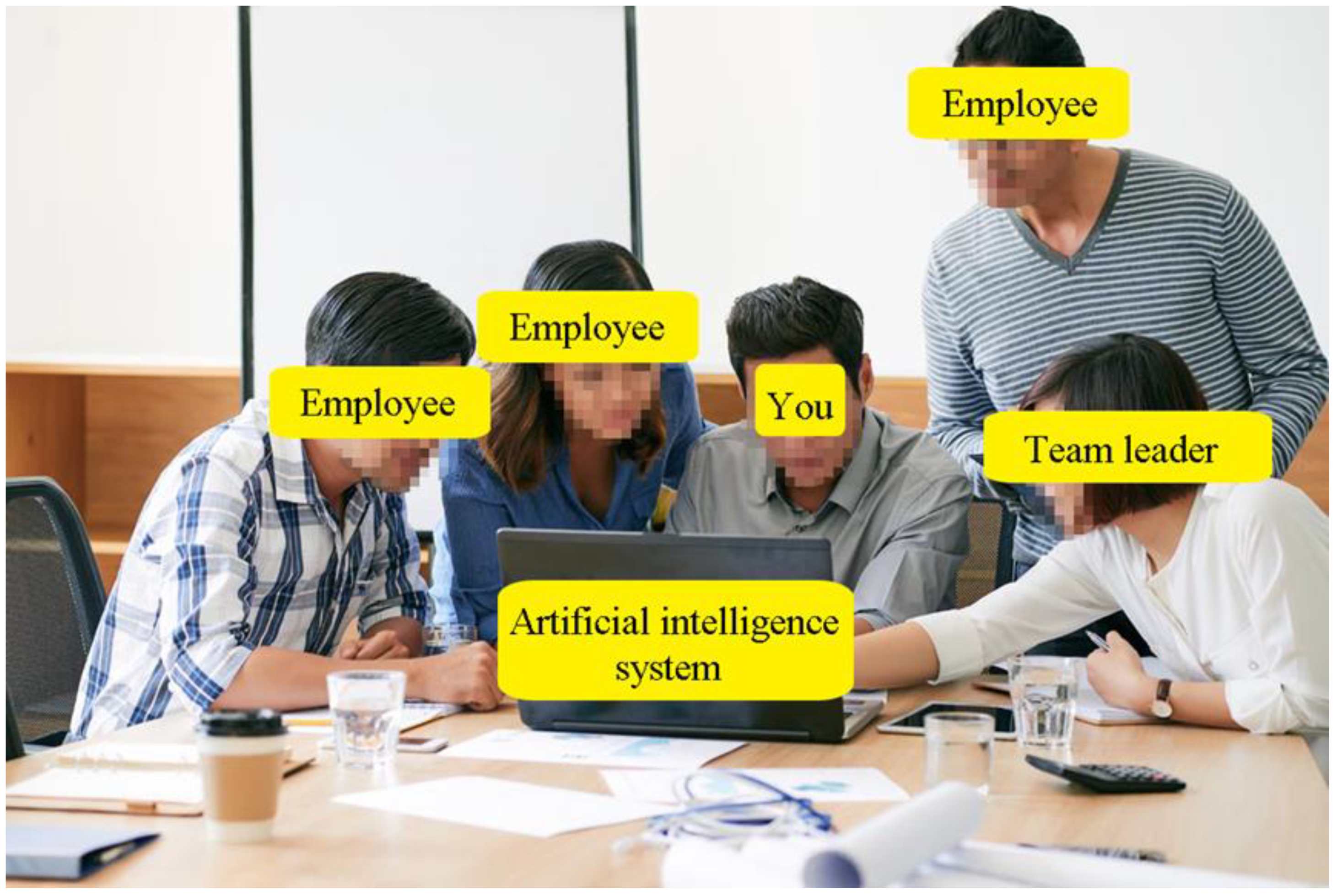

3.2. Procedure and Manipulation

3.3. Measures

3.4. Data Analysis

4. Results

4.1. Validity and Reliability

4.2. The Results of Variance Analysis of AI Decision-Making Non-Transparency and AI Decision-Making Transparency

4.3. Hypotheses Test

5. Discussion

5.1. Theoretical Implications

5.2. Practical Implications

5.3. Limitations and Suggestions for Future Research

6. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest

Appendix A

Appendix B

References

- Glikson, E.; Woolley, A.W. Human trust in artificial intelligence: Review of empirical research. Acad. Manag. Ann. 2020, 14, 627–660.

- Höddinghaus, M.; Sondern, D.; Hertel, G. The automation of leadership functions: Would people trust decision algorithms? Comput. Hum. Behav. 2021, 116, 106635.

- Hoff, K.A.; Bashir, M. Trust in automation: Integrating empirical evidence on factors that influence trust. Hum. Factors 2015, 57, 407–434.

- Felzmann, H.; Villaronga, E.F.; Lutz, C.; Tamò-Larrieux, A. Transparency you can trust: Transparency requirements for artificial intelligence between legal norms and contextual concerns. Big Data Soc. 2019, 6, 2053951719860542.

- Sinha, R.; Swearingen, K. The role of transparency in recommender systems. In CHI’02 Extended Abstracts on Human Factors in Computing Systems; Association for Computing Machinery: New York, NY, USA, 2002; pp. 830–831.

- Kulesza, T.; Stumpf, S.; Burnett, M.; Yang, S.; Kwan, I.; Wong, W.K. Too much, too little, or just right? Ways explanations impact end users’ mental models. In 2013 IEEE Symposium on Visual Languages and Human Centric Computing; IEEE: Piscataway, NJ, USA, 2013; pp. 3–10.

- Herlocker, J.L.; Konstan, J.A.; Riedl, J. Explaining collaborative filtering recommendations. In Proceedings of the 2000 ACM conference on Computer Supported Cooperative Work, Philadelphia, PA, USA, 2–6 December 2000; pp. 241–250.

- Pu, P.; Chen, L. Trust-inspiring explanation interfaces for recommender systems. Knowl. Based Syst. 2007, 20, 542–556.

- Cramer, H.; Evers, V.; Ramlal, S.; Van Someren, M.; Rutledge, L.; Stash, N.; Aroyo, L.; Wielinga, B. The effects of transparency on trust in and acceptance of a content-based art recommender. User Model. User-Adapt. Interact. 2008, 18, 455.

- Kim, T.; Hinds, P. Who should I blame? Effects of autonomy and transparency on attributions in human-robot interaction. In ROMAN 2006-The 15th IEEE International Symposium on Robot and Human Interactive Communication; IEEE: Piscataway, NJ, USA, 2006; pp. 80–85.

- Eslami, M.; Krishna Kumaran, S.R.; Sandvig, C.; Karahalios, K. Communicating algorithmic process in online behavioural advertising. In Proceedings of the 2018 CHI Conference on Human Factors in Computing Systems, Montréal, QC, Canada, 21–27 April 2018; pp. 1–13.

- Kizilcec, R.F. How much information? Effects of transparency on trust in an algorithmic interface. In Proceedings of the 2016 CHI Conference on Human Factors in Computing Systems, San Jose, CA, USA, 5–12 May 2016; pp. 2390–2395.

- Zhao, R.; Benbasat, I.; Cavusoglu, H. Do users always want to know more? Investigating the relationship between system transparency and users’ trust in advice-giving systems. In Proceedings of the 27th European Conference on Information Systems (ECIS), Stockholm & Uppsala, Sweden, 8–14 June 2019.

- Wilson, H.J.; Alter, A.; Shukla, P. Companies are reimagining business processes with algorithms. Harv. Bus. Rev. 2016, 8.

- Castelo, N.; Bos, M.W.; Lehmann, D.R. Task-dependent algorithm aversion. J. Mark. Res. 2019, 56, 809–825.

- Lin, J.S.C.; Chang, H.C. The role of technology readiness in self-service technology acceptance. Manag. Serv. Qual. An. Int. J. 2011, 21, 424–444.

- Kolbjørnsrud, V.; Amico, R.; Thomas, R.J. Partnering with AI: How organizations can win over skeptical managers. Strategy Leadersh. 2017, 45, 37–43.

- Rrmoku, K.; Selimi, B.; Ahmedi, L. Application of Trust in Recommender Systems—Utilizing Naive Bayes Classifier. Computation 2022, 10, 6.

- Lin, S.; Döngül, E.S.; Uygun, S.V.; Öztürk, M.B.; Huy, D.T.N.; Tuan, P.V. Exploring the Relationship between Abusive Management, Self-Efficacy and Organizational Performance in the Context of Human–Machine Interaction Technology and Artificial Intelligence with the Effect of Ergonomics. Sustainability 2022, 14, 1949.

- Rossi, F. Building trust in artificial intelligence. J. Int. Aff. 2018, 72, 127–134.

- Ötting, S.K.; Maier, G.W. The importance of procedural justice in human–machine interactions: Intelligent systems as new decision agents in organizations. Comput. Hum. Behav. 2018, 89, 27–39.

- Dirks, K.T.; Ferrin, D.L. Trust in leadership: Meta-analytic findings and implications for research and practice. J. Appl. Psychol. 2002, 87, 611–628.

- Chugunova, M.; Sele, D. We and It: An Interdisciplinary Review of the Experimental Evidence on Human-Machine Interaction; Research Paper No. 20-15; Max Planck Institute for Innovation & Competition: Munich, Germany, 2020.

- Smith, P.J.; McCoy, C.E.; Layton, C. Brittleness in the design of cooperative problem-solving systems: The effects on user performance. IEEE Trans. Syst. Man Cybern. Part A Syst. Hum. 1997, 27, 360–371.

- Zand, D.E. Trust and managerial problem solving. Adm. Sci. Q. 1972, 17, 229–239.

- Räisänen, J.; Ojala, A.; Tuovinen, T. Building trust in the sharing economy: Current approaches and future considerations. J. Clean. Prod. 2021, 279, 123724.

- Mehrabian, A.; Russell, J.A. An Approach to Environmental Psychology; The MIT Press: Cambridge, MA, USA, 1974.

- Lee, S.; Ha, S.; Widdows, R. Consumer responses to high-technology products: Product attributes, cognition, and emotions. J. Bus. Res. 2011, 64, 1195–1200.

- Xu, J.; Benbasat, I.; Cenfetelli, R.T. The nature and consequences of trade-off transparency in the context of recommendation agents. MIS Q. 2014, 38, 379–406.

- Saßmannshausen, T.; Burggräf, P.; Wagner, J.; Hassenzahl, M.; Heupel, T.; Steinberg, F. Trust in artificial intelligence within production management–an exploration of antecedents. Ergonomics 2021, 64, 1333–1350.

- Newman, D.T.; Fast, N.J.; Harmon, D.J. When eliminating bias isn’t fair: Algorithmic reductionism and procedural justice in human resource decisions. Organ. Behav. Hum. Dec. 2020, 160, 149–167.

- Noble, S.M.; Foster, L.L.; Craig, S.B. The procedural and interpersonal justice of automated application and resume screening. Int. J. Select. Assess. 2021, 29, 139–153.

- Balasubramanian, N.; Ye, Y.; Xu, M. Substituting human decision-making with machine learning: Implications for organizational learning. Acad. Manag. Ann. 2020, in press.

- Tajfel, H. Social psychology of intergroup relations. Annu. Rev. Psychol. 1982, 33, 1–39.

- Ferrari, F.; Paladino, M.P.; Jetten, J. Blurring human–machine distinctions: Anthropomorphic appearance in social robots as a threat to human distinctiveness. Int. J. Soc. Robot. 2016, 8, 287–302.

- De Fine Licht, J.; Naurin, D.; Esaiasson, P.; Gilljam, M. When does transparency generate legitimacy? Experimenting on a context-bound relationship. Gov. Int. J. Policy Adm. I. 2014, 27, 111–134.

- De Fine Licht, K.; de Fine Licht, J. Artificial intelligence, transparency, and public decision-making. AI Soc. 2020, 35, 917–926.

- Elia, J. Transparency rights, technology, and trust. Ethics Inf. Technol. 2009, 11, 145–153.

- Felzmann, H.; Fosch-Villaronga, E.; Lutz, C.; Tamò-Larrieux, A. Towards transparency by design for artificial intelligence. Sci. Eng. Ethics 2020, 26, 3333–3361.

- Wieringa, M. What to account for when accounting for algorithms: A systematic literature review on algorithmic accountability. In Proceedings of the 2020 Conference on Fairness, Accountability, and Transparency, Barcelona, Spain, 27–30 January 2020; ACM: New York, NY, USA, 2020; pp. 1–18.

- De Fine Licht, J.; Naurin, D.; Esaiasson, P.; Gilljam, M. Does transparency generate legitimacy? An experimental study of procedure acceptance of open- and closed-door decision-making. QoG Work. Pap. Ser. 2011, 8, 1–32.

- Rawlins, B. Give the emperor a mirror: Toward developing a stakeholder measurement of organizational transparency. J. Public Relat. Res. 2008, 21, 71–99.

- Grotenhermen, J.G.; Bruckes, M.; Schewe, G. Are We Ready for Artificially Intelligent Leaders? A Comparative Analysis of Employee Perceptions Regarding Artificially Intelligent and Human Supervisors. In Proceedings of the AMCIS 2020 Conference, Virtual Conference, 15–17 August 2020.

- Chander, A.; Srinivasan, R.; Chelian, S.; Wang, J.; Uchino, K. Working with beliefs: AI transparency in the enterprise. In Proceedings of the 2018 IUI Workshops, Tokyo, Japan, 11 March 2018.

- Crepaz, M.; Arikan, G. Information disclosure and political trust during the COVID-19 crisis: Experimental evidence from Ireland. J. Elect. Public Opin. 2021, 31, 96–108.

- Dietvorst, B.J.; Simmons, J.P.; Massey, C. Algorithm aversion: People erroneously avoid algorithms after seeing them err. J. Exp. Psychol. Gen. 2015, 144, 114.

- Ryan, M. In AI We Trust: Ethics, Artificial Intelligence, and Reliability. Sci. Eng. Ethics 2020, 26, 2749–2767.

- Zhang, Z.; Genc, Y.; Wang, D.; Ahsen, M.E.; Fan, X. Effect of AI explanations on human perceptions of patient-facing AI-powered healthcare systems. J. Med. Syst. 2021, 45, 64.

- Patrzyk, P.M.; Link, D.; Marewski, J.N. Human-like machines: Transparency and comprehensibility. Behav. Brain Sci. 2017, 40, e276.

- Grace, K.; Salvatier, J.; Dafoe, A.; Zhang, B.; Evans, O. When will AI exceed human performance? Evidence from AI experts. J. Artif. Intell. Res. 2018, 62, 729–754.

- Parasuraman, A.; Colby, C.L. Techno-Ready Marketing: How and Why Your Customers Adopt Technology; Free Press: New York, NY, USA, 2001.

- Lai, K.; Xiong, X.; Jiang, X.; Sun, M.; He, L. Who falls for rumor? Influence of personality traits on false rumor belief. Pers. Indiv. Differ. 2020, 152, 109520.

- Tian, Y.; Zhang, H.; Jiang, Y.; Yang, Y. Understanding trust and perceived risk in sharing accommodation: An extended elaboration likelihood model and moderated by risk attitude. J. Hosp. Market. Manag. 2021, 31, 348–368.

- Zhang, G.; Yang, J.; Wu, G.; Hu, X. Exploring the interactive influence on landscape preference from multiple visual attributes: Openness, richness, order, and depth. Urban For. Urban Green. 2021, 65, 127363.

- Hayes, A.F. Introduction to Mediation, Moderation, and Conditional Process Analysis: A Regression-Based Approach; Guilford Publications: New York, NY, USA, 2017.

- Wang, W.; Benbasat, I. Recommendation agents for electronic commerce: Effects of explanation facilities on trusting beliefs. J. Manage. Inform. Syst. 2007, 23, 217–246.

- Chen, T.W.; Sundar, S.S. This app would like to use your current location to better serve you: Importance of user assent and system transparency in personalized mobile services. In Proceedings of the 2018 CHI Conference on Human Factors in Computing Systems, Montréal, QC, Canada, 21–27 April 2018; pp. 1–13.

- Dobrowolski, Z.; Drozdowski, G.; Panait, M. Understanding the Impact of Generation Z on Risk Management—A Preliminary Views on Values, Competencies, and Ethics of the Generation Z in Public Administration. Int. J. Environ. Res. Public Health 2022, 19, 3868.

- Thiebes, S.; Lins, S.; Sunyaev, A. Trustworthy artificial intelligence. Electron. Mark. 2021, 31, 447–464.

| Construct | Items | References |
|---|---|---|
| Perceived transparency | I can access a great deal of information that explains how the AI system works. | Zhao et al. [13] |
| | I can see plenty of information about the AI system’s inner logic. | |
| | I feel that the amount of available information about the AI system’s reasoning is large. | |
| Effectiveness | I think the AI system makes better decisions than humans. | Castelo et al. [15] |
| | I think the decisions made by the AI system are useful. | |
| | I think the AI system can make decisions very well. | |
| Discomfort | The decision made by the AI system makes me feel uncomfortable. | Castelo et al. [15] |
| | The decision made by the AI system makes me feel resistant. | |
| | The decision made by the AI system makes me feel unsettled. | |
| Trust | I would heavily rely on the AI system. | Höddinghaus et al. [2] |
| | I would trust the AI system completely. | |
| | I would feel comfortable relying on the AI system. | |
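
Each construct above is measured with three items, and the next table reports internal consistency as Cronbach’s α. As an illustrative sketch of that statistic (not the authors’ analysis code), the functions below compute a respondent’s composite score as the item mean and α from item-level responses using sample variances. The function names and the ratings are hypothetical; the study’s item-level data are not reproduced here.

```python
from statistics import mean, variance


def scale_score(item_responses):
    """Composite score for one respondent: the mean of that respondent's item ratings."""
    return mean(item_responses)


def cronbach_alpha(items):
    """Cronbach's alpha for k items.

    `items` is a list of k lists; items[j][i] is respondent i's rating on item j.
    alpha = k / (k - 1) * (1 - sum of item variances / variance of the summed score),
    using sample variances (n - 1 denominator) throughout.
    """
    k = len(items)
    if k < 2:
        raise ValueError("Cronbach's alpha needs at least two items")
    n = len(items[0])
    if any(len(item) != n for item in items):
        raise ValueError("every item must have one rating per respondent")
    # Summed score per respondent across the k items.
    totals = [sum(item[i] for item in items) for i in range(n)]
    item_var_sum = sum(variance(item) for item in items)
    return k / (k - 1) * (1 - item_var_sum / variance(totals))


# Hypothetical ratings from five respondents on a three-item scale
# (illustrative numbers only, not study data).
trust_items = [
    [5, 6, 4, 7, 3],  # item 1
    [5, 7, 4, 6, 3],  # item 2
    [4, 6, 5, 7, 2],  # item 3
]
print(round(cronbach_alpha(trust_items), 3))
print([scale_score([item[i] for item in trust_items]) for i in range(5)])
```

Identical items give α = 1, and items that move in opposite directions can push α below zero, which is why α is read as a lower-bound reliability estimate only when items are positively keyed.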

| Factors | Items | Standardized Factor Loadings (λ) | t-Value | Residual Variance (1 − λ²) | Cronbach’s α | Composite Reliability (CR) | Average Variance Extracted (AVE) |
|---|---|---|---|---|---|---|---|
| Trust | TRU01 | 0.801 | 10.789 | 0.358 | 0.867 | 0.872 | 0.695 |
| | TRU02 | 0.904 | 12.932 | 0.183 | | | |
| | TRU03 | 0.792 | 10.611 | 0.373 | | | |
| Effectiveness | EFF01 | 0.706 | 8.803 | 0.502 | 0.805 | 0.817 | 0.599 |
| | EFF02 | 0.800 | 10.422 | 0.360 | | | |
| | EFF03 | 0.812 | 10.645 | 0.341 | | | |
| Discomfort | DIS01 | 0.850 | 11.827 | 0.278 | 0.897 | 0.901 | 0.753 |
| | DIS02 | 0.949 | 14.018 | 0.099 | | | |
| | DIS03 | 0.798 | 10.807 | 0.363 | | | |
| Perceived Transparency | PER01 | 0.799 | 10.400 | 0.362 | 0.851 | 0.853 | 0.660 |
| | PER02 | 0.861 | 11.519 | 0.259 | | | |
| | PER03 | 0.774 | 9.979 | 0.401 | | | |
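
The residual-variance, CR, and AVE columns can be derived from the standardized loadings alone. Assuming the conventional formulas (residual variance = 1 − λ², CR = (Σλ)² / ((Σλ)² + Σ(1 − λ²)), AVE = Σλ² / k), the sketch below recomputes them from the loadings as printed. The dictionary and function names are illustrative. Cronbach’s α cannot be recovered this way because it requires item-level responses.

```python
# Standardized loadings copied from the table above.
LOADINGS = {
    "Trust": [0.801, 0.904, 0.792],
    "Effectiveness": [0.706, 0.800, 0.812],
    "Discomfort": [0.850, 0.949, 0.798],
    "Perceived Transparency": [0.799, 0.861, 0.774],
}


def residual_variances(loadings):
    """Error variance of each standardized indicator: 1 - lambda^2."""
    return [1 - lam ** 2 for lam in loadings]


def composite_reliability(loadings):
    """CR = (sum of lambda)^2 / ((sum of lambda)^2 + sum of residual variances)."""
    squared_sum = sum(loadings) ** 2
    return squared_sum / (squared_sum + sum(residual_variances(loadings)))


def average_variance_extracted(loadings):
    """AVE = mean of the squared standardized loadings."""
    return sum(lam ** 2 for lam in loadings) / len(loadings)


for construct, lams in LOADINGS.items():
    print(
        f"{construct}: CR = {composite_reliability(lams):.3f}, "
        f"AVE = {average_variance_extracted(lams):.3f}"
    )
```

Run on these loadings, the sketch reproduces the CR and AVE columns to three decimals, which is consistent with these being the formulas behind the table. All four constructs clear the commonly cited thresholds of CR > 0.7 and AVE > 0.5.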

| Variable | Mean | SD | 1 | 2 | 3 | 4 |
|---|---|---|---|---|---|---|
| 1. Trust | 4.655 | 1.223 | 0.834 | | | |
| 2. Effectiveness | 4.731 | 0.984 | 0.699 ** | 0.774 | | |
| 3. Discomfort | 3.367 | 1.285 | −0.260 ** | −0.242 ** | 0.868 | |
| 4. Perceived Transparency | 4.748 | 1.266 | 0.382 ** | 0.353 ** | 0.172 ** | 0.812 |
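
The diagonal entries of this correlation table match the square roots of the AVE values in the preceding table (for example, √0.695 ≈ 0.834), which is the layout of a Fornell–Larcker discriminant-validity check. Reading the diagonal this way is an interpretation, not something the table states. Under that assumption, the sketch below flags any pair of constructs whose absolute correlation is not smaller than both constructs’ √AVE; the function name is hypothetical.

```python
import math

# AVE values from the reliability table; correlations from the table above.
AVE = {
    "Trust": 0.695,
    "Effectiveness": 0.599,
    "Discomfort": 0.753,
    "Perceived Transparency": 0.660,
}
CORRELATIONS = {
    ("Effectiveness", "Trust"): 0.699,
    ("Discomfort", "Trust"): -0.260,
    ("Discomfort", "Effectiveness"): -0.242,
    ("Perceived Transparency", "Trust"): 0.382,
    ("Perceived Transparency", "Effectiveness"): 0.353,
    ("Perceived Transparency", "Discomfort"): 0.172,
}


def fornell_larcker_violations(ave, correlations):
    """Return pairs whose |r| is not smaller than the smaller of the two sqrt(AVE) values."""
    violations = []
    for (a, b), r in correlations.items():
        if abs(r) >= min(math.sqrt(ave[a]), math.sqrt(ave[b])):
            violations.append((a, b, r))
    return violations


for name, value in AVE.items():
    print(f"sqrt(AVE) for {name}: {math.sqrt(value):.3f}")
print("Violations:", fornell_larcker_violations(AVE, CORRELATIONS))
```

The largest correlation, trust with effectiveness (0.699), stays below √AVE for effectiveness (≈ 0.774), so no pair is flagged.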

| Dependent Variable | Variable | β | SE | t | LLCI (95% CI) | ULCI (95% CI) | R² | F |
|---|---|---|---|---|---|---|---|---|
| Perceived transparency | Constant | 4.470 *** | 0.116 | 38.688 | 4.242 | 4.697 | 0.046 | 11.336 *** |
| | AI decision-making transparency | 0.544 *** | 0.162 | 3.367 | 0.226 | 0.863 | | |
| Effectiveness | Constant | 3.429 *** | 0.235 | 14.596 | 2.966 | 3.892 | 0.125 | 16.567 *** |
| | AI decision-making transparency | −0.026 | 0.124 | −0.208 | −0.269 | 0.218 | | |
| | Perceived transparency | 0.277 *** | 0.049 | 5.662 | 0.181 | 0.373 | | |
| Discomfort | Constant | 2.552 *** | 0.323 | 7.910 | 1.916 | 3.188 | 0.031 | 3.753 * |
| | AI decision-making transparency | −0.113 | 0.170 | −0.665 | −0.447 | 0.221 | | |
| | Perceived transparency | 0.184 ** | 0.067 | 2.739 | 0.052 | 0.316 | | |
| Trust | Constant | 0.772 * | 0.353 | 2.189 | 0.077 | 1.467 | 0.531 | 65.140 *** |
| | AI decision-making transparency | −0.119 | 0.113 | −1.049 | −0.341 | 0.104 | | |
| | Perceived transparency | 0.203 *** | 0.050 | 4.084 | 0.105 | 0.301 | | |
| | Effectiveness | 0.734 *** | 0.064 | 11.554 | 0.609 | 0.859 | | |
| | Discomfort | −0.146 ** | 0.046 | −3.156 | −0.237 | −0.055 | | |
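
In a regression-based mediation analysis of the kind described by Hayes (2017), each specific indirect effect is the product of the coefficients along its path, and the total effect equals the direct effect plus the sum of the indirect effects. The sketch below applies that arithmetic to the β values in the table above. The abbreviations (ADT, EPT, EPE, EDA, ETA) follow the effects table that comes next; their expansions and the constant names are our reading, not definitions given in the tables.

```python
# Unstandardized path coefficients copied from the regression table above.
# ADT = AI decision-making transparency, EPT = perceived transparency,
# EPE = perceived effectiveness, EDA = discomfort with AI, ETA = trust in AI
# (expansions inferred from the section headings).
A1 = 0.544        # ADT -> EPT
A2 = -0.026       # ADT -> EPE, controlling for EPT
A3 = -0.113       # ADT -> EDA, controlling for EPT
D21 = 0.277       # EPT -> EPE
D31 = 0.184       # EPT -> EDA
B1 = 0.203        # EPT -> ETA
B2 = 0.734        # EPE -> ETA
B3 = -0.146       # EDA -> ETA
C_PRIME = -0.119  # ADT -> ETA, the direct effect


def specific_indirect_effects():
    """Each specific indirect effect is the product of the coefficients on its path."""
    return {
        "ADT -> EPT -> ETA": A1 * B1,
        "ADT -> EPE -> ETA": A2 * B2,
        "ADT -> EDA -> ETA": A3 * B3,
        "ADT -> EPT -> EPE -> ETA": A1 * D21 * B2,
        "ADT -> EPT -> EDA -> ETA": A1 * D31 * B3,
    }


effects = specific_indirect_effects()
total_indirect = sum(effects.values())
total_effect = C_PRIME + total_indirect  # total = direct + total indirect

for path, value in effects.items():
    print(f"{path}: {value:.3f}")
print(f"Total indirect: {total_indirect:.3f}")
print(f"Total effect: {total_effect:.3f}")
```

Because the published coefficients are rounded to three decimals, these products agree with the reported point estimates only to within about 0.002; the bootstrap standard errors and intervals cannot be recovered from the coefficients.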

| Effect | Path | Estimate | SE / Boot SE | LLCI (95% CI) | ULCI (95% CI) |
|---|---|---|---|---|---|
| Total effect | | 0.086 | 0.160 | −0.229 | 0.401 |
| Direct effect | | −0.119 | 0.113 | −0.341 | 0.104 |
| Indirect effect | TOTAL | 0.204 | 0.119 | −0.027 | 0.437 |
| | ADT → EPT → ETA | 0.111 | 0.045 | 0.034 | 0.212 |
| | ADT → EPE → ETA | −0.019 | 0.091 | −0.199 | 0.166 |
| | ADT → EDA → ETA | 0.017 | 0.026 | −0.033 | 0.073 |
| | ADT → EPT → EPE → ETA | 0.111 | 0.044 | 0.037 | 0.210 |
| | ADT → EPT → EDA → ETA | −0.015 | 0.009 | −0.037 | −0.002 |

Note: ADT = AI decision-making transparency; EPT = employees’ perceived transparency; EPE = employees’ perceived effectiveness of AI; EDA = employees’ discomfort with AI; ETA = employees’ trust in AI.

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2022 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Yu, L.; Li, Y. Artificial Intelligence Decision-Making Transparency and Employees’ Trust: The Parallel Multiple Mediating Effect of Effectiveness and Discomfort. Behav. Sci. 2022, 12, 127. https://doi.org/10.3390/bs12050127