Which Receives More Attention, Online Review Sentiment or Online Review Rating? Spillover Effect Analysis from JD.com

Abstract

1. Introduction

2. Literature Review

3. Theory and Hypotheses

3.1. Online Review Sentiment

3.2. Role of Online Review Characteristics

3.2.1. Product Attributes

3.2.2. Online Review Photos

3.2.3. Online Review Usefulness

4. Methodology

4.1. Product Selection

4.2. Data Collection

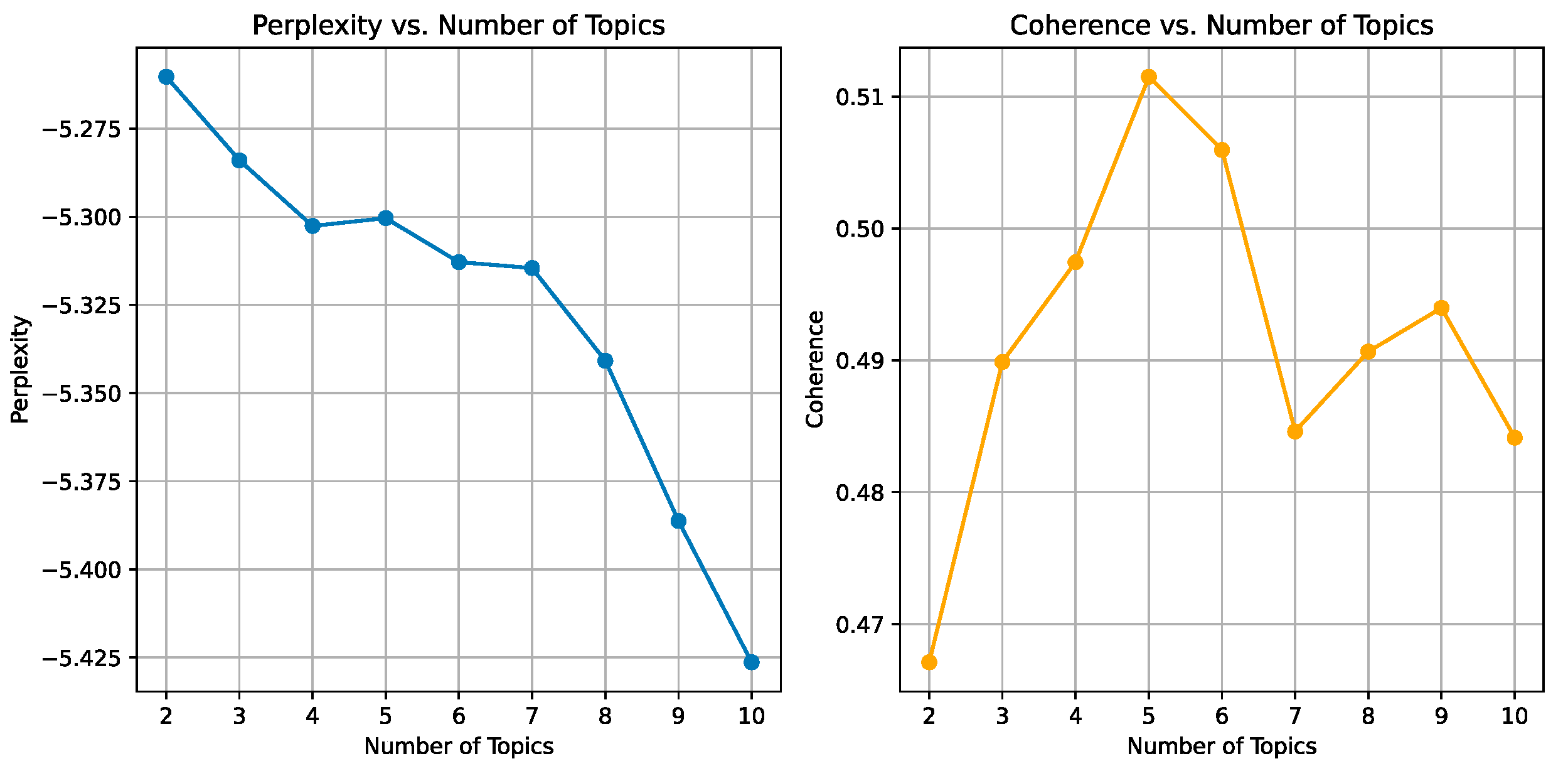

4.3. Product Attribute Analysis

4.4. Variable Measurements

4.5. Empirical Specification

4.5.1. Baseline Model

4.5.2. Interaction Model

5. Results

5.1. Regression Results

| Variables | Model 6 | Model 7 | Model 8 | Model 9 |
|---|---|---|---|---|
| | 0.0963 *** (7.08) | 0.0964 *** (7.11) | 0.091 *** (6.68) | 0.0916 *** (6.72) |
| | −0.1049 *** (−4.94) | −0.1195 *** (−5.6) | −0.1425 *** (−6.1) | −0.1493 *** (−6.16) |
| | 0.0266 ** (2.43) | 0.0272 ** (2.49) | 0.0248 ** (2.27) | 0.0254 ** (2.33) |
| | −0.0156 (−0.95) | −0.0153 (−0.94) | −0.0114 (−0.7) | −0.0116 (−0.71) |
| | 0.0301 (1.5) | 0.0305 * (1.75) | 0.0247 (1.41) | 0.0255 (1.28) |
| | 0.0253 * (1.76) | 0.0411 *** (2.6) | 0.0292 ** (2.03) | 0.0405 *** (2.57) |
| | 0.0738 *** (4.15) | 0.0733 *** (4.19) | 0.1006 *** (5.23) | 0.0972 *** (5) |
| −0.0097 (−0.47) | −0.0066 (−0.32) | | | |
| −0.0413 ** (−2.4) | −0.0307 * (−1.75) | | | |
| −0.0993 *** (−3.32) | −0.0884 *** (−2.87) | | | |
| | 0.0262 * (1.66) | 0.026 * (1.66) | 0.0282 * (1.8) | 0.0279 * (1.77) |
| | 0.0662 *** (5.4) | 0.0689 *** (5.62) | 0.0636 *** (5.22) | 0.0659 *** (5.46) |
| | 0.0261 * (1.76) | 0.0241 (1.64) | 0.0192 (1.3) | 0.0185 (1.25) |
| #products | 269 | 269 | 269 | 269 |
| #online review | 250,300 | 250,300 | 250,300 | 250,300 |
| N | 46,116 | 46,116 | 46,116 | 46,116 |
| R-squared | 0.1502 | 0.1551 | 0.163 | 0.1654 |
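The cells in the table above give a coefficient, significance stars, and a statistic in parentheses. As a reading aid, the following minimal sketch converts such a statistic into a two-sided p-value and a star label. It rests on two assumptions that this text does not confirm: that the parenthesized values are t-statistics, and that the stars follow the common convention of *** for p < 0.01, ** for p < 0.05, and * for p < 0.10. With N = 46,116, a standard normal approximation to the t distribution should be adequate. The function names `two_sided_p` and `stars` are illustrative only.

```python
import math


def two_sided_p(t_stat: float) -> float:
    """Two-sided p-value for a test statistic, using a standard normal approximation."""
    # P(|Z| > |t|) = erfc(|t| / sqrt(2)) for a standard normal Z.
    return math.erfc(abs(t_stat) / math.sqrt(2.0))


def stars(p_value: float) -> str:
    """Map a p-value to stars (ASSUMED thresholds: 0.01, 0.05, 0.10)."""
    if p_value < 0.01:
        return "***"
    if p_value < 0.05:
        return "**"
    if p_value < 0.10:
        return "*"
    return ""


if __name__ == "__main__":
    # (coefficient, statistic) pairs copied from the first four rows of the Model 6 column.
    model6 = [(0.0963, 7.08), (-0.1049, -4.94), (0.0266, 2.43), (-0.0156, -0.95)]
    for coef, t in model6:
        p = two_sided_p(t)
        print(f"{coef:+.4f}  t={t:+.2f}  p={p:.4f}  {stars(p)}")
```

Under these assumptions the labels agree with the stars printed for those four Model 6 cells. Statistics near a threshold (for example 1.64 or 1.96) can land on either side of it, because the table reports them rounded to two decimals.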

5.2. Robustness Checks

6. Discussion and Implications

6.1. Discussion

6.2. Theoretical Implications

6.3. Managerial Implications

6.4. Limitations and Future Work

7. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest


| Topic | Keywords | Online Review |
|---|---|---|
| Quality | Quality (0.16), craftsmanship (0.13), good (0.095), texture (0.090), color discrepancy (0.076), fading (0.054), acceptable (0.052), threads (0.043), detail (0.036), washing (0.036) | The quality is pretty good, the collar stays crisp. After wearing it all summer, it is still good as new. |
| Size | Fit (0.088), right size (0.062), size (0.060), well-fitting (0.058), standard (0.052), size appropriate (0.052), off-size (0.045), correct size (0.039), accurate (0.038), suitable (0.035) | The clothes have arrived quickly, are the right size, and are well made. It is a satisfying shopping experience. |
| Fabric | Fabric (0.084), texture (0.075), wrinkles (0.069), pure cotton (0.061), material (0.054), wrinkle-resistant (0.054), breathable (0.050), delicate (0.034), materials used (0.020), skin-friendly (0.020) | The material feels comfortable, even better than some big brands, and the price is affordable. I will definitely come again to purchase. |
| Design | Cut (0.12), like (0.11), color (0.095), fit (0.061), fashionable (0.055), versatile (0.046), good-looking (0.037), slim-fitting (0.037), style (0.032), on-body effect (0.031) | Fast delivery speed, fashionable clothes design with no redundant elements, very slim. |
| Comfort | Comfortable (0.13), comfy (0.11), soft (0.090), breathable (0.034), lightweight (0.027), supple (0.027), comfortable to wear (0.026), breathability (0.025), well-fitting (0.024), easy to wear (0.021) | I bought the size 39. 100% long-staple cotton body wear is more comfortable. However, the color choice is a bit less. |

| Variables | Descriptions |
|---|---|
| Dependent variable | |
| | Sales ranking of focal product (range: 0+) |
| Independent variables | |
| | Mean sentiment score of focal product’s online reviews (range: 0–1) |
| | Mean sentiment score of competitive products’ online reviews (range: 0–1) |
| | Mean rating of focal product (range: 0–5) |
| | Mean rating of competitive products (range: 0–5) |
| | Mean number of photos from competitive products’ online reviews (range: 0+) |
| | Mean product polarity attribute difference from competitive products’ online reviews (range: 0–5) |
| | Mean number of useful votes from competitive products’ online reviews (range: 0+) |
| Control variables | |
| | Mean volume of focal product’s online reviews (range: 0+) |
| | Whether a product is under promotion (unit: 0, 1) |
| | Mean number of brand votes of focal product (range: 0+) |

| Variables | Minimum | Maximum | Mean | Std. Dev. |
|---|---|---|---|---|
| | 2 | 193 | 10.004 | 13.649 |
| | 0 | 0.999 | 0.927 | 0.111 |
| | 0 | 0.999 | 0.895 | 0.076 |
| | 1 | 5 | 4.95 | 0.397 |
| | 1 | 5 | 4.94 | 0.116 |
| | 0 | 4.376 | 0.589 | 0.754 |
| | −1 | 5 | 3.819 | 1.477 |
| | 0 | 33.21 | 1.231 | 1.934 |
| | 7 | 243 | 38.36 | 2.895 |
| | 0 | 1 | 0.953 | 0.211 |
| | 282 | 10,960 | 3773.67 | 2924.81 |

| Variables | Model 1 | Model 2 | Model 3 | Model 4 | Model 5 |
|---|---|---|---|---|---|
| | 0.1011 *** (7.39) | 0.1032 *** (7.56) | 0.1002 *** (7.34) | 0.0947 *** (7.02) | 0.0963 *** (7.08) |
| | −0.1138 *** (−5.82) | −0.1198 *** (−4.75) | −0.1181 *** (−6.01) | −0.1123 *** (−5.81) | −0.1043 *** (−5.1) |
| | 0.0267 ** (2.41) | 0.0269 ** (2.43) | 0.0262 ** (2.36) | 0.0271 ** (2.46) | 0.0266 ** (2.43) |
| | −0.0103 (−0.63) | −0.0075 (−0.45) | −0.0141 (−0.85) | −0.0148 (−0.91) | −0.0156 (−0.95) |
| −0.0419 ** (2.41) | −0.0312 * (1.78) | | | | |
| −0.0283 ** (1.96) | −0.0253 * (1.76) | | | | |
| −0.0814 *** (4.73) | −0.0735 *** (4.19) | | | | |
| | 0.0484 *** (3.18) | 0.0404 ** (2.6) | 0.0431 *** (2.79) | 0.0355 ** (2.32) | 0.0261 * (1.66) |
| | 0.0818 *** (6.84) | 0.0789 *** (6.57) | 0.0813 *** (6.8) | 0.0674 *** (5.51) | 0.0661 *** (5.41) |
| | 0.0394 *** (2.71) | 0.0385 *** (2.65) | 0.0404 *** (2.78) | 0.0241 * (1.83) | 0.0259 * (1.76) |
| #products | 269 | 269 | 269 | 269 | 269 |
| #online review | 250,300 | 250,300 | 250,300 | 250,300 | 250,300 |
| N | 46,116 | 46,116 | 46,116 | 46,116 | 46,116 |
| R-squared | 0.1114 | 0.123 | 0.1163 | 0.1405 | 0.1501 |

| Variables | Remove Some Samples | Random Selecting | Mobile Phone |
|---|---|---|---|
| | 0.1003 *** (7.24) | 0.0891 *** (5.76) | 0.1136 *** (2.71) |
| | −0.1178 *** (−5.96) | −0.1218 *** (−5.31) | −0.1249 *** (−2.63) |
| | 0.0328 *** (2.91) | 0.0362 *** (2.65) | 0.0834 ** (2.03) |
| | −0.0101 (−0.59) | −0.0391 (1.62) | −0.0957 * (−1.89) |
| | 0.0306 * (1.92) | 0.023 (1.36) | 0.1084 ** (2.45) |
| | 0.1022 *** (6.95) | 0.1036 *** (6.02) | 0.2658 ** (2.22) |
| | 0.0331 ** (2.28) | 0.0556 *** (3.22) | 3.1471 * (1.77) |
| R-squared | 0.1337 | 0.1459 | 0.1937 |

© 2024 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Shan, S.; Yang, Y.; Li, C. Which Receives More Attention, Online Review Sentiment or Online Review Rating? Spillover Effect Analysis from JD.com. Behav. Sci. 2024, 14, 823. https://doi.org/10.3390/bs14090823
