The Impact of Chatbot Response Strategies and Emojis Usage on Customers’ Purchase Intention: The Mediating Roles of Psychological Distance and Performance Expectancy

Abstract

1. Introduction

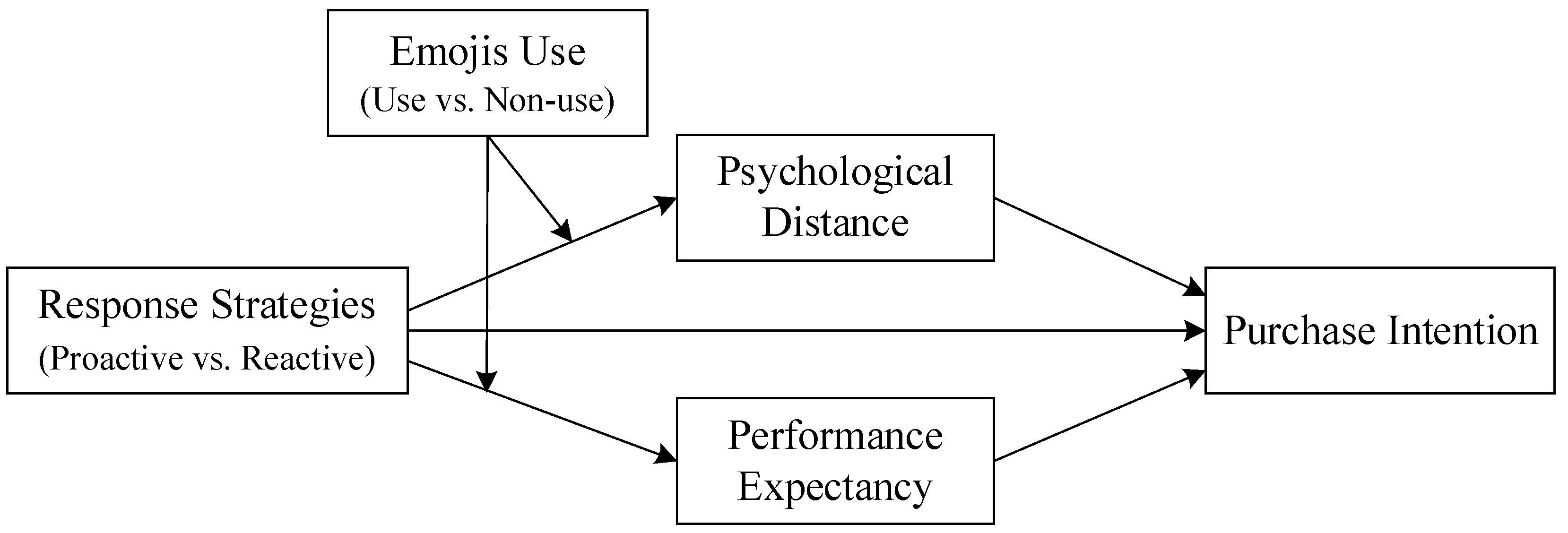

- RQ1: How do chatbot response strategies (proactive/reactive) directly influence user purchase intention?

- RQ2: How do psychological distance and performance expectancy mediate the relationship between chatbot response strategies and purchase intention?

- RQ3: How do emojis moderate the impact of chatbot response strategies on psychological distance and performance expectancy?

2. Literature Review

3. Hypothesis Development

3.1. Chatbot Response Strategies and Users’ Purchase Intention

3.2. The Mediating Role of Psychological Distance

3.3. The Mediating Role of Performance Expectancy

3.4. The Moderating Role of Emojis

4. Method and Results

4.1. Study 1

4.1.1. Pilot Test

4.1.2. Procedure and Measures

4.1.3. Results

4.2. Study 2

4.2.1. Pilot Test

4.2.2. Procedure and Measures

4.2.3. Results

4.3. Study 3

4.3.1. Pilot Test

4.3.2. Procedure and Measures

4.3.3. Results

5. Conclusions and Discussion

5.1. Research Findings

5.2. Theoretical Contribution

5.3. Managerial Implications

5.4. Limitations and Future Research

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest

References

- Abdallah, W., Harraf, A., Mosusa, O., & Sartawi, A. (2023). Investigating factors impacting customer acceptance of artificial intelligence chatbot: Banking sector of Kuwait. International Journal of Applied Research in Management and Economics, 5(4), 45–58.

- Adam, M., Wessel, M., & Benlian, A. (2021). AI-based chatbots in customer service and their effects on user compliance. Electronic Markets, 31(2), 427–445.

- Aslam, F. (2023). The Impact of artificial intelligence on chatbot technology: A study on the current advancements and leading innovations. European Journal of Technology, 7(3), 62–72.

- Azalan, N. S., Mokhtar, M. M., & Abdul Karim, A. H. (2022). Modelling e-Zakat acceptance among Malaysian: An application of UTAUT model during Covid19 pandemic. International Journal of Academic Research in Business and Social Sciences, 12(12), 1619–1625.

- Bai, Q., Dan, Q., Mu, Z., & Yang, M. (2019). A systematic review of emoji: Current research and future perspectives. Frontiers in Psychology, 10, 2221.

- Beattie, A., Edwards, A. P., & Edwards, C. (2020). A bot and a smile: Interpersonal impressions of chatbots and humans using emoji in computer-mediated communication. Communication Studies, 71(3), 409–427.

- Blazevic, V., & Sidaoui, K. (2022). The TRISEC framework for optimizing conversational agent design across search, experience and credence service contexts. Journal of Service Management, 33(4/5), 733–746.

- Blut, M., Wang, C., Wünderlich, N. V., & Brock, C. (2021). Understanding anthropomorphism in service provision: A meta-analysis of physical robots, chatbots, and other AI. Journal of the Academy of Marketing Science, 49(4), 632–658.

- Buyukgoz, S., Grosinger, J., Chetouani, M., & Saffiotti, A. (2022). Two ways to make your robot proactive: Reasoning about human intentions or reasoning about possible futures. Frontiers in Robotics and AI, 9, 929267.

- Cai, D., Li, H., & Law, R. (2022). Anthropomorphism and OTA chatbot adoption: A mixed methods study. Journal of Travel & Tourism Marketing, 39(2), 228–255.

- Cavalheiro, B. P., Prada, M., Rodrigues, D. L., Lopes, D., & Garrido, M. V. (2022). Evaluating the adequacy of emoji use in positive and negative messages from close and distant senders. Cyberpsychology, Behavior, and Social Networking, 25(3), 194–199.

- Chen, Q., Lu, Y., Gong, Y., & Xiong, J. (2023). Can AI chatbots help retain customers? Impact of AI service quality on customer loyalty. Internet Research, 33(6), 2205–2243.

- Chen, S., Li, X., Liu, K., & Wang, X. (2023). Chatbot or human? The impact of online customer service on consumers’ purchase intentions. Psychology & Marketing, 40(11), 2186–2200.

- Chien, S.-Y., Lin, Y.-L., & Chang, B.-F. (2024). The effects of intimacy and proactivity on trust in human-humanoid robot interaction. Information Systems Frontiers, 26(1), 75–90.

- Choudhury, A., & Shamszare, H. (2024). The impact of performance expectancy, workload, risk, and satisfaction on trust in ChatGPT: Cross-sectional survey analysis. JMIR Human Factors, 11(1), e55399.

- Chung, S. I., & Han, K.-H. (2022). Consumer perception of chatbots and purchase intentions: Anthropomorphism and conversational relevance. International Journal of Advanced Culture Technology, 10(1), 211–229.

- Crolic, C., Thomaz, F., Hadi, R., & Stephen, A. T. (2021). Blame the bot: Anthropomorphism and anger in customer–chatbot interactions. Journal of Marketing, 86(1), 132–148.

- Daniel, T. A., & Camp, A. L. (2020). Emojis affect processing fluency on social media. Psychology of Popular Media, 9(2), 208–213.

- De Cicco, R., Silva, S. C., & Alparone, F. R. (2020). Millennials’ attitude toward chatbots: An experimental study in a social relationship perspective. International Journal of Retail & Distribution Management, 48(11), 1213–1233.

- Eiskjær, S., Pedersen, C. F., Skov, S. T., & Andersen, M. Ø. (2023). Usability and performance expectancy govern spine surgeons’ use of a clinical decision support system for shared decision-making on the choice of treatment of common lumbar degenerative disorders. Frontiers in Digital Health, 5, 1225540.

- El-Shihy, D., Abdelraouf, M., Hegazy, M., & Hassan, N. (2024). The influence of AI chatbots in fintech services on customer loyalty within the banking industry. Future of Business Administration, 3(1), 16–28.

- Erle, T. M., Schmid, K., Goslar, S. H., & Martin, J. D. (2022). Emojis as social information in digital communication. Emotion, 22(7), 1529–1543.

- Fournier-Tombs, E., & McHardy, J. (2023). A medical ethics framework for conversational artificial intelligence. Journal of Medical Internet Research, 25, e43068.

- Ghosh, S., Ness, S., & Salunkhe, S. (2024). The role of AI enabled chatbots in omnichannel customer service. Journal of Engineering Research and Reports, 26(6), 327–345.

- Gnewuch, U., Morana, S., Hinz, O., Kellner, R., & Maedche, A. (2023). More than a bot? The impact of disclosing human involvement on customer interactions with hybrid service agents. Information Systems Research, 35(3), 936–955.

- Grosinger, J., Pecora, F., & Saffiotti, A. (2019). Robots that maintain equilibrium: Proactivity by reasoning about user intentions and preferences. Pattern Recognition Letters, 118, 85–93.

- Gursoy, D., Chi, O. H., Lu, L., & Nunkoo, R. (2019). Consumers acceptance of artificially intelligent (AI) device use in service delivery. International Journal of Information Management, 49, 157–169.

- Han, E., Yin, D., & Zhang, H. (2023). Bots with feelings: Should AI agents express positive emotion in customer service? Information Systems Research, 34(3), 1296–1311.

- Hao, M., Cao, W., Wu, M., Liu, Z., She, J., Chen, L., & Zhang, R. (2017). Proposal of initiative service model for service robot. CAAI Transactions on Intelligence Technology, 2(4), 148–153.

- Haupt, M., Rozumowski, A., Freidank, J., & Haas, A. (2023). Seeking empathy or suggesting a solution? Effects of chatbot messages on service failure recovery. Electronic Markets, 33(1), 56.

- Hu, L., Filieri, R., Acikgoz, F., Zollo, L., & Rialti, R. (2023). The effect of utilitarian and hedonic motivations on mobile shopping outcomes. A cross-cultural analysis. International Journal of Consumer Studies, 47(2), 751–766.

- Huang, M.-H., & Rust, R. T. (2021). Engaged to a robot? The role of AI in service. Journal of Service Research, 24(1), 30–41.

- Huang, Y., & Gursoy, D. (2024). Customers’ online service encounter satisfaction with chatbots: Interaction effects of language style and decision-making journey stage. International Journal of Contemporary Hospitality Management, 36, 4074–4091.

- Huda, N. U., Sahito, S. F., Gilal, A. R., Abro, A., Alshanqiti, A., Alsughayyir, A., & Palli, A. S. (2024). Impact of contradicting subtle emotion cues on large language models with various prompting techniques. International Journal of Advanced Computer Science and Applications (IJACSA), 15(4), 407.

- Huțul, T.-D., Popescu, A., Karner-Huțuleac, A., Holman, A. C., & Huțul, A. (2024). Who’s willing to lay on the virtual couch? Attitudes, anthropomorphism and need for human interaction as factors of intentions to use chatbots for psychotherapy. Counselling and Psychotherapy Research, 24(4), 1479–1488.

- Ischen, C., Araujo, T., Van Noort, G., Voorveld, H., & Smit, E. (2020). “I am here to assist you today”: The role of entity, interactivity and experiential perceptions in chatbot persuasion. Journal of Broadcasting & Electronic Media, 64(4), 615–639.

- Jasin, J., Ng, H. T., Atmosukarto, I., Iyer, P., Osman, F., Wong, P. Y. K., Pua, C. Y., & Cheow, W. S. (2023). The implementation of chatbot-mediated immediacy for synchronous communication in an online chemistry course. Education and Information Technologies, 28(8), 10665–10690.

- Jiang, H., Cheng, Y., Yang, J., & Gao, S. (2022). AI-powered chatbot communication with customers: Dialogic interactions, satisfaction, engagement, and customer behavior. Computers in Human Behavior, 134, 107329.

- Jiang, K., Qin, M., & Li, S. (2022). Chatbots in retail: How do they affect the continued use and purchase intentions of Chinese consumers? Journal of Consumer Behaviour, 21(4), 756–772.

- Jiménez-Barreto, J., Rubio, N., & Molinillo, S. (2023). How chatbot language shapes consumer perceptions: The role of concreteness and shared competence. Journal of Interactive Marketing, 58(4), 380–399.

- Kang, J.-W., & Namkung, Y. (2019). The information quality and source credibility matter in customers’ evaluation toward food O2O commerce. International Journal of Hospitality Management, 78, 189–198.

- Keidar, O., Parmet, Y., Olatunji, S. A., & Edan, Y. (2024). Comparison of proactive and reactive interaction modes in a mobile robotic telecare study. Applied Ergonomics, 118, 104269.

- Kim, A. J., Yang, J., Jang, Y., & Baek, J. S. (2021). Acceptance of an informational antituberculosis chatbot among korean adults: Mixed methods research. JMIR mHealth and uHealth, 9(11), e26424.

- Kim, J., & Lucas, A. F. (2024). Perceptions and acceptance of cashless gambling technology: An empirical study of U.S. and Australian consumers. Cornell Hospitality Quarterly, 65(2), 251–265.

- Klein, K., & Martinez, L. F. (2023). The impact of anthropomorphism on customer satisfaction in chatbot commerce: An experimental study in the food sector. Electronic Commerce Research, 23(4), 2789–2825.

- Li, B., Yao, R., & Nan, Y. (2023). How do friendship artificial intelligence chatbots (FAIC) benefit the continuance using intention and customer engagement? Journal of Consumer Behaviour, 22(6), 1376–1398.

- Li, D., Liu, C., & Xie, L. (2022). How do consumers engage with proactive service robots? The roles of interaction orientation and corporate reputation. International Journal of Contemporary Hospitality Management, 34(11), 3962–3981.

- Li, M., & Wang, R. (2023). Chatbots in e-commerce: The effect of chatbot language style on customers’ continuance usage intention and attitude toward brand. Journal of Retailing and Consumer Services, 71, 103209.

- Li, X., & Sung, Y. (2021). Anthropomorphism brings us closer: The mediating role of psychological distance in User–AI assistant interactions. Computers in Human Behavior, 118, 106680.

- Li, Y., & Shin, H. (2023). Should a luxury brand’s chatbot use emoticons? Impact on brand status. Journal of Consumer Behaviour, 22(3), 569–581.

- Liang, H.-Y., Chu, C.-Y., & Lin, J.-S.C. (2020). Engaging customers with employees in service encounters: Linking employee and customer service engagement behaviors through relational energy and interaction cohesion. Journal of Service Management, 31(6), 1071–1105.

- Liu, D., Lv, Y., & Huang, W. (2023). How do consumers react to chatbots’ humorous emojis in service failures. Technology in Society, 73, 102244.

- Liu, M., Yang, Y., Ren, Y., Jia, Y., Ma, H., Luo, J., Fang, S., Qi, M., & Zhang, L. (2024). What influences consumer AI chatbot use intention? An application of the extended technology acceptance model. Journal of Hospitality and Tourism Technology, 15(4), 667–689.

- Liu, S. Q., Vakeel, K. A., Smith, N. A., Alavipour, R. S., Wei, C., & Wirtz, J. (2024). AI concierge in the customer journey: What is it and how can it add value to the customer? Journal of Service Management, 35(6), 136–158.

- Lv, X., Liu, Y., Luo, J., Liu, Y., & Li, C. (2021). Does a cute artificial intelligence assistant soften the blow? The impact of cuteness on customer tolerance of assistant service failure. Annals of Tourism Research, 87, 103114.

- Lv, X., Luo, J., Liang, Y., Liu, Y., & Li, C. (2022). Is cuteness irresistible? The impact of cuteness on customers’ intentions to use AI applications. Tourism Management, 90, 104472.

- Maar, D., Besson, E., & Kefi, H. (2023). Fostering positive customer attitudes and usage intentions for scheduling services via chatbots. Journal of Service Management, 34(2), 208–230.

- Majeed, S., & Kim, W. G. (2024). Antecedents and consequences of conceptualizing online hyperconnected brand selection. Journal of Consumer Marketing, 41(3), 328–339.

- Matini, A., Lekata, S., & Kabaso, B. (2024). The effects of stress and chatbot services usage on customer intention for purchase on E-commerce sites. International Journal on Data Science and Technology, 10(1), 1–10.

- Meyer, N., Weiger, W., & Hammerschmidt, M. (2022). Trust me, I’m a bot—Repercussions of chatbot disclosure in different service frontline settings. Journal of Service Management, 33(2), 221–245.

- Milenia Ayukharisma, M. A., & Budi Santoso, D. (2024). Examining healthcare profesional’s acceptance of electronic medical records system using extended UTAUT2. Buana Information Technology and Computer Sciences (BIT and CS), 5(1), 19–28.

- Mostafa, R. B., & Kasamani, T. (2022). Antecedents and consequences of chatbot initial trust. European Journal of Marketing, 56(6), 1748–1771.

- Myin, M. T., & Watchravesringkan, K. (2024). Investigating consumers’ adoption of AI chatbots for apparel shopping. Journal of Consumer Marketing, 41(3), 314–327.

- Nguyen, M., Casper Ferm, L.-E., Quach, S., Pontes, N., & Thaichon, P. (2023). Chatbots in frontline services and customer experience: An anthropomorphism perspective. Psychology & Marketing, 40(11), 2201–2225.

- Oh, Y. J., Zhang, J., Fang, M.-L., & Fukuoka, Y. (2021). A systematic review of artificial intelligence chatbots for promoting physical activity, healthy diet, and weight loss. International Journal of Behavioral Nutrition and Physical Activity, 18(1), 160.

- Olk, S., Tscheulin, D. K., & Lindenmeier, J. (2021). Does it pay off to smile even it is not authentic? Customers’ involvement and the effectiveness of authentic emotional displays. Marketing Letters, 32(2), 247–260.

- Otterbring, T., Arsenovic, J., Samuelsson, P., Malodia, S., & Dhir, A. (2024). Going the extra mile, now or after a while: The impact of employee proactivity in retail service encounters on customers’ shopping responses. British Journal of Management, 35(3), 1425–1448.

- Paraskevi, G., Saprikis, V., & Avlogiaris, G. (2023). Modeling nonusers’ behavioral intention towards mobile chatbot adoption: An extension of the UTAUT2 model with mobile service quality determinants. Human Behavior and Emerging Technologies, 2023(1), 8859989.

- Park, G., Chung, J., & Lee, S. (2024). Human vs. machine-like representation in chatbot mental health counseling: The serial mediation of psychological distance and trust on compliance intention. Current Psychology, 43(5), 4352–4363.

- Pillai, R., Ghanghorkar, Y., Sivathanu, B., Algharabat, R., & Rana, N. P. (2024). Adoption of artificial intelligence (AI) based employee experience (EEX) chatbots. Information Technology & People, 37(1), 449–478.

- Pizzi, G., Scarpi, D., & Pantano, E. (2021). Artificial intelligence and the new forms of interaction: Who has the control when interacting with a chatbot? Journal of Business Research, 129, 878–890.

- Ramesh, A., & Chawla, V. (2022). Chatbots in marketing: A literature review using morphological and co-occurrence analyses. Journal of Interactive Marketing, 57(3), 472–496.

- Riordan, M. A. (2017). Emojis as tools for emotion work: Communicating affect in text messages. Journal of Language and Social Psychology, 36(5), 549–567.

- Romero-Rodríguez, J.-M., Ramírez-Montoya, M.-S., Buenestado-Fernández, M., & Lara-Lara, F. (2023). Use of ChatGPT at university as a tool for complex thinking: Students’ perceived usefulness. Journal of New Approaches in Educational Research, 12(2), 323–339.

- Roy, R., & Naidoo, V. (2021). Enhancing chatbot effectiveness: The role of anthropomorphic conversational styles and time orientation. Journal of Business Research, 126, 23–34.

- Sands, S., Ferraro, C., Campbell, C., & Tsao, H.-Y. (2021). Managing the human–chatbot divide: How service scripts influence service experience. Journal of Service Management, 32(2), 246–264.

- Schanke, S., Burtch, G., & Ray, G. (2021). Estimating the impact of “humanizing” customer service chatbots. Information Systems Research, 32(3), 736–751.

- Schelble, B. G., Flathmann, C., McNeese, N. J., O’Neill, T., Pak, R., & Namara, M. (2023). Investigating the effects of perceived teammate artificiality on human performance and cognition. International Journal of Human–Computer Interaction, 39(13), 2686–2701.

- Shin, H., Bunosso, I., & Levine, L. R. (2023). The influence of chatbot humour on consumer evaluations of services. International Journal of Consumer Studies, 47(2), 545–562.

- Song, M., Zhang, H., Xing, X., & Duan, Y. (2023). Appreciation vs. apology: Research on the influence mechanism of chatbot service recovery based on politeness theory. Journal of Retailing and Consumer Services, 73, 103323.

- Terblanche, N., & Kidd, M. (2022). Adoption factors and moderating effects of age and gender that influence the intention to use a non-directive reflective coaching chatbot. SAGE Open, 12(2), 215824402210961.

- Tian, W., Ge, J., Zhao, Y., & Zheng, X. (2024). AI Chatbots in Chinese higher education: Adoption, perception, and influence among graduate students—An integrated analysis utilizing UTAUT and ECM models. Frontiers in Psychology, 15, 1268549.

- Trautmann, S. T. (2019). Distance from a distance: The robustness of psychological distance effects. Theory and Decision, 87(1), 1–15.

- Trope, Y., & Liberman, N. (2000). Temporal construal and time-dependent changes in preference. Journal of Personality and Social Psychology, 79(6), 876–889.

- Trzebiński, W., Claessens, T., Buhmann, J., De Waele, A., Hendrickx, G., Van Damme, P., Daelemans, W., & Poels, K. (2023). The effects of expressing empathy/autonomy support using a COVID-19 vaccination chatbot: Experimental study in a sample of Belgian adults. JMIR Formative Research, 7, e41148.

- Tsai, W.-H. S., Liu, Y., & Chuan, C.-H. (2021). How chatbots’ social presence communication enhances consumer engagement: The mediating role of parasocial interaction and dialogue. Journal of Research in Interactive Marketing, 15(3), 460–482.

- Tsaur, S.-H., & Yen, C.-H. (2019). Service redundancy in fine dining: Evidence from Taiwan. International Journal of Contemporary Hospitality Management, 31(2), 830–854.

- Vu, H. N., & Nguyen, T. K. C. (2022). The impact of AI chatbot on customer willingness to pay: An empirical investigation in the hospitality industry. Journal of Trade Science, 10, 105–116.

- Wang, H., Gupta, S., Singhal, A., Muttreja, P., Singh, S., Sharma, P., & Piterova, A. (2022). An artificial intelligence chatbot for young people’s sexual and reproductive health in India (SnehAI): Instrumental case study. Journal of Medical Internet Research, 24(1), e29969.

- Wu, R., Chen, J., Lu Wang, C., & Zhou, L. (2022). The influence of emoji meaning multipleness on perceived online review helpfulness: The mediating role of processing fluency. Journal of Business Research, 141, 299–307.

- Xiao, Z., Zhou, M. X., Liao, Q. V., Mark, G., Chi, C., Chen, W., & Yang, H. (2020). Tell me about yourself: Using an AI-powered chatbot to conduct conversational surveys with open-ended questions. ACM Transactions on Computer-Human Interaction, 27(3), 1–37.

- Xie, T., Pentina, I., & Hancock, T. (2023). Friend, mentor, lover: Does chatbot engagement lead to psychological dependence? Journal of Service Management, 34(4), 806–828.

- Xu, F., Niu, W., Li, S., & Bai, Y. (2020). The mechanism of word-of-mouth for tourist destinations in crisis. SAGE Open, 10(2), 215824402091949.

- Xu, Y., Zhang, J., Chi, R., & Deng, G. (2022a). Enhancing customer satisfaction with chatbots: The influence of anthropomorphic communication styles and anthropomorphised roles. Nankai Business Review International, 14(2), 249–271.

- Xu, Y., Zhang, J., & Deng, G. (2022b). Enhancing customer satisfaction with chatbots: The influence of communication styles and consumer attachment anxiety. Frontiers in Psychology, 13, 902782.

- Yang, K., & Qian, S. (2024). Your smiling face is impolite to me: A study of the smiling face emoji in Chinese computer-mediated communication. Social Science Computer Review, 42(4), 947–960.

- Yanxia, C., Shijia, Z., & Yuyang, X. (2023). A meta-analysis of the effect of chatbot anthropomorphism on the customer journey. Marketing Intelligence & Planning, 42(1), 1–22.

- Yoon, J., & Yu, H. (2022). Impact of customer experience on attitude and utilization intention of a restaurant-menu curation chatbot service. Journal of Hospitality and Tourism Technology, 13(3), 527–541.

- Youn, K., & Cho, M. (2023). Business types matter: New insights into the effects of anthropomorphic cues in AI chatbots. Journal of Services Marketing, 37(8), 1032–1045.

- Yu, S., & Zhao, L. (2024). Emojifying chatbot interactions: An exploration of emoji utilization in human-chatbot communications. Telematics and Informatics, 86, 102071.

- Zhou, A., Tsai, W.-H. S., & Men, L. R. (2024). Optimizing AI social chatbots for relational outcomes: The effects of profile design, communication strategies, and message framing. International Journal of Business Communication, 23294884241229223.

- Zhu, Y., Zhang, J., & Wu, J. (2023a). Who did what and when? The effect of chatbots’ service recovery on customer satisfaction and revisit intention. Journal of Hospitality and Tourism Technology, 14(3), 416–429.

- Zhu, Y., Zhang, R., Zou, Y., & Jin, D. (2023b). Investigating customers’ responses to artificial intelligence chatbots in online travel agencies: The moderating role of product familiarity. Journal of Hospitality and Tourism Technology, 14(2), 208–224.

| Perspectives | Sources | Methods/Experimental Design | Findings |
|---|---|---|---|
| Interaction modes | (Keidar et al., 2024) | Two (interaction modes: proactive vs. reactive) × two (types of user populations: with vs. without technological backgrounds) | The proactive mode can increase the user’s situation awareness, improve the user’s performance, and reduce the users’ perceived workload in the reactive mode. |
| Social manner and expressive behavior | (Chien et al., 2024) | Two (social manner: proactive vs. reactive) × two (expressive behavior: intimate vs. impassive) | When a social humanoid robot behaves in a more proactive manner and is perceived as more intimate, users are more willing to trust the robot, thereby increasing their intention to use. |
| Politeness strategy | (Song et al., 2023) | Two (politeness strategies: appreciation vs. apology) × two (time pressure: high vs. low) | Following a service failure, the appreciation politeness strategy enhances consumers’ post-recovery satisfaction more effectively than the apology strategy. This effect is mediated by face concern and moderated by time pressure. |
| Interaction style | (De Cicco et al., 2020) | Two (visual cues: avatar presence vs. avatar absence) × two (interaction styles: social-oriented vs. task-oriented) | Applying a social-oriented interaction style increases users’ perception of social presence, while an insignificant effect was found for avatar presence. |
| Conversation initiation | (Pizzi et al., 2021) | Two (assistant type: anthropomorphic vs. non-anthropomorphic) × two (assistant initiation: user- vs. system-initiated) | An automatically activated non-anthropomorphic digital assistant yields higher satisfaction and user experience compared to a human-like, consumer-activated one. |
| Conversational style | (Roy & Naidoo, 2021) | Two (time orientation: present vs. future) × two (anthropomorphic conversation style: warm vs. competent) | For a present orientation, a warm chatbot conversation boosts attitude and purchase intention through brand warmth. For a future orientation, a competent chatbot conversation enhances these outcomes via brand competence. |
| Communication strategy | (Tsai et al., 2021) | Two (social presence communication: high vs. low) × two (anthropomorphic vs. non-anthropomorphic bot profile) | The impact of chatbots’ high social presence communication on consumer engagement is mediated by perceived parasocial interaction and dialog. Moreover, anthropomorphic profile design enhances these effects through psychological mediators. |
| Communication style | (Shin et al., 2023) | (i) Two (humor: no humor vs. humor) × two (agent type: chatbot vs. human) (ii) humor style (affiliative vs. aggressive) | Humor used by chatbots boosts service satisfaction, with this effect mediated by increased anthropomorphism and interestingness of the interactions. Socially appropriate (i.e., affiliative) humor, compared to inappropriate (i.e., aggressive) humor, leads to greater service satisfaction. |
| Communication style | (Maar et al., 2023) | Two (communication style: high vs. low social orientation) × two (customer generation: generation X [GenX] vs. generation Z [GenZ]) × two (service context: restaurant vs. medical) | GenZ views chatbots more positively than GenX, due to greater perceptions of warmth and competence. While GenZ’s attitudes toward chatbots are similar regardless of social orientation, GenX prefers chatbots with high social orientation, seeing them as warmer and more favorable. |
| Language style | (Y. Huang & Gursoy, 2024) | Two (language style: abstract language vs. concrete language) × two (decision-making journey stage: informational stage vs. transactional stage) | During the informational stage, an abstract language style in chatbots strongly influences service satisfaction through emotional support. In the transactional stage, a concrete language style in chatbots strongly impacts service satisfaction through informational support. |
| Language style | (M. Li & Wang, 2023) | Two (chatbot language style: formal vs. informal) × two (brand affiliation: customer vs. non-customer) | Informal chatbot language increases customers’ continued usage intention and brand attitude through parasocial interaction. Brand affiliation moderates this effect, weakening it for those with no prior brand relationship. |
| Language concreteness | (Jiménez-Barreto et al., 2023) | Two (language concreteness: high vs. low) × three (conversation stage: opening vs. query/response vs. closing) | High chatbot language concreteness enhances perceived chatbot and consumer competence, satisfaction, and perceived shopping efficiency. |
| Service scripts | (Sands et al., 2021) | Two (service interaction: human vs. chatbot) × two (service script: education vs. entertainment) | When using an education script, human service agents significantly outperform chatbots in satisfaction and purchase intention, with emotion and rapport fully mediating these effects. |

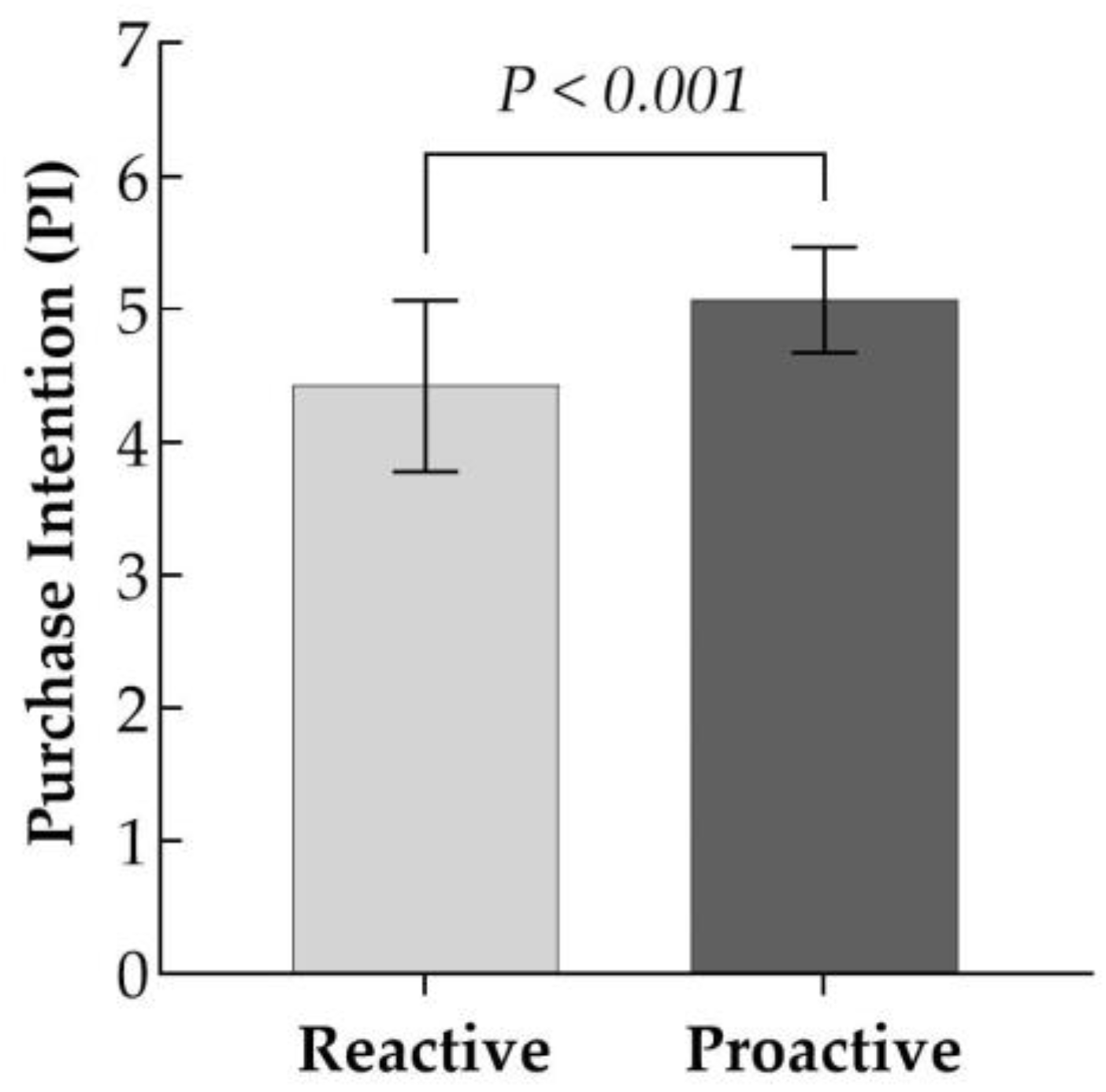

| Variables | Experimental group: Proactive | Experimental group: Reactive | F | p |
|---|---|---|---|---|
| Psychological distance | 4.853 | 4.054 | 94.730 | <0.001 |
| Performance expectancy | 4.787 | 3.986 | 78.902 | <0.001 |
| Purchase intention | 5.186 | 4.495 | 83.471 | <0.001 |

| Effect type | Mediating variable | Effect | SE | t | p | 95% CI |
|---|---|---|---|---|---|---|
| Direct effect | — | 0.237 | 0.082 | 2.900 | 0.004 | [0.075, 0.398] |
| Mediating effect | Psychological distance | 0.221 | 0.086 | — | — | [0.048, 0.388] |
| Mediating effect | Performance expectancy | 0.234 | 0.078 | — | — | [0.086, 0.398] |
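The mediation estimates above can be combined into a total effect. The following is a minimal sketch, assuming a parallel two-mediator model in which the total effect is the direct effect plus the sum of the indirect effects; that additive decomposition and the variable names are assumptions made for illustration, not details confirmed by the table.

```python
# Point estimates copied from the mediation table above.
direct_effect = 0.237
indirect_effects = {
    "psychological_distance": 0.221,
    "performance_expectancy": 0.234,
}
# Bootstrap 95% confidence intervals as reported in the table.
confidence_intervals = {
    "psychological_distance": (0.048, 0.388),
    "performance_expectancy": (0.086, 0.398),
}

# Assumption: parallel mediation, so total = direct + sum of indirect effects.
total_indirect = sum(indirect_effects.values())
total_effect = direct_effect + total_indirect

# Share of the total effect carried by each path.
shares = {name: value / total_effect for name, value in indirect_effects.items()}
shares["direct"] = direct_effect / total_effect

# An indirect effect is conventionally read as significant
# when its confidence interval excludes zero.
excludes_zero = {
    name: not (low <= 0.0 <= high)
    for name, (low, high) in confidence_intervals.items()
}

print(f"total indirect effect = {total_indirect:.3f}")
print(f"total effect          = {total_effect:.3f}")
for name, share in shares.items():
    print(f"share via {name}: {share:.1%}")
print(excludes_zero)
```

Under this assumption, roughly two thirds of the total effect runs through the two mediators, and both reported intervals exclude zero.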

| Variables | With emojis: Proactive | With emojis: Reactive | With emojis: F | With emojis: p | Without emojis: Proactive | Without emojis: Reactive | Without emojis: F | Without emojis: p |
|---|---|---|---|---|---|---|---|---|
| Psychological distance | 5.639 | 3.961 | 440.068 | <0.001 | 4.930 | 3.667 | 184.091 | <0.001 |
| Performance expectancy | 4.877 | 3.481 | 272.934 | <0.001 | 4.829 | 3.661 | 143.639 | <0.001 |
| Purchase intention | 5.881 | 4.251 | 429.216 | <0.001 | 4.952 | 3.811 | 161.106 | <0.001 |
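To see how emojis change the gap between the two response strategies, the group values in the table above can be contrasted directly. This is a descriptive sketch only: it assumes the entries are cell means, ignores group sizes (not reported in the table), and the dictionary keys are illustrative names rather than the authors' variable names.

```python
# Values copied from the table above as (proactive, reactive) pairs.
cell_means = {
    "psychological_distance": {"emoji": (5.639, 3.961), "no_emoji": (4.930, 3.667)},
    "performance_expectancy": {"emoji": (4.877, 3.481), "no_emoji": (4.829, 3.661)},
    "purchase_intention": {"emoji": (5.881, 4.251), "no_emoji": (4.952, 3.811)},
}


def strategy_gap(pair):
    """Return the proactive-minus-reactive difference for one condition."""
    proactive, reactive = pair
    return proactive - reactive


contrasts = {}
for variable, cells in cell_means.items():
    gap_emoji = strategy_gap(cells["emoji"])
    gap_plain = strategy_gap(cells["no_emoji"])
    contrasts[variable] = {
        "gap_with_emojis": round(gap_emoji, 3),
        "gap_without_emojis": round(gap_plain, 3),
        # Difference between the two gaps: a descriptive stand-in
        # for the response strategy x emoji interaction.
        "difference_in_gaps": round(gap_emoji - gap_plain, 3),
    }

for variable, values in contrasts.items():
    print(variable, values)
```

For every variable the proactive advantage is larger when emojis are present; whether that difference is statistically reliable is a question for the interaction tests, not for this arithmetic.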

| Source | Type III Sum of Squares | df | Mean Square | F | Sig. |
|---|---|---|---|---|---|
| Corrected Model | 183.534 | 3 | 61.178 | 214.74 | <0.001 |
| Response Strategies (RS) | 163.236 | 1 | 163.236 | 572.972 | <0.001 |
| Emojis | 19.012 | 1 | 19.012 | 66.733 | <0.001 |
| RS × Emojis | 3.251 | 1 | 3.251 | 11.410 | 0.001 |
| Error | 84.898 | 298 | 0.285 | | |
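Each F value in the ANOVA table above is the ratio of an effect's mean square to the error mean square. The sketch below recomputes those ratios from the reported sums of squares and degrees of freedom as a consistency check; small discrepancies are expected because the table entries are rounded.

```python
# Rows copied from the ANOVA table above: (sum of squares, df, reported F).
effects = {
    "Corrected Model": (183.534, 3, 214.74),
    "Response Strategies (RS)": (163.236, 1, 572.972),
    "Emojis": (19.012, 1, 66.733),
    "RS x Emojis": (3.251, 1, 11.410),
}
ss_error, df_error = 84.898, 298

# Error mean square: the denominator of every F ratio.
ms_error = ss_error / df_error

recomputed = {}
for source, (ss, df, reported_f) in effects.items():
    mean_square = ss / df
    f_ratio = mean_square / ms_error
    recomputed[source] = f_ratio
    print(f"{source}: MS = {mean_square:.3f}, F = {f_ratio:.3f} (reported {reported_f})")
```

The recomputed ratios agree with the reported F values to within rounding error.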

| Source | Type III Sum of Squares | df | Mean Square | F | Sig. |
|---|---|---|---|---|---|
| Corrected Model | 125.415 | 3 | 41.805 | 132.918 | <0.001 |
| Response Strategies (RS) | 124.032 | 1 | 124.032 | 394.359 | <0.001 |
| Emojis | 0.333 | 1 | 0.333 | 1.060 | 0.304 |
| RS × Emojis | 0.985 | 1 | 0.985 | 3.130 | 0.078 |
| Error | 93.725 | 298 | 0.315 | | |
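The table above reports significance but no effect sizes. As a hedged illustration, partial eta squared can be derived from the sums of squares using the standard formula SS_effect / (SS_effect + SS_error). These effect sizes are not reported in the source; they are computed here only to show the relative magnitude of each term.

```python
# Sums of squares copied from the ANOVA table above.
effect_sums_of_squares = {
    "Response Strategies (RS)": 124.032,
    "Emojis": 0.333,
    "RS x Emojis": 0.985,
}
ss_error = 93.725


def partial_eta_squared(ss_effect, ss_err):
    """Proportion of effect-plus-error variance attributable to the effect."""
    return ss_effect / (ss_effect + ss_err)


effect_sizes = {
    source: partial_eta_squared(ss, ss_error)
    for source, ss in effect_sums_of_squares.items()
}

for source, eta in effect_sizes.items():
    print(f"{source}: partial eta squared = {eta:.4f}")
```

By this measure the response strategy term dominates, while the emoji main effect and the interaction are both close to zero, which matches their non-significant p values in the table.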

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Meng, H.; Lu, X.; Xu, J. The Impact of Chatbot Response Strategies and Emojis Usage on Customers’ Purchase Intention: The Mediating Roles of Psychological Distance and Performance Expectancy. Behav. Sci. 2025, 15, 117. https://doi.org/10.3390/bs15020117
