Exploring the Role of Foveal and Extrafoveal Processing in Emotion Recognition: A Gaze-Contingent Study

Abstract

1. Introduction

1.1. Literature Review

1.2. The Present Study

2. Materials and Methods

2.1. Design

2.2. Participants

2.3. Stimuli and Apparatus

2.4. Procedure

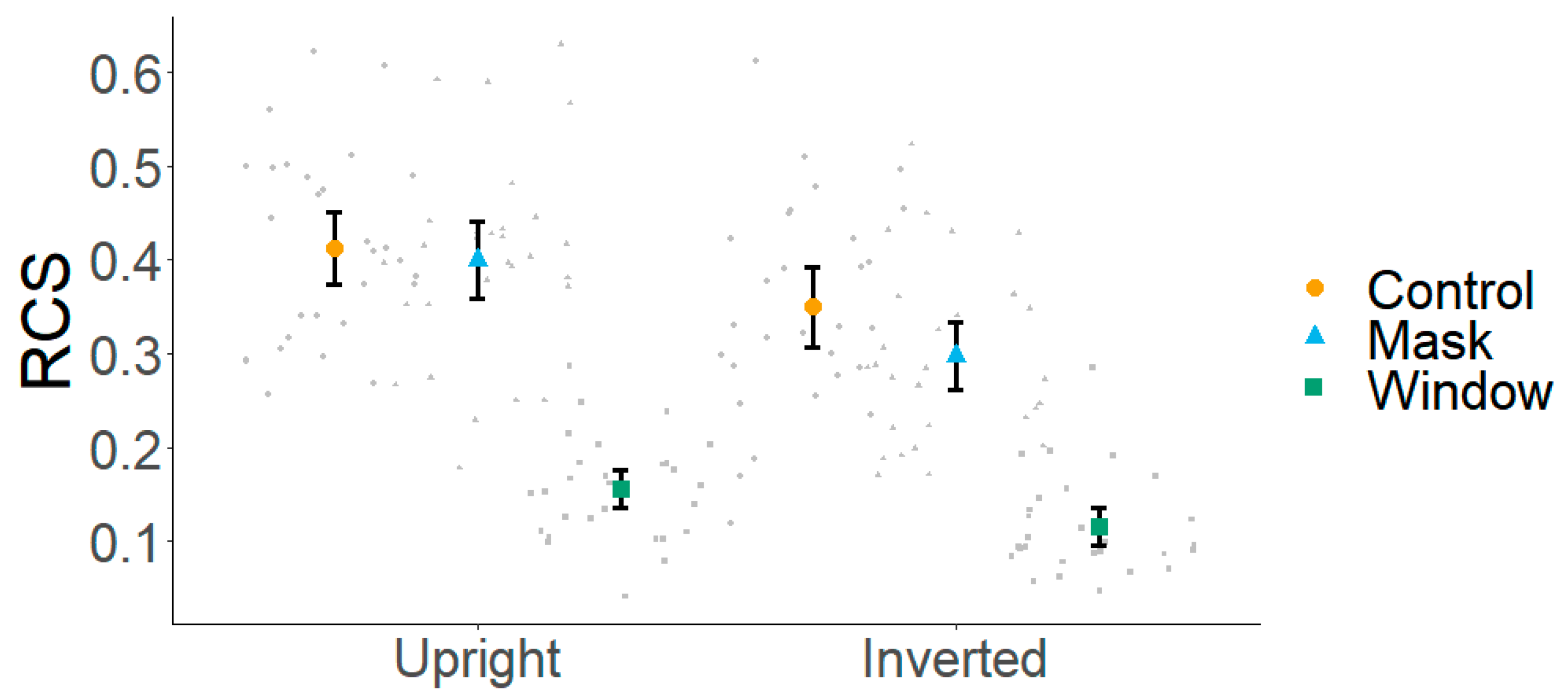

3. Results

4. Discussion

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest


| Emotion | Orientation | Viewing Condition | Orientation × Viewing Condition |
|---|---|---|---|
| Fear | F = 20.28, p < 0.001, η²p = 0.42 | F = 69.47, p < 0.001, η²p = 0.71 | F = 4.44, p < 0.05, η²p = 0.13 |
| Sadness | F = 29.79, p < 0.001, η²p = 0.516 | F = 141.29, p < 0.001, η²p = 0.835 | F = 10.78, p < 0.001, η²p = 0.27 |
| Happiness | F = 5.03, p < 0.05, η²p = 0.15 | F = 122.80, p < 0.001, η²p = 0.81 | F = 0.78, p = 0.46 |
| Surprise | F = 0.01, p = 0.97 | F = 85.64, p < 0.001, η²p = 0.75 | F = 2.82, p = 0.06 |
| Anger | F = 46.66, p < 0.001, η²p = 0.62 | F = 52.86, p < 0.001, η²p = 0.65 | F = 2.84, p = 0.09 |
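The table reports partial eta squared (η²p) next to each F ratio. As a minimal sketch, assuming the standard conversion η²p = (F × df_effect) / (F × df_effect + df_error), the Python snippet below recomputes η²p from an F value. The degrees of freedom are not stated in this section, so the df values used here, and the helper name `partial_eta_squared`, are hypothetical placeholders chosen only for illustration; substitute the df that accompany each reported test.

```python
def partial_eta_squared(f_value: float, df_effect: float, df_error: float) -> float:
    """Return partial eta squared from an F ratio and its degrees of freedom.

    Uses eta_p^2 = (F * df_effect) / (F * df_effect + df_error), which is
    algebraically equal to SS_effect / (SS_effect + SS_error).
    """
    if f_value < 0 or df_effect <= 0 or df_error <= 0:
        raise ValueError("F must be non-negative and both df must be positive")
    numerator = f_value * df_effect
    return numerator / (numerator + df_error)


if __name__ == "__main__":
    # HYPOTHETICAL degrees of freedom: they are not reported in this section
    # and are assumed here purely to demonstrate the arithmetic.
    examples = {
        "Fear, orientation": (20.28, 1, 28),
        "Fear, viewing condition": (69.47, 2, 56),
        "Happiness, orientation": (5.03, 1, 28),
    }
    for label, (f, df1, df2) in examples.items():
        # Print the recomputed effect size rounded to two decimals.
        print(f"{label}: partial eta^2 = {partial_eta_squared(f, df1, df2):.2f}")
```

With these assumed df the script prints 0.42, 0.71 and 0.15, which happen to agree with the corresponding table entries; the check is only meaningful once the actual df for each test are known, since different df give different η²p for the same F.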

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the author. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Estudillo, A.J. Exploring the Role of Foveal and Extrafoveal Processing in Emotion Recognition: A Gaze-Contingent Study. Behav. Sci. 2025, 15, 135. https://doi.org/10.3390/bs15020135
