Factors Influencing AI Chatbot Adoption in Government Administration: A Case Study of Sri Lanka’s Digital Government

Abstract

1. Introduction

Current Availability of E-Government Services in Sri Lanka

2. Theoretical Framework and Hypotheses Development

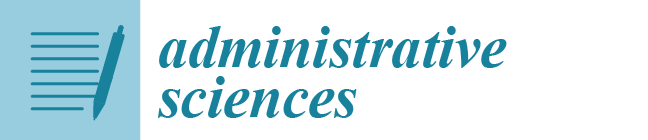

2.1. Technology Acceptance Model (TAM)

2.2. Hypotheses and Model Development

2.2.1. TAM-Fundamental Constructs

Behavioral Intention (BI)

Attitude (AT)

Perceived Usefulness (PU)

Perceived Ease of Use (PE)

2.2.2. External Constructs

Trust (TR)

Application Design and Appearance (AD)

Social Influence (SI)

3. Research Methods

3.1. Questionnaire Development and Pilot Study

3.2. Data Collection

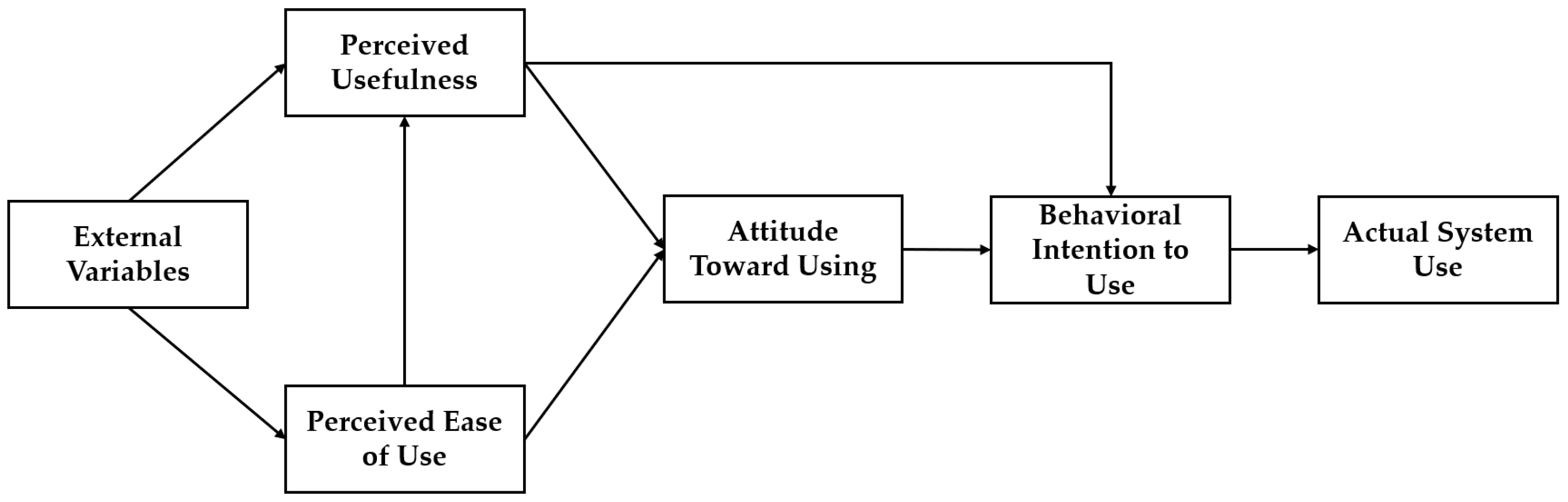

3.3. Data Analysis

4. Results

4.1. Measurement Model Assessment—Reliability, Validity, and Cross Loadings

4.2. Model Fit Measures

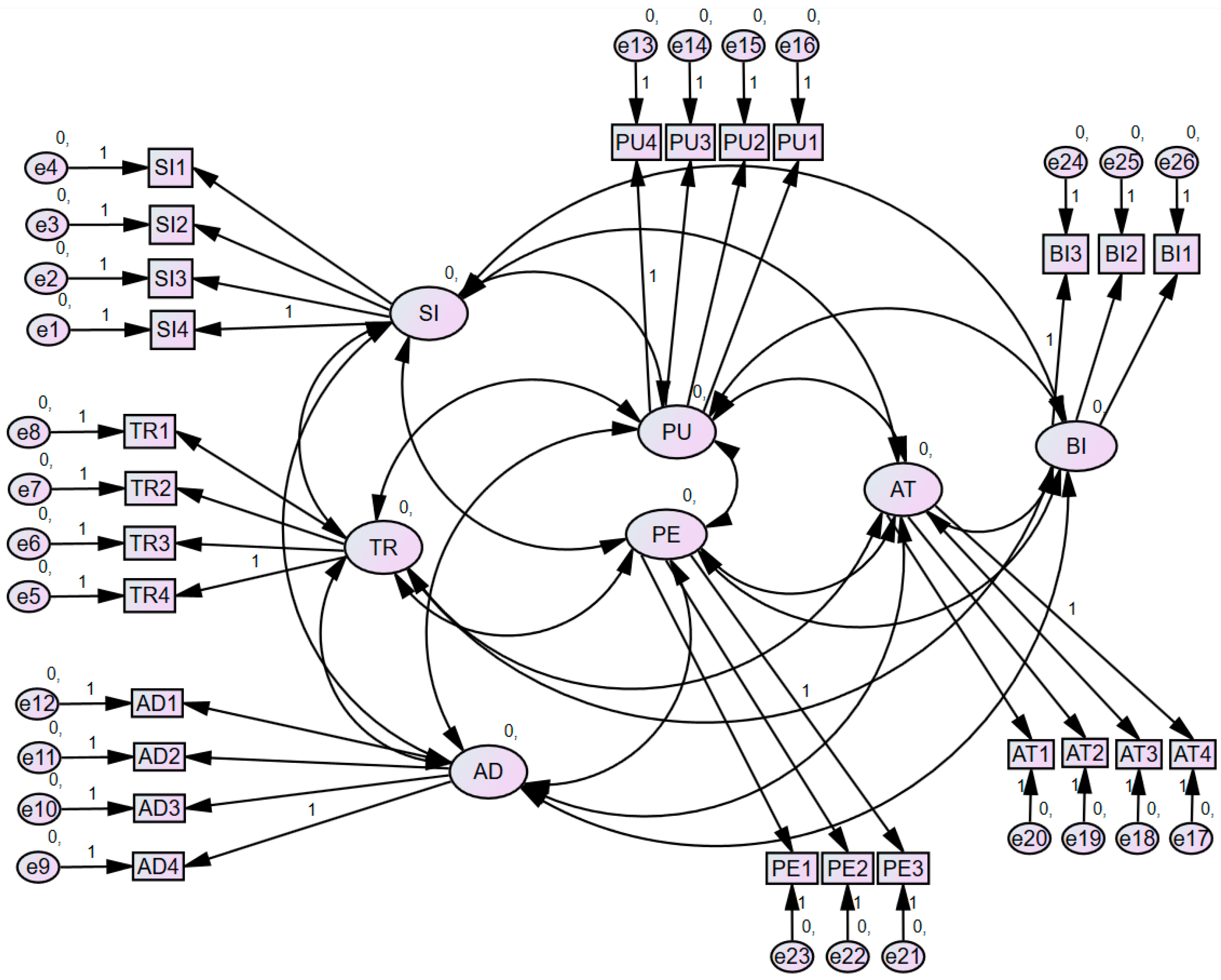

4.3. Structural Model Assessment

4.4. Direct Effect, Indirect Effect, and Total Effect

5. Discussion

6. Conclusions

6.1. Research Implications

6.2. Limitations and Future Research

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest

References

- Adams, D. A., Nelson, R. R., & Todd, P. A. (1992). Perceived usefulness, ease of use, and usage of information technology: A replication. MIS Quarterly, 16, 227–247.

- Ajzen, I., & Fishbein, M. (1970). The prediction of behavior from attitudinal and normative variables. Journal of Experimental Social Psychology, 6, 466–487.

- Ajzen, I., & Fishbein, M. (1977). Attitude-behavior relations: A theoretical analysis and review of empirical research. Psychological Bulletin, 84, 888–918.

- Ajzen, I., & Fishbein, M. (2000). Attitudes and the attitude-behavior relation: Reasoned and automatic processes. European Review of Social Psychology, 11, 1–33.

- Ajzen, I., & Fishbein, M. (2005). The influence of attitudes on behavior. In The handbook of attitudes (pp. 173–221). Lawrence Erlbaum Associates Publishers.

- Alagarsamy, S., & Mehrolia, S. (2023). Exploring chatbot trust: Antecedents and behavioural outcomes. Heliyon, 9(5), e16074.

- Alalwan, A., Baabdullah, A., Rana, N., Tamilmani, K., & Dwivedi, Y. (2018). Examining adoption of mobile internet in Saudi Arabia: Extending TAM with perceived enjoyment, innovativeness and trust. Technology in Society, 55, 100–110.

- Alam, A., & Saputro, I. A. (2022). A qualitative analysis of user interface design on a Sharia Fintech application based on technology acceptance model (TAM). Jurnal TAM (Technology Acceptance Model), 13(1), 9.

- Alenazy, W., Al-Rahmi, W., & Khan, M. S. (2019). Validation of TAM model on social media use for collaborative learning to enhance collaborative authoring. IEEE Access, 7, 71550–71562.

- Alzahrani, L., Al-Karaghouli, W., & Weerakkody, V. (2017). Analysing the critical factors influencing trust in e-government adoption from citizens’ perspective: A systematic review and a conceptual framework. International Business Review, 26(1), 164–175.

- Bagozzi, R. (1981). Attitudes, intentions, and behavior: A test of some key hypotheses. Journal of Personality and Social Psychology, 41, 607–627.

- Baharum, A., Amirul, S. M., Yusop, N. M. M., Halamy, S., Fabeil, N. F., & Ramli, R. Z. (2017, November 28–30). Development of questionnaire to measure user acceptance towards user interface design. Advances in Visual Informatics: 5th International Visual Informatics Conference, IVIC 2017, Bangi, Malaysia. Proceedings 5.

- Banjarnahor, L. (2017). Factors influencing purchase intention towards consumer-to-consumer e-commerce. Intangible Capital, 13, 948.

- Bonn, M. A., Kim, W. G., Kang, S., & Cho, M. (2016). Purchasing wine online: The effects of social influence, perceived usefulness, perceived ease of use, and wine involvement. Journal of Hospitality Marketing & Management, 25(7), 841–869.

- Burke, C. S., Sims, D. E., Lazzara, E. H., & Salas, E. (2007). Trust in leadership: A multi-level review and integration. The Leadership Quarterly, 18(6), 606–632.

- Calisir, F., & Calisir, F. (2004). The relation of interface usability characteristics, perceived usefulness, and perceived ease of use to end-user satisfaction with enterprise resource planning (ERP) systems. Computers in Human Behavior, 20, 505–515.

- Celik, A., Huseyinli, T., & Can, M. (2022). What are the drivers of using chatbots in online shopping? A cross-country analysis. Journal of Business Research - Turk, 14, 2201–2222.

- Chaouali, W., Ben Yahia, I., & Souiden, N. (2016). The interplay of counter-conformity motivation, social influence, and trust in customers’ intention to adopt Internet banking services: The case of an emerging country. Journal of Retailing and Consumer Services, 28, 209–218.

- Chen, T., Gascó-Hernandez, M., & Esteve, M. (2023). The adoption and implementation of artificial intelligence chatbots in public organizations: Evidence from U.S. state governments. The American Review of Public Administration, 54(3), 255–270.

- Cheng, M., Li, X., & Xu, J. (2022). Promoting healthcare workers’ adoption intention of artificial-intelligence-assisted diagnosis and treatment: The chain mediation of social influence and human–computer trust. International Journal of Environmental Research and Public Health, 19(20), 13311.

- Chiancone, C. (2023). Understanding the role of AI in government decision making. Available online: https://www.linkedin.com/pulse/understanding-role-ai-government-decision-making-chris-chiancone (accessed on 15 January 2025).

- Cohen, L., Manion, L., & Morrison, K. (2017). Research methods in education (8th ed.). Routledge.

- Cuel, R., & Ferrario, R. (2009). The impact of technology in organizational communication. In Nursing and clinical informatics: Socio-technical approaches. IGI Global.

- Davis, F. (1985). A technology acceptance model for empirically testing new end-user information systems [Doctoral dissertation, Massachusetts Institute of Technology].

- Davis, F., Bagozzi, R., & Warshaw, P. (1989). User acceptance of computer technology: A comparison of two theoretical models. Management Science, 35, 982–1003.

- Davis, F. D. (1989). Perceived usefulness, perceived ease of use, and user acceptance of information technology. MIS Quarterly, 13(3), 319–340.

- Davis, F. D., & Venkatesh, V. (1996). A critical assessment of potential measurement biases in the technology acceptance model: Three experiments. International Journal of Human-Computer Studies, 45(1), 19–45.

- DeLone, W., & McLean, E. (1992). Information systems success: The quest for the dependent variable. Information Systems Research, 3, 60–95.

- DeLone, W., & McLean, E. (2003). The DeLone and McLean model of information systems success: A ten-year update. Journal of Management Information Systems, 19, 9–30.

- Dhagarra, D., Goswami, M., & Kumar, G. (2020). Impact of trust and privacy concerns on technology acceptance in healthcare: An Indian perspective. International Journal of Medical Informatics, 141, 104164.

- Edo, O. C., Ang, D., Etu, E.-E., Tenebe, I., Edo, S., & Diekola, O. A. (2023). Why do healthcare workers adopt digital health technologies: A cross-sectional study integrating the TAM and UTAUT model in a developing economy. International Journal of Information Management Data Insights, 3(2), 100186.

- Effendy, F., Hurriyati, R., & Hendrayati, H. (2021, August 8). Perceived usefulness, perceived ease of use, and social influence: Intention to use e-wallet. 5th Global Conference on Business, Management and Entrepreneurship (GCBME 2020), Bandung, Indonesia.

- Eiser, J., Miles, S., & Frewer, L. (2006). Trust, perceived risk, and attitudes toward food technologies. Journal of Applied Social Psychology, 32, 2423–2433.

- Faqih, K. M. (2020). The influence of perceived usefulness, social influence, internet self-efficacy and compatibility on users’ intentions to adopt e-learning: Investigating the moderating effects of culture. IJAEDU-International E-Journal of Advances in Education, 5(15), 300–320.

- Fishbein, M., & Ajzen, I. (1975). Belief, attitude, intention and behaviour: An introduction to theory and research. Addison-Wesley Publishing Company.

- Fornell, C., & Larcker, D. F. (1981). Evaluating structural equation models with unobservable variables and measurement error. Journal of Marketing Research, 18(1), 39–50.

- Fulk, J., Schmitz, J., & Ryu, D. (1995). Cognitive elements in the social construction of communication technology. Management Communication Quarterly, 8, 259–288.

- Fulk, J., & Yuan, Y. C. (2017). Social construction of communication technology. Academy of Management Journal, 36, 921–950.

- Gefen, D., Karahanna, E., & Straub, D. W. (2003). Trust and TAM in online shopping: An integrated model. MIS Quarterly, 27, 51–90.

- Gefen, D., & Straub, D. (2000). The relative importance of perceived ease of use in IS adoption: A study of e-commerce adoption. Journal of the Association for Information Systems, 1, 8.

- Golbeck, J., & Kuter, U. (2009). The ripple effect: Change in trust and its impact over a social network. In Computing with social trust. Human–computer interaction series (pp. 169–181). Springer.

- Gopinath, K., & Kasilingam, D. (2023). Antecedents of intention to use chatbots in service encounters: A meta-analytic review. International Journal of Consumer Studies, 47(6), 2367–2395.

- Gorsuch, R., & Ortberg, J. (1983). Moral obligation and attitudes: Their relation to behavioral intentions. Journal of Personality and Social Psychology, 44, 1025–1028.

- Gould, J. D., & Lewis, C. H. (1985). Designing for usability: Key principles and what designers think. Communications of the ACM, 28, 300–311.

- Granić, A., & Marangunić, N. (2019). Technology acceptance model in educational context: A systematic literature review. British Journal of Educational Technology, 50, 2572–2593.

- Hackbarth, G., Grover, V., & Yi, M. (2003). Computer playfulness and anxiety: Positive and negative mediators of the system experience effect on perceived ease of use. Information & Management, 40, 221–232.

- Hair, J., Hult, G. T. M., Ringle, C., & Sarstedt, M. (2016). A primer on partial least squares structural equation modeling (PLS-SEM) (2nd ed.). Sage Publications.

- Hair, J., Hult, G. T. M., Ringle, C., & Sarstedt, M. (2022). A primer on partial least squares structural equation modeling (PLS-SEM). Sage Publications.

- Hair, J. F., Hult, G. T. M., Ringle, C. M., Sarstedt, M., & Thiele, K. O. (2017). Mirror, mirror on the wall: A comparative evaluation of composite-based structural equation modeling methods. Journal of the Academy of Marketing Science, 45, 616–632.

- Hair, J. F., Risher, J. J., Sarstedt, M., & Ringle, C. M. (2019). When to use and how to report the results of PLS-SEM. European Business Review, 31(1), 2–24.

- Hasija, A., & Esper, T. (2022). In artificial intelligence (AI) we trust: A qualitative investigation of AI technology acceptance. Journal of Business Logistics, 43, 388–412.

- Haverila, M. J., McLaughlin, C., & Haverila, K. (2023). The impact of social influence on perceived usefulness and behavioral intentions in the usage of non-pharmaceutical interventions (NPIs). International Journal of Healthcare Management, 16(1), 145–156.

- Hillemann, D. (2023). Navigating the challenges of implementing artificial intelligence in the public sector: An in-depth analysis. Available online: https://dhillemann.medium.com/navigating-the-challenges-of-implementing-artificial-intelligence-in-the-public-sector-an-in-depth-cb714fe6616b (accessed on 15 January 2025).

- Hoehle, H., & Venkatesh, V. (2015). Mobile application usability: Conceptualization and instrument development. MIS Quarterly, 39(2), 435–472. Available online: https://www.jstor.org/stable/26628361 (accessed on 15 January 2025).

- Hong, S. J. (2025). What drives AI-based risk information-seeking intent? Insufficiency of risk information versus (Un)certainty of AI chatbots. Computers in Human Behavior, 162, 108460.

- Hsu, C.-L., & Lu, H.-P. (2004). Why do people play on-line games? An extended TAM with social influences and flow experience. Information & Management, 41, 853–868.

- Huang, M.-H., & Rust, R. (2018). Artificial intelligence in service. Journal of Service Research, 21(2), 155–172.

- Igbaria, M., Schiffman, S. J., & Wieckowski, T. J. (1994). The respective roles of perceived usefulness and perceived fun in the acceptance of microcomputer technology. Behaviour & Information Technology, 13, 349–361.

- Ikhsan, R. B., Fernando, Y., Prabowo, H., Yuniarty, Gui, A., & Kuncoro, E. A. (2025). An empirical study on the use of artificial intelligence in the banking sector of Indonesia by extending the TAM model and the moderating effect of perceived trust. Digital Business, 5(1), 100103.

- Ilyas, M., ud din, A., Haleem, M., & Ahmad, I. (2023). Digital entrepreneurial acceptance: An examination of technology acceptance model and do-it-yourself behavior. Journal of Innovation and Entrepreneurship, 12(1), 15.

- Jarvenpaa, S., Tractinsky, N., & Vitale, M. (2000). Consumer trust in an internet store. International Journal of Information Technology and Management (IJITM), 1, 45–71.

- Karahanna, E., & Straub, D. W. (1999). The psychological origins of perceived usefulness and ease-of-use. Information & Management, 35, 237–250.

- Kasilingam, D., & Krishna, R. (2022). Understanding the adoption and willingness to pay for internet of things services. International Journal of Consumer Studies, 46(1), 102–131.

- Kelly, S., Kaye, S.-A., & Oviedo-Trespalacios, O. (2023). What factors contribute to the acceptance of artificial intelligence? A systematic review. Telematics and Informatics, 77, 101925.

- Kesharwani, A., & Bisht, S. (2012). The impact of trust and perceived risk on Internet banking adoption in India. International Journal of Bank Marketing, 30, 303–322.

- Kunz, W. H., & Wirtz, J. (2023). AI in customer service: A service revolution in the making. In Artificial intelligence in customer service: The next frontier for personalized engagement (pp. 15–32). Springer.

- Kurniawan, I. A., Mugiono, M., & Wijayanti, R. (2022). The effect of perceived usefulness, perceived ease of use, and social influence toward intention to use mediated by trust. Jurnal Aplikasi Manajemen, 20(1), 117–127.

- Kurosu, M., & Kashimura, K. (1995, May 7–11). Apparent usability vs. inherent usability: Experimental analysis on the determinants of the apparent usability. Conference companion on Human factors in Computing Systems, Denver, CO, USA.

- Landis, D., Triandis, H., & Adamopoulos, J. (1978). Habit and behavioral intentions as predictors of social behavior. Journal of Social Psychology, 106, 227–237.

- Ltifi, M. (2023). Trust in the chatbot: A semi-human relationship. Future Business Journal, 9(1), 109.

- Lun, L., Zetian, D., Hoe, T. W., Juan, X., Jiaxin, D., & Fulai, W. (2024). Factors influencing user intentions on interactive websites: Insights from the technology acceptance model. IEEE Access, 12, 122735–122756.

- Malhotra, Y., & Galletta, D. F. (1999, January 5–8). Extending the technology acceptance model to account for social influence: Theoretical bases and empirical validation. Proceedings of the 32nd Annual Hawaii International Conference on Systems Sciences, Maui, HI, USA. HICSS-32. Abstracts and CD-ROM of Full Papers.

- Mansoor, M. (2021). Citizens’ trust in government as a function of good governance and government agency’s provision of quality information on social media during COVID-19. Government Information Quarterly, 38, 101597.

- Maria, V., & Sugiyanto, L. B. (2023). Perceived usefulness, perceived ease of use, perceived enjoyment on behavioral intention to use through trust. Indonesian Journal of Multidisciplinary Science, 3, 1–7.

- Matemba, E., & Li, G. (2017). Consumers’ willingness to adopt and use WeChat wallet: An empirical study in South Africa. Technology in Society, 53, 55–68.

- Mathieson, K. (1991). Predicting user intentions: Comparing the technology acceptance model with the theory of planned behavior. Information Systems Research, 2, 173–191.

- McCloskey, D. (2006). The importance of ease of use, usefulness, and trust to online consumers: An examination of the technology acceptance model with older customers. Journal of Organizational and End User Computing, 18, 47–65.

- Medaglia, R., & Tangi, L. (2022, October 4–7). The adoption of Artificial Intelligence in the public sector in Europe: Drivers, features, and impacts. ACM International Conference Proceeding Series, ICEGOV’22: Proceedings of the 15th International Conference on Theory and Practice of Electronic Governance, Guimarães, Portugal.

- Mikalef, P., Lemmer, K., Schaefer, C., Ylinen, M., Fjørtoft, S. O., Torvatn, H. Y., Gupta, M., & Niehaves, B. (2023). Examining how AI capabilities can foster organizational performance in public organizations. Government Information Quarterly, 40(2), 101797.

- Mostafa, R. B., & Kasamani, T. (2022). Antecedents and consequences of chatbot initial trust. European Journal of Marketing, 56(6), 1748–1771.

- Nakisa, B., Ansarizadeh, F., Oommen, P., & Kumar, R. (2023). Using an extended technology acceptance model to investigate facial authentication. Telematics and Informatics Reports, 12, 100099.

- Natasia, S. R., Wiranti, Y. T., & Parastika, A. (2022). Acceptance analysis of NUADU as e-learning platform using the Technology Acceptance Model (TAM) approach. Procedia Computer Science, 197, 512–520.

- Oldeweme, A., Märtins, J., Westmattelmann, D., & Schewe, G. (2021). The role of transparency, trust, and social influence on uncertainty reduction in times of pandemics: Empirical study on the adoption of COVID-19 tracing apps. Journal of Medical Internet Research, 23(2), e25893.

- Omrani, N., Rivieccio, G., Fiore, U., Schiavone, F., & Agreda, S. G. (2022). To trust or not to trust? An assessment of trust in AI-based systems: Concerns, ethics and contexts. Technological Forecasting and Social Change, 181, 121763.

- Pesonen, J. A. (2021). ‘Are you ok?’ Students’ trust in a Chatbot providing support opportunities. In International conference on human-computer interaction. Springer.

- Prabowo, G., & Nugroho, A. (2019). Factors that influence the attitude and behavioral intention of Indonesian users toward online food delivery service by the Go-Food application. Atlantis Press.

- Prastiawan, D. I., Aisjah, S., & Rofiaty, R. (2021). The effect of perceived usefulness, perceived ease of use, and social influence on the use of mobile banking through the mediation of attitude toward use. APMBA (Asia Pacific Management and Business Application), 9(3), 243–260.

- Przegalinska, A., Ciechanowski, L., Stroz, A., Gloor, P., & Mazurek, G. (2019). In bot we trust: A new methodology of chatbot performance measures. Business Horizons, 62(6), 785–797.

- Rice, R. E., & Aydin, C. E. (1991). Attitudes toward new organizational technology: Network proximity as a mechanism for social information processing. Administrative Science Quarterly, 36, 219.

- Saade, R., & Bahli, B. (2005). The impact of cognitive absorption on perceived usefulness and perceived ease of use in online learning: An extension of the Technology Acceptance Model. Information & Management, 42, 317–327.

- Saif, N., Khan, S. U., Shaheen, I., Alotaibi, F. A., Alnfiai, M. M., & Arif, M. (2024). Chat-GPT; validating Technology Acceptance Model (TAM) in education sector via ubiquitous learning mechanism. Computers in Human Behavior, 154, 108097.

- Shareef, M. A., Kumar, V., Kumar, U., & Dwivedi, Y. K. (2011). e-Government Adoption Model (GAM): Differing service maturity levels. Government Information Quarterly, 28(1), 17–35.

- Sharma, S., & Agarwal, M. (2024). Impact of AI-based chatbots on faculty performance in higher education institutions. In Innovation in the university 4.0 system based on smart technologies (pp. 83–100). Chapman and Hall/CRC.

- Sheppard, B., Hartwick, J., & Warshaw, P. (1988). The theory of reasoned action: A meta-analysis of past research with recommendations for modifications and future research. Journal of Consumer Research, 15, 325–343.

- Slovic, P. (1987). Perception of risk. Science, 236, 280–285.

- Slovic, P., Fischhoff, B., & Lichtenstein, S. (1985). Characterizing perceived risk. In Perilous progress: Managing the hazards of technology. Westview.

- Venkatesh, V. (2000). Determinants of perceived ease of use: Integrating control, intrinsic motivation, and emotion into the technology acceptance model. Information Systems Research, 11, 342–365.

- Venkatesh, V., & Bala, H. (2008). Technology acceptance model 3 and a research agenda on interventions. Decision Sciences, 39, 273–315.

- Venkatesh, V., & Davis, F. (2000). A theoretical extension of the technology acceptance model: Four longitudinal field studies. Management Science, 46, 186–204.

- Venkatesh, V., & Davis, F. D. (1996). A model of the antecedents of perceived ease of use: Development and test. Decision Sciences, 27(3), 451–481.

- Wang, C., Ahmad, S. F., Bani Ahmad Ayassrah, A. Y. A., Awwad, E. M., Irshad, M., Ali, Y. A., Al-Razgan, M., Khan, Y., & Han, H. (2023). An empirical evaluation of technology acceptance model for artificial intelligence in e-commerce. Heliyon, 9(8), e18349.

- Warshaw, P. R. (1980). A new model for predicting behavioral intentions: An alternative to Fishbein. Journal of Marketing Research, 17, 153–172.

- Warshaw, P. R., & Davis, F. D. (1985). Disentangling behavioral intention and behavioral expectation. Journal of Experimental Social Psychology, 21, 213–228.

- Wirtz, B. W., Weyerer, J. C., & Geyer, C. (2019). Artificial intelligence and the public sector: Applications and challenges. International Journal of Public Administration, 42(7), 596–615.

- Yigitcanlar, T., David, A., Li, W., Fookes, C., Bibri, S. E., & Ye, X. (2024). Unlocking artificial intelligence adoption in local governments: Best practice lessons from real-world implementations. Smart Cities, 7, 1576–1625.

- Zhang, T., Tao, D., Qu, X., Zhang, X., Zeng, J., Zhu, H., & Zhu, H. (2020). Automated vehicle acceptance in China: Social influence and initial trust are key determinants. Transportation Research Part C: Emerging Technologies, 112, 220–233.

- Zhou, T., Lu, Y., & Wang, B. (2009). The relative importance of website design quality and service quality in determining consumers’ online repurchase behavior. Information Systems Management, 26(4), 327–337.

- Zuiderwijk, A., Chen, Y.-C., & Salem, F. (2021). Implications of the use of artificial intelligence in public governance: A systematic literature review and a research agenda. Government Information Quarterly, 38(3), 101577.

| External Construct | General Conceptualization | Source(s) |
|---|---|---|
| Trust | Trust involves the confidence that users place in a technology, believing that it can perform its functions reliably and will act in the users’ best interest. | (Burke et al., 2007; Dhagarra et al., 2020; Hasija & Esper, 2022; Hong, 2025; Jarvenpaa et al., 2000) |
| Application Design/Appearance | Application design and appearance refer to the visual and functional elements of technology that influence user perceptions, affecting usability, satisfaction, and acceptance. | (Hoehle & Venkatesh, 2015; Zhou et al., 2009) |
| Social Influence | Social influence pertains to the impact that peers, family, and larger social networks have on individuals’ attitudes and behaviors toward adopting new technologies. | (Chaouali et al., 2016; Cheng et al., 2022) |

| Category | Factor | Frequency | Percentage |
|---|---|---|---|
| Gender | Male | 96 | 46.40% |
| | Female | 111 | 53.60% |
| Age | Below 20 | 1 | 0.50% |
| | 20–29 | 21 | 10.10% |
| | 30–39 | 105 | 50.70% |
| | 40–49 | 57 | 27.50% |
| | 50 and above | 23 | 11.10% |
| Occupation | Private Sector | 50 | 24.20% |
| | Public Sector | 150 | 72.50% |
| | Unemployed | 7 | 3.40% |
| Education | High School | 39 | 18.84% |
| | First Degree | 106 | 51.21% |
| | Masters or Higher | 62 | 29.95% |
| IT usability/knowledge | None | 3 | 1.45% |
| | Very limited | 3 | 1.45% |
| | Some experience | 94 | 45.41% |
| | Quite a lot | 76 | 36.71% |
| | Extensive | 31 | 14.98% |
| Mobile application use experience | None | 4 | 1.93% |
| | Very limited | 4 | 1.93% |
| | Some experience | 82 | 39.61% |
| | Quite a lot | 78 | 37.68% |
| | Extensive | 39 | 18.84% |
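
Each percentage in the demographic table is a frequency divided by the sample size. The sketch below recomputes the shares; it assumes the total sample is N = 207, inferred from the Gender rows (96 + 111) rather than stated in this section, and checks that every category accounts for all respondents.

```python
# Frequencies copied from the demographic table.
SAMPLE = {
    "Gender": {"Male": 96, "Female": 111},
    "Age": {"Below 20": 1, "20-29": 21, "30-39": 105, "40-49": 57, "50 and above": 23},
    "Occupation": {"Private Sector": 50, "Public Sector": 150, "Unemployed": 7},
    "Education": {"High School": 39, "First Degree": 106, "Masters or Higher": 62},
    "IT usability/knowledge": {
        "None": 3, "Very limited": 3, "Some experience": 94, "Quite a lot": 76, "Extensive": 31,
    },
    "Mobile application use experience": {
        "None": 4, "Very limited": 4, "Some experience": 82, "Quite a lot": 78, "Extensive": 39,
    },
}

# Assumption: total sample size inferred from the Gender rows (96 + 111).
N = sum(SAMPLE["Gender"].values())


def percentages(groups, n):
    """Return each group's share of n as a percentage rounded to two decimals."""
    return {name: round(100 * count / n, 2) for name, count in groups.items()}


for category, groups in SAMPLE.items():
    # Each category should account for every respondent exactly once.
    assert sum(groups.values()) == N, category
    print(category, percentages(groups, N))
```

For instance, 78 / 207 gives 37.68% for respondents with "Quite a lot" of mobile application experience.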

| Factor | Code | Description | Loading | AVE | C.R. | C.A. |
|---|---|---|---|---|---|---|
| Trust | TR1 | Chatbot application is trustworthy. | 0.767 | 0.589 | 0.850 | 0.765 |
| | TR2 | Chatbot application providers give the impression that they keep promises and commitments on information provided. | 0.752 | | | |
| | TR3 | Chatbot application providers keep my best interests in mind. | 0.854 | | | |
| | TR4 | Chatbot can address my issues. | 0.686 | | | |
| Application Design/Appearance | AD1 | I will accept this chatbot application if its design is similar to other systems that I have used or know of. | 0.698 | 0.716 | 0.909 | 0.864 |
| | AD2 | I will accept this chatbot application if the chatbot service application is simple to navigate. | 0.898 | | | |
| | AD3 | I will accept this chatbot application if it clearly generates and shows my required response. | 0.908 | | | |
| | AD4 | I will accept this chatbot application if it operates effectively and free from technical issues. | 0.865 | | | |
| Social Influence | SI1 | I will use this chatbot application if the service is widely used by people in my community. | 0.658 | 0.704 | 0.903 | 0.858 |
| | SI2 | I think that I will adopt this chatbot application if my supervisors/seniors use it. | 0.862 | | | |
| | SI3 | I think that I will adopt this chatbot application if my friends use it. | 0.921 | | | |
| | SI4 | I will adopt this chatbot application if my family members/relatives use it. | 0.889 | | | |
| Perceived Ease of Use | PE1 | I think learning to operate the chatbot application would be easy for me. | 0.789 | 0.683 | 0.866 | 0.767 |
| | PE2 | I believe it would be easy to get the chatbot application to accomplish what I want to do. | 0.841 | | | |
| | PE3 | It is easy for me to become skillful at using this chatbot application. | 0.848 | | | |
| Perceived Usefulness | PU1 | Using this chatbot application would improve the quality of public service. | 0.861 | 0.771 | 0.931 | 0.900 |
| | PU2 | Using this chatbot application would increase my productivity. | 0.866 | | | |
| | PU3 | Using this chatbot application would save time on getting government information and services. | 0.905 | | | |
| | PU4 | I believe this chatbot application is useful for delivery of public services online to citizens. | 0.879 | | | |
| Attitude | AT1 | It is a good idea to use a chatbot application in the public sector. | 0.889 | 0.815 | 0.946 | 0.923 |
| | AT2 | It is wise to use a chatbot application in the public sector. | 0.907 | | | |
| | AT3 | I like to use a chatbot application in the public sector. | 0.930 | | | |
| | AT4 | It is pleasant to use a chatbot application in the public sector. | 0.883 | | | |
| Behavioral Intention | BI1 | If I have access to this chatbot application, I intend to use it. | 0.878 | 0.740 | 0.895 | 0.817 |
| | BI2 | If I have access to this chatbot application, I will use it. | 0.899 | | | |
| | BI3 | I plan to use this chatbot application within the next 6 months. | 0.800 | | | |
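
The AVE and C.R. columns can be recomputed from the item loadings. This is a minimal sketch assuming the standard formulas, which this section does not spell out: AVE as the mean of the squared standardized loadings, and C.R. as (Σλ)² / ((Σλ)² + Σ(1 − λ²)). Working from the rounded loadings, it reproduces every C.R. to three decimals and every AVE to within 0.001.

```python
import math

# Standardized item loadings copied from the measurement model table.
LOADINGS = {
    "TR": [0.767, 0.752, 0.854, 0.686],
    "AD": [0.698, 0.898, 0.908, 0.865],
    "SI": [0.658, 0.862, 0.921, 0.889],
    "PE": [0.789, 0.841, 0.848],
    "PU": [0.861, 0.866, 0.905, 0.879],
    "AT": [0.889, 0.907, 0.930, 0.883],
    "BI": [0.878, 0.899, 0.800],
}

# (AVE, C.R.) as printed in the table, for comparison.
REPORTED = {
    "TR": (0.589, 0.850), "AD": (0.716, 0.909), "SI": (0.704, 0.903), "PE": (0.683, 0.866),
    "PU": (0.771, 0.931), "AT": (0.815, 0.946), "BI": (0.740, 0.895),
}


def ave(loadings):
    """Average variance extracted: mean of the squared standardized loadings."""
    return sum(x * x for x in loadings) / len(loadings)


def composite_reliability(loadings):
    """C.R. = (sum of loadings)^2 / ((sum of loadings)^2 + sum of item error variances)."""
    total = sum(loadings)
    error = sum(1 - x * x for x in loadings)  # error variance of a standardized item is 1 - loading^2
    return total * total / (total * total + error)


for name, lam in LOADINGS.items():
    a, cr = ave(lam), composite_reliability(lam)
    print(f"{name}: AVE={a:.3f} (reported {REPORTED[name][0]}), "
          f"C.R.={cr:.3f} (reported {REPORTED[name][1]}), sqrt(AVE)={math.sqrt(a):.3f}")
```

The square root of each AVE is printed as well, because those values serve as the diagonal of the discriminant-validity matrix.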

| | TR | AD | SI | PE | PU | AT | BI |
|---|---|---|---|---|---|---|---|
| TR | 0.767 | | | | | | |
| AD | 0.223 | 0.846 | | | | | |
| SI | 0.356 | 0.341 | 0.839 | | | | |
| PE | 0.455 | 0.516 | 0.296 | 0.826 | | | |
| PU | 0.323 | 0.440 | 0.227 | 0.652 | 0.878 | | |
| AT | 0.215 | 0.221 | 0.166 | 0.395 | 0.600 | 0.903 | |
| BI | 0.258 | 0.553 | 0.217 | 0.544 | 0.658 | 0.401 | 0.860 |
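
The diagonal of this matrix equals the square root of each construct's AVE (for example, √0.589 ≈ 0.767), which matches the Fornell and Larcker (1981) layout: discriminant validity holds when each diagonal value exceeds every correlation in its row and column. The sketch below applies that check to the printed lower triangle; it treats the off-diagonal entries as inter-construct correlations, which is an assumption since the table has no caption here.

```python
CONSTRUCTS = ["TR", "AD", "SI", "PE", "PU", "AT", "BI"]

# Lower triangle of the table: diagonal = sqrt(AVE), off-diagonal = correlations (assumed).
LOWER = [
    [0.767],
    [0.223, 0.846],
    [0.356, 0.341, 0.839],
    [0.455, 0.516, 0.296, 0.826],
    [0.323, 0.440, 0.227, 0.652, 0.878],
    [0.215, 0.221, 0.166, 0.395, 0.600, 0.903],
    [0.258, 0.553, 0.217, 0.544, 0.658, 0.401, 0.860],
]


def fornell_larcker(lower):
    """Return {construct: (sqrt_ave, largest |correlation|, criterion met)}."""
    n = len(lower)
    result = {}
    for i in range(n):
        diag = lower[i][i]
        # Row i to the left of the diagonal, plus column i below the diagonal.
        others = [lower[i][j] for j in range(i)] + [lower[k][i] for k in range(i + 1, n)]
        largest = max(abs(r) for r in others)
        result[CONSTRUCTS[i]] = (diag, largest, diag > largest)
    return result


for name, (diag, largest, ok) in fornell_larcker(LOWER).items():
    print(f"{name}: sqrt(AVE)={diag:.3f}, max |r|={largest:.3f}, criterion met: {ok}")
```

The tightest comparison is PU (0.878 against its 0.658 correlation with BI), and every construct passes.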

| | TR | AD | SI | PE | PU | BI | AT |
|---|---|---|---|---|---|---|---|
| TR1 | 0.761 | −0.032 | 0.123 | 0.042 | 0.058 | 0.154 | 0.077 |
| TR2 | 0.821 | −0.011 | −0.075 | −0.086 | 0.220 | 0.110 | −0.048 |
| TR3 | 0.786 | 0.109 | 0.206 | 0.268 | −0.004 | 0.023 | 0.068 |
| TR4 | 0.519 | 0.197 | 0.243 | 0.374 | 0.056 | −0.147 | 0.237 |
| AD1 | 0.105 | 0.657 | 0.298 | 0.037 | 0.143 | 0.034 | −0.045 |
| AD2 | −0.009 | 0.865 | 0.141 | 0.121 | 0.075 | 0.081 | 0.140 |
| AD3 | 0.005 | 0.845 | 0.091 | 0.187 | 0.202 | 0.188 | 0.014 |
| AD4 | 0.041 | 0.794 | 0.055 | 0.189 | 0.084 | 0.244 | 0.087 |
| SI1 | 0.039 | 0.309 | 0.526 | 0.011 | 0.152 | 0.307 | 0.233 |
| SI2 | 0.122 | 0.093 | 0.862 | −0.022 | −0.018 | 0.023 | 0.055 |
| SI3 | 0.090 | 0.139 | 0.895 | 0.133 | 0.074 | 0.054 | 0.039 |
| SI4 | 0.079 | 0.125 | 0.879 | 0.122 | 0.046 | 0.003 | 0.009 |
| PE1 | 0.067 | 0.252 | 0.109 | 0.741 | 0.026 | 0.050 | 0.253 |
| PE2 | 0.148 | 0.083 | 0.045 | 0.689 | 0.329 | 0.237 | 0.092 |
| PE3 | 0.086 | 0.172 | 0.075 | 0.742 | 0.307 | 0.159 | 0.046 |
| PU1 | 0.112 | 0.218 | 0.025 | 0.133 | 0.776 | 0.120 | 0.248 |
| PU2 | 0.103 | 0.219 | 0.009 | 0.253 | 0.661 | 0.265 | 0.303 |
| PU3 | 0.119 | 0.119 | 0.083 | 0.192 | 0.817 | 0.177 | 0.237 |
| PU4 | 0.069 | 0.070 | 0.106 | 0.127 | 0.781 | 0.241 | 0.299 |
| BI1 | 0.061 | 0.313 | 0.074 | 0.221 | 0.155 | 0.736 | 0.216 |
| BI2 | 0.026 | 0.282 | 0.028 | 0.084 | 0.320 | 0.740 | 0.174 |
| BI3 | 0.170 | 0.052 | 0.087 | 0.101 | 0.205 | 0.785 | 0.032 |
| AT1 | 0.033 | 0.050 | 0.066 | 0.139 | 0.254 | 0.102 | 0.827 |
| AT2 | 0.089 | 0.032 | 0.101 | 0.112 | 0.204 | 0.015 | 0.862 |
| AT3 | −0.001 | 0.078 | 0.046 | 0.035 | 0.253 | 0.056 | 0.894 |
| AT4 | 0.081 | 0.052 | 0.008 | 0.121 | 0.127 | 0.216 | 0.844 |
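
A cross-loadings table supports discriminant validity when every item loads more strongly on its own construct than on any other. The sketch below applies that rule to the values above. It compares absolute loadings and assumes each item code begins with its construct code (TR1 belongs to TR); note that this table orders BI before AT.

```python
# Column order of the cross-loadings table (BI precedes AT here).
COLUMNS = ["TR", "AD", "SI", "PE", "PU", "BI", "AT"]

CROSS = {
    "TR1": [0.761, -0.032, 0.123, 0.042, 0.058, 0.154, 0.077],
    "TR2": [0.821, -0.011, -0.075, -0.086, 0.220, 0.110, -0.048],
    "TR3": [0.786, 0.109, 0.206, 0.268, -0.004, 0.023, 0.068],
    "TR4": [0.519, 0.197, 0.243, 0.374, 0.056, -0.147, 0.237],
    "AD1": [0.105, 0.657, 0.298, 0.037, 0.143, 0.034, -0.045],
    "AD2": [-0.009, 0.865, 0.141, 0.121, 0.075, 0.081, 0.140],
    "AD3": [0.005, 0.845, 0.091, 0.187, 0.202, 0.188, 0.014],
    "AD4": [0.041, 0.794, 0.055, 0.189, 0.084, 0.244, 0.087],
    "SI1": [0.039, 0.309, 0.526, 0.011, 0.152, 0.307, 0.233],
    "SI2": [0.122, 0.093, 0.862, -0.022, -0.018, 0.023, 0.055],
    "SI3": [0.090, 0.139, 0.895, 0.133, 0.074, 0.054, 0.039],
    "SI4": [0.079, 0.125, 0.879, 0.122, 0.046, 0.003, 0.009],
    "PE1": [0.067, 0.252, 0.109, 0.741, 0.026, 0.050, 0.253],
    "PE2": [0.148, 0.083, 0.045, 0.689, 0.329, 0.237, 0.092],
    "PE3": [0.086, 0.172, 0.075, 0.742, 0.307, 0.159, 0.046],
    "PU1": [0.112, 0.218, 0.025, 0.133, 0.776, 0.120, 0.248],
    "PU2": [0.103, 0.219, 0.009, 0.253, 0.661, 0.265, 0.303],
    "PU3": [0.119, 0.119, 0.083, 0.192, 0.817, 0.177, 0.237],
    "PU4": [0.069, 0.070, 0.106, 0.127, 0.781, 0.241, 0.299],
    "BI1": [0.061, 0.313, 0.074, 0.221, 0.155, 0.736, 0.216],
    "BI2": [0.026, 0.282, 0.028, 0.084, 0.320, 0.740, 0.174],
    "BI3": [0.170, 0.052, 0.087, 0.101, 0.205, 0.785, 0.032],
    "AT1": [0.033, 0.050, 0.066, 0.139, 0.254, 0.102, 0.827],
    "AT2": [0.089, 0.032, 0.101, 0.112, 0.204, 0.015, 0.862],
    "AT3": [-0.001, 0.078, 0.046, 0.035, 0.253, 0.056, 0.894],
    "AT4": [0.081, 0.052, 0.008, 0.121, 0.127, 0.216, 0.844],
}


def check_cross_loadings(cross, columns):
    """Map each item to (own loading, largest absolute other loading, margin)."""
    report = {}
    for item, row in cross.items():
        own_idx = columns.index(item[:2])  # item codes start with their construct code
        own = row[own_idx]
        largest_other = max(abs(v) for j, v in enumerate(row) if j != own_idx)
        report[item] = (own, largest_other, own - largest_other)
    return report


report = check_cross_loadings(CROSS, COLUMNS)
weakest = min(report, key=lambda k: report[k][2])
print("every item loads highest on its own construct:", all(m > 0 for _, _, m in report.values()))
print("smallest margin:", weakest, round(report[weakest][2], 3))
```

All 26 items pass. The narrowest margin is TR4 (0.519 on Trust against 0.374 on Perceived Ease of Use).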

| Measures of Fit | Indices | Values | Recommended Values |
|---|---|---|---|
| Discrepancy measurements | CMIN/DF | 1.860 | < 2 |
| | Root Mean Square Error of Approximation (RMSEA) | 0.065 | 0–0.1 |
| | Comparative Fit Index (CFI) | 0.923 | 0.9–1 |
| Incremental adjustment measures | Normed Fit Index (NFI) | 0.902 | 0.9–1 |
| | Tucker–Lewis Index (TLI) | 0.906 | 0.9–1 |
| Parsimony-adjusted and related measures | Incremental Fit Index (IFI) | 0.925 | 0.9–1 |
| | Parsimony Goodness-of-Fit Index (PGFI) | 0.757 | 0.5–1 |
| | Goodness-of-Fit Index (GFI) | 0.914 | 0.9–1 |
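
The model-fit assessment compares each index with the range in the "Recommended Values" column. The sketch below encodes those ranges exactly as printed (CMIN/DF strictly below 2, the rest as closed intervals) and checks the reported values against them; the thresholds are the table's, not general rules.

```python
# Each index: (reported value, rule taken from the "Recommended Values" column).
FIT = {
    "CMIN/DF": (1.860, lambda v: v < 2),
    "RMSEA": (0.065, lambda v: 0 <= v <= 0.1),
    "CFI": (0.923, lambda v: 0.9 <= v <= 1),
    "NFI": (0.902, lambda v: 0.9 <= v <= 1),
    "TLI": (0.906, lambda v: 0.9 <= v <= 1),
    "IFI": (0.925, lambda v: 0.9 <= v <= 1),
    "PGFI": (0.757, lambda v: 0.5 <= v <= 1),
    "GFI": (0.914, lambda v: 0.9 <= v <= 1),
}

# Apply each rule to its reported value.
results = {name: rule(value) for name, (value, rule) in FIT.items()}

for name, ok in results.items():
    status = "meets" if ok else "misses"
    print(f"{name:8s} {FIT[name][0]:.3f} {status} the recommended range")
```

All eight indices fall within their printed ranges. NFI (0.902) and TLI (0.906) clear the 0.9 cutoff by the narrowest margins.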

| Hypothesis | Path | Standard Estimates | Standard Error | Critical Ratio | p-Value |
|---|---|---|---|---|---|
| H1 | BI ← AT | 0.370 | 0.068 | 5.481 | *** |
| H2 | AT ← PU | 0.745 | 0.127 | 5.865 | *** |
| H3 | AT ← PE | −0.041 | 0.179 | −0.228 | 0.820 |
| H4 | PU ← PE | 0.855 | 0.137 | 6.22 | *** |
| H5 | AT ← TR | 0.247 | 0.139 | 1.777 | 0.076 |
| H6 | PE ← TR | 0.401 | 0.100 | 4.03 | *** |
| H7 | PE ← AD | 0.404 | 0.084 | 4.829 | *** |
| H8 | PU ← SI | 0.053 | 0.094 | 0.56 | 0.575 |
| H9 | TR ← SI | 0.445 | 0.105 | 4.217 | *** |

Note: *** denotes p < 0.001.
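
The p-values in the hypothesis table follow from the critical ratios. This sketch assumes each C.R. is treated as a z statistic and tested two-tailed against the standard normal distribution, the usual convention in covariance-based SEM software such as AMOS, which prints *** when p < 0.001. Under that assumption it recovers the three printed numeric p-values to within 0.001.

```python
import math

# Critical ratios from the hypothesis table; the second value is the printed p-value,
# or None where the table prints *** instead of a number.
PATHS = {
    "H1": (5.481, None), "H2": (5.865, None), "H3": (-0.228, 0.820),
    "H4": (6.22, None), "H5": (1.777, 0.076), "H6": (4.03, None),
    "H7": (4.829, None), "H8": (0.56, 0.575), "H9": (4.217, None),
}


def two_tailed_p(cr):
    """Two-tailed p-value for a z statistic: P(|Z| >= |cr|) under a standard normal."""
    return math.erfc(abs(cr) / math.sqrt(2))


for hyp, (cr, printed) in PATHS.items():
    p = two_tailed_p(cr)
    shown = "***" if p < 0.001 else f"{p:.3f}"
    verdict = "supported" if p < 0.05 else "not supported"
    print(f"{hyp}: C.R.={cr:+.3f}  p={shown}  ({verdict} at alpha = 0.05)")
```

Read this way, H3, H5, and H8 are the only paths that are not significant at the 0.05 level.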

| Path | Total Effect | Direct Effect | Indirect Effect |
|---|---|---|---|
| TR → PE | 0.401 | 0.401 | 0 |
| TR → PU | 0.343 | 0 | 0.343 |
| TR → BI | 0.180 | 0 | 0.180 |
| SI → TR | 0.445 | 0.445 | 0 |
| SI → PE | 0.178 | 0 | 0.178 |
| SI → AT | 0.256 | 0 | 0.256 |
| SI → BI | 0.095 | 0 | 0.095 |
| AD → PE | 0.404 | 0.404 | 0 |
| AD → PU | 0.345 | 0 | 0.345 |
| AD → AT | 0.241 | 0 | 0.241 |
| AD → BI | 0.089 | 0 | 0.089 |
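
The effects table is consistent with the standard decomposition for a recursive path model: the total effect is the sum, over every directed route, of the product of the path coefficients along that route, and the indirect effect is the total minus the direct path. The sketch below assumes that the estimates in the hypothesis table are the coefficients used, including the non-significant H3, H5, and H8 paths. Under that assumption it reproduces every row of the table to three decimals. The TR → BI value of 0.180, for example, depends on the direct TR → AT path being kept.

```python
# Direct paths (source, target) -> estimate, copied from the hypothesis table.
DIRECT = {
    ("AT", "BI"): 0.370,   # H1
    ("PU", "AT"): 0.745,   # H2
    ("PE", "AT"): -0.041,  # H3 (not significant, but kept in the decomposition)
    ("PE", "PU"): 0.855,   # H4
    ("TR", "AT"): 0.247,   # H5 (not significant, but kept)
    ("TR", "PE"): 0.401,   # H6
    ("AD", "PE"): 0.404,   # H7
    ("SI", "PU"): 0.053,   # H8 (not significant, but kept)
    ("SI", "TR"): 0.445,   # H9
}


def total_effect(src, dst):
    """Sum, over every directed route from src to dst, of the product of its path estimates.

    Assumes the model is recursive (no feedback loops), which holds for these nine paths.
    """
    if src == dst:
        return 1.0
    return sum(coef * total_effect(mid, dst) for (start, mid), coef in DIRECT.items() if start == src)


def decompose(src, dst):
    """Return (total, direct, indirect) effects of src on dst."""
    direct = DIRECT.get((src, dst), 0.0)
    total = total_effect(src, dst)
    return total, direct, total - direct


for src, dst in [("TR", "PU"), ("TR", "BI"), ("SI", "AT"), ("SI", "BI"), ("AD", "AT"), ("AD", "BI")]:
    total, direct, indirect = decompose(src, dst)
    print(f"{src}->{dst}: total={total:.3f} direct={direct:.3f} indirect={indirect:.3f}")
```

For example, AD → PU is 0.404 × 0.855 ≈ 0.345, the single route through PE.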

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Rathnayake, A.S.; Nguyen, T.D.H.N.; Ahn, Y. Factors Influencing AI Chatbot Adoption in Government Administration: A Case Study of Sri Lanka’s Digital Government. Adm. Sci. 2025, 15, 157. https://doi.org/10.3390/admsci15050157

Rathnayake AS, Nguyen TDHN, Ahn Y. Factors Influencing AI Chatbot Adoption in Government Administration: A Case Study of Sri Lanka’s Digital Government. Administrative Sciences. 2025; 15(5):157. https://doi.org/10.3390/admsci15050157

Chicago/Turabian Style: Rathnayake, Arjuna Srilal, Truong Dang Hoang Nhat Nguyen, and Yonghan Ahn. 2025. "Factors Influencing AI Chatbot Adoption in Government Administration: A Case Study of Sri Lanka’s Digital Government" Administrative Sciences 15, no. 5: 157. https://doi.org/10.3390/admsci15050157

APA Style: Rathnayake, A. S., Nguyen, T. D. H. N., & Ahn, Y. (2025). Factors Influencing AI Chatbot Adoption in Government Administration: A Case Study of Sri Lanka’s Digital Government. Administrative Sciences, 15(5), 157. https://doi.org/10.3390/admsci15050157