Abstract

Combining active contour models (ACMs) with salient object detection is an attractive approach for researchers in image segmentation. However, existing active contour models fail when the contour is improperly initialized. To address this issue, we propose a novel active contour model with salience detection in the complex domain. First, the input image is converted to the complex domain. The complex transformation provides salience cues. It is also well suited to cyclic objects and speeds up the iteration of the active contour. During the process, we apply a low-pass filter that passes low spatial frequencies while attenuating, or completely blocking, high spatial frequencies. This reduces the random noise associated with the higher frequencies. Furthermore, the model introduces a force function in the complex domain that dynamically shrinks a contour when it is outside the object of interest and expands it when the contour is inside the object. Comprehensive tests on both synthetic and natural images show that the proposed algorithm produces accurate salience results that are close to the ground truth. At the same time, it eliminates re-initialization and thus reduces the execution time.

1. Introduction

Salience detection is a key problem in computer vision and image processing. It plays a vital role in many applications, including object tracking [1,2,3], image and video compression [4], remote sensing image segmentation [5], video surveillance [6], and image retrieval [7]. However, salience detection faces many challenges, such as noisy and complex backgrounds [8] and intensity inhomogeneity [9,10,11]. These challenges make current solutions unreliable. Researchers are working to address these limitations and improve the accuracy of salient object detection.

Salience detection [12,13,14] extracts the most important, informative, and meaningful regions from an image [15]. Over the last few decades, it has been widely investigated and applied in computer vision because of its close relation to selective processing in the human visual system. Humans can capture or select relevant information within a large visual field. The human visual system is sensitive to salient regions and can quickly identify them for further cognitive processing. Salience detection mimics this human capability to automatically discover salient regions.

There are two types of approaches to combining object information in salience detection [16]: bottom-up and top-down. Bottom-up methods use only the visual signal. They estimate meaningful objects automatically, without any prior assumptions or knowledge [16]. Top-down methods rely on visual priors [17,18]. Our proposed model is a bottom-up approach and does not require any prior knowledge.

In recent years, many models have been proposed for salience detection. Koch and Ullman [19] drew on biological models to propose a salience model that introduced the original concept of salient objects as a representation of visual attention. Their model uses a biologically inspired center-surround strategy on color and intensity to identify the salient region in an image. Zhang et al. [20] presented a fuzzy growing method that extracts regions of interest and computes saliency from local contrast. Bruce et al. [21] proposed an attention model based on information maximization, derived from eye fixations, which uses Shannon's self-information for salient region detection. Cheng et al. [22] extracted a saliency map from the global region contrast of the image and defined spatial relations between regions for salience detection. Judd et al. [23] developed a bottom-up saliency model that merges low-level, mid-level, and high-level features to extract the salient object. However, local contrast cannot capture global influence and therefore detects only the boundaries of the object. Xie et al. [24] proposed a model that uses a Bayesian framework to exploit low-level and mid-level cues. However, these methods suffer from high computational cost and unsatisfactory object detection results. To address these limitations, we propose a novel, automatic, and accurate active contour model. Our salience detection unit uses a global mechanism, which relies on the global context to explore salience cues.

Existing active contour models can be classified into two categories: region-based [25,26,27,28,29,30,31,32,33,34] and edge-based [35,36]. Region-based active contour models use the statistical information of image intensity in different subsets of the image. Edge-based active contour models use edge indicators to localize boundaries. Chan and Vese [29] proposed a region-based model that adds the curve length as a penalty term. The method uses intensity statistics for object segmentation and fails when intensity inhomogeneity is present. Chan and Vese [37] also proposed a piecewise smooth approximation for separate regions. This model handles inhomogeneity well, but its iterations are time-consuming. Li [38] constructed a local binary fitting model by introducing a local kernel function into the Chan–Vese (CV) model. The model exploits spatial relations among pixels and extracts local information with a Gaussian function. However, it fails when the contour is improperly initialized. A new level set formulation introduced by Li [39] avoids reinitialization and the associated numerical errors. Zhang [40] proposed using local fitting energy to extract local image information, which decreases the dissimilarity within local image regions. Zhang [41] introduced an active contour model with selective local or global segmentation (ACSLGS). ACSLGS combines the ideas of the Chan–Vese and geodesic active contour (GAC) models and uses region statistics. A region-based signed pressure function controls the direction of evolution. It shrinks the contour when it is outside the object and expands it when it is inside the object. However, this model fails on images with intensity inhomogeneity.

The algorithms discussed above have serious drawbacks. The proposed active contours in the complex domain for salient object detection (ACCD_SOD) are designed to overcome the drawbacks of existing state-of-the-art models, including sensitivity to initialization and parameter setting. The main properties of the proposed approach are as follows:

- The proposed algorithm can act as a smooth-edge detector that draws strong edges and helps preserve them. This benefits edge-sensitive contour methods.

- Initialization is a critical step that significantly influences the final performance. In the complex domain, initialization is carried out seamlessly, which makes it well suited to salient object detection.

- In practice, reinitialization can be complex and costly, and it can have side effects. Our algorithm eliminates reinitialization.

- The proposed ACCD_SOD algorithm has been applied to both simulated and real images and produces precise results. In particular, it performs robustly when boundaries are weak.

- The complex force function has a relatively large capture range and accommodates small concavities.

- Parameter setting plays an important role in the final result of ACMs, and proper parameters can greatly improve the performance of an active contour model. Our model uses fixed parameters and performs well compared with state-of-the-art models.

Salience detection can automatically discover salient regions, but it relies on post-processing for accurate boundary detection. Active contour models localize boundaries well but suffer from poor initialization, and they require extra effort to discover the significant regions in the image. The proposed method integrates a salience discovery unit into the active contour model and evolves the contour in the complex domain for salient object detection. This eliminates the reinitialization required by classic active contour models.

We propose a novel active contour model in the complex domain for salient object detection to address the limitations of the existing models. Our proposed model improves detection accuracy with low computational cost. The main contributions of the proposed algorithm are as follows:

- First, we convert the image to the complex domain. The complex transformation provides salience cues. Salience detection serves as the initialization for our active contour model, which quickly converges to the edge structure of the input image.

- We then use a low-pass filter in the complex domain to discover the objects of interest, which serves as the initialization step and eliminates reinitialization.

- Subsequently, we define a force function in the complex domain, resulting in a complex-force function, which is used to distinguish the object from the background for the active contour.

- Finally, we combine salient object discovery and the complex force and implement the active contours in the complex domain for salient object detection.

As discussed above, previous research has investigated different models to handle these limitations. One limitation is improper initialization, which affects the final performance, and another is expensive re-initialization. A second difficulty is intensity inhomogeneity. It occurs in many real images of different modalities and is especially harmful to object detection in high-field imaging, among other applications.

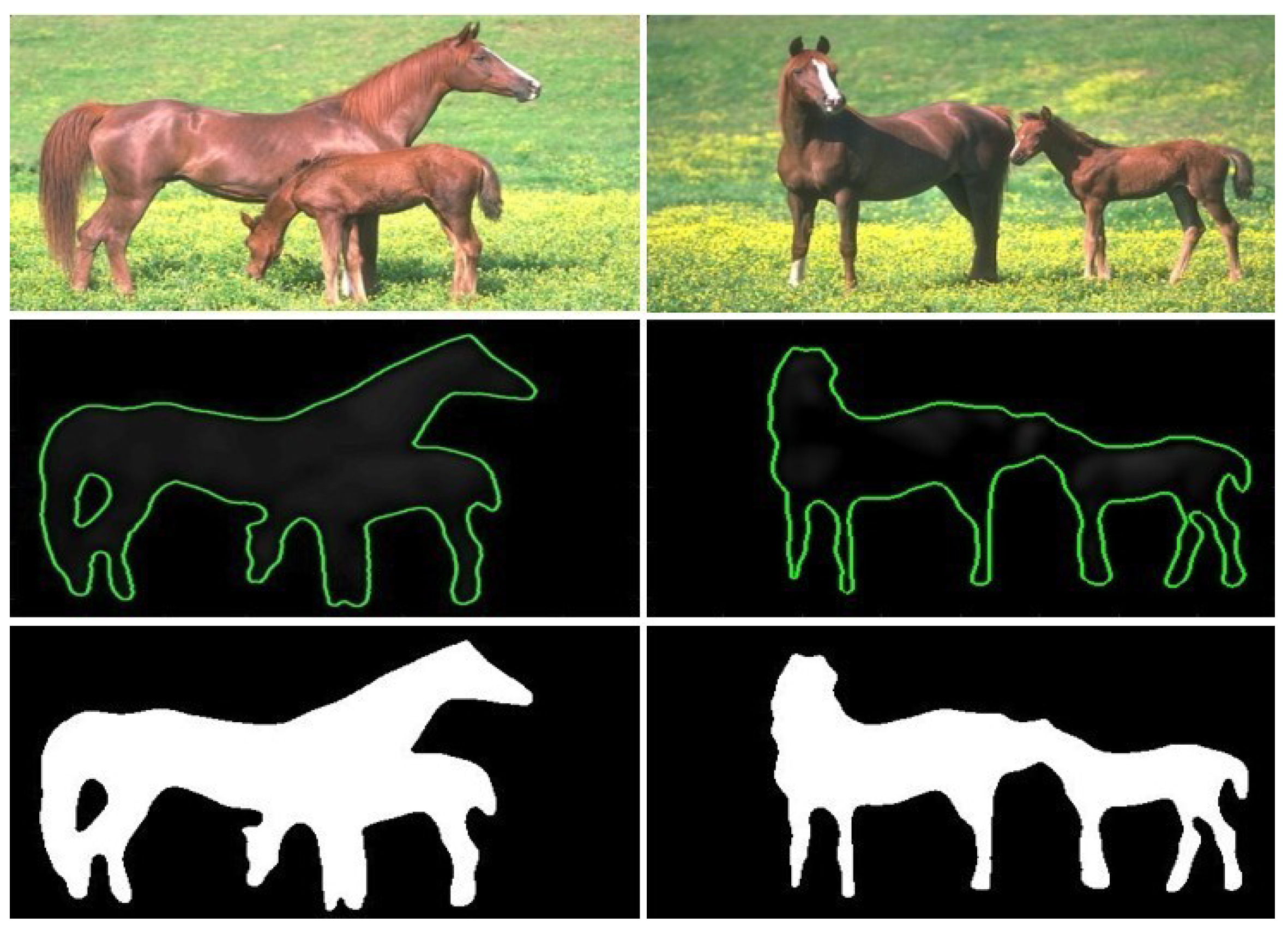

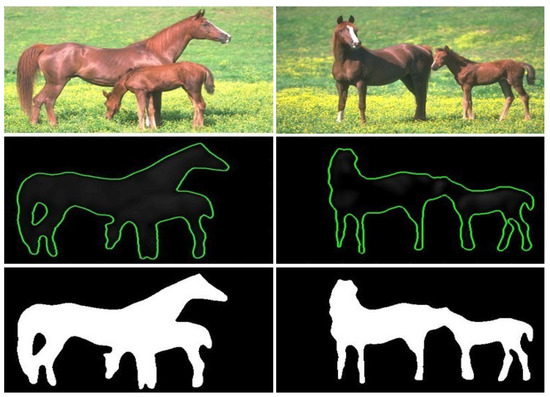

Our primary aim is to introduce a model that (1) draws an automatic active contour directly on the object boundary, which in turn leads to detection of the salient object; (2) handles improper initialization; (3) eliminates costly reinitialization; and (4) reduces the computational cost. Figure 1 summarizes our aim and illustrates motivating results.

Figure 1.

Illustration of our proposed algorithm. The 1st row contains the original input images, the 2nd row shows the detected object boundary during curve initialization, and the 3rd row shows the salience of the object.

Our proposed model therefore exploits the advantages of complex transformations. The complex transformation creates saliency hints that serve as the initialization and overcome the difficulty of improper initialization. It also yields a smooth curve, which helps handle the inhomogeneity problem. Our model eliminates the re-initialization process by applying a low-pass filter guided by the discovered object of interest. It also reduces the computational cost in terms of iterations.

The rest of the paper is organized as follows. Section 2 provides a brief overview of related work. Building on it, Section 3 presents our formulation of the active contour in the complex domain and its detailed implementation. Section 4 describes experiments on both synthetic and natural images, and Section 5 concludes the paper.

2. Related Work

Recently, the active contour model has been widely used in applications, such as object tracking, edge detection, and shape recognition. This section consists of a brief review of related active contour models for image segmentation.

2.1. Geodesic Active Contour (GAC) Model

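The geodesic active contour (GAC) model uses gradient information to detect object boundaries. Let the image $I: \Omega \to \mathbb{R}$ be a function defined on the domain $\Omega \subset \mathbb{R}^2$. The GAC model can be formulated by minimizing the energy functional

$$E_{GAC}(C) = \int_0^1 g\left(\left|\nabla I(C(q))\right|\right)\left|C'(q)\right|\,dq, \tag{1}$$

where $C(q)$ is a closed curve parameterized by $q \in [0, 1]$. The parameterization is arbitrary. It imposes no constraints on the curve, whose motion depends only on a velocity defined by the image feature:

$$g\left(|\nabla I|\right) = \frac{1}{1 + \left|\nabla G_\sigma * I\right|^2}, \tag{2}$$

where $g$ is the edge indicator (edge stopping) function. $\nabla G_\sigma * I$ denotes the convolution of the image $I$ with the derivative of the Gaussian kernel $G_\sigma$, where $\sigma$ is the standard deviation of the Gaussian kernel. Applying the calculus of variations [31] to Equation (1), we obtain the Euler–Lagrange equation

$$C_t = g\left(|\nabla I|\right)\kappa\vec{N} - \left(\nabla g \cdot \vec{N}\right)\vec{N}, \tag{3}$$

where $\kappa$ is the curvature and $\vec{N}$ is the inward normal. A constant velocity $\alpha$ is usually added to increase the propagation speed, so that Equation (3) becomes

$$C_t = g\left(|\nabla I|\right)(\kappa + \alpha)\vec{N} - \left(\nabla g \cdot \vec{N}\right)\vec{N}, \tag{4}$$

where $\alpha$ plays the role of a balloon force that controls the shrinking and expansion of the dynamic contour and increases its propagation speed. Because it relies on the image gradient, the GAC model can capture the object, but at a high computational cost. The level set formulation of GAC is

$$\frac{\partial \phi}{\partial t} = g\left|\nabla\phi\right|\left(\operatorname{div}\left(\frac{\nabla\phi}{|\nabla\phi|}\right) + \alpha\right) + \nabla g \cdot \nabla\phi. \tag{5}$$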
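As a rough illustration of the edge stopping function $g = 1/(1 + |\nabla G_\sigma * I|^2)$, the following NumPy sketch smooths the image with a Gaussian and approximates the gradient of the smoothed image with central differences (`np.gradient`) rather than an analytic Gaussian-derivative kernel. The helper `gaussian_smooth`, the value σ = 1.5, and the synthetic square image are our own assumptions, not part of the GAC formulation.

```python
import numpy as np


def gaussian_smooth(image, sigma):
    """Separable Gaussian blur with reflective borders."""
    radius = int(3 * sigma + 0.5)
    x = np.arange(-radius, radius + 1)
    kernel = np.exp(-x**2 / (2.0 * sigma**2))
    kernel /= kernel.sum()
    padded = np.pad(image, radius, mode="reflect")
    # Filter along rows, then columns; "valid" trims the padding back off.
    rows = np.apply_along_axis(np.convolve, 1, padded, kernel, mode="valid")
    return np.apply_along_axis(np.convolve, 0, rows, kernel, mode="valid")


def edge_stopping(image, sigma=1.5):
    """g = 1 / (1 + |grad(G_sigma * I)|^2), with the gradient taken by central differences."""
    smoothed = gaussian_smooth(image.astype(float), sigma)
    gy, gx = np.gradient(smoothed)  # derivatives along rows (y) and columns (x)
    return 1.0 / (1.0 + gx**2 + gy**2)


# Synthetic test image: a bright square on a dark background.
img = np.zeros((64, 64))
img[20:44, 20:44] = 100.0
g = edge_stopping(img)
print(f"g in the flat interior: {g[32, 32]:.3f}")
print(f"g on the left edge:     {g[32, 20]:.4f}")
```

In the flat interior of the square g stays close to 1. On its edges g drops toward 0, which is what slows the geodesic contour at object boundaries.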

2.2. Chan-Vese Model

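Chan and Vese [29] define a region-based model within the Mumford–Shah framework [42]. The Chan–Vese (CV) model is based on the level set method. The contour $C$ of the object corresponds to the zero-level set of a Lipschitz function $\phi$. Chan and Vese defined the energy functional to be minimized as

$$E^{CV}(c_1, c_2, C) = \mu\,\mathrm{Length}(C) + \nu\,\mathrm{Area}(\mathrm{inside}(C)) + \lambda_1\int_{\mathrm{inside}(C)}\left|I(x) - c_1\right|^2 dx + \lambda_2\int_{\mathrm{outside}(C)}\left|I(x) - c_2\right|^2 dx, \tag{6}$$

where $\mu, \nu \ge 0$ and $\lambda_1, \lambda_2 > 0$ are weights. $\mathrm{Length}(C)$ measures the contour length, and $\mathrm{Area}(\mathrm{inside}(C))$ measures the region enclosed by the contour. The constants $c_1$ and $c_2$ are the average intensities of the foreground and background, respectively. $c_1$ is the average intensity inside the contour, and $c_2$ is the average intensity outside it. Given the level set function $\phi$, they are obtained as

$$c_1(\phi) = \frac{\int_\Omega I(x)\,H(\phi(x))\,dx}{\int_\Omega H(\phi(x))\,dx}, \tag{7}$$

$$c_2(\phi) = \frac{\int_\Omega I(x)\left(1 - H(\phi(x))\right)dx}{\int_\Omega \left(1 - H(\phi(x))\right)dx}, \tag{8}$$

and the gradient-descent flow that minimizes the CV energy is

$$\frac{\partial\phi}{\partial t} = \delta(\phi)\left[\mu\,\nabla\cdot\left(\frac{\nabla\phi}{|\nabla\phi|}\right) - \nu - \lambda_1\left(I(x) - c_1\right)^2 + \lambda_2\left(I(x) - c_2\right)^2\right],$$

where $\nabla$ is the gradient operator, $H$ is the Heaviside function, and $\delta$ is the Dirac delta function. $\lambda_1$ and $\lambda_2$ are fixed parameters, $\mu$ controls the smoothness of the zero-level set, and $\nu$ is used to speed up the dynamic contour. The driving force is controlled by $c_1$ and $c_2$. The Heaviside function is $H(z) = 1$ for $z \ge 0$ and $H(z) = 0$ for $z < 0$, and the Dirac measure is $\delta(z) = \frac{d}{dz}H(z)$. They are usually approximated by

$$H_\varepsilon(z) = \frac{1}{2}\left(1 + \frac{2}{\pi}\arctan\left(\frac{z}{\varepsilon}\right)\right), \qquad \delta_\varepsilon(z) = \frac{1}{\pi}\,\frac{\varepsilon}{\varepsilon^2 + z^2}.$$

The CV model assumes constant intensities for the background and foreground. Although it is robust against noise, it fails when intensity inhomogeneity is present.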
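To make the CV quantities concrete, the following NumPy sketch computes the inside and outside means $c_1$ and $c_2$. Each mean is weighted by the regularized Heaviside function $H_\varepsilon(\phi)$. The sketch then takes one explicit gradient-descent step of the CV flow, $\phi \leftarrow \phi + \Delta t\,\delta_\varepsilon(\phi)\,[\mu\,\kappa - \nu - \lambda_1 (I - c_1)^2 + \lambda_2 (I - c_2)^2]$. The time step, weights, ε = 1, and the circular initial contour are illustrative assumptions. The convention φ > 0 inside the contour matches the definition of $c_1$ as the inside mean.

```python
import numpy as np


def heaviside_eps(z, eps=1.0):
    return 0.5 * (1.0 + (2.0 / np.pi) * np.arctan(z / eps))


def dirac_eps(z, eps=1.0):
    return (eps / np.pi) / (eps**2 + z**2)


def region_means(image, phi, eps=1.0):
    """c1: mean intensity inside (phi > 0); c2: mean intensity outside."""
    h = heaviside_eps(phi, eps)
    c1 = np.sum(image * h) / np.sum(h)
    c2 = np.sum(image * (1.0 - h)) / np.sum(1.0 - h)
    return c1, c2


def curvature(phi):
    """div(grad(phi) / |grad(phi)|) using central differences."""
    gy, gx = np.gradient(phi)
    norm = np.sqrt(gx**2 + gy**2) + 1e-8
    return np.gradient(gx / norm, axis=1) + np.gradient(gy / norm, axis=0)


def cv_step(image, phi, dt=0.5, mu=0.2, nu=0.0, lam1=1.0, lam2=1.0, eps=1.0):
    """One explicit Euler step of the CV level-set evolution."""
    c1, c2 = region_means(image, phi, eps)
    force = (mu * curvature(phi) - nu
             - lam1 * (image - c1) ** 2 + lam2 * (image - c2) ** 2)
    return phi + dt * dirac_eps(phi, eps) * force


# Bright 24x24 square; the initial contour is a circle of radius 8 at its center.
img = np.zeros((64, 64))
img[20:44, 20:44] = 1.0
yy, xx = np.mgrid[0:64, 0:64]
phi0 = 8.0 - np.sqrt((yy - 32.0) ** 2 + (xx - 32.0) ** 2)  # signed distance, > 0 inside
phi1 = cv_step(img, phi0)
print("c1, c2 =", region_means(img, phi0))
```

Pixels of the square that lie just outside the circle get a positive force, so φ rises there and the contour starts to expand toward the object boundary.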

2.3. Active Contours with Selective Local or Global Segmentation (ACSLGS)

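The level set formulation of K. Zhang et al. [41] builds on the region statistics of [29], which assume that object intensities are homogeneous. ACSLGS [41] proposed a signed pressure force (SPF) function that differs from the force in [29] and plays an important role in segmentation. It acts as a global force that drags the corresponding pixels to the foreground or background. The force makes the region shrink when it is outside the object and expand when it is inside the object. The SPF takes values in $[-1, 1]$ and has the form

$$\mathrm{spf}(I(x)) = \frac{I(x) - \frac{c_1 + c_2}{2}}{\max\left(\left|I(x) - \frac{c_1 + c_2}{2}\right|\right)}, \quad x \in \Omega,$$

where $c_1$ and $c_2$ are defined in Equations (7) and (8). Substituting the SPF for the edge stopping function $g$ in Equation (5) gives the ACSLGS evolution:

$$\frac{\partial\phi}{\partial t} = \mathrm{spf}(I(x))\cdot\left(\operatorname{div}\left(\frac{\nabla\phi}{|\nabla\phi|}\right) + \alpha\right)|\nabla\phi| + \nabla\mathrm{spf}(I(x))\cdot\nabla\phi, \quad x \in \Omega.$$

Assume that the intensities inside and outside the object are both homogeneous. Because $c_1$ and $c_2$ are average intensities over parts of $\Omega$, $\min_{x\in\Omega} I(x) \le c_1, c_2 \le \max_{x\in\Omega} I(x)$ wherever the contour is. Therefore,

$$\min_{x\in\Omega} I(x) \le \frac{c_1 + c_2}{2} \le \max_{x\in\Omega} I(x).$$

As a result, the SPF has opposite signs inside and outside the object. The level-set function is initialized to a piecewise constant, and a Gaussian filter $G_\sigma$ is used to regularize it [43]. This regularization makes the curvature term $\operatorname{div}(\nabla\phi/|\nabla\phi|)$ unnecessary, and the term $\nabla\mathrm{spf}(I(x))\cdot\nabla\phi$ is also unnecessary. Removing both terms from Equation (14) gives the final level set formulation:

$$\frac{\partial\phi}{\partial t} = \mathrm{spf}(I(x))\cdot\alpha|\nabla\phi|, \quad x \in \Omega.$$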

The initialization of the active contour with selective local or global segmentation is

where $\Omega$ is the image domain, $\Omega_0$ is a subset of $\Omega$ used for the initialization, $\partial\Omega_0$ is the boundary of the initialized region, and $\rho > 0$ is a constant. The active contour with selective local or global segmentation model has the same disadvantages as the CV model.
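The following NumPy sketch illustrates the simplified ACSLGS evolution $\partial\phi/\partial t = \mathrm{spf}(I(x))\cdot\alpha|\nabla\phi|$ with Gaussian regularization. It is an illustrative reimplementation, not the authors' code. It uses the convention φ > 0 inside the contour, so that $c_1$ is the inside mean. The means $c_1$ and $c_2$ are taken over a sharp inside/outside split, and the values α = 20, Δt = 1, σ = 1, and ρ = 1 are our own assumptions. The per-iteration binarization to ±1 mirrors the selective local segmentation mode as we understand it from [41].

```python
import numpy as np


def gaussian_smooth(image, sigma):
    """Separable Gaussian blur with reflective borders."""
    radius = int(3 * sigma + 0.5)
    x = np.arange(-radius, radius + 1)
    kernel = np.exp(-x**2 / (2.0 * sigma**2))
    kernel /= kernel.sum()
    padded = np.pad(image, radius, mode="reflect")
    rows = np.apply_along_axis(np.convolve, 1, padded, kernel, mode="valid")
    return np.apply_along_axis(np.convolve, 0, rows, kernel, mode="valid")


def spf(image, phi):
    """Signed pressure force (I - (c1 + c2)/2) / max|I - (c1 + c2)/2|, values in [-1, 1]."""
    inside = phi > 0                     # assumed convention: phi > 0 inside the contour
    c1, c2 = image[inside].mean(), image[~inside].mean()
    diff = image - 0.5 * (c1 + c2)
    return diff / np.abs(diff).max()


def acslgs(image, init_mask, alpha=20.0, dt=1.0, sigma=1.0, rho=1.0, iters=60):
    """Evolve d(phi)/dt = spf * alpha * |grad(phi)| and return the final inside mask."""
    phi = np.where(init_mask, rho, -rho).astype(float)   # piecewise-constant initialization
    for _ in range(iters):
        gy, gx = np.gradient(phi)
        phi = phi + dt * alpha * spf(image, phi) * np.sqrt(gx**2 + gy**2)
        phi = np.where(phi > 0, 1.0, -1.0)   # binarize (selective local step)
        phi = gaussian_smooth(phi, sigma)    # Gaussian regularization instead of reinitialization
    return phi > 0


img = np.zeros((64, 64))
img[20:44, 20:44] = 1.0
init = np.zeros(img.shape, dtype=bool)
init[8:56, 8:56] = True                      # initial box that encloses the object
mask = acslgs(img, init)
truth = img > 0
iou = np.logical_and(mask, truth).sum() / np.logical_or(mask, truth).sum()
print(f"IoU with the true square: {iou:.3f}")
```

Starting outside the bright square, the SPF is negative on the background, so the contour shrinks until it meets the object, where the SPF turns positive and stops it.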

3. Proposed Method

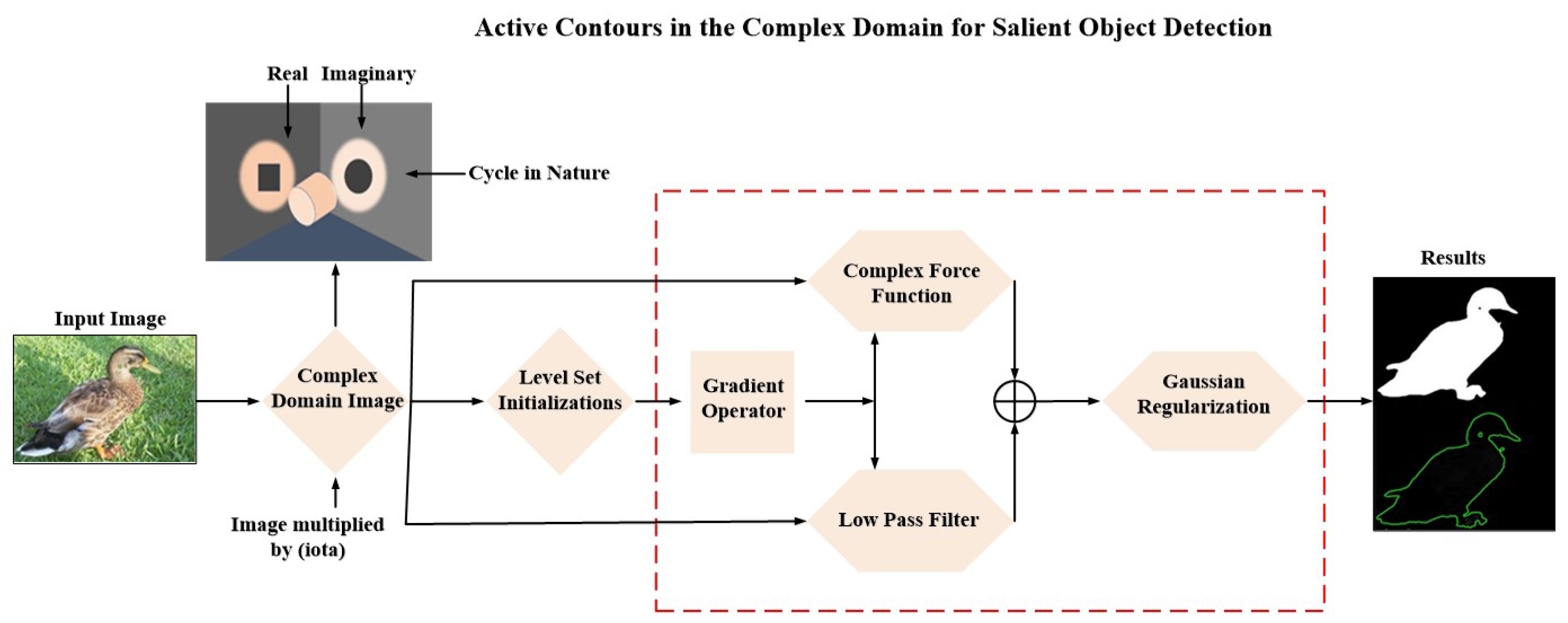

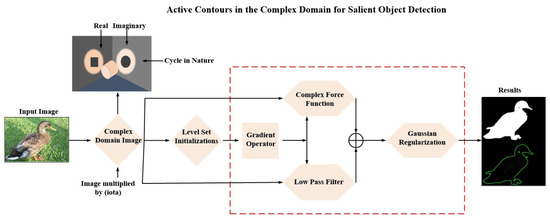

Previous active contour models suffer from improper initialization and sometimes require reinitialization. To reduce the limitations identified in Section 2, we propose a novel method, illustrated in Figure 2, that uses the active contour in the complex domain for salient object detection. In this section, we first describe the complex domain transformation and our formulation of the active contour model. We then formulate a low-pass filter in the complex domain and, finally, define the complex force function.

Figure 2.

Illustration of the architecture of our ACCD_SOD algorithm.

3.1. Complex Domain Transformation

The active contour model behaves unstably in the energy minimization problem, and it suffers from improper initialization. Pixelwise salience detection provides a solution for initialization and subsequent foreground identification. The conventional active contour model is also limited in the presence of noise. We propose a complex domain algorithm (ACCD_SOD) that resolves these problems and obtains clear and accurate salient objects. Our saliency algorithm operates in the complex domain. We combine the complex domain with the Schrödinger equation [44] to develop a fundamental solution for the linear case [45,46]. The complex domain has the general property of forward and inverse diffusion [47]. It contains salience cues and gives favorable results for cyclic objects, which makes it suitable for active contours [48]. A complex signal consists of two signals, a real part and an imaginary part. We transform the image to the complex domain by multiplying the input image by the imaginary unit i (iota).

Let $\Omega$ denote the image domain, and let $f: \Omega \to \mathbb{C}$ be a complex function, where $f(x)$ gives the complex value at $x$. The special symbol iota ($i$) denotes the imaginary unit and can be written as $i = \sqrt{-1}$. It has the property $i^2 = -1$. The standard form of a complex number is $z = a + bi$, with $a, b \in \mathbb{R}$. Complex numbers extend the real numbers. If $b = 0$, $z$ is a real number, and if $a = 0$, $z$ is a pure imaginary number. We convert a real image to the complex domain via

The transformations between the complex function and the original real image are summarized as follows:

where is an odd number

Let $n \in \mathbb{Z}$, where $\mathbb{Z}$ denotes the set of integers. The function gives a complex value whose output preserves the following characteristics:

If the number n increases to infinity, then the value has the following limit:

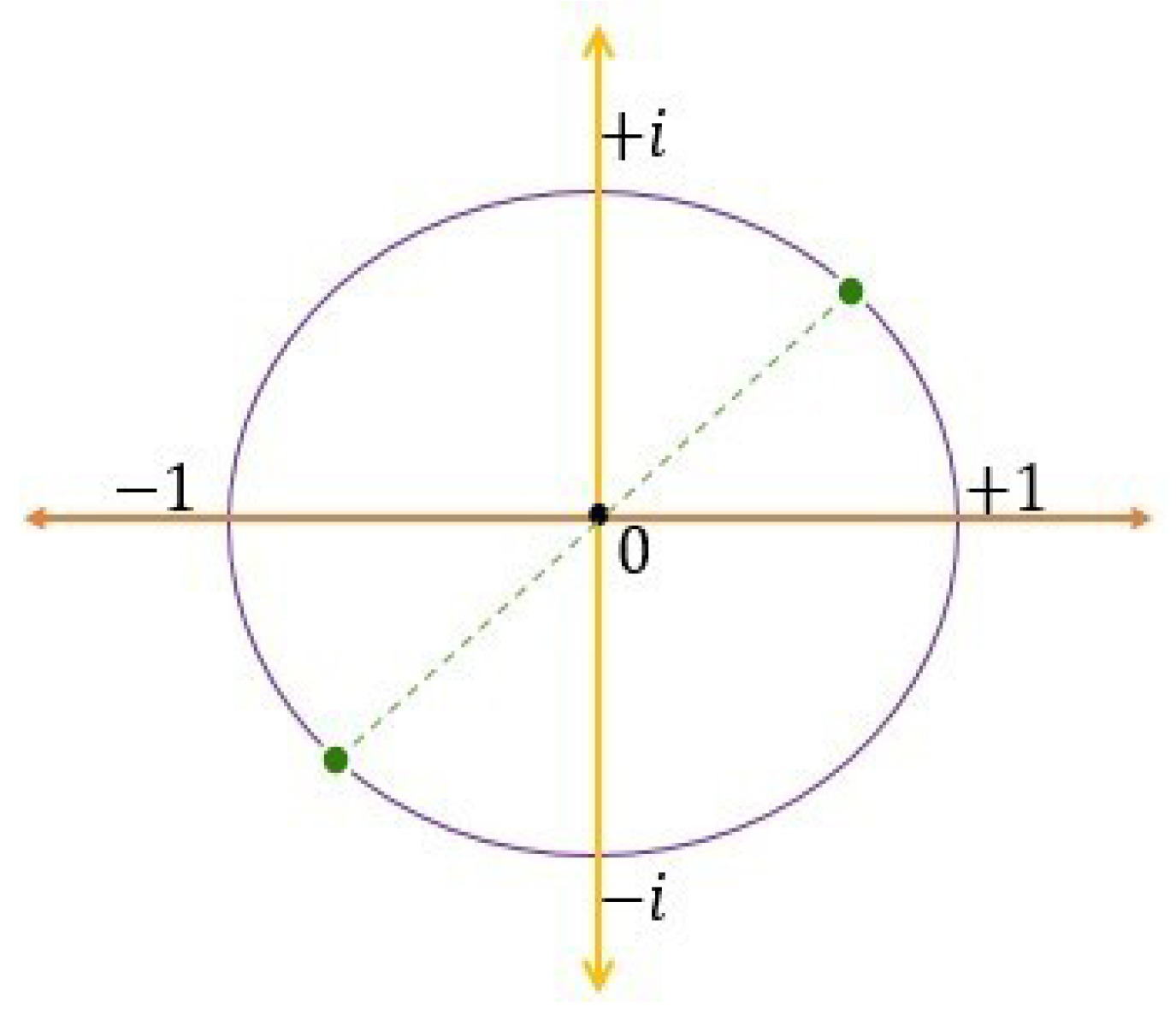

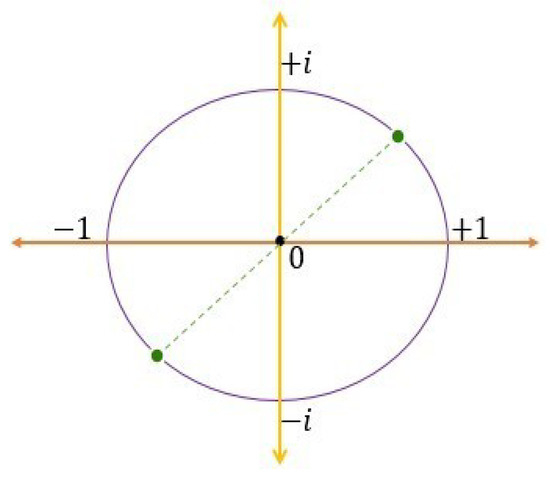

We use the geometric representation in Figure 3 to explain these limits. Multiplication by $i^4 = 1$ is equivalent to a rotation by 360 degrees, which keeps the complex value at the same position. Multiplying a complex value by $-1$ is equivalent to a rotation by 180 degrees, and multiplying by $i$ twice equals multiplying by $i^2 = -1$. Multiplication by $i$ is equivalent to a 90-degree rotation. Multiplication by $\sqrt{i}$ corresponds to a rotation by 45 degrees, and multiplying by $\sqrt{i}$ eight times is equal to a 360-degree rotation. The point on the unit circle at 45 degrees is $\sqrt{i} = \frac{1 + i}{\sqrt{2}}$. In general, multiplication by $e^{i\theta}$ is the same as a rotation by $\theta$.

Figure 3.

Geometrical representation of complex values.
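The rotation rules above can be checked numerically with Python's built-in complex numbers and NumPy. The sample value z = 3 + 4i and the small test image are arbitrary. Treating the conversion of Section 3.1 as a plain pixelwise multiplication by i is our reading of the text, not a reproduction of its equations.

```python
import numpy as np

z = 3.0 + 4.0j                       # arbitrary sample value

# Powers of i rotate z about the origin in 90-degree steps.
quarter = z * 1j                     # 90 degrees: (-4+3j)
half = z * 1j**2                     # i^2 = -1: 180 degrees
full = z * 1j**4                     # i^4 = 1: 360 degrees, back to z

# sqrt(i) = e^{i*pi/4} = (1 + i)/sqrt(2) is a 45-degree rotation.
root_i = np.exp(1j * np.pi / 4)
print(np.isclose(root_i, (1 + 1j) / np.sqrt(2)))   # True
print(np.isclose(z * root_i**8, z))                # True: eight 45-degree steps make 360 degrees


def rotate(value, degrees):
    """Multiplying by e^{i*theta} rotates a complex value by theta."""
    return value * np.exp(1j * np.deg2rad(degrees))


# Hypothetical reading of Section 3.1: multiply each pixel of a real image by i.
image = np.array([[0.0, 0.25], [0.5, 1.0]])
complex_image = 1j * image
print(np.abs(complex_image))                       # moduli equal the original intensities
```

Multiplying by i moves every pixel onto the imaginary axis without changing its modulus.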

3.2. Low-Pass Filter

The algorithm uses a low-pass filter in the complex domain to suppress noise and to provide a hierarchical framework for determining salience. The low-pass filter acts as a penalizing term that serves as the initialization and eliminates reinitialization of the curve. It is applied to the active contour together with the gradient operator and suppresses high spatial frequencies. In each iteration, every intermediate result is passed through the low-pass filter. The filter passes the frequency range that carries the salient region and attenuates frequencies outside that range. It therefore gives an effective representation of the salient region at multiple resolutions, and its output provides detailed information in the form of salience cues. This process extracts saliency in the complex domain while suppressing the fluctuations associated with high illumination. The low-pass formulation can be written as

where $\alpha$ represents the propagation speed, $\nabla$ represents the gradient operator, and $S$ is a complex filter. With a slight abuse of notation, we use $|\cdot|_{L_2}$ to denote the square root of the sum of the squared real and imaginary parts. The low-pass filter in the complex domain discovers the objects of interest and helps the contour converge on those areas.
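The exact complex filter S is not reproduced here, so the following NumPy sketch uses a generic stand-in. It applies a Gaussian transfer function to a complex image in the Fourier domain and measures errors with the $|\cdot|_{L_2}$ modulus defined above. The cutoff of 0.08 cycles/pixel, the noise level, the synthetic square, and the conversion by multiplication with i are assumptions chosen for illustration.

```python
import numpy as np


def complex_l2(f):
    """Per-pixel |f|_{L2}: square root of the sum of squared real and imaginary parts."""
    return np.sqrt(f.real**2 + f.imag**2)


def gaussian_lowpass(f, cutoff):
    """Attenuate spatial frequencies above `cutoff` (cycles/pixel) with a Gaussian transfer function."""
    fy = np.fft.fftfreq(f.shape[0])[:, None]
    fx = np.fft.fftfreq(f.shape[1])[None, :]
    transfer = np.exp(-(fx**2 + fy**2) / (2.0 * cutoff**2))
    return np.fft.ifft2(np.fft.fft2(f) * transfer)


rng = np.random.default_rng(0)
clean = np.zeros((64, 64))
clean[20:44, 20:44] = 1.0
noisy = clean + 0.3 * rng.standard_normal(clean.shape)

f = 1j * noisy                         # complex-domain image (assumed: multiplication by i)
target = 1j * clean
filtered = gaussian_lowpass(f, cutoff=0.08)

mse_before = np.mean(complex_l2(f - target) ** 2)
mse_after = np.mean(complex_l2(filtered - target) ** 2)
print(f"mean squared error before: {mse_before:.4f}, after: {mse_after:.4f}")
```

Because the transfer function is real and symmetric in frequency, a purely imaginary input stays purely imaginary after filtering. Only the high-frequency noise is attenuated.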

3.3. Complex Force Function

The signed pressure force function plays an important role in expanding the dynamic contour when it is inside the object boundary and shrinking it when it is outside the object boundary [41]. The function introduced here differs from that of ACSLGS and from the design of complex signed pressure in [41,49]. We propose a pressure force, called the complex force function, that uses statistical information in the complex domain. It drives the intensities inside the contour toward the foreground and those outside the contour toward the background. The proposed complex force function can be written as

When the curve is far from the object boundary, the complex force function dynamically increases its interior and exterior energies, which reduces the chance of becoming trapped in a local minimum. When the curve is close to the object boundary, the energy decreases and the curve stops automatically near the boundary of the object. This behavior reduces the computational cost of the model. The quantities $c_1$ and $c_2$ are defined analogously to Equations (7) and (8).

The global complex force function is obtained by multiplying the complex domain image, pixel by pixel, with the low-pass filter and the complex force function. The proposed active contour salience formulation, summarized in Algorithm 1, follows Equation (15).

| Algorithm 1. Steps of our proposed algorithm, active contours in the complex domain for salient object detection (ACCD_SOD) |
|---|
| **Input:** an image. |
| **Image transformation:** |
| 1: Transform the original image into the complex domain according to (16); |
| **Initialization:** |
| 2: Initialize the contour according to (23); |
| 3: Initialize the related parameters α = 0.4, σ = 4, and the remaining parameters; set n = 0; |
| **Repeat:** |
| 4: while n < iteration do |
| 5: Compute the low-pass filter with Equation (21); |
| 6: Compute the complex force function with Equation (22); |
| 7: Update the level-set function according to Equation (23); |
| 8: n = n + 1; |
| end |
| 9: if converged, end |
| 10: Apply the Gaussian filter for regularization according to Equation (2); |
| 11: end |
| **Output:** the resultant salient object. |

This algorithm keeps λ, which controls the evolution of the contour, fixed at 0.5, whereas the ACSLGS model uses a parameter that is not fixed. Conventional level-set methods must periodically reinitialize the level-set function ϕ to a signed distance function during contour evolution. Instead, we regularize ϕ with a Gaussian filter, which avoids reinitialization. We define the initial level-set function for the proposed algorithm as

where $\Omega$ denotes the image domain, $\Omega_0$ is a subset of $\Omega$, and $\partial\Omega_0$ denotes its boundary. $\rho > 0$ is a constant that is important on the moving interface because it controls propagation on the specified interval.

4. Experiment Analysis and Results

4.1. Computational Efficiency

The performance, efficiency, and average running time of the proposed Algorithm 1 were tested on a desktop machine with an Intel Core i7 2.50 GHz CPU and 8 GB of RAM running Windows 7. The proposed algorithm is implemented in MATLAB. The results are summarized in the following figures and tables. They show that our algorithm is the fastest of the compared methods, has a low computational cost, and provides high-quality salient object results, as illustrated in Figure 1.

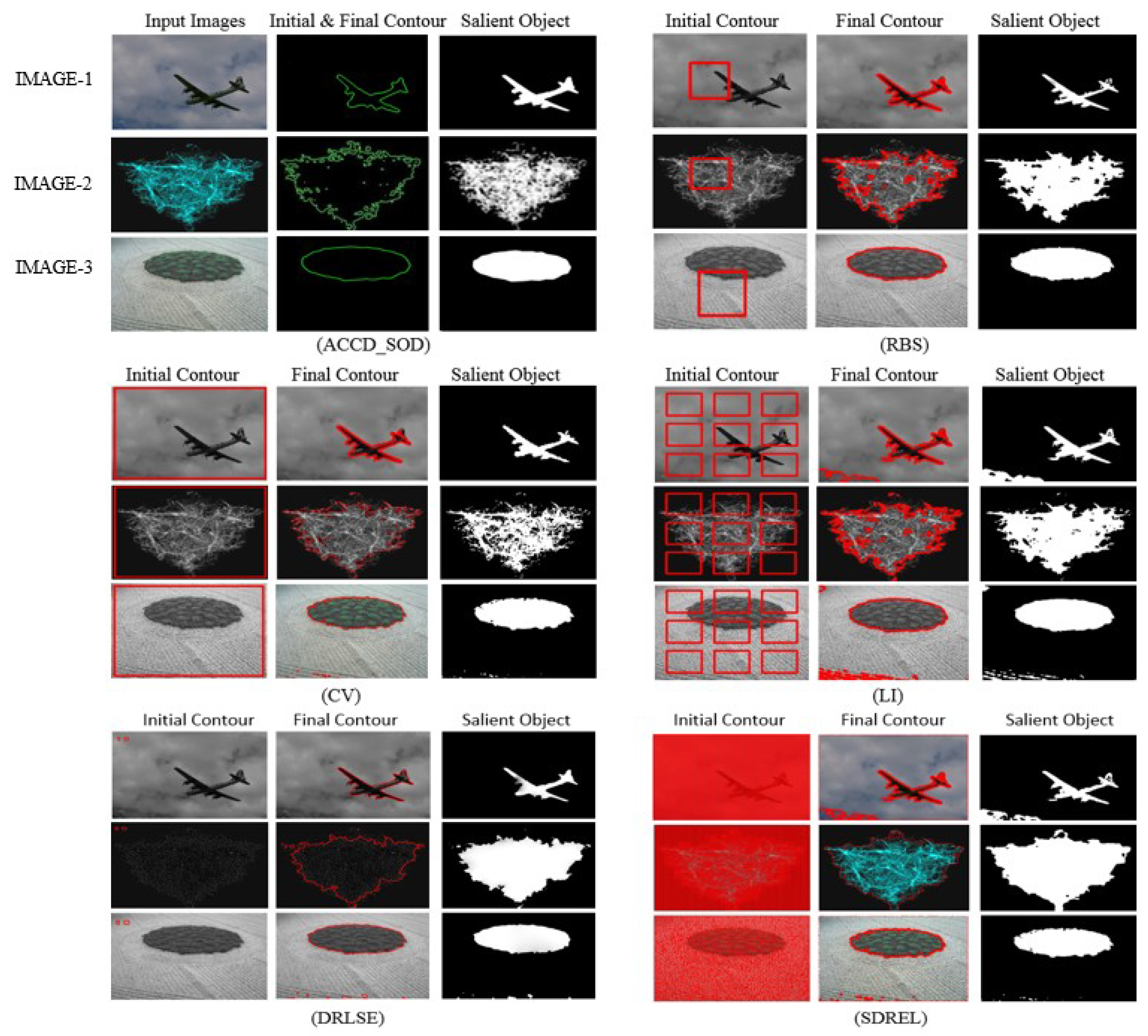

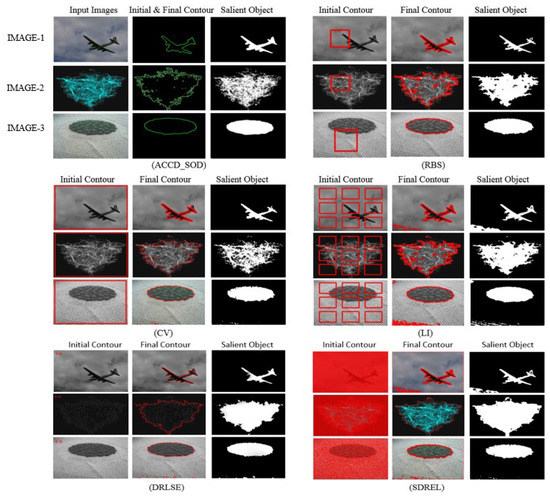

4.2. Comparison with Recent State-of-the-Art Models for Active Contours

We evaluate our algorithm qualitatively and quantitatively on IMAGE_1, IMAGE_2, and IMAGE_3, shown in Figure 4, and on real images. We compare it with SDREL [50], RBS [51], the CV model [29], the LI model [52], and the DRLSE model [39]. The results show the initialization, the initial contour, the final contour, and the salient object for each method.

Figure 4.

Initial contour, final contour, and salient object results of RBS, Chan and Vese (CV), LI, DRLSE, SDREL, and our proposed algorithm ACCD_SOD. Our algorithm fits the object directly at the initial stage, whereas the other state-of-the-art models fail at the initialization stage, which increases their computational cost.

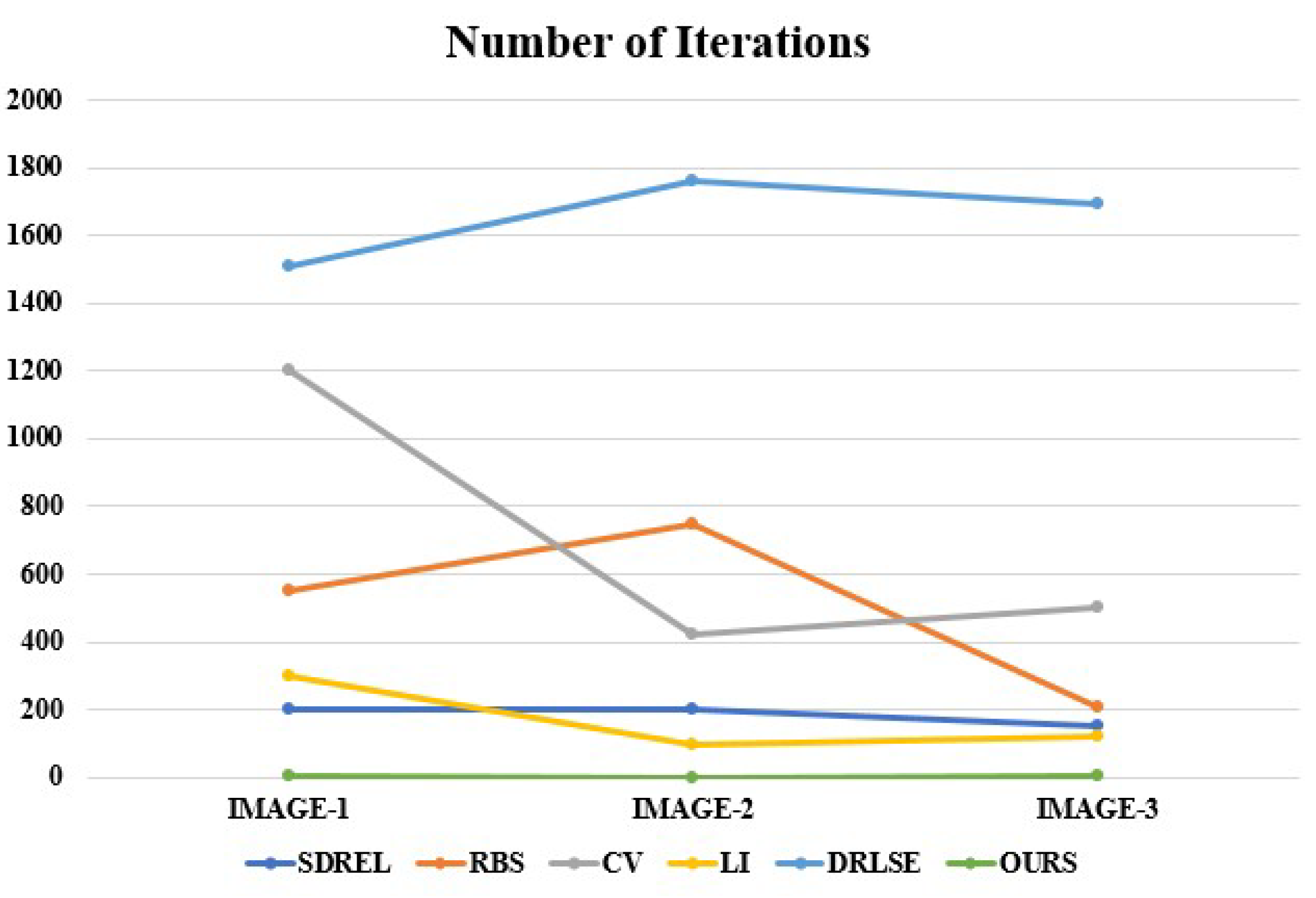

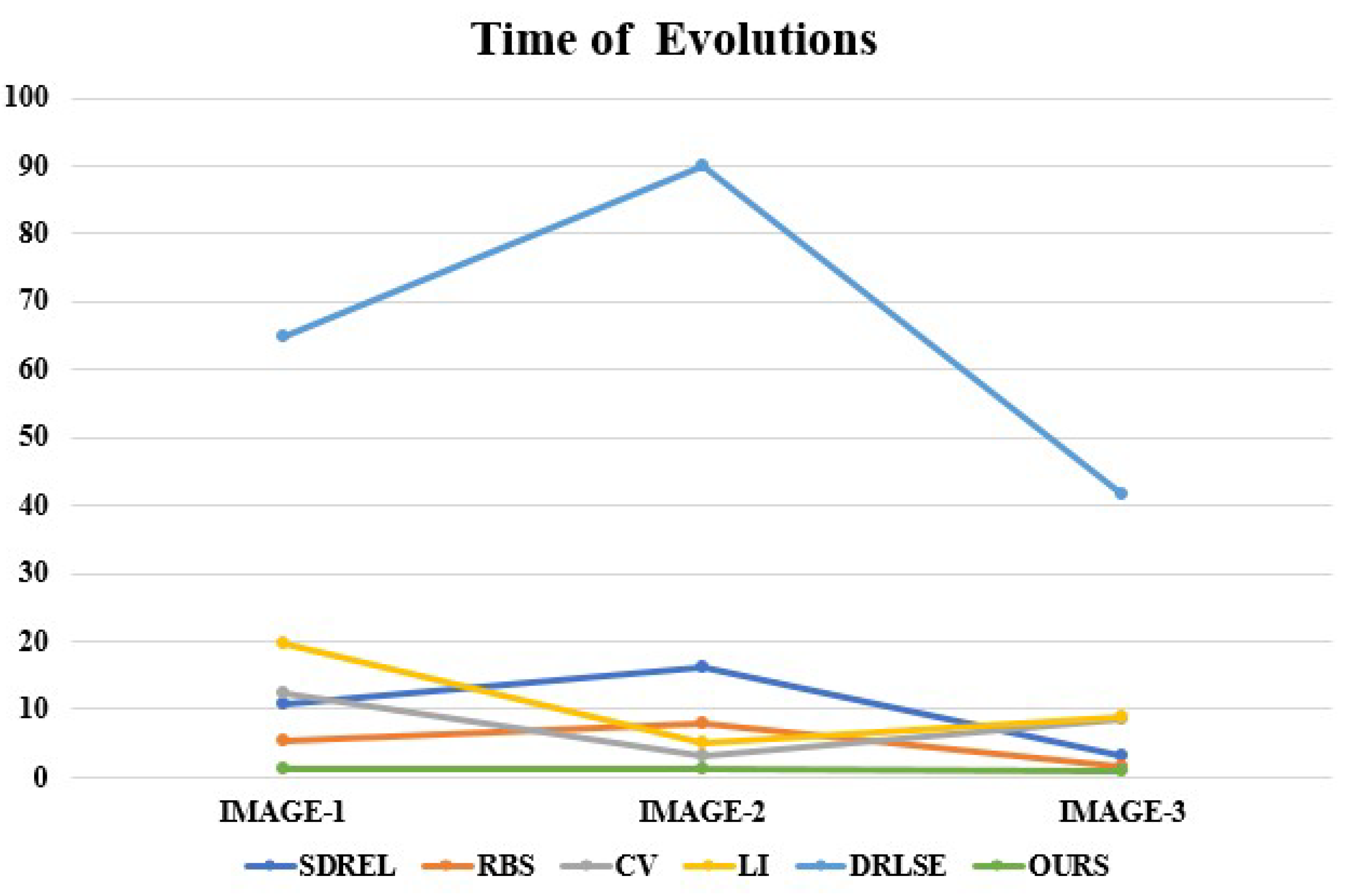

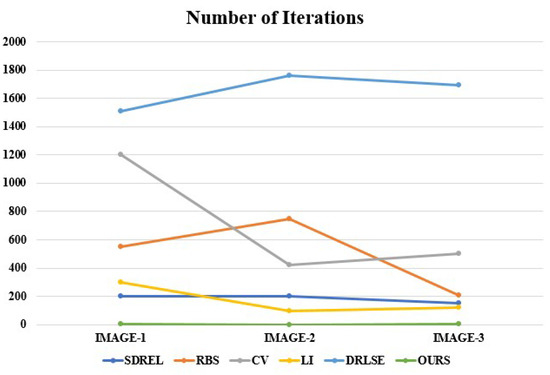

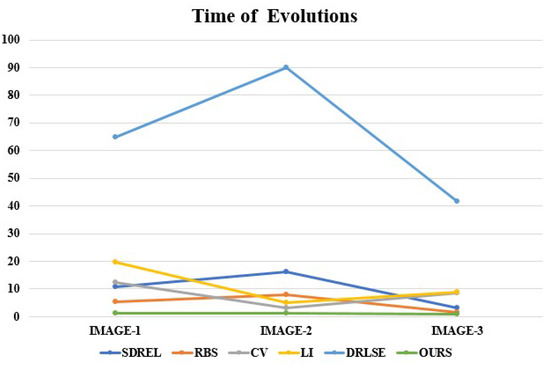

Figure 5 and Figure 6 plot the number of iterations and the evolution time, respectively, as curves. Figure 5 shows that our algorithm needs only one iteration on all three images to detect the initial and final contours of the object. The other state-of-the-art models fail to detect the object boundary in the first iteration. Figure 6 also shows that our proposed algorithm has a much lower computational cost than the other methods.

Figure 5.

Comparison of our algorithm with five methods on the basis of the number of iterations.

Figure 6.

Comparison of our algorithm with five methods on the basis of time in seconds.

Figures 1, 4, and 7 show that our proposed algorithm initializes on the boundary of the object, whereas the other models fail to find the boundary at initialization time. The initialization of the curve plays an important role in object detection.

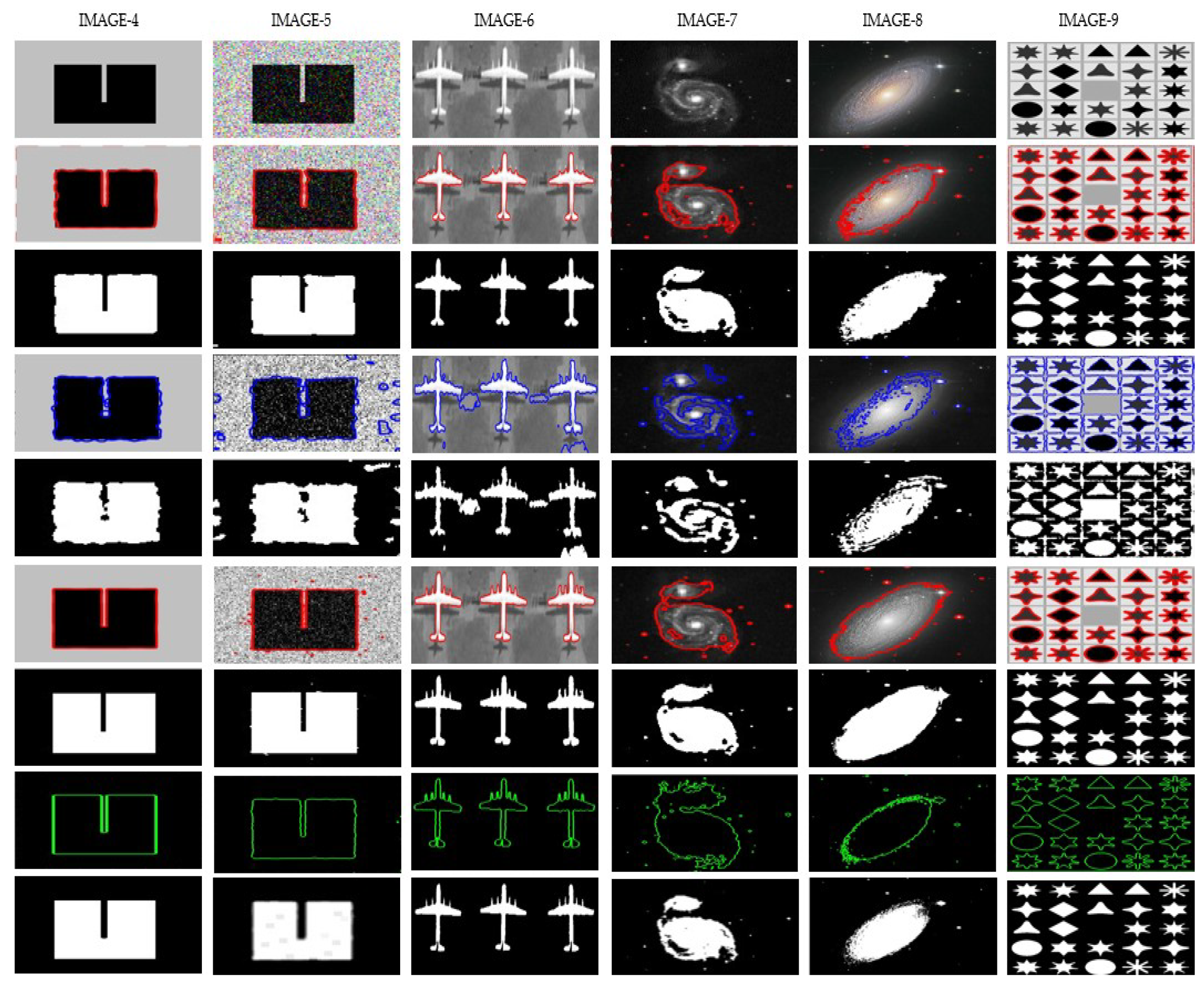

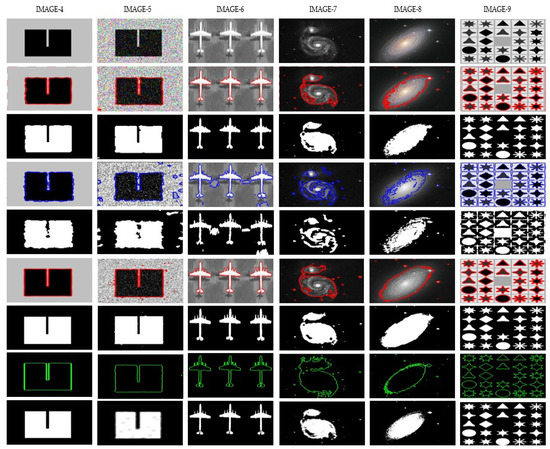

Figure 7.

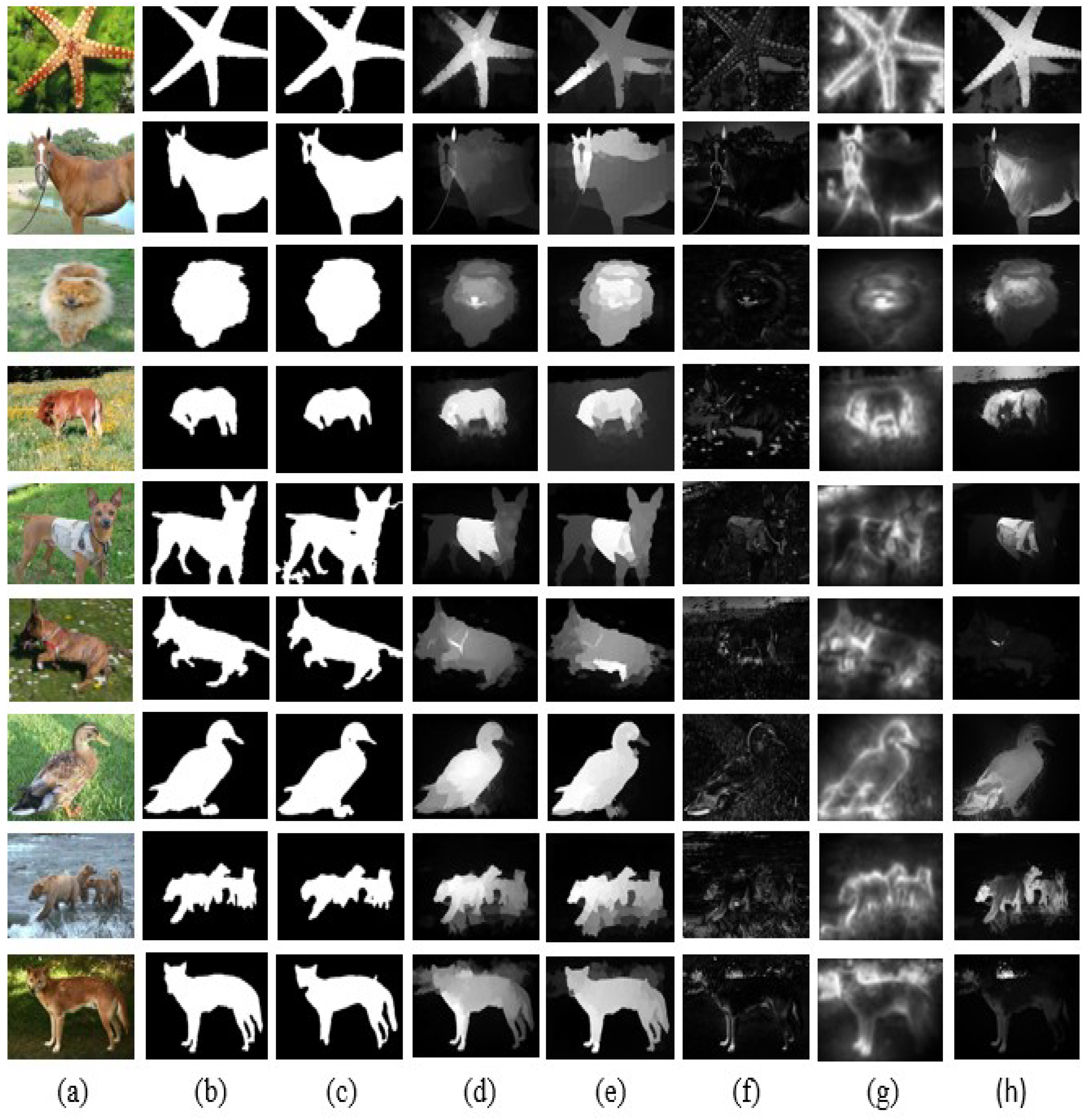

The first row consists of the original images. The second row demonstrates the object contours of the SDREL model, the third row gives the SDREL salience results, the fourth row shows the contours of LFE, the fifth row shows the salience results of the LFE model, the sixth row shows the contour of the LI model, and the salience results are in the seventh row. The eighth row shows the contour results of our algorithm, and the final salience is in the last row.

Next, we conduct experiments on real images. The proposed method is evaluated on the Microsoft Research Asia (MSRA-1000) dataset [53]. MSRA-1000 is a standard dataset for saliency detection, designed to promote research in image saliency through open, metrics-based evaluation. It contains 1000 images with accurate pixelwise ground-truth saliency annotations. The images vary widely in content, although their background structures are mostly simple and smooth. The dataset is available online at https://mmcheng.net/msra10k/. Our algorithm uses fixed values of sigma and alpha throughout the comparison. We evaluate the salient regions produced by our algorithm against five state-of-the-art models using four metrics: the rand index (RI) [54], the global consistency error (GCE), the variation of information (VOI) [55], and the F-measure. We select 886 images from the MSRA dataset, and Table 1 shows the results. The methods involved in the comparison are SDREL [50], RBS [51], the CV model [29], the LI model [52], and the DRLSE model [39]. Our ACCD_SOD achieves the highest RI (higher is better), the lowest GCE and VOI (lower is better), and the highest F-measure. In terms of running time, our algorithm is faster than the compared models.
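RI, GCE, and VOI can all be computed from the contingency table of two label maps. The sketch below is our own NumPy implementation of the standard definitions, with VOI in natural-log units. It is not the evaluation code behind Table 1, and the toy label maps are made up.

```python
import numpy as np


def contingency(a, b):
    """Joint label counts n_ij between two segmentations of the same image."""
    _, a_idx = np.unique(a.ravel(), return_inverse=True)
    _, b_idx = np.unique(b.ravel(), return_inverse=True)
    table = np.zeros((a_idx.max() + 1, b_idx.max() + 1))
    np.add.at(table, (a_idx, b_idx), 1)
    return table


def rand_index(a, b):
    """Fraction of pixel pairs on which the two segmentations agree (higher is better)."""
    n = contingency(a, b)
    pairs = lambda x: x * (x - 1) / 2.0
    total = pairs(n.sum())
    same_both = pairs(n).sum()
    same_a = pairs(n.sum(axis=1)).sum()
    same_b = pairs(n.sum(axis=0)).sum()
    return (total + 2 * same_both - same_a - same_b) / total


def global_consistency_error(a, b):
    """Smaller of the two summed local refinement errors, divided by the pixel count (lower is better)."""
    n = contingency(a, b)
    rows = n.sum(axis=1, keepdims=True)    # segment sizes in a
    cols = n.sum(axis=0, keepdims=True)    # segment sizes in b
    err_ab = np.sum(n * (rows - n) / rows)
    err_ba = np.sum(n * (cols - n) / cols)
    return min(err_ab, err_ba) / n.sum()


def variation_of_information(a, b):
    """VOI = H(A) + H(B) - 2 I(A; B), in nats (lower is better)."""
    p = contingency(a, b) / a.size
    pa, pb = p.sum(axis=1), p.sum(axis=0)
    h_a = -np.sum(pa[pa > 0] * np.log(pa[pa > 0]))
    h_b = -np.sum(pb[pb > 0] * np.log(pb[pb > 0]))
    nz = p > 0
    mutual = np.sum(p[nz] * np.log(p[nz] / np.outer(pa, pb)[nz]))
    return h_a + h_b - 2.0 * mutual


detected = np.array([0, 0, 1, 1])
truth = np.array([0, 0, 0, 1])
print(rand_index(detected, truth),
      global_consistency_error(detected, truth),
      variation_of_information(detected, truth))
```

All three metrics ignore how labels are named. Only the grouping of pixels matters, which is why they suit comparisons against ground-truth masks.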

Table 1.

Statistical Results of Evaluation on the MSRA-1000 Dataset.

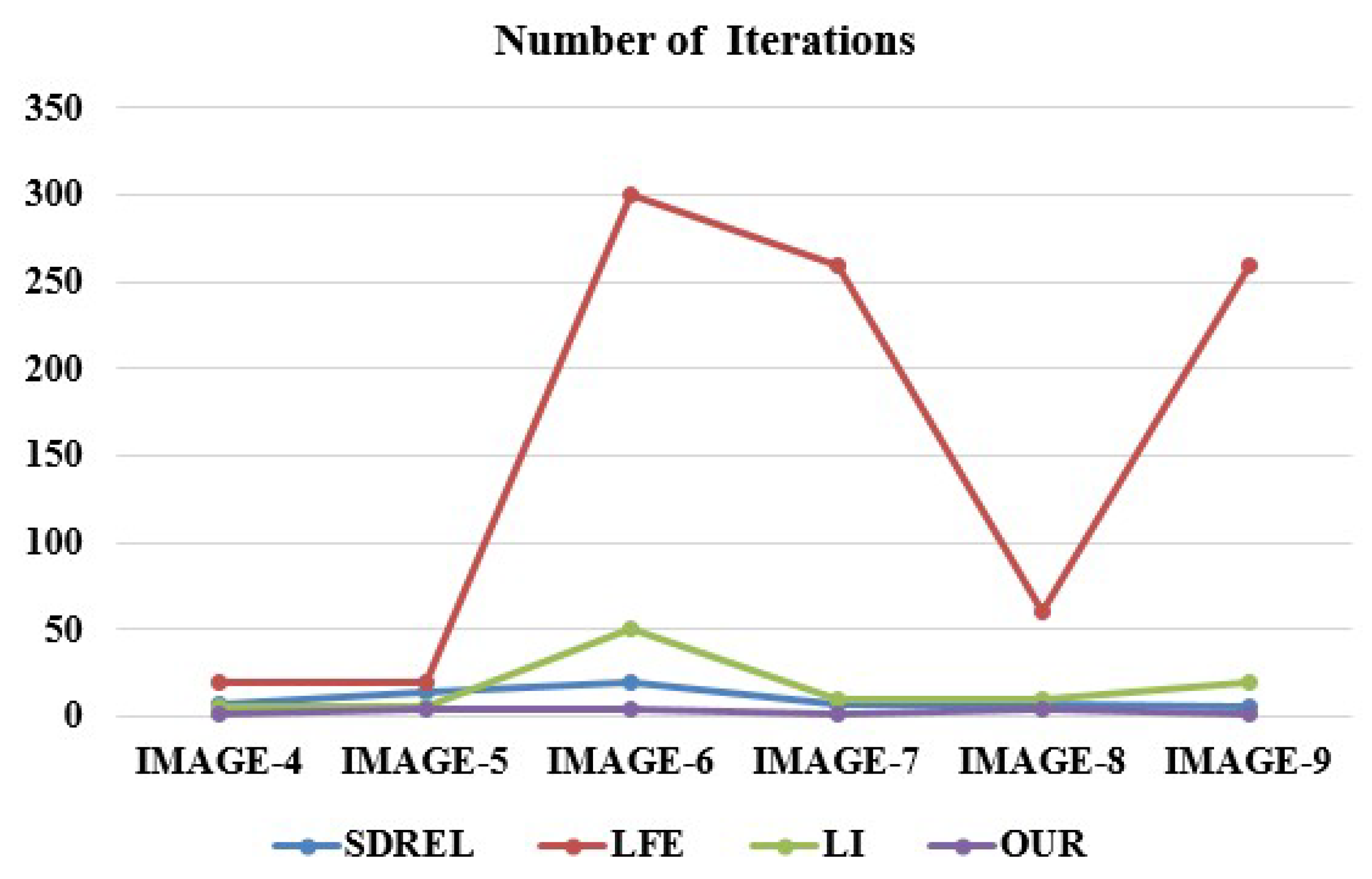

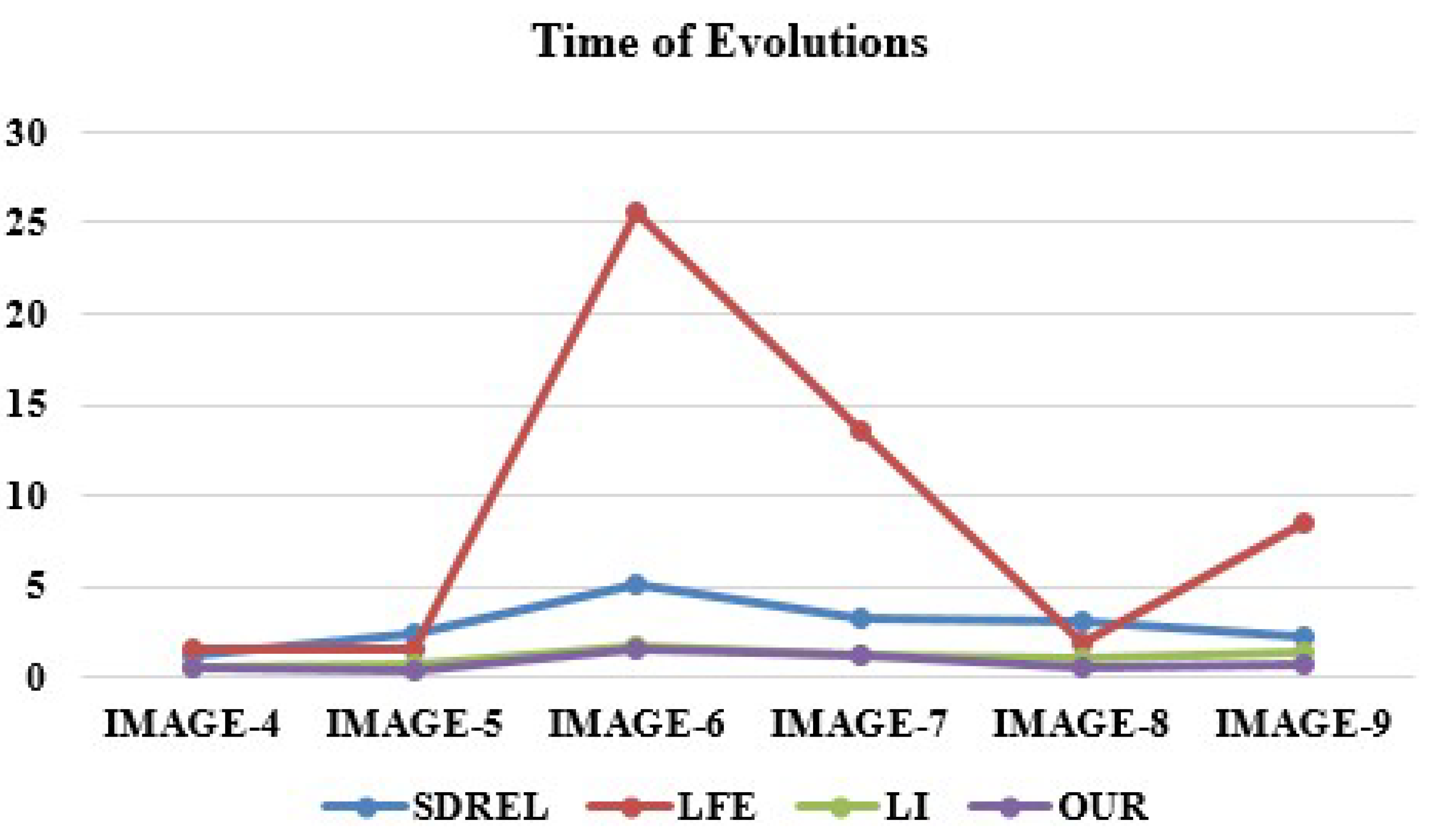

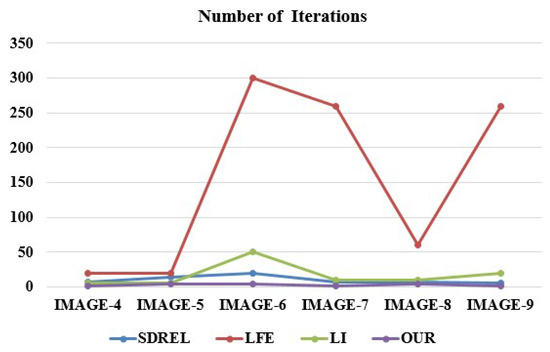

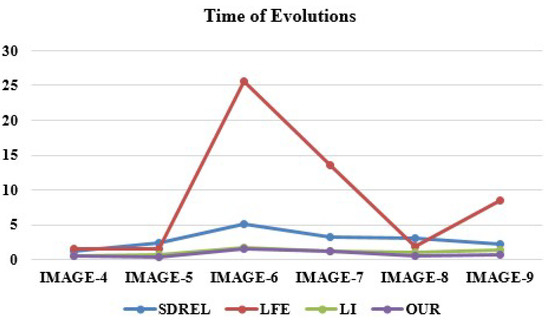

We also compare our ACCD_SOD algorithm with SDREL [50], LFE [40], and LI [52] on the six synthetic images shown in Figure 7. The comparison results based on the number of iterations are summarized in Figure 8, and the evolution times are summarized in Figure 9.

Figure 8.

Comparison of our algorithm with three methods on the basis of the number of iterations.

Figure 9.

Comparison of our algorithm with three state-of-the-art methods on the basis of time in seconds.

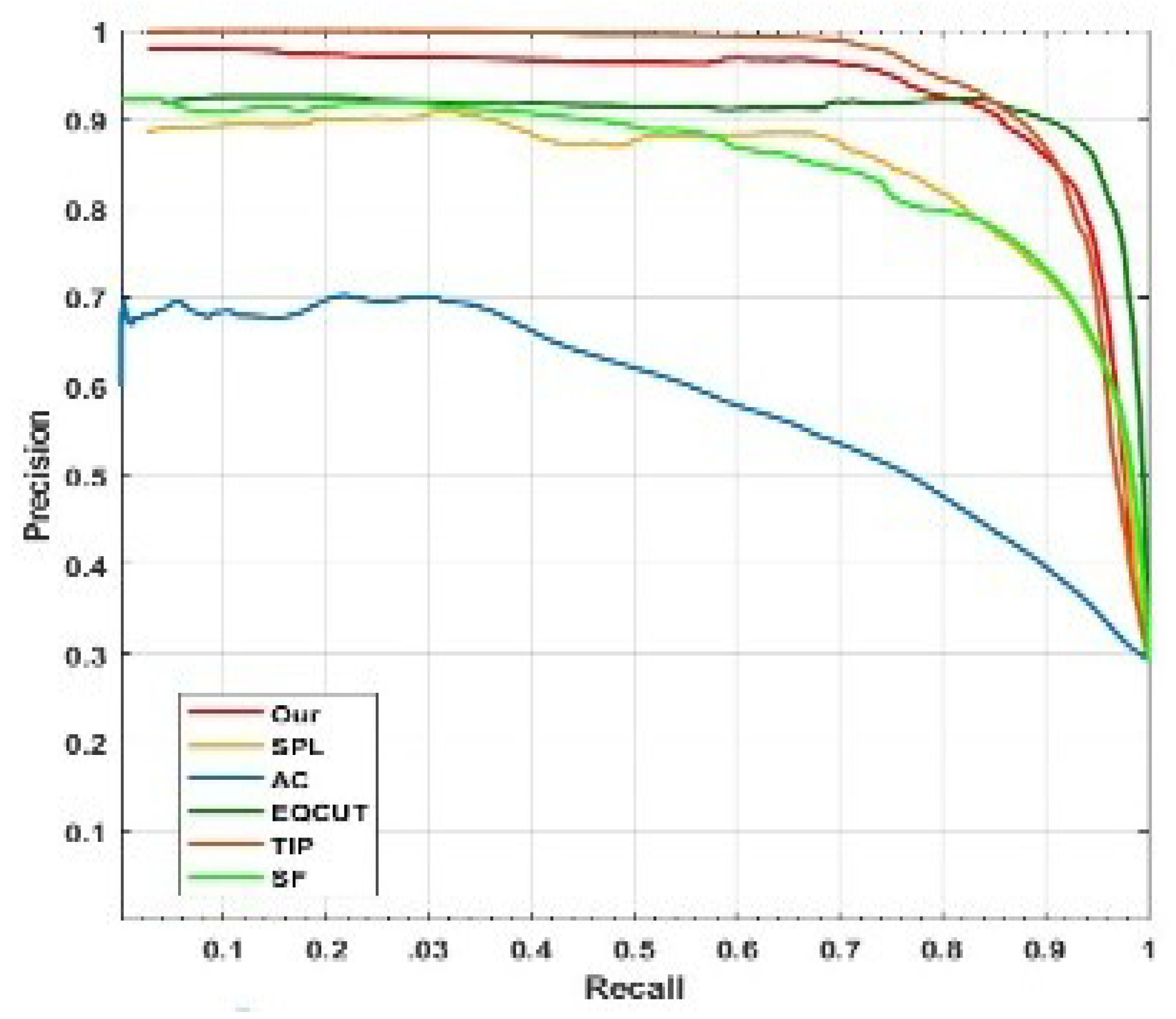

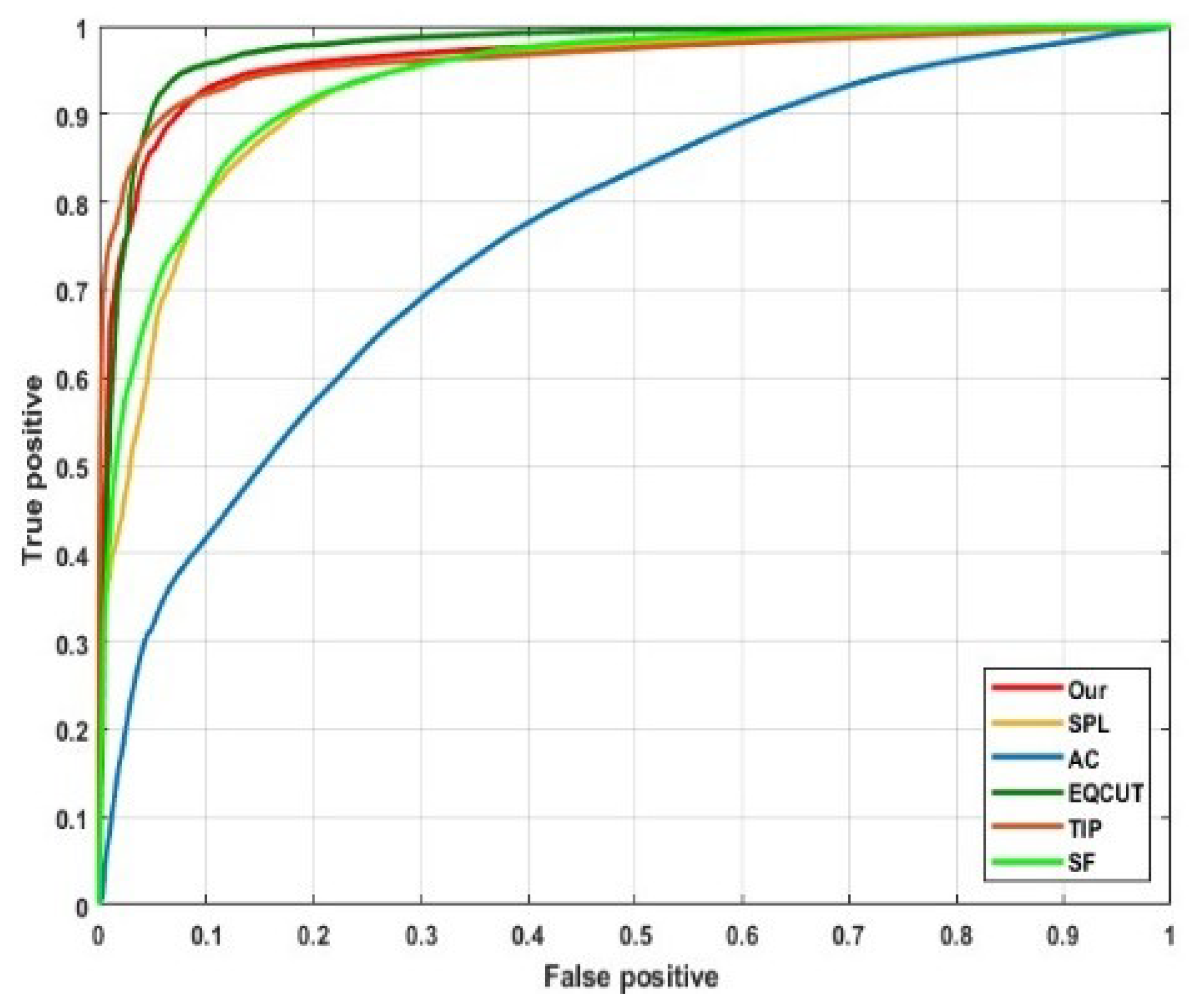

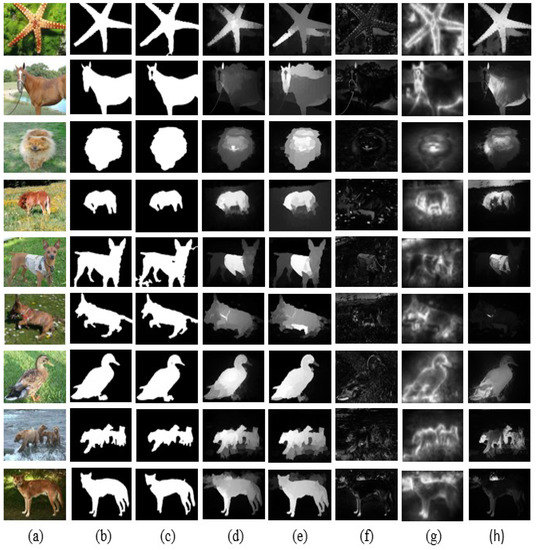

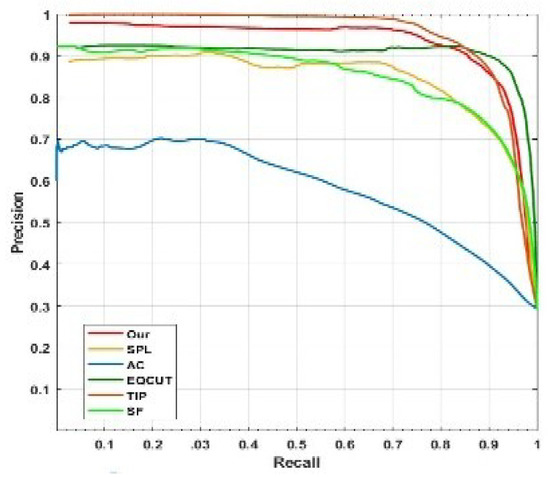

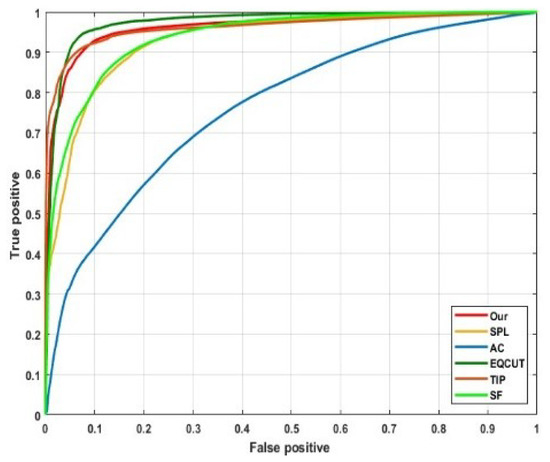

4.3. Comparison with Recent Models without Active Contour

The proposed method is compared qualitatively and quantitatively with several state-of-the-art algorithms. The first is normalized cut-based saliency detection by adaptive multi-level region merging [56], abbreviated TIP after its venue, IEEE Transactions on Image Processing. The others are visual saliency by extended quantum cuts (EQCUT) [57], context-aware saliency detection (CA) [58], saliency detection using maximum symmetric surround [59], abbreviated AC after its first author, Achanta, and saliency filters: contrast-based filtering for salient region detection (SF) [60]. The proposed method is evaluated on part of the MSRA-1000 dataset. The aim of our complex domain algorithm is to enhance the salient region using the active contour. Figure 10 shows the comparison results, Figure 11 shows the precision–recall curves, and Figure 12 shows the curves of true positives versus false positives. The curves show that the proposed algorithm outperforms all the other methods except TIP. Table 2 reports the average running time of each algorithm (images per second) on the MSRA-1000 dataset.

Figure 10.

Qualitative comparison of the salience results of our model with those of five state-of-the-art models. (a) Original images; (b) ground truth; (c) results of our proposed algorithm ACCD_SOD; (d) results of the Transactions on Image Processing (TIP) method; (e) results of Visual saliency by extended quantum cuts (EQCUT); (f) results of the Context-aware saliency detection (CA) model; (g) results of the Achanta (AC) model; and (h) results of the Saliency filters: Contrast based filtering for salient region detection (SF) model.

Figure 11.

Precision-recall (PR) curves comparing the proposed approach with existing ones. Our algorithm outperforms the others, except TIP.

Figure 12.

ROC curves comparing the performance of our proposed algorithm with that of five state-of-the-art models.

Table 2.

Average Runtime (Images Per Second) for Five State-of-the-art Methods and Our Method.

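We summarize the precision–recall curves and report the F-measure, defined by

$$F_\beta = \frac{(1 + \beta^2)\,P \times R}{\beta^2 P + R}.$$

The parameter $\beta^2$ controls the tradeoff between precision $P$ and recall $R$. Precision and recall are calculated as $P = \frac{|S \cap G|}{|S|}$ and $R = \frac{|S \cap G|}{|G|}$, where $S$ is the detected object and $G$ is the ground truth.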
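The following NumPy sketch implements these definitions: $P = |S \cap G|/|S|$, $R = |S \cap G|/|G|$, and $F_\beta = (1 + \beta^2)PR/(\beta^2 P + R)$. A precision–recall curve is obtained by sweeping the binarization threshold. The fixed threshold of 0.5 and the value β² = 0.3 are our assumptions; 0.3 is commonly used in salient object detection. The toy saliency map is made up.

```python
import numpy as np


def precision_recall_f(saliency, ground_truth, threshold=0.5, beta2=0.3):
    """Binarize a saliency map and return (P, R, F_beta) with P = |S & G|/|S|, R = |S & G|/|G|."""
    detected = saliency >= threshold                 # S: detected object
    truth = ground_truth.astype(bool)                # G: ground truth
    overlap = np.logical_and(detected, truth).sum()
    precision = overlap / max(detected.sum(), 1)
    recall = overlap / max(truth.sum(), 1)
    if precision + recall == 0:
        return precision, recall, 0.0
    f_beta = (1 + beta2) * precision * recall / (beta2 * precision + recall)
    return precision, recall, f_beta


def pr_curve(saliency, ground_truth, thresholds):
    """Precision-recall pairs obtained by sweeping the binarization threshold."""
    return [precision_recall_f(saliency, ground_truth, t)[:2] for t in thresholds]


gt = np.zeros((8, 8), dtype=bool)
gt[2:6, 2:6] = True                                  # 16 ground-truth pixels
sal = np.zeros((8, 8))
sal[2:6, 2:4] = 0.9                                  # 8 correct detections
sal[0, 0:8] = 0.9                                    # 8 false detections
print(precision_recall_f(sal, gt))
```

With β² < 1, precision is weighted more heavily than recall, which penalizes saliency maps that spill onto the background.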

4.4. Discussion

In this section, the effectiveness and stability of the proposed algorithm were verified on both synthetic and natural images. The major contribution of our ACCD_SOD model is its use of the complex domain, which simultaneously provides salience cues and locates the exact boundary. The proposed model obtains salience detection results using the global complex force function. Our primary aim is a model that controls initialization irregularities in the active contour and also eliminates expensive re-initialization. Pixelwise salience detection provides such a solution for initialization and the subsequent foreground identification. The model resolves the initialization problem through the complex domain, and it uses the low-pass filter in the complex domain to eliminate re-initialization. The results indicate that our algorithm detects the boundary accurately without repeated iterations or an extra initialization step. Figures 1, 4, and 7 show that the algorithm initializes directly on the object boundary.

Among the compared methods, the DRLSE model relies heavily on initialization and produces numerical errors. The LI model computes the convolutions before the iterations, which reduces the computation time, but it is not robust to the initial contour. The RBS model uses local interior and exterior statistics to initialize every pixel, which increases the computational complexity. The LFE model uses local information to handle intensity inhomogeneity and make acceptable segmentation decisions, but it is sensitive to noise. SDREL fails on images with complex backgrounds, as investigated in [44,45,46,47]. Our results show that the complex domain provides a smooth curve and that its implementation reduces the computational cost compared with the other models.

Our proposed model is more robust than the SDREL, RBS, CV, LI, DRLSE, and LFE models and handles images with intensity inhomogeneity. It avoids re-initialization and thus also reduces the computational cost. The complex force function has a relatively wide capture range and can fit small concavities. We observe that our complex domain approach achieves encouraging results for both strong and weak objects, and it can be applied to images of different modalities. However, in the presence of weak objects, the proposed approach detects only approximate boundaries. Its accuracy therefore decreases for poor-quality, low-quality, or heavily blurred images. Heavy noise also reduces accuracy because it corrupts the object edges. Our proposed model is not suitable for textured images.

Parameter setting plays an important role in the final result of ACMs, and choosing proper parameters can substantially improve the performance of an active contour model. If the parameter α is too large, the propagation of the dynamic interface becomes uncontrollable; if α is too small, the contour loses its propagation speed. Choosing a proper value of α is therefore difficult, because both extremes degrade the performance and the results. We set α to a fixed value of 0.4 for all synthetic and natural images. The computation parameter σ must also be chosen carefully: a very small σ makes the model sensitive to noise, whereas a large σ increases the computational cost and causes edge leakage. Therefore, to obtain a more precise saliency result, we set σ to a comparatively small value, which gives stable propagation. Our model uses fixed parameters and performs well compared with state-of-the-art models. Local tests show that better results can be obtained if the parameters are optimized for each individual image.
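The σ trade-off described above is generic to Gaussian low-pass filtering and can be illustrated on a 1D noisy step edge. The sketch below assumes a Gaussian kernel (SciPy's `gaussian_filter1d`) as the low-pass filter; it is not the authors' filter, and the signal length, noise level and σ values are arbitrary choices. For each σ it reports the residual noise in a flat region and the width of the blurred edge: the residual noise falls as σ grows while the edge widens, which is one mechanism behind the noise sensitivity at small σ and the edge leakage at large σ.

```python
import numpy as np
from scipy.ndimage import gaussian_filter1d


def sigma_tradeoff(sigma, n=4000, noise_std=0.2, seed=0):
    """Return (residual noise in a flat region, width of the blurred edge) after
    Gaussian low-pass filtering a noisy 1D step edge with standard deviation sigma."""
    rng = np.random.default_rng(seed)
    clean = np.where(np.arange(n) < n // 2, 0.0, 1.0)  # ideal step edge at n // 2
    noisy = clean + rng.normal(0.0, noise_std, n)

    # Residual noise: standard deviation of the filtered signal in the flat region,
    # stopping well before the edge so that edge blurring does not bias the estimate.
    smoothed_noisy = gaussian_filter1d(noisy, sigma)
    residual_noise = float(np.std(smoothed_noisy[: n // 2 - 100]))

    # Edge width: number of samples of the filtered clean step between 10% and 90%.
    smoothed_clean = gaussian_filter1d(clean, sigma)
    edge_width = int(np.count_nonzero((smoothed_clean > 0.1) & (smoothed_clean < 0.9)))
    return residual_noise, edge_width


results = {sigma: sigma_tradeoff(sigma) for sigma in (0.5, 2.0, 8.0)}
for sigma, (noise, width) in results.items():
    print(f"sigma={sigma:>4}: residual noise={noise:.3f}, edge width={width} samples")
```

Because the same seed is used for every σ, the comparison is made on one fixed noise realization, so the differences come from the filter alone.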

5. Conclusions and Future Research

In this paper, a new complex domain model is proposed that uses a low-pass filter as a penalty term and a complex force function to distinguish the object from the background for the active contour. No single method succeeds on all types of images, because detection results depend on many factors, such as texture, intensity distribution, image content, and problem domain. We focus on the initialization and re-initialization problems and propose a novel complex-domain-based transformation for joint salience detection and object boundary detection. First, we transform the input image to the complex domain; the complex transformation provides salience cues. Next, we use a low-pass filter to distinguish the salient region from the background regions. Finally, the complex force function is used to fine-tune the detected boundary. We compared the proposed model with state-of-the-art techniques and found that it outperforms the other methods and produces better results at low computational cost.
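The complex transformation itself is not specified in this section. One established way to move an image into the complex domain is the linear complex diffusion of Gilboa et al. [48]: for a small phase angle θ, the real part of the solution approaches Gaussian smoothing, while the imaginary part approaches a scaled, smoothed Laplacian that responds at edges. The sketch below implements that scheme with an explicit finite-difference update. It is offered only to illustrate how a complex representation can yield a boundary cue; it is not claimed to be the ACCD_SOD transformation, and θ, the time step `dt` and the iteration count are assumptions.

```python
import numpy as np


def laplacian(u):
    """5-point Laplacian with replicated borders (zero-flux boundary condition)."""
    p = np.pad(u, 1, mode="edge")
    return p[:-2, 1:-1] + p[2:, 1:-1] + p[1:-1, :-2] + p[1:-1, 2:] - 4.0 * u


def complex_diffusion(image, theta=np.pi / 30, dt=0.1, n_iter=50):
    """Explicit scheme for linear complex diffusion u_t = exp(i*theta) * Laplacian(u).

    For small theta, the real part behaves like Gaussian smoothing of the image and
    the imaginary part like a scaled, smoothed Laplacian that is non-zero near edges.
    Von Neumann analysis gives stability only for dt <= 0.25 * cos(theta)."""
    c = np.exp(1j * theta)
    u = image.astype(complex)
    for _ in range(n_iter):
        u = u + dt * c * laplacian(u)
    return u


# Toy example: vertical step edge between columns 15 and 16 of a 32 x 32 image.
image = np.zeros((32, 32))
image[:, 16:] = 1.0
u = complex_diffusion(image)
edge_cue = u.imag   # positive on the dark side of the edge, negative on the bright side
smoothed = u.real   # approximately a Gaussian-smoothed copy of the image
```

Far from the edge the imaginary part stays close to zero, so its magnitude acts as a crude boundary cue.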

Based on the analysis in this study, we offer the following recommendations for future research on ACMs in the complex domain. First, the complex domain can be applied in other areas; for example, it could help to extract more local information efficiently from medical images. Second, initialization and parameter settings play a very important role in the final results. Parameter-optimization algorithms should be studied, and more suitable initialization methods for active contour models in the complex domain should be developed to reduce the computational cost. Finally, combining the complex domain with other theories is a feasible and efficient way to address the deficiencies of ACMs; for example, the complex domain could be combined with a neural network to achieve higher accuracy.

Author Contributions

The authors’ contributions are as follows: U.S.K. conceived the algorithm, designed and implemented the experiments, and wrote the manuscript; X.Z. provided resources; and Y.S. reviewed and edited the manuscript. All authors have read and agreed to the published version of the manuscript.

Funding

This work is supported by the National Key Research and Development Program of China under Grant 2016YFB0200902.

Conflicts of Interest

The authors declare no conflicts of interest.

References

- Gong, M.; Qian, Y.; Cheng, L. Integrated Foreground Segmentation and Boundary Matting for Live Videos. IEEE Trans. Image Process. 2015, 24, 1356–1370.

- Qian, Y.; Gong, M.; Cheng, L. STOCS: An efficient self-tuning multiclass classification approach. In Applications of Evolutionary Computation; Springer International Publishing: Cham, Switzerland, 2015; Volume 9091, pp. 291–306.

- Qian, Y.; Yuan, H.; Gong, M. Budget-driven big data classification. In Applications of Evolutionary Computation; Springer International Publishing: Cham, Switzerland, 2015; Volume 9091, pp. 71–83.

- Itti, L.; Koch, C.; Niebur, E. A model of saliency-based visual attention for rapid scene analysis. IEEE Trans. Pattern Anal. Mach. Intell. 1998, 20, 1254–1259.

- Yin, S.; Zhang, Y.; Karim, S. Large scale remote sensing image segmentation based on fuzzy region competition and Gaussian mixture model. IEEE Access 2018, 6, 26069–26080.

- Singh, D.; Mohan, C.K. Graph formulation of video activities for abnormal activity recognition. Pattern Recognit. 2017, 65, 265–272.

- Amit, Y. 2D Object Detection and Recognition; MIT Press: Cambridge, MA, USA, 2002.

- Shan, X.; Gong, X.; Nandi, A.K. Active contour model based on local intensity fitting energy for image segmentation and bias estimation. IEEE Access 2018, 6, 49817–49827.

- Ammar, A.; Bouattane, O.; Youssfi, M. Review and comparative study of three local based active contours optimizers for image segmentation. In Proceedings of the 2019 5th International Conference on Optimization and Applications (ICOA), Kenitra, Morocco, 25–26 April 2019; Institute of Electrical and Electronics Engineers (IEEE): Piscataway, NJ, USA, 2019; pp. 1–6.

- Zhang, K.; Zhang, L.; Lam, K.-M.; Zhang, L. A Level Set Approach to Image Segmentation with Intensity Inhomogeneity. IEEE Trans. Cybern. 2016, 46, 546–557.

- Yu, H.-P.; He, F.; Pan, Y. A novel segmentation model for medical images with intensity inhomogeneity based on adaptive perturbation. Multimedia Tools Appl. 2018, 78, 11779–11798.

- Lopez-Alanis, A.; Lizarraga-Morales, R.A.; Sanchez-Yanez, R.E.; Martinez-Rodriguez, D.E.; Contreras-Cruz, M.A. Visual Saliency Detection Using a Rule-Based Aggregation Approach. Appl. Sci. 2019, 9, 2015.

- Yang, L.; Xin, D.; Zhai, L.; Yuan, F.; Li, X. Active Contours Driven by Visual Saliency Fitting Energy for Image Segmentation in SAR Images. In Proceedings of the 2019 IEEE 4th International Conference on Cloud Computing and Big Data Analysis (ICCCBDA), Chengdu, China, 12–15 April 2019; Institute of Electrical and Electronics Engineers (IEEE): Piscataway, NJ, USA, 2019; pp. 393–397.

- Zhu, X.; Xu, X.; Mu, N. Saliency detection based on the combination of high-level knowledge and low-level cues in foggy images. Entropy 2019, 21, 374.

- Xia, X.; Lin, T.; Chen, Z.; Xu, H. Salient object segmentation based on active contouring. PLoS ONE 2017, 12, e0188118.

- Li, N.; Bi, H.; Zhang, Z.; Kong, X.; Lü, D. Performance comparison of saliency detection. Adv. Multimedia 2018, 2018, 1–13.

- Zhu, G.; Wang, Q.; Yuan, Y. Tag-Saliency: Combining bottom-up and top-down information for saliency detection. Comput. Vis. Image Underst. 2014, 118, 40–49.

- Borji, A.; Sihite, D.N.; Itti, L. Probabilistic learning of task-specific visual attention. In Proceedings of the 2012 IEEE Conference on Computer Vision and Pattern Recognition, Providence, RI, USA, 16–21 June 2012; Institute of Electrical and Electronics Engineers (IEEE): Piscataway, NJ, USA, 2012; pp. 470–477.

- Koch, C.; Ullman, S. Shifts in selective visual attention: Towards the underlying neural circuitry. In Matters of Intelligence; Springer: Dordrecht, The Netherlands, 1987; pp. 115–141.

- Ma, Y.-F.; Zhang, H.-J. Contrast-Based Image Attention Analysis by Using Fuzzy Growing. In Proceedings of the Eleventh ACM International Conference on Multimedia, Berkeley, CA, USA, November 2003; pp. 374–381.

- Bruce, N.; Tsotsos, J. Saliency based on information maximization. In Advances in Neural Information Processing Systems; MIT Press: Cambridge, MA, USA, 2006; pp. 155–162.

- Cheng, M.-M.; Mitra, N.J.; Huang, X.; Torr, P.H.S.; Hu, S.-M. Global Contrast Based Salient Region Detection. IEEE Trans. Pattern Anal. Mach. Intell. 2015, 37, 569–582.

- Judd, T.; Ehinger, K.; Durand, F.; Torralba, A. Learning to predict where humans look. In Proceedings of the 2009 IEEE 12th International Conference on Computer Vision, Kyoto, Japan, 29 September–2 October 2009; Institute of Electrical and Electronics Engineers (IEEE): Piscataway, NJ, USA, 2009; pp. 2106–2113.

- Xie, Y.; Lu, H.; Yang, M.-H. Bayesian Saliency via Low and Mid Level Cues. IEEE Trans. Image Process. 2012, 22, 1689–1698.

- Chan, T.; Vese, L. An active contour model without edges. In International Conference on Scale-Space Theories in Computer Vision; Springer: Berlin/Heidelberg, Germany, 1999; pp. 141–151.

- Lie, J.; Lysaker, M.; Tai, X.-C. A binary level set model and some applications to Mumford-Shah image segmentation. IEEE Trans. Image Process. 2006, 15, 1171–1181.

- Ronfard, R. Region-based strategies for active contour models. Int. J. Comput. Vis. 1994, 13, 229–251.

- Samson, C.; Blanc-Feraud, L.; Aubert, G.; Zerubia, J. A variational model for image classification and restoration. IEEE Trans. Pattern Anal. Mach. Intell. 2000, 22, 460–472.

- Chan, T.F.; Vese, L. Active contours without edges. IEEE Trans. Image Process. 2001, 10, 266–277.

- Xu, J.; Wang, H.; Cui, C.; Liu, P.; Zhao, Y.; Li, B. Oil spill segmentation in ship-borne radar images with an improved active contour model. Remote Sens. 2019, 11, 1698.

- Wu, H.; Liu, L.; Lan, J. 3D flow entropy contour fitting segmentation algorithm based on multi-scale transform contour constraint. Symmetry 2019, 11, 857.

- Fang, J.; Liu, H.; Zhang, L.; Liu, J.; Liu, H. Active contour driven by weighted hybrid signed pressure force for image segmentation. IEEE Access 2019, 7, 97492–97504.

- Wong, O.Q.; Rajendran, P. Image Segmentation using Modified Region-Based Active Contour Model. J. Eng. Appl. Sci. 2019, 14, 5710–5718.

- Li, Y.; Cao, G.; Wang, T.; Cui, Q.; Wang, B. A novel local region-based active contour model for image segmentation using Bayes theorem. Inf. Sci. 2020, 506, 443–456.

- Kimmel, R.; Amir, A.; Bruckstein, A. Finding shortest paths on surfaces using level sets propagation. IEEE Trans. Pattern Anal. Mach. Intell. 1995, 17, 635–640.

- Caselles, V.; Kimmel, R.; Sapiro, G. Geodesic active contours. Int. J. Comput. Vis. 1997, 22, 61–79.

- Vese, L.; Chan, T.F. A multiphase level set framework for image segmentation using the Mumford and Shah model. Int. J. Comput. Vis. 2002, 50, 271–293.

- Li, C.; Kao, C.-Y.; Gore, J.C.; Ding, Z. Implicit Active Contours Driven by Local Binary Fitting Energy. In Proceedings of the 2007 IEEE Conference on Computer Vision and Pattern Recognition, Minneapolis, MN, USA, 17–22 June 2007; Institute of Electrical and Electronics Engineers (IEEE): Piscataway, NJ, USA, 2007; pp. 1–7.

- Li, C.; Xu, C.; Gui, C.; Fox, M.D. Distance regularized level set evolution and its application to image segmentation. IEEE Trans. Image Process. 2010, 19, 3243–3254.

- Zhang, K.; Song, H.; Zhang, K. Active contours driven by local image fitting energy. Pattern Recognit. 2010, 43, 1199–1206.

- Zhang, K.; Zhang, K.; Song, H.; Zhou, W. Active contours with selective local or global segmentation: A new formulation and level set method. Image Vis. Comput. 2010, 28, 668–676.

- Gobbino, M. Finite difference approximation of the Mumford-Shah functional. Courant Inst. Math. Sci. 1998, 51, 197–228.

- Osher, S.; Fedkiw, R.; Piechor, K. Level set methods and dynamic implicit surfaces. Appl. Mech. Rev. 2004, 57, B15.

- Schrödinger, E. Energieaustausch nach der Wellenmechanik. Ann. der Phys. 1927, 388, 956–968.

- Cross, M.C.; Hohenberg, P.C. Pattern formation outside of equilibrium. Rev. Mod. Phys. 1993, 65, 851–1112.

- Newell, A.C. Nonlinear wave motion. LApM 1974, 15, 143–156.

- Tanguay, P.N. Quantum wave function realism, time, and the imaginary unit i. Phys. Essays 2015.

- Gilboa, G.; Sochen, N.; Zeevi, Y. Image enhancement and denoising by complex diffusion processes. IEEE Trans. Pattern Anal. Mach. Intell. 2004, 26, 1020–1036.

- Hussain, S.; Khan, M.S.; Asif, M.R.; Chun, Q. Active contours for image segmentation using complex domain-based approach. IET Image Process. 2016, 10, 121–129.

- Zhi, X.-H.; Shen, H.-B. Saliency driven region-edge-based top down level set evolution reveals the asynchronous focus in image segmentation. Pattern Recognit. 2018, 80, 241–255.

- Lankton, S.; Tannenbaum, A. Localizing region-based active contours. IEEE Trans. Image Process. 2008, 17, 2029–2039.

- Li, C.; Kao, C.-Y.; Gore, J.C.; Ding, Z. Minimization of region-scalable fitting energy for image segmentation. IEEE Trans. Image Process. 2008, 17, 1940–1949.

- Shi, J.; Yan, Q.; Xu, L.; Jia, J. Hierarchical image saliency detection on extended CSSD. IEEE Trans. Pattern Anal. Mach. Intell. 2015, 38, 717–729.

- Rand, W.M. Objective criteria for the evaluation of clustering methods. J. Am. Stat. Assoc. 1971, 66, 846–850.

- Martin, D.; Fowlkes, C.; Tal, D.; Malik, J. A Database of Human Segmented Natural Images and Its Application to Evaluating Segmentation Algorithms and Measuring Ecological Statistics. In Proceedings of the Eighth IEEE International Conference on Computer Vision, Vancouver, BC, Canada, 7–14 July 2001.

- Fu, K.; Gong, C.; Gu, I.Y.-H.; Yang, J. Normalized cut-based saliency detection by adaptive multi-level region merging. IEEE Trans. Image Process. 2015, 24, 5671–5683.

- Aytekin, Ç.; Ozan, E.C.; Kiranyaz, S.; Gabbouj, M. Visual saliency by extended quantum cuts. In Proceedings of the 2015 IEEE International Conference on Image Processing (ICIP), Quebec City, QC, Canada, 27–30 September 2015; pp. 1692–1696.

- Goferman, S.; Zelnik-Manor, L.; Tal, A. Context-aware saliency detection. IEEE Trans. Pattern Anal. Mach. Intell. 2011, 34, 1915–1926.

- Achanta, R.; Susstrunk, S. Saliency detection using maximum symmetric surround. In Proceedings of the 2010 IEEE International Conference on Image Processing, Hong Kong, China, 26–29 September 2010; pp. 2653–2656.

- Perazzi, F.; Krähenbühl, P.; Pritch, Y.; Hornung, A. Saliency filters: Contrast based filtering for salient region detection. In Proceedings of the 2012 IEEE Conference on Computer Vision and Pattern Recognition, Providence, RI, USA, 16–21 June 2012; Institute of Electrical and Electronics Engineers (IEEE): Piscataway, NJ, USA, 2012; pp. 733–740.

© 2020 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).