Abstract

For more than a decade, both academia and industry have focused attention on the computer vision and in particular the computational color constancy (CVCC). The CVCC is used as a fundamental preprocessing task in a wide range of computer vision applications. While our human visual system (HVS) has the innate ability to perceive constant surface colors of objects under varying illumination spectra, the computer vision is facing the color constancy challenge in nature. Accordingly, this article proposes novel convolutional neural network (CNN) architecture based on the residual neural network which consists of pre-activation, atrous or dilated convolution and batch normalization. The proposed network can automatically decide what to learn from input image data and how to pool without supervision. When receiving input image data, the proposed network crops each image into image patches prior to training. Once the network begins learning, local semantic information is automatically extracted from the image patches and fed to its novel pooling layer. As a result of the semantic pooling, a weighted map or a mask is generated. Simultaneously, the extracted information is estimated and combined to form global information during training. The use of the novel pooling layer enables the proposed network to distinguish between useful data and noisy data, and thus efficiently remove noisy data during learning and evaluating. The main contribution of the proposed network is taking CVCC to higher accuracy and efficiency by adopting the novel pooling method. The experimental results demonstrate that the proposed network outperforms its conventional counterparts in estimation accuracy.

1. Introduction

The appearance of an object’s color is often influenced by surface spectral reflectance, illumination condition and relative position, which makes it very challenging for the computer vision to recognize an object in both still image and video. However, the computer vision can benefit from adopting the computational color constancy (CVCC) as a pre-processing step which enables the recorded colors of the object to stay relatively constant under different illumination conditions. Obviously, color plays a large part in the performance of computer vision applications such as human computer vision, color feature extraction, and color appearance model [,]. However, it is imperative to cope with undesirable effects arising from the significant impact of the illumination color on the perceived color of an object in a real-life scene. While the human visual system (HVS) has the innate ability to recognize the actual color of an object even under different light source colors, the computer vision finds it tough and challenging to identify the actual color of an object under the influence of changing illumination conditions. In an effort to mimic the HVS, the CVCC is designed to predict the actual color of an object in a real-world scene independent of varying illuminant conditions. The CVCC algorithms roughly fall into three categories: statistics-based, physics-based, and learning-based methods.

For several decades, the statics-based method has dominated the CVCC technology and the three best-known algorithms are the gray-world [], the shades-of-gray [] and the max-RGB (red, green, blue) [] or the gray-edge []. They have a strong empirical assumption based on the statistics of real color images. There are some other statistics-based techniques which also contributed to solving the color constancy problem of the computer vision [,,]. The physics-based method has mainly evolved from the dichromatic reflectance model of Shafer [,]. This method uses accurate reflection models but is still required to take complicated additional steps such as specularity estimation [] or image segmentation []. The learning-based method includes Gamut mapping methods [,,,] and a recent patch-based approach []. The recent patch-based approach is intended to estimate the color of a light source in the local region. In this approach, the network is given a set of ground truth regions and is designed to learn and minimize their differences from the local regions. The learning-based algorithms produce state-of-the-art results but come with several drawbacks. For instance, to implement such a network, the computer is required to have a memory capable of storing thousands of patches. In addition, they need to take complicated steps to estimate local and global light sources, such as segmentation, feature extraction, and calculation of the nearest neighbor to the training set. However, Finlayson proposes the fastest learning-based method []. The key idea of his method is to apply the traditional gray-world assumption to estimating the color of the light source and devise a matrix to correct resultant estimation error. The network builds the matrix through dataset learning. The network learns colors and edge moments of a given image and as a result generates the elements of the matrix. Furthermore, Bianco and his colleagues [] achieved state-of-the-art color constancy results by introducing a new method which uses a convolutional neural network. Their network has three parts: one convolutional layer for max pooling, one fully connected layer, and three output nodes. With their network, illumination estimation and fine-tuning are conducted on an image basis, not on a patch basis, and the purpose of fine-tuning is to minimize learning loss. This approach achieves a successful outcome from experimenting with one specific dataset only, so it needs to further experiment with more datasets. In addition, Lou et al. [] proposed a deep convolutional neural network (DCNN) that is pre-trained to classify the big ImageNet dataset with labels. The performance of the network is assessed by hand-crafted color constancy algorithms. In the DCNN, ground truth labels are used to fine-tune each single dataset.

As overviewed above, there has been a decent amount of color constancy research and a number of proposed approaches. Given the structural nature of the computer vision, some challenges remain unsolved. More recently, Gijsenij et al. [] proposed a scene semantics-based color constancy method where natural image statistics are used to identify the most important characteristics of color images. Akbarinia et al. [] suggested a color constancy method that intends to overlap two asymmetric Gaussian kernels of different sizes in a similar way of changing the receptive field (RF) and the kernels come in different sizes depending on the contrast of surrounding pixels. Hu and colleagues [] introduced a color constancy method which uses AlexNet and SqueezeNet in estimating illumination. Their color constancy method outperforms conventional methods by delivering state-of-the-art results. Despite their cutting-edge performance, the methodology is still with some inherent problems such as overfitting, gradient degradation, and vanishing gradient. Hussain et al. [] proposed a color constancy method in which a histogram-based algorithm was used to determine an appropriate number of segments and efficiently split an input image into its key color variation areas. Zhan and colleagues [] researched convolutional neural networks (CNNs) which use cross-level architecture for color constancy.

In this light, this article proposes a new network architecture-based approach and the new architecture uses the residual neural network which consists of pre-activation, atrous or dilated convolution and batch normalization. When receiving input image data, the proposed network crops each image into image patches before training. Once the network begins training, local semantic information is automatically extracted from the image patches and fed to put its novel pooling layer. Simultaneously, the extracted information is estimated and combined to form global information during training. While conventional patch-based CNNs handle patches sequentially and individually, the proposed network takes into account all image patches simultaneously, which makes it more efficient and simpler for the network to compare and learn patches during training. The illumination estimation with the use of the image patches is formulated in this work.

Among the CNN-based color constancy approaches, some methods estimate illumination based on local image patches like the proposed approach in this work, while others rely on full image data in its entirety. In the case of the latter, the full image data comes in the form of various chroma histograms. When the network takes the full image data in chroma histograms, the convolutional filters learn to assess and identify possible illumination color solutions in chroma plane. However, spatial information is only weakly encoded in these histograms, and thus semantic context is largely ignored. When considering semantic information at the global level, it is difficult for the network to learn and discern the significance of a semantically valuable local region. To supplement this, researchers have proposed conventional convolutional networks [,] designed to extract and pool local features. Especially in a study by Hu and colleagues [], the authors proposed a pooling method to extract the local confidence region from the original image and thus to form a weighted map or a mask. By using fully connected CNNs, their color constancy method shows better performance relative to its conventional counterparts. Yet it is important to challenge the estimation accuracy of the weighted map in their approach. In the fully connected layer method, each convolutional layer gets the input of all the features combined as a result of output in an earlier layer and each convolutional layer relies on local spatial coherence with a small receptive field. On the other hand, the fully connected layers have several well-known vital problems and incur incredibly high computational cost. Motivated to solve these problems, the proposed CNN method uses the residual network to improve the estimation accuracy and reduce expensive computational cost. In addition, the proposed network employs a pooling mechanism to reduce estimation ambiguities as in previous studies [,].

With patch processing and semantic pooling together, the proposed network is able to distinguish between useful data and noisy data during training and evaluating. In the proposed network, semantic pooling designed to extract local semantic information from the original image is performed to form a mask and the resulting image turns out a weighted map. By enabling the network to learn the semantic information in the local region and remove noisy data, the proposed color constancy approach becomes more robust to estimation ambiguities. In addition, the proposed network features end-to-end training, direct processing of arbitrary-sized images and faster computation.

To the best of our knowledge, the proposed approach is the first study to investigate and use the residual network-based CNNs to achieve color constancy. In particular, the novelty of this approach is the use of the residual network, mainly distinct from its conventional CNN-based counterparts. The residual network allows the proposed architecture to predict scene illuminant on the local region, as opposed to many previous approaches where features are extracted from the entire image to obtain statistics and estimate the overall illuminant. In addition, the dilated convolution of the residual network is designed to handle multiscale appearance, contributing to efficiency. While there are only a few methods proposed to estimate spatially varying illuminants, the proposed approach has significance and the potential to advance CNN-based illumination estimation accuracy. The experimental results demonstrate that the proposed network stays ahead of other state-of-the-art techniques in predicting illumination and is less likely to cause large errors in estimation as the conventional methods. Moreover, the proposed scheme is further applicable to solving other computer vision problems because of its strength of aggregating local estimation to determine global estimation.

2. Technical Approach

In recent years, deep learning techniques have become remarkably advanced and contributed to addressing computer vision challenges. The proposed network is one of the cutting-edge deep learning techniques based on ResNet []. ResNet is composed of several units: pre-activation, atrous convolution, batch-normalization, and layers. ResNet performs better imageNet classification when its layers use skip connection. ResNet allows researchers and developers to design much deeper networks without gradient degradation and acquire much larger receptive fields often with highly distinct features. On receiving different input images (or values), the proposed network crops each input image into image patches which carry different semantic information automatically. Next, the network learns and applies the semantic information to its novel pooling layer where all local semantic information is estimated and combined to form global information.

2.1. Color Constancy Approach

In general, given an RGB image, F, the color constancy approach is designed to estimate the global illuminant color (or color cast), using a canonical light source color, usually perfect white , and normalize the estimated global illuminant color . The approach then replaces the estimated global illuminant color with the normalized global illuminant color. However, in real-life scenes, there exist multiple illuminants, which possibly impact on the perceived color of an object. To address this problem, conventional methods attempt to estimate a single global illuminant color. Similarly, the proposed approach is designed to estimate and get the replacement,, and notably uses the convolutional neural network (CNN) to estimate , which gets the replacement closer to the ground truth illuminant color. refers to parameters.

Let defined as the ground truth illuminant color. During dataset learning, the CNN minimizes a loss function. The loss function represents an angular error (in degrees) between the estimated color and the ground truth illuminant color , described as follows:

In the CNN, is the estimation of all the semantically informative regions, ideally avoiding any repercussion of ambiguous light. Equation (2) explains how to calculate the final global illumination estimation. Let be the local regions in , and be the output of the regional illuminant color estimation, . The (F) is the normalization of the sum of the product of semantic information, , and regional illumination estimation, , and as a result delivers the final global illuminant estimation color as follows []:

Intuitively, supposing that are local regions that contain useful semantic information for illuminant estimation, should be large values.

In detail, the semantic pooling in Equation (2) is described as follows:

where

where is the coordinate of local region in the image and is the total number of pixels in the local region. refers to mean of local semantic information.

where and refer to semantic information and local illuminant estimation function, respectively.

Using the chain rule, Equation (5) is transformed into Equation (6) below:

In Equation (6), the estimation has different magnitudes with different semantic information . In estimating local illuminants, semantic information serves as a mask within the salience region, which helps prevent the proposed network from learning noisy data. Similarly, semantic information is calculated as follows:

By intuition, in global estimation which uses local illumination estimation colors, it is supposed to get the global estimation color closer to the ground truth illumination color.

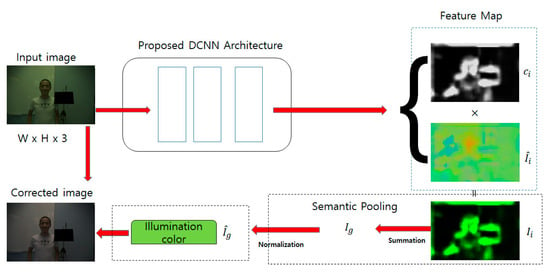

Figure 1 depicts a block diagram of the proposed color constancy method. As shown below, as a result of performing the proposed DCNN architecture, feature maps are generated. The feature maps turn into the weighted maps or masks through semantic pooling where the proposed network distinguishes between useful data and noisy data. The semantic pooling is formulated in Equation (2) to Equation (7). To achieve color constancy and improve the performance, it is important to pay close attention to the estimation accuracy of the proposed DCNN architecture now that it has a significant impact on the accuracy of the weighted maps and eventually on the accuracy of the global illumination estimation. In this respect, the proposed method has adopted the proposed DCNN architecture to accurately estimate the local semantic information and the accuracy is prove in the experimental results and evaluation section. The next subsection focuses on the proposed DCNN architecture.

Figure 1.

Block diagram of the proposed method.

2.2. The Proposed DCNN Architecture

A deep convolutional neural network (DCNN) is a major breakthrough in image classification. The DCNN naturally incorporates low, mid, and high-level image features and classifiers in an end-to-end multi-layer form. Its depth, or the number of stacked layers, can enrich the “level” of image features. However, some deeper networks like AlexNet and VGG-16 have a degradation problem. Increasing depth of the network accelerates accuracy degradation as well as accuracy saturation. That is, an increasing number of layers cause some deeper network models to make more training errors. This is why the proposed method has adopted the well-known residual block to solve the issues with Alexnet and VGG-16 such as expensive computational cost, gradient degradation, and vanishing gradient, which are common issues in handling deep convolutional neural networks. In the proposed approach, a residual network is comprised of multiple convolutional layers. With the input of , the output of the block is recursively defined as follows:

Let each layer take the sequential steps of convolution , batch normalization, and rectified linear unit (ReLU) as nonlinearities, and is defined as follows:

where and are weight matrices and denotes convolution, is batch normalization, and . The proposed ResNet architecture shows that the resolution of feature maps drop down to a fourth of input resolution after passing through the first three layers. This allows the architecture to aggregate contexts and train faster. However, smaller feature maps constrain the architecture from learning high-resolution features which is useful and required at later stages. To support the learning of the high-resolution feature, the proposed network has an additional convolution layer with a kernel before the first convolution layer. This enables the network to learn high-resolution features, without increasing the inference time by much. Furthermore, down-sampling principally reduces the resolution of feature maps. Although deconvolution layers are able to up-sample low-resolution feature maps, they cannot recover all the details completely. In addition, this procedure requires higher computational cost as well as intensive memory. To address such problems, the proposed method uses atrous convolution, also called dilated convolution []. Atrous convolution widens the kernel and simulates a larger field of perception. For a 1D input signal with a filter of in length, the atrous convolution is described as follows:

The rate refers to a stride with regard to sampling of the input signal. For instance, a rate of 2 represents a convolution on a pooled feature map. The proposed network has changed the stride of the last convolution from 2 to 1 and set the others at . In this way, the smallest resolution is 16 times down-sampled, not 32 times, but still preserves the higher resolution details, as well as aggregates the usual number of contexts. Every object in a scene potentially varies in size, distance, and position. DCNN filters usually do not fit in this multiscale appearance. This has motivated researchers to investigate how DCNN [,] learns the multiscale feature. Their finding is that DCNN is given multi-resolution input images, which thus incur a higher computational cost. To reduce expensive computational cost and increase estimation efficiency, the proposed method gets the ResNet blocks made up of several different scale atrous convolutions with . In this way, the network is enabled to learn multiscale features in every block. Furthermore, the concatenation preserves all the features within the block so that the network can learn to combine features generated on different scales.

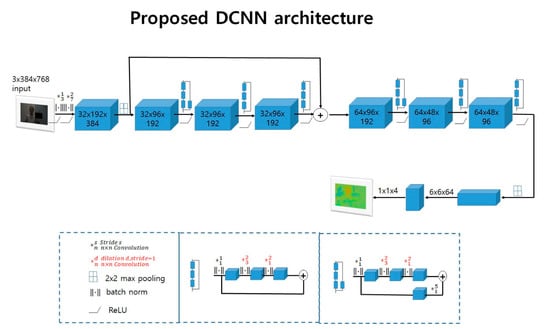

Figure 2 depicts the proposed DCNN architecture. To explain in more detail, the top half of the figure illustrates the whole process of the proposed DCNN architecture. The blue boxes are not all residual networks. There are six residual networks: two consisting of four layers and four consisting of three layers. A residual network is marked with its structure on its top right, which looks like a superscript. The bottom half of the figure gives explanatory notes and illustrates the two types of residual networks in detail. As in the explanatory notes, a convolutional layer is described in black; and the top s stands for a stride and the bottom n indicates the n by n filter kernel size, with a symbol * to the middle left. A dilated convolution is described in red; and the top d stands for dilated convolution with a stride of 1 and the bottom n indicates the n by n filter kernel size, with a symbol * to the middle left. For instance, 1 and 1 with a symbol * in black translates as a convolutional layer with a stride of 1 and a 1 × 1 filter kernel in size. As another example, 2 and 3 with the symbol * in red mean that the rate r of dilated convolution is 2, as in Equation (10), and the filter kernel size is 3 × 3. The emphasis of using the proposed DCNN architecture is on increasing the accuracy of estimating the local semantic information, which is vital to the final performance of the network, and training the network to optimally combine the local estimates by adaptively using the corresponding and , as in Equation (2), for each local region, which will suppress the impact of ambiguous patches.

Figure 2.

Proposed deep convolutional neural network (DCNN) architecture with pre-activation, atrous convolution, and batch-normalization.

3. Experimental Results and Evaluations

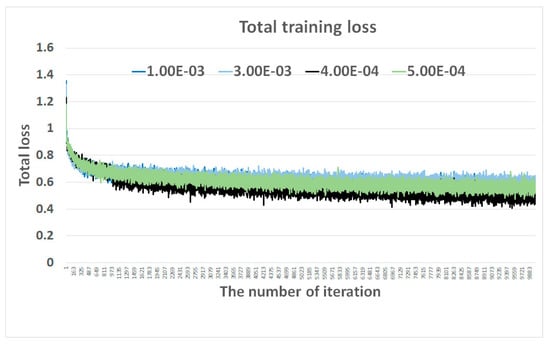

A feasibility study uses two benchmark standard datasets: the reprocessed [] Color Checker Dataset [] and the NUS 8-Camera Dataset []. These datasets consist of 568 and 1736 raw images, respectively. The 768 × 384 input image in Figure 2 is resized to 512 × 512 pixels and then cropped into overlapping 224 × 224 image patches. There is a trade-off between patch coverage (and accuracy) and efficiency. With more patches, the CNN performs higher coverage and accuracy, but gets lower efficiency. Through additional pooling, the proposed network combines patch-based estimates to obtain a global illuminant. The proposed network is trained in an end-to-end fashion with back-propagation. For the proposed network, Adam [] is used to optimize parameter setting for all layers, which reduces overfitting and improves performance. The experiment with the proposed network is performed to compare total training losses at four different learning rates with 10,000 iterations (or epochs) using a server with Titan XP GPU and taking 1.5 days. Figure 3 illustrates the comparative experimental results and finds that is the optimized base learning rate. The symbol “1.00E-03” represents a learning rate of . Likewise, parameters are optimized, including a dropout probability of 0.5 for the 6 × 6 × 64 convolution layer in Figure 2, a batch size of 16, a weight decay of , and so forth.

Figure 3.

Total training loss comparison in the logarithm space of four different learning rates to optimize the base learning rate.

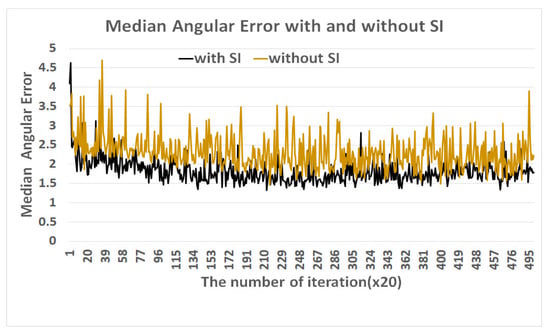

Figure 4 compares median angular errors with and without semantic information, recording the errors every 20 iterations (or epochs). As a result, the errors sharply drop with semantic information. From an illuminant estimation point of view, the choice of semantic information has the effect of improving computational color constancy significantly.

Figure 4.

Comparison of median angular errors with and without semantic information (SI).

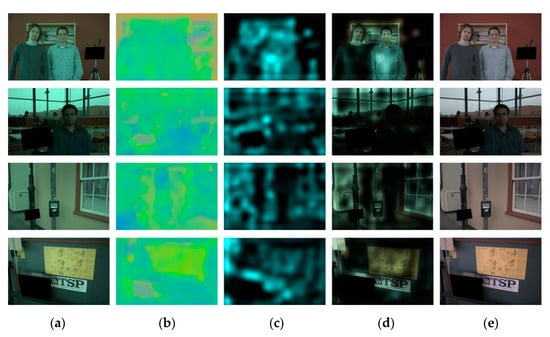

Figure 5 shows Shi’s re-processed dataset [] and their resulting images from implementing the proposed network in Tensorflow []. The proposed DCNN architecture is focused on increasing the accuracy of estimating the local semantic information to improve performance. As a result, in Figure 5e, the greenish blue illuminant of the original image is efficiently removed, and the true colors of objects are well represented without color distortion compared with the original image in Figure 5a.

Figure 5.

Shi’s re-processed dataset and their resulting images: (a) original image, (b) illumination estimation map, (c) weighted map, (d) image weighted map, and (e) corrected image.

The proposed network is compared with 27 different state-of-the-art methods which include both unitary and combinational methods. The 27 different methods are benchmarked from several sources. Specifically, AlexNet-FCand SqueezeNet-FC are benchmarked from []; and except for DS-Net, the other 22 methods are from [,,,,,,,]. DS-Net is cited from []. In this comparative study, the source codes of AlexNet-FC and SqueezeNet-FC are downloaded from GitHub website [] and DS-Net is downloaded from GitHub as well []. The source codes of CCATI [] and Zhan et al. [] are implemented by MATLAB and Tensorflow, with parameters fixed as suggested by those articles.

For quantitative comparison purposes only, Table 1 compares the proposed method with previous mainstream algorithms in terms of the illuminant estimation accuracy. It illustrates several standard metrics: mean, median, trimean, mean of the best quarter (best 25%), and mean of the worst quarter (worst 25%) of angular error (Equation (1)). This comparative study uses the well-known dataset, the Gehler and Shi’s dataset [], which contains 568 images of people, places, and objects in indoor and outdoor scenes, where the Macbeth color checker chart is placed in a known location of every scene. This dataset includes both single- and multiple-illuminant natural images. The proposed network surpasses all its conventional counterparts in trimean and worst 25%.

Table 1.

Comparative statistical metrics between the proposed network and conventional methods with Shi’s re-processed dataset and the NUS-8 Camera Dataset (the lower, the better).

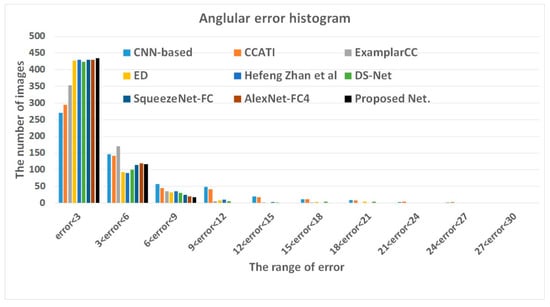

Figure 6 is an angular error (AE) histogram comparison between the proposed network and several best-performing conventional methods selected from Table 1: CNN-based method, CCATI, ExemplarCC, ensemble of decision (ED) tree based method, Zhan et al., DS-Net, SqueezeNet-FC, and AlexNet-FC. Joze and Drew [] proposed an exemplar method which estimates the local source illuminant by finding the neighboring surfaces in the training data which consists of the weak color constant RGB values and the texture features. Ensemble of decision tree based method [] is a discrete version of Gamut mapping which uses the correlation matrix, instead of the canonical Gamut for the considered illuminants, and uses the image data to calculate the probability that the illumination in the test image is caused by which of the known illuminants. Shi et al. [] proposed a branch-level ensemble of neural networks consisting of two interacting sub-networks: a hypotheses network and a selection network. The selection network picks confident estimations from the plausible illuminant estimations generated from the hypotheses network. Shi’s method produces accurate results, but the model size is huge, and its processing speed is slow. That is, when the CNNs go deeper by adding layers, fully connected layers have several well-known vital problems including incredibly expensive computational problems. To solve these problems, the proposed CNN method adopts the residual network to improve the estimation accuracy and reduce expensive computational cost. Further the pooling mechanism employed by the proposed network contributes to reducing estimation ambiguities.

Figure 6.

Comparative angular error (AE) histogram of convolutional neural network (CNN)-based, exemplar-based, ensemble of decision (ED) tree based methods, DS-Net, AlexNet-FC, SqueezeNet-FC, CCATI, Zhan et al., and the proposed network (proposed net.) with Shi’s re-processed dataset and the NUS 8-Camera Dataset.

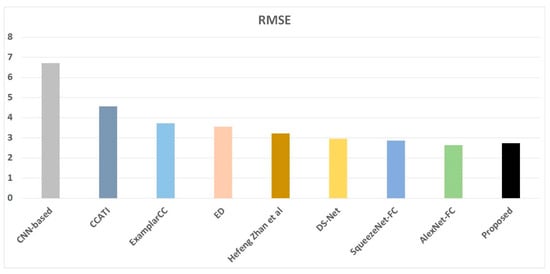

In estimating illuminants, the proposed network stays ahead of the state-of-the-art methods, with 76.41% of the tested images under an angular error of 3° and 97% under an angular error of 6°. Figure 7 is a comparison of the root mean square error (RMSE) results among CNN-based, exemplar-based, ensemble of decision (ED) tree based methods, Alex-FC, CCATI, Zhan et al., DS-Net, SqueezeNet-FC, and the proposed network (proposed), with the input of the angular error. The proposed network records the lower RMSE relative to its conventional counterparts. Therefore, the proposed network is deemed to be robust and generates lower AE and RMSE in estimating illumination of a wide range of image scenes.

Figure 7.

Comparison of root mean square error (RMSE) results of CNN-based, exemplar-based, ensemble of decision (ED) tree based methods, DS-Net, AlexNet-FC4, SqueezeNet-FC4, CCATI, Zhan et al., and the proposed network (proposed) with the angular error as input.

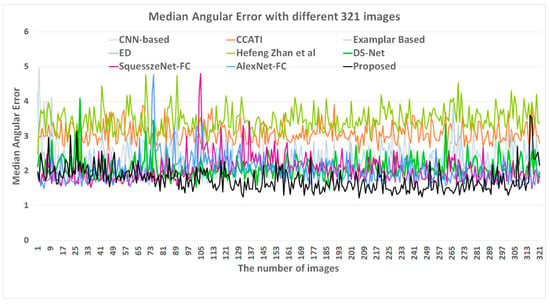

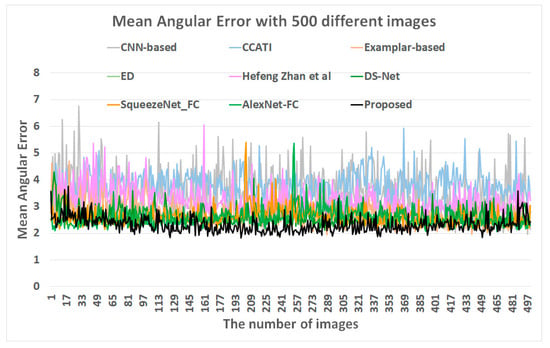

To further verify the proposed method, additional experiments were conducted using SFU-lab dataset [] and gray-ball dataset []. The SFU-lab dataset contains four different subsets: objects with minimal specularities (consisting of 22 scenes, 223 images in total), objects with at least one clear dielectric specularity (9 scenes, 98 images in total), objects with metallic specularities (14 scenes, 149 images in total), and objects with fluorescent surfaces (6 scenes, 59 images in total). A commonly used subset in literature is the union of the first two subsets. Furthermore, the gray-ball dataset [] has a total of 11,340 images of 360 × 240 pixels from a range of scenarios, which were taken under natural single- or mixed-illuminant lighting conditions and a gray-ball was placed in front of the video camera. Thus, many of the images are nearly identical scenes. Figure 8 illustrates the comparative results of median angular errors, using 321 different SFU-lab images, and Figure 9 depicts the comparative results of mean angular errors, using 500 different gray-ball dataset images. In both experimental results, the proposed network also records the lowest angular error in terms of median and mean. Therefore, the proposed method gets ahead of the conventional methods.

Figure 8.

Comparison of the median angular errors of 321 different SFU-lab images.

Figure 9.

Comparison of the mean angular errors of 500 different gray-ball images.

The NUS 8-Camera Dataset [] was additionally chosen to assess the camera invariant performance of the proposed method. The NUS 8-Camera Dataset is the most recent and well-known color constancy dataset which consists of 210 individual scenes captured by eight cameras, or a total of 1736 images. With the NUS 8-camera image dataset, 11 conventional methods and the proposed network are evaluated and compared. Table 2 displays the camera-wise performance comparison of the proposed network with the 11 conventional methods. As a result, the proposed network outperforms its 11 conventional counterparts. Accordingly, the proposed method is deemed robust regardless of camera conditions.

Table 2.

Performance comparison between grey world (GW) [], white patch (WP) [], shades of grey (SoG) [], general grey world (GGW) [], 1st-order grey edge (GE1) [], 2nd-order grey edge (GE2) [], local surface reflectance statistics (LSR) [], pixels-based Gamut (PG) [], Bayesian framework (BF) [], spatio-spectral statistics (SS) [], natural image statistics (NIS) [], and the proposed network (PN) with NUS dataset.

4. Conclusions

A color constancy algorithm is designed to remove color casts from images and manifest the actual colors of objects, as well as preserve constant distribution of the light spectrum across the digital images, in an effort to address the challenges faced by the computer vision algorithms or methods in nature.

Accordingly, this article presents novel network architecture that uses the residual neural network composed of pre-activation, atrous or dilated convolution and batch normalization. The proposed network is intended to enable image patches to carry different semantic information automatically, upon receiving different input values. The network learns and applies semantic information to its novel pooling layer for global estimation.

As in the comparative experimental results of AE, the proposed network achieves much higher accuracy than its state-of-the-art counterparts. In the comparative AE histogram, the proposed network gets ahead of its state-of-the-art counterparts, scoring 76.41% of the number of images under an AE of 3° and 97% under an AE of 6°. In the RMSE comparison as well, the proposed network records the lowest value. Therefore, the proposed network proves to be robust and causes lower AE and RMSE in estimating illumination of a wide range of image scenes. Furthermore, through additional experiments with two more datasets of different semantic information levels: SFU-lab and gray-ball datasets, the proposed network also results in lower median and mean angular errors, respectively. In addition, the proposed network is evaluated on NUS 8-Camera Dataset to verify the camera invariant performance. As a result, the proposed method outperforms its conventional counterparts as a camera invariant color constancy model by obtaining competitive results in uniform, non-uniform, and multiple illuminant conditions. Notwithstanding, the preprocessing method and CNN structure still need to advance in estimating color casts of light sources regardless of illumination condition as well as camera sensitivity. To this end, this study will continue to advance illumination estimation accuracy.

Author Contributions

Conceptualization, H.-H.C., B.-J.Y., and H.-S.K.; data curation, H.-H.C.; formal analysis, H.-H.C. and B.-J.Y.; funding acquisition, B.-J.Y. and H.-S.K.; investigation, H.-H.C.; methodology, H.-H.C., B.-J.Y., and H.-S.K.; project administration, B.-J.Y. and H.-S.K.; resources, H.-H.C. and B.-J.Y.; software, H.-H.C.; supervision, B.-J.Y. and H.-S.K.; validation, H.-H.C. and B.-J.Y.; visualization, H.-S.K. and H.-H.C.; writing—original draft, H.-H.C. and B.-J.Y.; writing—review and editing, B.-J.Y. and H.-S.K. All authors have read and agreed to the published version of the manuscript.

Acknowledgments

This research was supported by Basic Science Research Program through the National Research Foundation (NRF) of Korea funded by the Ministry of Education (NRF-2019R1I1A3A01061844), in part by the NRF of Korea funded by the Ministry of Education (NRF-2018R1D1A1B07040457), and in part of the research projects of “Development of IoT infrastructure Technology for Smart Port” financially supported by the Ministry of Oceans and Fisheries, Korea.

Conflicts of Interest

The authors declare no conflicts of interest.

References

- Bianco, S.; Cusano, C.; Schettini, R. Single and Multiple illuminant Estimation Using Convolutional Neural Network. arXiv 2015, arXiv:1508.00998v2. [Google Scholar] [CrossRef] [PubMed]

- Kulkarni, S.G.; Kamalapur, S.M. Color Constancy Techniques. Int. J. Eng. Comput. Sci. 2014, 3, 9147–9150. [Google Scholar]

- Buchsbaum, G. A spatial processor model for object colour perception. J. Frankl. Inst. 1980, 310, 1–26. [Google Scholar] [CrossRef]

- Finlayson, G.; Trezzi, E. Shades of gray and colour constancy. In Proceedings of the Twelfth Color Imaging Conference: Color Science and Engineering Systems, Technologies, Applications, CIC 2004, Scottsdale, AZ, USA, 9 November 2004; pp. 37–41. [Google Scholar]

- Funt, B.; Shi, L. The rehabilitation of maxrgb. In Proceedings of the 18th Color and Imaging Conference, San Antonio, TX, USA, 12 April 2010; pp. 256–259. [Google Scholar]

- van de Weijer, J.; Gevers, T.; Gijsenij, A. Edge-based color constancy. IEEE Trans. Image Process. 2007, 16, 2207–2214. [Google Scholar] [CrossRef]

- Gao, S.; Han, W.; Yang, K.; Li, C.; Li, Y. Efficient color constancy with local surface reflectance statistics. In Proceedings of the European Conference on Computer Vision (ECCV), Zurich, Switzerland, 6–12 September 2014; Volume 8690, pp. 158–173. [Google Scholar]

- Cheng, D.; Prasad, D.K.; Brown, M.S. Illuminant estimation for color constancy: Why spatial-domain methods work and the role of the color distribution. J. Opt. Soc. Am. A 2014, 31, 1049–1058. [Google Scholar] [CrossRef] [PubMed]

- Yang, K.-F.; Gao, S.-B.; Li, Y.-J. Efficient illuminant estimation for color constancy using gray pixel. In Proceedings of the Computer Vision Foundation Conference: CVPR 2015, Boston, MA, USA, 7–12 June 2015; pp. 2254–2261. [Google Scholar]

- Tan, R.T.; Nishino, K.; Ikeuchi, K. Color constancy through inverse-intensity chromaticity space. J. Opt. Soc. Am. A 2004, 21, 321–334. [Google Scholar] [CrossRef]

- Finlayson, G.; Schaefer, G. Solving for colour constancy using a constrained dichromatic reflectance model. Int. J. Comput. Vis. 2001, 42, 127–144. [Google Scholar] [CrossRef]

- Gijsenij, A.; Gevers, T.; van de Weijer, J. Generalized gamut mapping using image derivative structures for color constancy. Int. J. Comput. Vis. 2010, 86, 127–139. [Google Scholar] [CrossRef]

- Forsyth, D.A. A novel algorithm of color constancy. Int. J. Comput. Vis. 1990, 5, 5–35. [Google Scholar] [CrossRef]

- Finlayson, G.D.; Hordley, S.D.; Hubel, P.M. Color constancy. IEEE Trans. Pattern Anal. Mach. Intell. 2001, 23, 1209–1221. [Google Scholar] [CrossRef]

- Gehler, P.V.; Rother, C.; Blake, A.; Minka, T.; Sharp, T. Bayesian Color constancy revisited. In Proceedings of the 2008 IEEE Conference on Computer Vision and Pattern Recognition, Anchorage, AK, USA, 26 June 2008; pp. 1–8. [Google Scholar]

- Joze, H.R.V.; Drew, M.S. Exemplar-based color constancy and multiple illumination. IEEE Trans. Pattern Anal. Mach. Intell. 2014, 36, 860–873. [Google Scholar] [CrossRef] [PubMed]

- Finlayson, G.D. Corrected-moment illuminant estimation. In Proceedings of the IEEE International Conference on Computer Vision (ICCV), Sydney, NSW, Australia, 1–8 December 2013; pp. 1904–1911. [Google Scholar]

- Bianco, S.; Cusano, C.; Schettini, R. Color constancy using CNNs. In Proceedings of the Deep Vision: Deep Learning in Computer Vision (CVPR Workshop), Boston, MA, USA, June 11 2015. [Google Scholar]

- Lou, Z.; Gevers, T.; Hu, N.; Lucassen, M. Color constancy by deep learning. In Proceedings of the British Machine Vision Conference, Swansea, UK, 7–10 September 2015. [Google Scholar]

- Gijsenij, A.; Gevers, T. Color Constancy using Natural Image Statistics and Scene Semantics. IEEE Trans. Pattern Anal. Mach. Intell. 2011, 33, 687–698. [Google Scholar] [CrossRef] [PubMed]

- Akbarnia, A.; Parraga, A. Color Constancy beyond the Classical Receptive Field. IEEE Trans. Pattern Anal. Mach. Intell. 2018, 40, 2081–2094. [Google Scholar] [CrossRef] [PubMed]

- Hu, Y.; Wang, B.; Lin, S. Fc4: Fully convolutional Color Constancy with Confidence-Weighted Pooling. In Proceedings of the CVPR 2017, Honolulu, HI, USA, 21–26 July 2017; pp. 4085–4094. [Google Scholar]

- Hussain, M.A.; Akbari, A.S.; Abbott-Halpin, E. Color Constancy for Uniform and Non-Uniform Illuminant Using Image Texture. IEEE Access 2019, 7, 7294–72978. [Google Scholar] [CrossRef]

- Zhan, H.; Shi, S.; Huo, Y. Computational colour constancy based on convolutional neural networks with a cross- level architecture. IET Image Process. 2019, 13, 1304–1313. [Google Scholar] [CrossRef]

- He, K.; Zhang, X.; Ren, S.; Sun, J. Deep residual learning for image recognition. In Proceedings of the CVPR, Boston, MA, USA, 7–12 June 2015. [Google Scholar]

- Yu, F.; Koltun, V. Multi-scale context aggregation by dilated convolution. In Proceedings of the ICLR, San Juan, Puerto Rico, 2–4 May 2016. [Google Scholar]

- Ghiasi, G.; Fowlkes, C.C. Laplacian reconstruction and refinement for semantic segmentation. In Proceedings of the ECCV, Amsterdam, The Netherlands, 8–16 October 2016. [Google Scholar]

- Chen, L.; Papandreou, G.; Kokkinos, I.; Murphy, K.; Yuille, A.L. Deeplab Semantic image segmentation with deep convolutional nets, atrous convolution, and fully conneted crfs. arXiv 2016, arXiv:1606.00915. [Google Scholar]

- Kngma, D.; Adam, J.B. A method for stochastic optimization. In Proceedings of the International Conference on Learning Representations, San Diego, CA, USA, 7–9 May 2015. [Google Scholar]

- Shi, L.; Funtes, B. Re-Processed Version of the Gehler Color Constancy Dataset of 568 Images. Simon Fraser University, 2010. Available online: http://www.cs.sfu.ca/~colour/data/ (accessed on 15 November 2019).

- Abadi, M.; Agarwal, A.; Barham, P.; Brevdo, E.; Chen, Z.; Citro, C.; Corrado, G.S.; Davis, A.; Dean, J.; Devin, M.; et al. TensorFlow: Large-scale machine learning on heterogeneous distributed system. arXiv 2016, arXiv:1603.04467. [Google Scholar]

- Land, E.H. The Retinex Theory of Color Vision. Sci. Am. 1977, 237, 108–129. [Google Scholar] [CrossRef]

- Gijsenij, A.; Gevers, T. Color Constancy: Research Website on Illumination Estimation. 2011. Available online: http://colorconstancy.com (accessed on 15 November 2019).

- Xiong, W.; Funt, B. Estimating illumination chromaticity via support vector regression. J. Imaging Sci. Technol. 2006, 50, 341–348. [Google Scholar] [CrossRef]

- Zakizadeh, R.; Brown, M.S.; Finlayson, G.D. A hybrid strategy for illuminant estimation targeting hard images. In Proceedings of the IEEE International Conference on Computer Vision Workshops, Santiago, Chile, 7–13 December 2015; pp. 16–23. [Google Scholar]

- Bianco, S.; Cusano, G.; Schettini, R. Automatic color constancy algorithm selection and combination. Pattern Recognit. 2010, 43, 695–705. [Google Scholar] [CrossRef]

- van de Weijer, J.; Schmid, C.; Verbeek, J. Using high-level visual information for color constancy. In Proceedings of the 2007 IEEE 11th International Conference on Computer Vision, Rio de Janeiro, Brazil, 14–21 October 2007; pp. 1–8. [Google Scholar]

- Cheng, D.; Price, B.; Cohen, S.; Brown, M.S. Effective learning based illumination estimation using simple features. In Proceedings of the IEEE Conference Computer Vision and Patterns Recognition, Boston, MA, USA, 7–12 June 2015; pp. 1000–1008. [Google Scholar]

- Finlayson, G.D.; Hordley, S.D.; Hubel, P.M. Color by correlation: A simple, Unifying Framework for color constancy. IEEE Trans. Pattern Anal. Mach. Intell. 2001, 23, 1209–1221. [Google Scholar] [CrossRef]

- Shi, W.; Loy, C.C.; Tang, X. Deep specialized network for illuminant estimation. In Proceedings of the European Conference on Computer Vision, Amsterdam, The Netherlands, 11–14 October 2016; pp. 371–387. [Google Scholar]

- Available online: https://github.com/yuanming-hu/fc4 (accessed on 24 May 2020).

- Available online: https://github.com/swift-n-brutal/illuminant_estimation (accessed on 24 May 2020).

- Ciurea, F.; Funt, B. A large image database for color constancy research. In Proceedings of the 11th Color Imaging Conference Final Program, Scottsdale, AZ, USA, 4–7 November 2003; pp. 160–164. [Google Scholar]

© 2020 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).