A Distributed Automatic Video Annotation Platform

Abstract

1. Introduction

- We introduce a distributed video annotation platform that extracts both visual (spatial) information and spatiotemporal information from a video.

- We propose a spatiotemporal feature descriptor, volume local directional ternary pattern-three orthogonal planes (VLDTP–TOP), implemented on top of Spark.

- We develop several state-of-the-art appearance-based and spatiotemporal-based video annotation APIs for developers, and video annotation services for end-users.

- We propose a video dataset that provides both spatial and spatiotemporal ground truth.

- We conduct extensive experiments to validate the performance, scalability, and effectiveness of the platform.

2. Related Work

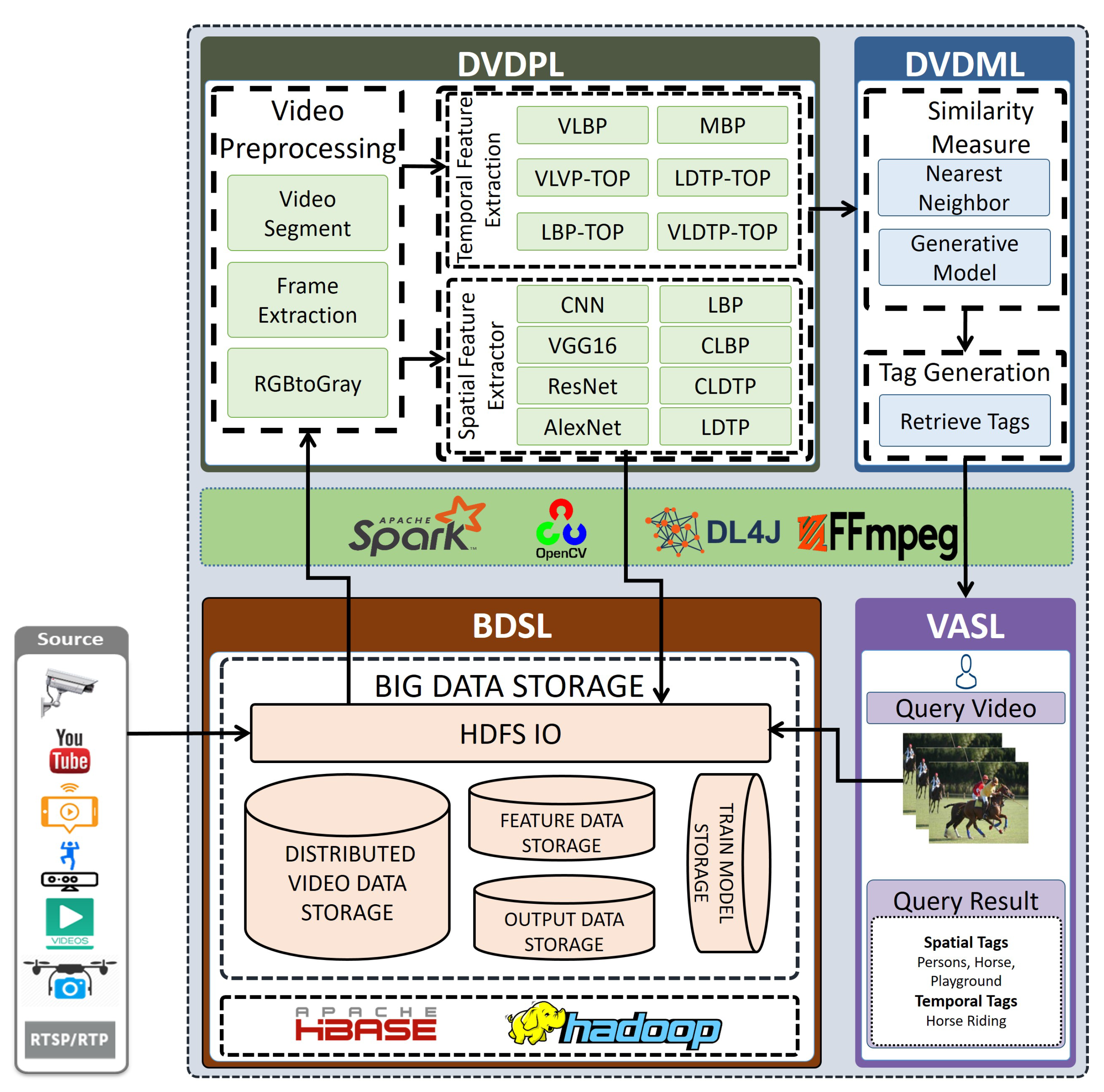

3. Video Annotation Platform

3.1. Big Data Storage Layer

3.2. Distributed Video Data Processing Layer (DVDPL)

3.3. Distributed Video Data Mining Layer (DVDML)

3.4. Video Annotation Service Layer (VASL)

3.5. Video Annotation APIs

4. Spatiotemporal Based Video Annotation

4.1. Color Local Directional Ternary Pattern (CLDTP)

4.2. Local Directional Ternary Pattern–Three Orthogonal Planes (LDTP–TOP)

4.3. Spatiotemporal Local Directional Ternary Pattern (VLDTP)

4.4. Volume Local Directional Ternary Pattern–Three Orthogonal Planes (VLDTP–TOP)

4.5. Similarity Measure

5. Evaluation and Analysis

5.1. Experimental Setup

5.2. STAD Dataset

5.3. Experimental Analysis

6. Conclusions

Author Contributions

Funding

Acknowledgments

Conflicts of Interest

Appendix A

Proposed Video Annotation APIs

| Category | API | Description |
|---|---|---|
| Pre-processing | siat.vdpl.videoAnnotation.preprocessing.segment(frame, size) | Split a given frame into the given number of segments |
| Pre-processing | siat.vdpl.videoAnnotation.preprocessing.frameExtract(video) | Extract each frame from a video |
| Pre-processing | siat.vdpl.videoAnnotation.preprocessing.rgb2gray(frame) | Convert an RGB frame to a grayscale frame |
| Spatial Feature Extraction | siat.vdpl.videoAnnotation.sceneBasedFeatureExtractor.AlexNet(Mat frame) | Capture the deep spatial feature from each frame of a video based on AlexNet |
| Spatial Feature Extraction | siat.vdpl.videoAnnotation.sceneBasedFeatureExtractor.VGGNet(Mat frame) | Capture the deep spatial feature from each frame of a video based on VGG16 |
| Spatial Feature Extraction | siat.vdpl.videoAnnotation.sceneBasedFeatureExtractor.lbp(frame, neighbor, radius) | Capture the spatial texture feature from each frame of a video based on Local Binary Pattern (LBP) |
| Spatial Feature Extraction | siat.vdpl.videoAnnotation.sceneBasedFeatureExtractor.ltp(frame, neighbor, radius) | Capture the spatial texture feature from each frame of a video based on Local Ternary Pattern (LTP) |
| Spatial Feature Extraction | siat.vdpl.videoAnnotation.sceneBasedFeatureExtractor.clbp(frame, neighbor, radius) | Capture the color spatial feature from each frame of a video based on Color Local Binary Pattern (CLBP) |
| Spatial Feature Extraction | siat.vdpl.videoAnnotation.sceneBasedFeatureExtractor.ldtp(frame, neighbor, radius) | Capture the spatial texture feature from each frame of a video based on Local Directional Ternary Pattern (LDTP) |
| Spatial Feature Extraction | siat.vdpl.videoAnnotation.sceneBasedFeatureExtractor.cldtp(frame, neighbor, radius) | Capture the color spatial feature from each frame of a video based on Color Local Directional Ternary Pattern (CLDTP) |
| Dynamic Feature Extraction | siat.vdpl.videoAnnotation.dynamicFeatureExtractor.VLBC(FormerFrame, CurrentFrame, NextFrame, neighbor, radius) | Capture the dynamic texture feature from each video based on Volume Local Binary Count (VLBC) |
| Dynamic Feature Extraction | siat.vdpl.videoAnnotation.dynamicFeatureExtractor.VLBP(FormerFrame, CurrentFrame, NextFrame, neighbor, radius) | Capture the dynamic texture feature from each video based on Volume Local Binary Pattern (VLBP) |
| Dynamic Feature Extraction | siat.vdpl.videoAnnotation.dynamicFeatureExtractor.VLTrP(FormerFrame, CurrentFrame, NextFrame, neighbor, radius) | Capture the dynamic texture feature from each video based on Volume Local Transition Pattern (VLTrP) |
| Dynamic Feature Extraction | siat.vdpl.videoAnnotation.dynamicFeatureExtractor.LBP–TOP(FormerFrame, CurrentFrame, NextFrame, neighbor, radius) | Capture the dynamic texture feature from each video based on Local Binary Pattern-Three Orthogonal Planes (LBP–TOP) |
| Dynamic Feature Extraction | siat.vdpl.videoAnnotation.dynamicFeatureExtractor.VLDTP-TOP(FormerFrame, CurrentFrame, NextFrame, neighbor, radius) | Capture the dynamic texture feature from each video based on Volume Local Directional Ternary Pattern-Three Orthogonal Planes (VLDTP-TOP) |
| Similarity Measure | siat.vdml.videoAnnotation.SearchSimilarFrame.nearestNeighbor(feature) | Measure the similarity between frames of the trained model and test frames |
| Similarity Measure | siat.vdml.videoAnnotation.SearchSimilarClip.nearestNeighbor(feature) | Measure the similarity between videos of the trained model and a test video |
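To make the flow through the three API groups concrete, the following is a minimal single-machine sketch in Python of the chain that the table describes: grayscale conversion, a basic LBP histogram, and a nearest-neighbor lookup. It is not the platform's implementation. The function names `rgb_to_gray`, `lbp_histogram`, and `nearest_neighbor` are hypothetical stand-ins for the listed APIs, the neighborhood is fixed at 8 neighbors with radius 1 rather than taken as parameters, and the squared Euclidean distance is an assumption, since the appendix does not state which distance the similarity measure uses.

```python
from typing import List, Tuple

Frame = List[List[int]]  # grayscale frame: rows of 0-255 intensities

# Offsets (dy, dx) of the 8 neighbors at radius 1, clockwise from the top-left.
NEIGHBOR_OFFSETS = [(-1, -1), (-1, 0), (-1, 1), (0, 1),
                    (1, 1), (1, 0), (1, -1), (0, -1)]


def rgb_to_gray(frame_rgb: List[List[Tuple[int, int, int]]]) -> Frame:
    """Convert rows of (R, G, B) tuples to grayscale using BT.601 luma weights."""
    return [[int(round(0.299 * r + 0.587 * g + 0.114 * b)) for (r, g, b) in row]
            for row in frame_rgb]


def lbp_histogram(gray: Frame) -> List[float]:
    """Normalized 256-bin histogram of basic LBP codes (8 neighbors, radius 1)."""
    hist = [0] * 256
    height, width = len(gray), len(gray[0])
    for y in range(1, height - 1):       # border pixels have no full neighborhood
        for x in range(1, width - 1):
            center = gray[y][x]
            code = 0
            for bit, (dy, dx) in enumerate(NEIGHBOR_OFFSETS):
                # A neighbor at least as bright as the center sets its bit.
                if gray[y + dy][x + dx] >= center:
                    code |= 1 << bit
            hist[code] += 1
    total = sum(hist)
    return [count / total for count in hist] if total else [0.0] * 256


def nearest_neighbor(query: List[float],
                     labeled_features: List[Tuple[str, List[float]]]) -> str:
    """Return the label whose feature is closest to `query`.

    Squared Euclidean distance is an assumption made for this sketch.
    """
    def sq_dist(a: List[float], b: List[float]) -> float:
        return sum((p - q) ** 2 for p, q in zip(a, b))

    best_label, _ = min(labeled_features, key=lambda item: sq_dist(query, item[1]))
    return best_label


if __name__ == "__main__":
    # Two tiny synthetic "training" frames: a flat patch and a checkerboard.
    flat = [[100] * 4 for _ in range(4)]
    checker = [[0 if (x + y) % 2 == 0 else 255 for x in range(4)] for y in range(4)]
    model = [("flat", lbp_histogram(flat)), ("checker", lbp_histogram(checker))]

    query = [[50] * 4 for _ in range(4)]  # another uniform patch
    print(nearest_neighbor(lbp_histogram(query), model))
```

In a distributed setting, the per-frame feature step is the natural unit to parallelize, because each frame's histogram depends only on that frame. The dynamic descriptors in the table take the former, current, and next frames instead, so they would need neighboring frames to be grouped together before the feature step.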

| Category | Sub-Category | Dynamic Information | Spatial Appearances |
|---|---|---|---|
| Human Action | Single Movement | BaseballPitch | Person, Ball, Field, Grass/Green_field, Sky |
| Human Action | Single Movement | Biking | Person, Cycle, Tree, Car, Road, Fence, Sky, Grass/Green_field |
| Human Action | Single Movement | Horse Riding | Person, Horse, Tree, Bush, Fence, Sky, Grass/Green_field |
| Human Action | Single Movement | Skate Boarding | Person, Road, Tree, Sky |
| Human Action | Single Movement | Swing | Person, Tree, Bush, Grass/Green_field |
| Human Action | Crowd Movement | Band Marching | Person, Road, Tree, Sky, Building |
| Human Action | Crowd Movement | Run | Person, Road, Tree, Sky, Building, Car |
| Emergency | | Explosions | Person, Fire, Building, Sky, Hill, Volcano |
| Emergency | | Tornado | Tornado, Grass/Green_field, Stamp |
| Traffic | | Car | Car, Road, Sky, Tree, Bush, Building, Grass/Green_field |
| Nature | | Birds Fly | Birds, Sky, Person, Building, Sea, Grass/Green_field |
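The table pairs one dynamic (spatiotemporal) label with a set of spatial appearance labels for each class. A minimal Python sketch of one way to hold such a ground-truth record is given below. The `ClipGroundTruth` class and the `spatial_overlap` helper are hypothetical and are not part of the dataset or the platform, and the Jaccard overlap is used only as an illustrative way to compare predicted spatial labels against the ground truth, not as the evaluation measure of this work.

```python
from dataclasses import dataclass
from typing import FrozenSet, Iterable


@dataclass(frozen=True)
class ClipGroundTruth:
    """Ground truth for one clip: one dynamic label plus a set of spatial labels."""
    category: str
    dynamic_label: str
    spatial_labels: FrozenSet[str]


def spatial_overlap(truth: ClipGroundTruth, predicted: Iterable[str]) -> float:
    """Jaccard overlap between predicted and ground-truth spatial labels.

    Illustrative only; the choice of Jaccard is an assumption of this sketch.
    """
    predicted_set = frozenset(predicted)
    union = truth.spatial_labels | predicted_set
    if not union:
        return 1.0  # both sets empty: treat as a perfect match
    return len(truth.spatial_labels & predicted_set) / len(union)


# The "Biking" row of the table, written as a record.
biking = ClipGroundTruth(
    category="Human Action",
    dynamic_label="Biking",
    spatial_labels=frozenset({"Person", "Cycle", "Tree", "Car", "Road",
                              "Fence", "Sky", "Grass/Green_field"}),
)

if __name__ == "__main__":
    # Three correct labels and one label that is absent from the ground truth.
    print(spatial_overlap(biking, ["Person", "Road", "Sky", "Dog"]))
```

Keeping the dynamic label separate from the spatial label set mirrors the two kinds of ground truth in the table, so a spatial annotator and a spatiotemporal annotator can each be checked against the same record.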

© 2020 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).

Islam, M.A.; Uddin, M.A.; Lee, Y.-K. A Distributed Automatic Video Annotation Platform. Appl. Sci. 2020, 10, 5319. https://doi.org/10.3390/app10155319
