An Aerial Mixed-Reality Environment for First-Person-View Drone Flying

Abstract

1. Introduction

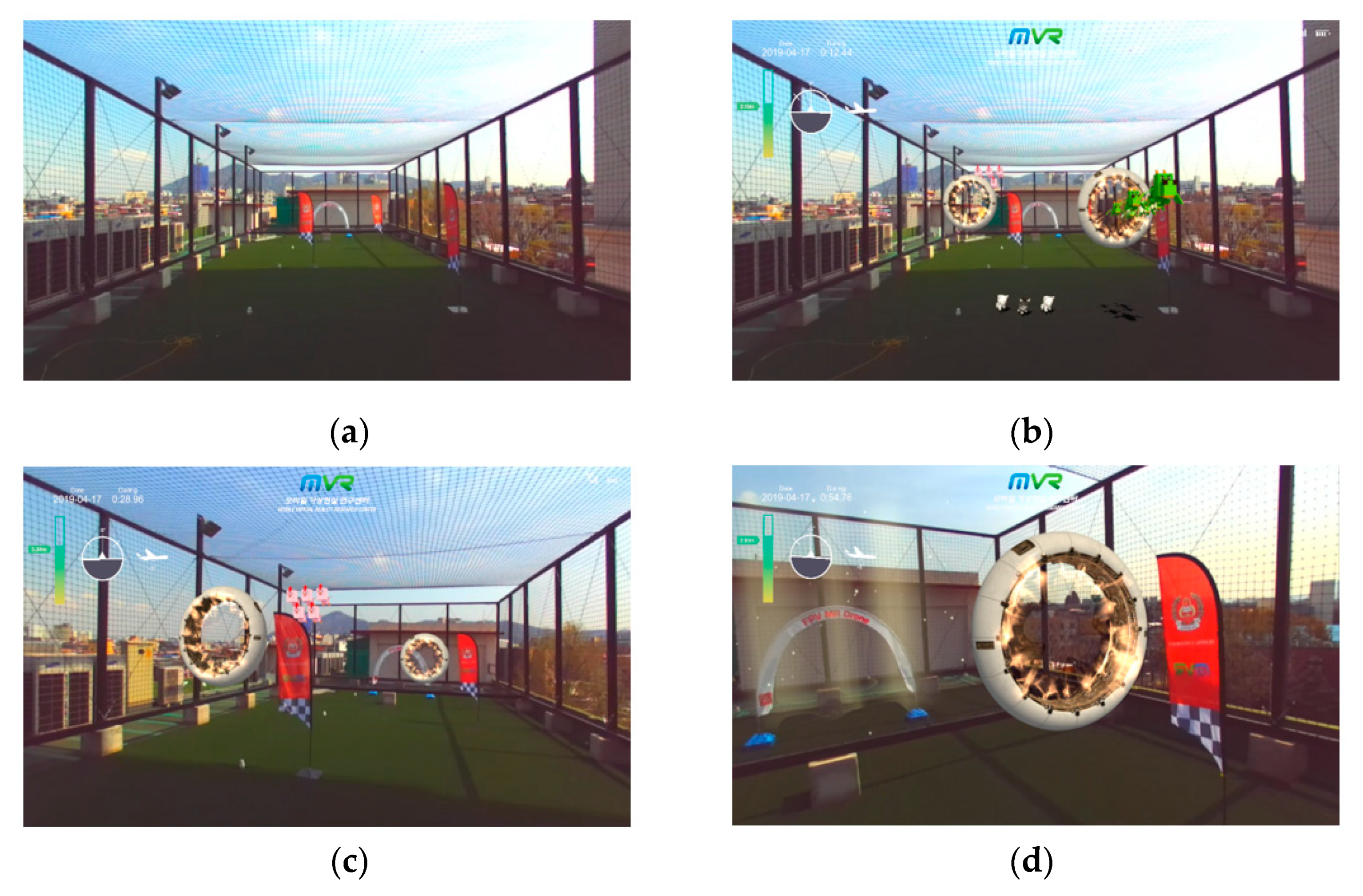

- We propose a new aerial mixed-reality (MR) environment that uses an FPV drone equipped with a stereo camera to give users a safe and engaging setting for flight training. The stereo camera helps pilots estimate distances more accurately from binocular disparities, and a head-mounted display (HMD) with a wide field of view increases immersion.

- Different MR environments for drone flying can be created easily by placing several drone flags in the real world. The flags are recognized using machine learning techniques. When a flag is detected during flight, the corresponding virtual objects are composited into the real scene.

- The proposed system is evaluated through user studies that compare the experience of flying with our FPV-MR drone system against flying in a VR drone flight simulator.
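
Binocular disparities carry distance information because of the standard rectified-stereo relation Z = f · B / d. Here f is the focal length in pixels, B is the baseline between the two lenses, and d is the horizontal disparity in pixels.

The following is a minimal sketch of that geometric relation only. In the proposed system the pilot's own visual system interprets the disparity. The focal length (700 px) and baseline (0.06 m) used below are illustrative assumptions, not calibration values of the camera used in the paper.

```python
def depth_from_disparity(disparity_px: float, focal_px: float, baseline_m: float) -> float:
    """Depth in metres of a point seen by a rectified stereo pair: Z = f * B / d."""
    if disparity_px <= 0:
        # Zero or negative disparity has no finite depth in front of the camera.
        raise ValueError("disparity must be positive")
    return focal_px * baseline_m / disparity_px


def depth_uncertainty(depth_m: float, focal_px: float, baseline_m: float,
                      disparity_err_px: float = 1.0) -> float:
    """First-order depth error for a disparity error of dd pixels: dZ ~= Z^2 / (f * B) * dd."""
    return depth_m ** 2 / (focal_px * baseline_m) * disparity_err_px


if __name__ == "__main__":
    f_px, b_m = 700.0, 0.06  # assumed, illustrative camera parameters
    for d in (42.0, 21.0, 10.5):
        z = depth_from_disparity(d, f_px, b_m)
        print(f"disparity {d:5.1f} px -> depth {z:.2f} m, +/- {depth_uncertainty(z, f_px, b_m):.3f} m")
```

With these assumed parameters, a 21 px disparity corresponds to about 2 m. A one-pixel disparity error then costs roughly 0.1 m at 2 m but roughly 0.38 m at 4 m. This quadratic growth is why stereo depth cues are most informative when obstacles are close.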
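
The flag-based workflow above can be sketched as a small dispatch layer between an object detector and the renderer. This is a minimal sketch under several assumptions; it is not the authors' implementation:

- The detector is abstracted as a list of `Detection` records per frame.
- The flag labels, confidence threshold, consecutive-frame rule, and virtual-object names are all hypothetical illustrations.

```python
from dataclasses import dataclass
from typing import Dict, List, Tuple

Box = Tuple[int, int, int, int]  # (x_min, y_min, x_max, y_max) in pixels


@dataclass
class Detection:
    label: str         # hypothetical flag class name produced by the detector
    confidence: float  # detector score in [0, 1]
    box: Box


# Hypothetical mapping from recognized flag classes to virtual objects to render.
FLAG_TO_OBJECT: Dict[str, str] = {
    "flag_ring": "virtual_ring_gate",
    "flag_wall": "virtual_wall_obstacle",
}


class FlagTracker:
    """Emits a virtual object for a flag only after it is detected in
    `min_frames` consecutive frames, which suppresses one-frame false positives."""

    def __init__(self, threshold: float = 0.6, min_frames: int = 3):
        self.threshold = threshold
        self.min_frames = min_frames
        self.streak: Dict[str, int] = {}

    def update(self, detections: List[Detection]) -> List[Tuple[str, Box]]:
        # Keep the most confident known-flag detection per label in this frame.
        seen: Dict[str, Detection] = {}
        for det in detections:
            if det.confidence >= self.threshold and det.label in FLAG_TO_OBJECT:
                best = seen.get(det.label)
                if best is None or det.confidence > best.confidence:
                    seen[det.label] = det
        # Extend streaks for flags seen now; flags missing this frame reset to zero.
        self.streak = {label: self.streak.get(label, 0) + 1 for label in seen}
        # Return (virtual object, screen box) pairs ready to be composited.
        return [
            (FLAG_TO_OBJECT[label], det.box)
            for label, det in seen.items()
            if self.streak[label] >= self.min_frames
        ]
```

For example, with `FlagTracker(min_frames=2)`, a ring flag detected in two successive frames yields `("virtual_ring_gate", box)` on the second frame. A frame without the flag resets its streak. Requiring several consecutive frames trades a little latency for less flicker of virtual objects in the HMD.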

2. Related Work

2.1. Uses of Drones with Cameras

2.2. Virtual and Augmented Reality for Drones

2.3. Object Recognition and Immersion Measurement in Mixed Reality

3. Mixed Reality for Drones

3.1. System Overview

3.2. Aerial Mixed Reality Using a Drone with a Stereo Camera

3.3. Flight in a Mixed Reality Environment

4. Experimental Results

4.1. Experimental Environment

4.2. Immersion in FPV Flights

4.3. Actual Flight Experiences

5. Conclusions and Future Work

Author Contributions

Funding

Conflicts of Interest


| Item | Scale | Polarity (P/N) |
|---|---|---|
| Immersion | | |
| I enjoyed being in this environment. | Emotion | P |
| I was worried that the drone could crash due to collisions in flight. | Emotion | P |
| I liked the graphics and images. | Emotion | P |
| I wanted to stop while doing it. | Emotion | N |
| When I mentioned the experience in the virtual environment, I experienced emotions that I would like to share. | Flow | P |
| After completing the flight, the time passed faster than expected. | Flow | P |
| The sense of moving around inside the environment was compelling. | Flow | P |
| I felt physically fit in the environment. | Immersion | P |
| I felt like I was boarding a drone in this environment. | Immersion | P |
| I needed time to immerse myself in the flight. | Immersion | N |
| Personally, I would say this environment is confusing. | Judgement | N |
| I felt that this environment was original. | Judgement | P |
| I want to fly again in this environment. | Judgement | P |
| My interactions with the virtual environment appeared natural. | Presence | P |
| I was able to examine objects closely. | Presence | P |
| I felt a sense of depth when the obstacles got closer. | Presence | P |
| I felt a sense of realness in the configured environment. | Presence | P |
| I thought that the inconsistency in this environment was high. | Presence | N |
| Simulator Sickness | | |
| I suffered from fatigue during my interaction with this environment. | Experience consequence | N |
| I suffered from headache during my interaction with this environment. | Experience consequence | N |
| I suffered from eyestrain during my interaction with this environment. | Experience consequence | N |
| I felt an increase in sweat during my interaction with this environment. | Experience consequence | N |
| I suffered from nausea during my interaction with this environment. | Experience consequence | N |
| I suffered from vertigo during my interaction with this environment. | Experience consequence | N |
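
The questionnaire marks each item as positively (P) or negatively (N) worded. One common way to aggregate such items is to reverse-code the N items and then average the responses within each scale. This sketch shows that approach; it is not the authors' documented scoring procedure, and the 7-point Likert range (1 to 7) is an assumption.

```python
from collections import defaultdict
from typing import Dict, List, Tuple

# One questionnaire item: (scale name, polarity "P" or "N").
Item = Tuple[str, str]


def reverse_code(score: int, low: int = 1, high: int = 7) -> int:
    """Flip a Likert response so that a higher value always means a more favourable answer."""
    return low + high - score


def scale_means(items: List[Item], responses: List[int],
                low: int = 1, high: int = 7) -> Dict[str, float]:
    """Mean score per scale after reverse-coding negatively worded (N) items."""
    if len(items) != len(responses):
        raise ValueError("exactly one response per item is required")
    totals: Dict[str, float] = defaultdict(float)
    counts: Dict[str, int] = defaultdict(int)
    for (scale, polarity), score in zip(items, responses):
        if polarity not in ("P", "N"):
            raise ValueError(f"unknown polarity {polarity!r}")
        if not low <= score <= high:
            raise ValueError(f"response {score} outside {low}..{high}")
        value = reverse_code(score, low, high) if polarity == "N" else score
        totals[scale] += value
        counts[scale] += 1
    return {scale: totals[scale] / counts[scale] for scale in totals}
```

For instance, answers of 6 to "I enjoyed being in this environment." (Emotion, P) and 2 to "I wanted to stop while doing it." (Emotion, N) both count as 6 after reverse-coding. Every Simulator Sickness item is N, so reverse-coding them makes a higher score mean less discomfort. If raw symptom severity is the quantity of interest, those items can be averaged without reverse-coding instead.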

| FPV MR Drone: Comparison with Real FPV Flight | |
|---|---|
| Positive | |
| Negative | |

| FPV MR Drone: Comparison with Virtual FPV Flight | |
|---|---|
| Positive | |
| Negative | |

© 2020 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).


Kim, D.-H.; Go, Y.-G.; Choi, S.-M. An Aerial Mixed-Reality Environment for First-Person-View Drone Flying. Appl. Sci. 2020, 10, 5436. https://doi.org/10.3390/app10165436
