Abstract

This paper puts forward a new adaptive observer scheme for the joint estimation of states and multiple parameters of nonlinear dynamic systems. The adaptive observer uses a chaotic differential evolution algorithm to improve the global optimality of the estimate in the presence of multiple parameters and system nonlinearity. A sliding time window is used to realize real-time estimation. Simulation results show the effectiveness of the adaptive observer.

1. Introduction

Parameter and state estimation has played a significant role in system identification, controller design, signal filtering and fault diagnosis for decades [1]. Accurate estimation of states and parameters is essential for effective monitoring, control and identification in many fields, such as chemical, biological and mechanical engineering [2,3,4,5,6,7,8]. However, most system parameters and state variables are difficult to measure and have to be inferred from the available online data and from the models relating the measurements. Further, unmeasured model parameters may slowly drift from their nominal values over time. Thus, it becomes necessary to track the changing parameters and unmeasured states and to use them to improve the performance of monitoring, control and real-time fault diagnosis [9].

The typical state-parameter estimation algorithms include recursive methods and iterative methods [1]. Estimation and identification problems are equivalent to the state and parameter estimation problems, respectively. The two problems have conventionally been viewed as separate [10]. To address them, one conventional strategy is a two-step process: first identify the process parameters in an offline setting, and then use the identified model for real-time state estimation. A second strategy is to employ dual filters [11], where one filter is used for state estimation and another for parameter estimation. In dual filters, estimation and identification are achieved through iterations between the two filters. A third strategy is to design an adaptive observer that augments the state space with the parameters for joint estimation [12]. This formulates the estimation and identification problems simultaneously under a single observer framework. Usually the problem of joint state-parameter estimation is solved by recursive algorithms known as adaptive observers [10]. Adaptive observers were originally developed for continuous systems, and the estimates are guaranteed to converge asymptotically to the true values only if the process is run long enough. If the parameters change faster than the convergence rate, it is difficult for the estimates to converge within a shorter time. Observation under time constraints (non-asymptotic observation) has therefore become a more practical problem for both control theory and practice [13]. For the estimation of time-varying parameters in nonlinear systems, it is usually very difficult to analyze the convergence and stability of the observer [14]. Similarly, it is difficult to adjust the gain matrix of the adaptive observer to obtain satisfactory convergence performance, whether it is stable or time-varying [12].

Adaptive observers can be widely used to solve the state estimation problem of nonlinear systems. However, existing methods generally perform state estimation under the assumption that the initial state is known and accurate. In actual industrial processes, the initial state is generally unknown or biased, and an improper initial value of the state can lead to inaccurate state estimates. In this paper, the problem of initial state uncertainty in the state estimation of nonlinear systems is considered.

Although a great deal of knowledge has been accumulated in the literature about adaptive observer design for nonlinear systems, a customary approach is to linearize the nonlinear model around the current state estimate and then to apply linear systems techniques to the state estimation of the nonlinear system. However, this strategy is only effective if linearization does not result in a large mismatch between the linear model and the nonlinear behavior. In order to improve the estimation performance, it is necessary to constrain the nonlinear system when designing the observer. As a result, the analysis and design process is often very complex. To overcome this problem, Porter and Passino proposed a genetic adaptive observer [15]. In that work, a genetic algorithm (GA) was applied to estimate the state vector of a possibly nonlinear system. They showed how to construct the gain matrix of such an observer by using GA-based real-time evolution to minimize the output error. Although the design procedure is relatively simple, the authors did not provide the convergence conditions of the observer and did not consider robustness with respect to model uncertainty. The convergence condition of the observer was proposed by Witczak et al. [16]. In particular, the authors showed a technique for increasing its convergence rate with genetic programming. In Reference [17], the authors presented a robust observer design method by which the optimal selection of observer operating points can be realized in the presence of measurement noise and inaccurate knowledge of the mathematical model parameters. In Reference [18], a multiple model adaptive nonlinear observer was proposed and all observer parameters were optimized using particle swarm optimization.

This research shows that intelligent optimization algorithms perform very well on multivariable systems. However, the above studies mainly focus on optimizing the gain matrix of the traditional adaptive observer. In Reference [19], the authors utilized the search ability of the GA to determine unobtainable state variables in the control system and constructed an adaptive GA observer to estimate a parameter. However, that study does not involve robust estimation of multi-dimensional time-varying parameters. In Reference [20], a neural network-based adaptive observer is designed to estimate the states of a de-icing robot, but the initial state deviation is not considered.

When the input and output of the system are known, identifying the parameters and states can be treated as solving an inverse optimization problem. In general, the optimization task is complicated by the existence of nonlinear objective functions with multiple local minima [21]. For a dynamic nonlinear system, accurate estimation of multidimensional states and parameters is a complex optimization problem. The differential evolution (DE) algorithm emerged as a very competitive form of evolutionary computing more than a decade ago [21]. It is a computational strategy that uses mechanisms inspired by biological evolution, such as reproduction, mutation and recombination. Therefore, DE has some intelligent characteristics, including self-organizing, self-adaptive and self-learning features, and it is often used to tackle complex optimization problems. In this paper, a class of nonlinear systems with uncertain time-varying parameters and an uncertain initial state is studied. DE is introduced for dynamic state and parameter estimation. The method is applied to a three-tank system, and simulation results are presented to demonstrate its effectiveness and feasibility.

The main contributions are as follows. The unmeasurable parameters and states of the nonlinear system are estimated by a novel adaptive observer that uses an augmented state vector. The observer is built on an intelligent optimization algorithm: parameter estimation and state tracking are treated as an optimization problem, and the fitness function is constructed by maximizing the correlation coefficient or minimizing the Euclidean distance between the real output and the estimated output. Through the iterative optimization of the DE algorithm, the initial values of the state and parameters of the dynamic system can be estimated correctly, so that the output trajectory of the system and the unknown states can be reconstructed. In order to avoid the accumulation of estimation errors, the initial condition is corrected in each time window, which gives the observer a certain robustness.

This paper is organized as follows. The considered problem is formulated in Section 2. Section 3 derives the parameter identification model of the system. Section 4 presents an adaptive observer based on chaotic DE for parameter and state estimation. Section 5 provides an example to illustrate the performance of the proposed observer. Finally, concluding remarks are made in Section 6.

2. Problem Statement

Let us consider a nonlinear parameter varying system:

where , , and denote the system state, parameter, input and output vectors, respectively; is independent and identically distributed Gaussian noise with covariance matrix Q; is an unknown time-varying parameter; and is a known nonlinear function with appropriate dimensions.

Assumption 1.

The function is globally Lipschitz in x and u.

Assumption 2.

The uncertain time varying parameter satisfy

where is the known nominal parameter value and Γ is a known constant vector. Here, the nonlinear system S is assumed to be linear in the parameters. If the nominal value of the process parameter is known, the nominal model of the system is

The goal of this paper is twofold:

- Design a dynamic observer that estimates the state and parameters of the noiseless system (1) in a finite time or in a fixed time, under the assumption that the initial state is unknown.

- The observer must be robust (in an input-to-state sense) with respect to measurement noise .

3. Simultaneous Estimation Scheme for Parameter and State

3.1. Observability Of System

Is a reliable observer available for the system (1) based on the past values of and ? This problem leads to the concept of observability [22,23,24,25].

In the system (1), inputs are also called the excitation of the system. Admissible inputs are assumed to be taken from some set of measurable and bounded functions . Let denote the system solution at time t, with initial condition at time , and control .

Definition 1.

Indistinguishability

A pair (, ) will be said to be indistinguishable by u if , . The pair is just said to be indistinguishable, if it is so for any u.

Definition 2.

Observability

The system (1) is observable if it does not have any indistinguishable pair of states [26]. Observability is a necessary condition for an observer to exist. If a system is not observable, one cannot obtain a reliable estimate of the initial state . Conversely, given the initial state , the system solution under the input can be estimated, which is what makes an observer available.

The observability defined here does not exclude the possible existence of inputs for which some states are indistinguishable. In other words, this observability depends on the excitation and is not a uniform observability; it is not sufficient for designing a reliable observer unless the action of the inputs is taken into account. Observability that is independent of the input, that is, uniform observability, requires strong conditions on the system structure. In this paper, however, we assume that the considered systems are observable under their normal working excitation, and that observability under the normal working excitation ensures the availability of the observer. We will not further discuss the observability of nonlinear systems, for which the interested reader is referred to Reference [27].

3.2. Observer Structure Based on Optimization Theory

The design of an observer presupposes that the observability condition is fulfilled, so that the unmeasured states can be inferred (or estimated) from the available measured outputs. In this paper, it is assumed that the system (1) is observable under its normal working excitation and that the system parameters vary slowly with time.

In order to estimate the states and unknown parameters, one approach is to treat the parameters as extended state variables with zero dynamics [3] within a time window T, that is,

The estimated state of observer (5) is given by the following integral with initial state .

If is determined in a unique continuous system, then can be calculated immediately according to (7). As a result, estimating the initial values of the state allows us to estimate the current values of the unobtained state variables. However, in practical engineering applications, the integral of is difficult to solve accurately. Therefore, an optimization method is used to obtain an approximate solution of the multi-dimensional state estimation problem. Before we construct the optimization objective function, the generalized vector deviation is defined as follows.

Definition 3.

Generalized Vector Deviation Let be a non-empty vector set, for any elements , if the real value of d(X,Y) satisfies:

- (i)

- , iff ;

- (ii)

- ; then is a generalized vector deviation on .

The formulation chosen for the generalized vector deviation of the sample vectors should have a clear physical meaning in engineering terms, so that its value reflects how close the vectors are in an engineering sense and, in turn, how close the pre-estimate initial state is to the actual initial state .

In order to achieve the optimal state estimation, in this work an objective function describing the closeness of the observer output vector to the real output vector Y is designed, which satisfies:

where is the generalized vector deviation, as defined in Definition 3, between the vector and the vector Y, and means .

Let vector , vector ; then the objective function is:

The constraint set for the optimization problem (9) is a polyhedron constructed from the ranges of the parameters and states.

Consequently, the following adaptive observer based on constrained optimization is obtained:

where denotes the optimization calculation carried out by the optimization algorithm.
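As a rough illustration of the scheme above, the following Python sketch treats an unknown initial state and a constant parameter as one augmented vector, simulates a model over a time window, and scores each candidate by the Euclidean deviation between simulated and measured outputs. The toy scalar system, the forward-Euler integrator, the random search used in place of the optimizer, and all names (`simulate_output`, `deviation`) are assumptions made for this example and are not taken from the paper.

```python
import numpy as np

def simulate_output(z0, u, dt):
    """Forward-Euler simulation of a toy augmented system.

    z0 = [x0, theta]: initial state and a parameter with zero dynamics.
    Hypothetical model: dx/dt = -theta * x + u, dtheta/dt = 0, y = x.
    """
    x, theta = float(z0[0]), float(z0[1])
    y = np.empty(len(u))
    for k, uk in enumerate(u):
        y[k] = x                          # output sample at step k
        x = x + dt * (-theta * x + uk)    # state update; theta is constant
    return y

def deviation(z0, u, y_meas, dt):
    """Euclidean deviation between simulated and measured output samples."""
    return float(np.linalg.norm(simulate_output(z0, u, dt) - y_meas))

# Synthetic measurements generated from a "true" initial state and parameter.
dt = 0.01
u = np.ones(200)
z_true = np.array([3.0, 0.8])
y_meas = simulate_output(z_true, u, dt)

# Box constraints on [x0, theta], playing the role of the constraint set.
lower = np.array([0.0, 0.1])
upper = np.array([5.0, 2.0])

# A crude random search stands in for the optimization algorithm here.
rng = np.random.default_rng(0)
candidates = rng.uniform(lower, upper, size=(500, 2))
costs = np.array([deviation(c, u, y_meas, dt) for c in candidates])
z_best = candidates[np.argmin(costs)]
```

The candidate with the smallest deviation, `z_best`, serves as the estimate of the augmented initial state; re-running `simulate_output(z_best, u, dt)` then reconstructs the trajectory over the window.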

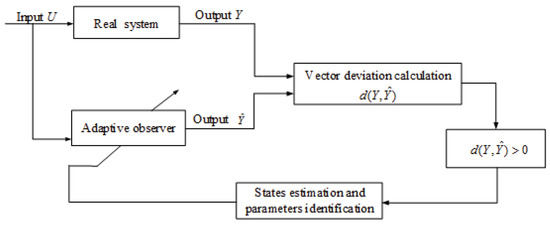

To summarize, the estimation scheme based on the adaptive observer using optimization is shown in Figure 1.

Figure 1.

Dynamic observer structure block diagram.

4. Adaptive Observer Based on Chaotic DE

4.1. Chaotic DE Algorithm

DE is an efficient global optimization algorithm in non-continuous, non-convex, highly nonlinear, noisy, time-dependent or flat solution spaces. It is a population based stochastic optimization algorithm. DE has become popular due to its great convergence characteristics with few control parameters, lower computational complexity and strong global optimization ability.

The basic idea of the algorithm is as follows. Starting from a randomly generated initial population, a new individual is generated by adding the vector difference of any two individuals in the population to a third individual, and the new individual is then compared with the corresponding individual in the current population. If the new individual is fitter than the current individual, it replaces the old one in the next generation; otherwise the old individual is preserved. Through continuous evolution, the population keeps the good individuals and eliminates the bad ones, and the collective behavior of the population guides the search toward the best solution.
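The generation step described above can be sketched in Python as follows. This is a minimal illustration of the common DE/rand/1/bin variant written for minimization; the binomial crossover, the function name `de_generation` and the sphere test function are assumptions of this sketch, and the default values of `F` and `CR` simply reuse the 0.7 settings reported in the simulation section.

```python
import numpy as np

def de_generation(pop, cost, objective, F=0.7, CR=0.7, rng=None):
    """One generation of DE/rand/1/bin with greedy selection (minimization)."""
    rng = np.random.default_rng() if rng is None else rng
    n_pop, dim = pop.shape
    new_pop, new_cost = pop.copy(), cost.copy()
    for i in range(n_pop):
        # Pick three distinct individuals, all different from individual i.
        others = [j for j in range(n_pop) if j != i]
        r1, r2, r3 = rng.choice(others, size=3, replace=False)
        # Mutation: a third individual plus the scaled difference of two others.
        mutant = pop[r1] + F * (pop[r2] - pop[r3])
        # Binomial crossover; force at least one component from the mutant.
        mask = rng.random(dim) < CR
        mask[rng.integers(dim)] = True
        trial = np.where(mask, mutant, pop[i])
        # Greedy selection: the trial replaces the parent only if not worse.
        trial_cost = objective(trial)
        if trial_cost <= cost[i]:
            new_pop[i], new_cost[i] = trial, trial_cost
    return new_pop, new_cost

# Usage on a toy objective (sum of squares), tracking the best cost.
rng = np.random.default_rng(1)
sphere = lambda v: float(np.sum(v ** 2))
pop = rng.uniform(-5.0, 5.0, size=(25, 3))
cost = np.array([sphere(p) for p in pop])
history = [cost.min()]
for _ in range(85):
    pop, cost = de_generation(pop, cost, sphere, rng=rng)
    history.append(cost.min())
```

Because a parent is only ever replaced by a trial that is at least as good, the best cost recorded in `history` cannot increase from one generation to the next.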

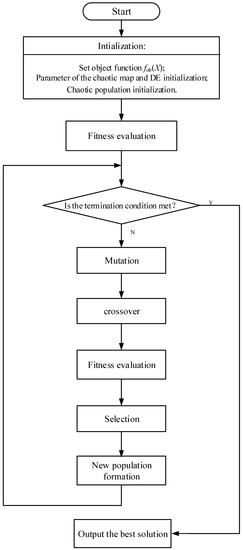

Despite all its advantages, the performance of DE can degrade on complex optimization problems, such as the estimation of dynamic system states and parameters considered in this article. A common disadvantage of evolutionary algorithms is the loss of diversity as the search proceeds, which results in population stagnation or premature convergence. Therefore, a chaotic strategy is needed to maintain the diversity of the population. Chaotic systems are characterized by random-like, ergodic and non-periodic behavior. Using a chaotic sequence instead of a random sequence in DE is powerful and efficient, and it can improve the performance of DE by preventing premature convergence to a local minimum [28]. A variety of chaotic maps are available for improving the performance of intelligent optimization algorithms; interested readers may refer to References [28,29]. In order to enhance global convergence and to improve the accuracy of state estimation, the Cubic chaotic sequence is used in place of random numbers to generate the initial population of basic DE. The flow chart of the algorithm is shown in Figure 2.

Figure 2.

Flow chart of the chaotic differential evolution (DE) algorithm.
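A chaotic initial population could be generated along the following lines. The source does not state which variant of the Cubic map it uses, so this sketch assumes the commonly cited form x(n+1) = 4x(n)^3 - 3x(n) on [-1, 1]; the function name, the seed `x0`, the per-dimension seed offset and the burn-in length are likewise assumptions of the example.

```python
import numpy as np

def cubic_chaotic_population(n_pop, lower, upper, x0=0.3, burn_in=100):
    """Initial population from a cubic chaotic sequence, scaled to the bounds.

    Assumed map: x_{n+1} = 4 x_n^3 - 3 x_n on [-1, 1].
    """
    lower = np.asarray(lower, dtype=float)
    upper = np.asarray(upper, dtype=float)
    dim = lower.size
    # Slightly different seeds per dimension so the columns are not identical.
    x = x0 + 0.01 * np.arange(dim)

    def step(v):
        # Clip guards against rounding pushing a value just outside [-1, 1].
        return np.clip(4.0 * v ** 3 - 3.0 * v, -1.0, 1.0)

    for _ in range(burn_in):          # discard the transient
        x = step(x)
    pop = np.empty((n_pop, dim))
    for i in range(n_pop):
        x = step(x)
        # Map [-1, 1] linearly onto [lower, upper] in each dimension.
        pop[i] = lower + 0.5 * (x + 1.0) * (upper - lower)
    return pop

# Usage: 25 individuals in a two-dimensional search box.
init_pop = cubic_chaotic_population(25, lower=[0.0, 0.1], upper=[5.0, 2.0])
```

Seeds equal to 0 or ±1 are fixed points of this map and should be avoided, since the sequence would then never move.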

4.2. Fitness Judgement and Convergence Analysis

In studying genetic and evolutionary phenomena in nature, biologists use the term fitness to measure the extent to which a species is adapted to its living environment. Species that are better adapted to the environment have more reproductive opportunities, whereas species that are poorly adapted have fewer and may even become extinct. The fitness of an individual is a measure of how well that individual survives within the population, and it is used to distinguish good individuals from bad ones. Individuals with higher fitness are more likely to be passed on to the next generation, and individuals with lower fitness are less likely to be. Evolutionary algorithms likewise use the concept of fitness to measure how well an individual achieves, or helps to find, the optimal solution of the optimization problem. The function that measures the fitness of an individual is called the fitness function, also known as the evaluation function; it determines the fitness of an individual mainly from that individual's characteristics.

The fitness function needs to be designed properly, otherwise the algorithm is prone to premature convergence and to falling into a local optimum. The evolutionary search of the DE algorithm is mainly based on the fitness function and makes little use of external information. Therefore, the choice of fitness function directly affects the convergence performance. Evaluating the fitness function is also the main contributor to the computational cost of the DE algorithm, so the fitness function should be as simple as possible to minimize the computation time.

The fitness function can be directly transformed by the objective function , and its design requirements are as follows:

- (1)

- Single value, continuous, non-negative and maximized;

- (2)

- Reasonable and consistent;

- (3)

- Small amount of calculation;

- (4)

- Strong versatility.

According to the above requirements, we define the fitness function

and a searching vector

where elements are in accordance with initial values of the unobtained state variables and unknown parameters, k is the dimension of the estimator system, and Np is the number of individuals in the population. The greater the fitness, the closer the observer output vector is to the real system output vector .

Theorem 1.

Convergence of chaotic DE based State Estimate

Chaotic DE based state estimate guarantees global convergence of pre-estimated value , to with the iteration times g.

Proof .

(i) converging to implies converging to ;

It is obvious that if the system is observable through the output . According to the definition of system observability, corresponds one-to-one to the output trajectory , corresponds one-to-one to the output trajectory , so if the sampling points of the output sample are enough, will correspond to the sample vector one-to-one, and will correspond to the sample vector one-to-one. Therefore converging to implies converging to .

(ii) The greedy selection mechanism of the chaotic DE algorithm guarantees global convergence of sample vector to with the iteration times g.

According to greedy selection mechanism, if

then

so

otherwise

so

That is to say, if

then

otherwise

Therefore:

□

According to (7), , iff ; therefore can be regarded as a Lyapunov function of the generalized vector deviation of the sample vectors with respect to the iteration count g. In inequality (11), it should be noted that the DE iteration is not a derivative-driven process and that, owing to the global ergodicity and the greedy selection mechanism of the chaotic DE algorithm, the iterative process does not stop when equality holds unless the optimal point has been reached. That is, equality is permanent only at the global optimal point , whereas at any other point , equality is temporary. Therefore the generalized vector deviation of the sample vectors is stable and converges to its minimum value as g increases, and converges to the optimal value as g increases.

On the other hand, the iterative process of the chaotic DE algorithm has global ergodicity, so the global optimal value always has the chance to become the pre-estimated value of , and becomes sample vector , in an iteration. Once the global optimal point value is selected as the pre-estimated state initial value, the greedy selection mechanism will irreversibly select it as the optimal result, and since there is no better point to replace it, the optimal selection result will remain at this global optimal point and will never change.

The proof is completed. There are many ways to calculate the generalized vector deviation of the sample vector, among which the commonly used ones are the Euclidean distance and the correlation coefficient.

4.2.1. Euclidean Distance Based State Estimate

Let generalized vector deviation of the sample vector be:

It satisfies the definition (3) of generalized vector deviation.

The fitness function is selected as:

Then:

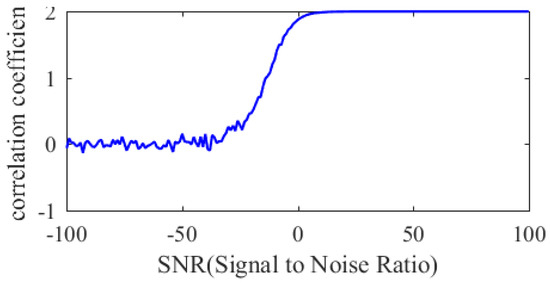

4.2.2. Correlation Coefficient Based State Estimate

The correlation coefficient between the two variable samples can reflect the direction and the waveform similarity degrees of the two variables. As a statistical method, correlation analysis has been applied in evaluating the strength of the relationship between two quantitative variables with available statistical data. This technique is strictly connected to the linear regression analysis that is a statistical approach for modeling the association between a dependent variable and one or more explanatory or independent variables. Here, the Pearson correlation coefficient is chosen to extract feature similarity between the sample data.

Let generalized vector deviation of the sample vector be:

where: N is the dimensions of the output variable .

When , . Therefore, (14) satisfies the definition (3) of generalized vector deviation.

The fitness function

is selected. At this point, the fitness of an individual is the correlation coefficient. The greater the correlation coefficient, the greater the fitness value and the more likely the individual is to be selected. It can be verified that the generalized vector deviation (14) of the sample vector satisfies:

By combining the greedy selection, it is known that , that is, the generalized vector deviation of the sample vector (14) is a Lyapunov function of itself. Under the DE algorithm, the generalized vector deviation (14) will converge to 0; according to Definition 3, will converge to with g, and () will converge to with g.
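The two fitness choices discussed in Sections 4.2.1 and 4.2.2 can be sketched as follows. The exact formulas of the source are not reproduced here; this sketch assumes the negative Euclidean norm of the output error for the first, and the plain Pearson correlation coefficient between output samples for the second. The function names are hypothetical.

```python
import numpy as np

def fitness_euclidean(y_hat, y):
    """Negative Euclidean distance: 0 is best, more negative is worse."""
    y_hat, y = np.asarray(y_hat, dtype=float), np.asarray(y, dtype=float)
    return -float(np.linalg.norm(y_hat - y))

def fitness_correlation(y_hat, y):
    """Pearson correlation coefficient between the two sample vectors."""
    y_hat, y = np.asarray(y_hat, dtype=float), np.asarray(y, dtype=float)
    a, b = y_hat - y_hat.mean(), y - y.mean()
    denom = np.sqrt(np.sum(a ** 2) * np.sum(b ** 2))
    # A constant vector has no defined correlation; return 0 in that case.
    return float(np.sum(a * b) / denom) if denom > 0 else 0.0

# Usage: compare a perfect match with a scaled and shifted copy.
y = np.sin(np.linspace(0.0, 6.0, 100))
perfect = (fitness_euclidean(y, y), fitness_correlation(y, y))
shifted = (fitness_euclidean(2.0 * y + 1.0, y), fitness_correlation(2.0 * y + 1.0, y))
```

One property worth keeping in mind when choosing between them: the Pearson coefficient is unchanged by an offset or a positive scaling of either vector, so an estimated output that matches the waveform but not the level still attains a correlation of 1, while its Euclidean fitness is strictly negative.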

4.3. Structure Of Observer

The mathematical model of the real process is used as the observer model, and the observer model receives the control signal of the real process as its own control input. A time window from to is used as the time domain of the DE optimization calculation.

In order to reduce the computation, the measurable state variables are not estimated; their measurements are used directly in the observer model. The observer structure is the same as in Figure 1.

4.4. The Process of State Estimation

4.4.1. Initialization Of The Observer

The start time of the time window is taken as the initial time of both the real process and the observer; consequently, the state of the real process at this time and the pre-estimate state of the observer at this time are taken as their initial states.

The trajectory of the measurable output of the real process from to is sampled with a fixed sampling period to obtain the output sample . The m trajectories of the observer model output from to with the pre-estimate initial state are also sampled to obtain the output samples . The sample and the samples are used in the DE based state estimate.

4.4.2. Real Time Tracking Estimation of the Observer State

In order to compensate for the influence of external disturbances and of changes in the system model on the state estimate, the state estimate needs to track the system state online in real time.

After the state estimate of the last time window is completed, a new time window with the same length as the previous one is created. The start time and the terminal time of the new time window are re-denoted by and , and all the variables use the same notations as in the previous time window. The DE based state estimate procedure that was executed in the previous time window is repeated.

The state estimate at the start of the new time window, computed from the estimated initial state of the previous time window, is retained as a pre-estimate initial state , that is, as one individual of the initial population in the state estimate calculation of the new time window, so that the result of the previous window is inherited and the search for the optimal point is accelerated. However, in order to track changes in real time, the remaining pre-estimate initial states are reset according to the rule of the chaotic DE algorithm. If the state estimate of the previous time window is still globally optimal, it will be retained in the new time window; otherwise it will be replaced by the new optimal estimate.

After the task of the current time window is finished, another new time window is created and the procedure of the previous time window is repeated. The observer operates repeatedly in this way. The new time window may partially overlap, not overlap, or even not be contiguous with the previous time window, depending on how often the estimate needs to be updated. Generally, the number m of individuals in the initial population of the later time windows is smaller than that of the first time window.
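The window-by-window procedure described above can be sketched as follows. To keep the example short, a greedy random-perturbation search stands in for the chaotic DE step, and uniform random numbers stand in for the chaotic reset of the remaining individuals; the function names, the `propagate` callback and the population sizes are assumptions of this sketch rather than the paper's settings.

```python
import numpy as np

def estimate_window(cost, init_pop, n_iter, rng, lower, upper):
    """Placeholder optimizer (greedy random perturbation), standing in for DE."""
    pop = init_pop.copy()
    costs = np.array([cost(p) for p in pop])
    for _ in range(n_iter):
        trial = np.clip(pop + rng.normal(scale=0.1, size=pop.shape), lower, upper)
        trial_costs = np.array([cost(t) for t in trial])
        better = trial_costs <= costs          # greedy selection per individual
        pop[better], costs[better] = trial[better], trial_costs[better]
    return pop[np.argmin(costs)]

def sliding_window_estimates(window_costs, propagate, lower, upper,
                             n_first=25, n_later=10, n_iter=50, seed=0):
    """Run one estimation per window, inheriting the previous window's result."""
    rng = np.random.default_rng(seed)
    lower = np.asarray(lower, dtype=float)
    upper = np.asarray(upper, dtype=float)
    estimates, inherited = [], None
    for cost in window_costs:
        # Later windows use a smaller population than the first one.
        n_pop = n_first if inherited is None else n_later
        pop = rng.uniform(lower, upper, size=(n_pop, lower.size))
        if inherited is not None:
            pop[0] = inherited                 # one inherited individual
        best = estimate_window(cost, pop, n_iter, rng, lower, upper)
        estimates.append(best)
        inherited = propagate(best)            # predicted start of next window
    return estimates

# Usage: three windows whose optimum drifts slowly; identity propagation.
targets = [np.array([1.0, 0.5]), np.array([1.1, 0.5]), np.array([1.2, 0.6])]
window_costs = [lambda z, t=t: float(np.linalg.norm(z - t)) for t in targets]
est = sliding_window_estimates(window_costs, lambda z: z, [0.0, 0.0], [2.0, 2.0])
```

In a real observer, `propagate` would integrate the model from the estimated initial state to the start of the next window, and each entry of `window_costs` would compare simulated and measured outputs over that window.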

The purpose of the adaptive observer is to realize the joint estimation of states and parameters and to detect changes in the system parameters. The system parameters to be estimated are treated as extended state variables with zero dynamics as in (4), so the adaptive observer is equivalent to a nonlinear state observer. When the system parameters are treated as extended state variables, their values are the initial values of the extended state variables as long as they remain unchanged.

The dimension of the state directly affects the computational load of the estimation. Therefore, the dimension of the state to be estimated should be minimized; as mentioned previously, measurable state variables should not be included in the estimation. Among the system parameters treated as extended state variables, only unknown parameters and parameters that may be subject to change are considered, while the other parameters are treated as part of the system model and are not included in the estimation. Unknown parameters that are not subject to change should be included in the estimation only in the first time window, because once they have been estimated in the first time window their values are known.

5. Simulation Experiment

5.1. The Experiment Process

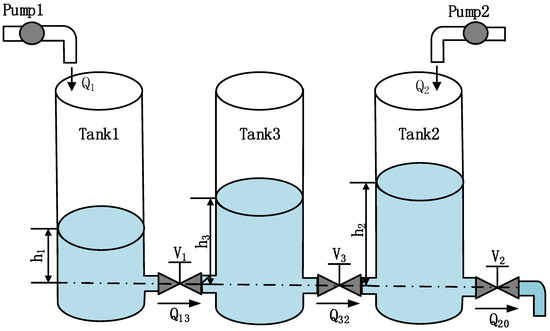

To illustrate the effectiveness of the proposed methodology, this section presents a simulation experiment on a three-tank system (TTS). In the literature, the TTS has been widely used as a benchmark for problems such as system control, identification and fault diagnosis [30,31,32,33]. It is a multi-input, multi-output (MIMO) plant. Most available control techniques have been applied to this benchmark, which allows their performance to be compared. It has rich nonlinear dynamics, high complexity and strong coupling. The TTS is shown in Figure 3.

Figure 3.

Three tanks system.

Consider the three-tank system described in Reference [19]. It is described by a state-space equation with

where A is the cross section area of tank (); An is the cross section area of pipe (); , are incoming mass flow ();, i = 1, 2, 3, are coefficient of flow for valves; i = 1, 2, 3, are the water level (cm) of each tank and can not be measured but can be estimated. The state vector X, input U and output vector Y will be

.

The system parameters are , , .

It is necessary to monitor the valves because their opening has a great influence on the dynamic characteristics of the system. Then the parametric model of the three tank system can be defined as follows:

where p denotes the opening of valve, , , .
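For readers who want to experiment, a three-tank model of this kind could be coded roughly as below. This sketch follows the widely used benchmark form with Torricelli-type flows through the connecting pipes and may differ from the paper's equations in tank numbering and pipe layout. The 1-3-2 series arrangement, the interpretation of `p` as flow coefficients scaled by valve opening, and every numeric value (`A`, `An`, `q`, `p`) are placeholders assumed for the example, not the paper's data.

```python
import numpy as np

G = 981.0  # gravitational acceleration in cm/s^2

def three_tank_rhs(h, q, p, A=154.0, An=0.5):
    """Level derivatives (cm/s) for tanks 1, 2, 3 in an assumed 1-3-2 layout.

    h: levels [h1, h2, h3] in cm; q: inflows to tanks 1 and 2 in cm^3/s;
    p: flow coefficients for pipe 1-3, pipe 3-2 and the outlet of tank 2.
    A, An: tank and pipe cross sections in cm^2 (placeholder values).
    """
    h1, h2, h3 = h

    def flow(coef, dh):
        # Signed Torricelli flow driven by the level difference dh.
        return coef * An * np.sign(dh) * np.sqrt(2.0 * G * abs(dh))

    q13 = flow(p[0], h1 - h3)
    q32 = flow(p[1], h3 - h2)
    q20 = flow(p[2], h2)
    return np.array([q[0] - q13, q[1] + q32 - q20, q13 - q32]) / A

# Usage: forward-Euler simulation from empty tanks (placeholder inputs).
q = np.array([30.0, 30.0])
p = np.array([0.5, 0.5, 0.6])
h = np.zeros(3)
for _ in range(2000):
    h = h + 1.0 * three_tank_rhs(h, q, p)   # time step of 1 s
```

Treating the entries of `p` as unknown constants and appending them to the level vector gives the augmented state that the observer searches over.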

It is assumed that the liquid levels and are measurable and that the parameters used to represent the valve opening and are unknown. Our aim here is to estimate the parameters p and state by using the adaptive observer based on chaotic DE. In order to examine the effect of the fitness function on the performance of the proposed observer, two fitness functions are used with the adaptive observer: one based on the negative Euclidean distance and the other based on the correlation coefficient.

The first fitness function based on the residual norm is as follows

The second fitness function based on correlation coefficient is as follows

The constraint conditions of the above fitness functions are as follows

The initial states of the real system are . The initial states of the adaptive observer . . The initial values of the parameters of the real system are equal to those of the adaptive observer, namely . The time window is T = 1000 s. The parameters of DE are as follows: Gm = 85, Np = 25, F0 = 0.7, CR = 0.7, where Gm, Np, F0 and CR denote the number of iterations, the population size, the contraction factor and the crossover probability of DE, respectively.

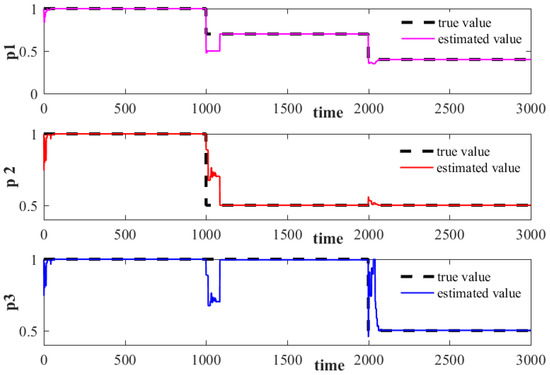

5.2. Results

5.2.1. Output Noiseless Case

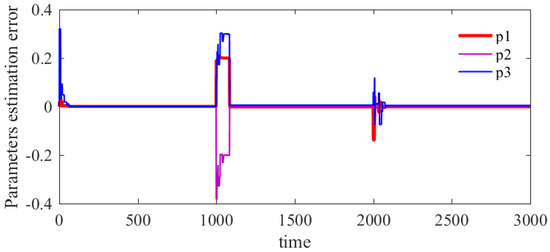

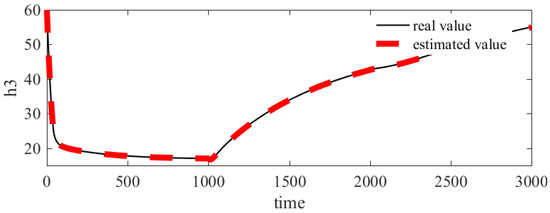

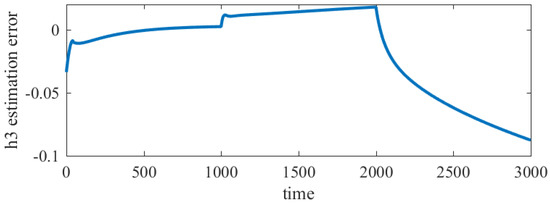

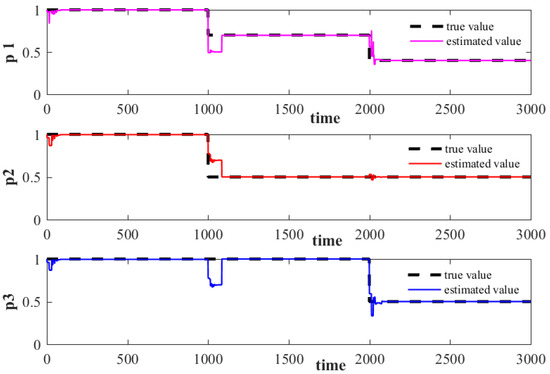

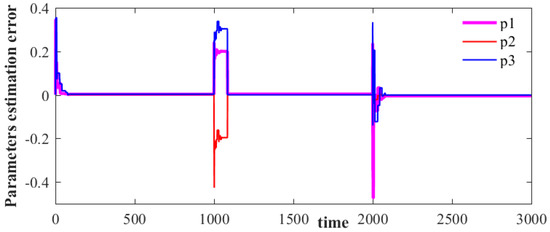

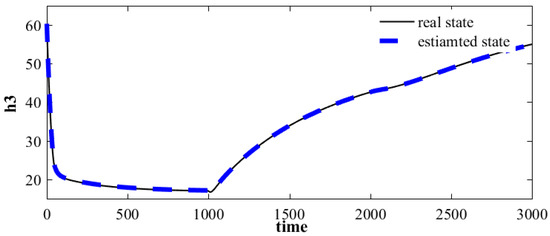

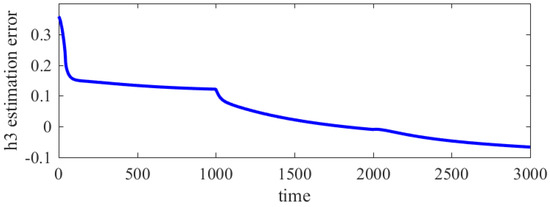

In order to verify the effectiveness of the proposed adaptive observer in the paper, the noise-free case is first examined with the aid of the three tanks system. Figure 4, Figure 5, Figure 6 and Figure 7 show the estimation of state() and parameters() based on Euclidean distance. Figure 8, Figure 9, Figure 10 and Figure 11 present the estimation of state() and parameters() based on correlation coefficient. In the noise-free case, the two fitness functions have similar performance in parameter estimation. In the aspect of state estimation, the method based on Euclidean distance has better performance than that based on correlation coefficient.

Figure 4.

Euclidean distance based parameter estimation (noise free).

Figure 5.

Euclidean distance based parameter estimation error (noise free).

Figure 6.

Euclidean distance based estimation (noise free).

Figure 7.

Euclidean distance based estimation error (noise free).

Figure 8.

Correlation coefficient based parameter estimation (noise free).

Figure 9.

Correlation coefficient based parameter estimation error (noise free).

Figure 10.

Correlation coefficient based estimation (noise free).

Figure 11.

Correlation coefficient based estimation error (noise free).

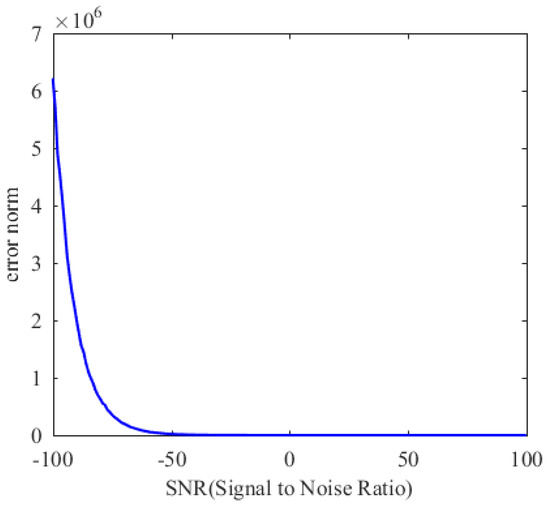

5.2.2. Output Noise Case

Figure 12 and Figure 13 show the relationship of the Euclidean distance and the correlation coefficient, respectively, to the signal-to-noise ratio (SNR). It can be seen from Figure 12 that even if the SNR is small, the Euclidean distance can converge to the optimal value of 0. This indicates that the fitness function based on the Euclidean distance is more robust to output noise. Figure 13 shows that the optimization method based on the correlation coefficient is more affected by noise. Table 1 and Table 2 show the optimization results of the two methods under different SNRs. The values of the estimated parameters and state indicate that the higher the SNR, the better the estimation performance. It can also be seen from Table 1 and Table 2 that, under the same SNR conditions, the optimization results of the method based on the Euclidean distance are better than those of the method based on the correlation coefficient. This is consistent with the results shown in Figure 12 and Figure 13.
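As a rough illustration of how an output-noise experiment at a prescribed SNR can be set up, the sketch below adds zero-mean white Gaussian noise to a clean output sequence. The SNR convention (ratio of mean signal power to noise variance, in dB), the helper names `add_noise` and `empirical_snr_db`, and the test signal are all assumptions made for this example; the paper's own noise model may differ.

```python
import math
import random


def add_noise(signal, snr_db, rng):
    """Return `signal` plus white Gaussian noise at the requested SNR (dB).

    Assumed convention: SNR = mean signal power / noise variance.
    """
    signal_power = sum(v * v for v in signal) / len(signal)
    noise_power = signal_power / (10.0 ** (snr_db / 10.0))
    sigma = math.sqrt(noise_power)
    return [v + rng.gauss(0.0, sigma) for v in signal]


def empirical_snr_db(clean, noisy):
    """SNR actually realised by one noisy sample path, in dB."""
    p_signal = sum(v * v for v in clean) / len(clean)
    p_noise = sum((a - b) ** 2 for a, b in zip(noisy, clean)) / len(clean)
    return 10.0 * math.log10(p_signal / p_noise)


def residual_norm(y_a, y_b):
    """Euclidean distance between two output sequences."""
    return math.sqrt(sum((a - b) ** 2 for a, b in zip(y_a, y_b)))


rng = random.Random(1)
# Hypothetical clean output over a window of 5000 samples.
clean = [1.0 + 0.5 * math.sin(0.01 * k) for k in range(5000)]

noisy_20 = add_noise(clean, 20.0, rng)
snr_check = empirical_snr_db(clean, noisy_20)

# Residual between the true output and its noisy measurement at two SNR levels.
norm_30 = residual_norm(clean, add_noise(clean, 30.0, rng))
norm_10 = residual_norm(clean, add_noise(clean, 10.0, rng))
print(snr_check, norm_30, norm_10)
```

Sweeping `snr_db` and re-running the optimizer on each noisy sequence is one way to produce curves of fitness value against SNR like those discussed above.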

Figure 12.

The relationship between the output Euclidean distance and signal-to-noise ratio (SNR).

Figure 13.

The relationship between the correlation coefficient and signal-to-noise ratio (SNR).

Table 1.

Euclidean distance based state estimation with different SNR in different time windows.

Table 2.

Correlation coefficient based state estimation with different SNR in different time windows.

6. Conclusions

State observers are very important in control theory. In the past, studies on state observers have focused on linear systems. For observer design for nonlinear systems, linear design methods are often applied after linearizing the nonlinear system. When an unknown initial state, output noise and uncertain model parameters are considered, state observation becomes more difficult. To address this challenge, an intelligent adaptive observer based on an intelligent optimization algorithm is proposed in this paper. As a simple and efficient heuristic for global optimization over continuous spaces, chaotic DE is used to construct an adaptive scheme based on dynamic optimization, which allows direct estimation of the states and parameters. When the necessary observability condition is satisfied, the observer can realize robust estimation of the system parameters and states with less measurement information. The simulation demonstrates the effectiveness of the algorithm. In addition, we found that the fitness function plays an important role in the performance of the observer. Two fitness functions are used in this paper, and they show different robustness under the same algorithm control parameters; the simulation confirms this difference in performance. On the whole, the method based on the residual norm (Euclidean distance) performs better than the one based on the correlation coefficient. Therefore, it is necessary to select an appropriate fitness function before designing the proposed observer. Further study of this problem is valuable, as it can improve the performance of the observer. In conclusion, this paper expands the application of DE to practical engineering problems. In the future, it would be interesting to combine more intelligent optimization algorithms with practical applications.

Author Contributions

Methodology, X.Y. and Z.L.; validation, X.Y.; writing—original draft preparation, Z.L. and X.Y.; writing-review and editing, B.D. All authors have read and agreed to the published version of the manuscript.

Funding

This research was funded by the National Natural Science Foundation of China [No. 61963009], the Collaborative Foundation of Guizhou Province [No. 7228(2017)], the Platform Talent Project of Guizhou Province [No. 5788(2017)], the Science and Technology Planning Project of Guizhou Province (No. 2154[2019]) and (No. 2302 [2016]), and the Special Fund Project of the Provincial Governor for Outstanding Science and Technology Education Talents in Guizhou Province, grant number 4[2010].

Conflicts of Interest

The authors declare that they have no conflicts of interest.

References

- Xu, L.; Ding, F.; Gu, Y.; Alsaedi, A.; Hayat, T. A multi-innovation state and parameter estimation algorithm for a state space system with d-step state-delay. Signal Process. 2017, 140, 97–103.

- Ge, X.; Luo, Z.; Ma, Y.; Liu, H.; Zhu, Y. A novel data-driven model based parameter estimation of nonlinear systems. J. Sound Vib. 2019, 453, 188–200.

- Sosnovskiy, L.A.; Sherbakov, S. A Model of Mechanothermodynamic Entropy in Tribology. Entropy 2017, 19, 115.

- Sosnovskiy, L.A.; Sherbakov, S. On the Development of Mechanothermodynamics as a New Branch of Physics. Entropy 2019, 21, 1188.

- Sherbakov, S.S.; Zhuravkov, M.A.; Sosnovskiy, L.A. Contact interaction, volume damageability and multicriterial limiting states of multielement tribo-fatigue systems. In Selected Problems on Experimental Mathematics; Wydawnictwo Politechniki Slaskiej: Gliwice, Poland, 2018.

- Sherbakov, S. Three-dimensional stress-strain state of a pipe with corrosion damage under complex loading. In Tribology-Lubricants and Lubrication; InTech: London, UK, 2011.

- Sherbakov, S.S. Measurement and Real Time Analysis of Local Damage in Wear-and-Fatigue Tests. Devices Methods Meas. 2019, 10, 207–214.

- Sherbakov, S.S.; Zhuravkov, M.A. Interaction of several bodies as applied to solving tribo-fatigue problems. Acta Mech. 2013, 224, 1541–1553.

- Valluru, J.; Patwardhan, S.C.; Biegler, L.T. Development of robust extended Kalman filter and moving window estimator for simultaneous state and parameter/disturbance estimation. J. Process Control 2018, 69, 158–178.

- Zhao, Z.; Tulsyan, A.; Huang, B.; Liu, F. Estimation and identification in batch processes with particle filters. J. Process Control 2019, 81, 1–14.

- Yu, A.; Liu, Y.; Zhu, J.; Dong, Z. An Improved Dual Unscented Kalman Filter for State and Parameter Estimation. Asian J. Control 2016, 18, 1427–1440.

- Li, X.; Zhang, Q.; Su, H. An adaptive observer for joint estimation of states and parameters in both state and output equations. Int. J. Adapt. Control Signal Process. 2011, 25, 831–842.

- Lopez-Ramirez, F.; Polyakov, A.; Efimov, D.; Perruquetti, W. Finite-time and fixed-time observer design: Implicit Lyapunov function approach. Automatica 2018, 87, 52–60.

- Mohamed, M.; Yan, X.G.; Mao, Z.; Jiang, B. Adaptive Observer Design for a Class of Nonlinear Interconnected Systems with Uncertain Time Varying Parameters. IFAC-PapersOnLine 2017, 50, 1421–1426.

- Porter, L.M.; Passino, K.M. Genetic adaptive observers. Eng. Appl. Artif. Intell. 1995, 8, 261–269.

- Witczak, M.; Obuchowicz, A.; Korbicz, J. Genetic programming based approaches to identification and fault diagnosis of non-linear dynamic systems. Int. J. Control 2002, 75, 1012–1031.

- Andrzej, P.; Jelonkiewicz, J. Genetic Algorithm for Observer Parameters Tuning in Sensorless Induction Motor Drive. In Neural Networks and Soft Computing; Physica-Verlag HD: Heidelberg, Germany, 2003.

- Xie, Y.; Lin, X.; Bian, X.; Zhao, D. Multiple Model Adaptive Nonlinear Observer of Dynamic Positioning Ship. Math. Probl. Eng. 2013, 2013, 1–10.

- Kinjo, H.; Maeshiro, M.; Uezato, E.; Yamamoto, T. Adaptive Genetic Algorithm Observer and its Application to a Trailer Truck Control System. In Proceedings of the 2006 SICE-ICASE International Joint Conference, Busan, Korea, 18–21 October 2006.

- Vijay, M.; Jena, D. Intelligent adaptive observer-based optimal control of overhead transmission line de-icing robot manipulator. Adv. Robot. 2016, 30, 1215–1227.

- Das, S.; Suganthan, P.N. Differential Evolution: A Survey of the State-of-the-Art. IEEE Trans. Evol. Comput. 2011, 15, 4–31.

- Alaeddini, A.; Morgansen, K.A. Trajectory design for a nonlinear system to insure observability. In Proceedings of the 2014 European Control Conference (ECC), Strasbourg, France, 24–27 June 2014; pp. 2520–2525.

- Besançon, G. A viewpoint on observability and observer design for nonlinear systems. In New Directions in Nonlinear Observer Design; Lecture Notes in Control and Information Sciences; Springer: London, UK, 1999; pp. 3–22.

- Gauthier, J.P.; Kupka, I.A.K. Observability and Observers for Nonlinear Systems. SIAM J. Control Optim. 1994, 32, 975–994.

- Kou, S.R.; Elliott, D.L.; Tarn, T.J. Observability of nonlinear systems. Inf. Control 1973, 22, 89–99.

- Liu, Y.; Wang, X.; Men, Q.; Meng, X.; Zhang, Q. Heat Transfer Analysis of Passive Residual Heat Removal Heat Exchanger under Tube outside Boiling Condition. Sci. Technol. Nucl. Install. 2017, 2017, 1–10.

- Luenberger, D.G. An introduction to observers. IEEE Trans. Autom. Control 1971, 16, 596–602.

- Chelliah, T.R.; Thangaraj, R.; Allamsetty, S.; Pant, M. Coordination of directional overcurrent relays using opposition based chaotic differential evolution algorithm. Int. J. Electr. Power Energy Syst. 2014, 55, 341–350.

- Wang, G.G.; Guo, L.; Gandomi, A.H.; Hao, G.S.; Wang, H. Chaotic krill herd algorithm. Inf. Sci. 2014, 274, 17–34.

- Sarailoo, M.; Rahmani, Z.; Rezaie, B. A novel model predictive control scheme based on bees algorithm in a class of nonlinear systems: Application to a three tank system. Neurocomputing 2015, 152, 294–304.

- Zhang, Y.; Wang, Z.; Ma, L.; Alsaadi, F.E. Annulus-event-based fault detection, isolation and estimation for multirate time-varying systems: Applications to a three-tank system. J. Process Control 2019, 75, 48–58.

- He, X.; Wang, Z.; Qin, L.; Zhou, D. Active Fault-Tolerant Control for an Internet-Based Networked Three-Tank System. IEEE Trans. Control Syst. Technol. 2016, 24, 2150–2157.

- Besançon, G.; Ticlea, A. On adaptive observers for systems with state and parameter nonlinearities. IFAC-PapersOnLine 2017, 50, 15416–15421.

© 2020 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).