Automatic Chinese Font Generation System Reflecting Emotions Based on Generative Adversarial Network

Abstract

1. Introduction

- (1) We design a questionnaire system to study, quantitatively and qualitatively, the relationship between fonts and facial expressions. Data analysis shows that the questionnaire results are highly credible, and they provide a dataset for further research.

- (2) We propose an Emotional Guidance GAN (EG-GAN) algorithm. By applying an emotion-guided operation to the font generation module, the automatic Chinese font generation system can generate new styles of Chinese fonts that carry the corresponding emotions.

- (3) We incorporate the EM distance, a gradient penalty, and a classification strategy so that the font generation module produces high-quality font images and each font keeps a consistent style.

- (4) We run a range of experiments on several Chinese font datasets. The generated results serve as the basis for further questionnaires, whose analysis shows that the generated fonts credibly convey specific emotions.
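For orientation, the combination of EM distance and gradient penalty named in contribution (3) is commonly written as the WGAN-GP critic objective below. This is the standard textbook form, not necessarily the exact loss used in EG-GAN; the symbols $D$ (critic), $P_r$ (real font images), $P_g$ (generated font images), and $\lambda$ (penalty weight) are notation assumed here, and the classification term mentioned in the contribution is not reconstructed.

$$
L_D = \mathbb{E}_{\tilde{x} \sim P_g}\big[D(\tilde{x})\big] - \mathbb{E}_{x \sim P_r}\big[D(x)\big] + \lambda \, \mathbb{E}_{\hat{x} \sim P_{\hat{x}}}\Big[\big(\lVert \nabla_{\hat{x}} D(\hat{x}) \rVert_2 - 1\big)^2\Big],
\qquad \hat{x} = \epsilon x + (1 - \epsilon)\tilde{x}, \quad \epsilon \sim U[0, 1]
$$

The first two terms estimate the EM (Wasserstein-1) distance between the real and generated distributions, and the last term pushes the critic's gradient norm toward 1 on points interpolated between real and generated samples.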

2. Related Works

2.1. Font Emotion Research

2.2. Generative Adversarial Network (GAN)

2.3. Automatic Font Generation

2.4. Style Embedding Generation

3. Questionnaire for the Relationship between Facial Expressions and Fonts

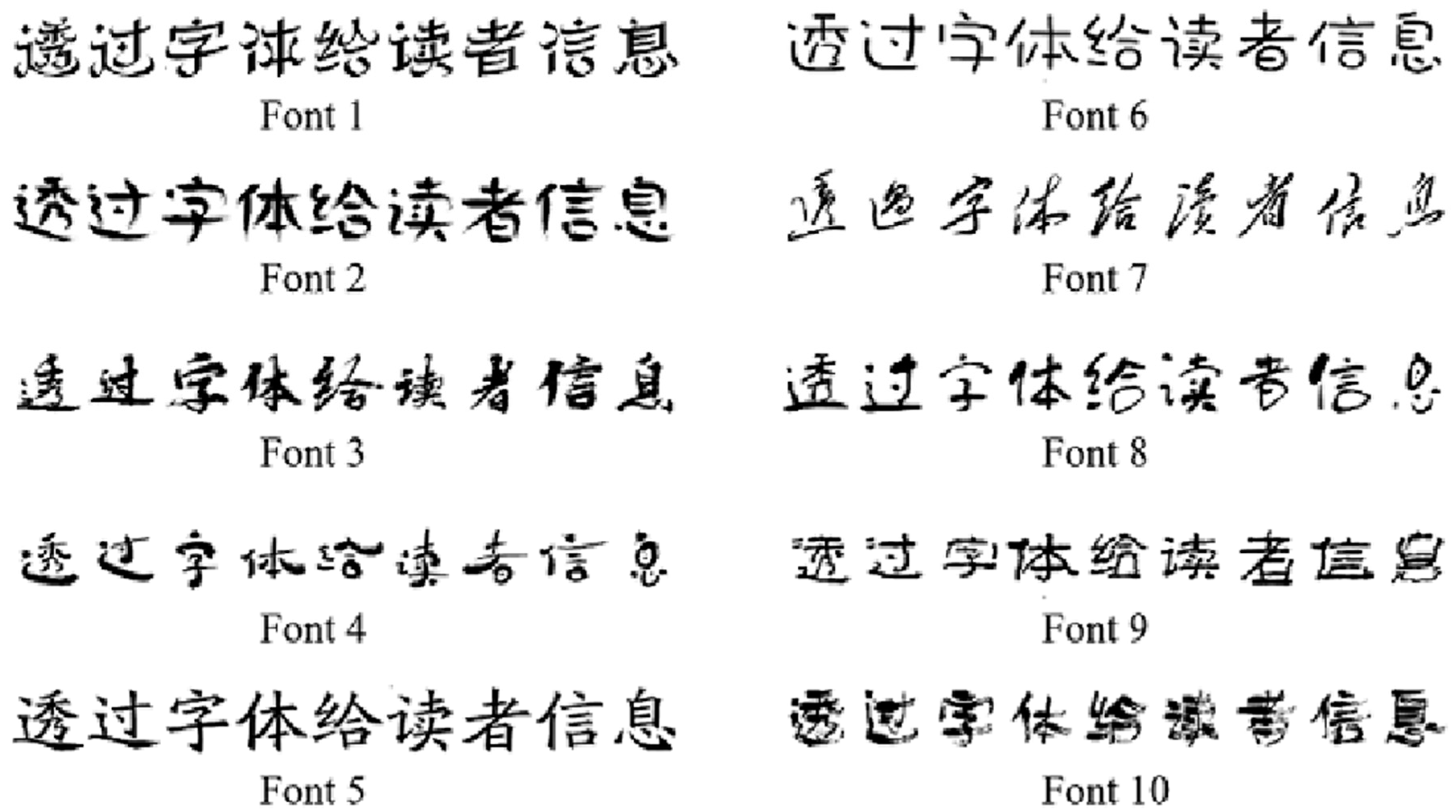

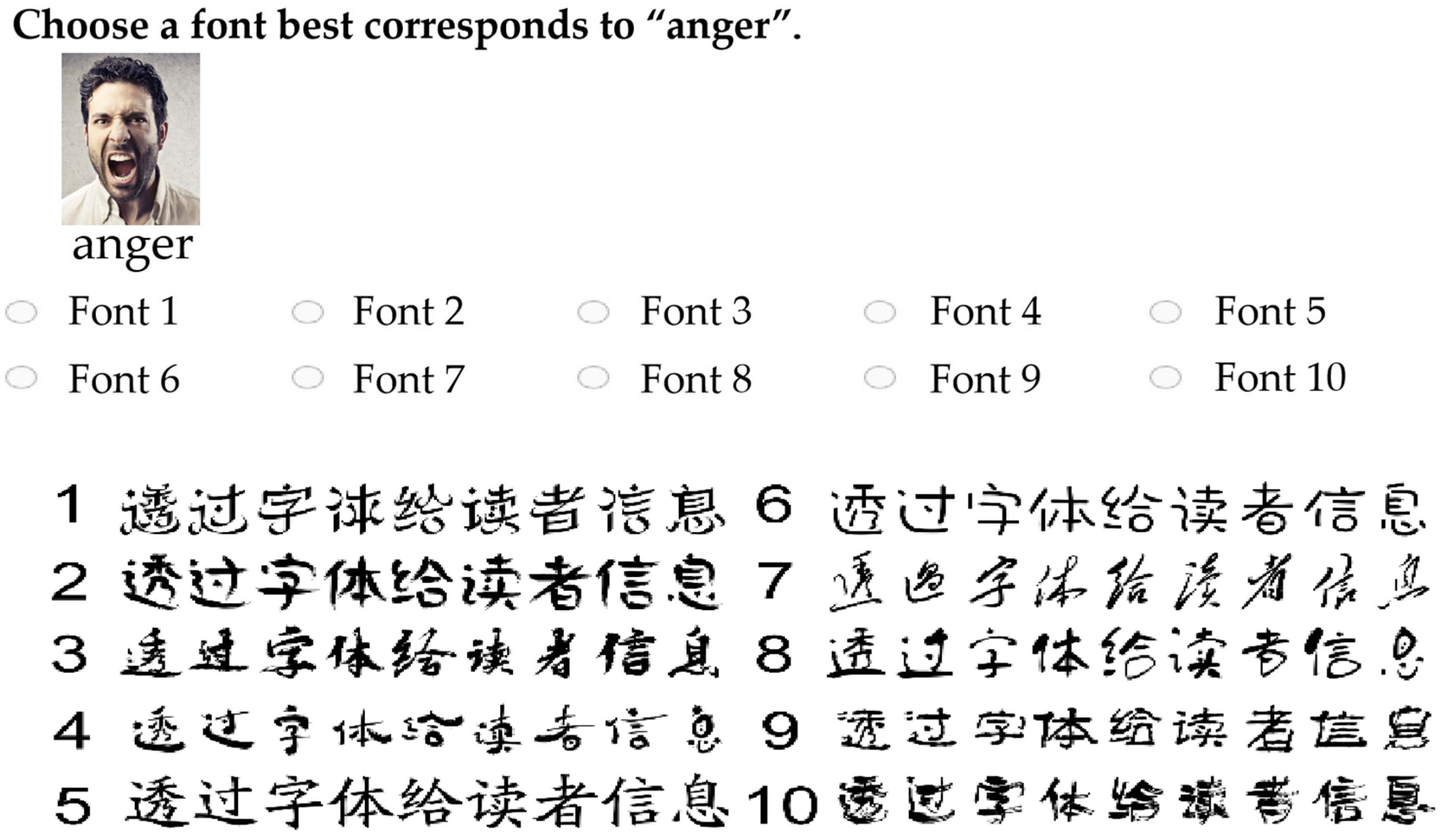

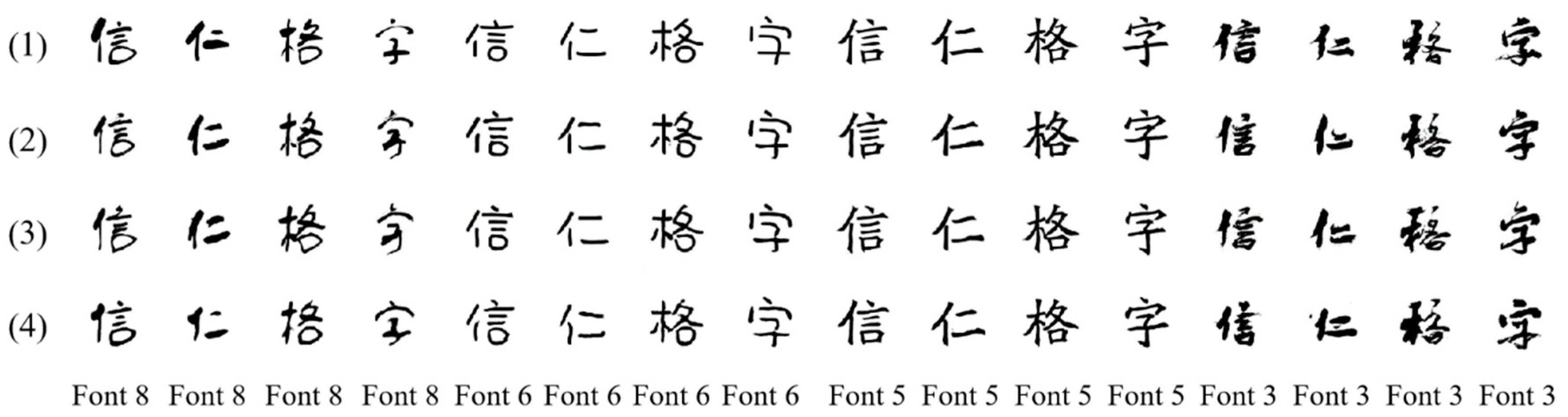

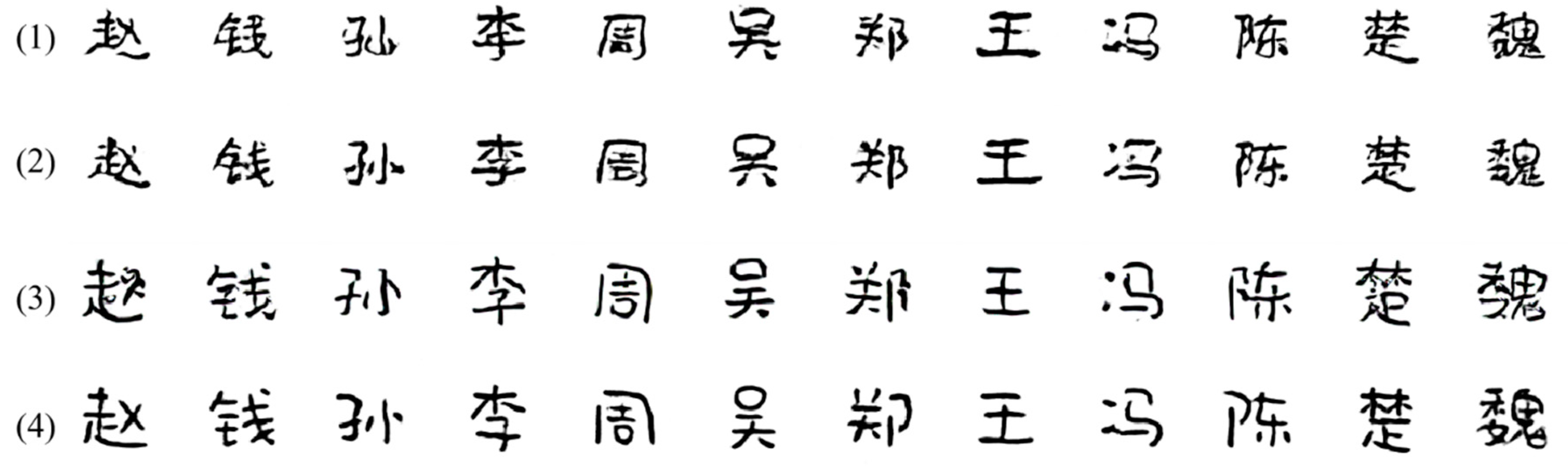

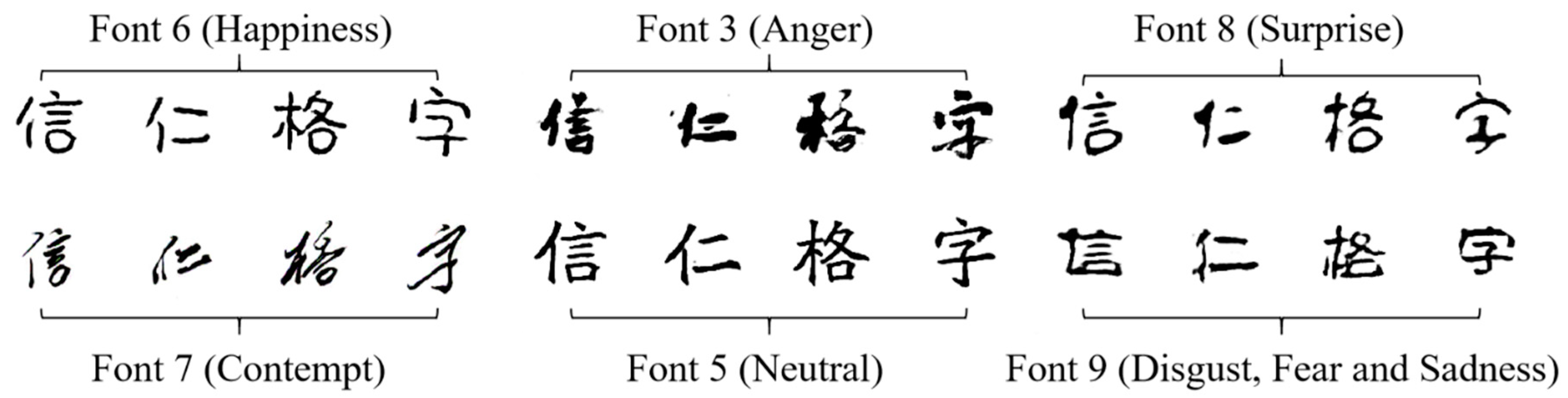

3.1. Questionnaire Design

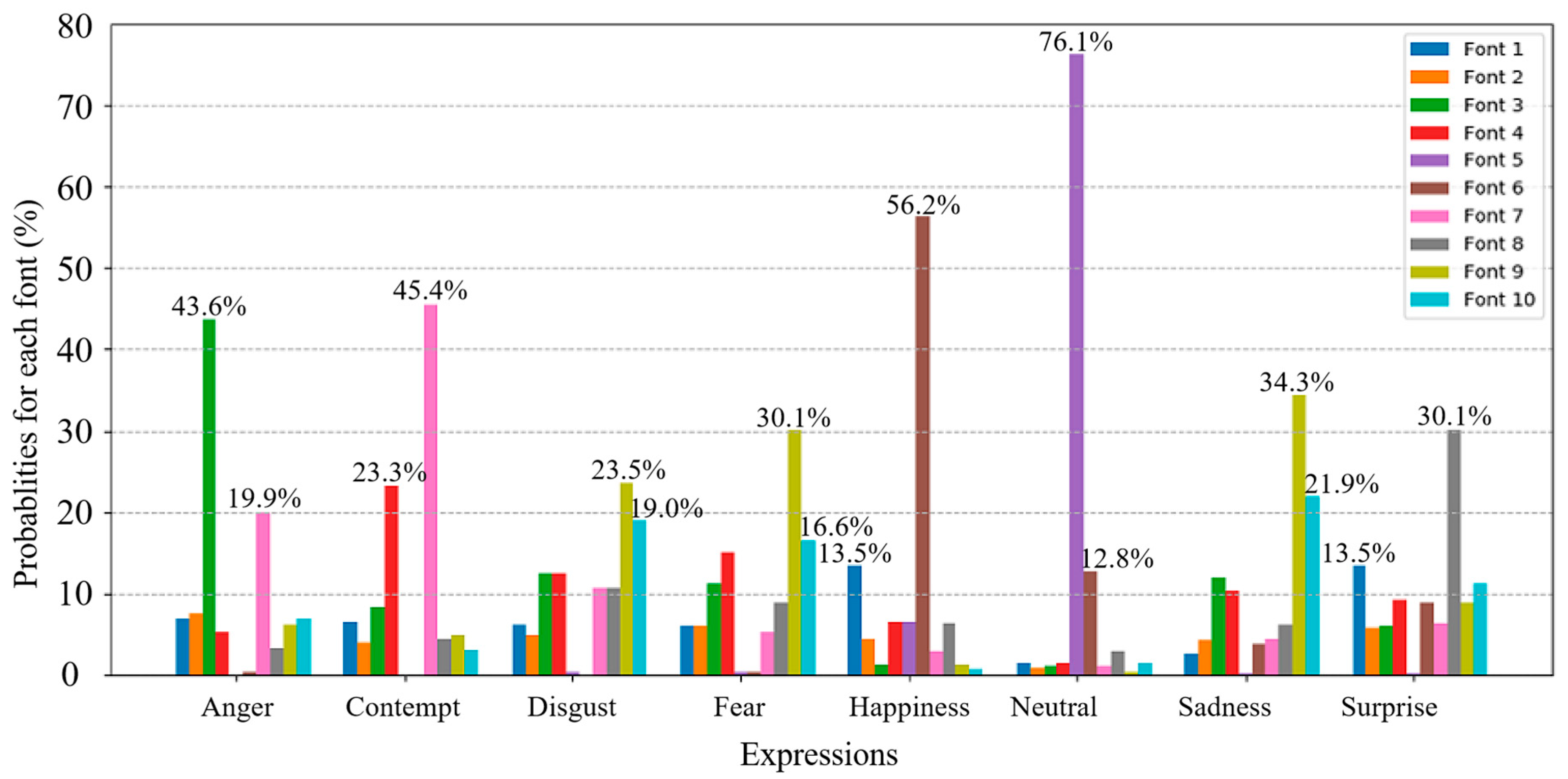

3.2. Questionnaire Results

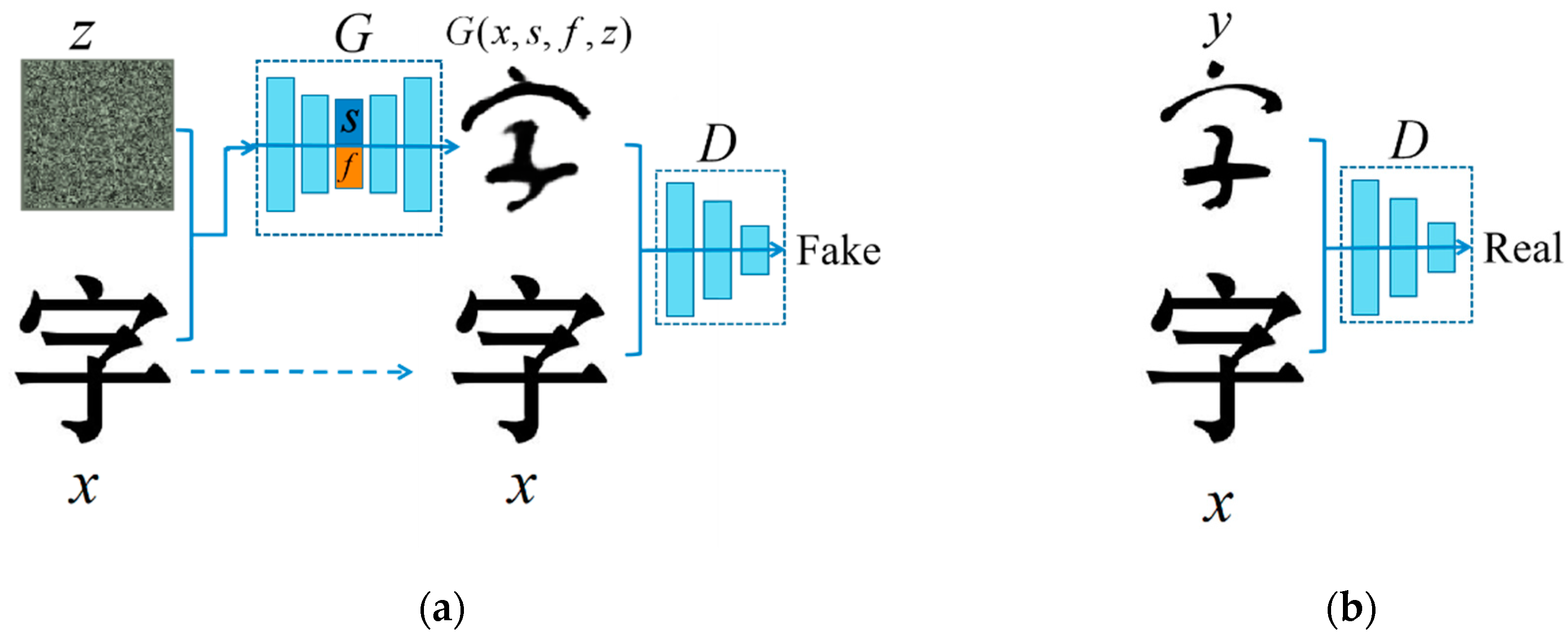

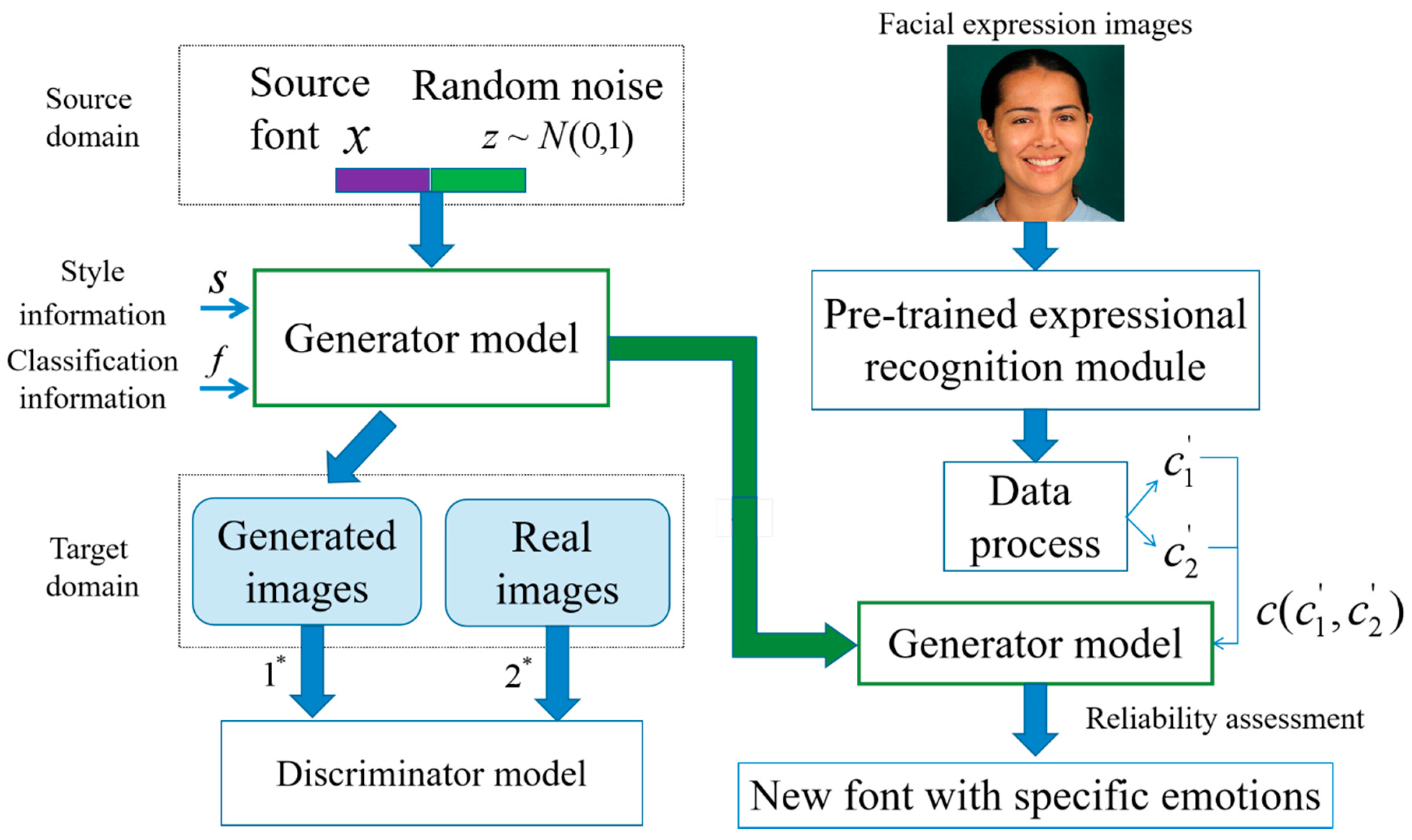

4. Architecture

4.1. Network Architectures

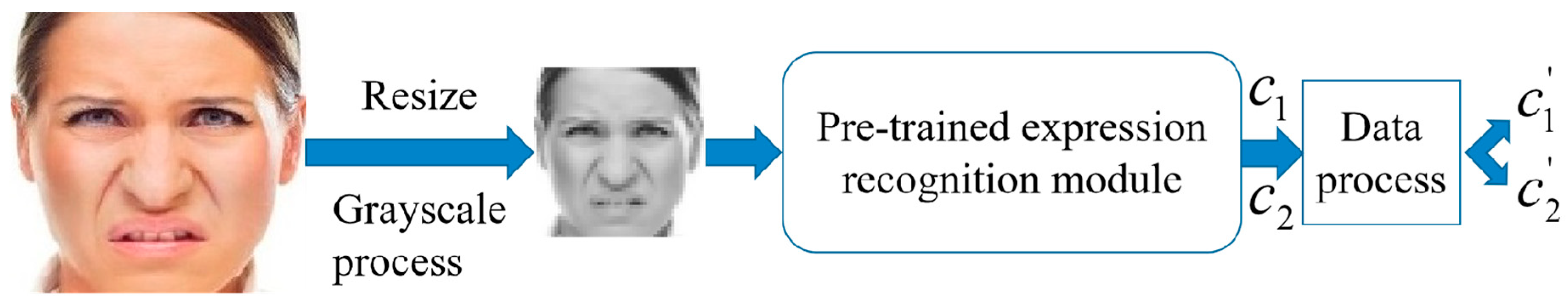

4.1.1. Facial Information Extraction Module

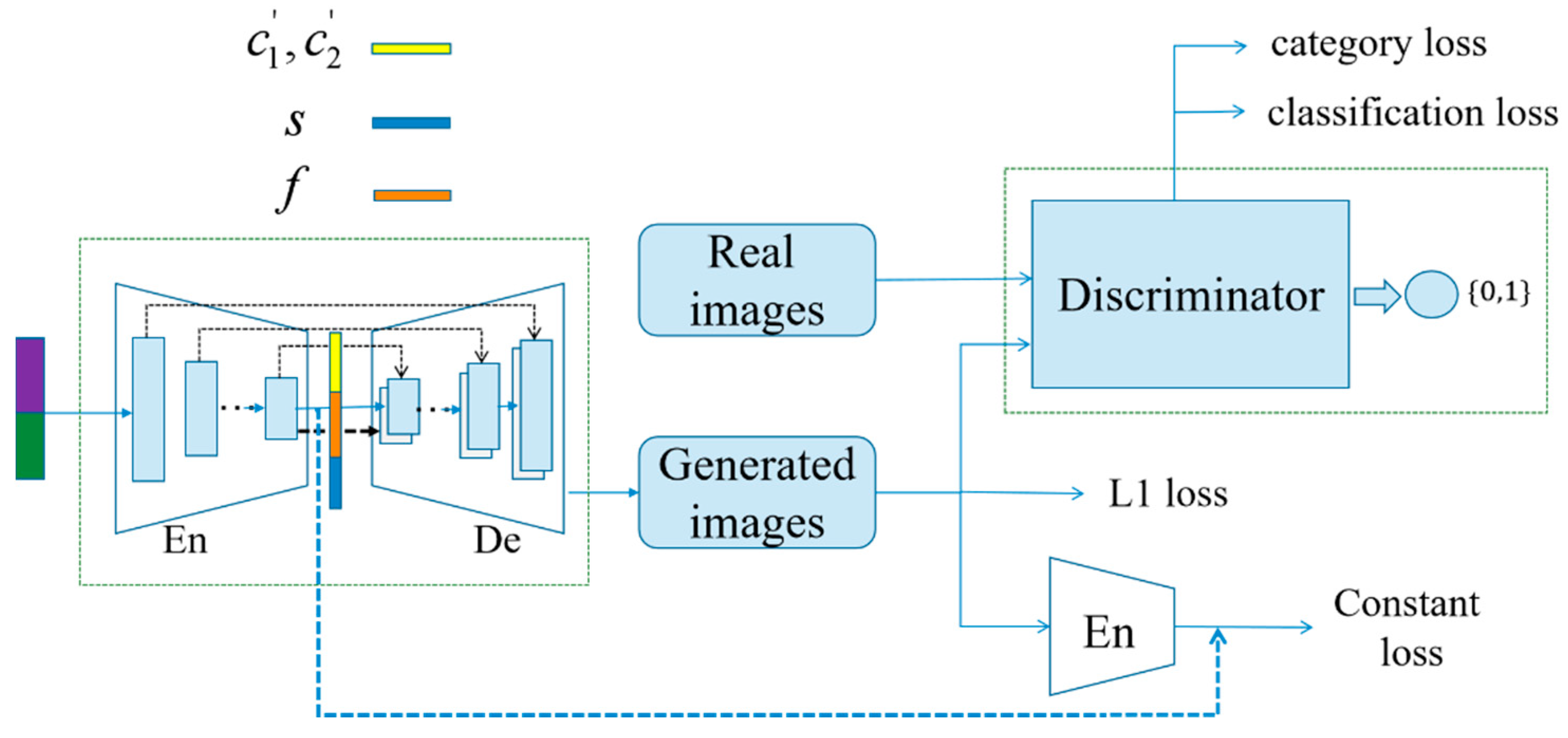

4.1.2. Font Generation Module

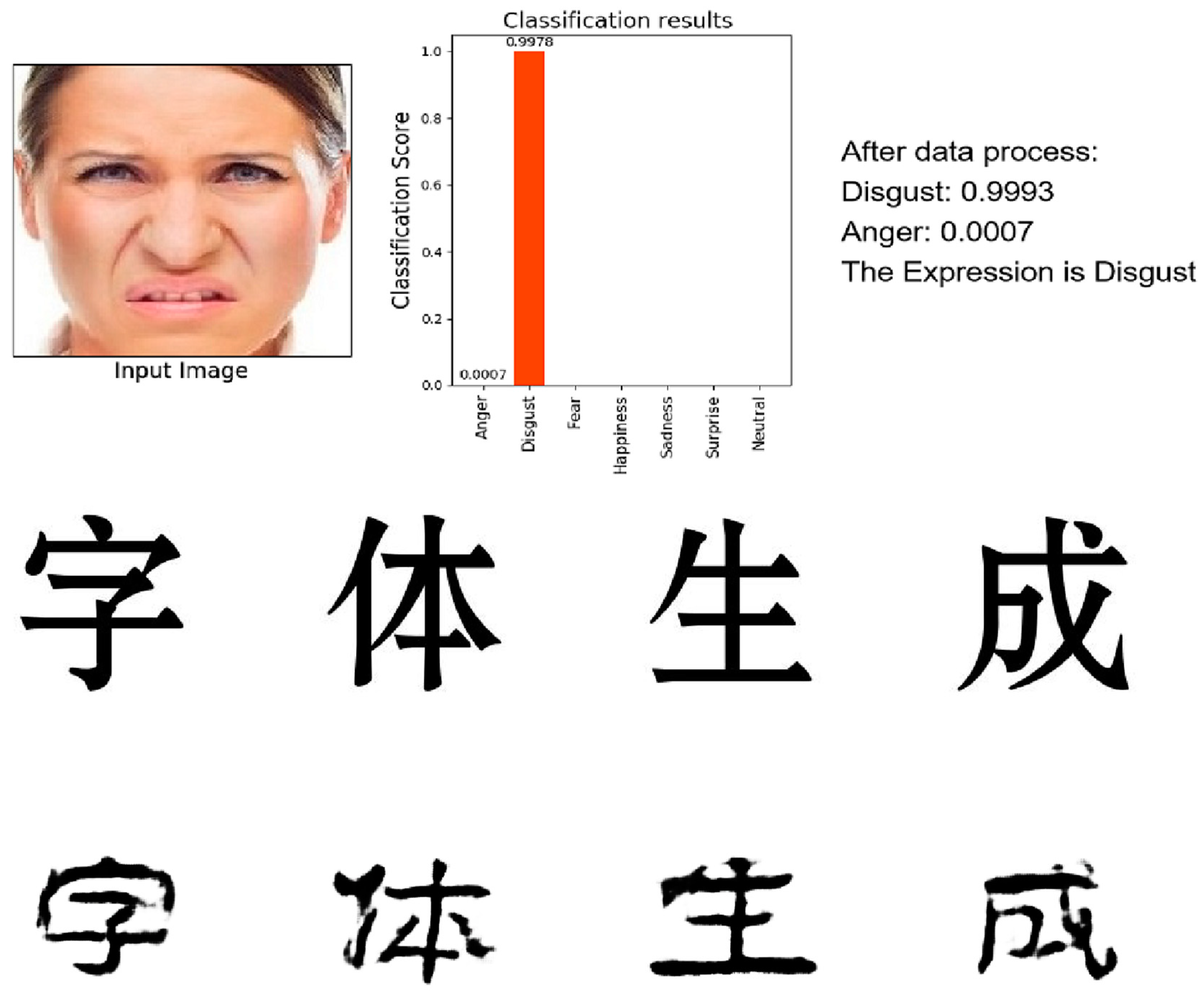

5. Experimental Results and Analyses

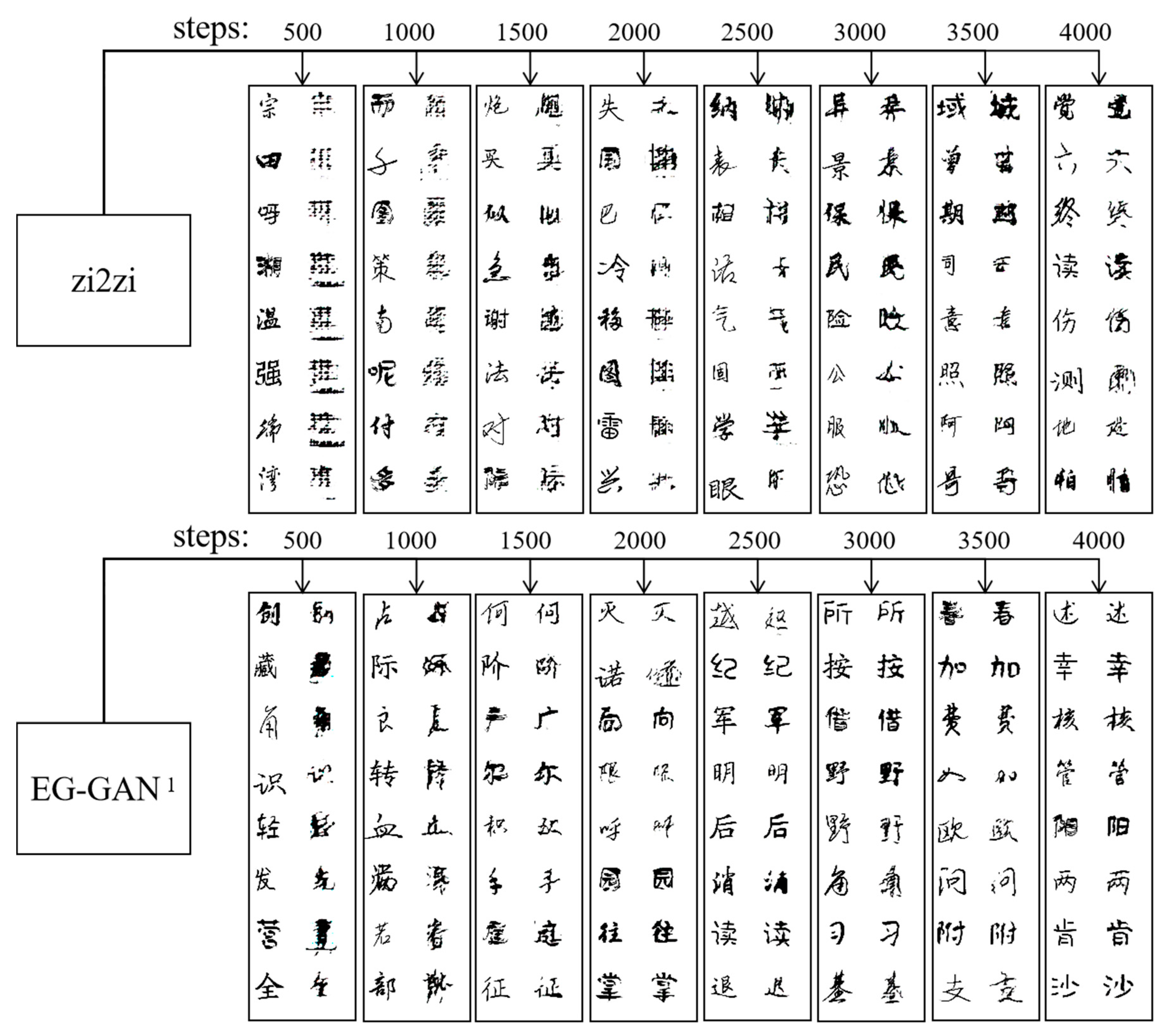

5.1. Comparison Experiments and Results

5.2. Discussion

6. Conclusions

Author Contributions

Funding

Conflicts of Interest

| Question Sequence | Question Content |
|---|---|
| Question 1 | What’s your name? |
| Question 2 | What’s your gender? |
| Question 3 | What is your experience with calligraphy? |
| Question 4 | Talking about fonts reflecting emotions |
| Question 5 | Choose the font that best corresponds to “anger”. |
| Question 6 | Choose the font that best corresponds to “contempt”. |
| Question 7 | Choose the font that best corresponds to “disgust”. |
| Question 8 | Choose the font that best corresponds to “fear”. |
| Question 9 | Choose the font that best corresponds to “happiness”. |
| Question 10 | Choose the font that best corresponds to “neutral”. |
| Question 11 | Choose the font that best corresponds to “sadness”. |
| Question 12 | Choose the font that best corresponds to “surprise”. |

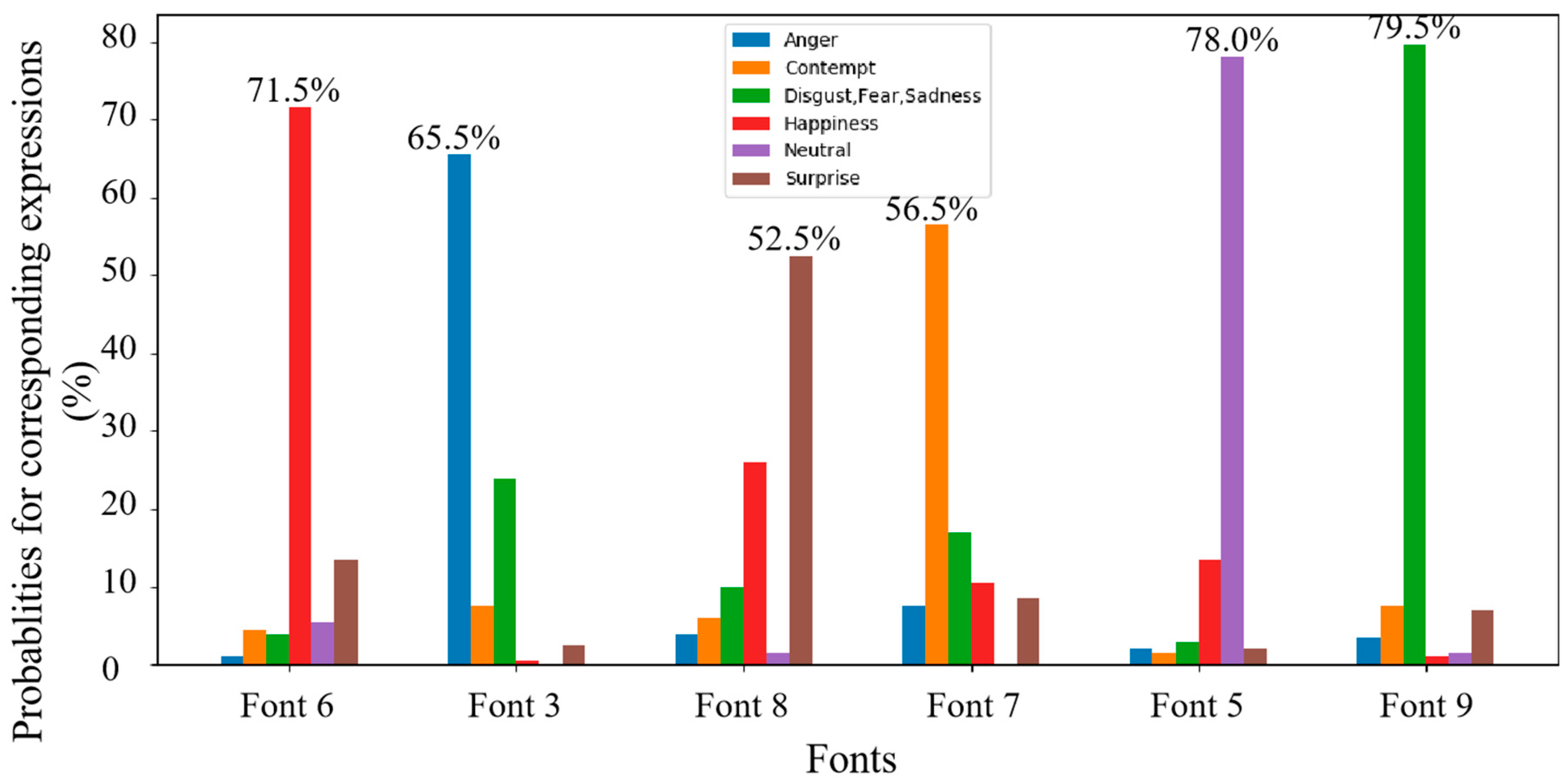

| Emotion | Font |
|---|---|
| Anger | Font 3 |
| Contempt | Font 7 |
| Happiness | Font 6 |
| Neutral | Font 5 |
| Disgust, Fear, Sadness | Font 9 |
| Surprise | Font 8 |
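One plausible way to arrive at an emotion-to-font mapping like the one above is to pick, for each emotion, the font chosen most often by respondents. The sketch below assumes that majority-vote rule, which the text here does not confirm; the function name `most_chosen_font` and the sample answers are hypothetical placeholders, not the questionnaire data.

```python
from collections import Counter


def most_chosen_font(responses):
    """Return {emotion: font} using the most frequently chosen font per emotion.

    `responses` is an iterable of (emotion, font) pairs, one per answer.
    Ties are resolved in favour of the font that was seen first.
    """
    tallies = {}
    for emotion, font in responses:
        tallies.setdefault(emotion, Counter())[font] += 1
    return {
        emotion: counts.most_common(1)[0][0]
        for emotion, counts in tallies.items()
    }


# Invented placeholder answers, for illustration only.
responses = [
    ("anger", "Font 3"),
    ("anger", "Font 3"),
    ("anger", "Font 8"),
    ("neutral", "Font 5"),
    ("neutral", "Font 5"),
    ("neutral", "Font 9"),
]
winners = most_chosen_font(responses)
print(winners)
```

With real data, several emotions can share the same winning font, as the row for disgust, fear, and sadness shows.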

| Model | SSIM | PSNR (dB) |
|---|---|---|
| Zi2Zi | 0.8854 | 14.7226 |
| EG-GAN 1 | 0.8865 | 14.7501 |
| EG-GAN | 0.8953 | 15.3035 |
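As a reminder of what the PSNR column measures, the following is a minimal sketch of the usual definition, $10 \log_{10}(\mathrm{MAX}^2 / \mathrm{MSE})$, for two grayscale images stored as nested lists. It assumes 8-bit pixel values (`max_value=255.0`); the function name `psnr` and the toy images are illustrative, and the evaluation pipeline actually used for the table may differ.

```python
import math


def psnr(reference, generated, max_value=255.0):
    """Peak signal-to-noise ratio in dB between two equally sized grayscale images."""
    if len(reference) != len(generated) or any(
        len(ref_row) != len(gen_row)
        for ref_row, gen_row in zip(reference, generated)
    ):
        raise ValueError("images must have the same shape")

    squared_error = 0.0
    pixel_count = 0
    for ref_row, gen_row in zip(reference, generated):
        for ref_pixel, gen_pixel in zip(ref_row, gen_row):
            squared_error += (ref_pixel - gen_pixel) ** 2
            pixel_count += 1
    if pixel_count == 0:
        raise ValueError("images must not be empty")

    mse = squared_error / pixel_count
    if mse == 0:
        return math.inf  # identical images
    return 10.0 * math.log10(max_value ** 2 / mse)


# Toy 2x2 images that differ in a single pixel.
target_glyph = [[0, 0], [0, 0]]
generated_glyph = [[0, 0], [0, 51]]
print(psnr(target_glyph, generated_glyph))
```

Higher values mean the generated glyph is closer to the target glyph at the pixel level, which is how the PSNR column above should be read.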

| Model | Font 3 | Font 5 | Font 6 | Font 7 | Font 8 | Font 9 |
|---|---|---|---|---|---|---|
| Zi2Zi | 0.8670 | 0.9006 | 0.9049 | 0.8820 | 0.8794 | 0.8809 |
| EG-GAN 1 | 0.8614 | 0.9036 | 0.9158 | 0.8732 | 0.8831 | 0.8835 |
| EG-GAN | 0.8690 | 0.9082 | 0.9092 | 0.8997 | 0.8940 | 0.8915 |

| Model | Font 3 | Font 5 | Font 6 | Font 7 | Font 8 | Font 9 |
|---|---|---|---|---|---|---|
| Zi2Zi | 14.2367 | 16.9022 | 16.3358 | 13.6608 | 13.7157 | 14.2021 |
| EG-GAN 1 | 14.1828 | 17.1461 | 17.1391 | 13.1789 | 14.0231 | 14.4951 |
| EG-GAN | 14.6932 | 17.3627 | 16.4064 | 14.8043 | 14.8671 | 15.0289 |

© 2020 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).

Chen, L.; Lee, F.; Chen, H.; Yao, W.; Cai, J.; Chen, Q. Automatic Chinese Font Generation System Reflecting Emotions Based on Generative Adversarial Network. Appl. Sci. 2020, 10, 5976. https://doi.org/10.3390/app10175976
