1. Introduction

The World Wide Web contains abundant knowledge thanks to the contributions of its great number of users, and this knowledge is utilized in diverse fields. Since ordinary Web users generally use natural language as their main means of generating and acquiring knowledge, unstructured texts constitute a huge proportion of the Web. Although humans understand unstructured texts naturally, machines cannot readily process or understand the knowledge contained in them. Therefore, these unstructured texts should be transformed into a structured representation so that they can be machine-processed.

The objective of knowledge base enrichment is to bootstrap a small seed knowledge base into a large one. In general, a knowledge base consists of triples, each made of a subject, an object, and the relation between them. Existing knowledge bases are imperfect in two respects: relations and triples (instances). Even a massive knowledge base such as DBpedia, Freebase, or YAGO cannot describe all relations between entities in the real world. However, this problem is often addressed by restricting the target applications or knowledge domains [1,2]. The other problem is the lack of triples. Although existing knowledge bases contain a massive number of triples, they are still far from complete compared with the unbounded number of real-world facts, so this problem can be addressed only by creating triples continuously. According to Paulheim [3], creating a triple manually is 15 to 250 times more expensive than creating one automatically. Thus, it is very important to generate triples automatically.

As mentioned above, a knowledge base uses a triple representation to express facts, but new knowledge usually comes from unstructured texts written in natural language. Thus, knowledge enrichment aims at extracting from unstructured texts as many entity pairs for a specific relation as possible. From this point of view, pattern-based knowledge enrichment is one of the most popular implementations of knowledge enrichment. Its popularity comes from the fact that it can handle diverse types of relations and that its patterns are easy to interpret. In pattern-based knowledge enrichment, a relation and an entity pair connected by the relation are given as seed knowledge. It is assumed that a sentence that mentions the seed entity pair contains a lexical expression of the relation, and this expression becomes a pattern for extracting new knowledge for the relation. Since the quality of the newly extracted knowledge is strongly influenced by that of the patterns, it is important to generate high-quality patterns.

The quality of patterns depends primarily on the method used to extract the tokens of a sentence and on the way the confidence of pattern candidates is measured. Many previous studies such as NELL [4], ReVerb [5], and BOA [6] adopt lexical sequence information to generate patterns [7,8]. That is, when seed knowledge is expressed as a triple of two entities and their relation, the lexical sequence between the two entities in a sentence becomes a pattern candidate. Such lexical patterns have been reported to show reasonable performance in many knowledge enrichment systems [4,6]. However, they have two obvious limitations: (i) they fail to detect long distance dependencies among words in a sentence, and (ii) a lexical sequence does not always deliver the correct meaning of a relation.

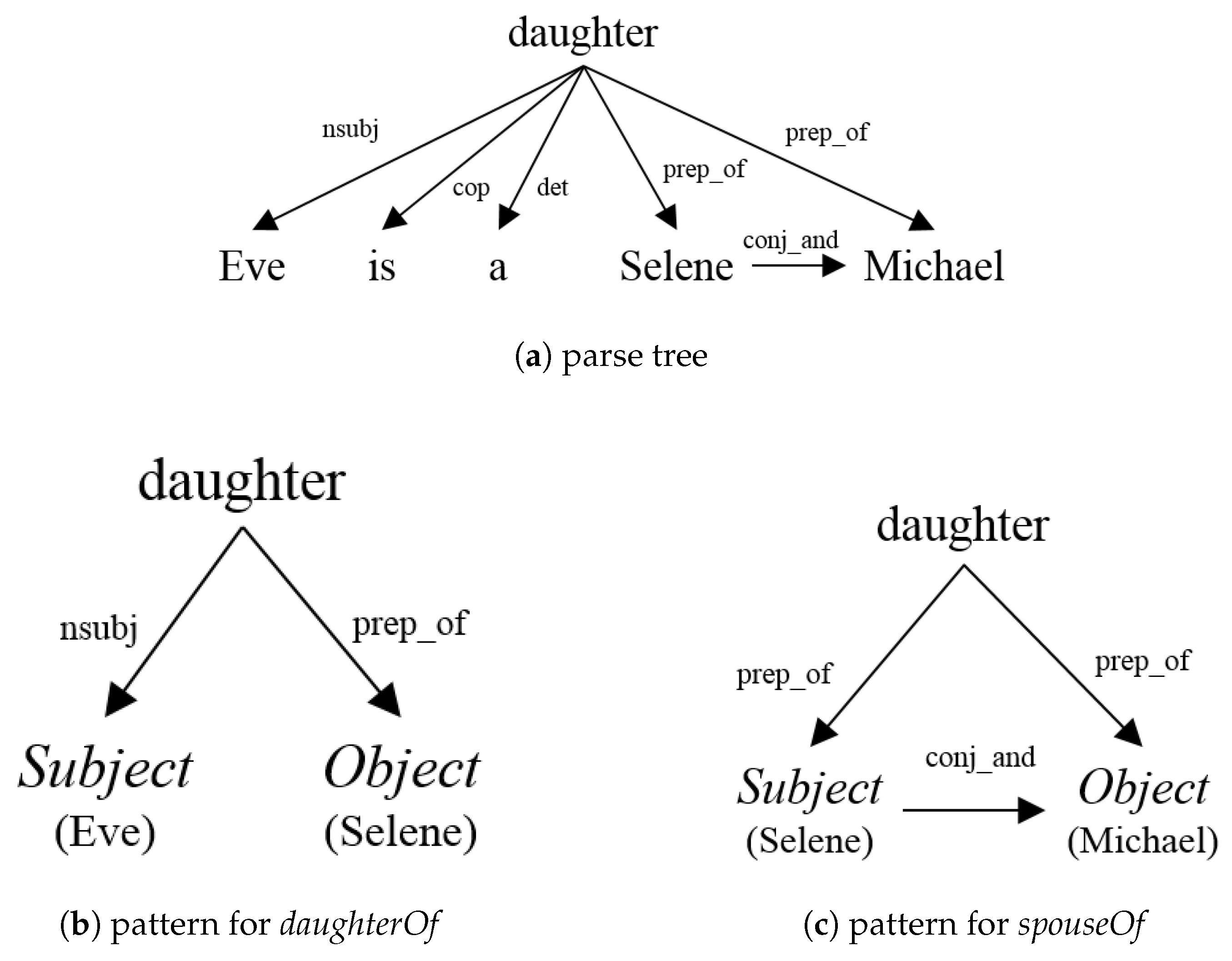

Assume that the sentence “Eve is a daughter of Selene and Michael.” is given. A simple lexical pattern generator like BOA harvests from this sentence the patterns shown in Table 1 by extracting the lexical sequence between two entities. The first pattern is for the relation childOf and adequately expresses the meaning of a parent-child relation. Thus, it can be used to extract new triples for childOf from other sentences. However, the second pattern, “{arg1} and {arg2}”, fails to deliver the meaning of the relation spouseOf. To deliver the correct meaning of spouseOf, a pattern “a daughter of {arg1} and {arg2}” should be made. Since the phrase “a daughter of” is located outside the span between ‘Selene’ and ‘Michael’, such a pattern cannot be generated from the sentence by extracting a lexical sequence. Therefore, a more effective pattern representation is needed to express dependencies of words that are not located between the entities.
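The limitation can be illustrated with a minimal sketch of lexical pattern search in the spirit of BOA. It assumes that entity mentions are located by exact substring search and that the entity appearing first in the sentence fills `{arg1}`; the function name `lexical_pattern` is hypothetical and is not part of BOA.

```python
from typing import Optional


def lexical_pattern(sentence: str, arg1: str, arg2: str) -> Optional[str]:
    """Return the words between two entity mentions as a lexical pattern.

    arg1 must occur before arg2 in the sentence; the entities themselves
    are replaced by the placeholders {arg1} and {arg2}.
    """
    start1 = sentence.find(arg1)
    if start1 < 0:
        return None
    end1 = start1 + len(arg1)
    start2 = sentence.find(arg2, end1)  # search only after the first entity
    if start2 < 0:
        return None
    middle = sentence[end1:start2].strip()  # the intervening lexical sequence
    parts = ["{arg1}", middle, "{arg2}"] if middle else ["{arg1}", "{arg2}"]
    return " ".join(parts)


sentence = "Eve is a daughter of Selene and Michael."
print(lexical_pattern(sentence, "Eve", "Selene"))      # {arg1} is a daughter of {arg2}
print(lexical_pattern(sentence, "Selene", "Michael"))  # {arg1} and {arg2}
```

For the pair (Selene, Michael), only the intervening word "and" can ever enter the pattern, so "a daughter of" is lost regardless of how the candidates are scored afterwards.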

An entity pair can generally hold multiple relations. Thus, a sentence that mentions both entities of a seed triple can express a relation different from the relation of the seed triple, and the patterns extracted from such sentences are useless for gathering new knowledge for the relation of the seed triple. For instance, assume that a triple with the relation workFor is given as seed knowledge for an entity pair mentioned in the sentence “Eve is a daughter of Selene and Michael.” Since only entities are considered in generating patterns, “{arg1} is a daughter of {arg2}” becomes a pattern for workFor, although it does not deliver the meaning of workFor at all. Therefore, to generate high-quality patterns, it is important to filter out pattern candidates that do not deliver the meaning of the relation in a seed triple.

One possible solution to this problem is to define the confidence of a pattern candidate according to the relatedness between the candidate and the target relation. Statistical information such as the frequency of pattern candidates, or co-occurrence information between pattern candidates and predefined features, has commonly been used as pattern confidence in previous work [6,9]. However, such statistics-based confidence does not directly reflect the semantic relatedness between a pattern and a target relation. That is, even when two entities co-occur very frequently in expressions of a relation, there can also be many cases in which the entities hold other relations. Therefore, to determine whether a pattern expresses the meaning of a relation correctly, the semantic relatedness between the pattern and the relation should be investigated.

In this paper, we propose a novel but simple system for bootstrapping a knowledge base expressed in triples from a large volume of unstructured documents. In particular, we show that dependencies between entities and semantic information can improve performance over previous approaches without much effort. To overcome the limitations of lexical sequence patterns, the system expresses a pattern as a parse tree rather than as a lexical sequence. Since the parse tree of a sentence presents a deep linguistic analysis of the sentence and expresses long distance dependencies easily, parse tree patterns yield higher knowledge enrichment performance than lexical sequences. In addition, a semantic confidence for parse tree patterns allows irrelevant pattern candidates to be filtered out.

The semantic confidence between a pattern and the relation of a seed knowledge is defined as the average similarity between the words of the pattern and those of the relation. Among various similarity measures, we adopt two common ones: a WordNet-based similarity and a word embedding similarity. WordNet similarity generally gives plausible results, but it sometimes suffers from the out-of-vocabulary (OOV) problem [10]. Since patterns can contain many words that are not listed in WordNet, the WordNet similarity is supplemented by word similarity in a word embedding space. Thus, the final word similarity is a combination of the WordNet similarity and the word embedding similarity, and the semantic confidence is the average of these combined similarities over the words of the pattern and those of the relation.
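The confidence computation can be sketched as follows. Because this section does not give the exact combination rule, the sketch assumes a linear mix with weight `alpha` when both similarities are available, falls back to whichever similarity exists when a word is out of vocabulary, and averages over all pattern-word/relation-word pairs. `wordnet_sim` is a caller-supplied function (for example, one built on NLTK's WordNet `path_similarity`) that returns `None` for OOV words; all names here are hypothetical.

```python
import math
from typing import Callable, Dict, Optional, Sequence

# Hypothetical signature: returns None when either word is missing from WordNet.
WordNetSim = Callable[[str, str], Optional[float]]


def cosine(u: Sequence[float], v: Sequence[float]) -> float:
    """Cosine similarity of two embedding vectors (0.0 for zero vectors)."""
    dot = sum(a * b for a, b in zip(u, v))
    norm = math.sqrt(sum(a * a for a in u)) * math.sqrt(sum(b * b for b in v))
    return dot / norm if norm else 0.0


def word_similarity(w1: str, w2: str, wordnet_sim: WordNetSim,
                    embeddings: Dict[str, Sequence[float]], alpha: float = 0.5) -> float:
    """Combine WordNet and embedding similarity; each covers the other's OOV words."""
    emb = (cosine(embeddings[w1], embeddings[w2])
           if w1 in embeddings and w2 in embeddings else None)
    wn = wordnet_sim(w1, w2)
    if wn is not None and emb is not None:
        return alpha * wn + (1 - alpha) * emb  # assumed linear combination
    if wn is not None:
        return wn
    if emb is not None:
        return emb
    return 0.0  # unknown to both resources


def semantic_confidence(pattern_words: Sequence[str], relation_words: Sequence[str],
                        wordnet_sim: WordNetSim, embeddings: Dict[str, Sequence[float]],
                        alpha: float = 0.5) -> float:
    """Average combined similarity over all (pattern word, relation word) pairs."""
    pairs = [(p, r) for p in pattern_words for r in relation_words]
    if not pairs:
        return 0.0
    total = sum(word_similarity(p, r, wordnet_sim, embeddings, alpha) for p, r in pairs)
    return total / len(pairs)
```

With toy inputs in which `wordnet_sim` knows only the pair (wife, spouse) and 2-dimensional embeddings place "wife" on the same axis as "spouse" and "husband" orthogonal to it, the pattern words ["wife", "husband"] score 0.45 against the relation word "spouse".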

3. Overall Structure of Knowledge Enrichment

Figure 1 depicts the overall structure of the proposed knowledge enrichment system. For each relation r in a seed knowledge base, we first generate a set of patterns for r. When seed knowledge is given as a triple of two entities and a relation r, the pattern for the seed knowledge is defined as a subtree of the parse tree of a sentence that contains both entities. To obtain the pattern set, the sentences that mention both entities at the same time are chosen first. Since our patterns are parse trees, the chosen sentences are parsed by a natural language parser and then transformed into parse tree patterns. Then, we exclude the parse tree patterns that do not deliver the meaning of the relation r. The tree patterns that remain after this filtering form the pattern set for r.
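The pattern definition above depends on extracting the subtree that subsumes both entities (Algorithm 1, which is not reproduced here). A minimal sketch, assuming that this subtree is the path linking the two entity nodes through their lowest common ancestor in a dependency tree stored as a head array, which is consistent with the pattern strings of Figures 2b and 3b. The function names and the toy parse are hypothetical; in the toy parse the preposition and conjunction are folded into edge labels and Michael is attached directly to daughter, as conjunct propagation in collapsed dependencies would do.

```python
from typing import List, Sequence


def path_to_root(node: int, heads: Sequence[int]) -> List[int]:
    """Follow head links from a node up to the root (whose head is -1)."""
    path = [node]
    while heads[path[-1]] != -1:
        path.append(heads[path[-1]])
    return path


def connecting_subtree(i: int, j: int, heads: Sequence[int]) -> List[int]:
    """Nodes on the path from entity node i to entity node j via their lowest common ancestor."""
    up_i = path_to_root(i, heads)
    up_j = path_to_root(j, heads)
    on_j_side = set(up_j)
    lca = next((n for n in up_i if n in on_j_side), None)
    if lca is None:
        raise ValueError("nodes are not in the same tree")
    left = up_i[: up_i.index(lca) + 1]     # from i up to and including the LCA
    right = up_j[: up_j.index(lca)][::-1]  # from just below the LCA down to j
    return left + right


# Hand-written toy parse of "Eve is a daughter of Selene and Michael."
tokens = ["Eve", "is", "a", "daughter", "Selene", "Michael"]
heads = [3, 3, 3, -1, 3, 3]
print([tokens[n] for n in connecting_subtree(4, 5, heads)])  # ['Selene', 'daughter', 'Michael']
```

Under these assumptions, the subtree for (Selene, Michael) passes through "daughter", a word that no lexical sequence between the two names can contain.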

Once the pattern set for r is prepared, it is used to generate new triples for r from the document set. If a sentence in the document set matches a parse tree pattern in this set, a new triple extracted from the sentence is added to the original seed knowledge base. Since a pattern has a tree structure, all sentences in the document set are parsed by a natural language parser in advance, and a new triple is extracted from a parse tree only when a pattern matches the parse tree exactly.

5. New Knowledge Extraction

Once

, a set of patterns for a relation

r, is prepared, new triples are extracted from a large set of documents using

. When the parse tree of a sentence matches a pattern for

r completely, a new triple for

r is made from the sentence. Algorithm 2 explains how new triples are made. The algorithm takes, as its input, a sentence

s from a document set, a target relation

r, and a pattern

. For a simple match of trees, a pattern

p is transformed into a string representation

by a function

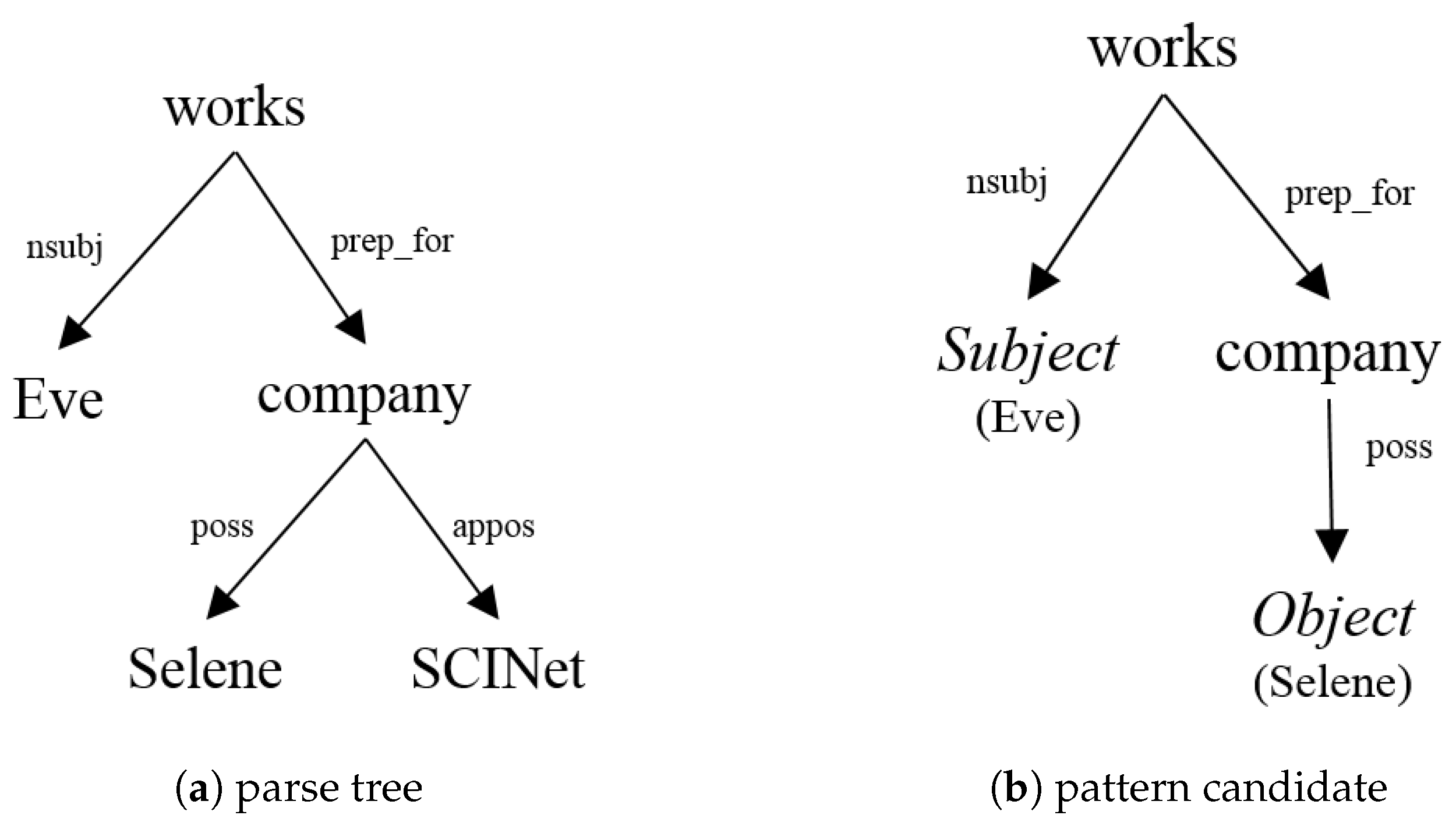

. This function converts a tree into a long single string by traversing the tree in order. The labels of edges are regarded as nodes since they play an important role in delivering the meaning of a relation. Let us consider the patterns in

Figure 2b and

Figure 3b for instance. The pattern in

Figure 2b is expressed as a string

[

Subject] ← nsubj ← [daughter] → prep-of → [

Object], while that in

Figure 3b becomes [

Subject] ← nsubj ← [works] → prep-for → [company] → poss → [

Object].
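Because both example patterns are paths from the subject entity to the object entity, their string form can be sketched as a walk along such a path. This is a minimal sketch, assuming a dependency tree stored as head and label arrays and a pattern given as the list of node indices on the path; `serialize_pattern` is a hypothetical name, and the second toy sentence, "Jane works for Tom's company", is invented for illustration.

```python
from typing import Sequence


def serialize_pattern(path: Sequence[int], tokens: Sequence[str],
                      heads: Sequence[int], labels: Sequence[str]) -> str:
    """Turn a node path from the subject entity to the object entity into a string.

    heads[i] is the index of token i's head (-1 for the root) and labels[i]
    is the label of the edge from that head to token i.
    """
    if len(path) < 2:
        raise ValueError("a pattern needs two distinct entity nodes")
    parts = ["[Subject]"]
    for a, b in zip(path, path[1:]):
        if heads[a] == b:      # a depends on b: the walk moves up toward the head
            parts.append(f"← {labels[a]} ←")
        elif heads[b] == a:    # b depends on a: the walk moves down to a dependent
            parts.append(f"→ {labels[b]} →")
        else:
            raise ValueError(f"tokens {a} and {b} are not linked by an edge")
        parts.append(f"[{tokens[b]}]")
    parts[-1] = "[Object]"     # the last node is the object entity
    return " ".join(parts)


# Toy parse of "Jane works for Tom's company" (hand-written, hypothetical).
tokens = ["Jane", "works", "Tom", "company"]
heads = [1, -1, 3, 1]
labels = ["nsubj", "root", "poss", "prep-for"]
print(serialize_pattern([0, 1, 3, 2], tokens, heads, labels))
# [Subject] ← nsubj ← [works] → prep-for → [company] → poss → [Object]
```

Representing edge labels as separate string elements lets two trees match only when both their words and their dependency labels agree.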

Algorithm 2: New Knowledge Extraction

The sentence s is converted into a parse tree t by a natural language parser, and all entities in s are extracted into a set E. For each pair of entities in E, the subtree of t that subsumes the pair is matched against the pattern p. If the subtree matches p, it is regarded as a parse tree that delivers the same meaning as p.

To match a candidate subtree against p, the nodes of t corresponding to the two entities of the pair are identified first. Then, the subtree that subsumes these two nodes is extracted by the function used in Algorithm 1, and this subtree is transformed into a string representation by the same string-conversion function applied to p. If the string of the subtree and the string of p are identical, the triple formed by the entity pair and r is considered to follow the meaning of the pattern p. Thus, it is added to a knowledge set K as a new triple for the relation r.
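The matching loop can be sketched as follows. This is a minimal sketch, not the exact Algorithm 2: it iterates over ordered entity pairs (assuming that the Subject and Object roles are not interchangeable) and abstracts subtree extraction and string conversion into a caller-supplied function `pair_to_string`, which the usage example fills from a hand-written lookup table. All names are hypothetical.

```python
from itertools import permutations
from typing import Callable, List, Optional, Sequence, Tuple

Triple = Tuple[str, str, str]


def extract_triples(pattern_string: str, relation: str, entities: Sequence[str],
                    pair_to_string: Callable[[str, str], Optional[str]]) -> List[Triple]:
    """Collect a triple for every entity pair whose subtree string equals the pattern string.

    pair_to_string(e1, e2) returns the string form of the subtree of the sentence's
    parse tree that subsumes e1 (as [Subject]) and e2 (as [Object]), or None.
    """
    knowledge: List[Triple] = []
    # Ordered pairs, because swapping Subject and Object changes the string.
    for e1, e2 in permutations(entities, 2):
        candidate = pair_to_string(e1, e2)
        if candidate == pattern_string:  # exact string match stands for exact tree match
            knowledge.append((e1, relation, e2))
    return knowledge


pattern = "[Subject] ← nsubj ← [daughter] → prep-of → [Object]"
subtree_strings = {
    ("Eve", "Selene"): pattern,
    ("Eve", "Michael"): pattern,
    ("Selene", "Michael"): "[Subject] ← prep-of ← [daughter] → prep-of → [Object]",
}
print(extract_triples(pattern, "childOf", ["Eve", "Selene", "Michael"],
                      lambda a, b: subtree_strings.get((a, b))))
# [('Eve', 'childOf', 'Selene'), ('Eve', 'childOf', 'Michael')]
```

Comparing strings rather than trees keeps each match a single equality test per entity pair.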

6. Experiments

To evaluate the proposed method, we perform experiments with two datasets. The first dataset consists of Wikipedia and DBpedia: the DBpedia ontology is used as the knowledge base, and the Wikipedia corpus is used for generating patterns and extracting new knowledge triples. For quantitative evaluation, the QALD-3 benchmark dataset (ontology lexicalization task) is adopted, which consists of 30 predicates that form a subset of DBpedia. The second dataset is the NYT (New York Times corpus) benchmark dataset, which has been adopted in many previous studies [36].

In the experiment with Wikipedia and DBpedia, the recall of patterns and new triples cannot be calculated because there are no gold standard answers for the patterns and new triples in the corpus. Thus, only the accuracy (precision) of patterns and triples is measured. However, to show the relationship between recall and precision indirectly, the accuracy (precision) at K over the ranked triple lists is used. All evaluations are performed manually by two assessors, and only the predictions that both assessors judge as true are considered correct. In the experiment with NYT, we also report top-K precision, which is evaluated automatically against the test data.
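The evaluation protocol can be sketched as follows: a ranked prediction counts as correct only when both assessors marked it true, and precision at K divides the number of such predictions in the top K by K. This is a minimal sketch with hypothetical function names; for the automatically evaluated NYT setting, the same gold-standard judgment list can simply be passed twice.

```python
from typing import Sequence, Tuple


def precision_at_k(assessor_a: Sequence[bool], assessor_b: Sequence[bool], k: int) -> float:
    """Precision of the top-k ranked predictions under two-assessor agreement."""
    if k <= 0:
        raise ValueError("k must be positive")
    agreed = sum(1 for a, b in zip(assessor_a[:k], assessor_b[:k]) if a and b)
    return agreed / k  # divides by k even if fewer than k predictions exist


def mean_precision(assessor_a: Sequence[bool], assessor_b: Sequence[bool],
                   ks: Tuple[int, ...] = (100, 200, 300)) -> float:
    """Average of Precision@K over several cut-offs."""
    return sum(precision_at_k(assessor_a, assessor_b, k) for k in ks) / len(ks)


# Judgments for a ranked list of four predictions (rank 1 first).
a = [True, True, True, False]
b = [True, True, False, False]
print(precision_at_k(a, b, 2), precision_at_k(a, b, 4))  # 1.0 0.5
```

Requiring agreement makes the reported accuracy a conservative estimate, since a single dissenting judgment marks a prediction as wrong.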

The proposed method is evaluated with four experiments. The objective of the first two experiments is to show the effectiveness of our pattern generation step. In the first experiment, the proposed parse tree pattern is compared with the lexical sequence pattern, and the effectiveness of the proposed semantic filter is investigated in the second experiment. New triples extracted by our parse tree patterns are evaluated in the third experiment. In the final experiment, the proposed method is compared with previous studies using another benchmark dataset, NYT.

6.1. Evaluation of Parse Tree Patterns

We show the superiority of the parse tree representation of patterns by comparing it with the lexical representation. For the evaluation of patterns, the ten most frequent relations among the 30 relations are selected: artist, board, crosses, deathPlace, field, location, publisher, religion, spouse, and team. Although only one third of the 30 relations are used, these ten relations cover most of the pattern candidates: of the 63,704 unique pattern candidates generated from the 30 relations, 75% belong to the ten relations.

All the triples of the DBpedia ontology that correspond to the ten predicates are employed as seed triples. To generate both kinds of patterns, 100 sentences are randomly sampled from the Wikipedia corpus for each relation. Since one pattern is generated from each sentence, every relation has 100 patterns for each of the parse tree and lexical representations. To obtain the lexical sequence patterns used in previous work such as BOA or OLLIE, we follow only the pattern search stage of BOA. The correctness of each pattern is judged by two human assessors, who determine for each pattern of a relation whether the words in the pattern accurately deliver the meaning of the relation. Only the patterns that both assessors judge as true are considered correct.

Figure 4 shows the comparison of parse tree and lexical sequence patterns. The X-axis of this figure represents relations, and the Y-axis is the accuracy of patterns. The proposed parse tree patterns show consistently higher accuracy than the lexical sequence patterns for all relations. The average accuracy of parse tree patterns is 68%, while that of lexical sequence patterns is just 52%. The maximum accuracy difference between the two pattern representations is 35 percentage points, for the relation publisher. Since parse trees represent dependency relations between words and can thus reveal long distance dependencies involving non-intervening words, they yield more accurate patterns.

After investigating all 1000 (=100 patterns · 10 relations) parse tree patterns, we found that around 34% of the words appearing in the patterns are non-intervening words and that about 45% of the patterns contain at least one non-intervening word. The fact that many patterns contain non-intervening words implies that the proposed parse tree patterns represent long distance dependencies between words effectively. For example, consider the following sentence, which mentions both entities of a seed triple.

Most notably he produced and wrote lyrics for Julee Cruise’s first two albums, Floating into the Night (1989) and The Voice of Love (1993).

From this sentence, the lexical pattern generator extracts the word sequence between the two entities as a pattern, and this pattern contains meaningless words such as first and two. However, the corresponding parse tree pattern excludes such words.
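Statistics such as the share of non-intervening words could be computed along the following lines. This is a minimal sketch, assuming that each pattern is given as the sentence positions of its nodes, that the first and last positions are the two entities, and that only the non-entity nodes count as pattern words; the function name and the toy positions (from "Eve is a daughter of Selene and Michael", where Eve = 0, daughter = 3, Selene = 5, Michael = 7) are hypothetical.

```python
from typing import List, Sequence, Tuple


def non_intervening_stats(patterns: Sequence[List[int]]) -> Tuple[float, float]:
    """Return (share of pattern words outside the entity span,
    share of patterns with at least one such word)."""
    total_words = 0
    outside_words = 0
    patterns_with_outside = 0
    for path in patterns:
        lo, hi = sorted((path[0], path[-1]))  # sentence span between the two entities
        inner = path[1:-1]                    # non-entity nodes of the pattern
        outside = [i for i in inner if not lo < i < hi]
        total_words += len(inner)
        outside_words += len(outside)
        if outside:
            patterns_with_outside += 1
    word_share = outside_words / total_words if total_words else 0.0
    pattern_share = patterns_with_outside / len(patterns) if patterns else 0.0
    return word_share, pattern_share


# (Eve, Selene) via daughter: intervening; (Selene, Michael) via daughter: not intervening.
print(non_intervening_stats([[0, 3, 5], [5, 3, 7]]))  # (0.5, 0.5)
```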

6.2. Performance of Semantic Filter

The proposed semantic filter is based on a composite of WordNet-based similarity and word embedding similarity. Thus, we compare the composite similarity with each base similarity to show the benefit of the combination. In addition, many lexical sequence pattern approaches remove irrelevant patterns based on pattern frequency, so a frequency-based filter is also compared with the proposed semantic filter.

For each relation, parse tree patterns are generated using all seed triples and the Wikipedia corpus. As a result, 47,390 parse tree patterns are generated, that is, 4739 patterns per relation on average. Then, the four filters are applied to sort the patterns according to their similarity or frequency. Since it is impractical to check the correctness of all 47,390 patterns manually, only the top 100 patterns ranked by each filter are checked.

Figure 5 shows the average top-K accuracies of the four filters. In this figure, ‘WordNet + Word Embedding’ is the proposed semantic filter, ‘WordNet Only’ and ‘Word Embedding Only’ are the two base filters, and ‘Frequency-Based’ is the frequency-based filter used in OLLIE [9]. ‘WordNet + Word Embedding’ outperforms all other filters for all K, and the gap between it and the other filters grows as K increases. These results imply that the proposed semantic filter preserves high-quality patterns and removes irrelevant patterns effectively.

Among the ten relations, deathPlace shows the lowest accuracy. As shown in Figure 6a, the accuracies for deathPlace are below 50% for all filters. In the knowledge base, the concepts Person and Location are usually used as the domain and the range of deathPlace, respectively. However, they are also used for many other relations such as birthPlace and nationality. Thus, even if a number of patterns are generated from sentences with a Person as the subject and a Location as the object, many of them are not related to deathPlace at all. For instance, a parse tree pattern is generated from the sentence “Kaspar Hauser lived in Ansbach from 1830 to 1833.” with a deathPlace seed triple for Kaspar Hauser and Ansbach. This pattern is highly ranked by our system, but its meaning is “Subject lives in Object”, so it does not deliver the meaning of a death location.

Word embedding similarity is more accurate than WordNet-based similarity: as seen in Figure 5, its accuracy is higher than that of WordNet-based similarity for all K. However, its accuracy is extremely low for the relation spouse, as seen in Figure 6b. Such extremely low accuracy occurs when the words similar to a relation in the word embedding space are not synonyms of the relation. The words similar to spouse in WordNet are closely related terms such as ‘wife’ and ‘husband’, but those in the word embedding space are ‘child’ and ‘grandparent’. Although ‘child’ and ‘grandparent’ imply a family relation, they do not correspond to spouse. Since the proposed semantic filter combines WordNet-based similarity and word embedding similarity, this weakness of the word embedding space is compensated by the WordNet-based similarity.

Figure 7 and Figure 8 show the top-K accuracies of all relations except deathPlace and spouse. For most relations, the semantic-based scoring achieves higher performance than the frequency-based scoring.

6.3. Evaluation of Newly Extracted Knowledge

In order to investigate whether parse tree patterns and semantic filters produce accurate new triples, the triples extracted by the “parse tree + semantic filter” patterns are compared with those extracted by “lexical + frequency filter,” “lexical + semantic filter,” and “parse tree + frequency filter” patterns. Since the Wikipedia corpus is excessively large, 15 million sentences are randomly sampled from the corpus and new triples are extracted from the sentences.

Table 2 shows the detailed statistics of the number of matched patterns and triples extracted with the patterns. According to this table, the number of matched lexical sequence patterns is 255 and that of parse tree patterns is 713. As a result, the numbers of new triples extracted by lexical sequence patterns and parse tree patterns are 32,113 and 104,311 respectively. Though lexical sequence patterns and parse tree patterns are generated from and applied to an identical dataset, the parse tree patterns extract 72,198 more triples than the lexical sequence patterns, which implies that the coverage of parse tree patterns is much wider than that of lexical sequence patterns.

In the evaluation of new triples, top-100 triples are selected for each relation according to the ranks, and the correctness of the 4000 (=100 triples · 10 relations · 4 pattern types) triples is checked manually by two assessors. As done in previous experiments, only the triples marked as true by both assessors are considered correct.

Table 3 summarizes the accuracy of the triples extracted by “parse tree + semantic filter” patterns and of those extracted by “lexical + frequency filter,” “lexical + semantic filter,” and “parse tree + frequency filter” patterns. The triples extracted by “parse tree + semantic filter” patterns achieve 60.1% accuracy, while those extracted by “parse tree + frequency filter,” “lexical + semantic filter,” and “lexical + frequency filter” patterns achieve 53.9%, 38.2%, and 32.4% accuracy, respectively. Thus, the “parse tree + semantic filter” patterns outperform the “lexical + frequency filter” patterns by 27.7 percentage points, and the “lexical + semantic filter” and “parse tree + frequency filter” patterns by 21.9 and 6.2 percentage points, respectively. These results show that knowledge enrichment is substantially improved by parse tree patterns and the proposed semantic filter.

Most incorrect triples extracted by parse tree patterns come from three relations: deathPlace, field, and religion. Without these relations, the accuracy of new triples rises to 74.0%. The reason deathPlace produces many incorrect triples is explained above. For field and religion, we found that a few incorrect patterns ranked highly by the semantic filter produce most of the new triples. Addressing these problems is left for future work.

After all possible pattern tree candidates are generated, the irrelevant candidates are removed using Equation (1). The relation-specific values used in this filtering for each relation r are given in Table 4. On average, 71 patterns per relation are matched with Wikipedia sentences, but only 37 of them remain after semantic filtering. The triples extracted from the eliminated patterns are then removed from the 104,311 extracted triples. As a result, 12,522 new triples are extracted and added to the seed knowledge base.
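The two filtering steps described above can be sketched as follows. Since Equation (1) is not reproduced in this section, the sketch assumes that a pattern survives when its semantic confidence is at least a relation-specific threshold, and that every extracted triple remembers the pattern that produced it; function names, pattern identifiers, and scores are hypothetical.

```python
from typing import Dict, List, Set, Tuple

Triple = Tuple[str, str, str]


def surviving_patterns(confidence: Dict[str, float], threshold: float) -> Set[str]:
    """Keep the patterns whose semantic confidence reaches the relation's threshold."""
    return {pattern for pattern, score in confidence.items() if score >= threshold}


def prune_triples(extracted: List[Tuple[Triple, str]], kept: Set[str]) -> List[Triple]:
    """Drop every triple whose source pattern was eliminated by the filter."""
    return [triple for triple, pattern in extracted if pattern in kept]


kept = surviving_patterns({"p1": 0.7, "p2": 0.2, "p3": 0.5}, threshold=0.5)
print(sorted(kept))  # ['p1', 'p3']
print(prune_triples([(("A", "spouse", "B"), "p1"), (("C", "spouse", "D"), "p2")], kept))
# [('A', 'spouse', 'B')]
```

Filtering patterns before discarding triples means a single low-confidence pattern removes all the triples it produced at once, which is how a few eliminated patterns can account for a large share of the extracted triples.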

6.4. Comparison with Previous Work

To show the plausibility of the proposed method, we perform an additional experiment with another benchmark dataset, NYT, which was generated from Freebase relations and the New York Times corpus [36]. The Freebase entities and relations are aligned with the sentences of the corpus from the years 2005–2006, and the triples generated by this alignment are regarded as training data, while those aligned with the sentences from 2007 are regarded as test data. The training data contain 570,088 instances with 63,428 unique entities and 53 relations, including a special relation ‘NA’ which indicates that there is no relation between the subject and object entities. The test data contain 172,448 instances with 16,705 entities and 32 relations including ‘NA’. Note that ‘NA’ is adopted to represent negative instances, so the triples with the ‘NA’ relation do not actually deliver any information. Without these triples, 156,664 and 6444 triples remain in the training and test data, respectively.

Table 5 shows simple statistics on the NYT dataset.

The proposed method is compared with four variations of PCNN (piecewise convolutional neural network) that used the NYT dataset for their evaluation [19,20,21,22]. These models are listed in Table 6, in which ATT denotes the attention method proposed by Lin et al. [19], nc and cond_opt denote the noise converter and the conditional optimal selector by Wu et al. [20], soft-label denotes the soft-label method by Liu et al. [21], and ATT_RA and BAG_ATT are the relation-aware intra-bag attention method and the inter-bag attention method proposed by Ye et al. [22]. We measure the top-K precision (Precision@K) for K = 100, 200, and 300.

Table 6 summarizes the performance comparison on the NYT dataset. According to this table, the proposed method achieves performance comparable to that of the neural network-based methods. PCNN+ATT_RA+BAG_ATT shows the highest mean precision of 84.8%, while the proposed method achieves 79.2%, a difference of just 5.6 percentage points. Moreover, the proposed method is more consistent as K changes. All neural network-based methods show a difference of about 10 percentage points in precision across the values of K, whereas the difference for the proposed method is just 5.4 percentage points, which implies that the proposed pattern generation and scoring approach is plausible for this task. In addition, the patterns generated by the proposed method are easy to interpret, so pattern errors can be fixed without much effort.

7. Conclusions and Future Work

The generation of accurate patterns is a key factor in pattern-based knowledge enrichment. In this paper, a parse tree pattern representation and a semantic filter that removes irrelevant pattern candidates were proposed. The benefit of the parse tree representation is that long distance dependencies between words are expressed well by a parse tree, so parse tree patterns can contain many words that are not located between the two entity words. In addition, the benefit of the semantic filter is that it finds irrelevant patterns more accurately than a frequency-based filter does, because it reflects the meaning of relations directly.

The benefits of our system were empirically verified through experiments using the DBpedia ontology and the Wikipedia corpus. The proposed system achieved 68% accuracy for pattern generation, which is 16 percentage points higher than that of lexical patterns. In addition, the knowledge newly extracted by parse tree patterns showed 60.1% accuracy, which is 27.7 percentage points higher than the accuracy of the knowledge extracted by lexical patterns with statistical scoring. Although the proposed method did not achieve state-of-the-art performance in comparison with previous neural network-based methods, it performed well considering the simplicity of the model. In particular, its precision was robust to changes in K, which suggests that the approach is suitable for the knowledge enrichment task. These results imply that the proposed knowledge enrichment method populates a knowledge base with new knowledge effectively.

As future work, we will look for a more suitable similarity metric between a pattern and a relation. We have shown through several experiments that WordNet and word embeddings are appropriate for this task without much additional effort. Nevertheless, there is still room for performance improvement, so we will explore new semantic similarities that better capture the relatedness between a relation and a pattern. Another weakness of the proposed method is that it cannot handle unseen relations. Discovering unseen relations is critical for making a knowledge base as complete as possible. Recently, translation-based knowledge base embeddings have shown some potential for finding absent relations [37,38]. Therefore, in the future, we will investigate a way to discover absent relations and use them to enrich a knowledge base.