1. Introduction

Natural Interfaces allow human-device interaction by translating human intention into device control commands, based on the analysis of the user's gestures, body movements or speech. This interaction mode arises from advances in expert systems, artificial intelligence, gesture and speech recognition, dialog systems, the semantic web and natural language processing, which together gave rise to the concept of the Intelligent Assistant. Such solutions increasingly appear in new cars, where their task is to support drivers in using Advanced Driver Assistance Systems (ADAS) [1,2,3,4], including systems for detecting driver fatigue [5,6] and mobile applications for traffic improvement [7].

The concept of a Natural User Interface (NUI) assumes free and natural interaction with electronic devices. The interface has to be imperceptible and should be based on natural elements [8]. Inside a car it is impossible to use whole-body movements, but gestures and speech are well suited for communication with an intelligent assistant or multimedia system. It must be stressed that these communication modes are not suitable for all possible cases. When a driver is listening to the radio or travelling through noisy surroundings, a voice-operated interface will inevitably fail. In many cases, both hands are used simultaneously for maneuvering (handling the steering wheel and shifting gears), and it is impossible to perform additional gestures.

In the World report on disability, WHO and the World Bank suggest that more than a billion people in the world experience some form of disability [9]. As many as one-fifth of them encounter serious difficulties in their daily lives [10]. To facilitate the everyday activities of people with disabilities, new assistive devices and technologies are being introduced. Many such people living in developed countries can drive cars on their own thanks to special technical solutions such as manipulators or steering aid tools. Still, operating a button console, e.g., of a multimedia system, is often out of reach for a person with a disability (due to the distance or the form of disability). This is where NUI can prove its value and potential.

The aim of this paper is to analyse the potential of hand gesture interaction in the vehicle environment for both able-bodied and physically challenged drivers. Test scenarios were defined, consisting of sets of gestures assigned to control multimedia activities in an exemplary vehicle on-board system. Gesture recognition was evaluated with 13 participants, for both the left and the right hand. The research was preceded by a survey among participants to check their knowledge of NUI and its practical use. The detailed survey results are presented in Appendix A, Figure A1, and discussed in Section 4.1. It is worth noting that our experiment was not an invasive medical examination and did not violate anyone's ethical standards.

The article is organized as follows. Section 2 presents related works: research on hand-operated interfaces is reviewed together with its applications in the vehicle environment. The main concepts of the interface are presented in Section 3, where gesture acquisition, gesture recognition and gesture employment are addressed. In Section 4, the research group is characterised and the experimental scenarios are proposed. Results are presented in Section 5 and discussed in Section 6. The article ends with a summary.

2. Related Works

Vehicle interior designers use research results on potential interaction areas, i.e., the best locations for buttons and switches. At present, researchers' attention is focused on gesture interaction. Driven by the latest advances in pervasive/ubiquitous technology, gesture control using different techniques, such as infrared image analysis from depth cameras or capacitive proximity sensing, has finally found its way into the car. This technology makes it possible to perform finger gestures in the gearshift area [11] or micro-gestures on the steering wheel [12]. It also allows free multi-finger/hand gestures to be performed in open space to control multimedia applications or to interact with the navigation system [13].

Research work related to gestures includes the intuitiveness of gesture use [14], the number of gestures needed for efficient application [15], and the ergonomics of in-vehicle gestures [16,17]. In [18], the authors proposed a classifier based on Approximate String Matching (ASM); their approach achieves high gesture recognition rates. In [19], the authors explore the use of the Kinect body sensor to let users interact with a virtual scene roaming system in a natural way.

Following [20], activities carried out by the driver in the car can be divided into three categories: basic tasks, which are directly related to driving; secondary tasks, i.e., steering support activities such as controlling the direction indicators; and tertiary tasks, i.e., entertainment activities such as radio control. Today's widespread connectivity and popular trends, e.g., in social media, keep increasing the variety of tertiary tasks. Drivers can use gestures for secondary and tertiary tasks (Table 1), and these gestures can be distinguished by different criteria. Contactless gestures have been tested in the limited design space of the steering wheel and gear shifting area, but also as full-motion gestures in the air. They are most often performed in the area bounded by the rear-view mirror, the steering wheel and the gearshift lever. The most common solutions use a depth camera directed towards the gear lever or the center console. Fingers (of one or both hands) are used while shifting gears or while a hand rests loosely on the gear lever. However, it should be noted that people with disabilities use solutions such as hand-operated gas and brake controls, which restrict the movement and tracking of the hands.

Holding the gear lever creates problems when trying to make gestures. Grasping it usually means that the three fingers from the middle finger to the little finger hold one side of the gear lever firmly, which makes them unsuitable for making well-recognized gestures. It has been proposed to use these fingers to press buttons (one to three) attached to the side of the gear lever [21]. The buttons can be used to activate gesture recognition and as modifier buttons to switch between recognition modes. It is worth noting, however, that disabled drivers may use a manual gear lever configured with a pneumatic clutch. This solution requires a sensor located on the gear lever, which in turn makes it difficult to press such buttons. The micro-gestures themselves should be performed with the thumb, the forefinger and their combinations. It has been recognized that the actual duration of practical use may be limited: on roads such as motorways, drivers travel for long periods without shifting gears and therefore do not have to keep their hands close to the gear lever. It is believed, however, that drivers perceive resting the arm on the center armrest with the hand on or near the gear lever as a natural position [21]. For gesture-based interaction, this can provide the driver with a more relaxed basic posture than performing free-hand gestures in vertically higher interaction spaces, such as in front of the center console. Importantly, cars for people with disabilities are usually equipped with an automatic transmission, which eliminates the need to change gears; as a result, such a position is not natural for these drivers.

It is worth noting that general traffic recommendations call for keeping both hands on the steering wheel whenever possible. However, controlling the various on-board systems requires drivers to take one hand off the wheel to press a specific button or turn a knob. In this context, making a gesture that does not require visual focus on the particular control seems more natural. Such a gesture can be performed without distracting the driver's attention from observing the traffic situation.

A camera mounted inside the vehicle can also serve purposes other than the natural user interface. For example, it allows the analysis of additional hand activities or of objects in the car that are important for advanced driver assistance systems. In addition, it makes it possible to identify the system user (driver or passenger), which can then be used for further personalisation [22].

It was found that gesture-based interfaces implemented in the region bounded by the “triangle” of the steering wheel, the rear-view mirror and the gearshift are highly accepted by users [21]. Data evaluation reveals that most subjects perform gestures in that area with a similar spatial extent and in less than 2 s on average. Besides allowing the interface location to be customized and being non-invasive, the system can lead to further innovative applications, e.g., for use outside the vehicle. The use of cameras and a gesture-based interface can also bring potential cost and safety benefits. The camera itself is relatively easy and cheap to install. The use of contactless interfaces and their impact on the visual and mental load of the driver require thorough examination. For example, accurate hand-eye coordination may be less needed with a contactless interface than with a touch screen, which may reduce the visual attention demanded by the interface [22].

Valuable information on the functions that drivers would most like to control with gestures is provided by [22]. The authors surveyed 67 participants of different ages, genders and levels of technical affinity. In line with recent research on short-term and working memory, which indicates that mental storage capacity is about five gestures [23,24], participants were asked to name the five functions they would prefer to control with a gesture-based in-car interface. They were also asked which finger/hand gesture they would like to use for each of these functions; participants then got into a parked car and performed the gestures in a static setting, i.e., without driving. Using approval voting by 18 driving experts (car drivers with high yearly mileage), the five functions receiving the most votes were chosen. In a later refinement, individual gestures were added to or replaced in the previously selected set to make the whole set more homogeneous and to minimize potential side effects, such as non-recognition and poor gesture-to-function mapping [25]. Furthermore, it is evident that free-hand gestures induce fatigue with prolonged use [25]. For these reasons, safety-related tasks should not be assigned solely to gestures.

The majority of car manufacturers offer touchscreen multimedia control systems. In the latest solutions, these systems are based on two touch screens and a voice assistant [26]. In premium vehicles, solutions based on gesture recognition can be found [27]. In the case of BMW, the Gesture Control system allows the driver to accept or reject a phone call, increase or decrease the volume, and assign a favorite function to the victory gesture.

In recent years, wireless approaches to human interaction with devices have also been considered, both for gesture control [28] and for human activity recognition [29,30]. The idea of wireless gesture control is to use RFID signal phase changes to recognize different gestures. Gestures are performed in front of tags deployed in the environment; the multipath propagation of each tag's signal changes with the hand movements, which can be captured in the RFID phase information. The first experiments have brought promising results [28]. Smart watches and wristbands, equipped with many sensors, are likely to become another means of gesture control [31], and solutions of this kind are supported by deep-learning methods [32,33]. However, smart watches and wristbands are customarily worn on the left wrist, which makes such equipment reasonable for drivers of vehicles with the steering wheel on the right side. This is an interesting research area, but it is beyond the scope of this article.

Gesture recognition technology is widely expected to be the next-generation in-car user interface. A market study conducted in 2013 by IHS Automotive examined gesture recognition technology and proximity sensing. According to IHS, the global market for automotive proximity and gesture recognition systems, which allow motorists to control their infotainment systems with a simple wave of the hand, will grow to more than 38 million units in 2023, up from about 700,000 in 2013. Automakers including Audi, BMW, Cadillac, Ford, GM, Hyundai, Kia, Lexus, Mercedes-Benz, Nissan, Toyota and Volkswagen are all in the process of implementing some form of gesture technology in their automobiles [34].

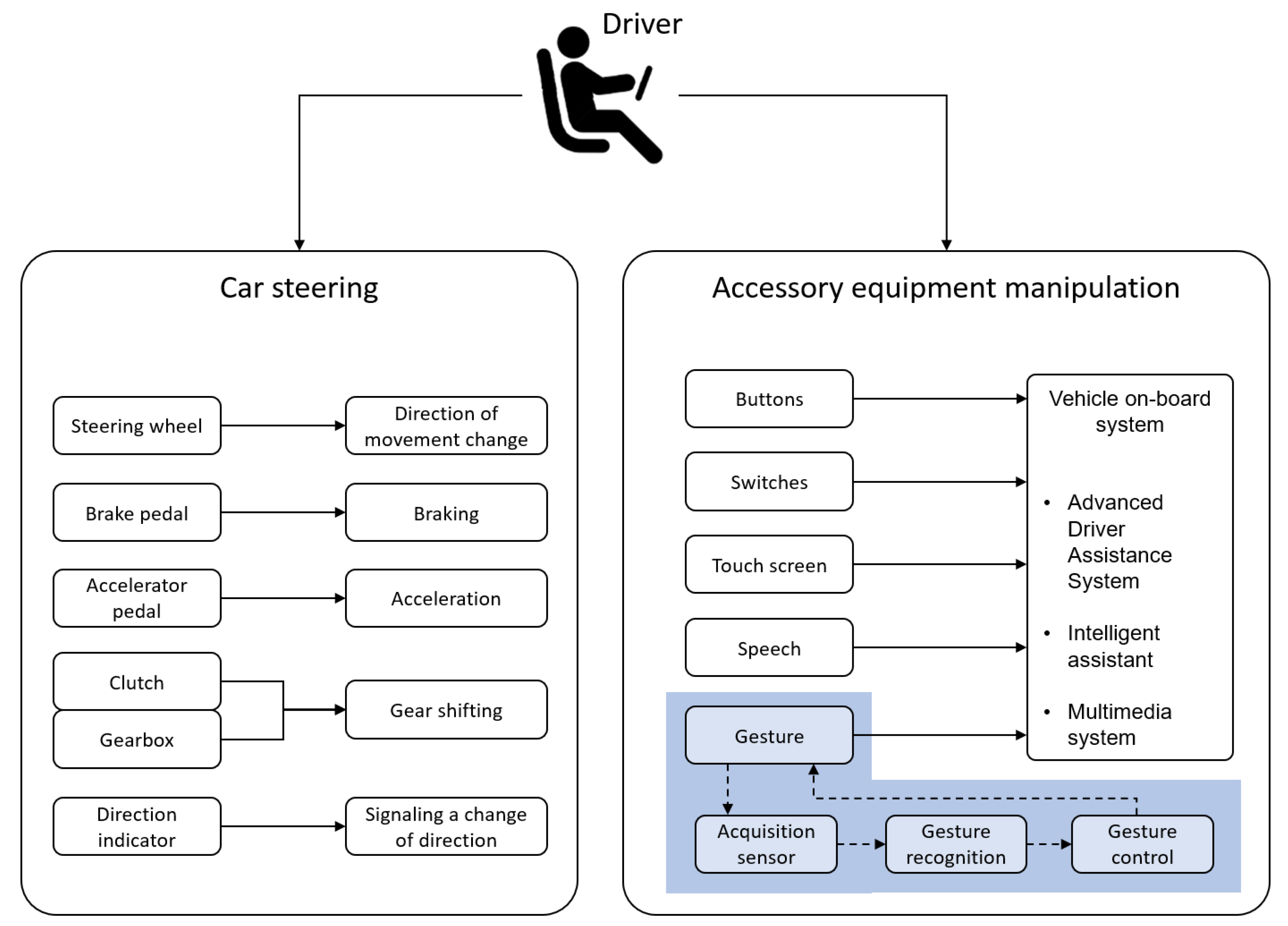

3. Proposed Approach of Gesture-Based Interaction Interface

While driving, a driver is obliged to perform maneuvers safely, paying attention to other road users. Safety-critical actions include acceleration, braking and changing the direction of movement (see Figure 1). These actions are performed with dedicated controls, i.e., the pedals and the steering wheel, or, in the case of motor-impaired drivers, with special assistive tools. Many cars are still equipped with a manual gearbox and a clutch. The direction indicator is also recognized as a mechanism crucial for driving. Some researchers classify such activities as secondary tasks, i.e., steering support activities [20]. Nevertheless, they are important for safety reasons, and we include them in one box.

On the right-hand side of Figure 1, five control mechanisms are listed: buttons, switches, a touch screen, speech and gestures. These modes are used to operate accessory car equipment supporting the driver (tertiary tasks). Gestures are highlighted in the diagram since they are the scope of our investigation. In modern solutions, a gesture-based system requires hardware for acquisition, a dedicated engine for gesture recognition and a feedback mechanism for control. All of these components are analysed in the following subsections.

3.1. Gesture Acquisition

As presented in the “Related Works” section, gestures can be acquired using different technologies. The wide use of devices such as smartwatches, equipped with additional sensors (e.g., accelerometers or gyroscopes), can significantly facilitate gesture recognition. There are also other wireless technologies (e.g., RFID), but we focus our attention on computer vision because of its non-invasive nature.

The interior of a car is a difficult environment because the lighting conditions vary greatly [6,35]. To recognize the actions performed by a driver, the scene appearance must first be acquired. Typical capture devices, i.e., cameras operating in the visible spectrum (wavelengths from about 380 to 780 nm), work properly only in good lighting conditions. Although the parameters of such cameras are often very high in terms of resolution, sensitivity and dynamic range, they suffer from the poor lighting conditions encountered on the road. Obviously, the driver's face cannot be illuminated with an additional visible light source while driving, and for this reason other acquisition mechanisms are employed. Thermal imaging is insensitive to the light source, its power and its type: it registers the temperature of objects, producing a thermal image of the scene. Although this technique can be successfully used in human-computer interfaces (e.g., [36]), the price of thermal cameras is still a serious barrier.

In view of the above, the most appropriate solution seems to be the depth map technique. Here, the distance from scene objects to the camera is represented as pixel brightness. There are three major approaches to acquiring depth information: stereo vision, structured light and time-of-flight. The first requires a stereoscopic camera or a pair of calibrated cameras. Because it relies on regular cameras, this approach inherits their sensitivity to lighting and is therefore not suitable for use in a vehicle. In the structured-light approach, an infrared light pattern (invisible to the human eye) is projected onto the scene. The pattern, distorted by 3D objects, is registered by an IR camera, which after geometric reconstruction provides 3D information about the observed scene. The time-of-flight approach is based on measuring the time required for a light signal to travel from the source to the scene and back to the sensor.
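To make the time-of-flight principle concrete, the sketch below converts a measured round-trip time into a distance (the light covers the path between camera and object twice, hence d = c·Δt/2) and maps a depth value to an 8-bit pixel brightness, as in the depth map representation described above. It is an illustration under assumptions: the near/far working range of 0.2 to 1.5 m and the convention that nearer objects appear brighter are hypothetical choices, not parameters of any particular sensor.

```python
SPEED_OF_LIGHT_M_S = 299_792_458.0  # speed of light in vacuum


def tof_distance_m(round_trip_time_s: float) -> float:
    """Distance to an object from the round-trip time of a light signal.

    The signal travels camera -> object -> camera, so the one-way
    distance is half of the total path length.
    """
    return SPEED_OF_LIGHT_M_S * round_trip_time_s / 2.0


def depth_to_brightness(depth_m: float, near_m: float = 0.2, far_m: float = 1.5) -> int:
    """Encode a depth value as an 8-bit brightness (nearer = brighter).

    The working range [near_m, far_m] is a hypothetical in-cabin range;
    depths outside it are clipped to the nearest bound.
    """
    clipped = min(max(depth_m, near_m), far_m)
    return round(255 * (far_m - clipped) / (far_m - near_m))


if __name__ == "__main__":
    d = tof_distance_m(4e-9)  # a 4 ns round trip
    print(f"{d:.3f} m -> brightness {depth_to_brightness(d)}")
```

A 4 ns round trip corresponds to roughly 0.6 m, a typical hand-to-dashboard distance; resolving 1 cm of depth this way requires distinguishing round-trip differences of about 67 ps.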

For our study we employed the Intel RealSense SR300 camera (shown in Figure 2). The alternatives considered were MS Kinect and Leap Motion. The first and second generations of Kinect hardware were excluded due to their dimensions; moreover, both versions are now considered obsolete and are no longer manufactured. The latest release of Kinect (the Azure Kinect, released in 2020) relies strictly on cloud computing, which excludes its use in sparsely populated areas outside network coverage. The Leap Motion could have been a good alternative because of its small size and quite decent operation. However, it recognizes only a limited number of movement patterns (i.e., swipe, circle, key tap and screen tap).

In view of the above, the Intel RealSense is the solution best suited for our research. Its compact size suits in-car use. The sensor includes both infrared and RGB colour cameras, an IR laser projector and a microphone array. It captures depth images of the scene in both good and poor lighting conditions at the relatively short distances occurring in the car cabin. It offers 60 fps at 720p resolution and 30 fps at 1080p. Its hardware requirements also seem undemanding from today's perspective: the sensor requires at least a 6th Generation Intel® Core™ processor and 4 GB of memory. With a price slightly exceeding $100, a component of this kind seems well suited for widespread use.

We performed all experiments on a laptop equipped with an 8th generation Intel® Core™ i5 processor and 8 GB of memory, and observed no delays during operation.

3.2. Gesture Recognition

Two types of hand gestures are defined in the scientific literature, namely static and dynamic [37]. Static gestures are sometimes called postures, while dynamic ones are called hand motions. A static gesture denotes a specific orientation and position of the hand in space, held for a specific period of time without any associated movement. A thumb up as an OK symbol is a good example. A dynamic gesture requires a specific movement performed within a predefined time window. Moving a hand forward in the air as if pressing a button, or waving a hand, are examples of dynamic gestures.
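The distinction between static and dynamic gestures can be sketched as a simple rule on a short window of tracked hand-centre positions: if the hand moves noticeably, the window holds a hand motion; if it stays still long enough, it holds a posture. The 3 cm displacement threshold, the 0.5 s hold time and the 30 fps frame rate below are illustrative assumptions, not values used in our system.

```python
import math
from typing import Sequence, Tuple

Point3D = Tuple[float, float, float]  # hand centre (x, y, z) in metres


def classify_window(track: Sequence[Point3D], fps: float = 30.0,
                    hold_s: float = 0.5, max_disp_m: float = 0.03) -> str:
    """Label a window of hand-centre samples as 'static', 'dynamic' or 'undecided'.

    - 'dynamic': the hand moved more than max_disp_m away from where it started;
    - 'static': the hand stayed within max_disp_m for at least hold_s seconds;
    - 'undecided': the hand is still, but not for long enough yet.
    All thresholds are hypothetical defaults.
    """
    if not track:
        raise ValueError("empty track")
    start = track[0]
    largest_move = max(math.dist(start, p) for p in track)
    if largest_move > max_disp_m:
        return "dynamic"
    duration_s = (len(track) - 1) / fps  # time spanned by the samples
    return "static" if duration_s >= hold_s else "undecided"
```

In a real pipeline such a window would slide over the frame stream, and a posture classifier (e.g., fist versus victory sign) would run only on windows labelled 'static'.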

Figure 3 presents the set of gestures employed in our investigation. Gesture number 1 is a movement of the hand, facing the sensor, to the left and immediately back to the starting position. Gesture number 2 is similar, but the movement is to the right. Gestures number 3 and 4 are, respectively, a movement of the hand, facing the sensor, downwards and immediately back to the starting position, and a movement of the hand, facing the sensor, upwards and immediately back to the starting position. These four gestures constitute a typical swipe mechanism.
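The out-and-back swipes g-1 to g-4 can be illustrated by a rule-based classifier over a hand-centre trajectory: find the farthest excursion from the start, check that it is large enough, check that the hand returned, and take the dominant axis and its sign as the direction. This is a minimal sketch, not the recognition engine of our prototype, which relies on the RealSense libraries; the coordinate convention (+x towards the user's right, +y up, i.e. already mirrored from the sensor's view) and both thresholds are assumptions.

```python
import math
from typing import Optional, Sequence, Tuple

Point2D = Tuple[float, float]  # (x, y) in metres; +x = user's right, +y = up (assumed)

SWIPE_IDS = {"left": "g-1", "right": "g-2", "down": "g-3", "up": "g-4"}


def classify_swipe(track: Sequence[Point2D], min_amplitude_m: float = 0.10,
                   max_return_error_m: float = 0.04) -> Optional[str]:
    """Return 'g-1'..'g-4' for an out-and-back swipe, or None if none is found."""
    x0, y0 = track[0]
    # Farthest excursion from the starting position.
    peak = max(track, key=lambda p: math.hypot(p[0] - x0, p[1] - y0))
    dx, dy = peak[0] - x0, peak[1] - y0
    if math.hypot(dx, dy) < min_amplitude_m:
        return None  # movement too small to count as a swipe
    xe, ye = track[-1]
    if math.hypot(xe - x0, ye - y0) > max_return_error_m:
        return None  # the hand did not come back to the starting position
    if abs(dx) >= abs(dy):
        direction = "right" if dx > 0 else "left"
    else:
        direction = "up" if dy > 0 else "down"
    return SWIPE_IDS[direction]
```

Requiring the return to the starting position is what separates these gestures from a plain hand displacement, which matches the "immediately back" part of their definitions.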

Gesture number 5 means: move the hand close to the sensor and back again in a way that imitates pressing a button. The first five gestures presented in Figure 3 are typical dynamic gestures. Gesture number 6 is defined as showing a clenched fist towards the sensor. Like the last one, this is a static gesture. The 7th gesture is defined as presenting the hand with the index and middle fingers pointing upwards and the other fingers hidden.
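In the same spirit, the push gesture (g-5) and the two static gestures (g-6, g-7) can be sketched as follows. The push test looks at the depth of the hand's closest point over time; the posture test assumes a hypothetical upstream detector that reports which fingers are extended. The thresholds and finger names are illustrative assumptions.

```python
from typing import AbstractSet, Optional, Sequence


def is_push(depth_track_m: Sequence[float], min_travel_m: float = 0.05,
            max_return_error_m: float = 0.03) -> bool:
    """g-5: the closest hand point approaches the sensor and then withdraws.

    depth_track_m holds the distance (m) of the hand's closest point per frame.
    """
    if len(depth_track_m) < 3:
        return False
    start, end = depth_track_m[0], depth_track_m[-1]
    nearest = min(depth_track_m)
    i_nearest = depth_track_m.index(nearest)
    approached = start - nearest >= min_travel_m
    returned = abs(end - start) <= max_return_error_m
    # The nearest sample must lie strictly inside the window: approach, then withdrawal.
    ordered = 0 < i_nearest < len(depth_track_m) - 1
    return approached and returned and ordered


def classify_posture(extended_fingers: AbstractSet[str]) -> Optional[str]:
    """g-6 (clenched fist) or g-7 (victory sign) from the set of extended fingers."""
    if not extended_fingers:
        return "g-6"  # no finger extended: fist
    if set(extended_fingers) == {"index", "middle"}:
        return "g-7"  # only index and middle fingers up: victory sign
    return None  # any other hand shape is outside our gesture set
```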

For our investigation we chose only gestures that seem easy to perform, are natural and engage a small number of muscles: four directional swipe gestures, a push gesture and two static gestures (clenched fist and victory sign). It must be noted that many gestures are not well suited for the in-car environment: they require more space to be performed properly or engage too many muscles. The latter is especially important for people with physical limitations. For example, a “thumb down” gesture is performed with a closed hand and a straightened thumb, but it requires a rotary arm movement so that the thumb points down. Other gestures, like “spread fingers”, might be extremely difficult for people with disabilities. We believe that the selected gestures are the easiest ones, but user-specific adaptation should certainly be considered in the future.

Gesture recognition is still an active research area, with many established solutions available to researchers. In our proposal, we based the gesture recognition module on the libraries provided with the Intel RealSense SR300; the hardware details of this solution were provided in Section 3.1. Here, the gesture recognition mechanisms are based on skeletal and blob (silhouette) tracking. The most advanced mode, called “Full-hand”, tracks 22 joints of the hand in 3D [38]. This mode requires the most computational resources but offers the recognition of pre-defined gestures. The second is the “Extremities” mode, which tracks 6 points: the hand's top-most, bottom-most, right-most, left-most, center and closest (to the sensor) points [38]. Other modes include “Cursor” and “Blob”; they are reserved for the simplest cases, when only the central position of a hand or another object needs to be tracked.
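To illustrate what an extremities-style tracker reports, the sketch below computes the six points from a small depth map in which background pixels have already been set to 0 (an assumption; a real pipeline must first segment the hand). It is not the RealSense implementation: it works in image coordinates (row, column), so “left” and “right” refer to image columns, and the centre is simply the centroid of the hand pixels.

```python
from typing import Dict, List, Tuple


def extremities(depth: List[List[float]]) -> Dict[str, Tuple[float, float]]:
    """Top-most, bottom-most, left-most, right-most, centre and closest points.

    depth[r][c] > 0 marks a hand pixel (distance in metres); 0 marks background.
    Points are returned as (row, column); ties go to the first pixel in row-major order.
    """
    hand = [(r, c, d) for r, row in enumerate(depth) for c, d in enumerate(row) if d > 0]
    if not hand:
        raise ValueError("no hand pixels in the depth map")
    top = min(hand, key=lambda p: p[0])
    bottom = max(hand, key=lambda p: p[0])
    left = min(hand, key=lambda p: p[1])
    right = max(hand, key=lambda p: p[1])
    closest = min(hand, key=lambda p: p[2])  # smallest distance to the sensor
    n = len(hand)
    centre = (sum(p[0] for p in hand) / n, sum(p[1] for p in hand) / n)
    return {
        "top": top[:2], "bottom": bottom[:2], "left": left[:2],
        "right": right[:2], "centre": centre, "closest": closest[:2],
    }
```

Tracking only these few points per frame is far cheaper than fitting a 22-joint skeleton, which is why such a mode suits simple swipe and push gestures.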

3.3. Gesture Employment

Having recognized a gesture, one can employ it for control or communication purposes. Since our study focuses on multimedia system control by persons with disabilities, we connected each gesture to a specific function. Our prototype vehicle on-board system is operated using the gestures presented in Figure 3 according to the following scheme:

g-1: previous song/station,

g-2: next song/station,

g-3: previous sound source,

g-4: next sound source,

g-5: turn on the multimedia system,

g-6: turn off the multimedia system,

g-7: a favourite function.

As can be observed, the dynamic swipe gestures are used to navigate through sound sources and songs or radio stations. The system is switched on with the in-air push gesture and switched off with the fist gesture. The victory sign is reserved for a favourite function.
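The gesture-to-function scheme above can be expressed as a lookup table driving a small state model of the multimedia system. The sketch below is hypothetical: the action names, the list of sound sources, the wrap-around of sources and the rule that gestures other than g-5 are ignored while the system is off are illustrative assumptions, not features of any real on-board system.

```python
from dataclasses import dataclass

# Scheme from the text: gesture id -> function.
GESTURE_ACTIONS = {
    "g-1": "previous_track",
    "g-2": "next_track",
    "g-3": "previous_source",
    "g-4": "next_source",
    "g-5": "power_on",
    "g-6": "power_off",
    "g-7": "favourite",
}

SOURCES = ("radio", "usb", "bluetooth")  # hypothetical sound sources


@dataclass
class MultimediaState:
    on: bool = False
    source: int = 0  # index into SOURCES
    track: int = 0   # song / station index within the source
    favourite_calls: int = 0

    def apply(self, gesture: str) -> str:
        """Apply one recognized gesture and return the action name."""
        action = GESTURE_ACTIONS[gesture]  # KeyError for unknown gestures
        if action == "power_on":
            self.on = True
        elif not self.on:
            return "ignored"  # assumption: only g-5 works while the system is off
        elif action == "power_off":
            self.on = False
        elif action == "next_track":
            self.track += 1
        elif action == "previous_track":
            self.track = max(0, self.track - 1)
        elif action == "next_source":
            self.source = (self.source + 1) % len(SOURCES)
        elif action == "previous_source":
            self.source = (self.source - 1) % len(SOURCES)
        elif action == "favourite":
            self.favourite_calls += 1
        return action
```

Replaying the gestures g-5, g-3, g-2, g-4, g-1 on a fresh state leaves the system on, with the source and track indices back where they started, since each navigation step is later undone by its opposite.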

4. Evaluations

Evaluation of HCIs is generally user-based. During the experiments, participants are given tasks, each constituting a set of actions to be performed; these are called scenarios. The correctness of action execution is verified and performance measures are gathered.

In our experiments we investigated the ease of gesture-based control by people with physical disabilities. Figure 4 presents the experimental setup. For safety reasons, we decided not to conduct experiments in traffic. The operation of both hands was evaluated; because we used cars with the steering wheel on the left-hand side, the driver was moved to the passenger seat when left-hand operation was tested. Depending on their own preferences or degree of disability, each user chose one of two options for mounting the sensor: in the center dashboard (Figure 4 top) or near the armrest and gear shift (Figure 4 bottom). In the latter case, the driver is able to perform gestures in the horizontal plane, which is important for people with limited movement in the shoulder area. The sensor itself was attached with double-sided tape; its exact location is denoted by the red circle.

In the examined version of the prototype, the interface was not integrated with the car's console. We assume that control would take place via the CAN bus, with the application informing the multimedia system which functions should be activated. Currently, the application outputs messages in JSON format, which are used for validation. The dedicated application runs on a laptop in the car.
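For illustration, a validation message of the kind mentioned above could be produced as in the sketch below. The JSON field names and the one-byte CAN payload encoding are hypothetical and do not describe the actual message format of our application. The CAN helper only reflects the fact that a classical CAN data frame carries at most 8 data bytes, so a compact encoding of the gesture would be needed on the bus.

```python
import json

# Gesture id -> function, following the scheme in Section 3.3.
FUNCTIONS = {
    "g-1": "previous song/station",
    "g-2": "next song/station",
    "g-3": "previous sound source",
    "g-4": "next sound source",
    "g-5": "turn on the multimedia system",
    "g-6": "turn off the multimedia system",
    "g-7": "favourite function",
}


def gesture_message(gesture_id: str, hand: str, timestamp_s: float) -> str:
    """Serialize a recognized gesture as a JSON message (hypothetical schema)."""
    if gesture_id not in FUNCTIONS:
        raise ValueError(f"unknown gesture: {gesture_id}")
    return json.dumps({
        "gesture": gesture_id,
        "function": FUNCTIONS[gesture_id],
        "hand": hand,
        "timestamp": timestamp_s,
    }, sort_keys=True)


def can_payload(gesture_id: str) -> bytes:
    """Hypothetical compact encoding: the gesture number as a single data byte."""
    if gesture_id not in FUNCTIONS:
        raise ValueError(f"unknown gesture: {gesture_id}")
    return bytes([int(gesture_id.split("-")[1])])
```

Keeping the human-readable JSON for validation and a separate compact payload for the bus lets the same recognition output serve both the current prototype and a future CAN integration.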

In the next section, we briefly characterise our study group. Then, we describe the experiments.

4.1. Characteristic of Research Group

The research study was conducted in two stages: (1) an e-survey on NUI and (2) a set of gesture recognition experiments. A total of 40 volunteers took part in the first stage, including 23 fully physically fit people and 17 people with mobility disabilities, regardless of gender. The survey results are presented in Appendix A, Figure A1. The survey included questions determining physical characteristics (e.g., degree and type of disability) as well as questions assessing knowledge of NUI. Most respondents (84%) were active drivers, and 69% of all participants think that technology can significantly help to reduce the disability barrier in vehicle use. 74% of our respondents were severely disabled and 22% had a moderate disability; the remaining 4% had a light disability. All of our respondents have some steering aid tools in their cars, which means that their cars had to be specially adapted. Half of the respondents knew what NUI means and could identify popular NUI types (e.g., gesture-based or voice-based interfaces). Very importantly, however, most of them (52%) had not used NUI technology before. Thanks to this, these people could be invited to the subsequent gesture recognition interface (GRI) experiments.

Thus, a total of 13 volunteers took part in the second stage, including 4 fully physically fit people and 9 people with mobility disabilities, regardless of gender (Table 2). It must be noted that all disabled participants were physically independent and had all their upper and lower limbs. The age distribution of the 13 participants is as follows: under 30 (u_01, u_02, u_03, u_08, u_11), 31–40 (u_04, u_09, u_10, u_12) and over 41 (u_05, u_06, u_07, u_13). Below is a brief description of the participants with disabilities.

U_02 suffers from cerebral palsy, with four-limb paresis predominantly affecting the left side. The left hand is spastic; three weeks before testing, it was operated on (wrist flexor tenotomy). The user is not able to operate any car switch with this hand in the conventional way. The right hand is functional, and the strength in both limbs according to the Lovett scale is 4/5. The tests for the right hand did not show any deviations from the test scenarios. The results are different for the left hand: some gestures were not recognized immediately and had to be repeated several times. They also required additional focus of the user on the movements performed. During the tests the left hand tired quickly, and increased spasticity was observed. This could be due to the participant's illness as well as to the surgery three weeks before the test.

U_03 suffers from myelomeningocele in the lumbosacral segment, between the L5 and S1 vertebrae. He has paresis of the lower limbs, so he uses a manual gas and brake mechanism. Because of the location of the buttons, he has problems reaching the side window buttons and controlling the multimedia system and air conditioning (he operates them only when the vehicle is not moving). For this person, the more convenient location of the depth camera turned out to be near the gear shift lever, since his car has specialized equipment that requires constant use of one hand to control the gas and brake. The same applies to u_04, …, u_08.

U_09 suffers from a rare disease, type 4 mucopolysaccharidosis, and because of this she walks with crutches every day. The biggest problem for this participant is getting in and out of the vehicle due to degeneration of the hip joints. She quickly got used to the gestures of the gesture-based system, and they became intuitive for her. She preferred the camera to be located near the gear shift lever.

U_10 suffers from paralysis of the brachial plexus and forearm on the right side, which resulted in paresis of the right hand. Her left hand is fully functional. The participant has the biggest problems with operating on-board devices such as the multimedia system, the air conditioning panel and the wiper lever. She uses a steering wheel knob, and multimedia control is possible thanks to the multifunction steering wheel. During the tests she was able to perform certain gestures with her right hand, but the system had problems recognizing them. Directional gestures were slow. Static gestures were recognized in most cases, except for the favorite gesture. The left-hand tests were performed correctly. The participant said that such a solution, in an appropriate form, would be very helpful for her. Her condition allows her to make gestures only in the horizontal plane.

Half of the participants preferred to have the gesture recognition sensor located near the armrest and gear shift (to be able to perform horizontal gestures), and the other half preferred it in the center dashboard (Table 2 and Figure 4).

4.2. Scenarios

We prepared six scenarios to be performed by each participant. Each scenario was carried out twice by each participant, once using the left hand and once using the right hand. Each scenario reflects a typical interaction with a vehicle on-board multimedia system and contains a sequence of gestures from the set presented in Figure 3. The scenarios are as follows:

S-1: g-5, g-3, g-2, g-4, g-1;

S-2: g-4, g-1, g-5;

S-3: g-5, g-7, g-6;

S-4: g-2, g-7, g-4, g-4, g-4, g-3, g-3, g-3, g-3, g-5;

S-5: g-4, g-2, g-2, g-2, g-1, g-1;

S-6: g-5, g-6, g-5, g-6, g-5, g-6.

In the first scenario (S-1), a participant is expected to turn on the multimedia system with a push gesture (g-5), switch to the previous sound source with a swipe down gesture (g-3), change to the next song with a swipe right gesture (g-2), switch to the next sound source with a swipe up gesture (g-4), and finally return to the previous song with a swipe left gesture (g-1).

All other scenarios (S-2, …, S-6) can be read in a similar way. We preferred to use different scenarios instead of repeating the same scenario several times, as this approach is closer to ordinary, everyday operation.
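The scenarios can be encoded as plain data, which also makes the workload easy to check: S-1 to S-6 contain 5 + 3 + 3 + 10 + 6 + 6 = 33 gestures, so each participant performs 33 gestures per hand. The scoring function below is a hypothetical per-scenario measure (the share of requested gestures recognized at the matching position); it is a sketch and not necessarily the measure behind the results reported in Section 5.

```python
from typing import Optional, Sequence

SCENARIOS = {
    "S-1": ["g-5", "g-3", "g-2", "g-4", "g-1"],
    "S-2": ["g-4", "g-1", "g-5"],
    "S-3": ["g-5", "g-7", "g-6"],
    "S-4": ["g-2", "g-7", "g-4", "g-4", "g-4", "g-3", "g-3", "g-3", "g-3", "g-5"],
    "S-5": ["g-4", "g-2", "g-2", "g-2", "g-1", "g-1"],
    "S-6": ["g-5", "g-6", "g-5", "g-6", "g-5", "g-6"],
}


def recognition_rate(expected: Sequence[str], recognized: Sequence[Optional[str]]) -> float:
    """Fraction of expected gestures recognized correctly, compared position by position.

    recognized[i] is the label output for the i-th requested gesture,
    or None if nothing was recognized.
    """
    if len(recognized) != len(expected):
        raise ValueError("one recognition result is needed per expected gesture")
    hits = sum(e == r for e, r in zip(expected, recognized))
    return hits / len(expected)


gestures_per_hand = sum(len(seq) for seq in SCENARIOS.values())  # 33
```

A position-by-position comparison is the simplest choice; if repeated attempts are allowed (as happened for some left-hand gestures), counting attempts per requested gesture would be a more informative alternative.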

7. Conclusions

In this article, touchless interaction with vehicle on-board systems is presented, with a particular focus on gesture-based interaction. A survey of 40 people was conducted, collecting their opinions and their knowledge of gesture control systems. Importantly, the survey was attended by both drivers and non-drivers, including physically challenged people.

A set of gestures was established. We then developed six scenarios containing gestures chosen from this set and conducted experimental studies with 13 volunteers. All experiments were carried out for each hand separately, giving an overall result of 73% for the left hand and 85% for the right hand.

Our study has significant practical importance. It shows that the location of the depth sensor is important for the comfort of the user (driver) of the gesture recognition system. Some of our respondents indicated that placing the sensor in the dashboard causes rapid hand fatigue and thus discourages the use of the system. The problem was solved by relocating the sensor to the space between the gear lever and the armrest.

As the research shows, there is interest in gesture-based systems, and further research is necessary to fully understand drivers' needs. It would be worthwhile to establish standard gestures recognized across the multimedia systems of various car manufacturers.