1. Introduction

Feature point detection is a fundamental step in image analysis and computer vision. As an important element, corner detection is still widely used today in applications including object detection, motion tracking, simultaneous localization and mapping, object recognition and stereo matching [1,2,3]. A corner detector can be successfully used for these tasks if it has good consistency and accuracy [4]. For this reason, a large amount of pioneering work has been performed on corner detection in recent years. These techniques can be broadly classified into two categories: intensity-based corner detectors [5,6,7,8,9,10] and contour-based corner detectors [11,12,13,14]. Both types of corner detectors have their respective merits and demerits.

The contour-based corner detectors are mainly based on the curvature scale space (CSS). These detectors use an edge detector, for example the Canny edge detector [15], to obtain planar curves parameterized by arc-length. The curves are smoothed by a set of multi-scale Gaussian functions, and the curvature is then calculated at each point of the smoothed curves. Finally, the candidate corners are the points of maximum absolute curvature, from which weak and false corners are excluded by thresholds. In order to improve localization, some CSS-based corner detectors additionally use a corner tracking step [13]. These detectors perform well in corner detection, but they suffer from two main problems. First, the curvature estimation technique is sensitive to local variation and noise on contours because it uses second-order derivatives of curve-point locations. Second, large-scale Gaussian functions fail to detect true corners while small-scale ones detect a number of false corners. In other words, scale selection is a difficult task. To overcome these problems, a multi-scale detector based on the chord-to-point distance accumulation (CPDA) technique was developed [14]. However, the complexity of the CPDA corner detector is high and it is difficult to improve at the level of the algorithm architecture, so it cannot be applied to many computer vision systems. Other novel techniques have also been proposed to improve performance, including the angle difference of principal directions [16], Laplacian scale-space [17] and so on. However, none of the methods in this category detects every corner region in an image. In particular, they fail to detect corner regions formed by a texture [18].
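For reference, the curvature used by CSS-type detectors is commonly written as below. The symbols are notation assumed here for illustration: u is the arc-length parameter, σ the Gaussian scale, and X, Y the coordinate functions of the curve after smoothing at scale σ.

```latex
\kappa(u,\sigma) =
\frac{\dot{X}(u,\sigma)\,\ddot{Y}(u,\sigma) - \ddot{X}(u,\sigma)\,\dot{Y}(u,\sigma)}
     {\left(\dot{X}(u,\sigma)^{2} + \dot{Y}(u,\sigma)^{2}\right)^{3/2}}
```

The second derivatives in the numerator are the reason the estimate amplifies small perturbations of the contour, which is the first problem noted above.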

The intensity-based detectors indicate the presence of a corner by using the first-order or second-order derivatives of images and calculating the corner response function (CRF) of each pixel. Moravec computes the local sum of squared differences (SSD) between an image patch and its shifted version in four different directions [5]. The point with the largest SSD in a certain range is determined as a corner. However, this technique is highly sensitive to noise and strong edges. Smith and Brady developed the smallest univalue segment assimilating nucleus (SUSAN) method for corner and edge detection [7]. This method is mainly based on a circular mask applied to the region of interest. The pixels whose brightness is similar to that of the nucleus form the univalue segment assimilating nucleus (USAN) area. However, the accuracy of this algorithm depends heavily on the threshold: if the threshold is too large or too small, corner detection errors result. Rosten and Drummond proposed the features from accelerated segment test (FAST) algorithm based on the SUSAN corner detector [9,10]. As with SUSAN, FAST uses a Bresenham circle of radius 3 pixels as the test mask. The difference is that FAST uses only the 16 pixels on the circle to decide whether a point is actually a corner. The FAST algorithm requires very little computation time, but it is very sensitive to noise and needs to be combined with other algorithms to improve its robustness. Building on Moravec’s idea, Chris Harris and Mike Stephens proposed the well-known Harris corner detection algorithm, which is one of the most important intensity-based corner detectors [6]. It computes the SSD in any direction and, at the same time, enhances the stability and robustness of the algorithm by introducing a Gaussian smoothing factor. However, the computational efficiency of the technique is noticeably low. Mair et al. further developed the FAST algorithm by constructing an optimal decision tree [19]. Leutenegger et al. proposed the binary robust invariant scalable keypoints (BRISK) algorithm by constructing a scale-space with the FAST score [20]. Alcantarilla et al. proposed a fast multi-scale detector in non-linear scale spaces [21]. Xiong et al. introduced directionality to FAST in order to avoid repeated searching [22]. These FAST-based algorithms aim to speed up detection while retaining good performance.
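As a rough illustration of the Moravec idea described above, the following sketch computes the SSD between a patch and its shifted copies in four directions. The function name, the 3 × 3 patch size, the particular four shifts and the use of the minimum SSD as the response are assumptions of this sketch, not details taken from [5].

```python
def moravec_response(img, r, c, half=1):
    """Smallest SSD between the patch centered at (r, c) and its shifted copies.

    img is a list of rows; (r, c) must lie at least half + 1 pixels from the border.
    """
    shifts = [(0, 1), (1, 0), (1, 1), (1, -1)]  # four shift directions (assumed)
    ssd_values = []
    for dr, dc in shifts:
        ssd = 0
        for i in range(-half, half + 1):
            for j in range(-half, half + 1):
                diff = img[r + i + dr][c + j + dc] - img[r + i][c + j]
                ssd += diff * diff
        ssd_values.append(ssd)
    # A shift along an edge leaves the patch unchanged, so taking the minimum
    # keeps the response low on straight edges and high only at corners.
    return min(ssd_values)


# Toy images: a bright quadrant (corner at (3, 3)) and a vertical edge.
quad = [[10 if r >= 3 and c >= 3 else 0 for c in range(7)] for r in range(7)]
edge = [[10 if c >= 3 else 0 for c in range(7)] for r in range(7)]
```

On these toy images the response is positive at the quadrant corner and zero on the straight edge, which matches the behavior the text attributes to shift-based SSD measures.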

In this paper, a robust and efficient corner detector (RECD), improved from the Harris corner detector, is proposed. It is worth noting that the corners of an image generally make up far less than 1% of all pixels. Thus, a key idea behind our method is to exclude a very large number of non-corners before detection. Because the non-maximum suppression (NMS) of the original Harris corner detector is complex, using an improved efficient NMS to reduce the complexity is another key idea. The original algorithm detects corners in three major steps. First, the image gradients are computed by convolving the image with the first-order partial derivatives of a Gaussian in the x and y directions. Second, the CRF of each pixel is computed. Last, the local maxima are retained by NMS. In order to reduce the complexity, we analyze the three steps carefully and conduct many experiments. The experimental results show that the RECD possesses good robustness and efficiency. The RECD can be used in many visual processing domains because of its high speed and good performance.

The remainder of this paper is organized as follows. In Section 2, we briefly discuss the Harris corner detector and two criteria for performance evaluation of corner detectors. Section 3 introduces our detector, with a description of the algorithm and its implementation details. Section 4 illustrates the results of comparative experiments and gives an analysis of the results. Finally, we conclude our paper in Section 5.

3. Proposed Methods

Generally, a corner can be defined as a pixel with a large gray-level change in two different directions. The RECD is mainly based on the Harris corner detector. To speed up this algorithm and enhance its robustness, the principle of the FAST algorithm is used in corner pre-detection to rule out many non-corners and to retain strong corners as real corners, as described in Section 3.1. Uncertain corners are treated as candidate corners and processed further with Harris. Then, gradient calculation is analyzed as described in Section 3.2. On the basis of the first two steps, we apply Gaussian filtering only in the neighborhood of the candidate corners, with a window size of 5 × 5, and then calculate the CRF of the candidate corners only. This step is described in Section 3.3. Finally, the resulting corners are obtained by the improved NMS as described in Section 3.4. The test images we used are from standard databases such as [24,25]. To make the experimental results persuasive and convenient to compare with other methods, we selected five images as test sets, which are shown in Figure 1.

3.1. Non-Corners Exclusion

The FAST principle is robust to transformations, especially rotation, owing to its use of a circular template. A corner is generally at the vertex of a sharp angle on an image edge. Transformations change the structure of an angle to some extent, but the continuity of an edge is relatively robust. FAST can therefore detect the structural corners and retain the uncertain corners in a transformed image. In contrast to FAST, the Harris corner detector directly performs gradient computation with a small 3 × 3 window on the whole image, so the uncertain corners can be examined further with the Harris detector. If an uncertain corner is also validated by Harris, it is a real corner.

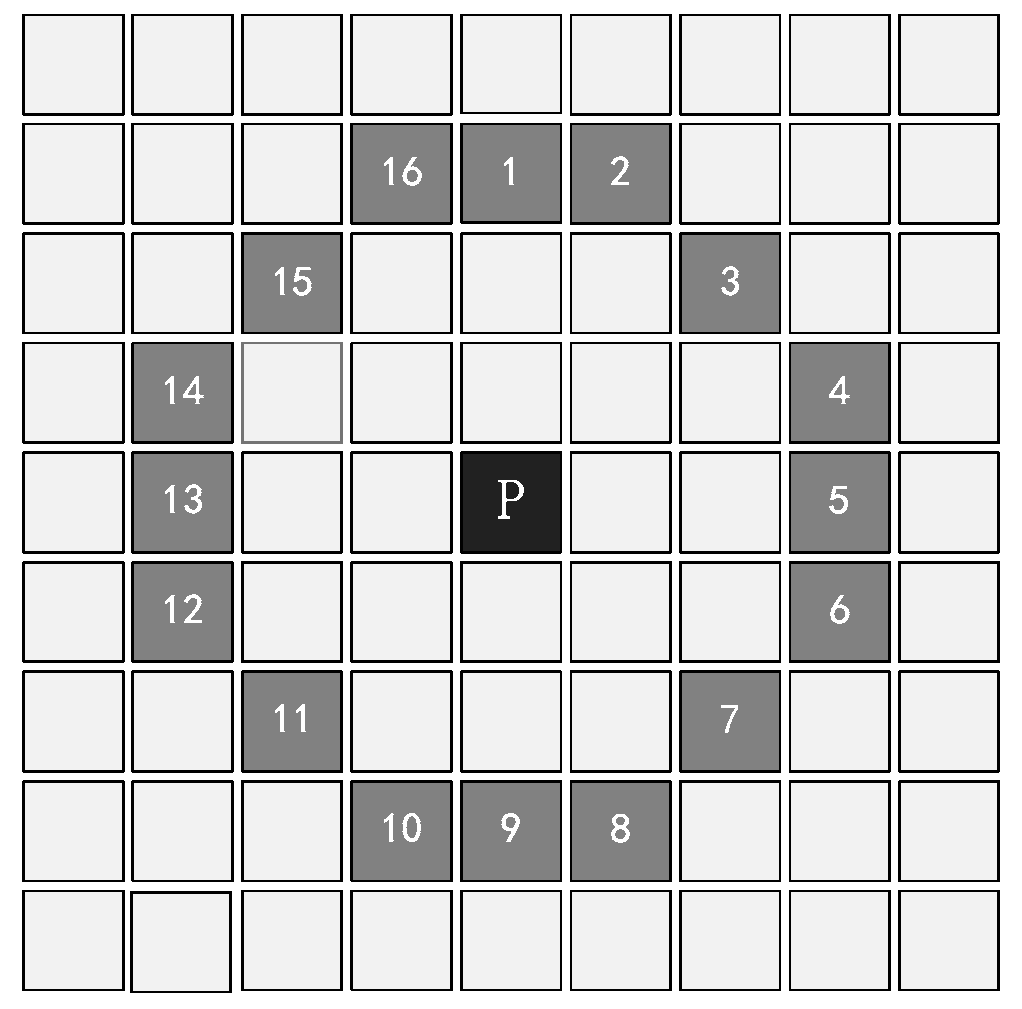

The key step of the FAST detector is to examine a circle of 16 pixels (a Bresenham circle of radius 3) surrounding the point P, as shown in Figure 2. The point P is classified as a corner if the intensities of at least N contiguous pixels on the circle are all brighter (darker) than the intensity of the point plus (minus) a threshold t. N acts as the criterion for judging corners. Too large an N leads to missed corners, while too small an N yields too many weak corners. To ensure the accuracy and stability of the algorithm, N is generally set to 12.
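The segment test described above can be sketched as follows. This is a minimal illustration, not the optimized FAST implementation: the names `CIRCLE`, `longest_run` and `is_fast_corner` are hypothetical, the image is assumed to be a list of rows of gray values, and pixel 1 is assumed to be the topmost circle pixel with numbering running clockwise.

```python
# (row, col) offsets of the 16 pixels on a Bresenham circle of radius 3,
# starting at the top (pixel 1) and proceeding clockwise.
CIRCLE = [(-3, 0), (-3, 1), (-2, 2), (-1, 3), (0, 3), (1, 3), (2, 2), (3, 1),
          (3, 0), (3, -1), (2, -2), (1, -3), (0, -3), (-1, -3), (-2, -2), (-3, -1)]


def longest_run(flags):
    """Length of the longest circular run of True values."""
    if all(flags):
        return len(flags)
    best = run = 0
    for f in flags + flags:  # doubling the list handles wrap-around
        run = run + 1 if f else 0
        best = max(best, run)
    return best


def is_fast_corner(img, r, c, t, n_contig=12):
    """Segment test at (r, c); the pixel must be at least 3 pixels from the border."""
    p = img[r][c]
    ring = [img[r + dr][c + dc] for dr, dc in CIRCLE]
    brighter = [v > p + t for v in ring]
    darker = [v < p - t for v in ring]
    return max(longest_run(brighter), longest_run(darker)) >= n_contig


# Toy image: a dark quadrant on a bright background, with P at the quadrant's vertex.
quad = [[0 if r >= 3 and c >= 3 else 100 for c in range(7)] for r in range(7)]
```

At the vertex of this right-angled quadrant only 11 contiguous circle pixels are brighter than P, so the test fails with N = 12 but passes with N = 9, which illustrates how N controls the strictness of the test.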

In this paper, the FAST corner detection principle is applied to the first step: corner pre-detection. With N = 12, any run of 12 contiguous pixels is bound to include at least three of the four pixels 1, 5, 9 and 13. We first examine pixels 1 and 9. If the intensities of both pixels are within t of the intensity of the point P, then P is excluded as a corner and the computation for the current pixel is terminated. If not, we examine pixels 5 and 13. If at least three of the four pixels are all brighter (darker) than the intensity of the point plus (minus) a threshold t, the point P is a corner. Note that if only two adjacent pixels among the four, for example pixels 1 and 5, are both much brighter or both much darker than P, then P can still be a corner. We call these uncertain corners candidate corners. For an image of n × n pixels, the time complexity of this step is O(n²). This method rejects most non-corners, so the computational complexity of the next step, gradient calculation, is greatly reduced. The FAST principle can only detect obvious corners, for which there are 12 contiguous pixels on the circle of radius 3. When fewer than 12 contiguous pixels are present, it does not perform well. This method can also detect some corners adjacent to one another. FAST thus divides the pixels into three types: non-corners, uncertain corners and strong corners. Therefore, we use this principle to exclude the non-corners and mark the strong corners as real corners. The uncertain pixels are all considered candidate corners to be detected further with Harris. In this case, almost all of the real corners are retained in the set of candidate corners and strong corners.
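A minimal sketch of this three-way pre-detection is given below, assuming the four compass pixels have already been read from the circle. The function name and the string labels are hypothetical, and treating every remaining pattern (for example, a single differing pixel) as a non-corner is an assumption of this sketch rather than something the text states.

```python
def classify_pixel(p, p1, p5, p9, p13, t):
    """Classify P from circle pixels 1, 5, 9 and 13 as in the pre-detection step."""
    # Quick rejection: pixels 1 and 9 are both within t of P.
    if abs(p1 - p) <= t and abs(p9 - p) <= t:
        return "non-corner"
    ring = [p1, p5, p9, p13]  # circular order: top, right, bottom, left
    for sign in (1, -1):  # +1 tests "brighter", -1 tests "darker"
        flags = [sign * (v - p) > t for v in ring]
        if sum(flags) >= 3:
            return "strong"
        # Two neighboring compass pixels on the same side of P: uncertain.
        if any(flags[i] and flags[(i + 1) % 4] for i in range(4)):
            return "candidate"
    return "non-corner"  # assumption: all other patterns are rejected
```

For instance, `classify_pixel(50, 100, 100, 50, 50, 10)` falls into the candidate class because only pixels 1 and 5 are brighter than P by more than t. Since each pixel needs at most four comparisons on each side, the cost per pixel is constant, consistent with the O(n²) total stated above.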

3.2. Gradient Calculation

Let each candidate corner be the center of a 3 × 3 neighborhood. After step A (Section 3.1), we calculate the gradients of the candidate corners in the x and y directions by filtering with the horizontal difference operator and the vertical difference operator, respectively. Calculating only the gradients of candidate corners, instead of filtering the whole image as in the Harris detector, reduces the computational complexity. After step A, the majority of background pixels in low-frequency regions have been ruled out, because those pixels are easy to distinguish. We assume that m (m ≪ n²) pixels remain as candidate corners. The computational complexity of step B (gradient calculation) therefore depends on the results of step A. Generally, the background contains the majority of pixels in an image, and step A filters out most of them, so m is much less than n². This removes many unnecessary background operations from the later steps. In the non-maximum suppression step, each pixel needs a 3 × 3 neighborhood in which to search for the maximum of the CRF values. Thus, the gradients should be calculated for each pixel in this 3 × 3 neighborhood, and the time complexity is O(9m). In the Harris corner detector algorithm, this step has a time complexity of O(9n²).
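The restriction of gradient computation to candidate neighborhoods might look like the sketch below. The central-difference form of the horizontal and vertical operators, the function name, and the dictionary used to cache results are assumptions made for illustration; the text does not specify the operator coefficients.

```python
def gradients_near_candidates(img, candidates):
    """Return {(r, c): (ix, iy)} for every pixel in the 3x3 neighborhood of each candidate.

    img is a list of rows; candidates must lie at least 2 pixels from the border.
    """
    grads = {}
    for r, c in candidates:
        for i in range(r - 1, r + 2):
            for j in range(c - 1, c + 2):
                if (i, j) not in grads:  # shared neighbors are computed once
                    ix = img[i][j + 1] - img[i][j - 1]  # horizontal difference
                    iy = img[i + 1][j] - img[i - 1][j]  # vertical difference
                    grads[(i, j)] = (ix, iy)
    return grads


# Toy image with a constant slope of 2 per column and 3 per row.
ramp = [[2 * c + 3 * r for c in range(6)] for r in range(6)]
grads = gradients_near_candidates(ramp, [(2, 2), (2, 3)])
```

At most nine gradient pairs are computed per candidate, which corresponds to the O(9m) count above; overlapping neighborhoods (as for the two adjacent candidates here) reduce the work further.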

3.3. Corner Response Function

The CRF step is computationally the most intensive and time-consuming stage of the Harris corner detector. The CRF of a pixel is calculated from the determinant and trace of the autocorrelation matrix as in (2). The autocorrelation matrix is evaluated by Gaussian filtering of the squares and products of the gradients as in (1). The CRF is calculated over the 3 × 3 neighborhood of each candidate pixel. By employing the method in Section 3.1, we reject a large number of non-corners, and we then calculate the CRF only from the results of step B. Because many false corners are rejected in Section 3.1, calculating the CRF only at the candidate corners accelerates this step drastically. The time complexity of this step also depends on m and is O(9m).
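The following sketch evaluates the standard Harris response, det(M) − k·trace(M)², at a single pixel from Gaussian-weighted gradient products. It is illustrative only: the function names, the value k = 0.04, σ = 1.0 and the assumption that gradient values are available over the whole 5 × 5 window are choices of this sketch, and Equations (1) and (2) of Section 2 remain the authoritative definitions.

```python
import math


def gaussian_kernel(size=5, sigma=1.0):
    """Normalized size x size Gaussian weights (sigma is an assumed value)."""
    half = size // 2
    k = [[math.exp(-(i * i + j * j) / (2 * sigma * sigma))
          for j in range(-half, half + 1)] for i in range(-half, half + 1)]
    total = sum(map(sum, k))
    return [[v / total for v in row] for row in k]


def harris_crf(ix, iy, r, c, kernel, k=0.04):
    """Harris response at (r, c) from gradient arrays ix, iy (lists of rows)."""
    half = len(kernel) // 2
    a = b = d = 0.0  # entries of the autocorrelation matrix [[a, b], [b, d]]
    for i in range(-half, half + 1):
        for j in range(-half, half + 1):
            w = kernel[i + half][j + half]
            gx, gy = ix[r + i][c + j], iy[r + i][c + j]
            a += w * gx * gx
            b += w * gx * gy
            d += w * gy * gy
    det = a * d - b * b
    trace = a + d
    return det - k * trace * trace
```

With this form, a window whose gradients all point in one direction (an edge) gives a negative response, while a window containing two distinct gradient directions gives a positive one, so only the latter survives thresholding.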

3.4. Non-Maximum Suppression

NMS can be formulated as a local maximum search [26]. In the original Harris corner detector, if the CRF of a pixel is the local maximum of its neighborhood and greater than a given threshold value, the pixel is considered to be a corner. The neighborhood is usually a (2k + 1) × (2k + 1) region centered on the pixel under consideration. The NMS is performed at each pixel. However, there is one main problem with this approach: once a local maximum is found, all other pixels in its neighborhood could be skipped, because they must be smaller than the maximum, yet they are still tested. To solve this problem, Neubeck and Van Gool proposed the efficient non-maximum suppression (E-NMS) method [26]. First, the algorithm partitions the input image into blocks of size (k + 1) × (k + 1). Then, it searches within each block for the greatest element. Finally, the full neighborhood of this greatest element is tested. Other pixels of each block can be skipped.
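The block-based search can be sketched as follows. This is a simplified illustration of the idea rather than the implementation of [26]: the function name is hypothetical, the response map is a list of rows, ties are resolved by requiring a strict maximum, and blocks at the image border are simply truncated.

```python
def block_nms(resp, k=1, threshold=0.0):
    """Return (row, col) of strict local maxima over (2k+1) x (2k+1) neighborhoods."""
    h, w = len(resp), len(resp[0])
    maxima = []
    for br in range(0, h, k + 1):
        for bc in range(0, w, k + 1):
            # 1) Greatest element inside the (k+1) x (k+1) block.
            cells = [(i, j) for i in range(br, min(br + k + 1, h))
                     for j in range(bc, min(bc + k + 1, w))]
            mi, mj = max(cells, key=lambda ij: resp[ij[0]][ij[1]])
            best = resp[mi][mj]
            if best <= threshold:
                continue
            # 2) Test the full neighborhood of that element only.
            is_max = all(
                resp[i][j] < best
                for i in range(max(mi - k, 0), min(mi + k + 1, h))
                for j in range(max(mj - k, 0), min(mj + k + 1, w))
                if (i, j) != (mi, mj)
            )
            if is_max:
                maxima.append((mi, mj))
    return maxima
```

The partition works because every pixel of a (k + 1) × (k + 1) block lies within distance k of every other pixel in that block, so a pixel that is not the block maximum cannot be a (2k + 1) × (2k + 1) local maximum and never needs a full neighborhood test.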

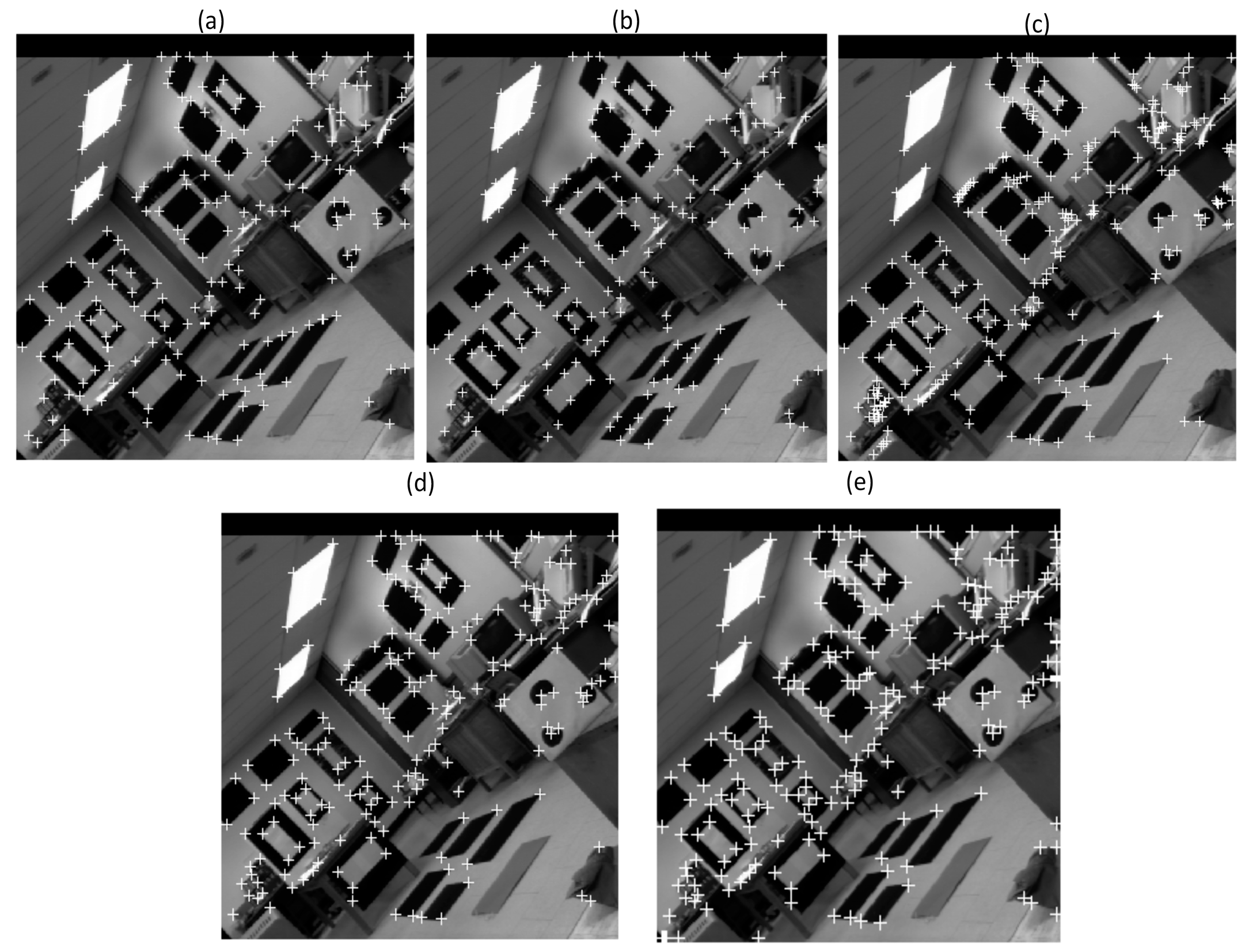

There is one main problem with the E-NMS algorithm: the NMS procedure is also executed in areas that contain no corners, which inevitably increases the complexity. This paper therefore improves the E-NMS algorithm. In the example shown in Figure 3, let the neighborhood window be 3 × 3. By employing the method in Section 3.1, we have already obtained the candidate corners. First, we take a candidate corner as the center pixel to form a block of size 3 × 3, as shown in Figure 3a. Second, we search among the four candidate corners for the maximum element. Here, we assume that P is the maximum element. The time complexity of this search is at most O(9m) and at least O(m). If the maximum of the black blocks is not the center element P, the center pixel is abandoned and the next candidate corner is tested. Third, the full neighborhood is tested for the maximum element, and the other three candidate corners in the 3 × 3 block are skipped, as shown in Figure 3b. Here the time complexity can also be taken as O(9m). Finally, we continue testing until all candidate corners, excluding the skipped ones, have been traversed. This step effectively solves the main problem and helps accelerate the proposed method. For the Harris detector, this step takes O(9n²) because it operates on each pixel.
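One possible reading of this candidate-driven procedure is sketched below. The function name, the dictionary representation of CRF values, the strict-maximum tie rule and the omission of the CRF threshold are all assumptions of this sketch; the procedure illustrated in Figure 3 may differ in detail.

```python
def candidate_nms(crf, candidates):
    """NMS over candidate corners only, with a 3 x 3 neighborhood.

    crf maps (row, col) to the CRF value and must cover the 3 x 3
    neighborhood of every candidate.
    """
    cand = set(candidates)
    skipped = set()
    corners = []
    for r, c in candidates:
        if (r, c) in skipped:
            continue
        nbrs = [(r + i, c + j) for i in (-1, 0, 1) for j in (-1, 0, 1)
                if (i, j) != (0, 0)]
        cand_nbrs = [q for q in nbrs if q in cand]
        # Step 2: compare the center only against the other candidates in the block.
        if any(crf[q] >= crf[(r, c)] for q in cand_nbrs):
            continue  # center abandoned; move on to the next candidate
        # Step 3: full neighborhood test for the surviving center.
        if all(crf[q] < crf[(r, c)] for q in nbrs):
            corners.append((r, c))
        # The other candidates in the block are smaller than the center,
        # so they cannot be local maxima and are skipped.
        skipped.update(cand_nbrs)
    return corners
```

Because the loop runs over candidates rather than over image blocks, regions without candidates cost nothing, which is the saving over E-NMS that the text describes.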

For a large image of n × n pixels, the total time complexity of Harris is O(9n² + n² + 9n²). The RECD takes O(n² + 9m + 9m + 10m) or O(n² + 9m + 9m + 18m). Thus if m < 4n²/7, the RECD is faster. In fact, m is far less than n² for a realistic image because many non-corners are excluded. The proposed RECD can therefore improve the detection efficiency.
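Taking the operation counts above at face value, the break-even points can be worked out as follows. This is indicative arithmetic only, since the constants inside O(·) for different steps are not strictly comparable; the labels T with "best" and "worst" are notation introduced here for the two NMS cases.

```latex
\begin{aligned}
T_{\mathrm{Harris}} &= 9n^2 + n^2 + 9n^2 = 19n^2,\\
T_{\mathrm{RECD}}^{\mathrm{best}} &= n^2 + 9m + 9m + 10m = n^2 + 28m,\\
T_{\mathrm{RECD}}^{\mathrm{worst}} &= n^2 + 9m + 9m + 18m = n^2 + 36m,\\
n^2 + 28m < 19n^2 &\iff m < \tfrac{9}{14}\,n^2,\\
n^2 + 36m < 19n^2 &\iff m < \tfrac{1}{2}\,n^2.
\end{aligned}
```

The threshold 4n²/7 quoted above lies between these two bounds, and m < n²/2 is sufficient in either case. As a hypothetical example, m = 0.01n² gives a worst-case count of 1.36n², roughly 14 times smaller than 19n².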