1. Introduction

As an important alternative to the human eye for detecting, identifying, and tracking moving targets steadily or continuously, electro-optical tracking systems (EOTSs) have drawn interest around the world [1,2,3]. In an EOTS, in order to provide highly accurate results within a short response time, one priority is a timely and accurate auto-focus technology (AFT) that captures the image information of moving objects [4,5,6].

At present, there are two main types of AFTs [7,8]: conventional AFTs based on distance measurement, and AFTs based on digital image processing. Conventional AFTs include the image deflection method and the position sensitive detector (PSD) distance measurement method [9], which mainly rely on infrared or ultrasonic distance measurements [10,11] with dedicated transmitters and receivers, increasing the cost of the system. In addition, ultrasonic methods cannot auto-focus accurately on objects behind glass [12], which limits their usage scenarios. Compared to conventional AFTs, auto-focusing methods based on digital image processing (DIP-AF) offer integrated, miniaturized, and low-cost solutions [13] for applications such as digital cameras, video cameras, and microscope imaging [14,15]. However, little attention has been paid to AFTs for telescope systems, which are critical for capturing large amounts of image information through high-resolution CCDs in astronomical observation [16,17]. To investigate the focusing performance of a telescope system, in this paper we analyze the effects of the image estimation function and of the focusing window selection, and we propose a novel, highly sensitive adaptive dynamic focusing window selection method. This paper is organized as follows: the principle of DIP-AF is first given in Section 2, and the image estimation functions of different methods are then compared in Section 3. In Section 4, we propose a novel method to improve the detection accuracy and speed of the DIP-AF of a telescope system. Finally, conclusions are drawn in Section 5.

2. Auto-Focus Experimental Setup

Generally speaking, if a CCD camera sensor is off the focal plane of an optical system, only defocused images with blurred edges can be obtained. In the frequency domain, this means that the high-frequency components of an image with distinct edges are filtered out by the low-pass characteristics of the system [18]. To capture a clear image, the basic idea of AFT is to move the lens of the optical system to a position where the CCD camera sensor lies on the focal plane. Abundant high-frequency (HF) components are then obtained, and the image shows distinct boundary contours. In digital image processing, an HF filter is applied to the fast Fourier transform (FFT) of the CCD image to extract its HF components [19]. If the amplitudes of these HF components exceed a preset value, the CCD image is considered clear enough, i.e., auto-focus has been achieved [20].
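The HF criterion can be illustrated with a minimal sketch. It assumes a small grayscale image stored as a list of rows. The names `dft2` and `hf_energy_ratio`, the 0.25 cycles/pixel cutoff, and the use of an energy ratio as the score are illustrative assumptions, not the system's actual filter. A naive DFT stands in for the FFT only to keep the example dependency-free.

```python
import cmath
import math


def dft2(img):
    """Naive 2-D DFT, O((MN)^2); only suitable for tiny illustrative images."""
    M, N = len(img), len(img[0])
    return [[sum(img[x][y] * cmath.exp(-2j * math.pi * (u * x / M + v * y / N))
                 for x in range(M) for y in range(N))
             for v in range(N)]
            for u in range(M)]


def hf_energy_ratio(img, cutoff=0.25):
    """Share of non-DC spectral energy above `cutoff` cycles/pixel (hypothetical score)."""
    M, N = len(img), len(img[0])
    F = dft2(img)
    total = high = 0.0
    for u in range(M):
        for v in range(N):
            if u == 0 and v == 0:
                continue  # DC term = mean brightness, carries no edge information
            # Map the DFT index to a signed frequency in cycles/pixel, in [-0.5, 0.5]
            fu = (u if u <= M // 2 else u - M) / M
            fv = (v if v <= N // 2 else v - N) / N
            power = abs(F[u][v]) ** 2
            total += power
            if math.hypot(fu, fv) > cutoff:
                high += power
    return high / total if total > 0 else 0.0
```

Because defocus acts as a low-pass filter, this ratio drops as an edge blurs. A real implementation would use an FFT routine such as NumPy's `numpy.fft.fft2` and would compare the score against a preset value, as described above.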

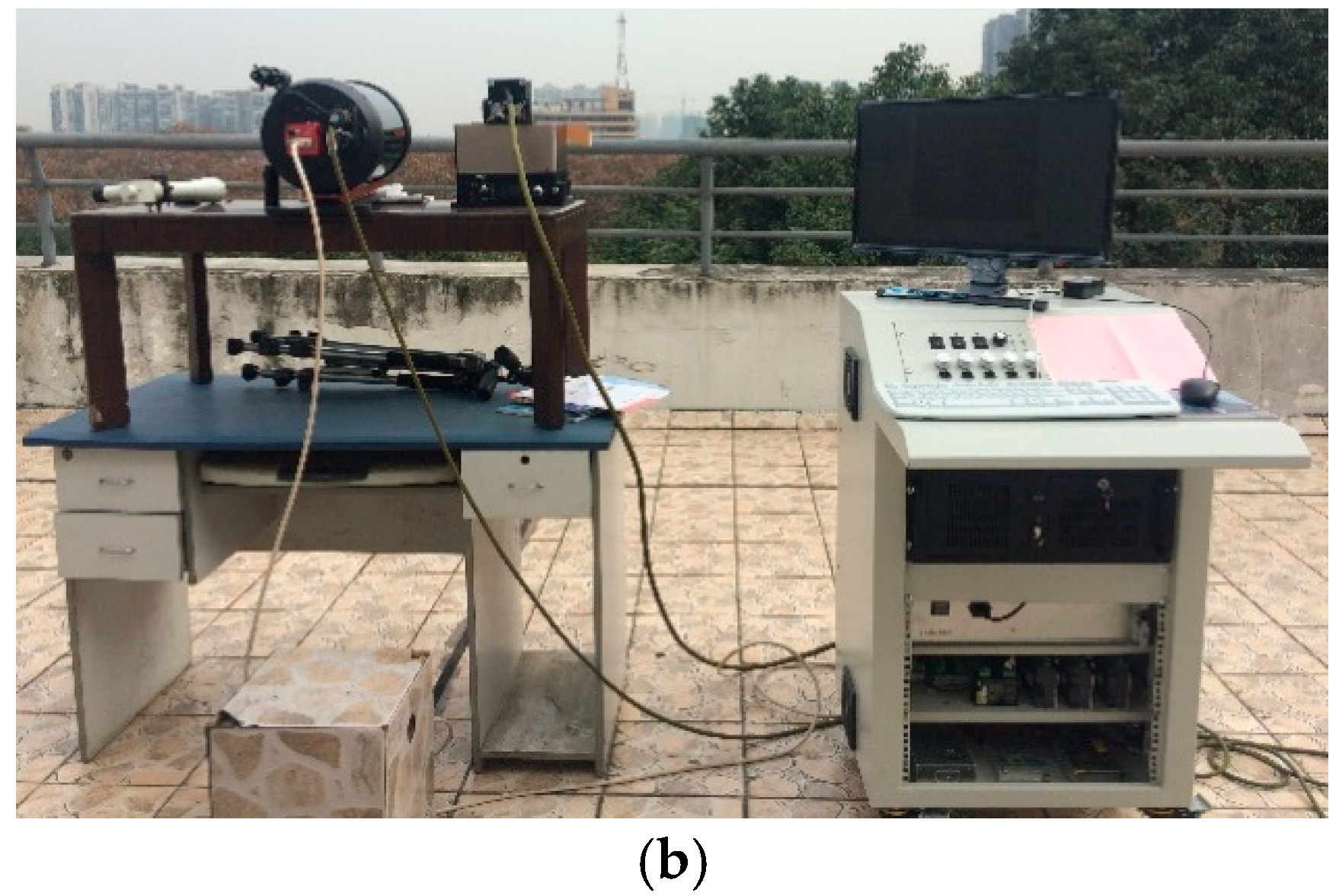

Applying the above auto-focus principle, the AFT of a Cassegrain telescope EOTS was investigated, as shown in Figure 1. The EOTS mainly consists of five parts [21]: a Cassegrain telescope, a CCD camera, a PC control module, a stepper motor, and a mechanical conversion module. In the experiment, a Cassegrain telescope with a folded mirror structure consisting of primary and secondary parabolic reflectors was used to collect information on the detected objects. To enhance the imaging quality, a Schmidt corrector was mounted in front of the telescope. A CCD camera [22] with a narrow-band filter captured the image and sent it to the PC control module. Based on the image estimation performed in the control module, a stepper motor adjusted the position of the primary mirror of the telescope.

As shown in Figure 1, the auto-focus process proceeds as follows [23,24]. First, the CCD camera captures information on the tracked objects through the telescope and sends the image to the PC control program. Next, the PC control program estimates the sharpness of the CCD image and commands the stepper motor to rotate clockwise or counterclockwise. Then, the mechanical conversion module converts the motor rotation into a motion that moves the primary mirror of the telescope forward or backward, thereby changing the imaging position. These steps are repeated until the CCD camera lies on the image plane of the telescope and a clear image is obtained, which makes the auto-focus process a closed-loop system.

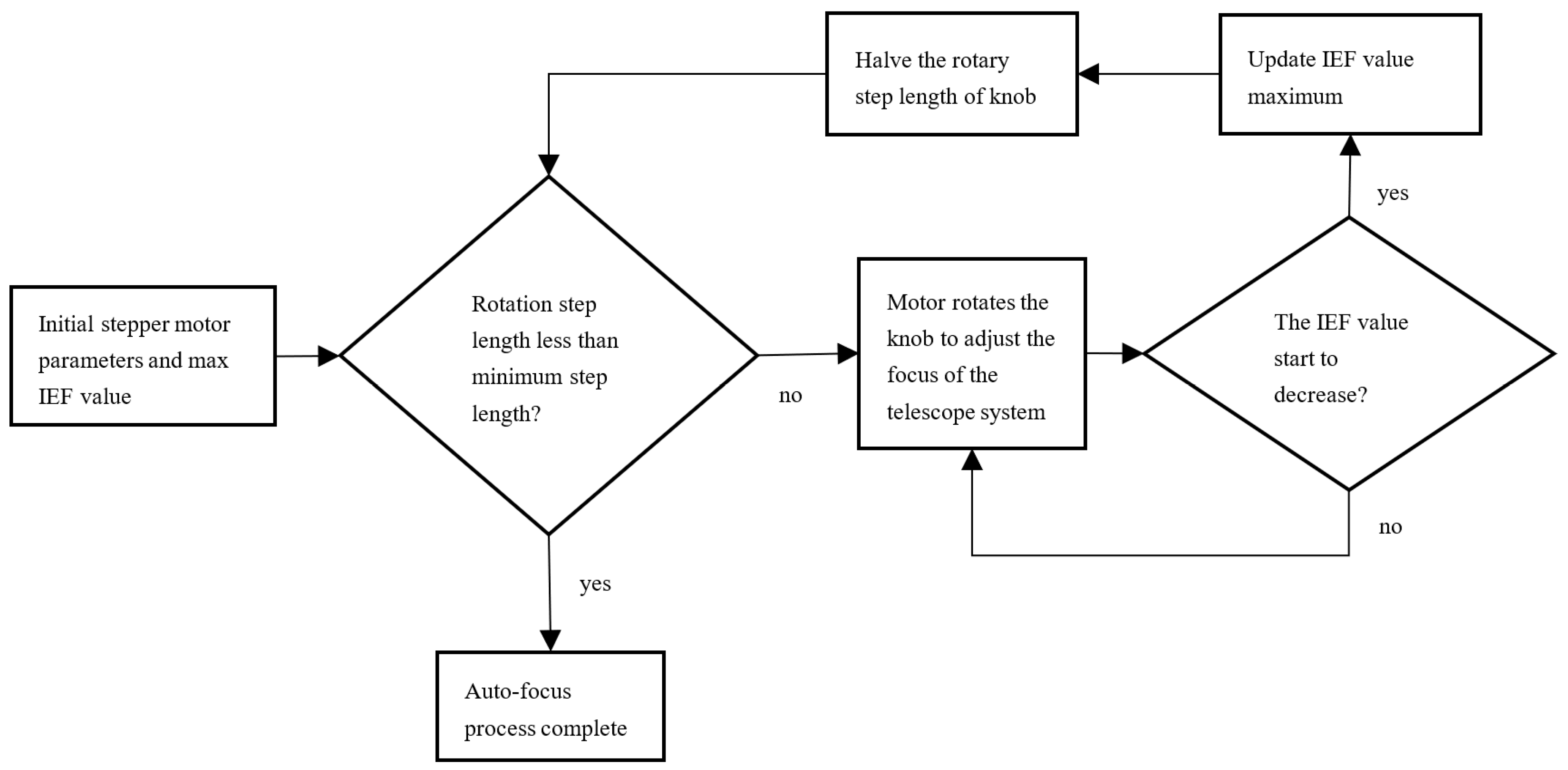

The focus search algorithm determines the concrete focusing procedure of the system. In the experiment, the hill-climbing search method was used to achieve a better focusing effect. The specific steps are shown in Figure 2. First, the starting position of the motor knob is initialized, a large initial rotation step length is set, and the initial IEF value is recorded as the maximum value. The stepper motor then rotates the knob by the current step length to adjust the focus of the system, and the IEF value is calculated after each step. When the IEF value starts to decrease, the best focusing position has been passed, and the knob is rotated one step backward. The step length is then halved, and the search is repeated until it completes with the minimum step size (accuracy) of the stepper motor. At that point, the auto-focus process is finished.
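The search in Figure 2 can be sketched as follows. The sketch assumes integer motor positions and a hypothetical `ief(position)` callback that moves the motor, grabs a frame, and returns its IEF value. The text does not say in which direction the search resumes after the step is halved. This sketch assumes it probes the current direction first and then the opposite one.

```python
def hill_climb_focus(ief, start, initial_step, min_step=1):
    """Coarse-to-fine hill-climbing search for the knob position maximizing `ief`.

    `ief(position)` is a hypothetical callback: move the stepper motor to
    `position` (in motor steps), capture a frame, and return its IEF value.
    """
    pos, best = start, ief(start)       # the initial IEF value is the current maximum
    step, direction = initial_step, +1
    while step >= min_step:
        improved = False
        # Keep moving in the current direction. If that lowers the IEF (peak
        # passed), stay at the best position (one step back) and, as an
        # assumption, also try the opposite direction at the same step size.
        for d in (direction, -direction):
            candidate = pos + d * step
            value = ief(candidate)
            if value > best:
                pos, best, direction, improved = candidate, value, d, True
                break
        if not improved:
            step //= 2                  # neither neighbour is better: halve the step
    return pos, best
```

For example, `hill_climb_focus(lambda p: -(p - 45) ** 2, 0, 16)` climbs from position 0 with a step of 16. Probing both directions at each step size keeps the search from stopping one step away from a peak that lies behind the current position. For a strictly unimodal IEF curve, the search ends at the peak to within `min_step`.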

3. Comparison of Image Estimation Functions

Different scenes captured by the EOTS are shown in Figure 3. In the experiment, the CCD was first adjusted to find the correct focus position for each scene. The stepper motor was then rotated clockwise and counterclockwise by a fixed angle per step, which moved the primary mirror by a fixed distance per step. For each scene shown in Figure 3, 17 consecutive pictures were obtained with the CCD camera in front of, at, and behind the image plane.

In our auto-focus process, the sharpness of the image captured by the CCD camera must be estimated first. The most commonly used image intelligibility estimation functions (IEFs) currently operate mainly in the spatial and frequency domains. Spatial-domain IEFs include the Tenengrad function [25], the Brenner function [26], the variance function, and the Laplace function [27]. Frequency-domain IEFs mainly include the wavelet function and statistical gray-level entropy functions [28]. Compared to blurred images, clear images carry more detailed information in the spatial domain, and the gray-scale gradients between adjacent pixels are relatively large. A suitable IEF determines whether the image is in focus, which is a pivotal step in the auto-focus process [29]. To compare the focusing sensitivity accurately, the estimation function was normalized by the ratio of the peak deviation to the maximum value of the function and defined as

where $S$ is the normalized function value, and $E_{\max}$ and $E_{\min}$ are the maximum and minimum values, respectively, of the original estimation function.

(1) Tenengrad Function

The Tenengrad function takes the sum of the squares of the image gradients in the horizontal and vertical directions as the evaluation index [30]. It is implemented by convolving the image with the Sobel operator and is written as

$$E = \sum_{x}\sum_{y} G(x,y)^{2}$$

where $G(x,y)$ is the convolution of the pixel value at point $(x,y)$ with the Sobel operator. It is calculated as

$$G(x,y) = \sqrt{G_x^{2}(x,y) + G_y^{2}(x,y)}$$

where $G_x$ and $G_y$ are the horizontal and vertical gradients extracted by the Sobel operator, respectively.
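The following is a minimal pure-Python sketch of the Tenengrad IEF. It assumes a grayscale image stored as a list of rows (`img[row][col]`) and the standard 3 × 3 Sobel kernels. Skipping the one-pixel border is one of several possible conventions. No threshold on $G$ is applied because the text does not mention one. The function names are illustrative.

```python
# Standard Sobel kernels: SOBEL_X responds to left-right (column) changes,
# SOBEL_Y to top-bottom (row) changes.
SOBEL_X = [[-1, 0, 1], [-2, 0, 2], [-1, 0, 1]]
SOBEL_Y = [[-1, -2, -1], [0, 0, 0], [1, 2, 1]]


def apply_kernel(img, kernel, x, y):
    """Response of a 3x3 kernel centred on pixel (x, y) (correlation form)."""
    return sum(kernel[i][j] * img[x + i - 1][y + j - 1]
               for i in range(3) for j in range(3))


def tenengrad(img):
    """Sum of squared Sobel gradient magnitudes G(x, y)^2 over interior pixels."""
    M, N = len(img), len(img[0])
    total = 0
    for x in range(1, M - 1):          # skip the 1-pixel border, where the
        for y in range(1, N - 1):      # kernel would fall outside the image
            gx = apply_kernel(img, SOBEL_X, x, y)
            gy = apply_kernel(img, SOBEL_Y, x, y)
            total += gx * gx + gy * gy  # G^2 = Gx^2 + Gy^2
    return total
```

A sharp vertical edge, such as rows of `[0, 0, 0, 0, 255, 255, 255, 255]`, scores higher than the same edge smoothed to `[0, 0, 0, 85, 170, 255, 255, 255]`, and a flat image scores zero.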

Table 1 lists the Tenengrad function values at the focus position and at the three step positions before and after it for the eight scenes. The fourth row gives the values at the focus position.

(2) Brenner Function

The traditional Brenner function extracts gradient information by calculating the gray-level difference between two pixels in the horizontal direction. It is the simplest gradient-based image evaluation method and can be expressed as

$$E = \sum_{x}\sum_{y}\left[f(x+2,y) - f(x,y)\right]^{2}$$

where $f(x,y)$ is the gray value of pixel $(x,y)$.

The EOTS has a long focal length, so its field-of-view angle is very small. Even at a distance of several kilometers, the telescope observes only a small area, and only a small part of the object appears as the target. If this part of the object lacks obvious texture, a problem arises because the Brenner function contains only the transverse gradient and ignores the longitudinal gradient. Part of the edge information may then be lost, which degrades the focusing accuracy. Therefore, the longitudinal gray gradient is added to the Brenner function, and the calculation method is as follows [31]:

$$E = \sum_{x}\sum_{y}\left\{\left[f(x+2,y) - f(x,y)\right]^{2} + \left[f(x,y+2) - f(x,y)\right]^{2}\right\}$$

Table 2 lists the Brenner function values at the focus position and at the three step positions before and after it for the eight scenes. The fourth row gives the values at the focus position.
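The sketch below covers both Brenner variants and assumes `img[row][col]` storage. The horizontal (transverse) term compares columns two apart, and the vertical (longitudinal) term compares rows two apart. The two-pixel spacing follows the classical Brenner definition and is an assumption here. The function name is illustrative.

```python
def brenner(img, vertical=True):
    """Brenner sharpness: squared gray differences between pixels two apart.

    vertical=False gives the traditional one-direction form; vertical=True
    adds the longitudinal (row-direction) term of the improved form.
    """
    M, N = len(img), len(img[0])
    total = 0
    for x in range(M):
        for y in range(N):
            if y + 2 < N:                          # transverse (horizontal) term
                total += (img[x][y + 2] - img[x][y]) ** 2
            if vertical and x + 2 < M:             # longitudinal (vertical) term
                total += (img[x + 2][y] - img[x][y]) ** 2
    return total
```

Horizontal stripes have no transverse gradient, so they score zero with the traditional form (`vertical=False`) but not with the improved form. This is the situation the added longitudinal term is meant to handle.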

(3) Variance

A clear image has larger gray-level differences than a blurred image, i.e., a larger gray-level variance. The variance function is therefore commonly used to represent the gradient information of the image. It can be expressed as

$$E = \sum_{x}\sum_{y}\left[f(x,y) - u\right]^{2}$$

where $u$ is the average gray level of the image, given by

$$u = \frac{1}{MN}\sum_{x=1}^{M}\sum_{y=1}^{N} f(x,y)$$

where $M$ and $N$ are the image dimensions.
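Here is a minimal sketch of the variance IEF for an image stored as a list of rows. The text does not state whether the sum is divided by $MN$. Dividing would only rescale the curve and would not change which image is sharpest.

```python
def gray_variance(img):
    """Variance IEF: sum of squared deviations from the mean gray level u."""
    M, N = len(img), len(img[0])
    u = sum(sum(row) for row in img) / (M * N)   # mean gray level u
    return sum((p - u) ** 2 for row in img for p in row)
```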

Table 3 lists the variance function values at the focus position and at the three step positions before and after it for the eight scenes. The fourth row gives the values at the focus position.

(4) Laplace Function

The Laplace function is another commonly used method for evaluating the gray-level differences in an image. It can use either a 4-neighborhood or an 8-neighborhood Laplace template. To extract as much gradient information from the image as possible, the 8-neighborhood Laplace template was used in the experiment:

$$\begin{bmatrix} 1 & 1 & 1 \\ 1 & -8 & 1 \\ 1 & 1 & 1 \end{bmatrix}$$

Table 4 lists the Laplace function values at the focus position and at the three step positions before and after it for the eight scenes. The fourth row gives the values at the focus position.
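The following sketch applies the 8-neighborhood template. It assumes the IEF is the sum of squared template responses over interior pixels. The text does not state how the responses are aggregated, so both the squaring and the border handling are assumptions. The function name is illustrative.

```python
# 8-neighbourhood Laplace template; its weights sum to zero.
LAPLACE_8 = [[1, 1, 1], [1, -8, 1], [1, 1, 1]]


def laplace_ief(img):
    """Sum of squared 8-neighbourhood Laplacian responses over interior pixels."""
    M, N = len(img), len(img[0])
    total = 0
    for x in range(1, M - 1):
        for y in range(1, N - 1):
            r = sum(LAPLACE_8[i][j] * img[x + i - 1][y + j - 1]
                    for i in range(3) for j in range(3))
            total += r * r            # assumed aggregation: squared response
    return total
```

The template weights sum to zero, so a flat image scores zero. Isolated bright details produce large responses.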

(5) Entropy

The entropy function is based on the premise that the clearer the image, the more information it contains, i.e., the greater its entropy. The entropy function can therefore also reflect the clarity of the image. It is calculated by

$$E = -\sum_{i} p_i \log_{2} p_i$$

where $p_i$ is the probability of gray level $i$ in the image.

Table 5 lists the entropy function values at the focus position and at the three step positions before and after it for the eight scenes. The fourth row gives the values at the focus position.
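Here is a minimal sketch of the gray-level entropy for an image stored as a list of rows. It assumes base-2 logarithms; the base only rescales the value.

```python
import math
from collections import Counter


def gray_entropy(img):
    """Shannon entropy (bits) of the gray-level histogram: -sum p_i log2 p_i."""
    counts = Counter(p for row in img for p in row)   # histogram of gray levels
    total = sum(counts.values())
    return -sum((c / total) * math.log2(c / total) for c in counts.values())
```

The value depends only on the histogram, so rearranging the pixels of an image leaves its entropy unchanged.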

For all scenes, the sharpness curves of the captured image sequences were calculated with the aforementioned IEFs and are shown in Figure 4. To analyze the performance of each IEF, a whole-area focusing window was adopted, i.e., the whole picture was used in the image estimation. In each subfigure of Figure 4, the abscissa is the picture sequence number (the ninth picture is at the correct focus) and the ordinate is the normalized estimation function value.

In Figure 4a–h, the entropy function has multiple peaks, indicating instability. In Figure 4h, the Brenner and Laplace functions also have multiple extrema, which makes them unsuitable. In terms of unimodality across the eight scenarios, the Tenengrad and variance IEFs are relatively stable. In addition, the normalized Tenengrad IEF value drops faster on both sides of the peak, indicating that the Tenengrad IEF is more sensitive than the variance IEF. To quantify the focusing effect of the different IEFs, an efficiency factor $E_f$ was defined as

where $E_{\max}$ and $E_{\min}$ represent the maximum and minimum values of the IEF, respectively. The higher the value of $E_f$, the better the focusing effect.

Table 6 lists the $E_f$ values of the different IEFs in the eight scenarios.

Based on the above analysis, the Tenengrad IEF reflects the focusing process well in a variety of scenarios. It can therefore serve as an effective and stable estimation algorithm for auto-focusing in a telescope system. It was also found that all IEFs depend on the properties of the scene itself: the sparser the scene (e.g., Figure 3h), the smoother the change in the IEF value.

4. An Improved Focusing Window Selection Method

In Section 3, we applied different IEFs to a whole-picture focusing window, which contains a large amount of information. For telescope systems, calculating the IEF values over such a window during digital image processing takes a long time. This results in the loss of the high-frequency part of distinct edges and in poor real-time performance of the system [32]. Further, the image of Scene h in Figure 3 is sparse, so all IEF curves are flat, which reduces the accuracy of auto-focusing. To speed up auto-focusing and increase its accuracy, a dynamic adaptive focusing window selection (DAFWS) method is presented.

In DAFWS, the number of pixels involved in sharpness estimation is greatly reduced, so the calculation time can be significantly shortened [33]. Meanwhile, applying a focusing window also improves the quality, accuracy, and sensitivity of image estimation [34]. Traditional focusing window sampling methods [35,36], such as the center window, multi-point window, and inverted-T window, only sample the pixels inside the selected window uniformly, and information outside the window is completely lost. In addition, these traditional approaches require the position of the target in the image to be determined before the auto-focus process starts, which adds calculation time [37]. Another drawback of these conventional methods is that the sharpness scores depend strongly on whether the target lies in the selected focus window. If the actual imaging target is outside the window or off its center, the IEF value deviates considerably from the actual focus state.
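The information loss of a fixed window can be illustrated with a short sketch. It compares a traditional center window with uniform sampling of the whole frame, which is the alternative adopted by the proposed method. Reading "sampled evenly" as keeping every `stride`-th row and column is our assumption. Both helpers, the stride of 2, and the window fraction of 0.5 are hypothetical choices for illustration.

```python
def center_window(img, frac=0.5):
    """Traditional centre window: crop the central frac x frac region."""
    M, N = len(img), len(img[0])
    h, w = max(1, int(M * frac)), max(1, int(N * frac))
    top, left = (M - h) // 2, (N - w) // 2
    return [row[left:left + w] for row in img[top:top + h]]


def even_sample(img, stride=2):
    """Assumed even sampling: keep every `stride`-th row and column of the whole frame."""
    return [row[::stride] for row in img[::stride]]
```

When a small bright target sits in a corner of an 8 × 8 frame, the center window contains none of it. The evenly sampled 4 × 4 image still does, and no target localization is needed beforehand.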

To overcome these shortcomings, an efficient and accurate method is proposed. First, instead of sampling inside a certain window, the whole image is sampled evenly. This retains the overall gray distribution of the image, and the target need not be located in advance. Second, rather than setting the focus window before the auto-focus process starts, an adaptive method provides a dynamic focusing window. The pixels are divided into two categories: ROI (region of interest) pixels and background pixels. Experiments showed that the IEF values of the ROI pixels change much more than those of the background pixels during focusing. Therefore, the ROI can be separated from the background and extracted as the focus window. For example, the texture and boundaries of the railing in Figure 3h strongly affect the estimation function and constitute the ROI. The sky belongs to the background and is irrelevant to the focusing process. The specific procedure of this even sampling–DAFW (ES-DAFW) method is as follows.

An $M \times N$ image is divided into sub-blocks of $m \times n$ pixels. The IEF value of each sub-block is then given by

$$E = \sum_{x=1}^{m}\sum_{y=1}^{n} G(x,y)^{2}$$

where $(x,y)$ is the pixel coordinate within the sub-block and $E$ represents the Tenengrad IEF value of the sub-block. After each rotation of the stepper motor, the IEF value of each sub-block is recalculated as $E'$. The IEF value of each sub-block is then normalized as

With the threshold set to $u$, the sub-blocks whose normalized IEF value $\Delta$ exceeds $u$ (i.e., $\Delta > u$) are ROI sub-blocks, while the remaining ones are considered background blocks. For example, Figure 5 shows a severely defocused, blurred picture of the scene in Figure 3h divided into 8 × 8 blocks. The Tenengrad IEF was used to calculate the normalized value of every sub-block of the scene in Figure 3h during the auto-focus process. The five ROI sub-blocks with the largest change in $\Delta$ were chosen, as shown by the black boxes in Figure 5.
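The sub-block procedure can be sketched as follows. The normalization formula for the sub-block change is not reproduced in the text, so this sketch assumes $\Delta = |E' - E| / E$, with $E$ taken from the frame before a motor step and $E'$ from the frame after it. The function names, applying Sobel only to interior pixels of each block, and the optional `k` are illustrative assumptions. The parameter `k` keeps only the `k` blocks with the largest $\Delta$, e.g., five.

```python
SOBEL_X = [[-1, 0, 1], [-2, 0, 2], [-1, 0, 1]]
SOBEL_Y = [[-1, -2, -1], [0, 0, 0], [1, 2, 1]]


def tenengrad_block(img, top, left, m, n):
    """Tenengrad value E of one m x n sub-block (Sobel on its interior pixels only)."""
    total = 0
    for x in range(top + 1, top + m - 1):
        for y in range(left + 1, left + n - 1):
            gx = sum(SOBEL_X[i][j] * img[x + i - 1][y + j - 1] for i in range(3) for j in range(3))
            gy = sum(SOBEL_Y[i][j] * img[x + i - 1][y + j - 1] for i in range(3) for j in range(3))
            total += gx * gx + gy * gy
    return total


def select_roi_blocks(before, after, m, n, threshold, k=None):
    """Return (block_row, block_col) indices whose normalized IEF change exceeds threshold.

    Assumed normalization (not given in the text): delta = |E' - E| / E.
    Results are sorted by decreasing delta; if k is given, only the top k are kept.
    """
    M, N = len(before), len(before[0])
    deltas = {}
    for bi in range(M // m):
        for bj in range(N // n):
            e = tenengrad_block(before, bi * m, bj * n, m, n)
            e_new = tenengrad_block(after, bi * m, bj * n, m, n)
            deltas[(bi, bj)] = abs(e_new - e) / max(e, 1e-12)  # guard flat blocks
    roi = sorted((b for b, d in deltas.items() if d > threshold),
                 key=lambda b: deltas[b], reverse=True)
    return roi[:k] if k is not None else roi
```

A textured but unchanging background block, such as a fixed checkerboard, yields $\Delta = 0$ and is excluded. A block whose edges sharpen between frames is selected.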

To verify the effectiveness of the improved dynamic focusing window, we calculated the normalized Tenengrad IEF values for each scene in Figure 3 with three focus window methods: the whole-image focus window, the traditional center window, and the dynamic focus window. Figure 6a–h compares the results of the three methods.

Table 7 shows the average slope of the IEF values around the peak (the in-focus position) for the different window selection methods in the eight scenes. A larger average slope means a more pronounced change in the IEF value, which helps improve the accuracy of auto-focusing. Figure 6 and Table 7 show that the curves of the whole-image focus window agree very well with those of the traditional center window in all scenarios. The ES-DAFW method, in contrast, produces sharper peaks than the other two methods. As Figure 6h and Table 7 show for Scene h, the improvement from ES-DAFW becomes significant for relatively sparse images such as Figure 3h. In Figure 6h, the ES-DAFW curves are much sharper than those calculated with the whole-image focus window and traditional center window methods. This indicates a substantial increase in IEF sensitivity on both sides of the peak and, thereby, faster auto-focusing. We believe that in a telescope system imaging sparse scenes, ES-DAFW would achieve a more accurate and much faster auto-focus process.

To compare the efficiency of the different window selection methods in the auto-focusing process of the telescope EOTS, we ran the auto-focus process of the system ten times on the eight scenes in Figure 3 with each method. The methods were the whole-image focus window (WIF), the traditional center focus window (TCFW), and ES-DAFW. The average time required to complete the auto-focus process was recorded, as shown in Table 8. Table 8 shows that the traditional methods take longer to complete the auto-focus process for sparse scenes. ES-DAFW completes it in less time in all scenes, including sparse ones, because it only uses the image blocks whose IEF values change greatly. This significantly improves the auto-focus efficiency of the telescope EOTS.