1.1. Context

In Colombia, coffee is the most exported agricultural product, followed by cut flowers, bananas, cocoa, and sugarcane [12]. More than 903,000 hectares in the country are devoted to coffee, and approximately 563,000 families depend directly on this economic activity. Colombian coffee is considered one of the best mild coffees in the world and has traditionally been of great importance for Colombian exports. Currently, about 14,000,000 bags of 60 kg are exported every year to the USA, Japan, and Germany, among other countries [13].

In terms of employment generation and income distribution, coffee growing is highly relevant to local economies and to maintaining the social fabric in many regions of the country. This justifies developing solutions that strengthen the profitability of coffee-growing families and improve their quality of life, whether by raising the selling price of the product, reducing production costs, or increasing the yield per unit of cultivated area.

Nutritional deficiencies and phytosanitary problems stand out among the main threats to the profitability of coffee-growing families. Phytosanitary problems are caused by pests such as the coffee berry borer and by diseases such as CLR, whose proliferation increases with the drastic climate changes (from long droughts to extended rainy seasons) that occur in Colombia. In the case of CLR, when climatic conditions are unfavorable for the crop and agronomic management is deficient, at least 20% of the total expected harvest cannot be collected. In addition, coffee quality deteriorates dramatically, which lowers its market price and increases control costs [10]. In extreme cases, CLR has caused devastating losses of between 70% and 80% of the total harvest.

Although CLR spreads rapidly and severely harms the coffee farmers' economy, it is still detected and diagnosed by visual inspection while walking through the crops. This method recognizes plant diseases through visual inspection, development scales, and standard severity diagrams [14]. The people in charge of the crops walk through them, observing and touching the plants to identify the symptoms associated with a particular disease and to estimate infection levels [15].

Unfortunately, because this visual inspection is not performed regularly enough, the disease is usually detected at a late stage of development, when its control becomes more difficult and considerable economic losses are inevitable.

1.2. State-of-the-Art

Plenty of research has been done on applying technological methods and strategies to diagnose diseases [16], detect pests [17], and obtain nutritional information [18], among other objectives, for different types of crops. The phytosanitary status of plantations is closely related to crucial factors in their ecosystem, such as weather, altitude, and soil type. Therefore, several biological and engineering studies aim to implement practical solutions based on these factors to improve farming techniques and keep crops healthy.

The most commonly used methods for efficiently monitoring phytosanitary status, including technology-based ones, are: (i) Remote Sensing (RS), (ii) visual detection, (iii) biological intervention, (iv) Wireless Sensor Networks (WSN), and (v) Machine Learning (ML) supported by a data source. This section presents recent relevant studies based on these methods for detecting anomalies in plantations.

(i) Remote Sensing (RS)

RS is based on the interaction of electromagnetic radiation with matter. In agriculture, it involves the non-contact measurement of radiation reflected from soil or plants to assess attributes such as the Leaf Area Index (LAI), chlorophyll content, water stress, weed density, and crop nutrients, among others. These measurements can be made using satellites, aircraft, drones, tractors, and handheld sensors [19]. Besides reflectance, two other RS techniques analyze the fluorescence and the thermal energy emitted by leaves. However, reflectance is the most common technique, because the amount of radiation reflected by plants is inversely related to the radiation absorbed by their pigments, which makes it an indicator of their health status [19]. RS enables the indirect detection of problems in agricultural fields, since it captures unusual behaviors in crop reflectance that can be caused by factors such as nutritional deficiencies, pests and diseases, and water stress. In 2017, Calvario et al. [20] monitored agave crops using Unmanned Aerial Vehicles (UAVs), integrating RS with unsupervised machine learning (k-means) to distinguish agave plants from weeds. In 2003, Goel et al. [21] studied changes in the spectral response of corn (Zea mays) caused by nitrogen application rates and weed control. For that purpose, the researchers employed a hyperspectral sensor, the Compact Airborne Spectrographic Imager (CASI), and analyzed the reflectance values of 72 bands with wavelengths between 409 and 947 nm, which span part of the visible and Near-Infrared (NIR) regions of the electromagnetic spectrum. The results demonstrated the potential of the CASI sensor for detecting weed infestations and nitrogen stress. Specifically, the best-fitting bands for detecting these two factors were the wavelength regions near 498 nm and 671 nm, respectively, as seen in Figure 2.
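The k-means step mentioned for Calvario et al. [20] can be illustrated with a minimal sketch of Lloyd's algorithm in plain Python. The per-pixel feature vectors (for example, reflectance in two bands) and the function names are hypothetical. This is not the authors' pipeline. It only shows how unlabeled pixels can be grouped into k clusters that are later interpreted as agave or weed.

```python
import random
from typing import List, Sequence, Tuple

Point = Tuple[float, ...]  # hypothetical per-pixel feature vector, e.g. reflectance in two bands


def squared_distance(a: Sequence[float], b: Sequence[float]) -> float:
    return sum((x - y) ** 2 for x, y in zip(a, b))


def kmeans(points: List[Point], k: int, iterations: int = 100,
           seed: int = 0) -> Tuple[List[Point], List[int]]:
    """Plain Lloyd's k-means: returns (centroids, cluster label of each point)."""
    if k < 1:
        raise ValueError("k must be at least 1")
    rng = random.Random(seed)
    centroids: List[Point] = rng.sample(points, k)  # initialise from k input points
    labels = [0] * len(points)
    for _ in range(iterations):
        # Assignment step: each point joins its nearest centroid.
        new_labels = [min(range(k), key=lambda c: squared_distance(p, centroids[c]))
                      for p in points]
        # Update step: move each centroid to the mean of its members.
        new_centroids: List[Point] = []
        for c in range(k):
            members = [p for p, lab in zip(points, new_labels) if lab == c]
            if members:
                new_centroids.append(tuple(sum(dim) / len(members) for dim in zip(*members)))
            else:
                new_centroids.append(centroids[c])  # keep an empty cluster where it was
        converged = new_labels == labels and new_centroids == centroids
        labels, centroids = new_labels, new_centroids
        if converged:
            break
    return centroids, labels
```

k-means assigns no class names itself. With k = 2, someone would still have to decide which cluster is agave and which is weed, for example by inspecting a few pixels of each.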

Satellite multispectral images have been shown to allow the location of crops to be detected [22], but their resolution does not allow early detection of the phytosanitary status of individual lots of plants. Water and soil type are two components that play an essential role in plant health. In 2017, Bolaños et al. [23] proposed a characterization method that identifies these components using the visible and infrared spectrum. The method relied on low-cost cameras with two different filters, Roscolux #19 and Roscolux #2007, mounted on a multi-rotor aerial vehicle. With this method and these portable devices, hard-to-reach places were monitored and analyzed to detect anomalies that may cause diseases in the crop. This monitoring phase produced a characterization based on the Normalized Difference Vegetation Index (NDVI), as in the example of Figure 3, which was used to categorize essential characteristics of the crop, such as healthy crop, diseased plants or soil, and water or others.
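NDVI is computed per pixel from the near-infrared (NIR) and red reflectances as NDVI = (NIR − Red) / (NIR + Red). The sketch below shows that calculation and a threshold-based grouping into the three categories mentioned for Figure 3. The threshold values (0.0 and 0.4) are hypothetical placeholders, not the ones used by Bolaños et al. [23], and would need calibration for a given camera and filter setup.

```python
from typing import List

# HYPOTHETICAL thresholds, chosen only for illustration.
WATER_MAX = 0.0
STRESSED_MAX = 0.4


def ndvi(nir: float, red: float) -> float:
    """Normalized Difference Vegetation Index for one pixel."""
    total = nir + red
    if total == 0:
        return 0.0  # avoid division by zero on completely dark pixels
    return (nir - red) / total


def categorize(value: float) -> str:
    """Map an NDVI value to one of three coarse categories (hypothetical cut-offs)."""
    if value < WATER_MAX:
        return "water or others"
    if value < STRESSED_MAX:
        return "diseased plants or soil"
    return "healthy crop"


def classify_pixels(nir_band: List[float], red_band: List[float]) -> List[str]:
    """Classify co-registered NIR and red pixels, given as flat lists of reflectances."""
    if len(nir_band) != len(red_band):
        raise ValueError("bands must have the same number of pixels")
    return [categorize(ndvi(n, r)) for n, r in zip(nir_band, red_band)]
```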

In 2017, Chemura et al. proposed a method for the early prediction of diseases and pests in coffee trees based on water stress that is not yet noticeable. For that purpose, multispectral scanners with filters covering wavebands of the visible spectrum and the near-infrared region were mounted on a UAV [25]. The scanner results showed inflection points in the regions around 430 nm and 705–735 nm, associated with the water content of the coffee trees. These results underlined the importance of an irrigation plan suited to the trees' water requirements as a way to improve productivity. The latter region also showed relevant values, but the 430 nm waveband was the most relevant remote sensing band for predicting plant water content, which is directly related to water stress. However, the authors of [25] remarked that, although the results were promising, some components were still missing before the model could be considered suitable and testable under real conditions. To address this, they recommended using hyperspectral cameras, which provide more precise waveband measurements.
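An inflection point in a reflectance spectrum, such as those reported around 430 nm and 705–735 nm [25], is where the curvature of the spectrum changes sign. A minimal way to locate such points in sampled data is to look for sign changes in the discrete second difference, as sketched below. The sample spectrum is hypothetical, the wavelengths are assumed to be evenly spaced, and real spectra would normally be smoothed first to suppress noise. This illustrates the concept and is not the procedure of Chemura et al.

```python
from typing import List, Sequence


def inflection_wavelengths(wavelengths: Sequence[float],
                           reflectance: Sequence[float]) -> List[float]:
    """Approximate wavelengths where the discrete second derivative changes sign.

    Assumes evenly spaced samples; each detected inflection is reported as the
    midpoint between the two samples whose curvature signs differ.
    """
    if len(wavelengths) != len(reflectance):
        raise ValueError("wavelengths and reflectance must have the same length")
    # second[j] is the second difference centred on wavelengths[j + 1].
    second = [reflectance[i - 1] - 2 * reflectance[i] + reflectance[i + 1]
              for i in range(1, len(reflectance) - 1)]
    result = []
    for j in range(len(second) - 1):
        if second[j] * second[j + 1] < 0:  # strict sign change in curvature
            result.append((wavelengths[j + 1] + wavelengths[j + 2]) / 2)
    return result


# Hypothetical red-edge-like spectrum: reflectance rises steeply, then levels off.
bands_nm = [680, 690, 700, 710, 720, 730, 740]
spectrum = [0.05, 0.06, 0.10, 0.19, 0.30, 0.34, 0.35]
print(inflection_wavelengths(bands_nm, spectrum))
```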

(ii) Visual Detection

The detection of visual symptoms uses changes in the plant's appearance (colors, shapes, lesions, spots) as an indicator that it is being attacked by a disease or pest [15]. In the survey by Hamuda et al. [26], image-based plant segmentation, which classifies the pixels of an image as plant or non-plant, was used for detecting diseases in plants [27]. For instance, to evaluate the CLR infection percentage in a specific lot, the number of diseased leaves on 60 randomly selected trees is divided by the total number of leaves on those trees and multiplied by 100, as expressed in Equation (1):

$$\text{Incidence (\%)} = \frac{\text{number of diseased leaves on the 60 sampled trees}}{\text{total number of leaves on the 60 sampled trees}} \times 100 \qquad (1)$$

A leaf is considered diseased with CLR when chlorotic spots or orange dust are observed on it. The severity of the disease can be divided into five categories depending on the number and diameter of the orange rust spots, as seen in Figure 4.

A visual inspection can be carried out to detect the presence of chlorotic spots on the leaves, which are then used to measure the incidence and severity of the disease [10].
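The incidence calculation described above (diseased leaves divided by total leaves on 60 randomly chosen trees, times 100) can be written as a short Python sketch. The `TreeCount` record and the function names are hypothetical. The code also assumes that leaves have already been counted on each tree during the field inspection.

```python
import random
from dataclasses import dataclass
from typing import Iterable, List, Optional, Sequence


@dataclass
class TreeCount:
    """Leaf counts recorded for one inspected coffee tree (hypothetical record type)."""
    diseased_leaves: int  # leaves with chlorotic spots or orange dust
    total_leaves: int


def sample_trees(lot: Sequence[TreeCount], n: int = 60,
                 seed: Optional[int] = None) -> List[TreeCount]:
    """Pick n distinct trees at random from a lot (ValueError if the lot has fewer than n)."""
    return random.Random(seed).sample(list(lot), n)


def clr_incidence(trees: Iterable[TreeCount]) -> float:
    """Percentage of diseased leaves over all leaves counted on the sampled trees."""
    diseased = total = 0
    for tree in trees:
        if not 0 <= tree.diseased_leaves <= tree.total_leaves:
            raise ValueError("diseased leaves must be between 0 and the total leaf count")
        diseased += tree.diseased_leaves
        total += tree.total_leaves
    if total == 0:
        raise ValueError("no leaves were counted")
    return diseased * 100 / total
```

For two trees with 1 of 4 and 0 of 4 leaves diseased, `clr_incidence` returns 12.5. Pooling the leaf counts before dividing, rather than averaging per-tree percentages, follows the description in the text.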

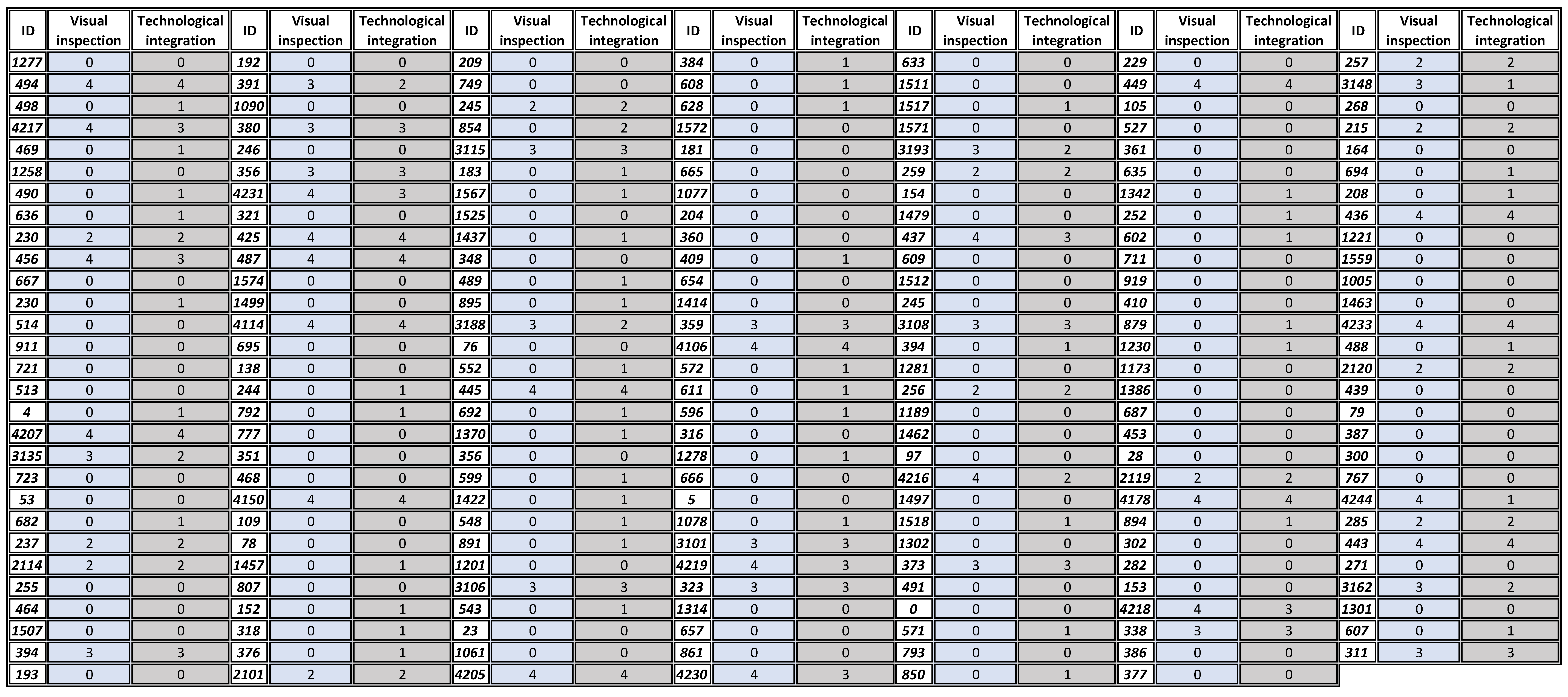

To understand the conditions conducive to the development of CLR and thus refine disease control, Avelino et al. [29] monitored its development on 73 coffee plots in Honduras for 1–3 years. By analyzing production-situation variables such as climate, soil components, the productive characteristics of the coffee trees, and crop management patterns, the researchers aimed to relate these factors to the presence of rust. The results indicated that CLR epidemics depend on the production situation (Table 1) as well as on the local conditions of the plantation. These results reflect the need for a certified, sustainability-oriented growing system that takes production situations into account and thereby prevents the development of pests and diseases.

(iii) Biological Intervention

Several authors have stressed the importance of the relationships between living beings that share the same environment. One of them was Haddad et al. [30], who in 2009 studied whether seven selected bacterial isolates, under greenhouse conditions, could efficiently detect and control CLR. They inoculated six Bacillus sp. isolates (B10, B25, B157, B175, B205, and B281) and one Pseudomonas sp. isolate (P286). According to preliminary results presented by Haddad et al. (2007), these isolates help to detect and control CLR in its early development stages. Two important coffee varieties, Mundo Novo and Catuai, were selected for the experiment because of their high susceptibility to CLR. For three years, diseased plants of both varieties were subjected to different bacterial treatments to analyze how the disease evolved under each one. Isolates P286 and B157 were as efficient as the copper fungicide in controlling the rust. Hence, considering the harmful effects of copper fungicides, biological control with the B157 isolate of Bacillus sp. may be a reliable alternative for CLR management. This research thus showed the opportunity to biocontrol CLR successfully, particularly for specialty coffee growers.

In 2012, Jackson et al. [31] also proposed biological detection and control based on a fungus, Lecanicillium lecanii. Their general interest was the coexistence of organisms that reach a balance in a specific environment with defined conditions. In the biological control system they studied, A. instabilis ants were mutualistically associated with the white halos of the fungus Lecanicillium lecanii, and this association was examined for its effect on CLR. The researchers hypothesized that spores of Lecanicillium lecanii help to attack Hemileia vastatrix, the fungus that causes CLR, before the rainy season. However, because of the time delay between the development of Lecanicillium lecanii and that of Hemileia vastatrix, a relationship between the two fungi and the ants could not be demonstrated, even though the control experiment resembled real-world conditions. The authors concluded that restrictions imposed by biotic factors directly affect the development of CLR; therefore, future work should consider the climate variation of the ecosystem to be able to predict that development [31].

(iv) Wireless Sensor Networks (WSN)

Wireless Sensor Networks (WSN) are a technology used in many countries to monitor different agricultural characteristics remotely and in real time. A WSN consists of multiple unattended embedded devices, called sensor nodes, that collect data in the field and transmit them wirelessly to a centralized processing station known as the Base Station (BS). The BS has data storage, processing, and fusion capabilities, and it forwards the received data to the Internet to present them to an end user [32]. Once the collected data are stored on a central server, further analysis, processing, and visualization techniques are applied to extract valuable information and hidden correlations that can help detect changes in crop characteristics. These changes can serve as indicators of phytosanitary problems such as nutritional deficiencies, pests, diseases, and water stress. WSN is a powerful technology because information from remote and inaccessible physical environments becomes easily accessible through the Internet, thanks to the cooperative and constant monitoring of multiple sensors [33]. The sensor nodes in a WSN can differ in function. Some serve as simple data collectors that monitor a single physical phenomenon, while more powerful nodes may also perform more complex processing and aggregation operations. Some nodes even include GPS modules that determine their location with high accuracy [33]. The sensors most commonly used in agricultural WSN collect climate data, images, and frequencies. In 2011, Chaudhary et al. [34] emphasized the importance of WSN in precision agriculture (PA) by monitoring and controlling critical parameters in a greenhouse through a microcontroller technology called Programmable System on a Chip (PSoC). Irregular rainfall dynamics make it necessary to control the water distribution inside the greenhouse so that these parameters are met. The study therefore tested the integration of wireless sensor node structures with high-bandwidth spectrum telecommunication technology. The integration proved useful for determining an ideal irrigation plan that met the specific needs of a crop, based on the interaction of the nodes within the greenhouse. Furthermore, the researchers recommended using reliable hardware with low current consumption in WSN projects. Such hardware gives farmers more confidence in incorporating the technology into their crops and extends battery life.
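The node-to-base-station flow described above can be sketched as a small data model. Nodes send timestamped readings, and the base station stores them and fuses them per node. The class names, the use of averaging as "fusion", and the soil-moisture range in the usage note are hypothetical choices made for illustration. Real WSN deployments also handle radio links, power management, and Internet forwarding, all of which are omitted here.

```python
from dataclasses import dataclass, field
from statistics import mean
from typing import Dict, List


@dataclass
class Reading:
    """One measurement sent by a sensor node (hypothetical message format)."""
    node_id: str
    variable: str      # e.g. "soil_moisture", "temperature"
    value: float
    timestamp: int     # seconds since an arbitrary epoch


@dataclass
class BaseStation:
    """Hypothetical base station that stores and fuses readings from sensor nodes."""
    readings: List[Reading] = field(default_factory=list)

    def receive(self, reading: Reading) -> None:
        self.readings.append(reading)

    def fuse(self, variable: str) -> Dict[str, float]:
        """Average each node's readings of one variable (a simple form of data fusion)."""
        per_node: Dict[str, List[float]] = {}
        for r in self.readings:
            if r.variable == variable:
                per_node.setdefault(r.node_id, []).append(r.value)
        return {node: mean(values) for node, values in per_node.items()}

    def nodes_out_of_range(self, variable: str, low: float, high: float) -> List[str]:
        """Nodes whose averaged reading falls outside [low, high], sorted by id."""
        return sorted(node for node, avg in self.fuse(variable).items()
                      if not low <= avg <= high)
```

For instance, a base station receiving soil-moisture readings could call `nodes_out_of_range("soil_moisture", 25.0, 40.0)` to list the greenhouse zones whose average falls outside that hypothetical range. That would be a simple starting point for the kind of irrigation decision studied by Chaudhary et al. [34].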

In addition, Piamonte et al. [35] proposed in 2017 a WSN prototype for monitoring bud rot in the African oil palm. Using pH, humidity, temperature, and luminosity sensors, they aimed to measure climate variations and edaphic (soil-related) factors to detect indirectly the presence of the fungus that causes the disease.

(v) Machine Learning

The field concerned with building intelligent machines that can perform specific tasks as a human would is called Artificial Intelligence (AI) [36]. One of the main subareas of AI is Machine Learning (ML), which aims to extract complex patterns automatically from large amounts of raw data in order to predict future behaviors. Pattern extraction can be taken to a more detailed level, where computers learn complicated real-world concepts by building them hierarchically out of simpler ones. At that level, ML enters one of its most relevant subsets: Deep Learning (DL) [37]. DL attempts to mimic the activity of layers of neurons in the human brain. Its central structure is the Artificial Neural Network (ANN), which is composed of multiple layers of neurons and the weighted connections between them. The neurons are excitable units that transform information, whereas the connections rescale the output of one layer of neurons and transmit it to the next layer as its input [38]. By feeding data such as images, videos, sound, and text through the ANN, DL builds hierarchical structures and levels of representation and abstraction that enable the identification of underlying patterns [36].

One application of pattern finding through DL is the estimation of plant characteristics with non-invasive methodologies based on digital images and machine learning. Sulistyo et al. [39] presented a computational intelligence vision-sensing approach that estimated nutrient content in wheat leaves. It analyzed color features of leaf images captured in the field under different lighting conditions to estimate the nitrogen content of the leaves. In another work, Sulistyo et al. [40] proposed a method to detect nitrogen content in wheat leaves using color constancy with neural network fusion and a genetic algorithm that normalized plant images for different sunlight intensities. Sulistyo et al. [41] also developed a method for extracting statistical features from wheat plant images to estimate nitrogen content in real environments with varying light intensities. This work provided a robust image segmentation method that uses a deep multilayer perceptron to remove complex backgrounds, and it used genetic algorithms to fine-tune the color normalization. The output of segmentation and color normalization was then fed into several standard multilayer perceptrons with different numbers of hidden-layer nodes, whose outputs were combined using simple and weighted averaging.

Fuentes et al. [42] presented a robust deep-learning-based detector to classify different types of tomato diseases and pests in real time. The detector used images from RGB cameras with multiple resolutions and from different devices, such as mobile phones and digital cameras. It determined whether the crop had a disease or pest and which type it was. Similarly, Picon et al. [43] developed an automatic deep residual neural network algorithm to detect multiple plant diseases in real time, using mobile-device cameras as the input source. The algorithm could detect three diseases on wheat crops: (i) Septoria (Septoria tritici), (ii) tan spot (Drechslera tritici-repentis), and (iii) rust (Puccinia striiformis and Puccinia recondita).

Research has also addressed CLR specifically. Chemura et al. [44] evaluated the potential of Sentinel-2 bands to detect CLR infection levels early, given the disease's devastating rates. Using the Random Forest (RF) and Partial Least Squares Discriminant Analysis (PLS-DA) algorithms, these levels could be identified for early CLR management. The researchers used the Yellow Catuai variety, chosen for its CLR susceptibility. They considered only seven Sentinel-2 Multispectral Instrument (MSI) bands, because of the high resolution that previous biological studies reported for them. Based on the selected bands, the results showed that the reflectance of CLR-affected leaves was higher in the NIR region of the spectrum, and bands B4 (665 nm), B5 (705 nm), and B6 (740 nm) were the most relevant. These bands achieved overall CLR discrimination of 28.5% and 71.4% using the RF and PLS-DA algorithms, respectively. Thus, the bands and vegetation indices derived from the Sentinel-2 MSI enabled detection of the disease and evaluation of CLR in its early stages, avoiding unnecessary chemical protection of healthy trees.
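The ANN structure described earlier in this subsection consists of neurons joined by weighted connections. Sulistyo et al. [41] combined several multilayer perceptrons through simple and weighted averaging. Both ideas can be sketched in plain Python. The weights here are random and untrained, and the input features (for instance, mean color values of a segmented leaf image) are hypothetical. The averaging weights are placeholders. The sketch shows only the forward pass and the ensemble combination, not training or the authors' actual configuration.

```python
import math
import random
from typing import List, Sequence


def sigmoid(z: float) -> float:
    # Numerically stable logistic function.
    if z >= 0:
        return 1.0 / (1.0 + math.exp(-z))
    e = math.exp(z)
    return e / (1.0 + e)


class MLP:
    """One-hidden-layer perceptron with random, untrained weights (illustration only)."""

    def __init__(self, n_inputs: int, n_hidden: int, seed: int) -> None:
        rng = random.Random(seed)
        self.w_hidden = [[rng.uniform(-1, 1) for _ in range(n_inputs)] for _ in range(n_hidden)]
        self.b_hidden = [rng.uniform(-1, 1) for _ in range(n_hidden)]
        self.w_out = [rng.uniform(-1, 1) for _ in range(n_hidden)]
        self.b_out = rng.uniform(-1, 1)

    def predict(self, x: Sequence[float]) -> float:
        # Weighted connections rescale the inputs; each hidden neuron applies a sigmoid.
        hidden = [sigmoid(sum(w * xi for w, xi in zip(ws, x)) + b)
                  for ws, b in zip(self.w_hidden, self.b_hidden)]
        # Linear output neuron, a common choice for a regression target such as nitrogen content.
        return sum(w * h for w, h in zip(self.w_out, hidden)) + self.b_out


def simple_average(predictions: Sequence[float]) -> float:
    return sum(predictions) / len(predictions)


def weighted_average(predictions: Sequence[float], weights: Sequence[float]) -> float:
    if len(predictions) != len(weights) or sum(weights) <= 0:
        raise ValueError("need one weight per prediction, with a positive total")
    return sum(p * w for p, w in zip(predictions, weights)) / sum(weights)


# Ensemble of MLPs with different hidden-layer sizes (sizes are hypothetical).
ensemble: List[MLP] = [MLP(n_inputs=3, n_hidden=h, seed=h) for h in (4, 8, 16)]
```

In practice, the weighted-average weights would typically reflect each network's validation performance. Here they are left to the caller.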

In 2017, Chemura et al. [45] studied the detection of CLR through leaf reflectance at specific electromagnetic wavelengths. Their objective was to assess the utility of the wavebands of the Sentinel-2 Multispectral Imager in detection models. The models were built with Partial Least Squares Regression (PLSR) and with the non-linear Radial Basis Function Partial Least Squares Regression (RBF-PLS) machine learning algorithm. When the two models were compared, PLSR predicted the state of the disease with low accuracy because of over-fitting, whereas RBF-PLS achieved high accuracy. Additionally, by weighting variable importance, Chemura et al. found that the blue, red, and RE1 bands were highly correlated with the model, but excluding the remaining four bands lowered the accuracy of both models. On the other hand, if more than one NIR or red-edge (RE) band was used, the RBF-PLS model over-fitted, resulting in a non-transferable model. Nevertheless, Chemura et al. recommended the RBF-PLS model because of its machine learning advantages and its good handling of possible over-fitting.
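The "RBF" in RBF-PLS refers to the radial basis function kernel, k(x, y) = exp(−γ‖x − y‖²). The kernel lets a linear method such as PLS capture non-linear relationships by working on kernel values between samples rather than on raw band reflectances. The sketch below computes that kernel and the centered Gram matrix that kernel PLS variants usually start from. The PLS decomposition itself is omitted, and the reflectance vectors and the value of γ are hypothetical.

```python
import math
from typing import List, Sequence

Matrix = List[List[float]]


def rbf_kernel(x: Sequence[float], y: Sequence[float], gamma: float) -> float:
    """Radial basis function kernel exp(-gamma * ||x - y||^2)."""
    sq = sum((a - b) ** 2 for a, b in zip(x, y))
    return math.exp(-gamma * sq)


def gram_matrix(samples: Sequence[Sequence[float]], gamma: float) -> Matrix:
    """Kernel values between every pair of samples (symmetric, ones on the diagonal)."""
    return [[rbf_kernel(a, b, gamma) for b in samples] for a in samples]


def center_gram(K: Matrix) -> Matrix:
    """Center the Gram matrix in feature space: K - row means - column means + grand mean."""
    n = len(K)
    row_means = [sum(row) / n for row in K]
    col_means = [sum(K[i][j] for i in range(n)) / n for j in range(n)]
    grand = sum(row_means) / n
    return [[K[i][j] - row_means[i] - col_means[j] + grand for j in range(n)]
            for i in range(n)]


# Hypothetical leaf reflectances in three bands (e.g. blue, red, RE1).
leaves = [[0.05, 0.08, 0.30], [0.06, 0.10, 0.35], [0.12, 0.15, 0.28]]
K_centered = center_gram(gram_matrix(leaves, gamma=10.0))
```

The value of γ controls how quickly similarity decays with spectral distance. Like the number of bands discussed above, it affects how prone the resulting model is to over-fitting.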