The overall algorithm was divided into two parts and three processing stages.

Figure 4 provides an overview of the processing workflow and shows the components of our proposed vision system. The system was divided into two parts: a distortion correction network and a crack detection network. In the distortion correction branch, the distortion caused by unwrapping the frontal annular image into a rectangular image was mapped using the nonlinear fitting ability of the network's convolution layers. In the crack detection branch, the distorted images were up-sampled. A simple crack detection network then traversed the image patch by patch, and each patch with a detected crack was placed back at its position in the original image to form the overall crack map. Combined with the information from the IMU and meter, a 3D crack map was formed. The images were captured at a distance of 3 to 5 cm, within the focal range of the front lens, and when we extracted the images, we used gamma correction to compensate for the differences in brightness caused by different shooting distances.
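The gamma correction step mentioned above can be illustrated with a minimal sketch. The gamma value, the 8-bit grayscale range, and the function name `gamma_correct` are assumptions made for illustration; the text does not state the value that was actually used.

```python
def gamma_correct(pixels, gamma):
    """Apply gamma correction to a 2D list of 8-bit grayscale values (0-255).

    gamma > 1 brightens mid-tones, gamma < 1 darkens them (assumed convention).
    """
    if gamma <= 0:
        raise ValueError("gamma must be positive")
    # Lookup table: out = 255 * (in / 255) ** (1 / gamma)
    table = [round(255.0 * (v / 255.0) ** (1.0 / gamma)) for v in range(256)]
    return [[table[v] for v in row] for row in pixels]


# Hypothetical frame captured farther from the wall, hence darker.
frame = [[0, 64, 128], [192, 255, 32]]
brightened = gamma_correct(frame, gamma=2.2)
```

In practice, the gamma value would presumably be chosen per shooting distance so that frames taken at 3 cm and 5 cm end up with comparable mean brightness.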

Considering that this system is not a nonlinear fitting of a single task, we created a special processing method for the training data. Real stickers were used in combination with geotechnical internal photographs, and data augmentation was used in the learning process. The original signal we obtained was a video signal, and the radial feed during shooting was controlled by a motor. Therefore, we only needed to adjust the feed speed and the corresponding length covered by each extracted frame to obtain relatively stable, non-repeating pictures of each area.
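The relationship between feed speed and frame extraction can be sketched as a simple calculation. The parameter names, the units, and the numbers in the usage line are hypothetical; the text gives neither the motor speed nor the video frame rate.

```python
import math


def frame_stride(feed_speed_mm_s, fps, covered_length_mm):
    """Number of video frames between two extracted frames so that the
    extracted frames cover adjacent, non-repeating sections of the wall."""
    if feed_speed_mm_s <= 0 or fps <= 0 or covered_length_mm <= 0:
        raise ValueError("all arguments must be positive")
    # The camera advances feed_speed / fps millimetres per video frame, so one
    # frame's coverage corresponds to covered_length * fps / feed_speed frames.
    return max(1, math.ceil(covered_length_mm * fps / feed_speed_mm_s))


# Hypothetical values: 5 mm/s feed, 25 fps video, 20 mm of wall per frame.
stride = frame_stride(5.0, 25.0, 20.0)
```

With a constant motor speed, a fixed stride is enough; a varying speed would require integrating the meter reading instead.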

3.1. Distortion Correction Network

We converted all image patches used for training to grayscale. The pictures taken directly by the camera were three-channel RGB (red, green, and blue) color images, whereas the grayscale images we needed retained only the brightness information of the image; the color information was therefore discarded. For the bridge crack images processed in this paper, the brightness information of the grayscale images could fully express the overall and local features of the image; furthermore, grayscale conversion greatly reduced the amount of computation in subsequent steps.
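As an illustration of the grayscale conversion, the sketch below collapses an RGB image to a single brightness channel. The ITU-R BT.601 luma weights (0.299, 0.587, 0.114) are an assumption; the text does not say which weighting was used.

```python
def rgb_to_gray(image):
    """Convert a 2D list of (r, g, b) tuples (0-255) to 8-bit grayscale.

    Uses the BT.601 luma weights, which is an assumed choice.
    """
    return [
        [round(0.299 * r + 0.587 * g + 0.114 * b) for (r, g, b) in row]
        for row in image
    ]


# A 1 x 3 test image: pure red, pure green, pure blue.
gray = rgb_to_gray([[(255, 0, 0), (0, 255, 0), (0, 0, 255)]])
```

The result has one value per pixel instead of three, which is where the reduction in downstream computation comes from.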

Since the distortion correction itself is a reconstruction process, we constructed a fully convolutional neural network for this task. Because we needed to maintain the details in the whole image while reconstructing the image, we used the method of cascading detail layers to fuse the low-dimensional detail features with the high-dimensional features [20]. As shown in the figure, after weighing the operation speed and reconstruction accuracy, we adopted the following network structure as the distortion correction terminal. The receptive field of the neural network should be large enough to cover the entire field of view (FOV), and the network should be deep enough.

The input image size of our system was 408 × 568. All the network structures in this system were determined through a tradeoff between accuracy and speed. The first layer was a convolution layer that filtered the input with 64 kernels, each of size 7 × 7 × 1. The output image, which was the same size as the input image, was obtained using the transposed convolution decoding region [21]. The structure of the network is shown in Figure 5. With skip connections, the network could be trained simply with backpropagation without vanishing gradients. Although models with a higher resolution may lead to higher simulation accuracy, they require a longer computation time.

Table 1 shows the detailed structure of the distortion correction network.

Based on the relationship between the pixels, we defined a set of loss functions. Here, we used two groups of loss functions to represent the learning effect of the distortion correction network, and these loss functions were as follows.

The reconstruction loss function was defined in terms of the following quantities: $i$ and $j$ represent the horizontal and vertical coordinates of the pixels in the time spectrum diagram $I$, respectively, and $V_x$ and $V_y$ are the estimated pixels in the horizontal and vertical directions. To reduce the influence of outliers, we used the Charbonnier penalty. The height and width of the images ($I_1$ and $I_2$) also enter the loss.
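One form of a Charbonnier-penalized reconstruction loss that is consistent with these definitions can be written as follows. This is a sketch rather than the exact expression used here: the symbols $h$, $w$, $\rho$, and $\varepsilon$, the $1/(hw)$ normalization, and the reading of $V_x$ and $V_y$ as per-pixel displacements are all assumptions.

$$
L_{\text{recon}} = \frac{1}{hw} \sum_{i=1}^{w} \sum_{j=1}^{h} \rho\Big( I_1(i, j) - I_2\big(i + V_x(i, j),\; j + V_y(i, j)\big) \Big), \qquad \rho(x) = \sqrt{x^2 + \varepsilon^2}
$$

Here $h$ and $w$ denote the image height and width. The penalty $\rho$ behaves like $|x|$ for large residuals, so a few badly matched pixels contribute linearly rather than quadratically to the loss.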

Because most of the distortion will cause a non-closed interval, we used a smoothness loss to handle the aperture problem, which causes ambiguity when estimating the details of the distortion in non-textured regions. It was calculated from the gradients of the estimate in each direction, with the remaining terms defined in the same way.
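A smoothness term of this kind can be sketched as a Charbonnier penalty on the spatial gradients of the two estimated maps. The forward-difference gradient, the zero gradient at the far border, the averaging over pixels, and the names `vx`, `vy`, and `eps` are assumptions for illustration.

```python
import math


def charbonnier(x, eps=1e-3):
    """Charbonnier penalty sqrt(x^2 + eps^2), a smooth approximation of |x|."""
    return math.sqrt(x * x + eps * eps)


def smoothness_loss(vx, vy, eps=1e-3):
    """Mean Charbonnier penalty on the gradients of two 2D displacement maps."""
    h, w = len(vx), len(vx[0])
    total = 0.0
    for field in (vx, vy):
        for j in range(h):
            for i in range(w):
                # Forward differences; the gradient is taken as zero at the far border.
                dx = field[j][i + 1] - field[j][i] if i + 1 < w else 0.0
                dy = field[j + 1][i] - field[j][i] if j + 1 < h else 0.0
                total += charbonnier(dx, eps) + charbonnier(dy, eps)
    return total / (h * w)


flat = [[1.0, 1.0, 1.0], [1.0, 1.0, 1.0]]
step = [[0.0, 0.0, 5.0], [0.0, 0.0, 5.0]]
loss_flat = smoothness_loss(flat, flat)
loss_step = smoothness_loss(step, flat)
```

A constant field gives the minimum value (four times `eps` per pixel), while any jump between neighboring pixels raises the loss, which is what pushes the estimate toward smooth solutions in textureless regions.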

By comparing the feature maps before and after the partition reconstruction, we can determine the degree of correction within the network.

Finally, we combined several loss functions to form an objective function, as shown in Equation (4):

To evaluate the distortion correction performance of the system, we used an image similarity coefficient as the reference index for the reconstruction quality.

SSIM represents the structural similarity between the target and the predicted image [22], as follows:

$$
\mathrm{SSIM}(x, y) = \frac{(2\mu_x \mu_y + c_1)(2\sigma_{xy} + c_2)}{(\mu_x^2 + \mu_y^2 + c_1)(\sigma_x^2 + \sigma_y^2 + c_2)}
$$

For computational efficiency, we split the whole image into blocks, compared them, and finally obtained the similarity coefficients, where $\mu_x$ and $\mu_y$ are the mean values of the input images, $\sigma_x^2$ and $\sigma_y^2$ are the variances of the images, and $\sigma_{xy}$ is the covariance of these inputs. $c_1$ and $c_2$ are constants used to stabilize the division by a small denominator, which were 0.0001 and 0.001, respectively.
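The SSIM coefficient can be computed directly from the means, variances, and covariance of two images. The sketch below evaluates it over one block using the standard SSIM definition and the two constants quoted above; the assumption that intensities are scaled to [0, 1], the population (1/n) variance, and the function name `ssim` are illustrative choices.

```python
def ssim(x, y, c1=1e-4, c2=1e-3):
    """Structural similarity of two equally sized 2D lists of intensities.

    Intensities are assumed to be scaled to [0, 1].
    """
    xs = [v for row in x for v in row]
    ys = [v for row in y for v in row]
    n = len(xs)
    if n == 0 or n != len(ys):
        raise ValueError("images must be non-empty and of equal size")
    mu_x = sum(xs) / n
    mu_y = sum(ys) / n
    var_x = sum((v - mu_x) ** 2 for v in xs) / n
    var_y = sum((v - mu_y) ** 2 for v in ys) / n
    cov_xy = sum((a - mu_x) * (b - mu_y) for a, b in zip(xs, ys)) / n
    numerator = (2 * mu_x * mu_y + c1) * (2 * cov_xy + c2)
    denominator = (mu_x ** 2 + mu_y ** 2 + c1) * (var_x + var_y + c2)
    return numerator / denominator


target = [[0.1, 0.5], [0.9, 0.3]]
inverted = [[1.0 - v for v in row] for row in target]
same_score = ssim(target, target)
inverted_score = ssim(target, inverted)
```

Applying the function to each block of a split image and averaging the results gives a single coefficient for the whole picture.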

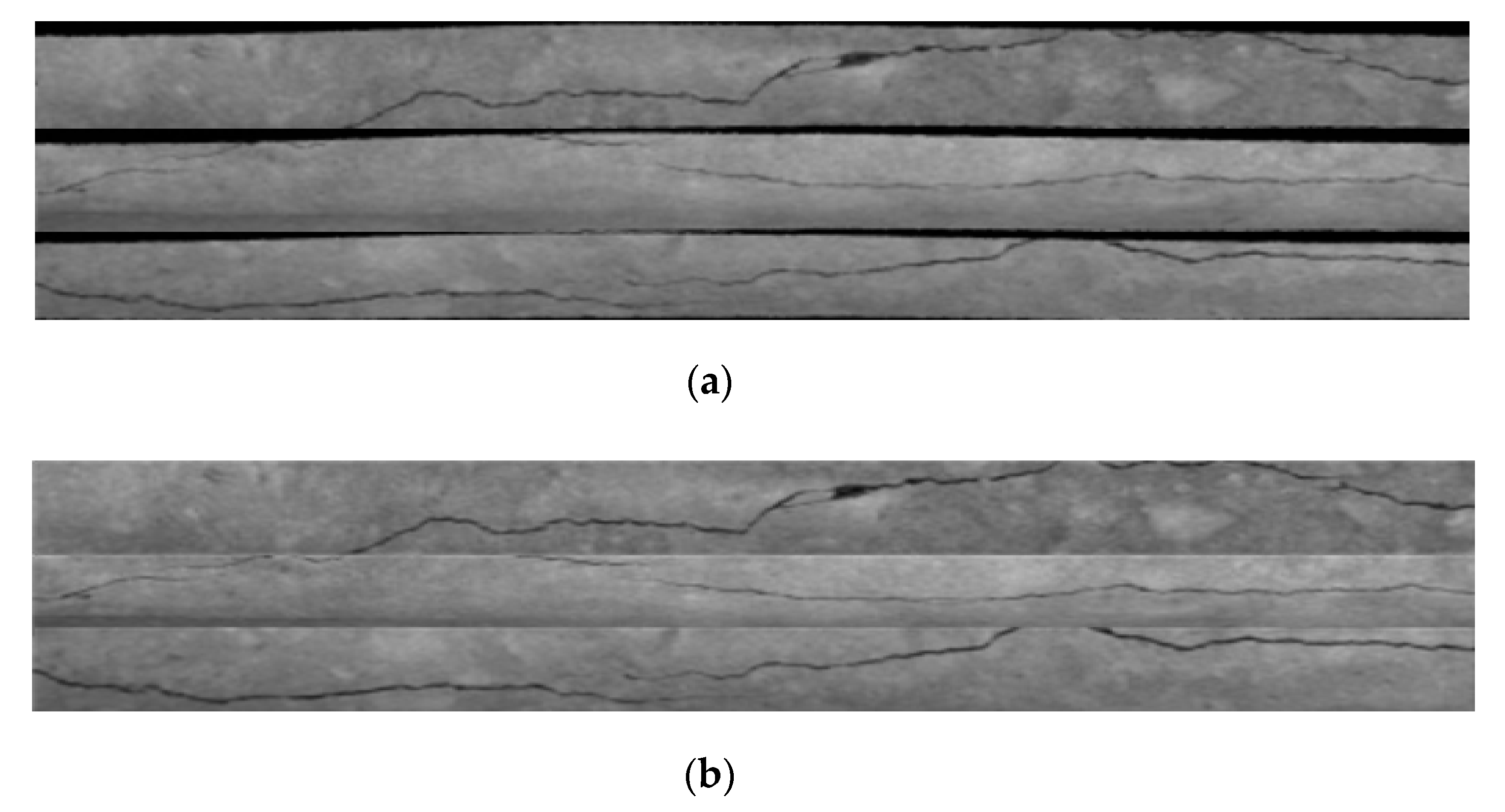

Figure 6 shows the schematic diagram before and after the distortion correction.

After reconstruction, crack detection and patch traversal had to be carried out on the image, so the distorted image first had to be up-sampled. We processed the image following Rong et al. [23]. The schematic diagram of the up-sampling network is shown in Figure 7. The detailed structure of the distortion correction network is shown in Table 2. Because down-sampling differs from up-sampling, the lost information was abstract and high-dimensional. As a result, we applied bicubic down-sampling to the collected original large images to make labels. Using the learned up-sampling filters effectively suppressed the reconstruction artifacts caused by bicubic interpolation. The crack characteristics at different scales could be learned by comparing the label values after each reduction in sampling rate. Since the L2 loss function smoothed the image and lost many detail features when learning the mapping of super pixels, we used the Charbonnier function to learn the mapping.

In this loss, $\theta$ represents the nonlinear function relationship between the input frame and the up-sampled frame, $N$ is the number of training samples in each batch, and $L$ is the number of levels in our pyramid ($L = 3$ in our paper). We empirically set the constant $\varepsilon$ of the Charbonnier penalty function to $1 \times 10^{-3}$.
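For reference, a pyramid Charbonnier loss of the kind used by Lai et al. can be written as below. The symbols $\hat{y}_s^{(i)}$ (network output for sample $i$ at level $s$) and $y_s^{(i)}$ (bicubically down-sampled label at the same level) are assumed names; only $\theta$, $N$, $L$, and $\varepsilon$ are taken from the text.

$$
\mathcal{L}(\hat{y}, y; \theta) = \frac{1}{N} \sum_{i=1}^{N} \sum_{s=1}^{L} \rho\left( \hat{y}_s^{(i)} - y_s^{(i)} \right), \qquad \rho(x) = \sqrt{x^2 + \varepsilon^2}
$$

With $L = 3$ levels and a factor of 2 per level, the total up-sampling factor is 8, which matches the ratio between the 408 × 568 network input and the 3264 × 4544 collected images.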

We used the method of Lai et al. [24] to up-sample the distorted image and used the loss function described above. The output of each transposed convolution layer was connected to two different layers:

- (1) Convolution layer 1, which was used to reconstruct residual images at this level;

- (2) Convolution layer 2, which was used to extract features at the finer s+1 level.

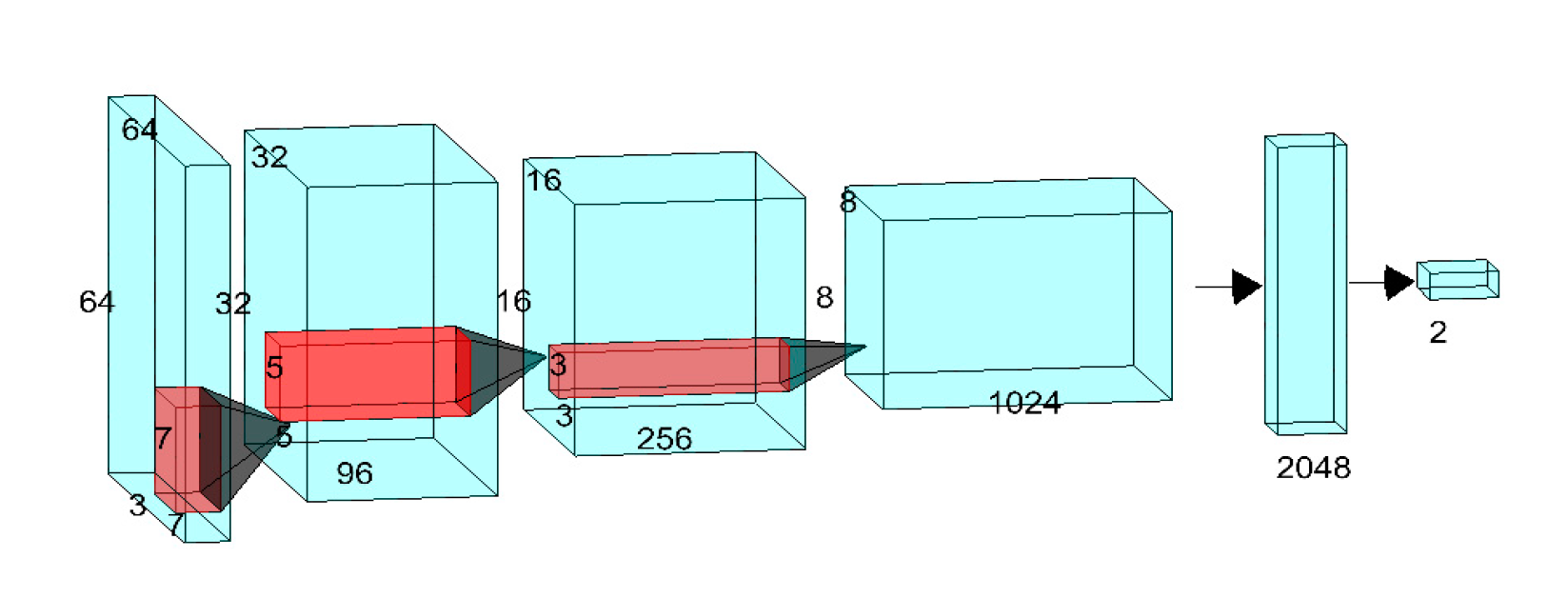

3.2. Crack Detection Network

We scanned the image for cracks patch by patch and finally stitched the results together [25]. Because the processed image was very large, we used a shallow classification network to identify cracks in the distorted image. To evaluate the stability of the system, we transformed the classification problem into a regression problem by using the ground truth labels. To effectively evaluate the relationship with the real crack distribution, we applied additional processing to the pixels at the label boundaries.

Figure 8 shows the network structure for identifying the cracks in a patch, which was simple and fast. The details of the structure of the crack detection neural network are shown in Table 3. In this article, images with a resolution of 3264 × 4544 were collected, and processing such large images directly carried a large computational burden. A patch that is too small, however, cannot identify cracks or reflect their overall characteristics. After several experiments, we cut each image into small patches of 64 × 64 pixels. This size guaranteed accurate identification of the cracks while capturing their overall layout.
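The patch traversal can be sketched as a grid of window origins. The sketch assumes non-overlapping 64 × 64 windows (a stride equal to the patch size) and treats 3264 as the height and 4544 as the width; neither the stride nor the orientation is stated explicitly in the text.

```python
def patch_grid(height, width, patch=64):
    """Top-left (row, col) corners of non-overlapping patch x patch windows."""
    if height % patch or width % patch:
        raise ValueError("image size must be a multiple of the patch size")
    return [
        (top, left)
        for top in range(0, height, patch)
        for left in range(0, width, patch)
    ]


corners = patch_grid(3264, 4544)
rows, cols = 3264 // 64, 4544 // 64   # 51 rows and 71 columns of patches
```

Both image dimensions are exact multiples of 64, so the grid covers the image with 51 × 71 = 3621 patches and no padding is needed.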

By combining the inertial navigation information, we obtained the coordinates of each patch, combined the real-time coordinates with the propulsion distance, and referred them to the initial coordinates. We could also obtain the real-time coordinates of each crack in the video and construct the 3D image. This made it easy and efficient to reconstruct the defect environment inside the inspected object, forming an intuitive detection system.

3.3. Three-Dimensional Visualization of the Crack Structure

Because the required result in this paper was not a simple classification problem, and we used patch traversal to map images of the same height, we needed a mechanism to evaluate the confidence of each patch to reduce the system noise. Using the classification result of a patch and its position relative to its neighbors, we determined whether the recognition result in an adjacent patch was a misjudgment by the neural network. Given that a crack was identified in a patch, the recognition confidence was determined by whether cracks were also present in adjacent patches. If there were cracks in the three positions to the left of a crack but none on the right, the crack was at the clockwise rotating end. The same discriminant method applied to the identification of the right terminal crack. An isolated patch was considered to be interference or an excessively short crack. The following decision rules were obtained by analogy:

- (1) If there were zero identical judgments in the eight patches around the current patch, the crack at the current patch location was invalid and the result was suppressed.

- (2) If there was an identical judgment around the current position, the crack in the current position was valid and the patch was at the reverse end of the crack.

- (3) If there were cracks all around the patch, the crack at the current location was valid and was located on a middle section of the crack.

- (4) If there were three or four identical judgments around the current position, the crack in the current position was valid and was located at an intersection.
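The decision rules above can be sketched as a neighbor count over the eight surrounding patches. Two points are assumptions: rule (3) is read as a catch-all for every neighbor count not covered by the other rules, and patches at the image border simply have fewer neighbors (no wrap-around at the seam of the unwrapped image). The function name and labels are illustrative.

```python
def classify_patch(grid, row, col):
    """Label one patch of a 2D 0/1 crack-prediction grid using its 8 neighbors."""
    if not grid[row][col]:
        return "no_crack"
    neighbors = 0
    for dr in (-1, 0, 1):
        for dc in (-1, 0, 1):
            if dr == 0 and dc == 0:
                continue
            r, c = row + dr, col + dc
            if 0 <= r < len(grid) and 0 <= c < len(grid[0]):
                neighbors += grid[r][c]
    if neighbors == 0:
        return "suppressed"      # rule (1): isolated detection, treated as noise
    if neighbors == 1:
        return "end"             # rule (2): terminal patch of a crack
    if neighbors in (3, 4):
        return "intersection"    # rule (4): crack junction
    return "middle"              # rule (3): assumed catch-all for other counts


predictions = [
    [0, 0, 0, 0, 0],
    [1, 1, 1, 0, 0],
    [0, 0, 0, 0, 1],
]
labels = [classify_patch(predictions, 1, c) for c in range(3)]
```

In this example the three-patch crack yields an end, a middle, and an end, while the lone detection in the bottom-right corner is suppressed.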

Figure 9 shows the identification of cracks in our system.

The system structure of this section is shown below. After recognition was achieved in the above manner, we combined the information from the inertial navigation and the electronic meter with the corresponding image to obtain the unwrapped diagram of the radial extension. The simplified version of the crack detection network was composed of the following four parts:

Identification of crack: By using a 64 × 64 sliding window, the convolutional neural network could identify the crack.

The patch map: Each patch identified as containing a crack was stored on a blank page. It was then combined with the other patches identified through traversal to obtain the image of the whole crack within the same image frame.

Coordinate calculation: Using the information from the meter counter and the IMU, the system recorded the corresponding position of each extracted patch and pasted the patch at the corresponding position of the original picture.

The output direction: According to the relationship between the relative position of the patch and the adjacent frames, the spatial direction of the inner wall crack could be obtained.
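The coordinate calculation can be illustrated with a simplified geometric sketch that places a patch on a cylindrical inner wall. Everything here is a hypothetical model rather than the method used in this work: the wall is assumed to be a straight cylinder of known radius, the width of the unwrapped image is assumed to span one full turn, the meter supplies the axial depth, and the IMU contributes only a roll offset.

```python
import math


def patch_to_3d(col_center_px, image_width_px, depth_mm, radius_mm, roll_rad=0.0):
    """Map a patch of the unwrapped image to (x, y, z) on a cylindrical wall.

    col_center_px: horizontal center of the patch in the unwrapped image
    depth_mm:      axial distance reported by the meter (assumed)
    roll_rad:      camera roll reported by the IMU (assumed)
    """
    theta = 2.0 * math.pi * col_center_px / image_width_px + roll_rad
    return (radius_mm * math.cos(theta), radius_mm * math.sin(theta), depth_mm)


# Hypothetical values: patch a quarter of the way around a 50 mm radius hole.
x, y, z = patch_to_3d(col_center_px=1136, image_width_px=4544,
                      depth_mm=100.0, radius_mm=50.0)
```

Connecting the 3D positions of neighboring crack patches across frames then gives the spatial direction of the crack along the wall.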

Figure 10 shows the unwrapped images extracted from different frames after the ring distortion, the patch-based crack recognition results after the distortion was removed, and the 3D image visualized by combining the IMU signal with the depth data from the meter.

Previously, data were labeled manually to identify the existence of cracks and were then described and observed. With the crack confidence mechanism described above, we could obtain a more accurate crack distribution structure. With the spatial visualization mapping operation described above, the end-to-end module for crack direction identification was completed. Because the system directly output the spatial information of the crack, it greatly reduced the workload of operators and analysts and the technical difficulty for testers.