Fast Planar Detection System Using a GPU-Based 3D Hough Transform for LiDAR Point Clouds

Abstract

1. Introduction

2. Related Works

2.1. Clustering Methods

2.2. Stochastic Methods

2.3. Parameter Space Methods

3. Planar Detection System Using GPU-Based 3D Hough Transform

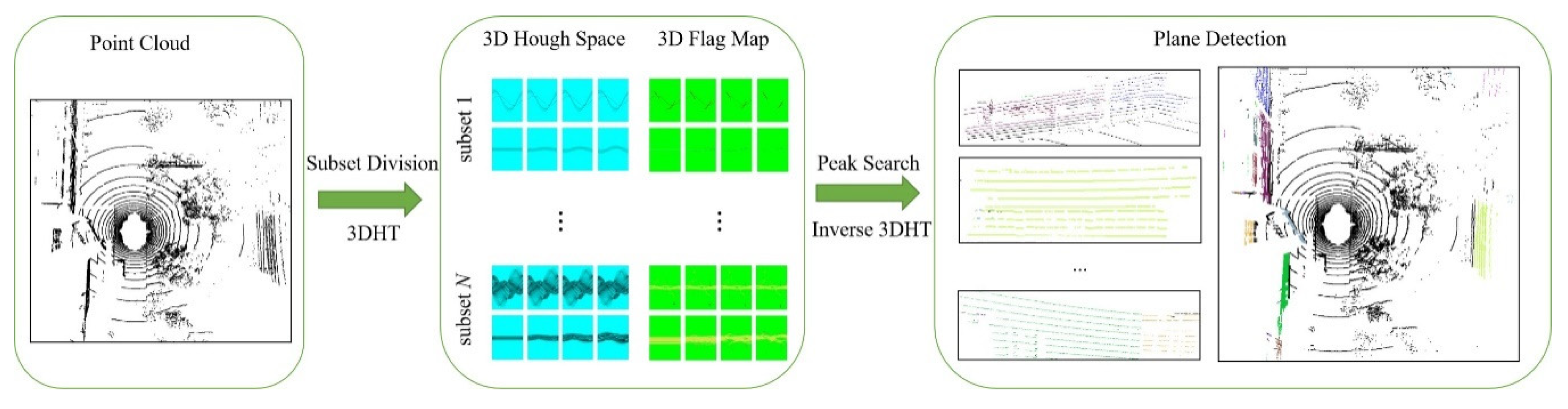

3.1. System Overview

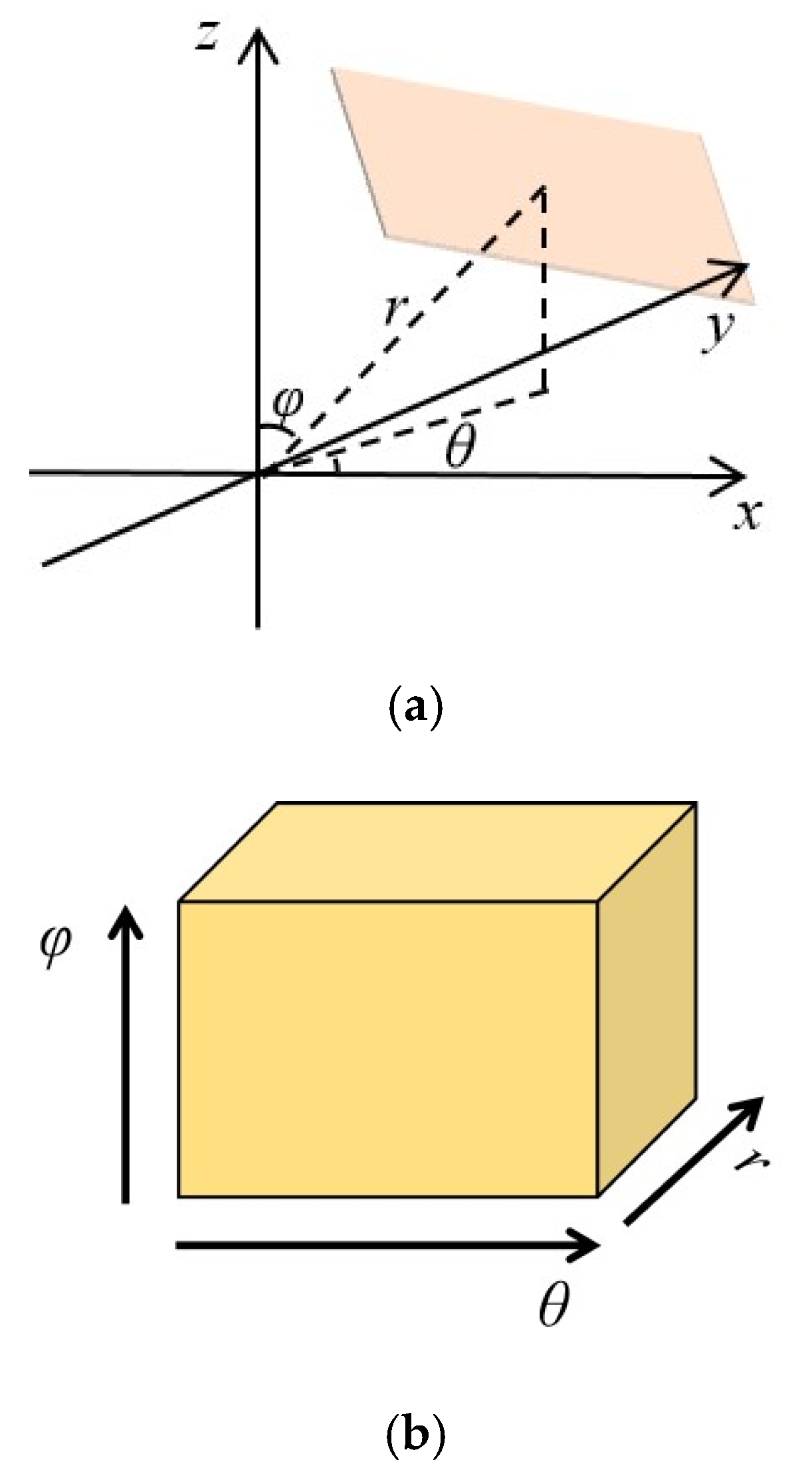

3.2. 3D Hough Space Generation
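The following sketch illustrates what generating a 3D Hough space for planes typically involves. It assumes the common spherical plane parameterization ρ = x sin φ cos θ + y sin φ sin θ + z cos φ, which may differ from the exact discretization used by this system. Each point votes for every (θ, φ) bin, and the ρ implied by that bin is quantized into a third accumulator axis, so cells with many votes mark candidate planes. The function names, bin counts, and `rho_step` are hypothetical. The loop is serial, whereas a GPU version could assign points or bins to parallel threads.

```python
import math
from collections import Counter


def hough_planes(points, n_theta=36, n_phi=36, rho_step=0.1):
    """Vote each (x, y, z) point into a discretized (theta, phi, rho) accumulator.

    Assumed parameterization (illustrative, not the paper's):
        rho = x*sin(phi)*cos(theta) + y*sin(phi)*sin(theta) + z*cos(phi)
    with theta, phi in [0, pi), which covers every normal direction up to sign,
    and rho signed. Returns a Counter keyed by (theta_bin, phi_bin, rho_bin).
    """
    acc = Counter()
    for x, y, z in points:
        for i in range(n_theta):
            theta = math.pi * i / n_theta
            for j in range(n_phi):
                phi = math.pi * j / n_phi
                rho = (x * math.sin(phi) * math.cos(theta)
                       + y * math.sin(phi) * math.sin(theta)
                       + z * math.cos(phi))
                # Quantize rho to the nearest multiple of rho_step.
                acc[(i, j, round(rho / rho_step))] += 1
    return acc


def best_plane(acc, n_theta, n_phi, rho_step):
    """Return (theta, phi, rho, votes) for the most-voted accumulator cell."""
    (i, j, k), votes = acc.most_common(1)[0]
    return math.pi * i / n_theta, math.pi * j / n_phi, k * rho_step, votes


if __name__ == "__main__":
    # Hypothetical test data: a 5 x 5 grid of points on the plane z = 1.
    pts = [(0.5 * x, 0.5 * y, 1.0) for x in range(-2, 3) for y in range(-2, 3)]
    acc = hough_planes(pts)
    print(best_plane(acc, 36, 36, 0.1))
```

At φ = 0 every θ bin describes the same horizontal plane, so its votes are duplicated across θ; a practical accumulator would need to merge or avoid these duplicate cells.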

3.3. Flag Map Generation

3.4. CPU–GPU Hybrid System

4. Experiments and Analysis

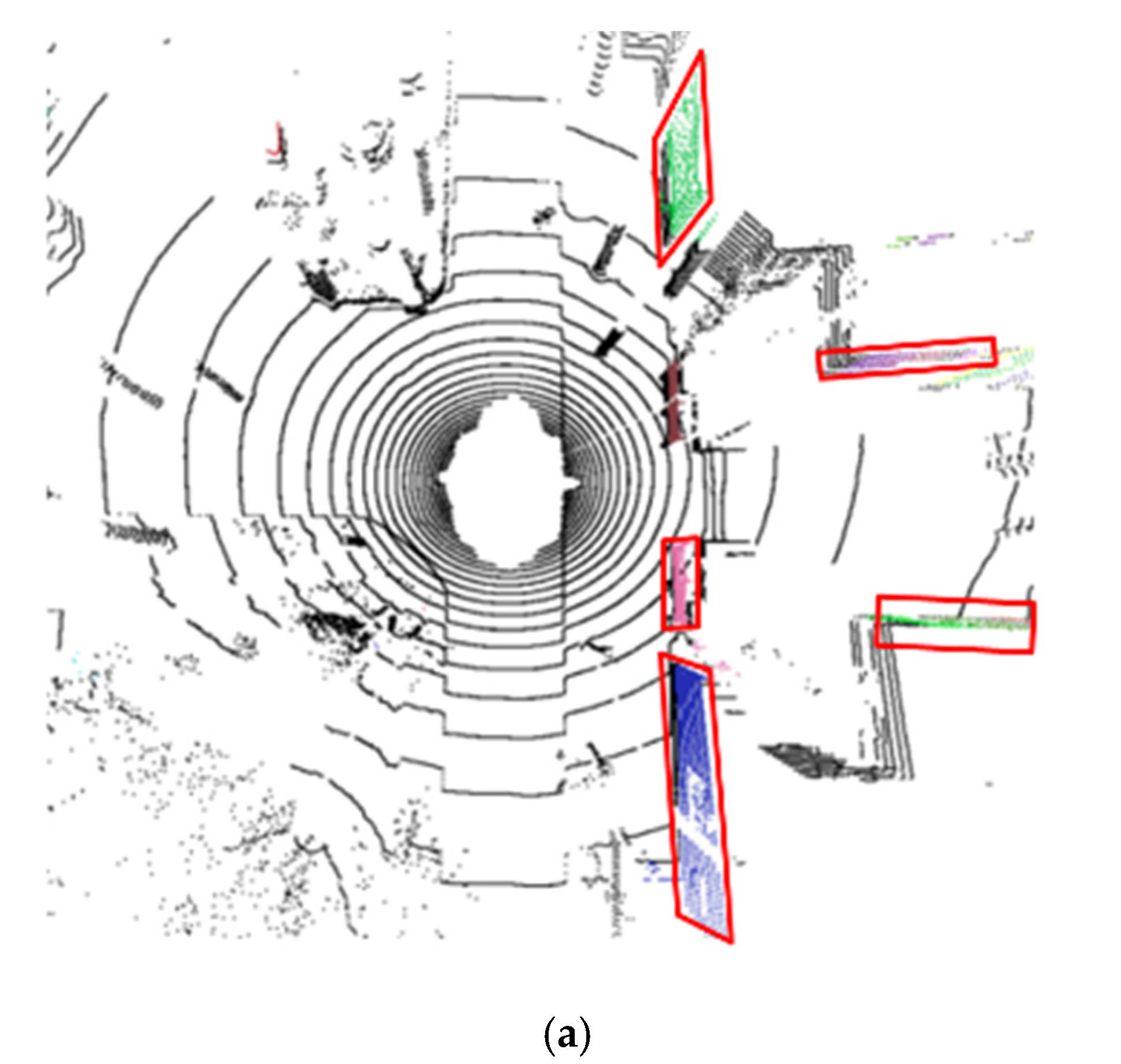

4.1. Three-Dimensional Hough Space and Flag Map Performance

4.2. Multiple Fraction Integration

4.3. Parallel 3DHT Performance

4.4. Multiple Fraction Integration

5. Conclusions

Author Contributions

Funding

Acknowledgments

Conflicts of Interest

| Method and resolution | Frame rate (fps) |
|---|---|
| CPU-based RANSAC | 0.1999 |
| GPU-based RANSAC | 4.6109 |
| GPU-based Hough transform (resolution 1.0, single frame) | 3.1167 |
| GPU-based Hough transform (resolution 2.0, single frame) | 12.3693 |
| GPU-based Hough transform (resolution 3.0, single frame) | 27.8686 |
| GPU-based Hough transform (resolution 5.0, multiple frames) | 1.8678 |
| GPU-based Hough transform (resolution 10.0, multiple frames) | 8.8794 |
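Because the table reports throughput, two derived quantities can make the rows easier to compare: per-frame latency, 1000 / fps milliseconds, and speedup, the ratio of two frame rates. The short script below computes both from the listed values. It takes CPU-based RANSAC as the baseline, a choice made here for illustration, and it adds no new measurements.

```python
# Frame rates copied from the table above (frames per second).
FPS = {
    "CPU-based RANSAC": 0.1999,
    "GPU-based RANSAC": 4.6109,
    "GPU-based Hough transform (resolution 1.0, single frame)": 3.1167,
    "GPU-based Hough transform (resolution 2.0, single frame)": 12.3693,
    "GPU-based Hough transform (resolution 3.0, single frame)": 27.8686,
    "GPU-based Hough transform (resolution 5.0, multiple frames)": 1.8678,
    "GPU-based Hough transform (resolution 10.0, multiple frames)": 8.8794,
}


def per_frame_ms(fps):
    """Time spent on one frame, in milliseconds, at the given frame rate."""
    return 1000.0 / fps


def speedup(fps, baseline="CPU-based RANSAC"):
    """How many times faster a row runs than the chosen baseline row."""
    return fps / FPS[baseline]


def report():
    """One formatted line per table row: latency and speedup vs. the baseline."""
    return [
        f"{name:62s} {per_frame_ms(fps):8.1f} ms/frame {speedup(fps):7.1f}x"
        for name, fps in FPS.items()
    ]


if __name__ == "__main__":
    print("\n".join(report()))
```

For instance, GPU-based RANSAC runs about 4.6109 / 0.1999 ≈ 23 times faster than CPU-based RANSAC, and the single-frame Hough transform at resolution 3.0 about 27.8686 / 0.1999 ≈ 139 times faster.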

© 2020 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).

Tian, Y.; Song, W.; Chen, L.; Sung, Y.; Kwak, J.; Sun, S. Fast Planar Detection System Using a GPU-Based 3D Hough Transform for LiDAR Point Clouds. Appl. Sci. 2020, 10, 1744. https://doi.org/10.3390/app10051744