A Stronger Adaptive Local Dimming Method with Details Preservation

Abstract

1. Introduction

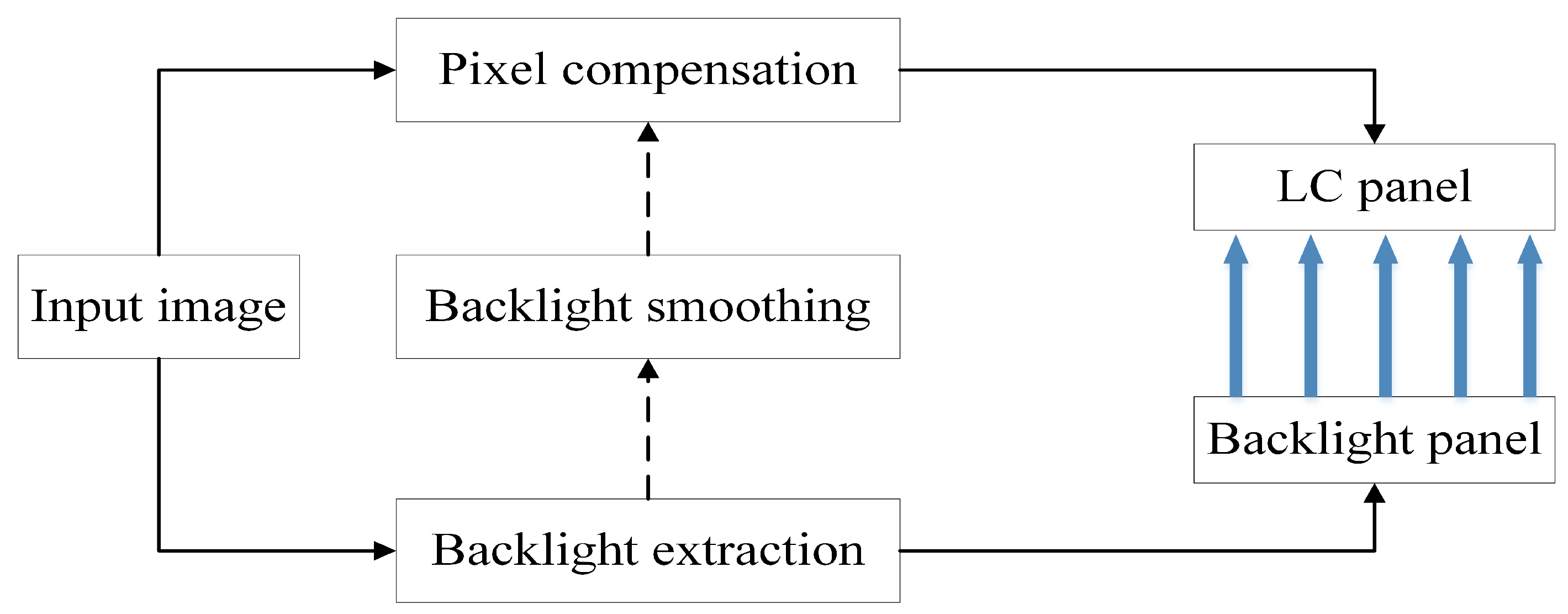

- A backlight extraction method is proposed that adapts better to images with diverse content by combining the advantages of several existing methods.

- An improved bi-histogram equalization (IBHE) method based on Retinex theory is proposed to enhance image quality while preserving fine details.

- A pixel compensation method based on IBHE is proposed to alleviate overcompensation by using information from both the extracted backlight and the original image.
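The second contribution builds on bi-histogram equalization. As a point of reference only, the classic brightness-preserving BBHE of Kim (1997, in the reference list) splits the gray-level histogram at the mean level and equalizes each half within its own range. The sketch below implements that baseline, not the authors' IBHE, whose modifications and Retinex step are not described in this extract. Images are assumed to be 8-bit grayscale stored as lists of rows, and `bbhe` is a hypothetical name.

```python
from typing import List

Image = List[List[int]]


def bbhe(img: Image, levels: int = 256) -> Image:
    """Brightness-preserving bi-histogram equalization (BBHE, Kim 1997).

    The histogram is split at the mean gray level x_m; the lower part is
    equalized onto [0, x_m] and the upper part onto [x_m + 1, levels - 1].
    """
    pixels = [p for row in img for p in row]
    x_m = sum(pixels) // len(pixels)  # breakpoint: floor of the mean level
    hist = [0] * levels
    for p in pixels:
        hist[p] += 1

    lut = list(range(levels))  # identity for levels that never occur
    for lo, hi in ((0, x_m), (x_m + 1, levels - 1)):
        total = sum(hist[lo:hi + 1])
        if lo > hi or total == 0:
            continue  # empty sub-histogram: nothing to equalize
        running = 0
        for g in range(lo, hi + 1):
            running += hist[g]
            # Map level g through its sub-histogram CDF onto [lo, hi]
            lut[g] = lo + round((hi - lo) * running / total)
    return [[lut[p] for p in row] for row in img]
```

For example, `bbhe([[10, 20], [200, 250]])` splits at gray level 120 and returns `[[60, 120], [188, 255]]`: dark pixels stay at or below the breakpoint and bright pixels stay above it, which is how BBHE keeps the output's mean brightness closer to the input than global equalization does.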

2. Related Work

2.1. Backlight Extraction

2.2. Pixel Compensation

3. Method

3.1. Adjustable Backlight Extraction Module

3.1.1. Base Backlight Extraction
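The first contribution combines several existing backlight extraction methods. For orientation, three classic per-zone rules can be sketched: the zone maximum, the zone mean, and a CDF-percentile rule in the spirit of the CDF threshold method of Liu et al. in the reference list. This is not the paper's extraction module; the zone grid, the function names `zone`, `cdf_level`, and `base_backlights`, and the default 0.95 threshold are illustrative assumptions.

```python
from typing import Dict, List

Image = List[List[int]]


def zone(img: Image, r: int, c: int, rows: int, cols: int) -> List[int]:
    """Flatten the pixels of LED zone (r, c) in a rows x cols grid."""
    h, w = len(img), len(img[0])
    return [img[y][x]
            for y in range(r * h // rows, (r + 1) * h // rows)
            for x in range(c * w // cols, (c + 1) * w // cols)]


def cdf_level(pixels: List[int], threshold: float) -> int:
    """Lowest gray level whose cumulative pixel share reaches `threshold`."""
    need = threshold * len(pixels)
    for rank, value in enumerate(sorted(pixels), start=1):
        if rank >= need:
            return value
    return max(pixels)


def base_backlights(img: Image, rows: int, cols: int,
                    threshold: float = 0.95) -> Dict[str, List[List[float]]]:
    """Per-zone backlight maps for three classic extraction rules."""
    maps: Dict[str, List[List[float]]] = {"max": [], "mean": [], "cdf": []}
    for r in range(rows):
        for key in maps:
            maps[key].append([])
        for c in range(cols):
            px = zone(img, r, c, rows, cols)
            maps["max"][r].append(max(px))              # never clips, saves least power
            maps["mean"][r].append(sum(px) / len(px))   # dims more, may clip highlights
            maps["cdf"][r].append(cdf_level(px, threshold))
    return maps
```

On a 4 x 4 test image split into 2 x 2 zones, each rule yields one value per zone; lowering `threshold` moves the CDF rule from the max-like end toward darker backlight levels.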

3.1.2. Optimal Backlight Selection

- Backlight constraint conditions

- Backlight adjustment and optimal backlight selection

3.2. Pixel Compensation Module
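Pixel compensation raises pixel values to offset a dimmed backlight. A minimal sketch of the conventional compensation rule shows where overcompensation comes from. It assumes a display transmittance of (p / 255) raised to a gamma of 2.2, a backlight drive value proportional to luminance, and no LED light spread (every pixel sees exactly its own zone's value). This is not the paper's IBHE-based compensation, and `linear_compensation` is a hypothetical name.

```python
from typing import List

Image = List[List[int]]


def linear_compensation(img: Image, zone_bl: List[List[float]],
                        gamma: float = 2.2, max_level: int = 255) -> Image:
    """Brighten pixels to offset their zone's dimmed backlight.

    Assumes transmittance (p / max_level) ** gamma, a backlight value
    proportional to luminance, and no light spread between zones.
    """
    h, w = len(img), len(img[0])
    rows, cols = len(zone_bl), len(zone_bl[0])
    out = []
    for y, row in enumerate(img):
        new_row = []
        for x, p in enumerate(row):
            bl = max(zone_bl[y * rows // h][x * cols // w], 1.0)  # avoid /0
            # Equal luminance: bl*(q/M)**g == M*(p/M)**g  =>  q = p*(M/bl)**(1/g)
            q = p * (max_level / bl) ** (1.0 / gamma)
            new_row.append(min(max_level, round(q)))  # clipping = lost detail
        out.append(new_row)
    return out
```

For instance, with `gamma=2.0` and a zone dimmed to 64, a pixel of 100 becomes 200, but a pixel of 200 would need about 399 and is clipped to 255. That lost headroom is the kind of overcompensation the paper's compensation module aims to alleviate.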

| Algorithm 1. Proposed algorithm for breakpoint acquisition. |
|---|
| Input: r, ; |
| Output: the breakpoint T. |
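This extract does not show how Algorithm 1 computes the breakpoint T. Classic BBHE splits at the mean gray level; another standard choice, also in the reference list, is Otsu's threshold, which picks the level that maximizes the between-class variance. The sketch below implements Otsu's method purely as an illustration: whether Algorithm 1 uses it is an assumption, its input `r` is not modeled, and `otsu_threshold` is a hypothetical name.

```python
from typing import List


def otsu_threshold(pixels: List[int], levels: int = 256) -> int:
    """Gray level T maximizing Otsu's between-class variance.

    Class 0 holds levels <= T and class 1 the rest:
    sigma_b^2(T) = (mu_T * omega(T) - mu(T))**2 / (omega(T) * (1 - omega(T)))
    """
    n = len(pixels)
    hist = [0] * levels
    for p in pixels:
        hist[p] += 1
    mu_total = sum(g * h for g, h in enumerate(hist)) / n
    omega = mu = 0.0  # running class-0 probability and first moment
    best_t, best_var = 0, -1.0
    for t in range(levels):
        omega += hist[t] / n
        mu += t * hist[t] / n
        denom = omega * (1.0 - omega)
        if denom <= 0.0:
            continue  # one class is empty: no valid split
        var_b = (mu_total * omega - mu) ** 2 / denom
        if var_b > best_var:
            best_t, best_var = t, var_b
    return best_t
```

For a bimodal input such as four pixels at 20 and four at 200, every T from 20 to 199 separates the classes equally well, and this loop returns the first of them, 20.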

4. Experiment

4.1. Hardware

4.2. Experiment of Improved Bi-Histogram Equalization

4.3. Experiment of Adjustable Backlight Extraction

4.3.1. Subjective Experiment

4.3.2. Objective Experiment
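The evaluation metrics in the objective comparison table lost their names in extraction. The reference list cites Hore and Ziou's comparison of PSNR and SSIM, so PSNR is a plausible candidate, but treat that as an assumption. A minimal PSNR sketch for 8-bit images stored as lists of rows, with `psnr` as a hypothetical name:

```python
import math
from typing import List

Image = List[List[float]]


def psnr(reference: Image, test: Image, max_level: float = 255.0) -> float:
    """Peak signal-to-noise ratio (dB) between two equally sized images."""
    diffs = [(a - b) ** 2
             for row_a, row_b in zip(reference, test)
             for a, b in zip(row_a, row_b)]
    mse = sum(diffs) / len(diffs)  # mean squared error over all pixels
    if mse == 0:
        return math.inf  # identical images
    return 10.0 * math.log10(max_level ** 2 / mse)
```

Identical images give infinite PSNR; an error of one gray level at every pixel gives 10 log10(255^2), about 48.13 dB.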

4.4. Experiment of Simulated Images

4.4.1. Subjective Experiment

4.4.2. Objective Experiment

5. Conclusions

Author Contributions

Funding

Conflicts of Interest

References

1. Kim, S.E.; An, J.Y.; Hong, J.J. How to reduce light leakage and clipping in local-dimming liquid-crystal displays. J. Soc. Inf. Disp. 2009, 17, 1051–1057.

2. Chen, H.; Ha, T.H.; Sung, J.H. Evaluation of LCD local dimming backlight system. J. Soc. Inf. Disp. 2012, 18, 57–65.

3. Nam, H.; Song, E.; Kim, S.K. Weighted roll-off scheme to remove block artifacts for low power local dimming liquid crystal displays. Opt. Laser Technol. 2014, 58, 8–15.

4. Funamoto, T.; Kobayashi, T.; Murao, T. High-picture-quality technique for LCD televisions: LCD-AI. In Proceedings of the International Display Workshops, Kobe, Japan, 29 December 2000; pp. 1157–1158.

5. Zhang, X.B.; Wang, R.; Dong, D. Dynamic Backlight Adaptation Based on the Details of Image for Liquid Crystal Displays. J. Disp. Technol. 2012, 8, 108–111.

6. Cho, H.; Kwon, O.K. A backlight dimming algorithm for low power and high image quality LCD applications. IEEE Trans. Consum. Electron. 2009, 55, 839–844.

7. Liu, Y.Z.; Zheng, X.R.; Chen, J.B. Dynamic Backlight Signal Extraction Algorithm Based on Threshold of Image CDF for LCD-TV and its Hardware Implementation. Chin. J. Liq. Cryst. Disp. 2010, 25, 449–453.

8. Lin, F.C.; Liao, C.Y.; Liao, L.Y. Inverse of Mapping Function (IMF) Method for Image Quality Enhancement of High Dynamic Range LCD TVs. SID Symp. Dig. Tech. Pap. 2007, 38, 1343–1346.

9. Nadernejad, E.; Burini, N.; Korhonen, J. Adaptive local backlight dimming algorithm based on local histogram and image characteristics. In Proceedings of the IS&T/SPIE Electronic Imaging, Burlingame, CA, USA, 3–7 February 2013.

10. Zhang, T.; Wang, Y.F. High-Performance Local Dimming Algorithm Based on Image Characteristic and Logarithmic Function. J. Soc. Inf. Disp. 2019, 27, 85–100.

11. Zhang, X.B.; Liu, X.; Liu, B. A Control Algorithm of LCD Dynamic Backlight Based on PSNR. Appl. Mech. Mater. 2012, 241–244, 3014–3019.

12. Chen, S.L.; Tsai, H.J. A Novel Adaptive Local Dimming Backlight Control Chip Design Based on Gaussian Distribution for Liquid Crystal Displays. J. Disp. Technol. 2016, 99, 1494–1505.

13. Zhang, T.; Zhao, X.; Pan, X.H. Optimal Local Dimming Based on an Improved Shuffled Frog Leaping Algorithm. IEEE Access 2018, 6, 40472–40484.

14. Zhang, T.; Zhao, X. Using the Guided Fireworks Algorithm for Local Backlight Dimming. Appl. Sci. 2019, 9, 129.

15. Yeo, D.M.; Kwon, Y.H.; Kang, E.J. Smart Algorithms for Local Dimming LED Backlight. SID Symp. Dig. Tech. Pap. 2008, 39, 1343–1346.

16. Wang, X.; Su, H.S.; Li, C.L. HDR image display algorithm based on LCD-LED dual modulation HDR display. Chin. J. Liq. Cryst. Disp. 2019, 34, 18–27.

17. Kim, Y.T. Contrast enhancement using brightness preserving bi-histogram equalization. IEEE Trans. Consum. Electron. 1997, 43, 1–8.

18. Otsu, N. A Threshold Selection Method from Gray-Level Histograms. IEEE Trans. Syst. Man Cybern. 1979, 9, 62–66.

19. Genesis Microchip. gm6010/gm6015 Programming Guide; Genesis Microchip Company: Anaheim, CA, USA, 2002; pp. 85–90.

20. Land, E.H. Lightness and retinex theory. J. Opt. Soc. Am. 1971, 61, 2032–2040.

21. Tang, L.; Chen, S.; Liu, W. Improved Retinex Image Enhancement Algorithm. Procedia Environ. Sci. 2011, 11, 208–212.

22. Farbman, Z.; Fattal, R.; Lischinski, D. Edge-preserving decompositions for multi-scale tone and detail manipulation. ACM Trans. Graph. 2008, 27, 1–10.

23. Lu, X.M.; Zhu, X.Y.; Li, Z.W. A Brightness-scaling and Detail-preserving Tone Mapping Method for High Dynamic Range Images. Acta Autom. Sin. 2015, 41, 1080–1092.

24. Li, J.X. Research on Image Enhancement Based on JND Curve Property. Master’s Thesis, Lanzhou Jiaotong University, Lanzhou, Gansu, China, 2014.

25. Genesis Microchip. gm6015 Preliminary Data Sheet; Genesis Microchip Company: Anaheim, CA, USA, 2001; pp. 33–34.

26. Tong, H. Research of LCD Dynamic Control LED Backlight Algorithm. Master’s Thesis, Hefei University of Technology, Hefei, Anhui, China, 2012.

27. Hore, A.; Ziou, D. Image quality metrics: PSNR vs. SSIM. In Proceedings of the 20th International Conference on Pattern Recognition, IEEE Computer Society, Istanbul, Turkey, 23–26 August 2010.

28. Song, S.J.; Kim, Y.I.; Bae, J. Deep-learning-based pixel compensation algorithm for local dimming liquid crystal displays of quantum-dot backlights. Opt. Express 2019, 27, 15907–15917.

| Image | Backlight | CR | DR |
|---|---|---|---|
| (a) | 11,209.24 | 1,630,700 | |
| | 11,284.18 | 1,631,900 | |
| (b) | 8.97 | 2,059,400 | |
| | 9.14 | 1,974,200 | |
| (c) | 3228.41 | 1,056,333 | |
| | 3272.41 | 1,156,091 | |
| (d) | 1913.71 | 1,659,500 | |
| | 5464.29 | 1,656,385 | |

| Image | Evaluation Metrics | CDF Method | LUT Method | [10] | Proposed Method |
|---|---|---|---|---|---|
| (a) | | 4.88 | 4.93 | 7.00 | 7.50 |
| | | 18.40 | 19.43 | 24.78 | 33.19 |
| | | 0.87 | 0.89 | 0.94 | 0.97 |
| | | 0.32 | 0.28 | 0.15 | 0.06 |
| (b) | | 4.32 | 4.28 | 7.68 | 8.42 |
| | | 24.10 | 25.49 | 24.24 | 27.00 |
| | | 0.98 | 0.99 | 0.97 | 0.98 |
| | | 0.13 | 0.12 | 0.16 | 0.12 |
| (c) | | 5.88 | 5.76 | 6.08 | 7.71 |
| | | 20.32 | 21.49 | 23.44 | 24.39 |
| | | 0.91 | 0.92 | 0.93 | 0.90 |
| | | 0.26 | 0.23 | 0.19 | 0.19 |
| (d) | | 5.69 | 6.00 | 6.50 | 8.00 |
| | | 19.81 | 20.49 | 25.85 | 24.80 |
| | | 0.83 | 0.84 | 0.90 | 0.93 |
| | | 0.23 | 0.21 | 0.13 | 0.14 |

© 2020 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).


Zhang, T.; Du, W.; Wang, H.; Zeng, Q.; Fan, L. A Stronger Aadaptive Local Dimming Method with Details Preservation. Appl. Sci. 2020, 10, 1820. https://doi.org/10.3390/app10051820


Chicago/Turabian Style: Zhang, Tao, Wenli Du, Hao Wang, Qin Zeng, and Long Fan. 2020. "A Stronger Aadaptive Local Dimming Method with Details Preservation." Applied Sciences 10, no. 5: 1820. https://doi.org/10.3390/app10051820