Machine Learning Approaches for Ship Speed Prediction towards Energy Efficient Shipping

Abstract

:1. Introduction

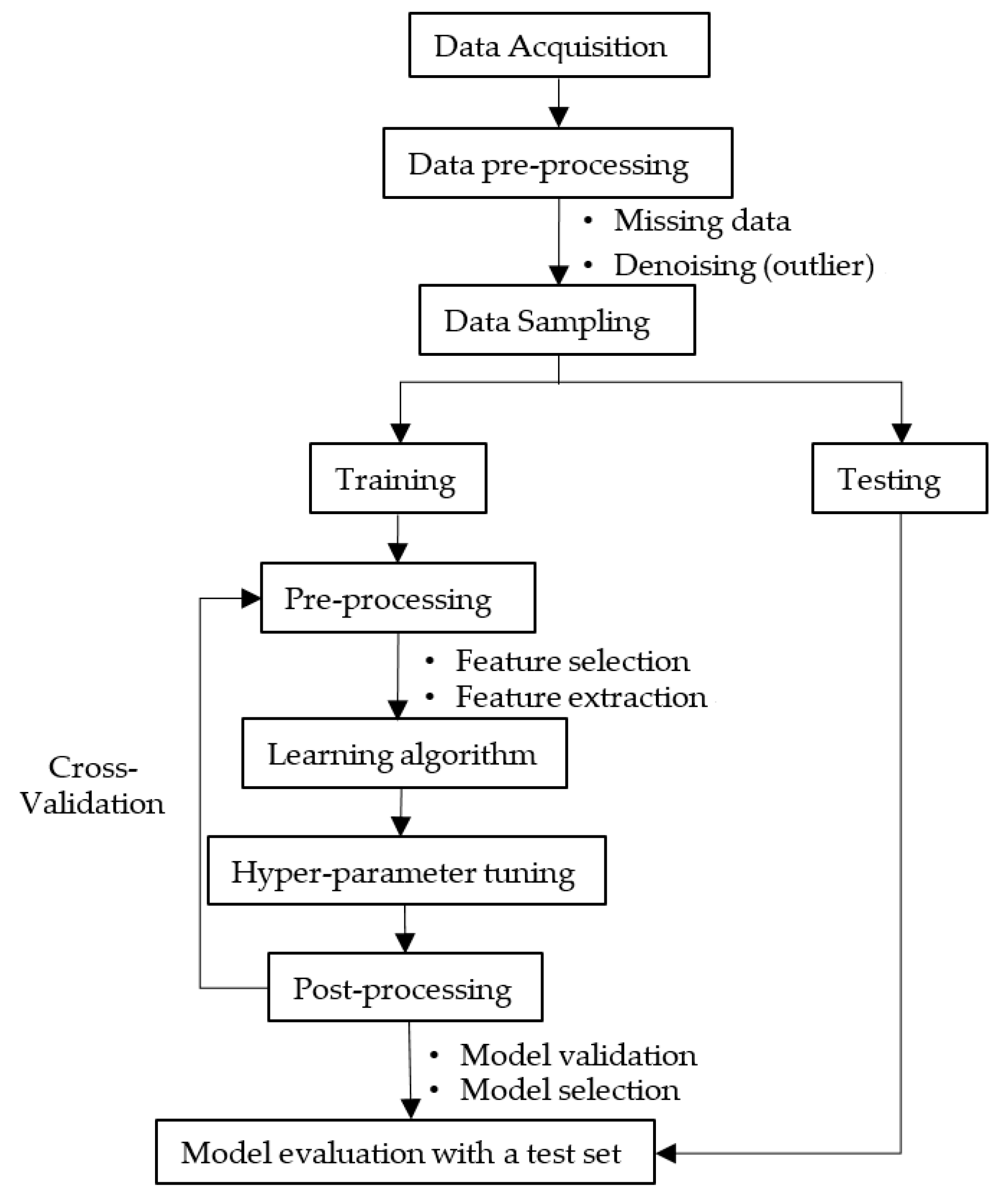

2. Material and Methodology

2.1. Data Acquisition

2.2. Data Preprocessing

- To investigate only the operating periods of the ship, this study extracted the “Underway using engine” data from the Navigational Status features, which meant the mooring and anchoring periods were rejected.

- Shipping speed can decrease due to different sea state resistances; however, there is also a probability that it may be reduced by the operator, especially around the port at the start and end of the voyage. To reduce this kind of measurement error, this study discarded the data with less than 5 knots of SOG, which is considered as maneuvering.

- The scatter plot of the features can be used to show that the data may have noise/outliers because of the inconsistencies in the measurement of the sensors or human errors which must be rejected before training the models. Z-score is a parametric outlier detection method for different numbers of dimensional feature space [21]. However, this method assumed that the data had a Gaussian distribution; hence, the outliers were considered to be distributed at the tails of the distribution, which meant that the data point was far from the mean value. Before deciding a threshold that we set as , the given data point s normalized as using the following equation.where μ and are the mean and standard deviation of all , respectively. An outlier is then a data point that has an absolute value greater than or equal to :.

2.3. Feature Selection and Extraction

2.4. Prediction Models

2.4.1. Decision Tree Regressor

- The maximum depth of the tree (max_depth) which indicates how deep the built tree can be. The deeper the tree, the more splits it has, and it captures more information about the data; however, increasing depth could increase the computation time.

- min_samples_split represents the minimum number of samples required to split an internal node. This can vary between considering only one sample at each node to considering all of the samples at each node. When this parameter is increased, the tree becomes more constrained as it has to consider more samples at each node.

- The minimum number of samples required to exist at each leaf node (min_samples_leaf). This is similar to min_samples_splits, however, this describes the minimum number of samples at the leaves.

- In addition, the number of features (max_features) to consider while searching for the best split should be specified.

2.4.2. Ensemble Methods

2.5. Model Hyperparameter Tuning

2.6. Model Validation

2.6.1. Coefficient of Determination (R2)

2.6.2. Root mean square error (RMSE)

2.6.3. Normalized Root Mean Square Error (NRMSE)

3. Model Development

3.1. Methodology Application

- The acquired dataset was loaded.

- Unnecessary features such as static information in the AIS data were rejected.

- Data where the ship is moored and anchored were identified and discarded.

- Data where the ship has an SOG value of less than 5 knots were discarded.

- The missed data were identified in the AIS data and discarded.

- The outliers were discarded for some of the features based on the z-scores.

- The key features were selected by applying feature selection methods such as a high correlation filter.

- The dataset was subjected to sampling (splitting) into a training and test set.

- The models which can potentially estimate the target were listed down.

- k-fold cross-validation was implemented for each model:

- Each model was trained using hyperparameter optimization by specifying the range of the search space for each hyperparameter, Bayesian optimization was executed over the specified search space, and the results were assessed.

- The model was trained using the whole training set after the optimal hyperparameters were obtained.

- The results of the constructed model results were evaluated using a test set, and the performance metrics were calculated.

- The constructed models were evaluated using three accuracy measures (R2, RMSE, NRMSE) and overall conclusions were drawn.

3.2. Results and Discussion

4. Conclusions

Author Contributions

Funding

Acknowledgments

Conflicts of Interest

References

- Stopford, M. The Organization of the Shipping Market. In Maritime Economics, 3rd ed.; Routledge: London, UK; New York, NY, USA, 2009; pp. 47–90. [Google Scholar]

- Psaraftis, H.N.; Kontovas, C.A. Speed Models for Energy-Efficient Maritime Transportation: A Taxonomy and Survey. Transp. Res. Part C Emerg. Technol. 2013, 26, 331–351. [Google Scholar] [CrossRef]

- Roh, M.I. Determination of an Economical Shipping Route Considering the Effects of Sea State for Lower Fuel Consumption. Int. J. Nav. Archit. Ocean Eng. 2013, 5, 246–262. [Google Scholar] [CrossRef] [Green Version]

- ISO15016. Ships and Marine Technology–Guidelines for the Assessment of Speed and Power Performance by Analysis of Speed Trial Data; ISO15016: Geneva, Switzerland, 2015. [Google Scholar]

- Kim, M.; Hizir, O.; Turan, O.; Day, S.; Incecik, A. Estimation of added resistance and ship speed loss in a seaway. Ocean Eng. 2017, 141, 65–76. [Google Scholar] [CrossRef] [Green Version]

- Yoo, B.; Kim, J. Probabilistic Modelling of Ship Powering Performance using Full-Scale Operational Data. Appl. Ocean Res. 2019, 82, 1–9. [Google Scholar] [CrossRef]

- Gan, S.; Liang, S.; Li, K.; Deng, J.; Cheng, T. Long-term ship speed prediction for intelligent traffic signaling. IEEE Trans. Intell. Transp. Syst. 2016, 18, 82–91. [Google Scholar] [CrossRef]

- Liu, J.; Shi, G.; Zhu, K. Vessel trajectory prediction model based on AIS sensor data and adaptive chaos differential evolution support vector regression (ACDE-SVR). Appl. Sci. 2019, 9, 2983. [Google Scholar] [CrossRef] [Green Version]

- Ren, Y.; Yang, J.; Zhang, Q.; Guo, Z. Multi-Feature Fusion with Convolutional Neural Network for Ship Classification in Optical Images. Appl. Sci. 2019, 9, 4209. [Google Scholar] [CrossRef] [Green Version]

- Jeon, M.; Noh, Y.; Shin, Y.; Lim, O.K.; Lee, I.; Cho, D. Prediction of ship fuel consumption by using an artificial neural network. J. Mech. Sci. Technol. 2018, 32, 5785–5796. [Google Scholar] [CrossRef]

- Krata, P.; Vettor, R.; Soares, C.G. Bayesian approach to ship speed prediction based on operational data. In Proceedings of the In Developments in the Collision and Grounding of Ships and Offshore Structures: Proceedings of the 8th International Conference on Collision and Grounding of Ships and Offshore Structures (ICCGS 2019), Lisbon, Portugal, 21–23 October 2019; p. 384. [Google Scholar]

- Beaulieu, C.; Gharb, S.; Ouarda, T.B.; Charron, C.; Aissia, M.A. Improved model of deep-draft ship squat in shallow waterways using stepwise regression trees. J. Waterw. Port Coast. Ocean Eng. 2011, 138, 115–121. [Google Scholar]

- Zhao, F.; Zhao, J.; Niu, X.; Luo, S.; Xin, Y. A Filter Feature Selection Algorithm Based on Mutual Information for Intrusion Detection. Appl. Sci. 2018, 8, 1535. [Google Scholar] [CrossRef] [Green Version]

- Bernard, S.; Heutte, L.; Adam, S. Influence of Hyperparameters on Random Forest Accuracy. In International Workshop on Multiple Classifier Systems; Springer: Berlin/Heidelberg, Germany, 2009; pp. 171–180. [Google Scholar]

- Larsson, L.; Rave, H.C. Principles of Naval Architecture Series: Ship Resistance and Flow, 1st ed.; Society of Naval Architects and Marine Engineers: Jersey City, NJ, USA, 2010; pp. 16–77. [Google Scholar]

- van den Boom, H.; Huisman, H.; Mennen, F. New Guidelines for Speed/Power Trials. Level Playing Field Established for IMO EEDI; SWZ Maritime: Breda, The Netherlands, 2013; Volume 134, pp. 18–22. [Google Scholar]

- Chen, H.T. A Dynamic Program for Minimum Cost Ship under Uncertainty. Ph.D. Thesis, Massachusetts Institute of Technology, Cambridge, MA, USA, 1978. [Google Scholar]

- Calvert, S. Optimal Weather Routing Procedures for Vessels on Trans-Oceanic Voyages. Ph.D. Thesis, Plymouth South West, Plymouth, UK, 1990. [Google Scholar]

- Class, A. AIS Position Report. Available online: https://www.samsung.com/au/smart-home/smartthings-vision-u999/ (accessed on 17 March 2020).

- Graziano, M.D.; Renga, A.; Moccia, A. Integration of Automatic Identification System (AIS) Data and Single-channel Synthetic Aperture Radar (SAR) Images by Sar-based Ship Velocity Estimation for Maritime Situational Awareness. Remote Sens. 2019, 11, 2196. [Google Scholar] [CrossRef] [Green Version]

- Kreyszig, E. Advanced Engineering Mathematics, 10th ed.; John Wiley & Sons, Inc.: Hoboken, NJ, USA, 2009; pp. 1014–1015. [Google Scholar]

- Aggarwal, C.C. Data Mining: The Textbook; Springer: New York, NY, USA, 2015; p. 241. [Google Scholar]

- Yu, L.; Liu, H. Feature selection for high-dimensional data: A fast correlation-based filter solution. In Proceedings of the 20th international conference on machine learning (ICML-03), Washington, DC, USA, 21–24 August 2003; pp. 856–863. [Google Scholar]

- Hastie, T.; Tibshirani, R.; Friedman, J. The Elements of Statistical Learning: Prediction, Inference and Data Mining, 2nd ed.; Springer: New York, NY, USA, 2009; pp. 241–518. [Google Scholar]

- Bishop, C.M. Pattern Recognition and Machine Learning. In Information Science and Statistics; Springer: New York, NY, USA, 2006; p. 738. [Google Scholar]

- Breiman, L. Bagging predictors. Mach. Learn. 1996, 24, 123–140. [Google Scholar] [CrossRef] [Green Version]

- Breiman, L. Random Forests. Mach. Learn. 2001, 45, 5–32. [Google Scholar] [CrossRef] [Green Version]

- Geurts, P.; Ernst, D.; Wehenkel, L. Extremely randomized trees. Mach. Learn. 2006, 63, 3–42. [Google Scholar] [CrossRef] [Green Version]

- Friedman, J.H. Stochastic Gradient Boosting. Comput. Stat. Data Anal. 2002, 38, 367–378. [Google Scholar] [CrossRef]

- Chen, T.; Guestrin, C. XGBoost: A Scalable Tree Boosting System. In Proceedings of the ACM SIGKDD International Conference on Knowledge Discovery & Data Mining, San Francisco, CA, USA, 13–17 August 2016; pp. 13–17. [Google Scholar]

- Mastelini, S.M.; Santana, E.J.; Cerri, R.; Barbon, S. DSTARS: A multi-target deep structure for tracking asynchronous regressor stack. In Brazilian Conference on Intelligent Systems (BRACIS); IEEE: Uberlandia, Brazil, 2017; pp. 19–24. [Google Scholar]

- Bergstra, J.; Bengio, Y. Random search for hyper-parameter optimization. J. Mach. Learn. Res. 2012, 13, 281–305. [Google Scholar]

- Snoek, J.; Larochelle, H.; Adams, R.P. Practical Bayesian optimization of machine learning algorithms. Adv. Neural Inf. Process. Syst. 2012, 2, 2951–2959. [Google Scholar]

- Cheung, M.W.; Chan, W. Testing dependent correlation coefficients via structural equation modeling. Organ. Res. Methods 2004, 7, 206–223. [Google Scholar] [CrossRef] [Green Version]

| No | Parameter | Unit | Remark | No | Parameter | Unit | Remark |

|---|---|---|---|---|---|---|---|

| 1 | MMSI | − | AIS data for static info (Msg. type 5) | 24 | Total wave height | m | Weather data (0.5° resolution) |

| 2 | IMO number | − | 25 | Total wave direction | deg. | ||

| 3 | Call sign | − | 26 | Total wave period | sec | ||

| 4 | Name | − | 27 | Wind wave height | m | ||

| 5 | Type of ship | − | 28 | Wind wave direction | deg. | ||

| 6~9 | Dimension A~D | m | 29 | Wind wave period | sec | ||

| 10 | Electronic fixing device | − | 30 | Swell wave height | m | ||

| 11 | ETA | sec | 31 | Swell wave direction | deg. | ||

| 12 | Max draught | m | 32 | Swell wave period | sec | ||

| 13 | Msg type | − | 33 | Wind UV | m/s | ||

| 14 | Date time stamp | KST | 34 | Wind VV | m/s | ||

| 15 | MMSI | − | AIS data for dynamic info (Msg. type 123) | 35 | Mean sea pressure level | hPa | |

| 16 | Latitude | DMS | 36 | Pressure surface | hPa | ||

| 17 | Longitude | DMS | 37 | Ambient temperature | °C | ||

| 18 | SOG | knot | 38 | Sea surface salinity | Psu | ||

| 19 | ROT | deg/min | 39 | Sea surface temperature | °C | ||

| 20 | COG | deg. | 40 | Current UV | m/s | ||

| 21 | True heading | deg. | 41 | Current VV | m/s | ||

| 22 | Navigational status | − | |||||

| 23 | Msg type | − |

| Remark | No. | Features | Units |

|---|---|---|---|

| Input Features | 1 | Max draught | m |

| 2 | Course over the ground (COG) | deg. | |

| 3 | True heading | deg. | |

| 4 | Total wave height | m | |

| 5 | Total wave direction | deg. | |

| 6 | Total wave period | sec | |

| 7 | Wind wave height | m | |

| 8 | Wind wave direction | deg. | |

| 9 | Wind wave period | sec | |

| 10 | Swell wave height | m | |

| 11 | Swell wave direction | deg. | |

| 12 | Swell wave period | sec | |

| 13 | Wind UV | m/sec | |

| 14 | Wind VV | m/sec | |

| 15 | Pressure at mean sea level (MSL) | hPa | |

| 16 | Pressure surface | hPa | |

| 17 | Ambient temperature | °C | |

| 18 | Sea surface salinity | Psu | |

| 19 | Sea surface temperature | °C | |

| 20 | Current UV | m/s | |

| 21 | Current VV | m/s | |

| 22 | Ship length | m | |

| 23 | Ship width | m | |

| 24 | Dead weight | tons | |

| 25 | Gross tonnage | tons | |

| Output | 1 | SOG | knots |

| Remark | No. | Features | Units |

|---|---|---|---|

| Input Features | 1 | Max draught | m |

| 2 | COG | deg. | |

| 3 | Total wave height | m | |

| 4 | Total wave direction | deg. | |

| 5 | Total wave period | sec | |

| 6 | Wind speed | m/sec | |

| 7 | Wind speed | m/sec | |

| 8 | Pressure MSL | hPa | |

| 9 | Ambient temperature | °C | |

| 10 | Sea surface salinity | Psu | |

| 11 | Current speed | m/s | |

| 12 | Current speed | m/s | |

| 13 | Gross tonnage | tons | |

| Output | 1 | SOG | knots |

| Features | Mean | Std. | Min | 25% | 50% | 75% | Max |

|---|---|---|---|---|---|---|---|

| COG | 172.652 | 98.804 | 0.0000 | 85.100 | 162.800 | 263.100 | 360.000 |

| Total wave height | 2.038 | 0.970 | 0.0002 | 1.421 | 1.964 | 2.573 | 6.759 |

| Total wave direction | 175.432 | 76.611 | 0.2243 | 122.246 | 181.793 | 224.441 | 359.720 |

| Total wave period | 8.371 | 2.293 | 0.8933 | 7.051 | 8.455 | 9.907 | 17.384 |

| Pressure MSL | 1016.53 | 7.08 | 980.06 | 1011.61 | 1016.41 | 1021.10 | 1044.42 |

| Ambient temp | 21.146 | 5.794 | −8.0820 | 17.855 | 21.718 | 25.695 | 36.110 |

| Sea surface salinity | 35.034 | 1.148 | 28.7284 | 34.573 | 35.362 | 35.605 | 41.126 |

| Wind speed | 6.914 | 3.088 | 0.0874 | 4.693 | 6.747 | 8.820 | 22.836 |

| Wind direction | 157.916 | 93.067 | 0.3399 | 90.167 | 134.010 | 230.064 | 359.863 |

| Current speed | 0.318 | 0.225 | 0.0020 | 0.166 | 0.257 | 0.399 | 1.515 |

| Current direction | 160.511 | 89.145 | 0.8856 | 85.932 | 146.343 | 232.631 | 360.000 |

| Maximum draught | 12.747 | 5.278 | 0.0000 | 8.900 | 12.200 | 15.300 | 23.200 |

| Gross tonnage | 93137 | 67667 | 8231 | 38400 | 79560 | 199959 | 200679 |

| SOG | 12.107 | 1.882 | 5.000 | 11.000 | 12.100 | 13.200 | 22.200 |

| Features | Correlation Coefficient |

|---|---|

| Ambient temperature | 0.218750 |

| COG | 0.206953 |

| Gross tonnage | 0.192829 |

| Total wave height | 0.161433 |

| Maximum draught | 0.161050 |

| Total wave direction | 0.124137 |

| Wind speed | 0.104488 |

| Sea surface salinity | 0.076264 |

| Wind direction | 0.062621 |

| Total wave period | 0.059107 |

| Pressure MSL | 0.042215 |

| Current direction | 0.039811 |

| Current speed | 0.002221 |

| Model | Hyperparameters Tuned | Range | Optimal Value |

|---|---|---|---|

| DTR | max_depth | [1, 100] | 60 |

| min_samples_split | [2, 10] | 2 | |

| min_samples_leaf | [1, 4] | 1 | |

| max_features | [1, 13] | 6 | |

| RFR | n_estimators | [1, 100] | 89 |

| max_depth | [1, 100] | 50 | |

| min_samples_split | [2, 10] | 2 | |

| min_samples_leaf | [1, 4] | 1 | |

| max_features | [1, 13] | 5 | |

| ETR | n_estimators | [1, 100] | 61 |

| max_depth | [1, 100] | 39 | |

| min_samples_split | [2, 10] | 2 | |

| min_samples_leaf | [1, 4] | 1 | |

| max_features | [1, 13] | 8 | |

| GBR | n_estimators | [1, 100] | 50 |

| learning_rate | [0.01, 1] | 0.1 | |

| max_depth | [1, 50] | 37 | |

| min_samples_split | [2, 10] | 2 | |

| min_samples_leaf | [1, 4] | 1 | |

| max_features | [1, 13] | 7 | |

| XGBR | n_estimators | [1, 100] | 57 |

| learning_rate | [0.01, 1] | 0.2 | |

| max_depth | [1, 50] | 30 | |

| subsample | [0.01, 0.8] | 0.76 | |

| colsample_bytree | [0.01, 0.8] | 0.42 | |

| gamma | [0, 20] | 0.6 |

| LR | Poly | GBR | XGBR | DTR | RFR | ETR | |

|---|---|---|---|---|---|---|---|

| Mean [%] | 23.55 | 40.76 | 65.59 | 97.459 | 97.209 | 98.37 | 98.45 |

| Std. [%] | 0.102 | 0.202 | 0.2357 | 0.056 | 0.0866 | 0.055 | 0.057 |

| Min [%] | 23.38 | 40.45 | 65.07 | 97.37 | 97.10 | 98.26 | 98.36 |

| Median [%] | 23.55 | 40.72 | 65.70 | 97.46 | 97.19 | 98.38 | 98.44 |

| Max [%] | 23.70 | 40.99 | 65.78 | 97.53 | 97.37 | 98.45 | 98.54 |

| Computational time [sec] | 8 | 850 | 6880 | 1514 | 312 | 2804 | 1590 |

| Model | R2 | RMSE | NRMSE | Computational time [sec] |

|---|---|---|---|---|

| GBR | 0.6858 | 1.0608 | 0.0617 | 908 |

| XGBR | 0.9698 | 0.3287 | 0.0191 | 257 |

| DTR | 0.9646 | 0.3559 | 0.0207 | 52 |

| RFR | 0.9831 | 0.2464 | 0.0143 | 489 |

| ETR | 0.9847 | 0.2340 | 0.0136 | 253 |

| LR | 0.2379 | 1.6522 | 0.0961 | 1 |

| 3rd order Polynomial | 0.4008 | 1.4778 | 0.0859 | 120 |

| Vessel Name | Vessel Type | Route | R2 | RMSE | NRMSE | Data Size |

|---|---|---|---|---|---|---|

| A | Tanker | Chiba, JP to Townsville, AUS | 0.9845 | 0.0609 | 0.0077 | 2644 |

| B | Tanker | Burnie, AUS to Yokkaichi, JP | 0.9734 | 0.1506 | 0.0206 | 3351 |

| C | Cargo | Shibushi to Vancouver, CAN | 0.9827 | 0.1178 | 0.0127 | 4481 |

| D | Cargo | Marsden PT. to Singapore | 0.9881 | 0.0543 | 0.0106 | 3004 |

| E | Cargo | Westshore CAN, to Gwangyang. S. KOR | 0.9821 | 0.1038 | 0.0127 | 3014 |

© 2020 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).

Share and Cite

Abebe, M.; Shin, Y.; Noh, Y.; Lee, S.; Lee, I. Machine Learning Approaches for Ship Speed Prediction towards Energy Efficient Shipping. Appl. Sci. 2020, 10, 2325. https://doi.org/10.3390/app10072325

Abebe M, Shin Y, Noh Y, Lee S, Lee I. Machine Learning Approaches for Ship Speed Prediction towards Energy Efficient Shipping. Applied Sciences. 2020; 10(7):2325. https://doi.org/10.3390/app10072325

Chicago/Turabian StyleAbebe, Misganaw, Yongwoo Shin, Yoojeong Noh, Sangbong Lee, and Inwon Lee. 2020. "Machine Learning Approaches for Ship Speed Prediction towards Energy Efficient Shipping" Applied Sciences 10, no. 7: 2325. https://doi.org/10.3390/app10072325

APA StyleAbebe, M., Shin, Y., Noh, Y., Lee, S., & Lee, I. (2020). Machine Learning Approaches for Ship Speed Prediction towards Energy Efficient Shipping. Applied Sciences, 10(7), 2325. https://doi.org/10.3390/app10072325