1. Introduction

Synthesizers tend to focus on timbral aspects of sound, which comprise temporal and spectral features [1,2]. This is true even for modern synthesizers that imitate musical instruments by means of physical modeling [3,4]. Many samplers and electric pianos on the market use stereo recordings or pseudostereo techniques [5,6] to create some perceived spaciousness in terms of apparent source width or perceived source extent, so that the sound appears more natural and vivid. However, such techniques do not capture the sound radiation characteristics of musical instruments, which may be essential for an authentic experience in music listening and musician-instrument interaction.

Most sound field synthesis approaches synthesize virtual monopole sources or plane waves by means of loudspeaker arrays [7,8]. Methods to incorporate the sound radiation characteristics of musical instruments are based on sparse recordings of the sound radiation characteristics [5], like far field recordings from circular [9,10] or spherical [11] microphone arrays with 24 to 128 microphones. In these studies, a nearfield mono recording is extrapolated from a virtual source point. However, instead of a monopole point source, the measured radiation characteristic is included in the extrapolation function, yielding a so-called complex point source [9,12,13]. Complex point sources are a drastic simplification of the actual physics of musical instruments. However, complex point sources were demonstrated to create plausible physical and perceptual fields [5,14]. These sound natural in terms of source localization, perceived source extent and timbre, especially when listeners and/or sources move during the performance [5,15,16,17,18,19,20].

To date, sound field synthesis methods to reconstruct the sound radiation characteristics of musical instruments do not incorporate exact nearfield microphone array measurements of musical instruments, as described in [21,22,23,24,25]. This is most likely because the measurement setup and the digital signal processing for high-precision microphone array measurements are very complex on their own. The methods include optimization algorithms and solutions to inverse problems. The same is true for sound field synthesis approaches that incorporate complex source radiation patterns.

In this paper we introduce the theoretical concept of a radiation keyboard. We describe on a theoretical basis, and with some practical considerations, which sound field measurement and synthesis methods should be combined, and how to combine them utilizing their individual strengths. All presented results are preparatory for the realization. In contrast to conventional samplers, electric pianos, etc., a radiation keyboard recreates not only the temporal and spectral aspects of the original instrument, but also its spatial attributes. The final radiation keyboard is basically a MIDI keyboard whose keys trigger different driving signals of a loudspeaker array in real-time. When playing the radiation keyboard, the superposition of the propagated loudspeaker driving signals should create the same sound field as the original harpsichord would. Thus, the radiation keyboard will create a more realistic sound impression than conventional, stereophonic samplers. This is especially true for musical performance, where instrumentalists move their heads. The radiation keyboard can serve, for example,

as a means to produce authentic sounding replicas of historic musical instruments in the context of cultural heritage preservation [26,27],

as an authentic and immersive alternative to physical modeling synthesizers, conventional samplers, and electric pianos (or harpsichords, respectively) [3,4,28],

as a research tool for instrument building [29], interactive psychoacoustics [30], and embodied music interaction [31].

The remainder of the paper is organized as follows. Section 2 describes all the steps that are carried out to measure and synthesize the sound radiation characteristics of a harpsichord. In Section 2.1, we describe the setup to measure impulse responses of the harpsichord and the radiation keyboard loudspeakers. These are needed to calculate impulse responses that serve as raw loudspeaker driving signals. For three different frequency regions, f1 to f3, different methods are ideal to calculate them. In Section 2.2, Section 2.3 and Section 2.4, we describe how to derive the loudspeaker impulse responses for frequency regions f1, f2 and f3, respectively. In Section 3, we describe how to combine the three frequency regions, and how to create loudspeaker driving signals that synthesize the original harpsichord sound field during music performance. After a summary and conclusion in Section 4, we discuss potential applications of the radiation keyboard in the outlook, Section 5.

2. Method

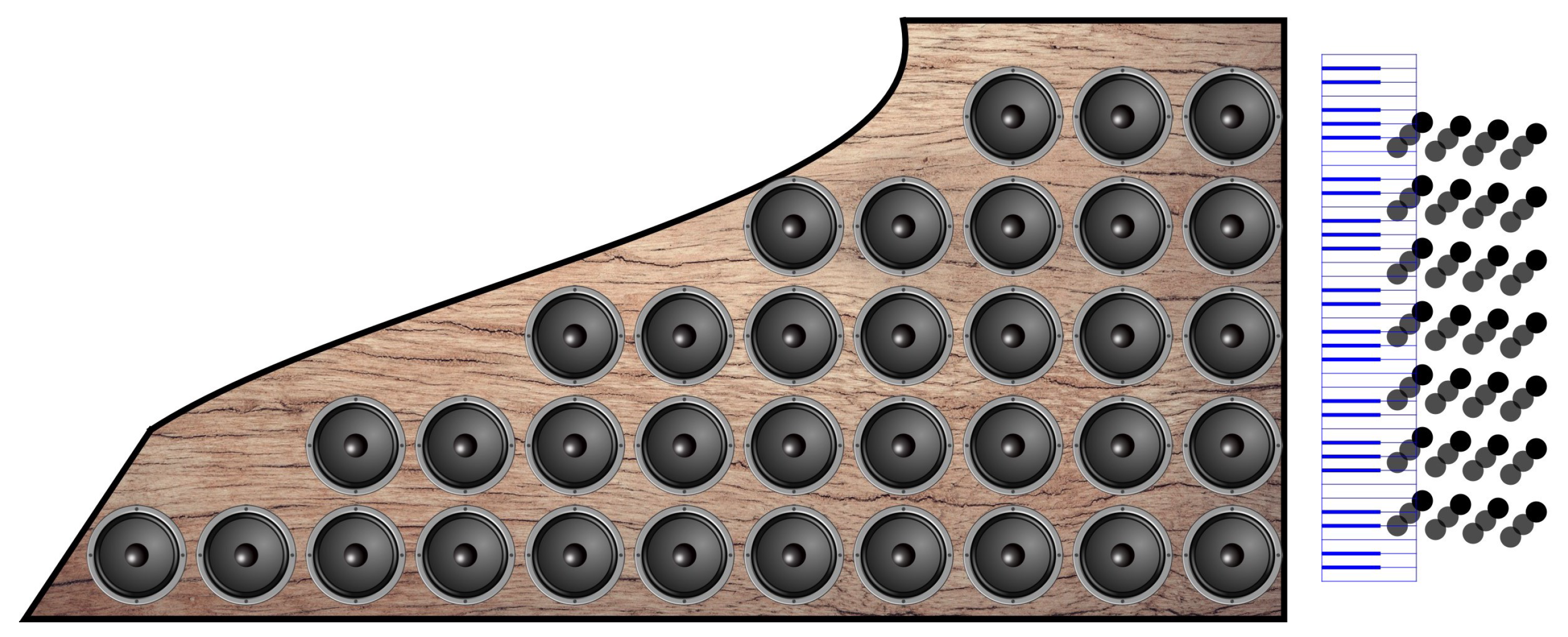

The concept and design of the proposed radiation keyboard are illustrated in Figure 1. The sound field radiated by a harpsichord is analyzed by technical means. Then, this sound field is synthesized by the radiation keyboard. The radiation keyboard consists of a loudspeaker array whose driving signals are triggered by a MIDI keyboard. The superposition of the propagated loudspeaker driving signals creates the same sound field as a real harpsichord. To date, no sound field synthesis method is able to radiate all frequencies in the exact same way the harpsichord does. Therefore, we combine different sound field analysis and synthesis methods. This combination offers an optimal compromise: low frequencies f1 < 1500 Hz are synthesized with high precision in the complete half-space above the sound board, mid frequencies 1500 Hz ≤ f2 ≤ 4000 Hz are synthesized with high precision within an extended listening region, and higher frequencies f3 > 4000 Hz are synthesized with high precision at discrete listening points within the listening region.

To implement a radiation keyboard, four main steps are carried out. Figure 2 shows a flow diagram of the main steps. Firstly, the sound radiation characteristics of the harpsichord are measured by means of microphone arrays. Secondly, an optimal constellation of loudspeaker placement and sound field sampling is derived from these measurements. Thirdly, the impulse responses for the loudspeaker array are calculated. These serve as raw loudspeaker driving signals. Finally, loudspeaker driving signals are calculated by a convolution of harpsichord source signals with the array impulse responses. These driving signals are triggered by a MIDI keyboard and play in real-time. The superposition of the propagated driving signals synthesizes the complex harpsichord sound field.

To synthesize the sound field, it is meaningful to divide the harpsichord signal into three frequency regions: frequency region f1 lies below 1.5 kHz, the Nyquist frequency of the proposed loudspeaker array. Frequency region f2 ranges from 1.5 kHz to 4 kHz, the Nyquist frequency of the microphone array. Frequency region f3 lies above these Nyquist frequencies. Different sound field measurement and synthesis methods are optimal for each region. They are treated separately in the following sections.

2.1. Setup

The setup for the impulse response measurements is illustrated in Figure 3 for a piano under construction. In the presented approach the piano is replaced by a harpsichord. An acoustic vibrator excites the instrument at the termination point between the bridge and a string for each key. Successive microphone array recordings are carried out in the near field to sample the sound field at points in a plane parallel to the sound board.

In addition to the near field recordings, the microphone array samples the listening region. The head of the instrumentalist will be located in this region during the performance. Its position is characterized by the ear canal distance to the keyboard, y, and the ear canal distance to the ground, z, for a grown person. The location is indicated by black dots in Figure 4.

A lightweight piezoelectric accelerometer measures the vertical polarization of the transverse string acceleration at the intersection point between string and bridge for each of the keys. This is not illustrated in Figure 3 but indicated as brown dots in Figure 5. The acceleration measured by the sensor is proportional to the force acting on the bridge. Details on the setup can be found in [32,33]. Alternatively, the string acceleration can be recorded optically, using a high speed camera and the setup described in [34,35], or it can be synthesized from a physical plectrum-string model [36]. The string recording represents the source signal that excites the harpsichord.

Measuring string vibrations separately from the impulse response measurements of the sound board adds a lot of flexibility to the radiation keyboard. We derive impulse responses for the loudspeaker array of the radiation keyboard, so that the array radiates all frequencies the same way the harpsichord would. Consequently, any arbitrary source signal can serve as an input for the radiation keyboard. In addition to the measured harpsichord string acceleration, the radiation keyboard can load any sound sample, such as alternative harpsichord tunings, alternative instrument recordings, or arbitrary test signals.

Figure 4 illustrates the radiation keyboard. A rigid board in the shape of the harpsichord sound board serves as a loudspeaker chassis. A regular grid of loudspeakers is arranged on this chassis. The radiated sound field created by each single loudspeaker is recorded in the listening region.

2.2. Low Frequency Region f1

The procedure to calculate the loudspeaker impulse responses for frequency region f1 is illustrated in Figure 5. Firstly, impulse responses of the harpsichord are recorded in the near field. Next, the recorded sound field is propagated back to points on the harpsichord sound board. Then, an optimal subset of these points is identified. This subset determines the loudspeaker distribution of the radiation keyboard.

2.2.1. Nearfield Recording

The setup for the near field recordings is illustrated in Figure 3. A microphone array with equidistant microphone spacing of 40 mm is installed at a distance of 5 cm parallel to the harpsichord soundboard surface. An index describes each microphone position above the harpsichord.

An acoustic vibrator excites the instrument at the intersection point of string and bridge, i.e., the string termination point [32]. Here, a second index describes the pressed key; it runs over all keys of the harpsichord, which spans 5 octaves.

To obtain impulse responses from the recorded data, the so-called exponential sine sweep (ESS) technique is utilized [37]. The method was originally proposed for measurements of weakly non-linear systems in room acoustics (e.g., loudspeaker excitation in a concert hall) but can also be adapted to structure-borne sound [38]. For the excitation, an exponential sine sweep is used. It is defined by its starting frequency, its maximum frequency of 24,000 Hz and its signal duration of 25 s. The vibrator excites the sound board with this signal.
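The sweep definition can be illustrated with a minimal sketch. It uses the common textbook form of the exponential sine sweep, which is assumed here to correspond to the excitation signal described above. The function names, the 20 Hz starting frequency and the 48 kHz sampling rate are illustrative assumptions; only the 24,000 Hz maximum frequency and the duration of 25 (taken to be seconds) come from the text.

```python
import numpy as np

def exponential_sine_sweep(f_start, f_end, duration, fs):
    """Return time axis and exponential sine sweep (hypothetical helper)."""
    n = int(round(duration * fs))
    t = np.arange(n) / fs
    log_ratio = np.log(f_end / f_start)
    # Phase whose derivative grows exponentially from 2*pi*f_start at t = 0
    # to 2*pi*f_end at t = duration.
    phase = (2.0 * np.pi * f_start * duration / log_ratio
             * (np.exp(t / duration * log_ratio) - 1.0))
    return t, np.sin(phase)

def instantaneous_frequency(t, f_start, f_end, duration):
    """Frequency of the sweep at time t, in Hz."""
    return f_start * np.exp(t / duration * np.log(f_end / f_start))

# Assumed values: F_START and FS are illustrative, not taken from the paper.
F_START, F_END, DURATION, FS = 20.0, 24000.0, 25.0, 48000
t, sweep = exponential_sine_sweep(F_START, F_END, DURATION, FS)
```

Because the instantaneous frequency grows exponentially with time, the sweep spends the same time in every octave, which is why it appears as a straight line on a logarithmic frequency axis.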

Figure 6 shows the spectrogram of an exemplary microphone recording. Since the frequency axis has logarithmic scaling, the sweep appears as a straight line. Due to non-linearity in the shaker excitation the recording shows harmonic distortions parallel to the sinusoidal sweep.

A deconvolution process eliminates these distortions. The deconvolution is realized by a linear convolution of the measured output with an inverse filter. This filter is the temporal reverse of the excitation sweep signal, multiplied by an amplitude modulation that compensates for the energy generated per frequency. The modulation reduces the level by 6 dB/octave, starting with 0 dB at the beginning of the time-reversed sweep and ending, at its end, with an attenuation of 6 dB for every octave covered by the sweep.

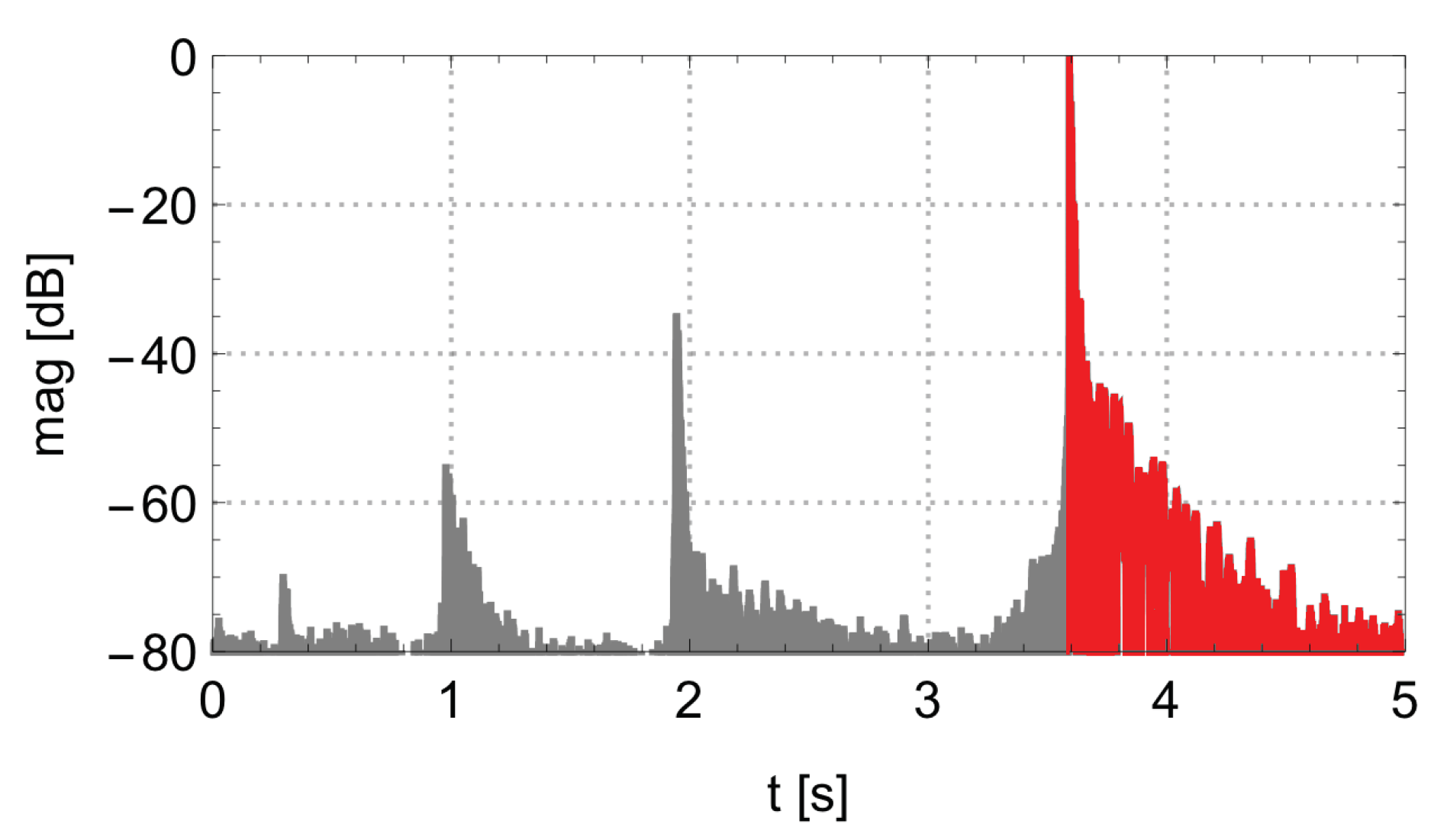

This linear deconvolution delays the recorded signal by an amount of time that varies with frequency. The delay is proportional to the logarithm of frequency. Therefore, the inverse filter stretches the signal with a constant slope, and compresses the linear part to a time delay corresponding to the filter length. The harmonic distortions have the same slope as the linear part and are, therefore, also packed to very precise times. If T is large enough, the linear part of an impulse response is temporally clearly separated from the non-linear pseudo IR.

This deconvolution process yields one signal per recording. This signal consists of the linear impulse response, preceded by the nonlinear distortion products, i.e., the pseudo-IRs. An example of the deconvolved signal and the linear impulse response is illustrated in Figure 7. The linear impulse response part can be obtained by a peak detection searching for the last peak in the time series. In the figure the final, linear impulse response is highlighted in red.
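The deconvolution and the search for the last peak can be sketched as follows. This is a minimal illustration under stated assumptions, not the measurement code: all function names are hypothetical, the inverse filter follows the usual ESS construction (time-reversed sweep with a 6 dB/octave amplitude decay), the test system is a purely linear toy system (a delayed, attenuated impulse), and the relative threshold of 0.5 for the peak search is an arbitrary choice.

```python
import numpy as np

def sweep(f_start, f_end, duration, fs):
    """Exponential sine sweep (same textbook form as assumed for the excitation)."""
    t = np.arange(int(round(duration * fs))) / fs
    log_ratio = np.log(f_end / f_start)
    return np.sin(2 * np.pi * f_start * duration / log_ratio
                  * (np.exp(t / duration * log_ratio) - 1))

def inverse_filter(x, f_start, f_end):
    """Time-reversed sweep whose envelope decays from 1 to f_start / f_end."""
    envelope = np.exp(-np.arange(len(x)) / len(x) * np.log(f_end / f_start))
    return x[::-1] * envelope

def linear_convolution(a, b):
    """Linear (not circular) convolution via zero-padded FFT."""
    n = len(a) + len(b) - 1
    nfft = 1 << (n - 1).bit_length()
    return np.fft.irfft(np.fft.rfft(a, nfft) * np.fft.rfft(b, nfft), nfft)[:n]

def last_peak(signal, rel_threshold=0.5):
    """Index of the last sample whose magnitude reaches the relative threshold."""
    mag = np.abs(signal)
    return int(np.flatnonzero(mag >= rel_threshold * mag.max())[-1])

# Toy system (assumption): an impulse delayed by 10 samples and scaled by 0.5.
fs, f0, f1 = 8000, 50.0, 3500.0
x = sweep(f0, f1, 1.0, fs)
h_true = np.zeros(64)
h_true[10] = 0.5
recording = linear_convolution(x, h_true)
deconvolved = linear_convolution(recording, inverse_filter(x, f0, f1))
# The linear impulse response starts len(x) - 1 samples into the result.
delay = last_peak(deconvolved) - (len(x) - 1)
```

In a real measurement the harmonic distortion products would appear as earlier, weaker peaks before the linear part, which is why the search looks for the last peak rather than the first.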

The driving signal and the convolution are reproducible. Repeated measurements are carried out to sample the radiated sound field. To cover each key and sample point, this yields 93,000 recordings.

2.2.2. Back Propagation

The harpsichord soundboard is a continuous radiator of sound, but can be simplified as a discrete distribution of radiating points, referred to as equivalent sources [23]. These equivalent sources sample the vibrating sound board. The validity of this simplification is restricted by the Nyquist-Shannon theorem, i.e., two equivalent sources per wavelength are necessary. The following steps are frequency-dependent. Therefore, we transfer functions of time into the frequency domain using the discrete Fourier transform. Terms in the frequency domain are indicated by capital letters and the frequency in the argument. For example, a capital letter represents the frequency spectrum of the time signal denoted by the corresponding lowercase letter.

The relationship between the radiating soundboard and the spectra of the aligned, linearized microphone recordings is described by the linear equation system in Equation (6). Its propagation matrix contains the free field Green's function G, a complex transfer function that describes the sound propagation of the equivalent sources as monopole radiators. It depends on the Euclidean distance between equivalent sources and microphones, the wave number k, and the imaginary unit i. Equation (6) is closely related to the Rayleigh integral, which is applied in acoustical holography and in sound field synthesis approaches like wave field synthesis and ambisonics [39]. One problem with Equation (6) is that the linear equation system is ill-posed: the radiated sound is recorded, but the source sound, which created the recorded sound pressure distribution, is sought. When solving the linear equation system, e.g., by means of Gaussian elimination or an inverse matrix of G [40], the resulting sound pressure levels tend to be huge due to small numerical errors and measurement and equipment noise. This can be explained by the propagation matrix being ill-conditioned when microphone positions are close to one another compared to the considered wavelength. In this case the condition number of the propagation matrix is high. A regularization method relaxes the matrix and yields lower amplitudes. An overview of regularization methods can be found in [21,23,40]. For musical instruments, the Minimum Energy Method (MEM) [23,41] is very powerful. The MEM is an iterative approach that gradually reshapes the radiation characteristic of the equivalent sources from a monopole to a ray, Equation (8). The shaping term is applied to the Green's function in Equation (6) to reshape the complex transfer function, Equation (9). In Equations (8) and (9), an angle term describes the angle between equivalent sources and loudspeakers as the inner product of both position vectors. It is given by the constellation of source and receiver positions and is 1 in the normal direction of the considered equivalent source position and 0 in the orthogonal direction. The ideal value of the shaping parameter minimizes the reconstruction energy E, which is proportional to the sum of the squared pressure amplitudes on the considered structure. In a first step, the linear equation system is solved for integer values of the shaping parameter and the reconstruction energy is plotted over the parameter. Around the local minimum, the linear equation system is solved again, this time in steps of 0.1. Typically, the iteration is truncated after the first decimal place. An example of the reconstruction energy over the shaping parameter is illustrated in Figure 8 together with the condition number of the reshaped transfer matrix in Equation (9). Near the optimal parameter value, both the signal energy and the matrix condition number tend to be low.
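The two-stage parameter search can be sketched in a few lines. The sketch rests on several assumptions that are not confirmed by the text: the shaping term is taken as 1 + alpha (1 - beta) and divides the monopole Green's function, the sign convention exp(-ikr) is used, the integer search range is 0 to 10, and the geometry and pressure data are random toy values. The names `mem_solve`, `alpha` and `beta` are hypothetical.

```python
import numpy as np

def mem_solve(p, src_pos, mic_pos, normals, k, alphas):
    """Solve the reshaped system for every alpha; return the minimum-energy result."""
    diff = mic_pos[:, None, :] - src_pos[None, :, :]        # (mics, sources, 3)
    r = np.linalg.norm(diff, axis=2)                         # Euclidean distances
    green = np.exp(-1j * k * r) / r                          # monopole radiation
    # Cosine between source normal and direction to microphone:
    # 1 in normal direction, 0 in the orthogonal direction.
    beta = np.einsum("msd,sd->ms", diff, normals) / r
    best = None
    for alpha in alphas:
        psi = green / (1.0 + alpha * (1.0 - beta))           # assumed shaping term
        q, *_ = np.linalg.lstsq(psi, p, rcond=None)
        energy = float(np.sum(np.abs(q) ** 2))               # reconstruction energy
        if best is None or energy < best[1]:
            best = (float(alpha), energy, q)
    return best

# Toy data (assumption): 4 x 4 sources, microphones 5 cm above them, 500 Hz.
rng = np.random.default_rng(0)
gx, gy = np.meshgrid(np.arange(4) * 0.04, np.arange(4) * 0.04)
src = np.column_stack([gx.ravel(), gy.ravel(), np.zeros(16)])
mic = src + np.array([0.0, 0.0, 0.05])
normals = np.tile([0.0, 0.0, 1.0], (16, 1))
k = 2.0 * np.pi * 500.0 / 343.0
p = rng.standard_normal(16) + 1j * rng.standard_normal(16)

# Stage 1: integer values. Stage 2: steps of 0.1 around the coarse minimum.
coarse_alpha, coarse_energy, _ = mem_solve(p, src, mic, normals, k, np.arange(0, 11))
fine_grid = coarse_alpha + np.arange(-10, 11) * 0.1
fine_grid = fine_grid[fine_grid >= 0.0]
alpha, energy, q = mem_solve(p, src, mic, normals, k, fine_grid)
```

Since the fine grid contains the coarse optimum itself, the second stage can only keep or lower the reconstruction energy found in the first stage.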

Alternatively, the parameter can be tuned manually to find the best reconstruction visually; the correct solution tends to create the sharpest edges at the instrument boundaries, with pressure amplitudes near 0. This is a typical result of the truncation effect: the finite extent of the source causes an acoustic short-circuit. At the boundary, even strong elongations of the sound board create hardly any pressure fluctuations, since air flows around the sound board. The effect can be observed in Figure 9.

The result of the MEM is one source term for each equivalent source on the harpsichord sound board. Below the Nyquist frequency, these 1500 equivalent sources approximate the sound field of a real harpsichord. This is true for the complete half-space above the sound board.

The radiation of numerous musical instruments has been measured using the described microphone array setup and the MEM, such as grand pianos [35,42], vihuelas [23,43], guitars [43,44], drums [23,41,45], flutes [41,45], and the New Ireland kulepa-ganeg [46]. The method is so robust that the geometry of the instruments becomes visible, as depicted in Figure 9.

2.2.3. Optimal Loudspeaker Placement

The back-propagation method described in Section 2.2.2 yields one source term for each combination of equivalent source and key. Together, the equivalent sources sample the harpsichord sound field in the region of the sound board. Forward propagation of the source terms approximates the harpsichord sound field in the whole half-space above the sound board. This has been demonstrated, e.g., in [47]. Replacing each equivalent source by one loudspeaker is referred to as an acoustic curtain, which is the origin of wave field synthesis [39,48]. In physical terms, this situation is a spatially truncated discrete Rayleigh integral, which is the mathematical core of wave field synthesis [7,8,39,49]. A prerequisite is that all equivalent sources are homogeneous radiators in the half-space above the soundboard. This is the case for the proposed radiation keyboard. For low frequencies, loudspeakers without a cabinet approximate dipoles fairly well. Naturally, single loudspeakers with a diameter in the order of 10 cm are inefficient radiators of low frequencies [50]. However, this situation improves when a dense array of loudspeakers is moving in phase. This typically happens in the given scenario: when excited with low frequencies, the sound board vibrates as a whole [51], so the loudspeaker signals for the wave field synthesis will be in phase. While truncation creates artifacts in most wave field synthesis setups, referred to as truncation error [8,39,48], no artifacts are expected in the described setup due to natural tapering: at the boundaries of the loudspeaker array, an acoustic short-circuit will occur. This acoustic short-circuit also occurs in real musical instruments, as demonstrated in Figure 9, because compressed air in the front flows around the sound board towards the rear, instead of propagating as a wave. The acoustic short-circuit of the outermost loudspeakers acts like a natural tapering window. In wave field synthesis installations, in contrast, artificial tapering is applied to compensate the truncation error.

The MEM describes the sound board vibration by 1500 equivalent source terms. Replacing each equivalent source by an individual loudspeaker is not ideal, because the spacing is too dense for broadband loudspeakers, and it is challenging to synchronize 1500 channels for real-time audio processing. Audio interfaces including D/A converters for 128 synchronized channels in audio-CD quality are commercially available, using, for example, the MADI or Dante protocol. In wave field synthesis systems, regular loudspeaker distributions have been reported to deliver the best synthesis results [52]. Covering the complete soundboard of a harpsichord with a regular grid consisting of 128 grid points yields about one loudspeaker every 12 cm. This is a typical loudspeaker density in wave field synthesis systems and yields a Nyquist frequency of about 1.5 kHz for waves in air [7].
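The relation between grid spacing and Nyquist frequency can be checked with a short calculation. The sketch assumes a speed of sound of 343 m/s and the criterion of two sample points per wavelength stated earlier; the function name is hypothetical.

```python
SPEED_OF_SOUND = 343.0  # m/s in air at roughly 20 degrees Celsius (assumed)

def spatial_nyquist_frequency(spacing_m, c=SPEED_OF_SOUND):
    """Highest frequency that still has two sample points per wavelength."""
    return c / (2.0 * spacing_m)

loudspeaker_nyquist = spatial_nyquist_frequency(0.12)  # 12 cm loudspeaker spacing
microphone_nyquist = spatial_nyquist_frequency(0.04)   # 4 cm microphone spacing
print(f"{loudspeaker_nyquist:.0f} Hz, {microphone_nyquist:.0f} Hz")
```

Under these assumptions the 12 cm loudspeaker grid gives a limit of roughly 1.4 kHz and the 4 cm microphone grid roughly 4.3 kHz, in line with the rounded region boundaries of about 1.5 kHz and 4 kHz used in the text.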

Three exemplary loudspeaker arrays are illustrated in Figure 10.

Every third equivalent source can be replaced by a loudspeaker with little effect on the sound field synthesis precision below 1.5 kHz. This yields a set of possible loudspeaker grid positions.

At the ideal location of the loudspeaker grid, all loudspeakers lie near antinodes of all frequencies of all keys. In contrast to regions near the nodes, sound field calculations near the antinodes do not suffer from equipment noise, numerical noise, and small microphone misplacements. Instead, all loudspeakers contribute efficiently to the wave field synthesis. Therefore, the optimal grid location has the largest signal energy, Equation (13). Equation (13) is solved for each of the possible loudspeaker grid positions. The grid with the maximum signal energy is replaced by loudspeakers as indicated in Figure 5. This ideal grid is the optimal loudspeaker distribution.
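The grid selection can be sketched as an exhaustive search over the possible grid offsets. The sketch makes simplifying assumptions: the equivalent sources lie on a rectangular grid (the real sound board is not rectangular), "every third source" is applied in both grid directions, and the signal energy is the sum of squared magnitudes over all keys and frequencies. The function name and array layout are hypothetical.

```python
import numpy as np

def best_grid_offset(source_terms, stride=3):
    """Pick the sub-grid offset with maximum signal energy.

    source_terms: complex array of shape (keys, freqs, rows, cols) holding
    the equivalent source terms on an assumed rectangular grid.
    """
    energy = {}
    for dy in range(stride):
        for dx in range(stride):
            sub = source_terms[:, :, dy::stride, dx::stride]
            energy[(dy, dx)] = float(np.sum(np.abs(sub) ** 2))
    best = max(energy, key=energy.get)
    return best, energy

# Toy data (assumption): random source terms, boosted on the sub-grid (1, 2).
rng = np.random.default_rng(0)
q = rng.standard_normal((2, 4, 9, 12)) + 1j * rng.standard_normal((2, 4, 9, 12))
q[:, :, 1::3, 2::3] *= 5.0
offset, energies = best_grid_offset(q)
```

With a stride of 3 in two directions the search compares nine candidate grids. If the grid dimensions are not multiples of the stride, the candidates contain different numbers of points, so normalizing by the number of points may be a fairer comparison.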

The Nyquist frequency of the loudspeaker array lies around 1.5 kHz. For reproduction of higher frequencies, other methods are necessary, as described in the following sections.

2.3. Higher Frequency Region f2

The procedure to calculate the loudspeaker impulse responses for frequency region f2 is illustrated in Figure 11. First, impulse responses of the harpsichord are recorded in the listening region. Next, impulse responses of the final loudspeaker grid are recorded in the listening region. These are transformed such that the loudspeaker array creates the harpsichord sound field in the listening region. Then, the optimal position of listening points is determined. These listening points are a subset of the microphone locations that sample the listening region.

2.3.1. Far Field Recording

In addition to the near field recordings, the radiated sound is also recorded with a microphone array that samples the region in which the instrumentalist’s head may be located during playing. We refer to this region as the listening region and to the discrete sample points as listening points. The distance between equivalent sources on the sound board and the listening points lies in the order of decimeters to meters. For frequencies above 1.5 kHz, this means that the listening region lies in the far field.

In the near field measurement, Section 2.2.1, one microphone array samples a planar region parallel to the sound board. In the far field measurement the microphone array samples a rectangular cuboid. The setup for the far field recordings is illustrated in Figure 4 and Figure 11. As described in Section 2.1 and Section 2.2.1, the sound board is excited with an exponential sweep. An array of microphones samples the listening region. The microphones are arranged as a regular grid with a spacing of 4 cm. The array samples the complete sound field in the listening region for all wavelengths above twice the microphone spacing, i.e., frequencies below 4 kHz. Repeated measurements are carried out with a slightly shifted microphone array. Equations (1)–(5) describe how to excite the sound board and derive impulse responses for the 87,296 different source-receiver constellations.

These far field impulse responses provide a sample of the desired sound field in the region in which the instrumentalist is moving her head. In the frequency domain, it can be described as the relationship between source signal, complex transfer function and microphone array recordings, Equation (14), where the recording of the sweep is aligned to obtain the impulse response, Equation (15). Both the source signal and the complex transfer function in Equation (14) are known. So instead of being measured with a microphone array, the desired sound field can be calculated by this forward-propagation formula. In [47] it was demonstrated that the forward-propagation equals the measurements.

2.3.2. Radiation Method

To synthesize the desired sound field with the given loudspeaker array, loudspeaker signals need to be calculated. This is done in two steps. First, the swept sine, Equation (1), is played through each individual loudspeaker and recorded in the listening region. Here, Equations (16) and (17) describe the relationship between the source signal, the raw microphone recordings and the final impulse response. The unknown propagation term is the ratio of the source signal and the recordings. In contrast to a real harpsichord source signal, the swept sine is known for all audible frequencies. Thus, the complex transfer function between each loudspeaker of the array and each listening point is determined by simply recording the propagated swept sine, Equation (16), of each loudspeaker at each listening point, followed by the deconvolution, Equation (17).

This complex transfer function is neither the idealized monopole source radiation G, nor the energy-optimized radiation function of the MEM. Instead, it is the actual transfer function as measured physically. It includes the frequency and phase response of the loudspeakers, the amplitude decay and the phase shift from each loudspeaker to each receiver. It can thus be considered the true transfer function. It also includes the sound radiation characteristics of the loudspeakers, which tend to deviate from both idealized functions. Solving the linear equation system, Equation (18), for all microphone array positions yields the impulse responses for the loudspeakers. This procedure is referred to as the radiation method, as it synthesizes a desired sound field by including the measured sound radiation characteristics of the loudspeakers. Accounting for the actual transfer function from each loudspeaker to each listening point has the advantage that the rows in the linear equation system described by Equation (18) tend to differ more strongly from one another than they would for idealized monopole radiators. This has been demonstrated in [20,49]. The radiation method is a robust regularization method that has been demonstrated to relax the linear equation system [9,20,39,49]. It leads to (a) low amplitudes and (b) solutions that vary only slightly when the source-receiver constellation or the source signal is varied slightly. The method synthesizes a desired sound field as long as (a) the sound field lies in the far field and (b) at least two listening points per wavelength exist. The method is only ideal for frequency region f2, as it does not account for nearfield effects and spatial aliasing [9,20,49].
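The second step, solving the linear equation system for the loudspeaker spectra, can be sketched as one least-squares problem per frequency bin. This is an illustration under assumptions, not the authors' implementation: the array layout, the names `H`, `P` and `D`, and the use of a plain least-squares solver are choices made for the sketch, and the transfer functions are random toy data instead of measurements.

```python
import numpy as np

def loudspeaker_spectra(H, P):
    """Solve H[f] @ D[f] = P[f] for every frequency bin f.

    H: (freqs, points, speakers) measured loudspeaker transfer functions.
    P: (freqs, points) desired sound pressure spectra at the listening points.
    Returns D: (freqs, speakers) loudspeaker spectra.
    """
    D = np.empty((H.shape[0], H.shape[2]), dtype=complex)
    for f in range(H.shape[0]):
        D[f], *_ = np.linalg.lstsq(H[f], P[f], rcond=None)
    return D

# Toy data (assumption): 5 frequency bins, 10 listening points, 6 loudspeakers.
rng = np.random.default_rng(1)
H = rng.standard_normal((5, 10, 6)) + 1j * rng.standard_normal((5, 10, 6))
D_true = rng.standard_normal((5, 6)) + 1j * rng.standard_normal((5, 6))
P = np.einsum("fps,fs->fp", H, D_true)   # desired field produced by D_true
D = loudspeaker_spectra(H, P)
```

An inverse Fourier transform of each column of `D` would then give the loudspeaker impulse responses. In the toy example the desired field is exactly reproducible, so the solver recovers `D_true`; with measured data the solution is only a least-squares fit.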

2.3.3. Optimal Listening Points

So far, Equation (18) delivers one set of loudspeaker impulse responses per microphone array position for each key. The solutions only vary slightly, due to microphone misplacements, equipment and background noise, numerical errors and the spatial variations of the loudspeaker sound radiation characteristics. The solutions are valid inside the listening region. Outside the listening region synthesis errors occur, because loudspeaker signals interfere in an arbitrary manner. Outside an anechoic chamber, this will lead to unnatural reflections. Consequently, the ideal impulse response minimizes synthesis errors outside the listening region. This is achieved by selecting the impulse responses with the lowest signal energy, Equations (19) and (20). The signal energy is summed over all loudspeaker impulse responses. In Equation (20) the signal energy of each key is calculated for each of the microphone array positions. As defined in Equation (19), the final impulse response for each specific key is the one for which the microphone array position v creates minimal signal energy. This solution exhibits the most constructive interference inside the listening region. The solution is valid for the considered frequency region f2, i.e., between 1.5 and 4 kHz. For higher frequencies, a third method is ideal, as discussed in the following section.
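The selection of the minimum-energy solution can be sketched as follows, assuming that the signal energy of a solution is the sum of squared samples over all loudspeaker impulse responses. The function name and the array layout are hypothetical, and the impulse responses are toy data.

```python
import numpy as np

def select_min_energy(irs):
    """Choose the array position v whose impulse response set has least energy.

    irs: real array (positions, speakers, samples) for one key.
    """
    energy = np.sum(irs ** 2, axis=(1, 2))   # one energy value per position v
    v = int(np.argmin(energy))
    return v, irs[v], energy

# Toy data (assumption): the same impulse response set at three different gains.
rng = np.random.default_rng(2)
base = rng.standard_normal((4, 256))
irs = np.stack([1.0 * base, 0.5 * base, 2.0 * base])
v, chosen, energy = select_min_energy(irs)
```

The same routine would be run once per key, so that each key may end up with a different optimal microphone array position.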

2.4. Highest Frequency Region f3

In principle, the method to calculate the impulse response for frequency region f3 equals the method described in Section 2.3. For frequencies over 4 kHz the radiation method only synthesizes the desired sound field at the discrete listening points, but not in between [49]. For human listeners, neither the exact frequency nor the phase is represented in the auditory pathway ([39] Chapter 3). The amplitude of such high frequencies mainly contributes to the perception of brightness and the phase contributes to the impulsiveness of attack transients. Both are important aspects of timbre perception ([53] Chapter 11), ([39] Chapter 2).

Therefore, it is adequate to approximate the desired amplitudes and phases at discrete listening points by solving Equation (18). The difference between frequency regions f2 and f3 is the selection of optimal listening points. Instead of choosing the impulse response with minimum signal energy, Equation (20), the ideal impulse response for f3 is the shortest, because it exhibits the highest impulse fidelity. When convolved with a short impulse response, the frequencies of source signals stay in phase. In contrast, long impulse responses indicate out-of-phase relationships. Phase is mostly audible during transients. Consequently, the shortest impulse response is ideal, because it maintains the characteristic, steep attack transient of harpsichord notes.

Impulse responses for one loudspeaker and three different microphone array locations v are illustrated in Figure 12. The shortest impulse response can be identified visually.
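Instead of a visual inspection, the shortest impulse response could also be chosen numerically. The text does not define how length is measured, so the sketch below introduces its own criterion as an assumption: the time span that contains the central 99% of the signal energy. The function names and the decaying test signals are hypothetical.

```python
import numpy as np

def effective_length(ir, fraction=0.99):
    """Number of samples spanning the central `fraction` of the signal energy."""
    cumulative = np.cumsum(ir ** 2)
    cumulative = cumulative / cumulative[-1]
    tail = (1.0 - fraction) / 2.0
    start = np.searchsorted(cumulative, tail)
    stop = np.searchsorted(cumulative, 1.0 - tail)
    return int(stop - start)

def shortest_impulse_response(irs):
    """irs: (positions, samples) for one loudspeaker; returns index and lengths."""
    lengths = [effective_length(ir) for ir in irs]
    return int(np.argmin(lengths)), lengths

# Toy data (assumption): exponentially decaying cosines with three decay times.
n = np.arange(2048)
irs = np.stack([np.exp(-n / tau) * np.cos(0.3 * n) for tau in (320.0, 20.0, 80.0)])
shortest, lengths = shortest_impulse_response(irs)
```

Other length measures, such as a decay time below a level threshold, would serve the same purpose; the energy-based span is merely one simple choice that is insensitive to trailing zeros.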

3. Calculation of Loudspeaker Driving Signals

Each method described above results in one truncated impulse response per loudspeaker and pressed key. Here, each impulse response is truncated in frequency, i.e., band-limited to its frequency region. To combine them, the three truncated impulse responses per loudspeaker and pressed key are simply added, Equation (21). The result of Equation (21) is a broadband impulse response that covers the complete audible frequency range. An example is illustrated in Figure 13.
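Why a plain sum suffices can be illustrated with ideal, non-overlapping band limits. The sketch assumes brickwall masks in the frequency domain, band edges at 1.5 kHz and 4 kHz, and a sampling rate of 48 kHz; the function name is hypothetical. For the demonstration, one reference impulse response is split into three bands, whereas in the described procedure each band would come from a different method.

```python
import numpy as np

def band_limit(ir, fs, f_lo, f_hi):
    """Keep only the frequency bins with f_lo <= f < f_hi (ideal brickwall)."""
    spectrum = np.fft.rfft(ir)
    freqs = np.fft.rfftfreq(len(ir), d=1.0 / fs)
    mask = (freqs >= f_lo) & (freqs < f_hi)
    return np.fft.irfft(spectrum * mask, n=len(ir))

# Toy data (assumption): a decaying noise burst as reference impulse response.
fs = 48000
rng = np.random.default_rng(3)
reference = rng.standard_normal(1024) * np.exp(-np.arange(1024) / 100.0)

h_f1 = band_limit(reference, fs, 0.0, 1500.0)             # region f1
h_f2 = band_limit(reference, fs, 1500.0, 4000.0)          # region f2
h_f3 = band_limit(reference, fs, 4000.0, fs / 2 + 1.0)    # region f3, incl. Nyquist bin
broadband = h_f1 + h_f2 + h_f3
```

Because the three masks partition the spectrum without gaps or overlap, the sum reproduces the reference. Practical crossover filters with finite slopes would need complementary magnitude responses to achieve the same.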

Summing the truncated impulse responses yields one broadband impulse response for each loudspeaker and pressed key. These impulse responses describe how any frequency is radiated by the loudspeaker array to approximate the sound field that the harpsichord would create if excited by a broadband impulse.

Naturally, a played harpsichord is not excited by an impulse. Instead, pressing a key creates a driving signal that travels through the string and transfers to the soundboard via the bridge. In Section 2.1 we provide literature that suggests three different ways to record or model this driving signal. In order to finally use the radiation keyboard as a harpsichord sampler, the loudspeaker driving signals are calculated by a convolution of the impulse response with the source signal.

This yields one sound file per loudspeaker and key. These are imported to multiple instances of a software sampler in a digital audio workstation (DAW). Typically, one instance of a software sampler can address between 16 and 64 output channels. Consequently, for 128 loudspeakers, between 2 and 8 sampler instances need to be initialized. Technologies like VST and DirectX are able to handle this parallelism, and several multi-channel DAWs (like Steinberg Cubase, Ableton Live and Magix Samplitude) can handle the high number of output channels. Finally, the original keyboard of the harpsichord is replaced by a MIDI keyboard, whose note-on command triggers the 128 samples for the corresponding note.
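The offline calculation of the driving signals for one key, and the number of sampler instances, can be sketched as follows. The function names and the array layout are hypothetical, the FFT-based convolution is one possible implementation, and the source signal and impulse responses are toy data.

```python
import math
import numpy as np

def driving_signals(source, irs):
    """Convolve one source signal with the impulse response of every loudspeaker.

    source: (samples,) source signal of one key.
    irs: (speakers, ir_length) broadband impulse responses for that key.
    Returns an array of shape (speakers, samples + ir_length - 1).
    """
    n = len(source) + irs.shape[1] - 1
    nfft = 1 << (n - 1).bit_length()          # next power of two, avoids wrap-around
    source_spectrum = np.fft.rfft(source, nfft)
    ir_spectra = np.fft.rfft(irs, nfft, axis=1)
    return np.fft.irfft(ir_spectra * source_spectrum, nfft, axis=1)[:, :n]

def sampler_instances(n_speakers, channels_per_instance):
    """Number of sampler instances needed to address all output channels."""
    return math.ceil(n_speakers / channels_per_instance)

# Toy data (assumption): 4 loudspeakers, short random signals.
rng = np.random.default_rng(4)
source = rng.standard_normal(200)
irs = rng.standard_normal((4, 50))
signals = driving_signals(source, irs)
instances_large = sampler_instances(128, 64)
instances_small = sampler_instances(128, 16)
```

Each row of `signals` would be exported as one sound file, so that a note-on command only has to start playback and no convolution is required in real time.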

As the effect of key velocity on the created level and timbre is negligible, the harpsichord is the ideal instrument to start with; only one sample per note and loudspeaker is necessary. For more expressive instruments, such as the piano, the attack velocity affects the produced level and timbre. Here, several samples per note, or one attack-velocity controlled filter, would have to be applied. This implies the need for much higher data rates and specific signal processing, which is out of the scope of this paper.

4. Conclusions

In this paper, the theoretical foundation of a radiation keyboard has been presented. It includes the complete chain from recording the source sound and the radiated sound of a harpsichord to synthesizing its temporal, spectral and spatial sound within an extended listening region, controlled in real time. To achieve this, we chose the optimal method for each frequency region and inverse problem, and described a way to combine previously isolated and fragmented sound field analysis and synthesis approaches.

For the low-frequency region f1, a combination of nearfield recordings, the minimum energy method, an energy-efficient loudspeaker grid selection, and wave field synthesis is ideal. This combination synthesizes the desired sound field in the complete half-space above the sound board.

For the higher-frequency region f2, a combination of far field recordings, the radiation method, and an energy-efficient listening point selection is ideal. This combination synthesizes the desired sound field in the listening region with high precision.

For the highest-frequency region f3, far field recordings and an in-phase impulse response creation are ideal. This combination approximates the correct signal amplitudes in the listening region, while supporting the transient behavior of the source sound. The initial outcome of such a radiation keyboard is a sampler that mimics not only the temporal and spectral aspects of the original musical instrument, but also its spatial aspects.

5. Outlook

This paper presented the theoretical framework of our current research project. The effort required to implement a radiation keyboard is very high, and a number of sound field measurement and synthesis methods need to be combined, leveraging their individual strengths. We have not implemented the radiation keyboard yet; this paper rather describes the necessary means to realize it.

The implemented radiation keyboard is supposed to serve as a research tool to carry out interactive listening experiments that are more ecological than passive listening tests with artificial sounds in a laboratory environment. Note that the radiation keyboard is not restricted to harpsichord sounds. In principle, any arbitrary sound file can act as source signal and be radiated like a harpsichord. This enables us to manipulate the temporal and spectral aspects of the sound, while keeping the sound radiation constant. Loading different source sounds while keeping the sound radiation fixed could reveal which temporal and spectral parameters affect the perception of source extent and naturalness in the direct sound of musical instruments. The radiation keyboard could answer the question of whether a saxophone sound with the radiation characteristics of a harpsichord sounds larger than a real saxophone. Using the radiation keyboard, we can investigate apparent source width and immersion of direct sound both in the presence and absence of room acoustics. To date, physical predictors of apparent source width originate in room acoustical investigations [15,16,39]. Findings disagree on which frequency region is of major importance for this listening impression. Different predictors and the discourse are examined in [5].

The strength of a real-time capable radiation keyboard is the interactivity: musicians can actively play the instrument instead of carrying out passive listening tests. Interactivity creates a dynamic sound and allows for a natural interaction in an authentic musical performance scenario. This is a necessity in the field of performance, gesture, and human-machine-interaction studies and a prerequisite for ecological psychoacoustics [30,31].

Author Contributions

Conceptualization, T.Z.; methodology, T.Z. and N.P.; software, T.Z. and N.P.; validation, T.Z. and N.P.; formal analysis, T.Z. and N.P.; investigation, T.Z. and N.P.; resources, T.Z. and N.P.; data curation, T.Z. and N.P.; writing—original draft preparation, T.Z.; writing—review and editing, T.Z. and N.P.; visualization, T.Z. and N.P.; project administration, T.Z. All authors have read and agreed to the published version of the manuscript.

Funding

This research received no external funding.

Acknowledgments

We acknowledge the detailed and critical, but also amazingly constructive feedback from the two anonymous reviewers. We thank the Claussen Simon Foundation for their financial support during this project.

Conflicts of Interest

The authors declare no conflict of interest.

References

1. Beauchamp, J.W. Synthesis by spectral amplitude and “brightness” matching of analyzed musical instrument tones. J. Audio Eng. Soc. 1982, 30, 396–406.

2. Chowning, J.; Bristow, D. FM Theory & Applications. By Musicians for Musicians; Yamaha Music Foundation: Tokyo, Japan, 1986.

3. Bilbao, S.; Torin, A. Numerical Modeling and Sound Synthesis for Articulated String/Fretboard Interactions. J. Audio Eng. Soc. 2015, 63, 336–347.

4. Pfeifle, F.; Bader, R. Real-Time Finite-Difference Method Physical Modeling of Musical Instruments Using Field-Programmable Gate Array Hardware. J. Audio Eng. Soc. 2016, 63, 1001–1016.

5. Ziemer, T. Source Width in Music Production. Methods in Stereo, Ambisonics, and Wave Field Synthesis. In Studies in Musical Acoustics and Psychoacoustics; Schneider, A., Ed.; Springer: Cham, Switzerland, 2017; Volume 4, Current Research in Systematic Musicology, Chapter 10; pp. 299–340.

6. Faller, C. Pseudostereophony Revisited. In Proceedings of the 118th Audio Engineering Society Convention, Barcelona, Spain, 28–31 May 2005.

7. Spors, S.; Rabenstein, R.; Ahrens, J. The Theory of Wave Field Synthesis Revisited. In Proceedings of the 124th Audio Engineering Society Convention, Amsterdam, The Netherlands, 17–20 May 2008.

8. Ziemer, T. Wave Field Synthesis. In Springer Handbook of Systematic Musicology; Bader, R., Ed.; Springer: Berlin/Heidelberg, Germany, 2017; Chapter 18; pp. 175–193.

9. Ziemer, T.; Bader, R. Implementing the Radiation Characteristics of Musical Instruments in a Psychoacoustic Sound Field Synthesis System. J. Audio Eng. Soc. 2015, 63, 1094.

10. Albrecht, B.; de Vries, D.; Jacques, R.; Melchior, F. An Approach for Multichannel Recording and Reproduction of Sound Source Directivity. In Proceedings of the 119th Audio Engineering Society Convention, New York, NY, USA, 7–10 October 2005.

11. Zotter, F. Analysis and Synthesis of Sound-Radiation with Spherical Arrays. Ph.D. Thesis, University of Music and Performing Arts, Graz, Austria, 2009.

12. Ziemer, T.; Bader, R. Complex point source model to calculate the sound field radiated from musical instruments. J. Acoust. Soc. Am. 2015, 138, 1936.

13. Ziemer, T.; Bader, R. Complex point source model to calculate the sound field radiated from musical instruments. Proc. Mtgs. Acoust. 2015, 25, 035001.

14. Ziemer, T. Sound Radiation Characteristics of a Shakuhachi with different Playing Techniques. In Proceedings of the International Symposium on Musical Acoustics (ISMA-14), Le Mans, France, 7–12 July 2014; pp. 549–555.

15. Lindemann, W. Extension of a binaural cross-correlation model by contralateral inhibition. II. The law of the first wave front. J. Acoust. Soc. Am. 1986, 80, 1623–1630.

16. Beranek, L.L. Concert Halls and Opera Houses: Music, Acoustics, and Architecture, 2nd ed.; Springer: New York, NY, USA, 2004.

17. Böhlke, L.; Ziemer, T. Perception of a virtual violin radiation in a wave field synthesis system. J. Acoust. Soc. Am. 2017, 141, 3875.

18. Böhlke, L.; Ziemer, T. Perceptual evaluation of violin radiation characteristics in a wave field synthesis system. Proc. Meet. Acoust. 2017, 30, 035001.

19. Corteel, E. Synthesis of Directional Sources Using Wave Field Synthesis, Possibilities, and Limitations. EURASIP J. Adv. Signal Process. 2007, 2007, 90509.

20. Ziemer, T.; Bader, R. Psychoacoustic Sound Field Synthesis for Musical Instrument Radiation Characteristics. J. Audio Eng. Soc. 2017, 65, 482–496.

21. Bai, M.R.; Ih, J.G.; Benesty, J. Acoustic Array Systems. Theory, Implementation, and Application; Wiley & Sons: Singapore, 2013.

22. Williams, E.G. Fourier Acoustics. Sound Radiation and Nearfield Acoustical Holography; Academic Press: Cambridge, MA, USA, 1999.

23. Bader, R. Microphone Array. In Springer Handbook of Acoustics, 2nd ed.; Rossing, T.D., Ed.; Springer: Berlin/Heidelberg, Germany, 2014; pp. 1179–1207.

24. Kim, Y.H. Acoustic Holography. In Springer Handbook of Acoustics; Rossing, T.D., Ed.; Springer: New York, NY, USA, 2007; Chapter 26; pp. 1077–1099.

25. Hayek, S.I. Nearfield Acoustical Holography. In Handbook of Signal Processing in Acoustics; Havelock, D., Kuwano, S., Vorländer, M., Eds.; Springer: New York, NY, USA, 2008; Chapter 59; pp. 1129–1139.

26. Plath, N. 3D Imaging of Musical Instruments: Methods and Applications. In Computational Phonogram Archiving; Bader, R., Ed.; Springer International Publishing: Cham, Switzerland, 2019; Volume 5, Current Research in Systematic Musicology; pp. 321–334.

27. Pillow, B. Touching the Untouchable: Facilitating Interpretation through Musical Instrument Virtualization. In Playing and Operating: Functionality in Museum Objects and Instruments; Cité de la Musique—Philharmonie de Paris: Paris, France, 2020; p. 8.

28. Russ, M. Sound Synthesis and Sampling, 3rd ed.; Focal Press: Burlington, MA, USA, 2009.

29. Bader, R.; Richter, J.; Münster, M.; Pfeifle, F. Digital guitar workshop—A physical modeling software for instrument builders. In Proceedings of the Third Vienna Talk on Music Acoustics; Mayer, A., Chatziioannou, V., Goebl, W., Eds.; University of Music and Performing Arts Vienna: Vienna, Austria, 2015; p. 266.

30. Neuhoff, J.G. (Ed.) Ecological Psychoacoustics; Elsevier: Oxford, UK, 2004.

31. Lesaffre, M.; Maes, P.J.; Leman, M. (Eds.) The Routledge Companion to Embodied Music Interaction; Routledge: New York, NY, USA, 2017.

32. Plath, N.; Pfeifle, F.; Koehn, C.; Bader, R. Driving Point Mobilities of a Concert Grand Piano Soundboard in Different Stages of Production. In Proceedings of the 3rd Annual COST FP1302 WoodMusICK Conference, Barcelona, Spain, 7–9 September 2016; pp. 117–123.

33. Plath, N.; Preller, K. Early Development Process of the Steinway & Sons Grand Piano Duplex Scale. In Wooden Musical Instruments Different Forms of Knowledge; Pérez, M.A., Marconi, E., Eds.; Cité de la Musique—Philharmonie de Paris: Paris, France, 2018; pp. 343–363.

34. Plath, N. High-speed camera displacement measurement (HCDM) technique of string vibrations. In Proceedings of the Stockholm Music Acoustics Conference (SMAC), Stockholm, Sweden, 30 July–3 August 2013; pp. 188–192.

35. Bader, R.; Pfeifle, F.; Plath, N. Microphone array measurements, high-speed camera recordings, and geometrical finite-differences physical modeling of the grand piano. J. Acoust. Soc. Am. 2014, 136, 2132.

36. Perng, C.Y.J.; Smith, J.; Rossing, T. Harpsichord Sound Synthesis Using a Physical Plectrum Model Interfaced With the Digital Waveguide. In Proceedings of the 14th International Conference on Digital Audio Effects (DAFx-11), Paris, France, 19–23 September 2011; pp. 329–335.

37. Farina, A. Simultaneous measurement of impulse response and distortion with a swept-sine technique. In Proceedings of the 108th Audio Engineering Society Convention, Paris, France, 19–22 February 2000.

38. Boutillon, X.; Ege, K. Vibroacoustics of the piano soundboard: Reduced models, mobility synthesis, and acoustical radiation regime. J. Sound Vib. 2013, 332, 4261–4279.

39. Ziemer, T. Psychoacoustic Music Sound Field Synthesis; Springer: Cham, Switzerland, 2020; Volume 7, Current Research in Systematic Musicology.

40. Bai, M.R.; Chung, C.; Wu, P.C.; Chiang, Y.H.; Yang, C.M. Solution Strategies for Linear Inverse Problems in Spatial Audio Signal Processing. Appl. Sci. 2017, 7, 582.

41. Bader, R. Reconstruction of radiating sound fields using minimum energy method. J. Acoust. Soc. Am. 2010, 127, 300–308.

42. Plath, N.; Pfeifle, F.; Koehn, C.; Bader, R. Microphone array measurements of the grand piano. In Seminar des Fachausschusses Musikalische Akustik: “Musikalische Akustik Zwischen Empirie und Theorie”; Mores, R., Ed.; Deutsche Gesellsch. f. Akustik: Berlin, Germany, 2015; pp. 8–9.

43. Bader, R. Radiation characteristics of multiple and single sound hole vihuelas and a classical guitar. J. Acoust. Soc. Am. 2012, 131, 819–828.

44. Bader, R. Characterizing Classical Guitars Using Top Plate Radiation Patterns Measured by a Microphone Array. Acta Acust. United Acust. 2011, 97, 830–839.

45. Bader, R.; Münster, M.; Richter, J.; Timm, H. Microphone Array Measurements of Drums and Flutes. In Musical Acoustics, Neurocognition and Psychology of Music; Bader, R., Ed.; Peter Lang: Frankfurt am Main, Germany, 2009; Volume 25, Hamburger Jahrbuch für Musikwissenschaft, Chapter 1; pp. 15–55.

46. Bader, R. Computational Music Archiving as Physical Culture Theory. In Computational Phonogram Archiving; Bader, R., Ed.; Springer International Publishing: Cham, Switzerland, 2019; Volume 5, Current Research in Systematic Musicology; pp. 3–34.

47. Richter, J.; Münster, M.; Bader, R. Calculating guitar sound radiation by forward-propagating measured forced-oscillation patterns. Proc. Meet. Acoust. 2013, 19, 035002.

48. Ahrens, J. Analytic Methods of Sound Field Synthesis; Springer: Berlin/Heidelberg, Germany, 2012.

49. Ziemer, T. Implementation of the Radiation Characteristics of Musical Instruments in Wave Field Synthesis Application. Ph.D. Thesis, University of Hamburg, Hamburg, Germany, 2016.

50. Mitchell, J. Loudspeakers. In Handbook for Sound Engineers, 4th ed.; Ballou, G., Ed.; Elsevier: Burlington, MA, USA, 2008.

51. Le Moyne, S.; Le Conte, S.; Ollivier, F.; Frelat, J.; Battault, J.C.; Vaiedelich, S. Restoration of a 17th-century harpsichord to playable condition: A numerical and experimental study. J. Acoust. Soc. Am. 2012, 131, 888–896.

52. Corteel, E. On the Use of Irregularly Spaced Loudspeaker Arrays for Wave Field Synthesis, Potential Impact on Spatial Aliasing Frequency. In Proceedings of the 9th International Conference on Digital Audio Effects (DAFx-06), Montreal, QC, Canada, 18–20 September 2006; pp. 209–214.

53. Bader, R. Nonlinearities and Synchronization in Musical Acoustics and Music Psychology; Springer: Berlin/Heidelberg, Germany, 2013; Volume 2, Current Research in Systematic Musicology.

Figure 1.

Design and concept of the radiation keyboard (right). A MIDI keyboard triggers individual signals for 128 loudspeakers, which are arranged like a harpsichord. Replacing the real harpsichord (left) by the radiation keyboard creates only subtle audible differences. Unfortunately, the radiation keyboard cannot synthesize the harpsichord sound in the complete space. Low frequencies f1 are synthesized with high precision in the complete half-space above the loudspeaker array (light blue zone). Mid frequencies are synthesized with high precision in the listening region (green zone) in which the instrumentalist is located. The sound field of very high frequencies is synthesized with high precision at discrete listening points within the listening region (red dots).

Figure 2.

Flow diagram of the proposed method. First, harpsichord Impulse Responses (IR) are measured, then the distribution of loudspeakers and listening points is optimized, and finally, loudspeaker driving signals are calculated.

Figure 3.

Measurement setup including a movable microphone array (in the front) above a soundboard, and an acoustic vibrator (in the rear) installed in an anechoic chamber. After the nearfield recordings parallel to the sound board, the microphone array samples the listening region of the instrumentalist in playing position.

Figure 4.

Depiction of the radiation keyboard. A regular grid of loudspeakers is installed on a board. The board has the shape of the harpsichord sound board. Microphones (black dots) sample the listening region in front of the keyboard.

Figure 5.

Procedure to derive the impulse response for each loudspeaker and pressed key in the radiation keyboard. The brown curve represents the bridge, the brown dots depict exemplary excitation points. The black dots represent microphones near the soundboard, the light gray dots represent equivalent sources on the soundboard. The gray dots represent a regular subset of equivalent sources, which are replaced by loudspeakers (circles) in the radiation keyboard.

Figure 6.

Spectrogram of an exemplary output of one of the array microphones. Harmonic distortions of several orders are observable.

Figure 7.

Obtained impulse response of the signal in Figure 6 after deconvolution. The harmonic distortions are separated in time and precede the linear part (red), which starts at .

Figure 8.

Exemplary plot of reconstruction energy (black) and condition number (gray) over . The ordinate is logarithmic and aligned at the minimum at .

Figure 9.

Sound field recorded 50 mm above a grand piano sound board (left) and back-propagated sound board vibration according to the MEM (right). The black dot marks the input location of the acoustic vibrator.

Figure 10.

Three regular loudspeaker grids . A subset of the 1500 equivalent sources is replaced by 128 loudspeakers. In the given setup loudspeaker grids are possible.

Figure 11.

Procedure to derive the impulse response for each loudspeaker and pressed key in the radiation keyboard. The wooden plate represents the harpsichord sound board. The white plate represents the loudspeaker grid. The black dots represent microphones in the listening region. At first, harpsichord and loudspeaker array create different sound fields in the listening region. Then, the loudspeaker signals are modified so as to synthesize the harpsichord sound field at a regular subset v of listening points that sample the listening region.

Figure 12.

Three exemplary impulse responses . Here, t is the time in seconds and u is the voltage to drive the loudspeaker. The shortest out of impulse responses is ideal, as it exhibits the best impulse fidelity. It can be identified visually.

Figure 13.

Impulse responses truncated to frequency regions f1 (blue), f2 (green) and f3 (red). Adding up the time series yields a broadband impulse response (black). Here, t is the time in seconds and u is a normalized voltage to drive the loudspeaker.

© 2020 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).