Abstract

The purpose of the self-driving car is to minimize the number of casualties from traffic accidents. One of the causes of traffic accidents is an improper vehicle speed, especially at a road turn. If the road turn can be anticipated, such accidents can be avoided. This paper presents a curve lane detection algorithm based on the Kalman filter for the self-driving car. It uses parabola and circle equation models inside the Kalman filter to estimate the parameters of a curve lane. The proposed algorithm was tested with a self-driving vehicle. Experimental results show that the curve lane detection algorithm has a high success rate. The paper also presents simulation results of an autonomous vehicle that controls its steering and speed using the results of the full curve lane detection algorithm.

1. Introduction

The development of the self-driving car is needed for the safety of the driver and passengers in the vehicle [1]. Traffic accidents occur for various reasons. The majority of traffic accidents are caused by an improper speed at a road turn or by unexpected lane changes when avoiding an obstacle [2]. Some modern cars are already equipped with an emergency braking system, a collision warning system, a lane-keeping assist system, and adaptive cruise control. These systems can help avert traffic accidents when the driver is distracted or has lost control.

The two most important parts of advanced driver assistance systems are the collision avoidance system and the lane-keeping assist system, which can help to reduce the number of traffic accidents. A fundamental technique for effective collision avoidance and lane-keeping is a robust lane detection method [3]. In particular, that method should detect a straight or a curve lane in the far field of view. A car moving at a given speed needs a certain time to stop or reduce speed while keeping stability. This means it is necessary to detect the road lane in the near field as well as in the far field of view.

Tamal Datta et al. presented a lane detection technique [4]. The technique consists of image pre-processing steps (grayscale conversion, Canny edge detection, bitwise logical operation) on the input image; it also masks the image according to the region of interest (ROI). The final stage uses the Hough transform [5,6] to detect the lines. Using this method, the parameters of a straight line are obtained. However, their technique does not address lane detection for curve lanes and cannot obtain the parameters of curve lines (parabola and circle).

A video-based lane detection method for night driving was introduced by Xuan He et al. [7]. The method includes the Gabor filter operator [8] for image pre-processing, an adaptive splay ROI, and the Hough transform to detect the lane marker. A lane tracking method that uses the Kalman filter [9] is added after lane detection to increase the probability of detecting lane markers in real time. However, their pre-processing steps lack an adaptive auto-threshold method to detect lanes in all conditions.

In order to eliminate the uncertainty of lane conditions, Shun Yang et al. proposed a replacement for image pre-processing [10]. Their method uses deep learning-based lane detection in place of feature-based lane detection. However, the UNet-based [11] encoder-decoder requires a high-performance GPU, such as the Nvidia GeForce GTX 1060, for training and testing. Also, the paper reports that the CNN branch is much slower than the feature-based branch in terms of detection rate. A fast detection rate is very important for an autonomous vehicle, since the vehicle moves at a very high speed. In addition, the paper does not show lane detection results for curve lanes.

Moreover, Yeongho Son et al. introduced an algorithm [12] that addresses the difficulty of detecting lanes under illumination changes, shadows, or worn-out lane markings by using a local adaptive threshold to extract important features from the lane images. Their paper also proposes a feedback RANSAC [13] algorithm to avoid false lane detection by computing two scoring functions based on the lane parameters. They used a quadratic lane model for lane fitting and the Kalman filter for smooth lane tracking. However, the algorithm did not provide any closed-loop lane keeping control to stay in the lane.

Furthermore, a combination of the Hough transform and the R-least square method was introduced by Libiao Jiang et al. [14]. They used the Kalman filter to track the lane. Their combined method provides results on straight lane markings. Similarly, a hazed-based Kalman filter was used to enhance the road information by Chen Chen et al. [15]. Also, Huifend Wang et al. introduced a straight and polynomial curve model to detect the continuity of the lane [16]. The curve fitting method was used to detect the lanes.

In this paper, we introduce a curve lane detection algorithm based on the Kalman filter [17]. The algorithm includes Otsu’s threshold method [18,19] to convert the RGB image to a black-and-white image, image pre-processing using the top view image transform [20,21] to create a top-view image of the road, a Hough transform for detecting the straight lane in the near field of the sensor [22], and parameter estimation of the curve lane using a Kalman filter. We use two different models for a curve lane in the Kalman filter: the parabola model [23] and the circle model [24]. Kalman-based linear-parabolic lane detection has already been tested on consecutive video frames using the parabolic model by K.H. Lim et al. [25]. This paper extends the method to the circular model. Our proposed method shows robustness against noise. Effective parameter estimation of a curve lane can be used to control the speed and heading angle of the self-driving car [26].

Multiple methods have been introduced to detect lanes for the self-driving car and advanced driver assistance systems. Vision-based lane detection methods usually use some popular techniques, such as an edge detector to create a binary image, the classical Hough transform [27,28] to detect straight lines, and color segmentation to extract lane markers.

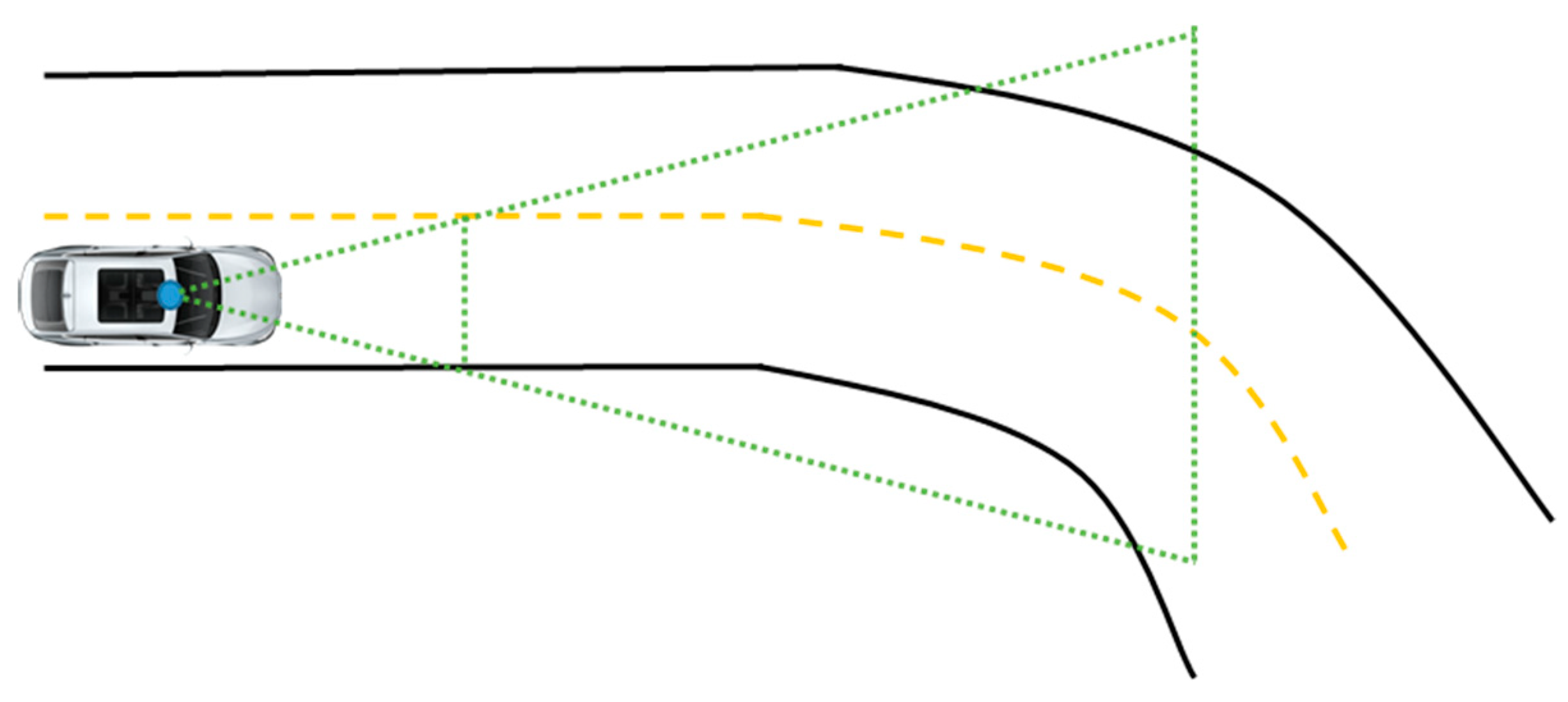

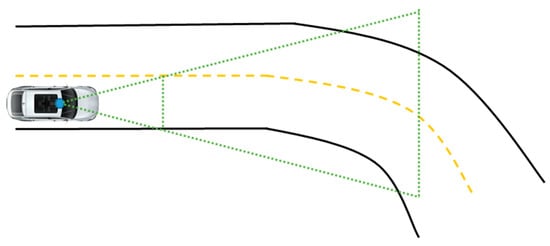

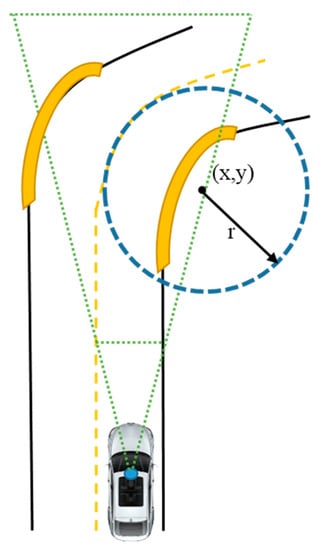

Most of the methods focus only on straight lane detection in the near distance using a Hough transform or other simple methods. For a curve lane, only a few methods have been used, such as parabola [23] and hyperbola fitting, B-Splines [29,30], and Bezier Splines. To enhance the result of lane detection, the area at the bottom of an image is considered as a region of interest (ROI) [31]. Segmenting the ROI increases the efficiency of the lane detection method and eliminates the effect of the upper portion of a road image [32]. The majority of the methods detect lanes directly from the images captured by the front-view camera, as shown in Figure 1. However, although detecting lanes from the raw image can be robust, estimating the parameters of the road lane from it may be difficult [33].

Figure 1.

Field of view on the road turning.

This research considers a curve lane detection algorithm that can estimate the parameters of the road turning and define geometric shapes based on the mathematical model and the Kalman filter [17].

2. Research Method

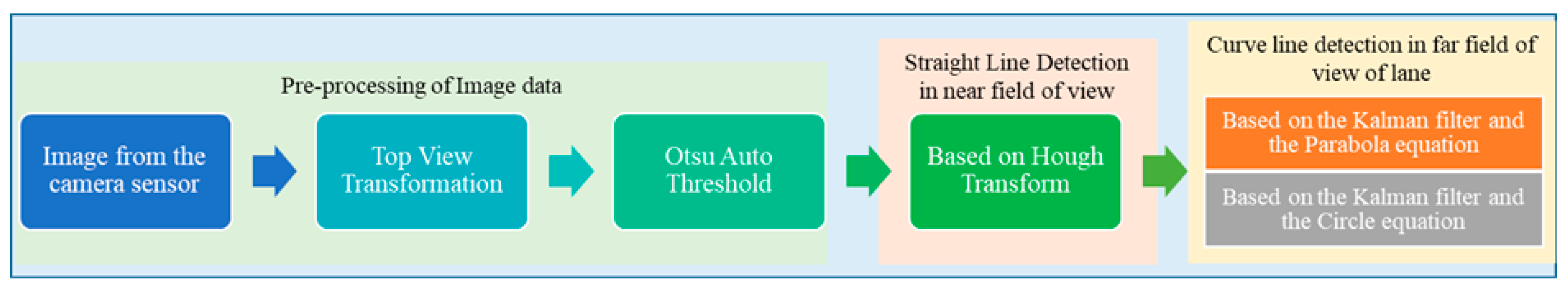

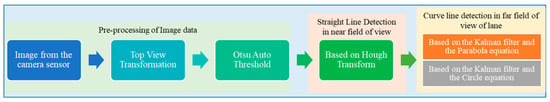

Our new algorithm consists of two main parts. (1) Image pre-processing. It contains Otsu’s threshold method [19] and the top view image transform [20] to create a top-view image of the road. The Hough transform detects the straight lane in the near field of view. (2) Curve lane detection. We use the Kalman filter to detect a curve lane in the far field of view. This Kalman filter algorithm includes two different methods: the first is based on the parabola model [23], and the second is based on the circle model [24]. The method, shown in Figure 2, can estimate the parameters of the road turning and find geometric shapes based on the mathematical model and the Kalman filter.

Figure 2.

The flow diagram of the lane detection algorithms using the Kalman filter.

2.1. Otsu Threshold

In 1978, Nobuyuki Otsu introduced a new threshold technique. The Otsu threshold technique uses statistical analysis to determine the optimal threshold for an image. Otsu first formulated the problem with one threshold for two classes and later extended it to multiple thresholds. For two classes, the technique assumes that the image contains two classes of pixels, foreground and background, following a bi-modal histogram. The Otsu threshold method minimizes the sum of the weighted class variances. He named this sum the within-class variance and defined it as Equation (1):

The criterion tries to separate the pixels such that the classes are homogeneous in themselves. Since variance is a measure of group homogeneity, the Otsu criterion follows naturally: the optimal threshold is the one for which the within-class variance is minimal. Instead of minimizing the within-class variance, the optimal threshold can also be found by maximizing the between-class variance, which is defined as Equation (2):

Here, the total mean is calculated over all gray levels. The task of finding the optimal set of thresholds in Equation (3) is therefore either to find the thresholds that minimize the within-class variance or to find the ones that maximize the between-class variance. The result is the same, because the total variance of the image, which does not depend on the threshold, is the sum of the within-class and between-class variances.
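The two-class case described above can be sketched in a few lines of Python. This is a minimal illustration and not the authors' MATLAB implementation: it assumes an 8-bit grayscale image stored as a NumPy array, and the function name `otsu_threshold` is hypothetical. It searches all 256 candidate thresholds and keeps the one that maximizes the between-class variance.

```python
import numpy as np


def otsu_threshold(gray):
    """Return the threshold t that maximizes the between-class variance.

    `gray` is assumed to be an integer array of 8-bit intensities (0..255).
    Pixels with value <= t form one class, the remaining pixels the other.
    """
    hist = np.bincount(gray.ravel(), minlength=256).astype(float)
    prob = hist / hist.sum()
    levels = np.arange(256)

    omega0 = np.cumsum(prob)            # weight of the lower class for each t
    mu_cum = np.cumsum(prob * levels)   # cumulative first moment up to t
    mu_total = mu_cum[-1]               # total mean over all gray levels
    omega1 = 1.0 - omega0               # weight of the upper class

    # Between-class variance; it stays 0 where one of the classes is empty.
    denom = omega0 * omega1
    sigma_b2 = np.zeros(256)
    valid = denom > 0
    sigma_b2[valid] = (mu_total * omega0[valid] - mu_cum[valid]) ** 2 / denom[valid]
    return int(np.argmax(sigma_b2))


# Usage: a tiny bi-modal image with dark (50) and bright (200) pixels.
image = np.array([[50, 50, 200, 200], [50, 200, 50, 200]], dtype=np.uint8)
t = otsu_threshold(image)
binary = image > t   # True marks the bright (lane-like) class
```

When several thresholds give the same maximum, `np.argmax` returns the lowest one; any of them separates the two classes equally well.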

2.2. Top View Image Transformation

The second step in our algorithm is to create a top view image of the road. The output image is the top view, or bird’s-eye view, of the road, in which the lanes are parallel or close to parallel. This transformation also converts pixels in the image plane to world-coordinate metric units, so distances can be measured on the transformed image if necessary.

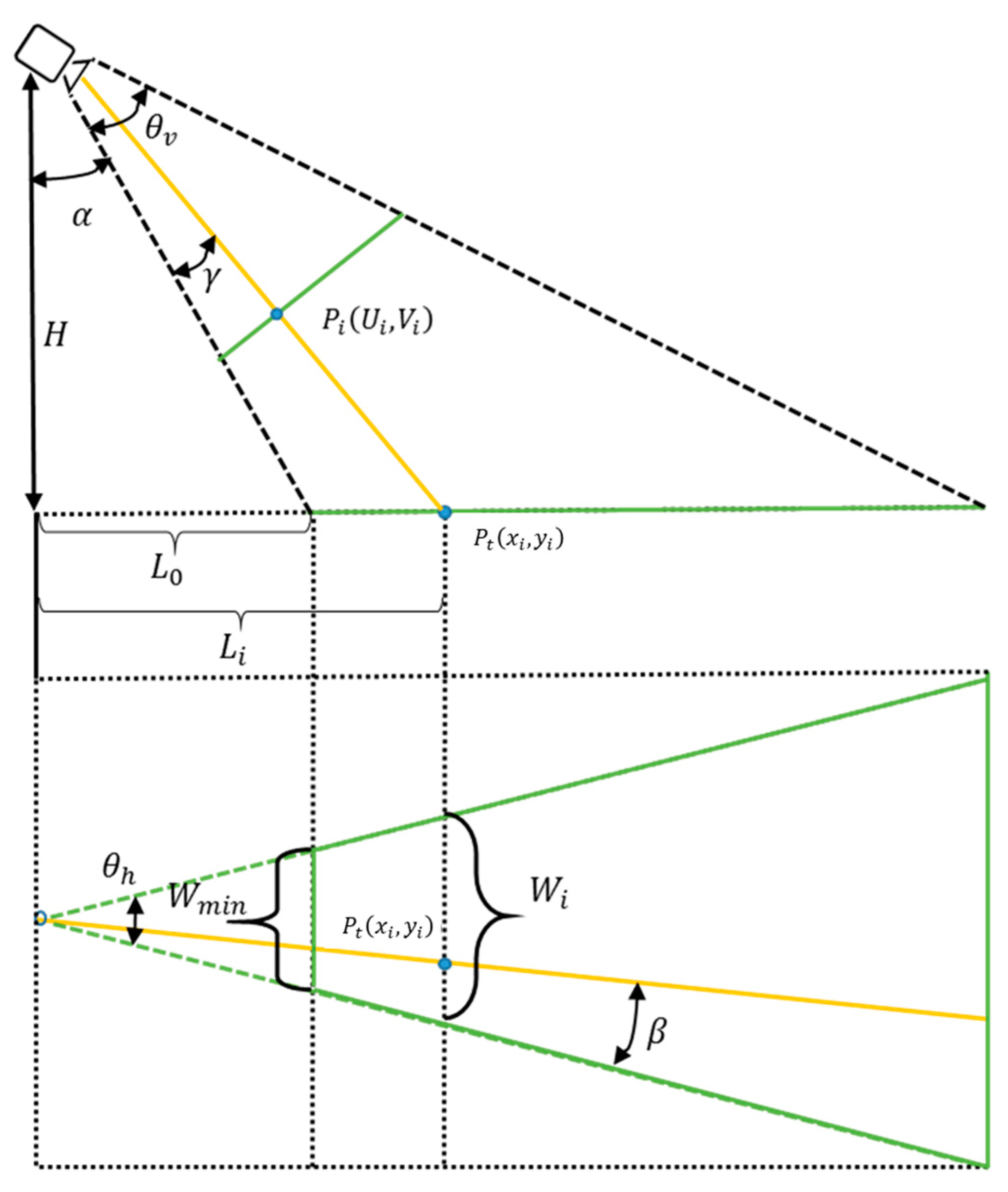

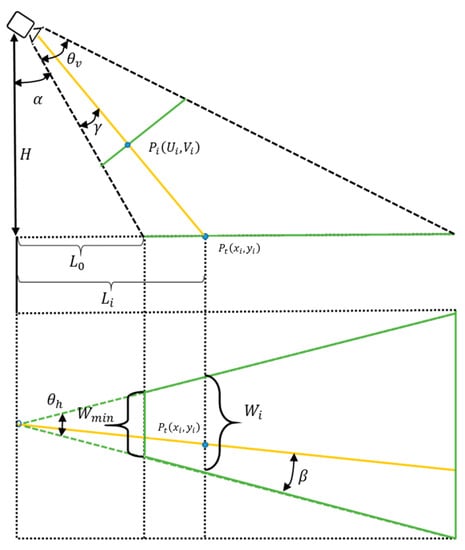

Figure 3 illustrates the geometry of the top-view image transformation. The transformation needs four parameters: the horizontal view angle of the camera, the vertical view angle of the camera, the height at which the camera is mounted, and the tilt angle of the camera.

Figure 3.

Top view image transformation.

The height of the camera mounted on the vehicle is measured in metric units. We can create two types of top-view image: one measured in metric units using the H parameter, and another measured in pixels using the corresponding pixel-based parameter. V is the width of the front view image and is proportional to Wmin of the top view image field illustrated in Figure 3. Equations (4) and (5) show the relationship between H measured in metric units and its counterpart measured in pixels.

The coefficient K is used to convert metric data into pixel data.

According to the geometrical description shown in Figure 3, for each point on the front view image, the corresponding sampling point on the top view image can be calculated by using the previous Equations (4)–(6).

Then RGB color data are copied from the position of the front view camera image to the position of the top view image.

After top-view image transformation, line detection becomes a simple process, which only detects parallel lines that are generally separated by a given, fixed distance. The next step is to detect a straight lane using the Hough transform.

2.3. Straight Lane Detection with Hough Transform

In the near-view image, straight lines are detected using the standard Hough transform. The Hough transform also detects many incorrect lines, which need to be eliminated to reduce computational time and complexity [34].

To remove incorrect lines, detected lines that do not belong to the lane in which the vehicle is traveling need to be discarded. For example, after the Hough transform is applied to the binary image, the two longest lines are chosen to avoid the issue. Curve lane detection then starts at the end points of these two longest lines.
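The selection rule above can be sketched as follows. This is an illustrative assumption about the data layout, not the authors' code: each Hough result is taken to be a segment `(x1, y1, x2, y2)` in top-view pixel coordinates with the row index `y` growing downward, so the end of a segment farthest from the vehicle is the one with the smaller `y`. The function names are hypothetical.

```python
import math


def two_longest_segments(segments):
    """Keep the two longest line segments from a list of (x1, y1, x2, y2)."""
    def length(seg):
        x1, y1, x2, y2 = seg
        return math.hypot(x2 - x1, y2 - y1)

    return sorted(segments, key=length, reverse=True)[:2]


def far_end(segment):
    """Return the endpoint with the smaller row index (farther from the car)."""
    x1, y1, x2, y2 = segment
    return (x1, y1) if y1 <= y2 else (x2, y2)


# Usage: four candidate segments, two of which are short false detections.
candidates = [(0, 0, 0, 10), (5, 0, 5, 100), (9, 0, 9, 80), (2, 2, 3, 3)]
lane_lines = two_longest_segments(candidates)
start_points = [far_end(seg) for seg in lane_lines]  # where curve tracking begins
```

Choosing by length alone is the simplest possible filter; a practical system might also check that the two lines are roughly parallel and separated by a plausible lane width.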

2.4. Curve Lane Detection Based on Kalman Filter and Parabola Equation

In this paper, the most important part is curve line detection. The method should detect a straight or a curve line in the far field of view. Image data (white points in the far field of view) include uncertainties and noise generated during the capturing and processing steps. Therefore, as an estimator that is robust against these irregularities, a Kalman filter was adopted to form an observer [22]. First, we need to define the equation of the curve line, which is non-linear. For the curve line, the best-fit equations are the parabola equation and the circle equation.

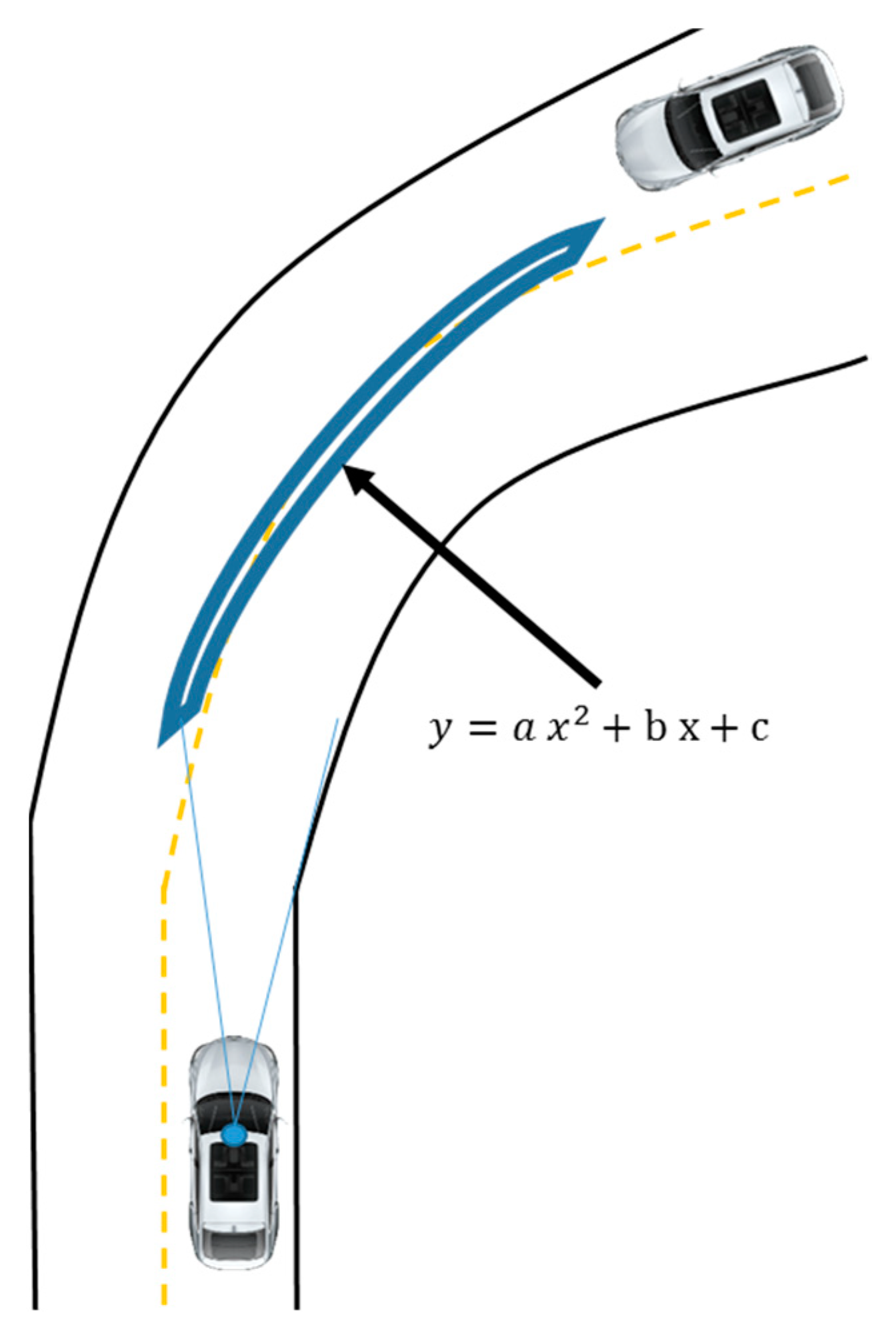

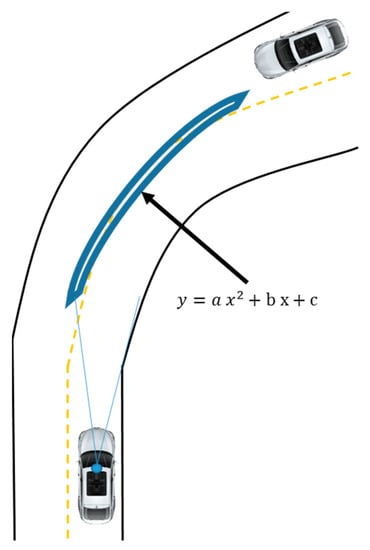

In this part, we consider a curve lane detection algorithm based on the Kalman filter and the parabola equation. The parabola equation has three parameters, which must be determined from at least three measurements. Equations (7) and (8) show the system equation of the parabola. Figure 4 illustrates the basic parabolic model of road turning.

The measurement data of the curve line detection process are, in our case, the coordinates of the white points in the far section.

Figure 4.

Parabola model of the road turning.

From Equation (9) we can estimate parameters easily.

Using this matrix form, we can implement our Kalman filter design for curve lane detection. Two important matrices of the Kalman filter are the measurement matrix (H) and the state transition matrix (A). They can be expressed as Equation (10):

In our case, the measurement matrix contains the coordinate values of three white points, arranged as in Equation (11). The state transition matrix, however, is the identity matrix, because our Kalman filter is designed for image processing.

These two matrices are often referred to as the process and the measurement models, as they serve as the basis for a Kalman filter. The Kalman filter has two steps: the prediction step and the correction step. The prediction step can be expressed as follows, Equations (12) and (13):

The prediction step also gives the covariance of the predicted state. The correction step of the Kalman filter can be expressed through Equations (14), (15) and (17).

These equations define the Kalman gain, the a posteriori state estimate at each white point, and the a posteriori estimate error covariance matrix at that white point (Equation (17)).

Equation (16) shows the matrix form of the a posteriori state estimate.

To evaluate the viability of the proposed algorithms, we tested the parabola equation using both real data and noisy measurement data in MATLAB. The Kalman filter can estimate the parameters of the parabola equation from noisy data. The result section shows a comparison between the measured values, the estimated values, and the real values. Figure 5 shows the expected results of left and right turning on the road using the parabolic model based on the Kalman filter [35].
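The prediction and correction steps described in this section can be sketched in Python as follows. This is a minimal sketch under stated assumptions rather than the authors' MATLAB code: the parabola is assumed to be written as y = a·x² + b·x + c, the state is the constant vector [a, b, c] (so the state transition matrix is the identity), and each correction uses three white points to build a 3×3 measurement matrix with rows [x², x, 1]. The noise settings `meas_var` and `init_var` and the function name are illustrative choices, not values from the paper.

```python
import numpy as np


def kalman_parabola_fit(xs, ys, meas_var=1.0, init_var=1e4):
    """Estimate (a, b, c) of y = a*x**2 + b*x + c with a Kalman filter.

    Points are consumed three at a time; leftover points that do not fill
    a complete batch of three are ignored.
    """
    xs = np.asarray(xs, dtype=float)
    ys = np.asarray(ys, dtype=float)
    theta = np.zeros(3)            # initial guess for [a, b, c]
    P = np.eye(3) * init_var       # large initial uncertainty
    R = np.eye(3) * meas_var       # measurement noise covariance
    I = np.eye(3)
    history = []

    for i in range(0, len(xs) - 2, 3):
        xb, zb = xs[i:i + 3], ys[i:i + 3]
        H = np.column_stack([xb ** 2, xb, np.ones(3)])

        # Prediction step: A is the identity and no process noise is added.
        theta_pred, P_pred = theta, P

        # Correction step: Kalman gain, state update, covariance update.
        S = H @ P_pred @ H.T + R
        K = P_pred @ H.T @ np.linalg.inv(S)
        theta = theta_pred + K @ (zb - H @ theta_pred)
        # Joseph form keeps P symmetric and positive semi-definite.
        IKH = I - K @ H
        P = IKH @ P_pred @ IKH.T + K @ R @ K.T
        history.append(theta.copy())

    return theta, np.array(history)


# Usage: noisy samples of a known parabola (noise level chosen arbitrarily).
rng = np.random.default_rng(0)
x = np.linspace(-5.0, 5.0, 60)
y_noisy = 0.5 * x ** 2 - 2.0 * x + 3.0 + rng.normal(0.0, 0.5, x.size)
estimate, trace = kalman_parabola_fit(x, y_noisy, meas_var=0.25)
```

`trace` holds the estimate after every batch, which is the kind of per-step curve one would plot to watch the parameters settle.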

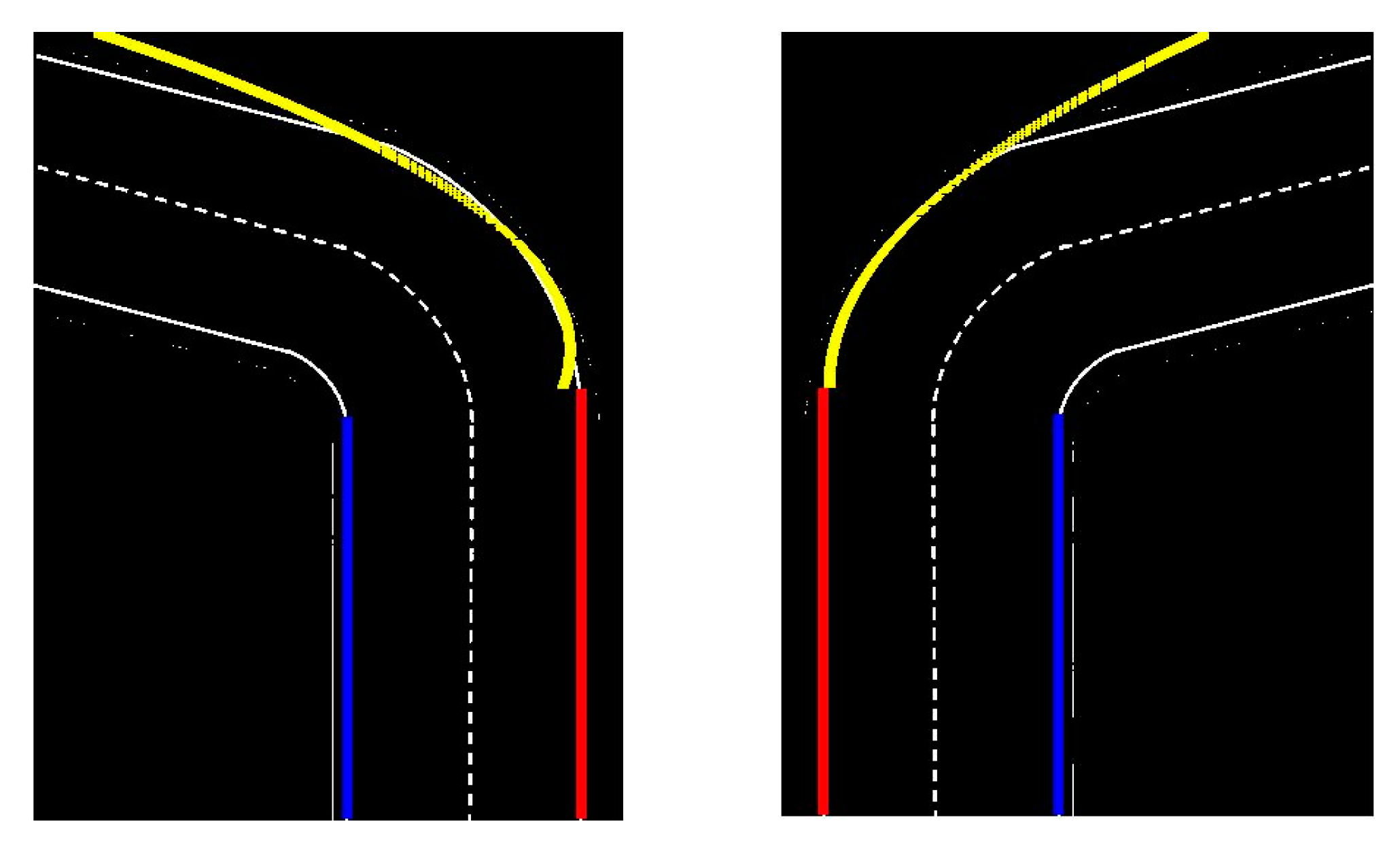

Figure 5.

Left and right turning on the road.

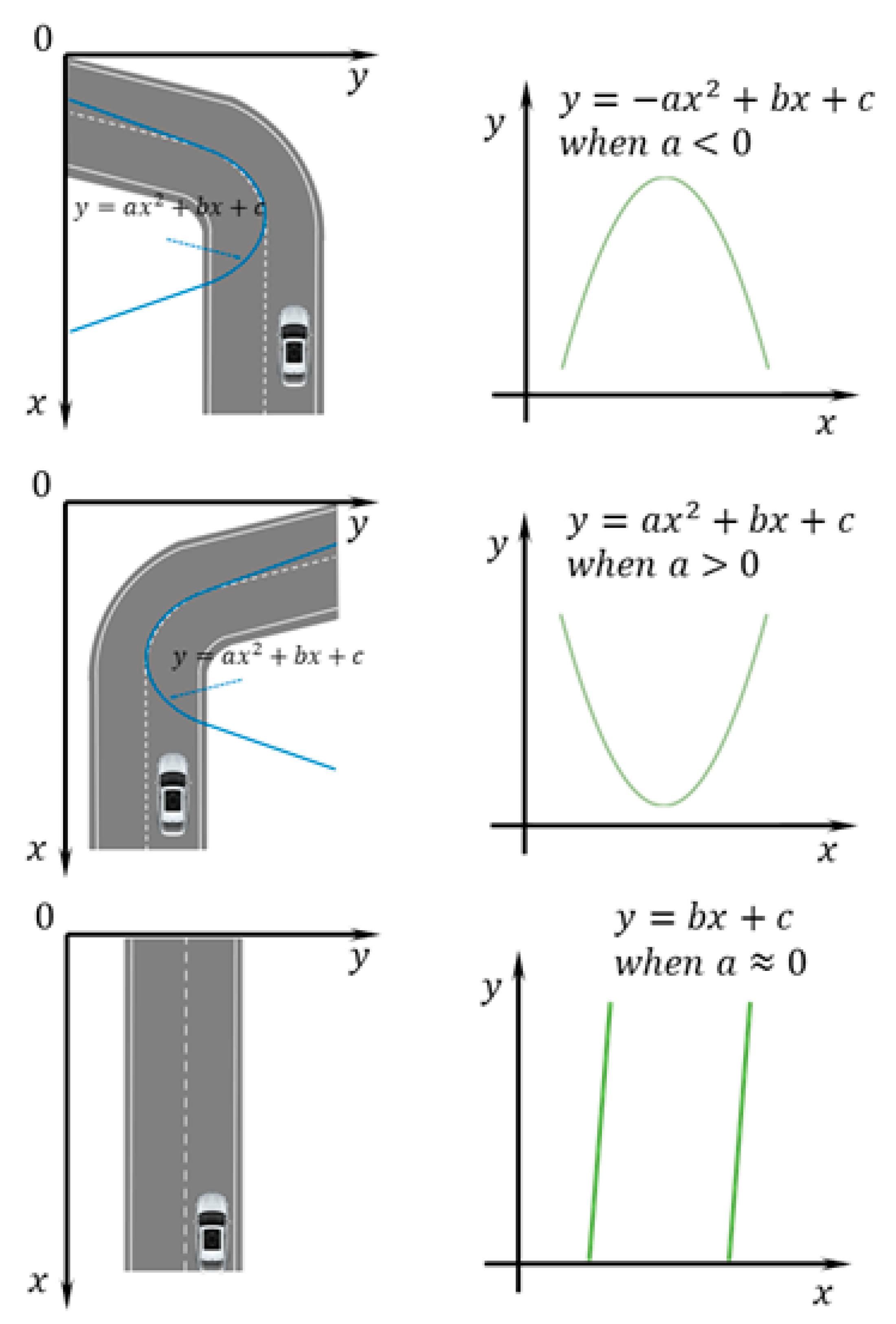

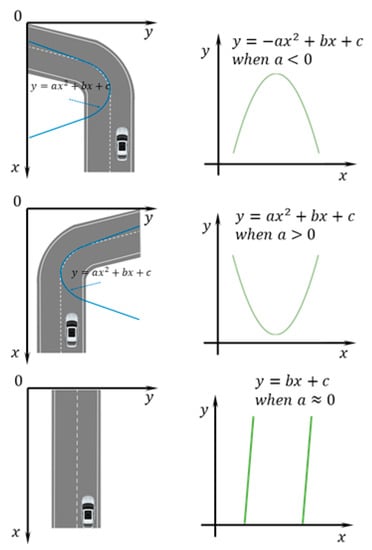

From the experimental results in the result section, we can see a relationship between the road turning and the “a” parameter of our approach. If the road turns toward the left side, the “a” parameter is lower than zero. If the road turns toward the right side, the “a” parameter is higher than zero, and if the road is straight, the “a” parameter is almost equal to zero. This relationship is shown in Figure 6.

Figure 6.

Relationship between “a” parameter and road turning.
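The sign rule illustrated in Figure 6 amounts to a three-way classification. A minimal sketch is given below; the tolerance `straight_tol` that decides when “a” counts as "almost equal to zero" is a hypothetical value that would need tuning for real image data.

```python
def turn_direction(a, straight_tol=1e-3):
    """Classify the road ahead from the estimated "a" parameter.

    a < 0 means a left turn, a > 0 a right turn, and values within
    straight_tol of zero are treated as a straight road.
    """
    if abs(a) <= straight_tol:
        return "straight"
    return "left" if a < 0 else "right"


# Usage with three example estimates.
directions = [turn_direction(a) for a in (-0.02, 0.0004, 0.05)]
```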

2.5. Curve Lane Detection Based on Kalman Filter and Circle Equation

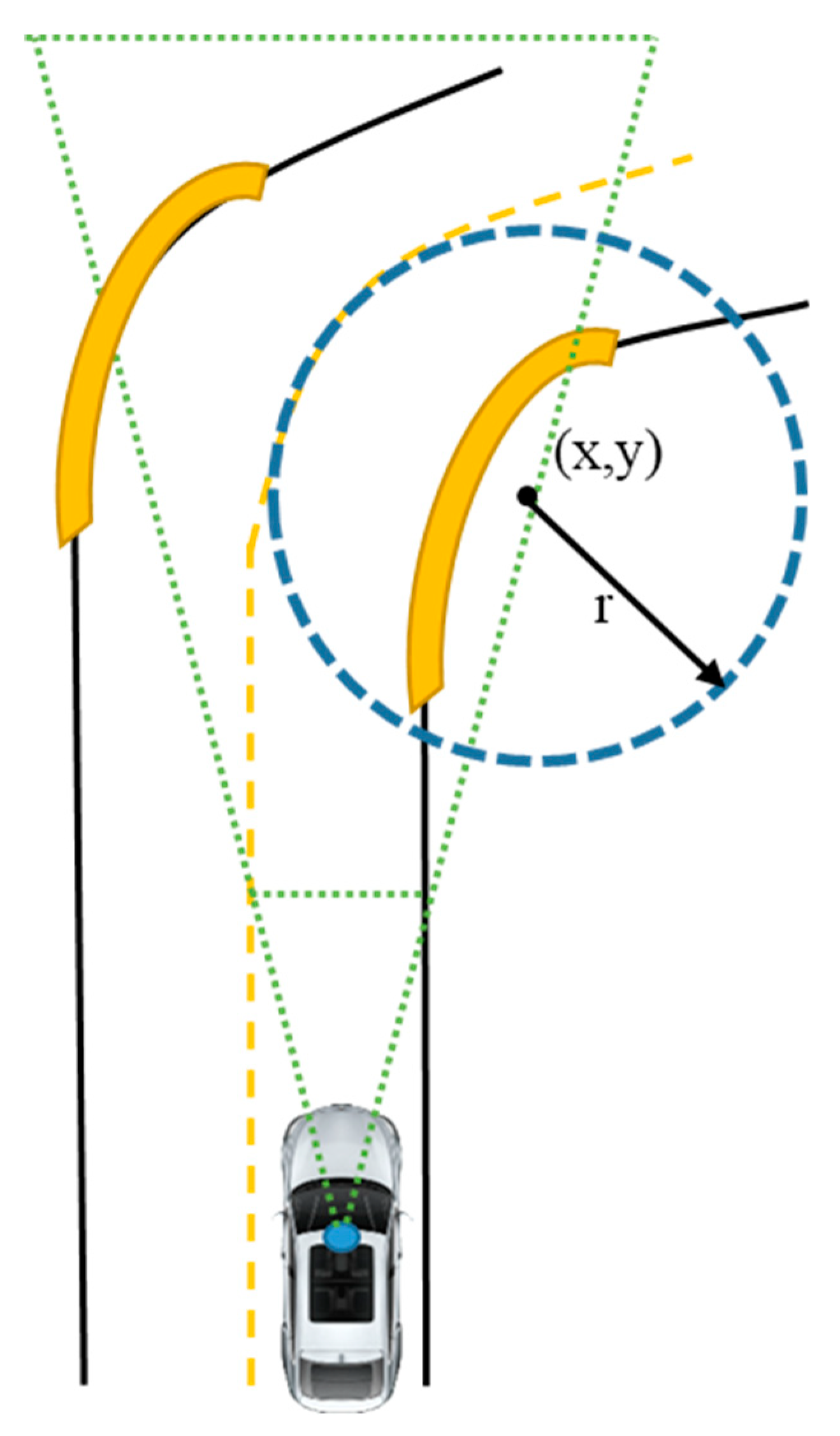

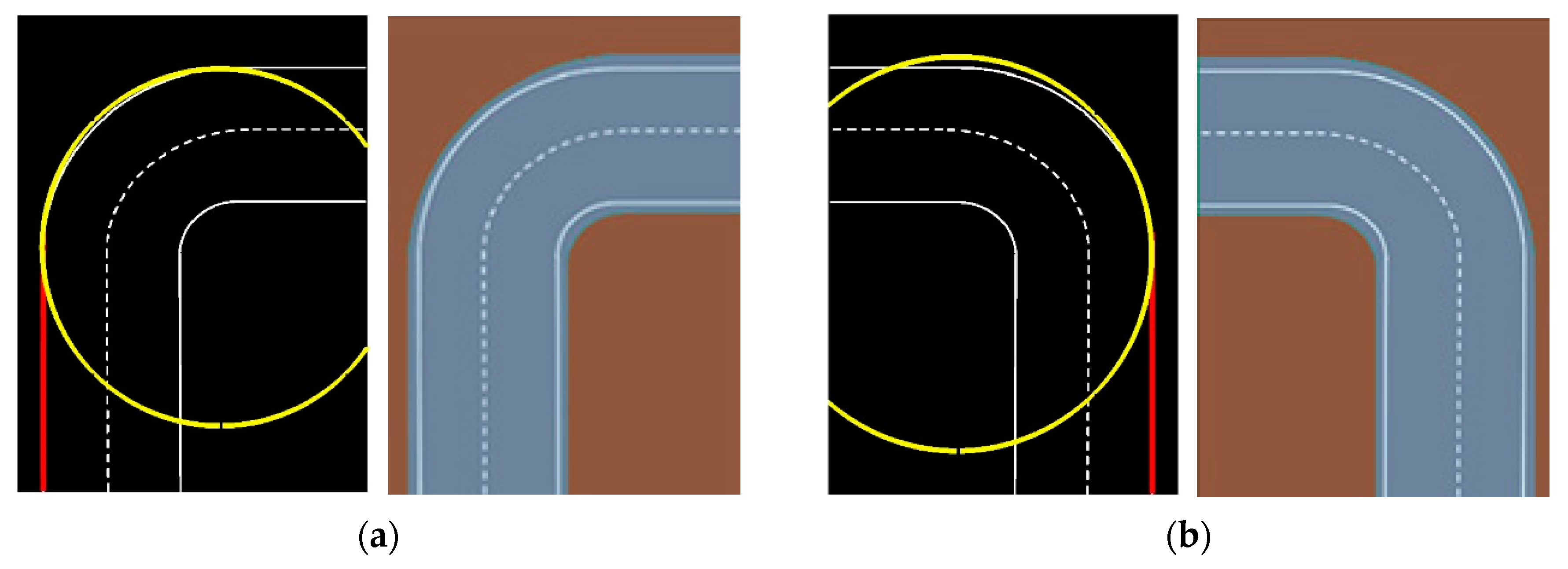

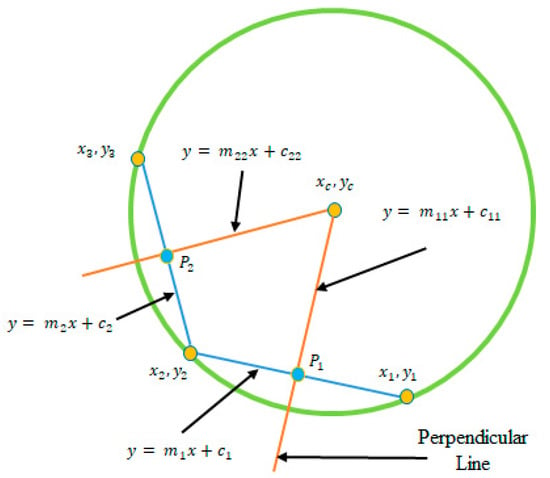

For the curve line, the second-best fit equation is the circle equation, shown in Figure 7. In this part, we consider curve line detection algorithms [24] based on the Kalman filter and the circle equation. From the circle equation, we need to determine the circle radius and the center of the circle.

Figure 7.

Circle model of the road turning.

Using any three points on a circle, we can draw a pair of chords. Based on these two chords, we can calculate the center of the circle [36]. With more points, multiple estimates of the center can be calculated.

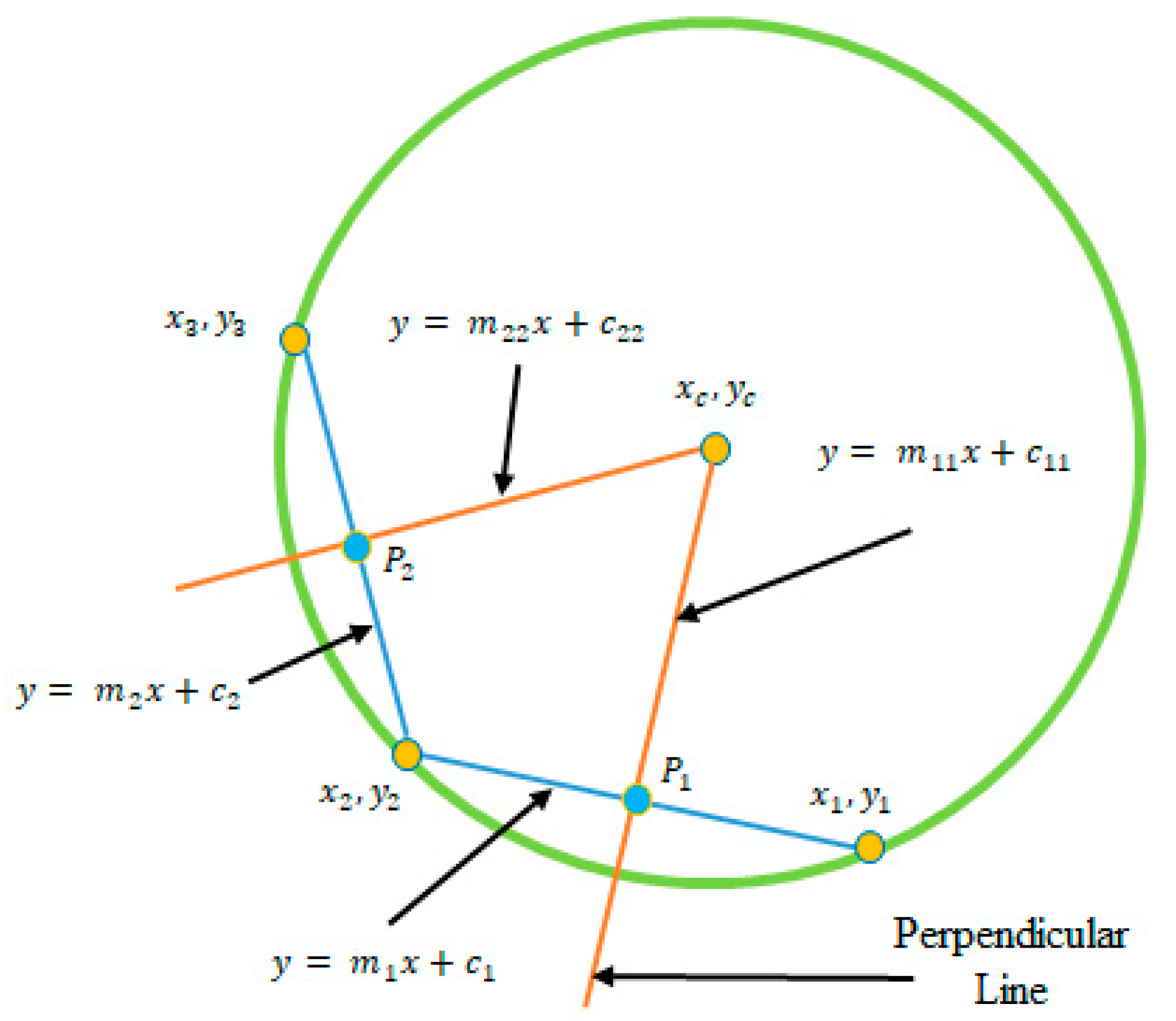

Pairs of chords in each chain are used to calculate the center. Here, three points divide the circle into three arcs, and the two lines are the perpendicular bisectors of the corresponding chords. The points shown in Figure 8 [36] lie on these lines, and Equations (18) and (21) give their coordinates.

Figure 8.

Calculation of the center of the circle.

The perpendicularity condition and the point coordinates are used to calculate the equation of the first line in Equations (19) and (20).

For the second line, the same calculation gives the equation of the line, expressed in Equations (22) and (23).

Based on these two lines, we can calculate the center of the circle, expressed in Equations (24) and (25). The intersection of the two lines is the center of the circle.
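The chord construction above can be sketched numerically. The sketch below is an illustration rather than the paper's exact formulas: instead of slope-intercept line equations, it writes each perpendicular bisector as a linear equation and solves the resulting 2×2 system, which avoids division by zero for vertical chords. The function name is hypothetical.

```python
import numpy as np


def circle_center_from_three_points(p1, p2, p3):
    """Intersect the perpendicular bisectors of chords p1-p2 and p2-p3.

    Every point X on the bisector of chord (p, q) satisfies
    (q - p) . X = (|q|^2 - |p|^2) / 2, which gives two linear equations.
    """
    p1, p2, p3 = (np.asarray(p, dtype=float) for p in (p1, p2, p3))
    A = np.array([p2 - p1, p3 - p2])
    b = 0.5 * np.array([p2 @ p2 - p1 @ p1, p3 @ p3 - p2 @ p2])
    if abs(np.linalg.det(A)) < 1e-12:
        raise ValueError("points are collinear; no unique circle exists")
    return np.linalg.solve(A, b)


# Usage: three points on a circle of radius 5 centered at (2, -1).
center = circle_center_from_three_points((7, -1), (2, 4), (-3, -1))
```

With noisy white points, nearly collinear triples give wildly varying centers, which is why the raw estimates are subsequently filtered.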

However, this method cannot determine the center reliably, because it is easily disturbed by noise. Therefore, a second step is needed to estimate the correct center using a Kalman filter. The Kalman filter takes as input the raw center data stored by the previous step.

The x coordinate and the y coordinate of the center are estimated individually, each with its own Kalman filter. Equations (26)–(32) show the Kalman filter for the center of the circle. Equations (26)–(28) show the initial values, including the Kalman gain and the covariance of the predicted state.

The measurement is the x-coordinate value of the center, which is stored by the previous step. After that, we run the same process for the y-coordinate value of the center. Finally, we can estimate the radius of the circle using these corrected x and y coordinates of the center.
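The per-coordinate filtering described above can be sketched with a scalar Kalman filter. This is a minimal sketch under assumptions: the center coordinate is modeled as a constant, the noise variances `meas_var` and `process_var` are illustrative values rather than the paper's settings, and the radius is taken as the mean distance from the lane points to the filtered center. All function names are hypothetical.

```python
import math


def scalar_kalman(measurements, meas_var=1.0, process_var=1e-4, init_var=1.0):
    """Filter a sequence of raw center coordinates (x or y) one value at a time."""
    x = measurements[0]     # start from the first raw center value
    P = init_var
    estimates = []
    for z in measurements:
        P = P + process_var          # prediction: the state is modeled as constant
        K = P / (P + meas_var)       # Kalman gain
        x = x + K * (z - x)          # a posteriori state estimate
        P = (1.0 - K) * P            # a posteriori error covariance
        estimates.append(x)
    return estimates


def estimate_radius(points, center):
    """Mean distance from the lane points to the estimated center."""
    cx, cy = center
    return sum(math.hypot(px - cx, py - cy) for px, py in points) / len(points)


# Usage: raw x-coordinates of the center that scatter around 10.
raw_cx = [9.0, 11.0] * 50
filtered_cx = scalar_kalman(raw_cx)
radius = estimate_radius([(7, -1), (2, 4), (-3, -1)], (2.0, -1.0))
```

The same `scalar_kalman` call is repeated for the y coordinate before the radius is computed.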

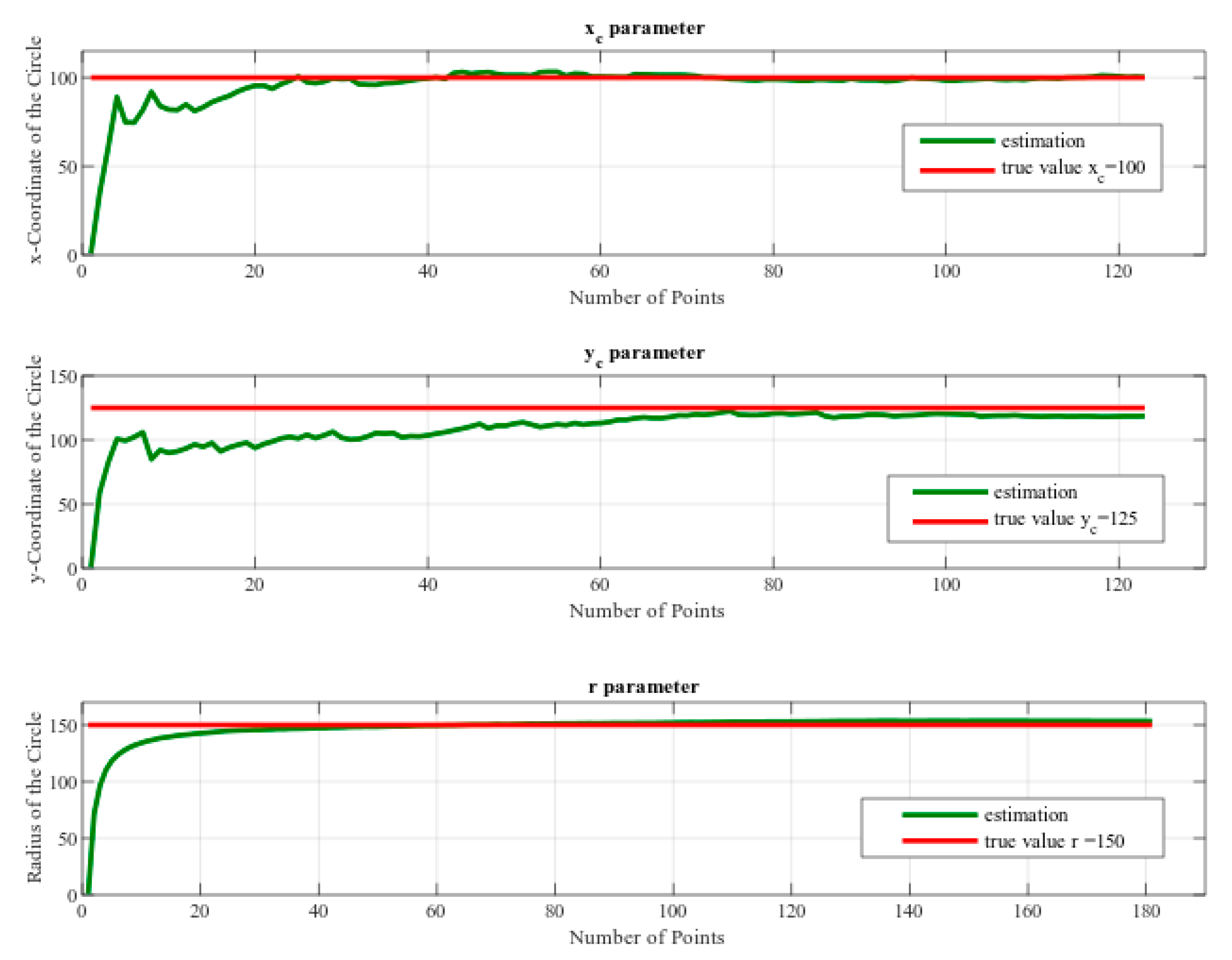

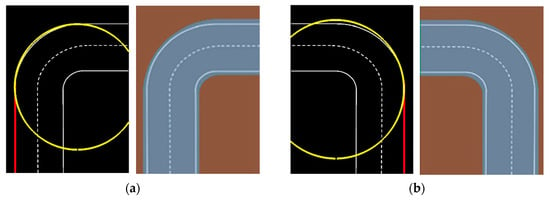

Figure 9 shows the expected result of Kalman filter-based curve lane detection on a prepared road image [35] that has a circle-shaped road turning. The results show good performance for both left turning and right turning.

Figure 9.

The road turning with the circle shape (a) right turning (b) left turning.

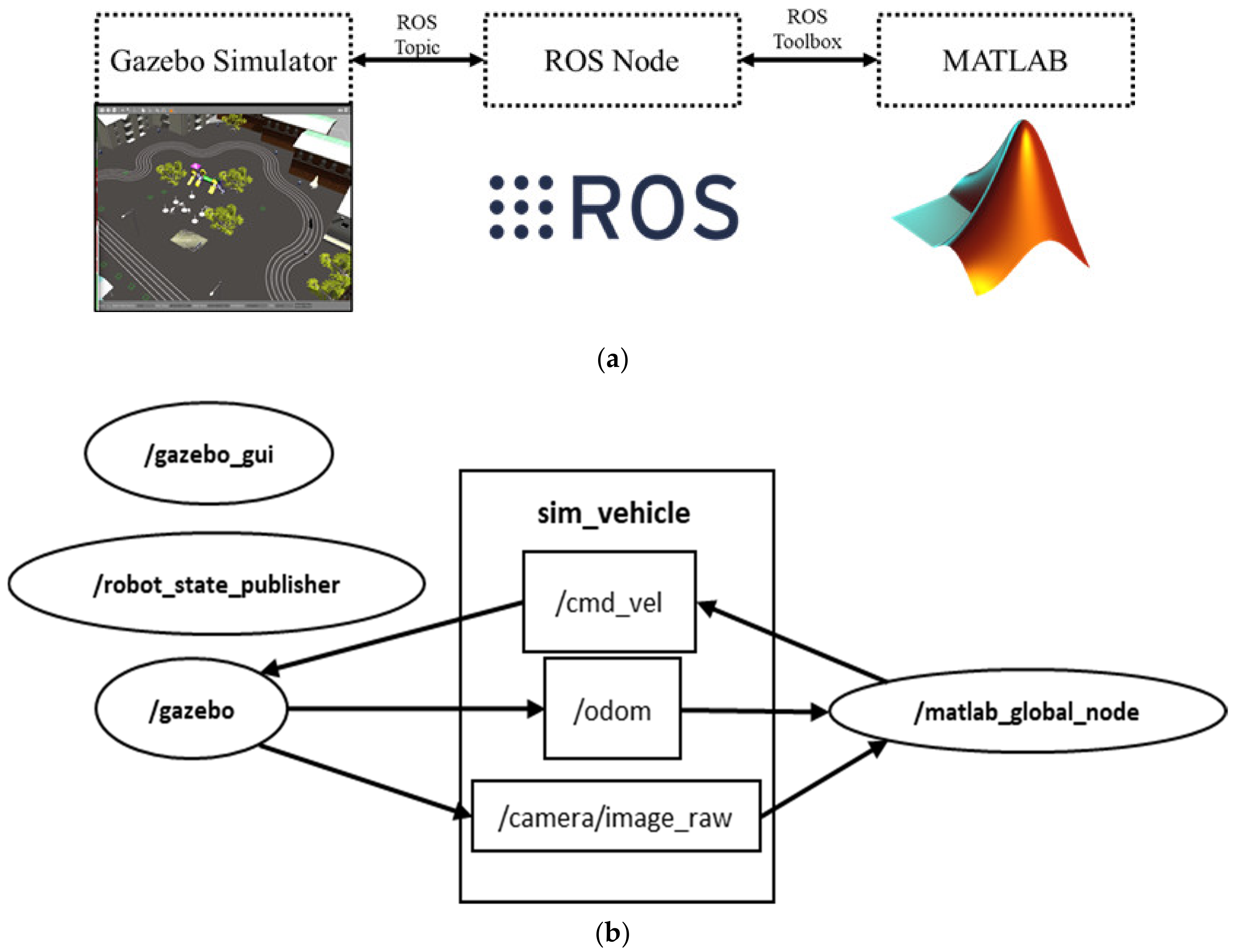

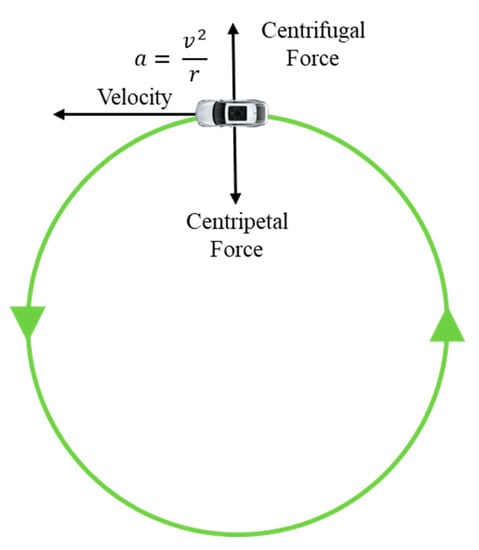

Using this result, we can predict the road turning and, based on the radius value, control the speed of the self-driving car. For example, if the radius is small, the self-driving car needs to reduce speed; if the radius is large, the car can keep the same speed. Using the radius, we can also estimate a suitable velocity based on the centrifugal force, Equation (33), as shown in Figure 10.

Figure 10.

Centrifugal force when a car goes around a curve.
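One way to turn the radius into a speed limit is sketched below. It assumes the standard centrifugal force relation F = m·v²/r and, as an additional assumption that is not taken from the paper, requires that this force not exceed the available tire friction μ·m·g, which gives v ≤ √(μ·g·r). The friction coefficient of 0.7 is an illustrative value and the function names are hypothetical.

```python
import math


def centrifugal_force(mass_kg, speed_mps, radius_m):
    """Centrifugal force F = m * v**2 / r on a car driving around a curve."""
    return mass_kg * speed_mps ** 2 / radius_m


def max_safe_speed(radius_m, friction_coeff=0.7, g=9.81):
    """Largest speed for which m*v**2/r stays below friction_coeff*m*g."""
    if radius_m <= 0:
        raise ValueError("radius must be positive")
    return math.sqrt(friction_coeff * g * radius_m)


# Usage: a tight curve forces a lower speed than a gentle one.
v_tight = max_safe_speed(25.0)    # radius in meters, speed in m/s
v_gentle = max_safe_speed(100.0)
```

Because the bound grows with the square root of the radius, quadrupling the radius only doubles the admissible speed.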

3. Setup for Simulation in 3D Environments and Results

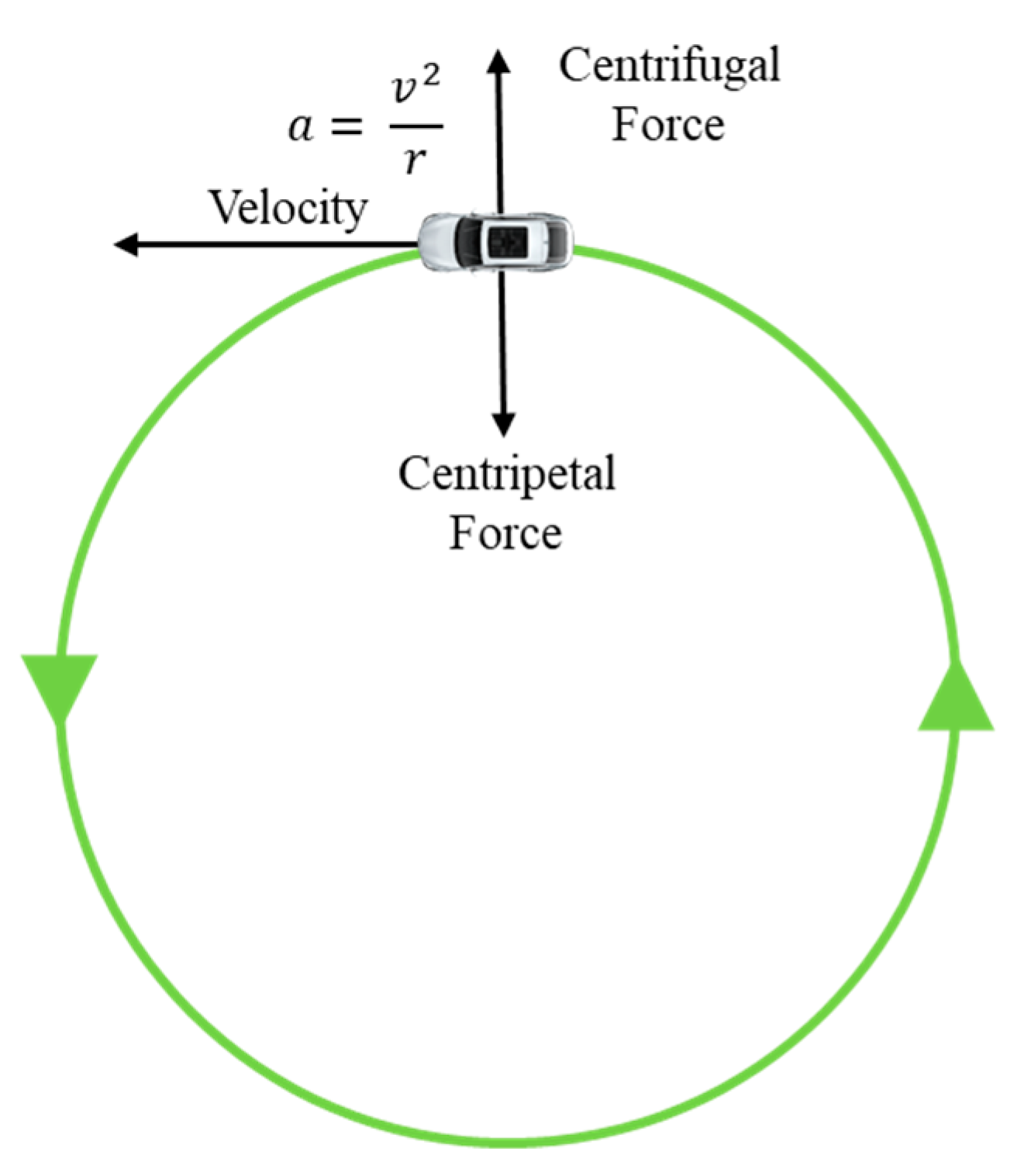

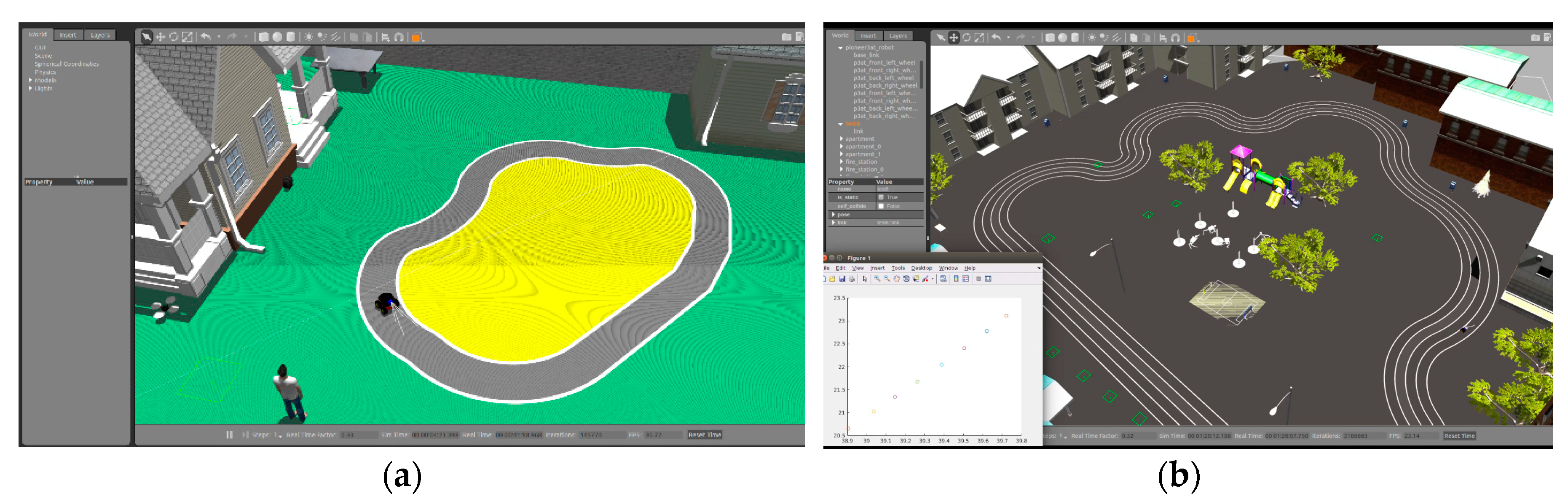

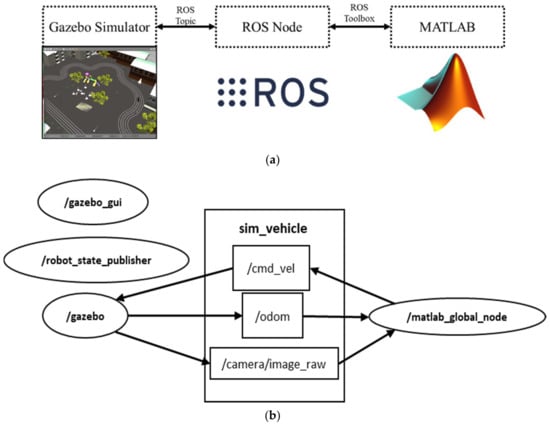

The 3D lane detection environment for simulation is designed using the Gazebo simulator. MATLAB is used for image preprocessing, the lane detection algorithm, and closed-loop lane keeping control. Figure 11a shows the software communication in brief.

Figure 11.

Gazebo-MATLAB Software Communication; (a) Brief Visualization of Software Architecture, (b) RQT Graph.

Figure 11b shows the full connection between nodes and topics in the Gazebo simulator. From the Gazebo GUI, the camera sensor node publishes the image_row topic. The MATLAB node receives the raw RGB image of the lane and processes the frames. Then, the MATLAB node generates the heading angle and sends it to Gazebo using the cmd_vel topic. To obtain the trajectory of the robot, the odom topic is used to plot the position of the vehicle.

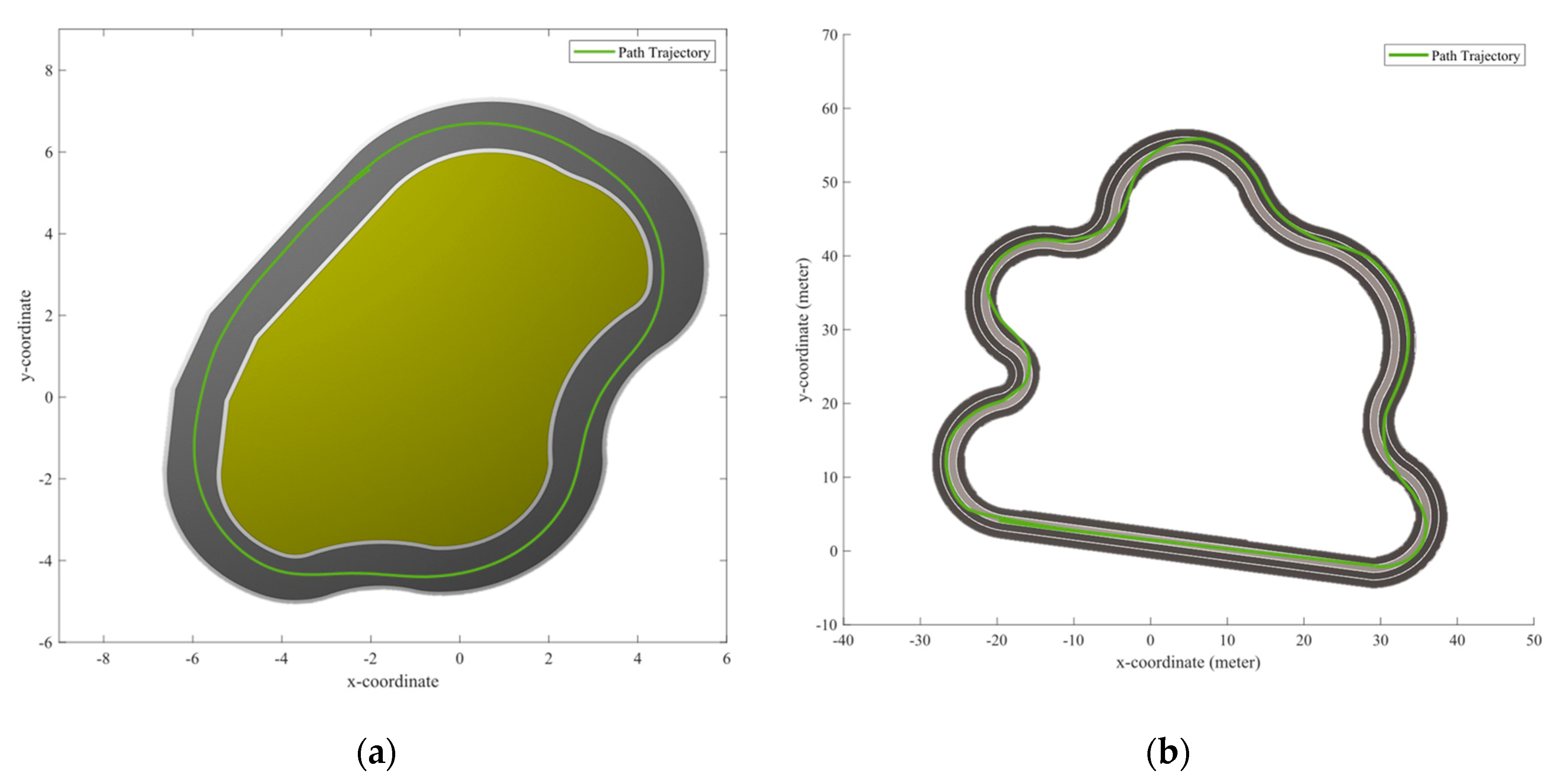

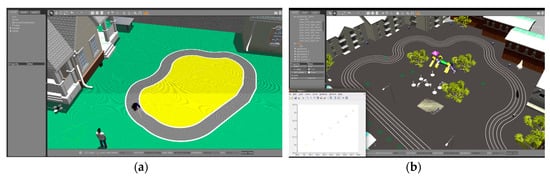

For lane detection and closed-loop lane keeping control, we used two simulated track environments with a Pioneer robot vehicle, as shown in Figure 12. Using the image input from the Gazebo simulator, the algorithm detects the lane and estimates the vehicle’s angular velocity and linear velocity in MATLAB based on the lane detection results. Initial parameters are set according to the simulation environment. For top view transformation, parameters are set as , and to get the binary image of the lane, the auto threshold for each channel is used.

Figure 12.

Gazebo real-time physics engine simulation of the environments; (a) Normal Track Environment, (b) Athletic field Track.

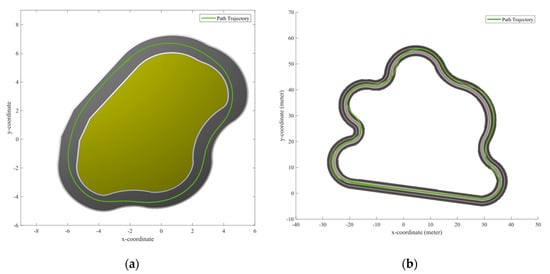

To assess the viability of the introduced algorithms, random noise was added to the real values. We performed the experiment with noisy measurement data of the parabola equation in MATLAB. Figure 12b illustrates the 3D view and map of the athletic field, and Figure 12a illustrates another track environment. The trajectory plot is generated from the odometry data of the robot vehicle and shows that the vehicle follows the curved road, as shown in Figure 13a,b for the environments in Figure 12a,b, respectively.

Figure 13.

Plotted graph of the trajectory path of the ground vehicle; (a) Normal Track Environment, (b) Athletic field Track.

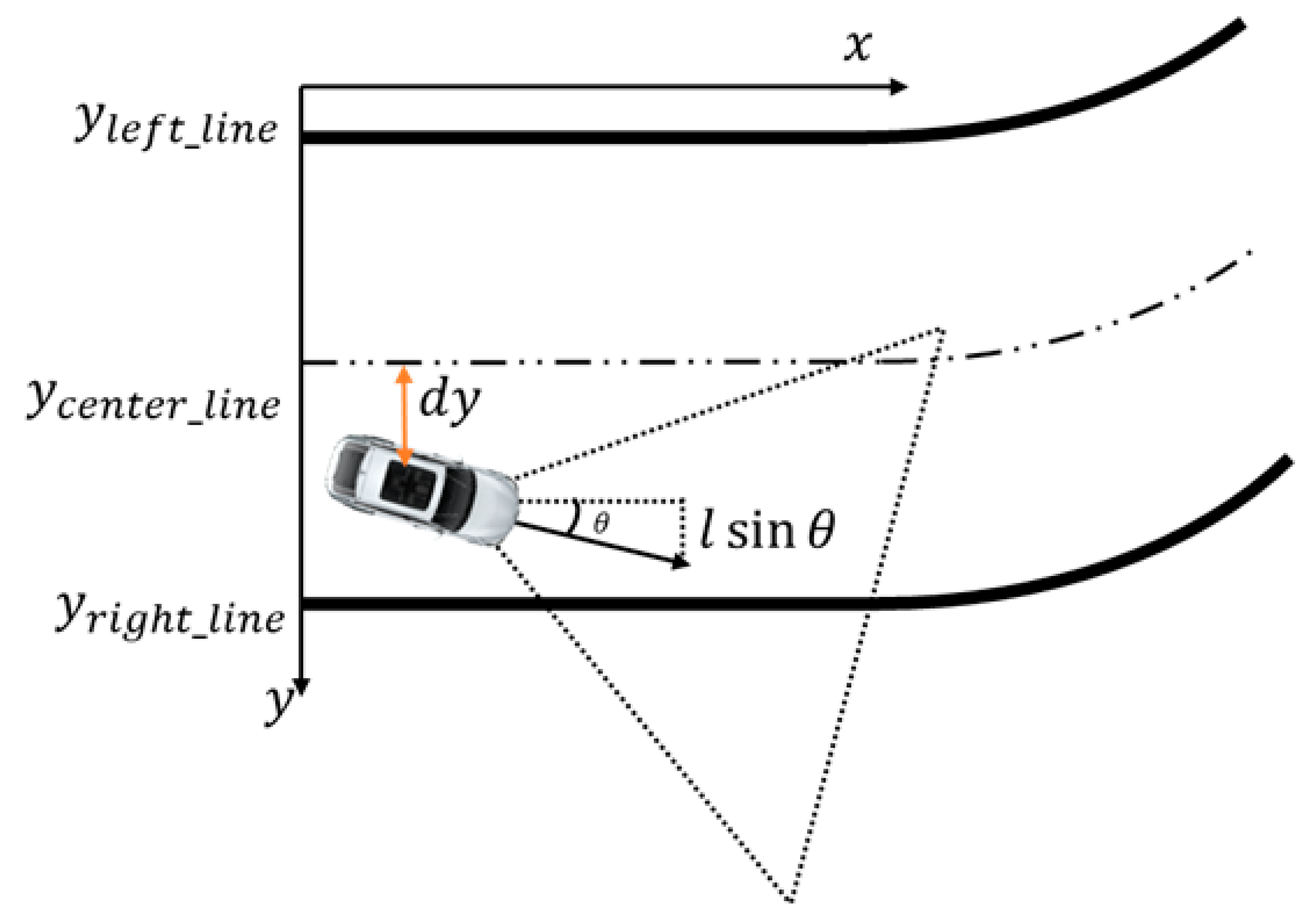

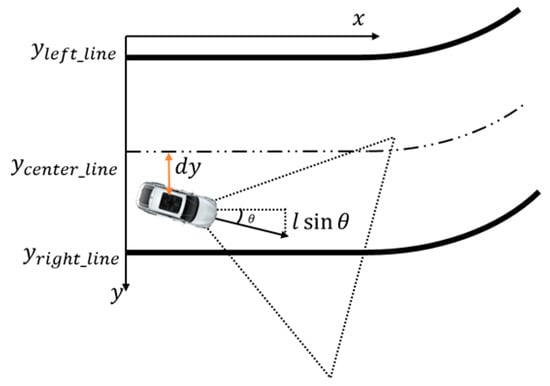

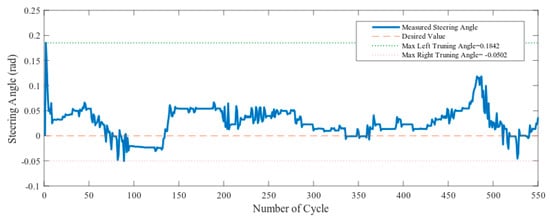

The angular velocity control uses a proportional-integral-differential (PID) controller, which is a control loop feedback mechanism. In PID control, the current output is based on the feedback of the previous output, which is computed to keep the error small. The error is calculated as the difference between the desired and the measured value, which should be as small as possible. Two objectives are executed, keeping the robot driving along the centerline and keeping the robot heading angle, , as shown in Figure 14. The equation can be expressed as from where error term can be written as The steering angle of the car can be estimated using a straight line detection result while also detecting the curve lanes.

Figure 14.

Steering angle calculation.

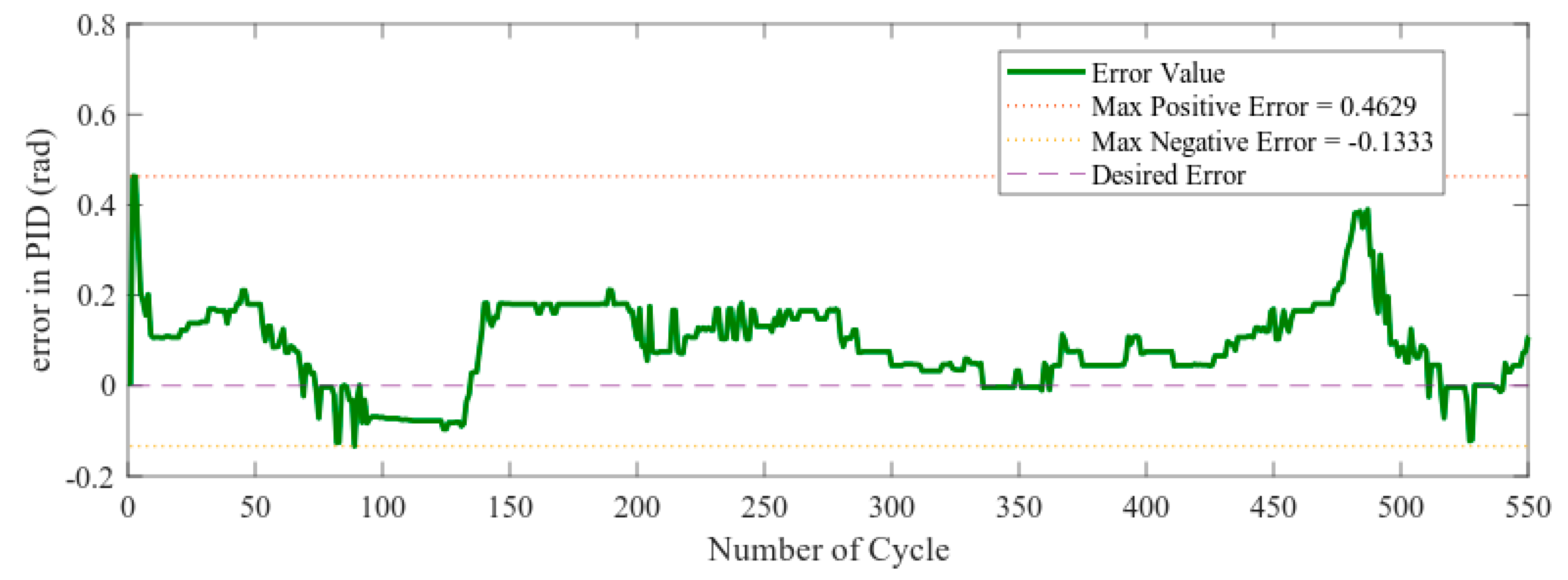

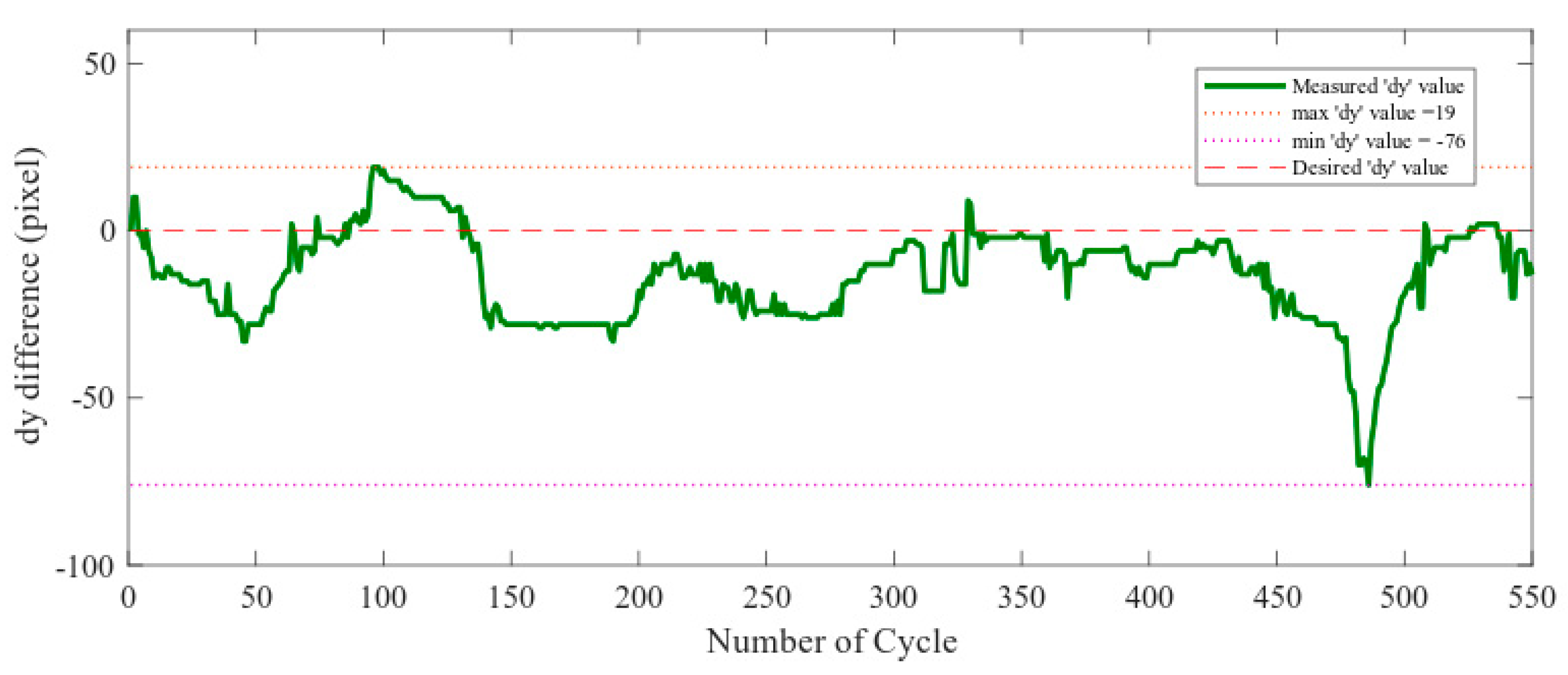

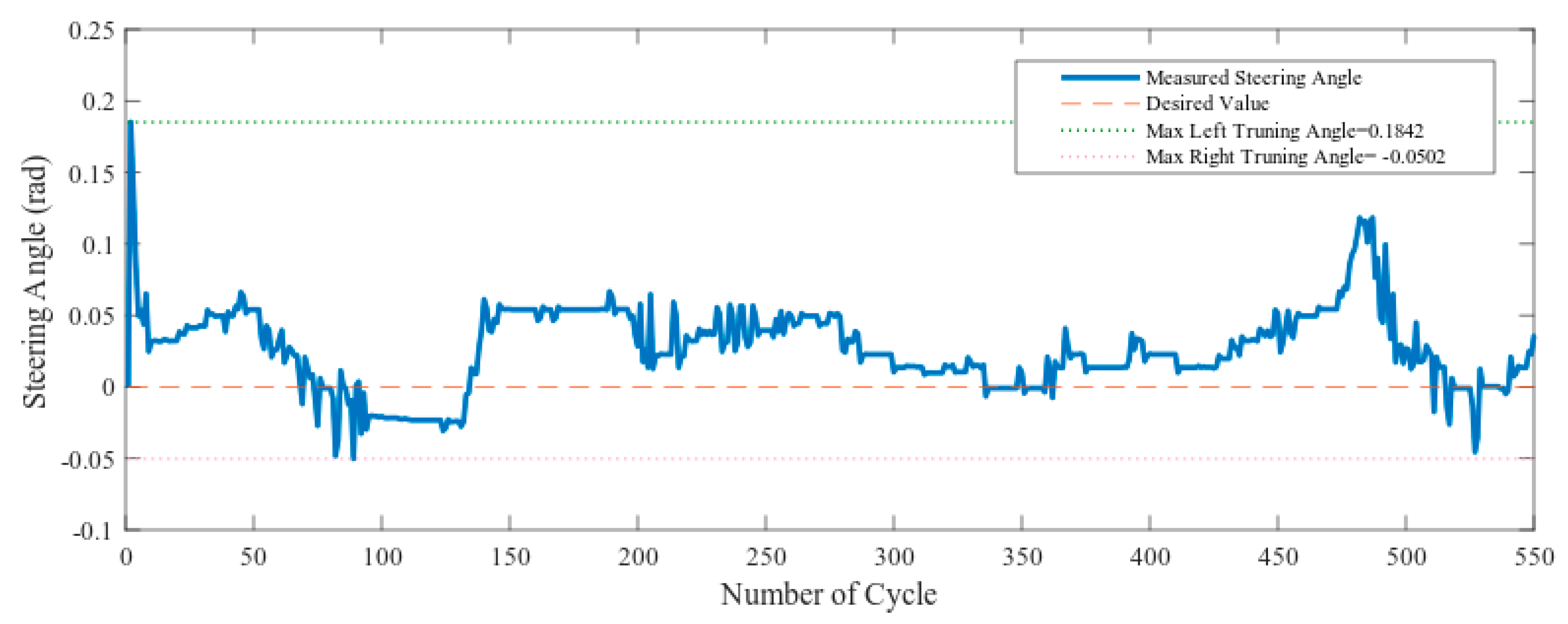

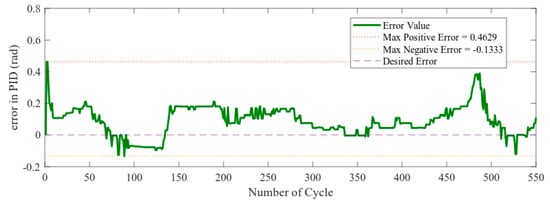

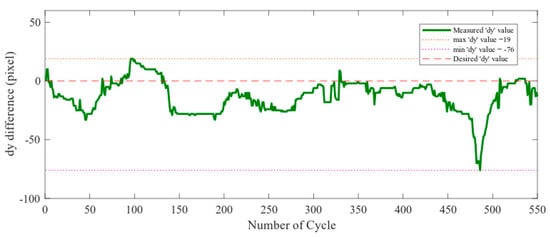

Figure 15 and Figure 16 show the PID error and the difference in pixels, respectively. The steering angle is derived from the arctangent of the centerline of the vehicle. Here, the coefficient of the P-term is 0.3 and the coefficient of the D-term is 0.1. Figure 17 shows the steering angle in the simulation experiment in scenario one. Figure 15 shows that the error is positive most of the time. As a result, the steering angles in Figure 17 are positive most of the time, which means steering left. All the figures below are results of the simulation on the map of Figure 13a. The vehicle drove the track counter-clockwise, so to stay in the center of the lane it needs to steer left; this is why the error values were greater than zero during most of the simulation. The portions where the error value is less than zero indicate steering right, and also indicate that the road curves to the right at that time. The spike at about the 480th cycle indicates that the value becomes high at that moment. In order to reduce the value and bring the vehicle back close to the center line, the error value is increased. Therefore, the steering angle to the left was higher than its average value there.
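The feedback law with the two reported coefficients can be sketched as a proportional-derivative controller. This is an illustrative sketch, not the authors' MATLAB controller: the error is assumed to be a single scalar that is positive when the vehicle must steer left, the time step `dt` is an assumed constant, and no integral term is used because only P and D coefficients are reported. The class name is hypothetical.

```python
class PDController:
    """Proportional-derivative controller for the steering command."""

    def __init__(self, kp=0.3, kd=0.1):
        self.kp = kp              # P-term coefficient reported in the text
        self.kd = kd              # D-term coefficient reported in the text
        self.prev_error = None    # no derivative on the very first call

    def update(self, error, dt=1.0):
        """Return the steering command for the current error sample."""
        if self.prev_error is None:
            derivative = 0.0
        else:
            derivative = (error - self.prev_error) / dt
        self.prev_error = error
        return self.kp * error + self.kd * derivative


# Usage: a constant positive error, then a sudden increase (a spike).
controller = PDController()
commands = [controller.update(e) for e in (1.0, 1.0, 2.0)]
```

The derivative term is what makes the command jump when the error changes abruptly, which matches the higher-than-average steering angle seen around a spike in the error.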

Figure 15.

Error term for proportional-integral-differential (PID) control.

Figure 16.

Distance between robot position and center-line (lateral error).

Figure 17.

Steering angle output from the vehicle in the simulator.

4. Experimental Results for the Curve Lane Detection

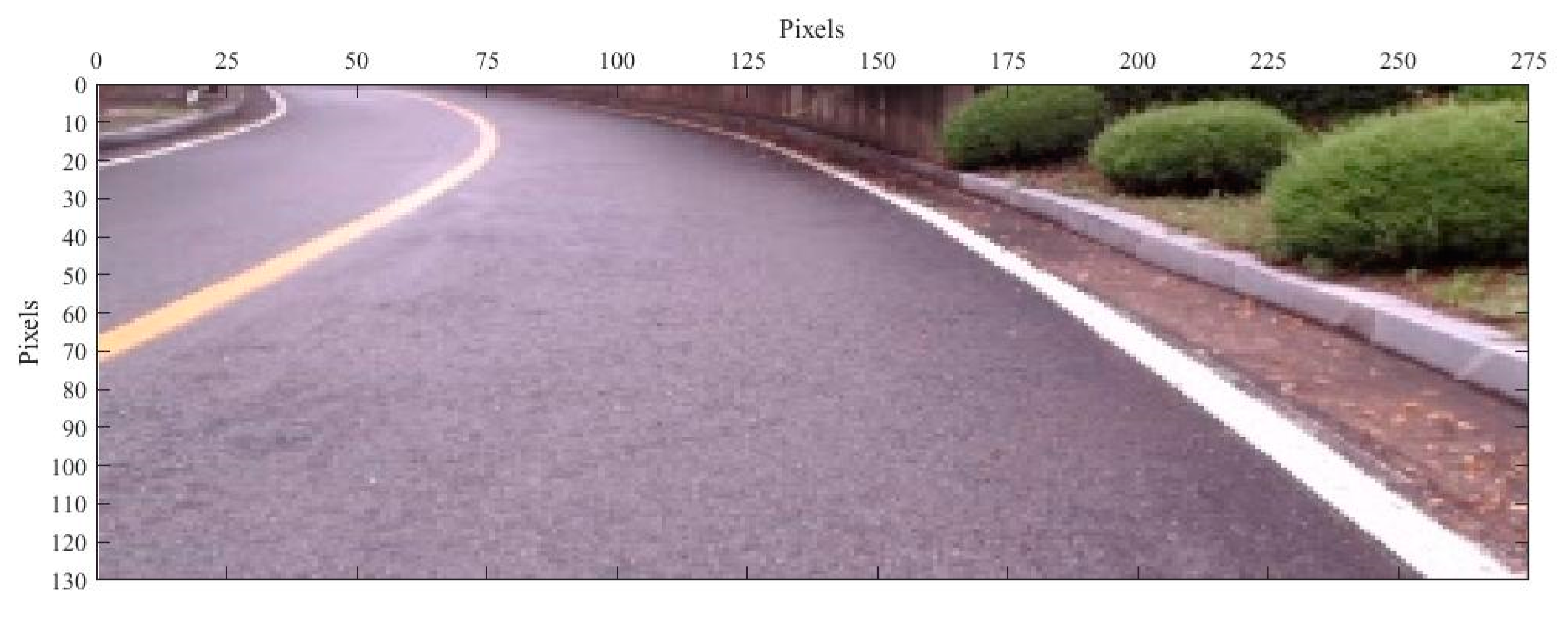

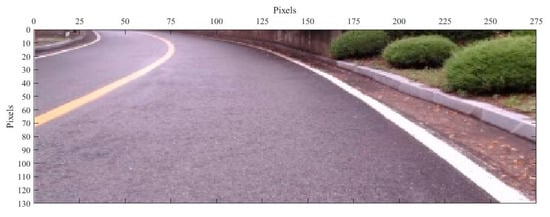

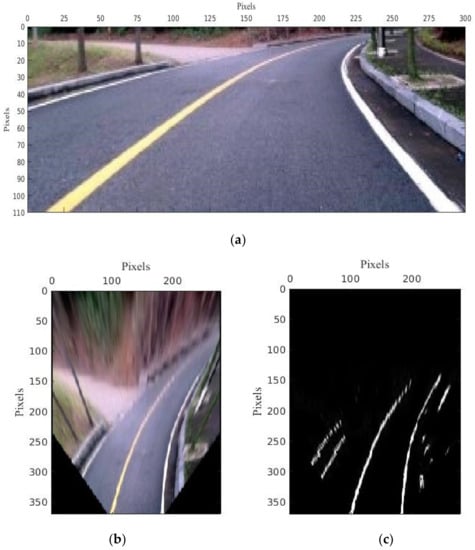

Figure 18 shows an image output from MATLAB in the pixel coordinate system used in the algorithm. The image frame from the center camera is converted to a pixel coordinate system for convenience.

Figure 18.

Front-view camera image in pixel coordinate system.

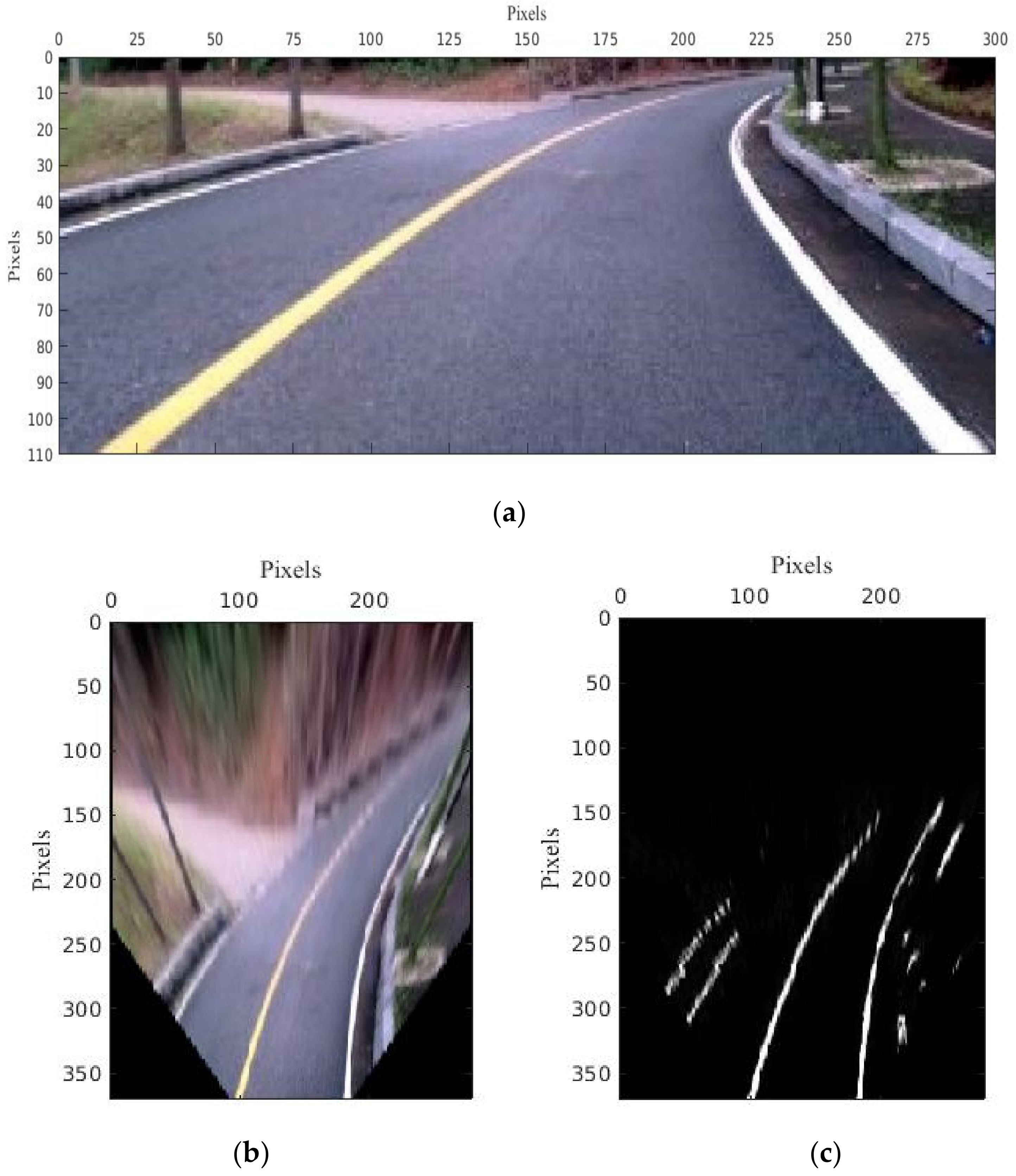

Results of the auto threshold by Otsu are shown in Figure 19b from the output of the Figure 20a. For manual threshold are used. are obtained from here from auto threshold values. For top view transformation, parameters are set as .

Figure 19.

Manual and Otsu threshold from the athletic track lane image.

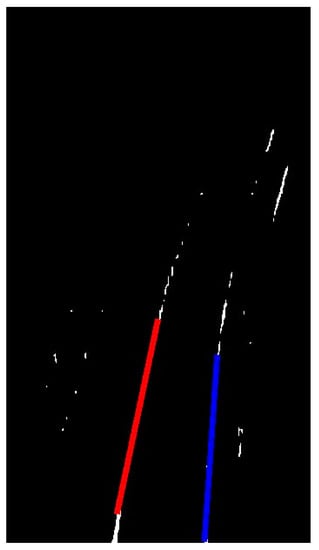

Figure 20.

Top view transformed image; (a) Real image for top view transformation, (b) Top view, (c) Otsu Threshold.

The auto threshold results in Figure 19b are clearer than the manual threshold results in Figure 19a. The auto threshold can also be robust in outdoor experiments. The top view transformation converts pixels in the image plane to world-coordinate metric units, as shown in Figure 20.

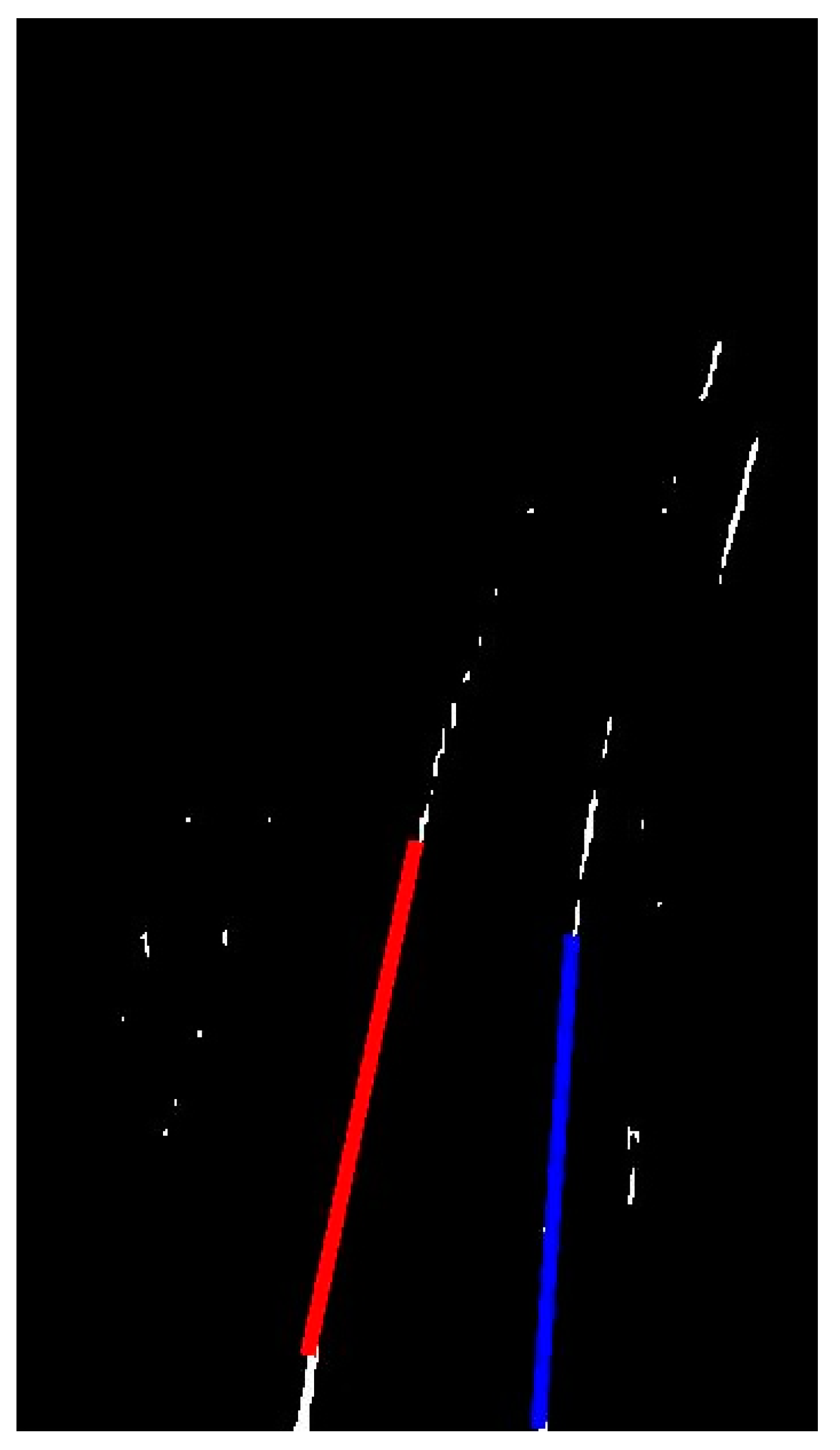

The Hough transform generates lines that should be almost parallel, as shown in Figure 21. The section in which the curve lane is tracked starts at the end points of these two longest straight lines.

Figure 21.

Hough transform result (the longest two lines).

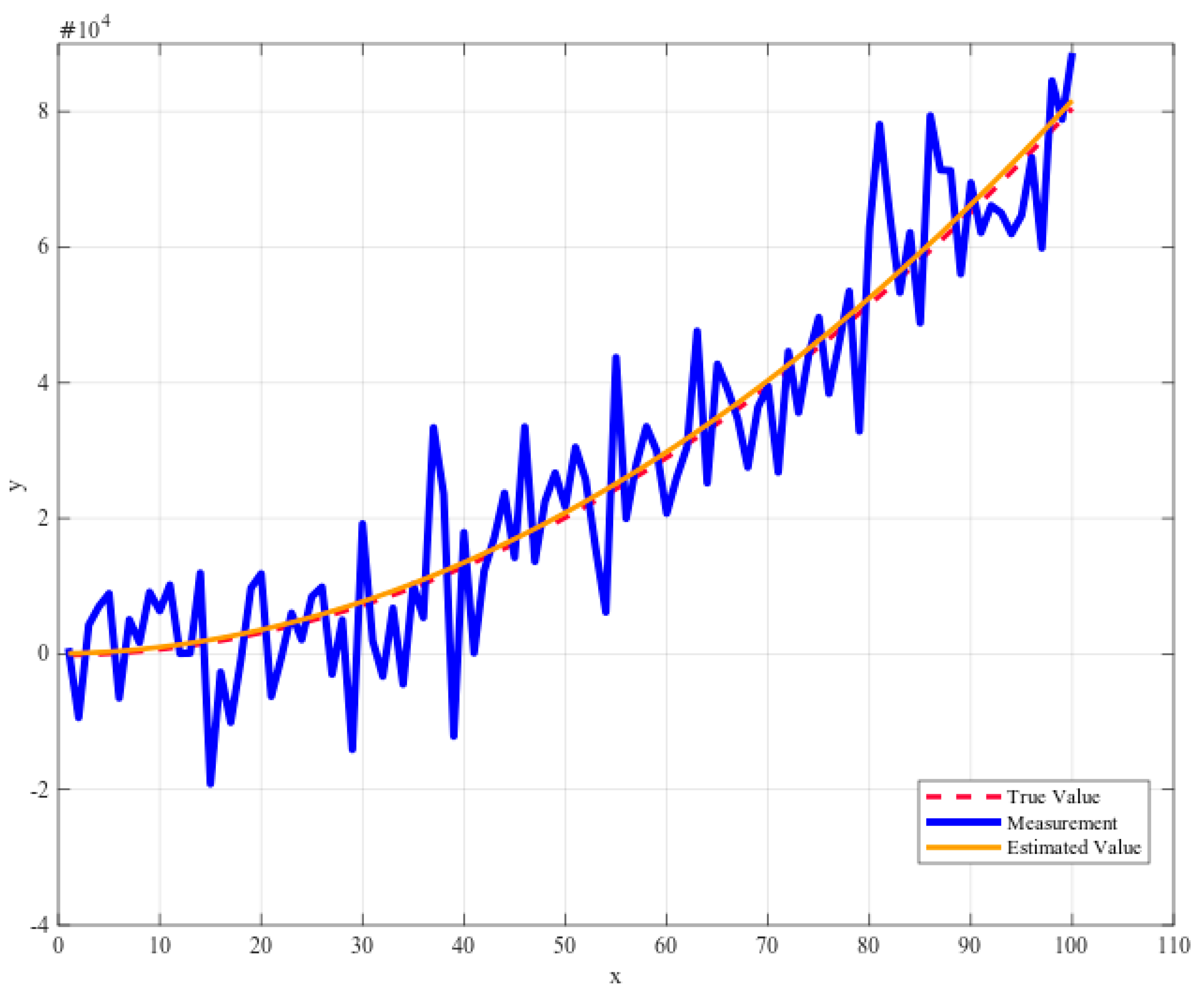

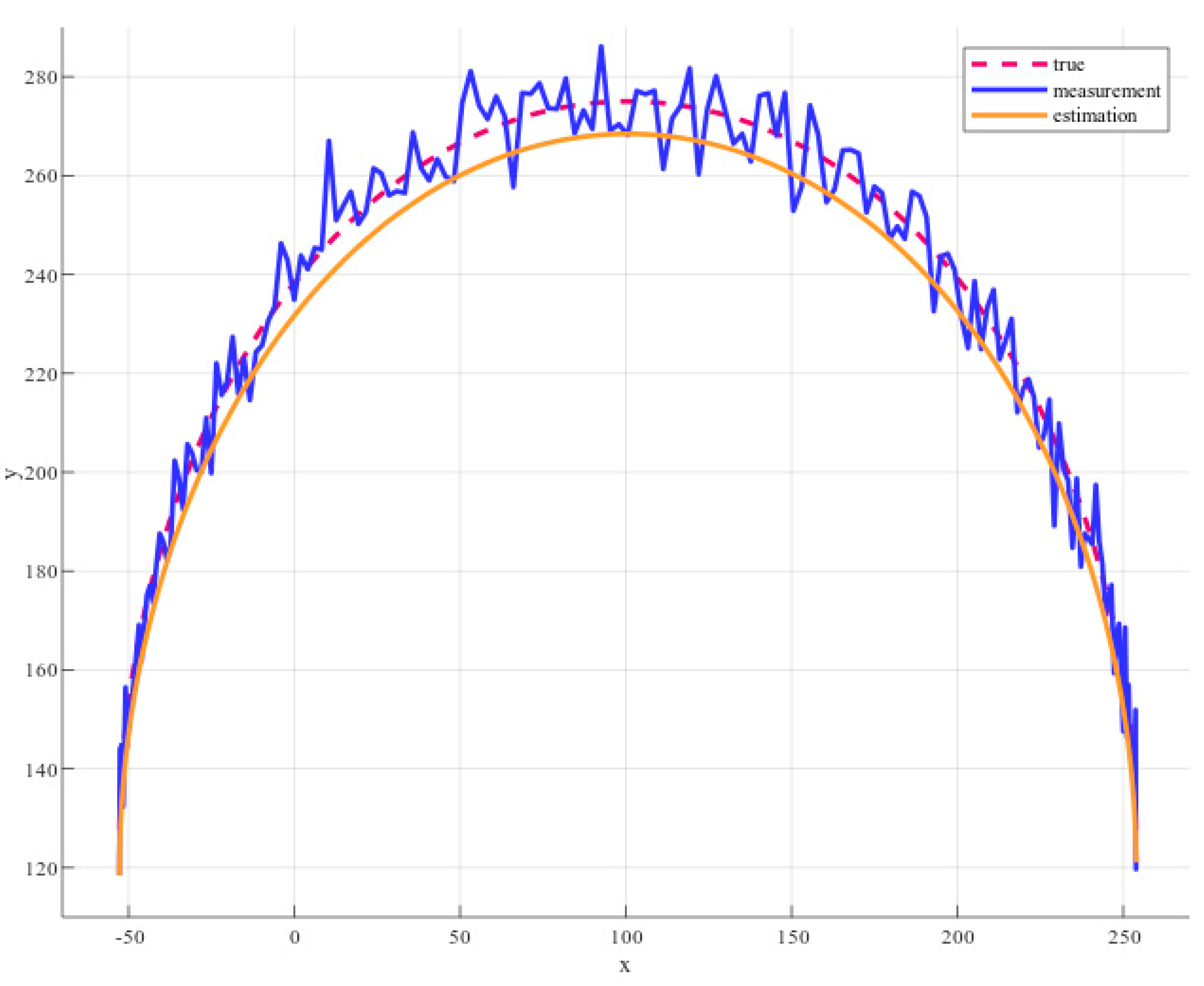

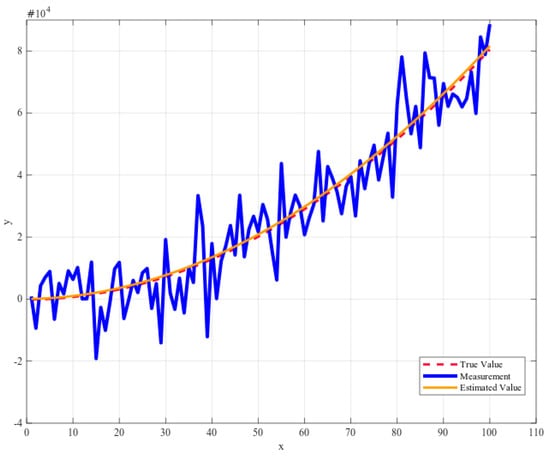

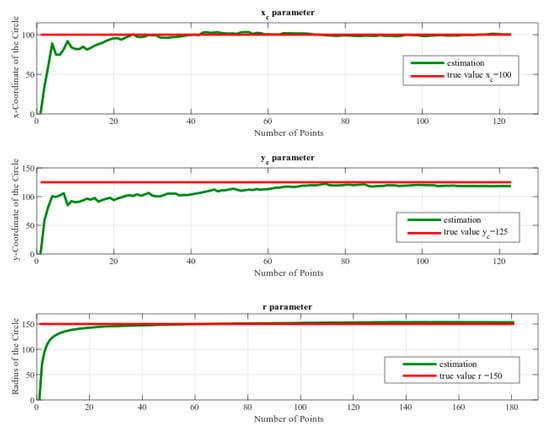

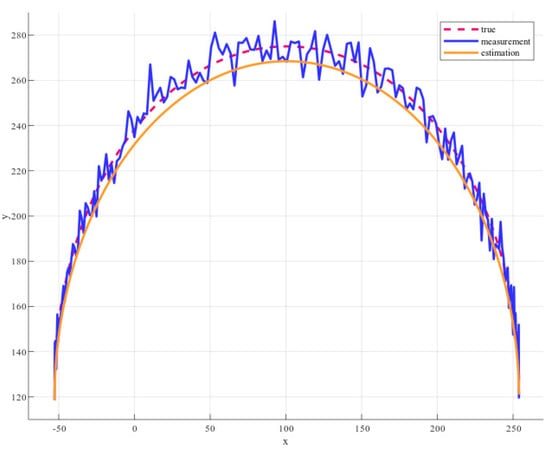

To evaluate the effectiveness of the proposed algorithms, we tested them with noisy measurement data of the parabola equation in MATLAB. The noisy data are created by adding random values, generated using MATLAB's random function, to the true values. The measurement series, marked in blue in Figure 22, is the combination of the true value and the noise. Our Kalman filter can estimate the parameters of the parabola equation from noisy data. Figure 22 presents a comparison between the measured values, the estimated values, and the real values.

Figure 22.

Comparison between measured value (Blue), KF estimation (Orange), and real value (Red) of parabola detection.

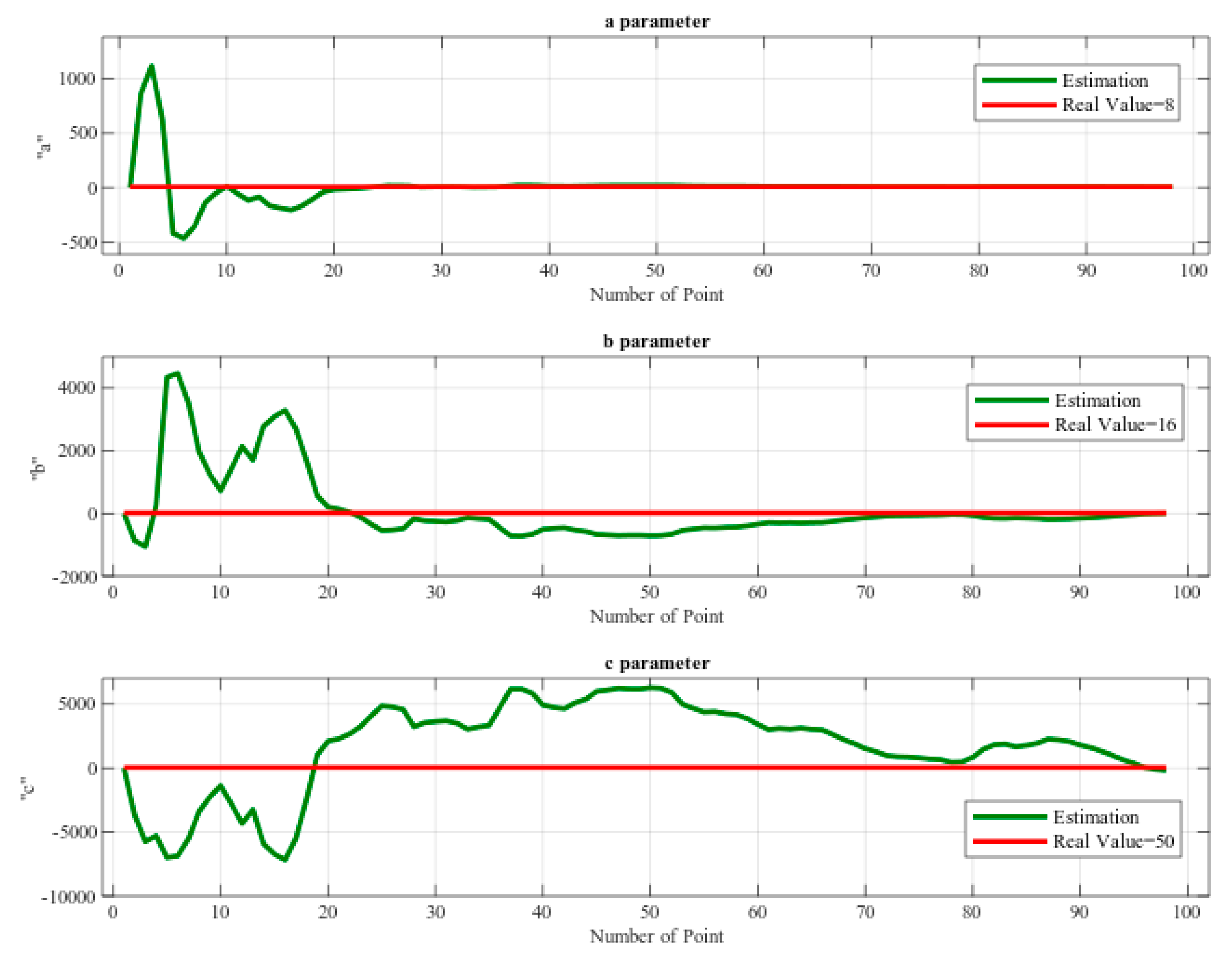

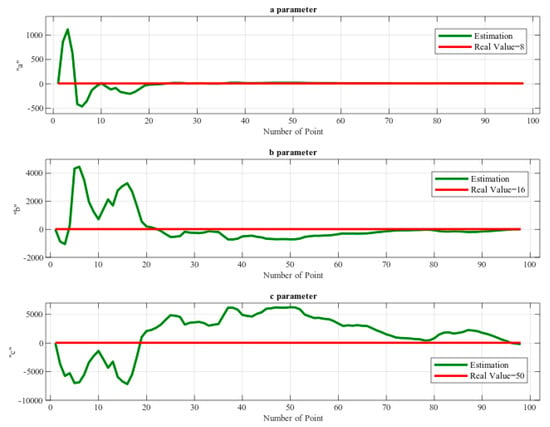

The graphs in Figure 23 illustrate the estimation results of the parameters. At the end of the process, the estimates approach the true values. In this simulation, the “a” parameter’s true value is 8 and the estimated value is 7.4079, the “b” parameter’s true value is 16 and the estimated value is 22.4366, and the “c” parameter’s true value is 50 and the estimated value is 37.115. Relative to the noise level, the difference between the estimated values and the true values is small. It is therefore possible to apply the proposed algorithms to the processed image to perform curve lane detection.

Figure 23.

The simulation result of predicting ‘a’, ‘b’ and ‘c’ parameters from the parabolic detection.

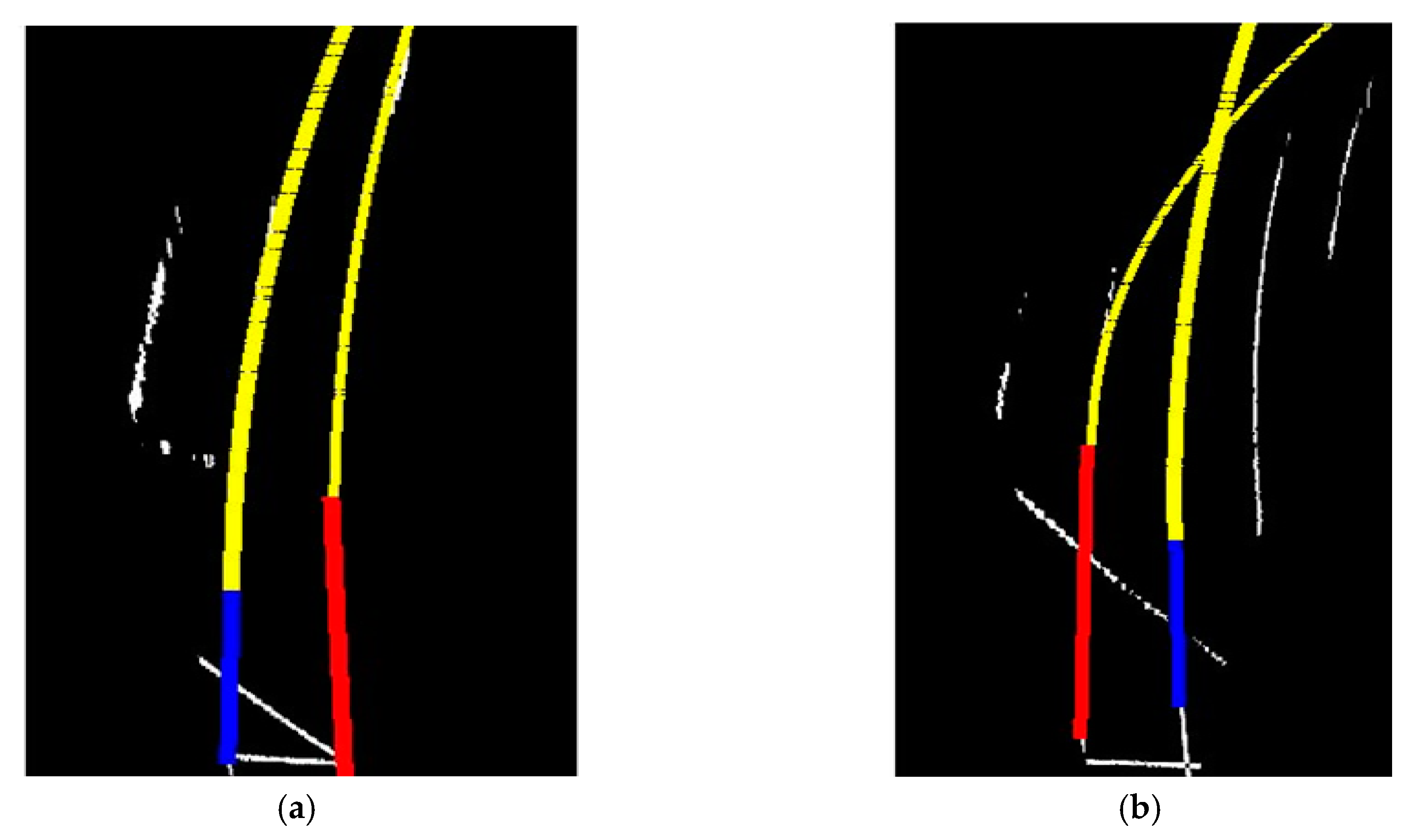

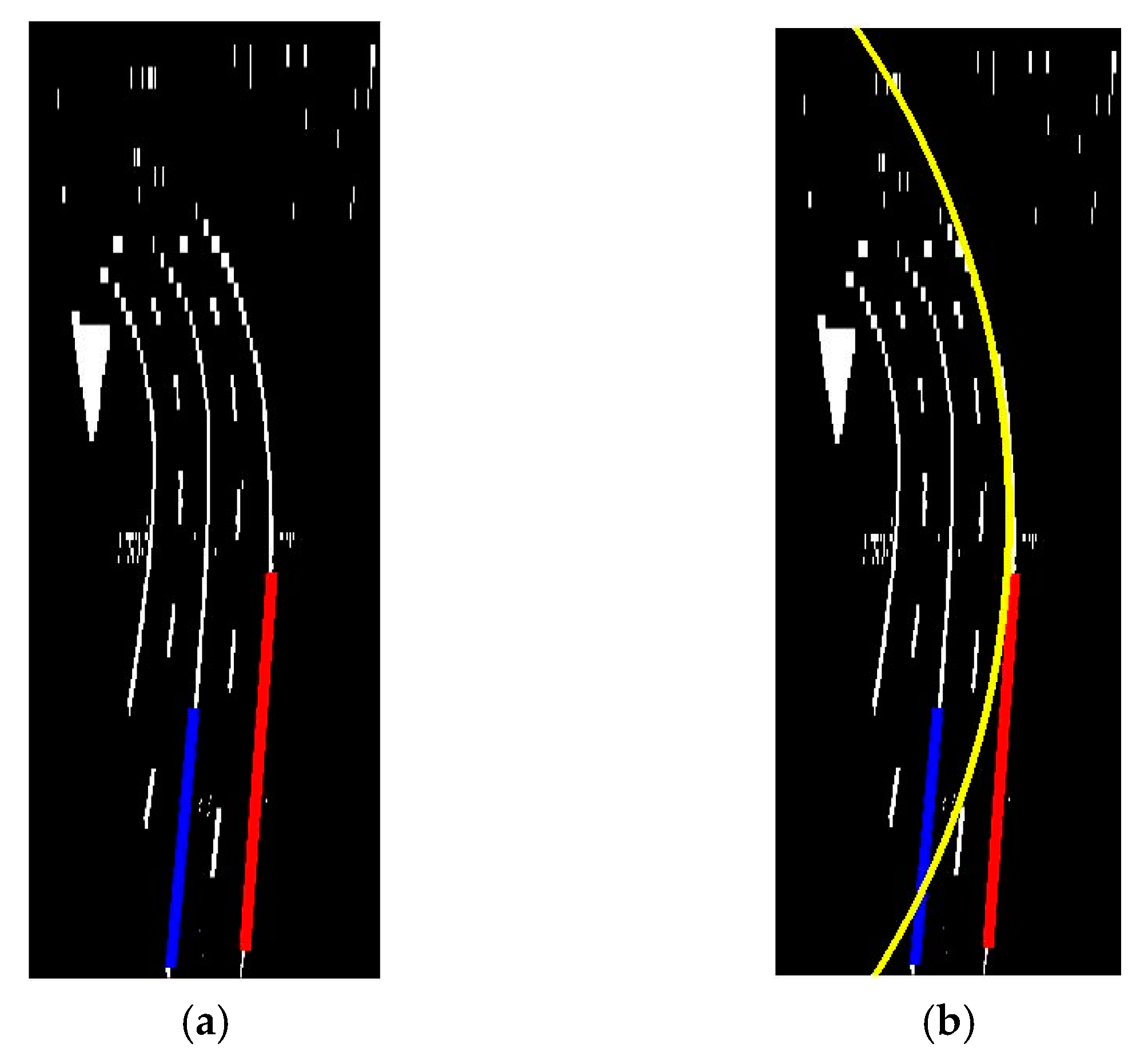

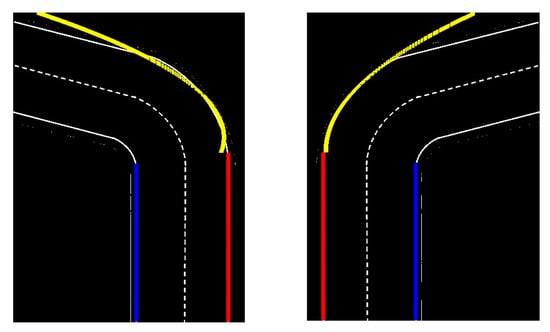

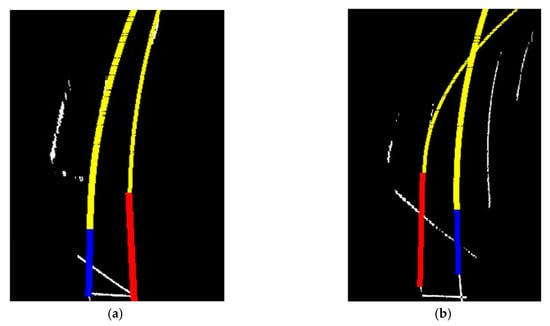

Figure 24 presents the real experimental results of the curve line detection based on the Kalman filter, where the yellow line is the result of our algorithm and estimation results of first line , , c, and estimation results of second line , , c in the first experiment image of the road. In the second experiment image of the road, estimation results of first line , , c , estimation results of the second line , , c . The simulation result of circle detection is shown in Figure 25. Figure 26 shows a comparison between the measured values, the estimated values, and the true values of circle detection. The proposed algorithm also has some shortcomings. For example, the right-hand side of Figure 24 shows the effect of shadow reflection from the left side of the lane. The reflection produces brightness in the lane image, causing a slight change in the detection. Further research is needed to address this.

Figure 24.

The real experiment result of the curve lane detection based on Kalman filter (yellow); (a) Output Result of Parabolic Curve Detection, (b) Result affected by shadow reflection.

Figure 25.

The simulation result of predicting ‘xc’, ‘yc’ and ‘r’ parameters from the circle detection.

Figure 26.

Comparison between measured value (blue), KF estimation (Orange), and true value (Red) of circle detection.

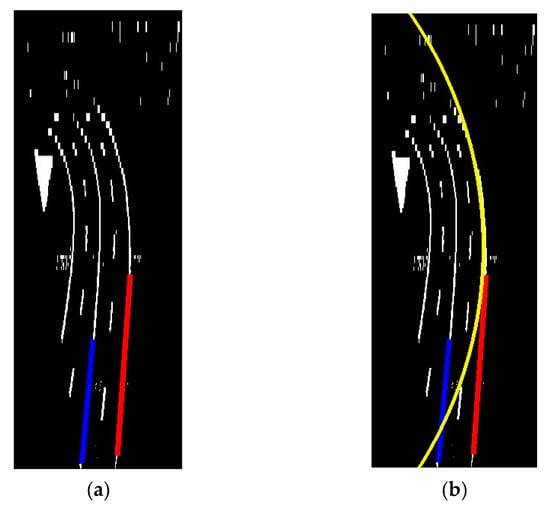

After that, we tested the algorithm on top-view transformed images of different circular real roads. Figure 27a,b illustrates the result of curve line detection using the circle model on a real road image.

Figure 27.

The result of curve lane detection based on circle model and Kalman filter (Yellow); (a) Input Image for Circle Detection, (b) Output Result.

Here, the yellow line is the result of our algorithm and radius , the center of the circle is , . Using this result, we can predict the road turning and, based on the radius value, control the speed of the self-driving car. For example, if the radius is small, the self-driving car needs to reduce speed; if the radius is large, the car can keep the same speed.

5. Conclusions

In this paper, a curve line detection algorithm using the Kalman filter is presented. The algorithm is split into two sections:

- (1) Image pre-processing. It contains Otsu’s threshold method, the top view image transform to create a top-view image of the road, and a Hough transform to track a straight lane in the near field of view of the camera sensor.

- (2) Curve lane detection. The Kalman filter provides the detection result for curve lanes in the far field of view. This section consists of two different methods: the first is based on the parabola model, and the second is based on the circle model.

The experimental results show that curve lanes can be detected effectively with both the parabola and circle models, even in a very noisy environment. We have also deployed the algorithm in the Gazebo simulation environment to verify its performance. One advantage of the proposed algorithm is its robustness against noise, as it is based on the Kalman filter. The proposed curve lane detection strategy can be applied to self-driving car systems as well as to advanced driver assistance systems. Based on our curve lane detection results, we can predict the road turning and estimate a suitable velocity and angular velocity for the self-driving car. Our proposed algorithm also provides closed-loop lane keeping control to stay in the lane. The experimental results show that the proposed algorithm achieves an average of 10 fps. Even though the algorithm has an auto threshold method to adjust to different light conditions such as low light, further study is needed to detect lanes in conditions such as light reflection, shadows, and worn lane markings. Moreover, unlike CNN-based algorithms, the proposed algorithm does not require a high-performance GPU. The performance is satisfactory on a CPU-based system according to the fps. However, a CNN-based pre-processing step could provide a more efficient result for edge detection.

Author Contributions

Conceptualization, B.D. and D.-J.L.; methodology, B.D. and S.H.; software, S.H. and B.D.; validation, S.H. and B.D.; formal analysis, B.D.; investigation, B.D. and S.H.; resources, D.-J.L.; data curation, D.-J.L.; writing—original draft preparation, B.D. and S.H.; writing—review and editing, S.H.; visualization, S.H. and B.D.; supervision, D.-J.L.; project administration, D.-J.L.; funding acquisition, D.-J.L. All authors have read and agreed to the published version of the manuscript.

Funding

This research was funded and conducted under the Competency Development Program for Industry Specialists of the Korean Ministry of Trade, Industry and Energy (MOTIE), operated by the Korea Institute for Advancement of Technology (KIAT) (No. N0002428, HRD program for Future Car). This research was supported by the Development Program through the National Research Foundation of Korea (NRF) grant funded by the Korea government (MSIT) (No. 2019R1F1A1049711).

Acknowledgments

I (Sabir Hossain) would like to express my thanks and appreciation to my supervisor, Professor Deok-jin Lee, for his direction and support throughout this paper. I would like to thank Byambaa Dorj for his cooperation on this paper. I would also like to express my profound appreciation to all members of the CAIAS (Center for Artificial Intelligence and Autonomous System) lab for their support, and to the CAIAS lab for providing all the facilities that this work required.

Conflicts of Interest

The authors declare no conflict of interest.

References

- Fleetwood, J. Public health, ethics, and autonomous vehicles. Am. J. Public Health 2017, 107, 532–537.

- Green, M. “How Long Does It Take to Stop?” Methodological Analysis of Driver Perception-Brake Times. Transp. Hum. Factors 2000, 2, 195–216.

- Vacek, S.; Schimmel, C.; Dillmann, R. Road-marking analysis for autonomous vehicle guidance. In Proceedings of the European Conference on Mobile Robots, Freiburg, Germany, 19–21 September 2007; pp. 1–6.

- Datta, T.; Mishra, S.K.; Swain, S.K. Real-Time Tracking and Lane Line Detection Technique for an Autonomous Ground Vehicle System. In Proceedings of the International Conference on Intelligent Computing and Smart Communication 2019; Springer: Singapore, 2020; pp. 1609–1625.

- Ballard, D.H. Generalizing the Hough transform to detect arbitrary shapes. Pattern Recognit. 1981, 13, 111–122.

- Illingworth, J.; Kittler, J. A survey of the Hough transform. Comput. Vision, Graph. Image Process. 1988, 44, 87–116.

- He, X.; Duan, Z.; Chen, C.; You, F. Video-based lane detection and tracking during night. In CICTP 2019: Transportation in China—Connecting the World—Proceedings of the 19th COTA International Conference of Transportation Professionals; ASCE: Reston, VA, USA, 2019; pp. 5794–5807. ISBN 9780784482292.

- Mehrotra, R.; Namuduri, K.R.; Ranganathan, N. Gabor filter-based edge detection. Pattern Recognit. 1992, 25, 1479–1494.

- Welch, G.; Bishop, G. An Introduction to the Kalman Filter. In Pract. 2006, 7, 1–16.

- Yang, S.; Wu, J.; Shan, Y.; Yu, Y.; Zhang, S. A Novel Vision-Based Framework for Real-Time Lane Detection and Tracking; SAE Technical Paper; SAE: Warrendale, PA, USA, 2019; Volume 2019.

- Ronneberger, O.; Fischer, P.; Brox, T. U-net: Convolutional networks for biomedical image segmentation. In Lecture Notes in Computer Science, Proceedings of the International Conference on Medical Image Computing and Computer-Assisted Intervention, Munich, Germany, 5–9 October 2015; Springer: Cham, Switzerland, 2015; Volume 9351, pp. 234–241.

- Son, Y.; Lee, E.S.; Kum, D. Robust multi-lane detection and tracking using adaptive threshold and lane classification. Mach. Vis. Appl. 2019, 30, 111–124.

- Borkar, A.; Hayes, M.; Smith, M.T. Robust lane detection and tracking with Ransac and Kalman filter. In Proceedings of the International Conference on Image Processing, ICIP, Cairo, Egypt, 7–10 November 2009; pp. 3261–3264.

- Jiang, L.; Li, J.; Ai, W. Lane Line Detection Optimization Algorithm based on Improved Hough Transform and R-least Squares with Dual Removal. In Proceedings of the 2019 IEEE 4th Advanced Information Technology, Electronic and Automation Control Conference (IAEAC), Chengdu, China, 20–22 December 2019; Volume 1, pp. 186–190.

- Chen, C.; Tang, L.; Wang, Y.; Qian, Q. Study of the Lane Recognition in Haze Based on Kalman Filter. In Proceedings of the 2019 International Conference on Artificial Intelligence and Advanced Manufacturing (AIAM), Dublin, Ireland, 16–18 October 2019; pp. 479–483.

- Wang, H.; Wang, Y.; Zhao, X.; Wang, G.; Huang, H.; Zhang, J. Lane Detection of Curving Road for Structural Highway with Straight-Curve Model on Vision. IEEE Trans. Veh. Technol. 2019, 68, 5321–5330.

- Salarpour, A.; Salarpour, A.; Fathi, M.; Dezfoulian, M. Vehicle Tracking Using Kalman Filter and Features. Signal Image Process. Int. J. 2011, 2, 1–8.

- Otsu, N. A threshold selection method from gray-level histograms. IEEE Trans. Syst. Man Cybern. 1979, 9, 62–66.

- Yuan, X.; Martínez, J.F.; Eckert, M.; López-Santidrián, L. An improved Otsu threshold segmentation method for underwater simultaneous localization and mapping-based navigation. Sensors 2016, 16, 1148.

- Dorj, B.; Lee, D.J. A Precise Lane Detection Algorithm Based on Top View Image Transformation and Least-Square Approaches. J. Sens. 2016, 2016, 4058093.

- Aly, M. Real time detection of lane markers in urban streets. In Proceedings of the IEEE Intelligent Vehicles Symposium, Eindhoven, The Netherlands, 4–6 June 2008; pp. 7–12.

- Tseng, C.-C.; Cheng, H.-Y.; Jeng, B.-S. A Lane Detection Algorithm Using Geometry Information and Modified Hough Transform. In Proceedings of the 18th IPPR Conference on Computer Vision, Graphics and Image Processing, Taipei, Taiwan, 21–23 August 2005; Volume 1, pp. 796–802.

- Jung, C.R.; Kelber, C.R. A lane departure warning system based on a linear-parabolic lane model. In Proceedings of the IEEE Intelligent Vehicles Symposium, Parma, Italy, 14–17 June 2004; pp. 891–895.

- Luo, L.; Xu, D.; Zhang, Z.; Zhang, J.; Qu, W. A fast and robust circle detection method using perpendicular bisector of chords. In Proceedings of the 2013 25th Chinese Control and Decision Conference, CCDC 2013, Guiyang, China, 25–27 May 2013; pp. 2856–2860.

- Lim, K.H.; Seng, K.P.; Ang, L.-M.; Chin, S.W. Lane detection and Kalman-based linear-parabolic lane tracking. In Proceedings of the 2009 International Conference on Intelligent Human-Machine Systems and Cybernetics, IHMSC’09, Hangzhou, China, 26–27 August 2009; Volume 2, pp. 351–354.

- Dorj, B.; Tuvshinjargal, D.; Chong, K.; Hong, D.P.; Lee, D.J. Multi-sensor fusion based effective obstacle avoidance and path-following technology. Adv. Sci. Lett. 2014, 20, 1751–1756.

- Assidiq, A.A.M.; Khalifa, O.O.; Islam, M.R.; Khan, S. Real time lane detection for autonomous vehicles. In Proceedings of the International Conference on Computer and Communication Engineering 2008, ICCCE08: Global Links for Human Development, Kuala Lumpur, Malaysia, 13–15 May 2008; pp. 82–88.

- Sehestedt, S.; Kodagoda, S. Efficient Lane Detection and Tracking in Urban Environments. In Proceedings of the European Conference on Mobile Robots (ECMR), Freiburg, Germany, 19–21 September 2007; pp. 1–6.

- Lim, K.H.; Seng, K.P.; Ang, L.-M. River flow lane detection and Kalman filtering-based B-spline lane tracking. Int. J. Veh. Technol. 2012, 2012, 465819.

- Yonghong, X.; Qiang, J. A new efficient ellipse detection method. In Proceedings of the Object Recognition Supported by User Interaction for Service Robots, Quebec City, QC, Canada, 11–15 August 2002; Volume 16, pp. 957–960.

- Hoang, T.M.; Hong, H.G.; Vokhidov, H.; Park, K.R. Road lane detection by discriminating dashed and solid road lanes using a visible light camera sensor. Sensors 2016, 16, 1313.

- Mu, C.; Ma, X. Lane Detection Based on Object Segmentation and Piecewise Fitting. Telkomnika Indones. J. Electr. Eng. 2014, 12, 3491–3500.

- Seo, Y.; Rajkumar, R.R. Use of a Monocular Camera to Analyze a Ground Vehicle’s Lateral Movements for Reliable Autonomous City Driving. In Proceedings of the IEEE IROS Workshop on Planning, Perception and Navigation for Intelligent Vehicles, Tokyo, Japan, 3–7 November 2013; pp. 197–203.

- Olson, C.F. Constrained Hough Transforms for Curve Detection. Comput. Vis. Image Underst. 1999, 73, 329–345.

- Dorj, B. Top-view Image Transformation Based Precise Lane Detection Techniques and Embedded Control for an Autonomous Self-Driving Vehicle. Ph.D. Thesis, Kunsan National University, Kunsan, Korea, 2017.

- Wang, H.; Liu, N. Design and recognition of a ring code for AGV localization. In Proceedings of the 2003 Joint Conference of the 4th International Conference on Information, Communications and Signal Processing and 4th Pacific-Rim Conference on Multimedia (ICICS-PCM 2003), Singapore, 15–18 December 2003; Volume 1, pp. 532–536.

© 2020 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).