License Plate Image Generation using Generative Adversarial Networks for End-To-End License Plate Character Recognition from a Small Set of Real Images

Abstract

1. Introduction

2. Related Works

2.1. Image-to-Image Translation

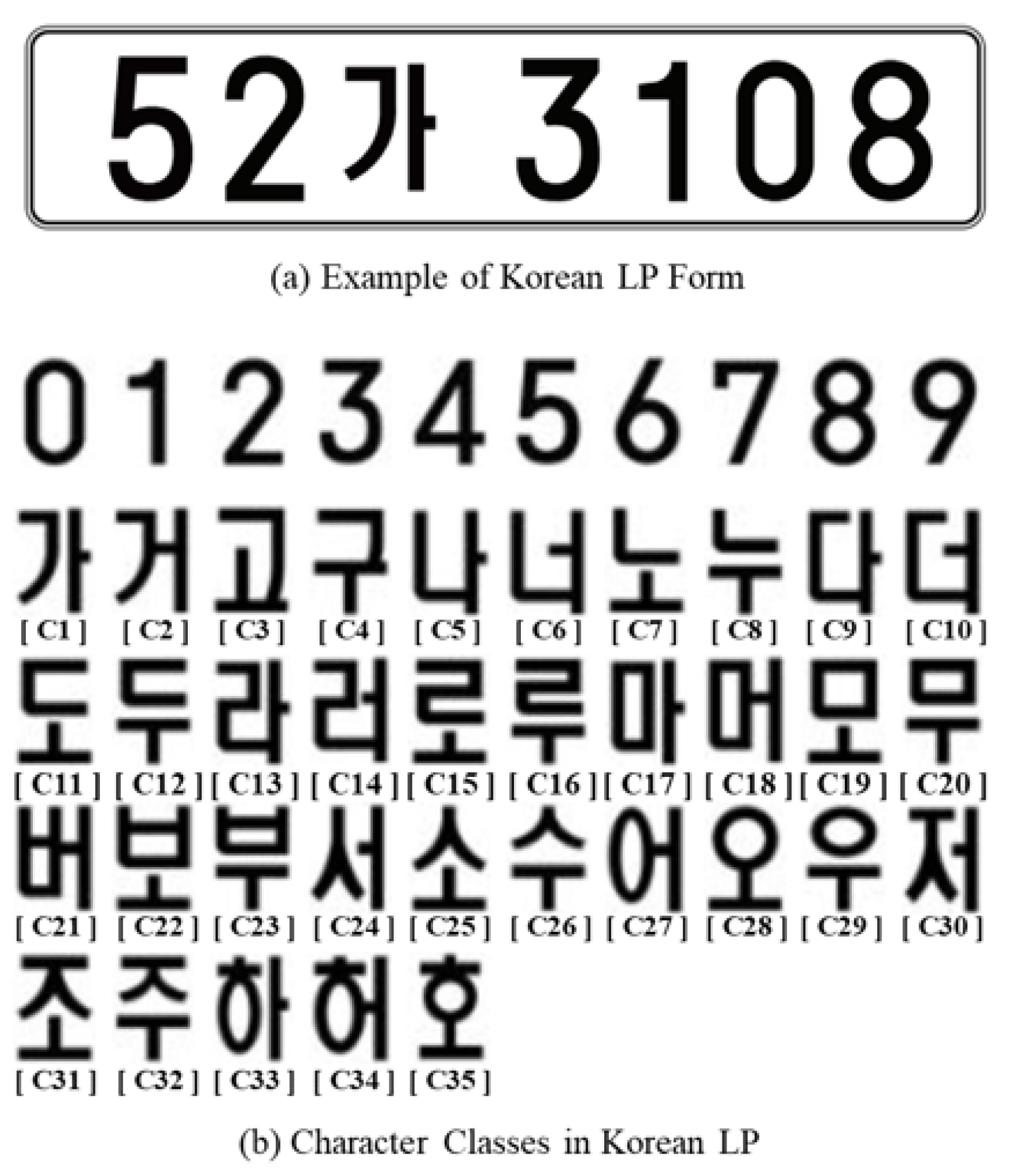

2.2. Automatic License Plate Recognition

3. License Plate Image Generation Via LP-GAN

3.1. GAN Approaches

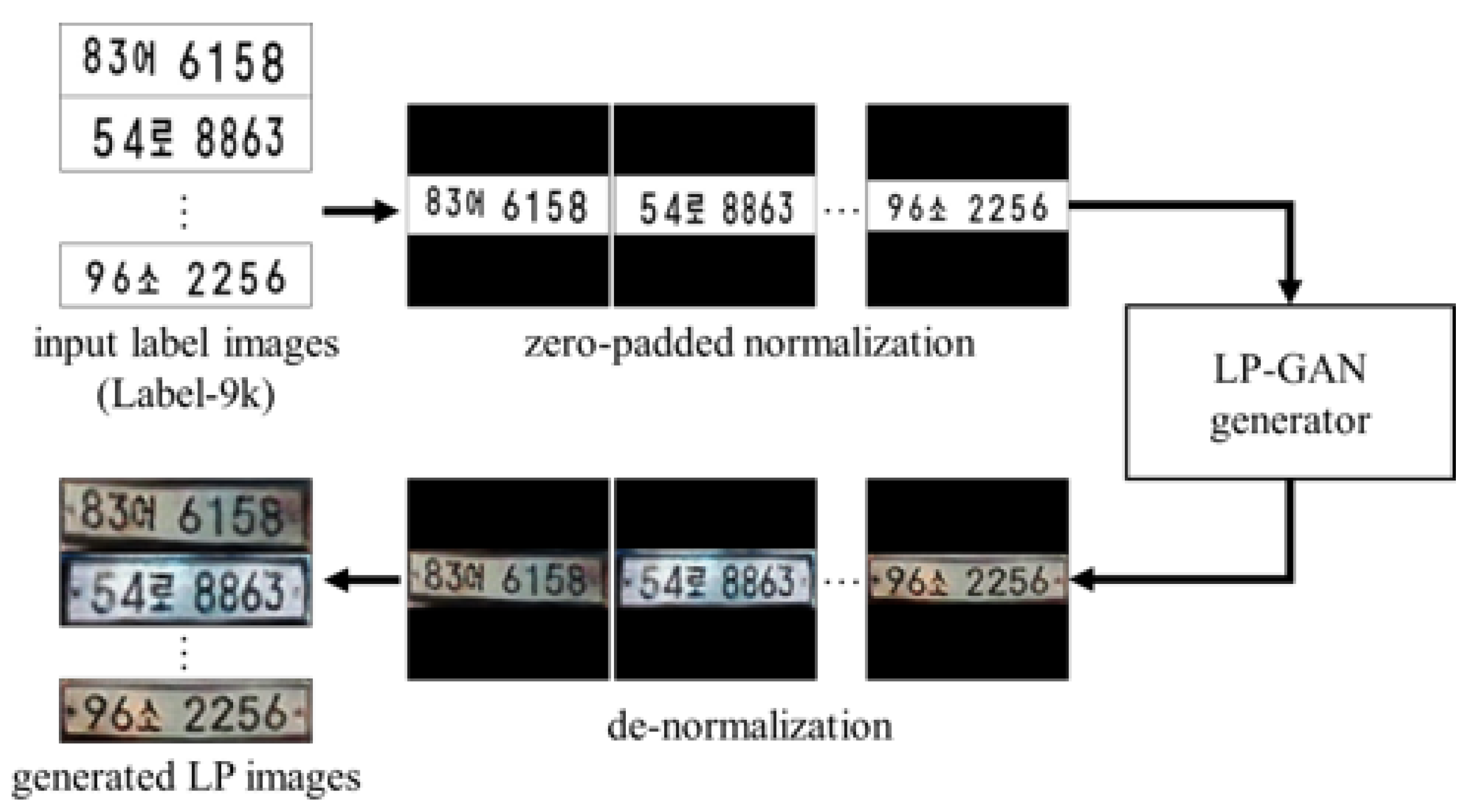

3.2. License Plate Image Generation

4. Segmentation-Free End-to-End LPCR By Object Detector

5. Experimental Section

5.1. Datasets

5.1.1. Web-Scraped Real Images

5.1.2. Generated Datasets by LP-GAN

5.1.3. Real Datasets for Comparison and Testing

5.2. Implementation Details

5.2.1. LP Generation

5.2.2. LP Recognition

5.3. Experimental Results

6. Conclusions

Author Contributions

Funding

Conflicts of Interest

References

- Du, S.; Ibrahim, M.; Shehata, M.; Badawy, W. Automatic license plate recognition (ALPR): A state-of-the-art review. IEEE Trans. Circuits Syst. Video Technol. 2012, 23, 311–325. [Google Scholar] [CrossRef]

- Anagnostopoulos, C.N.E.; Anagnostopoulos, I.E.; Psoroulas, I.D.; Loumos, V.; Kayafas, E. License plate recognition from still images and video sequences: A survey. IEEE Trans. Intell. Transp. Syst. 2008, 9, 377–391. [Google Scholar] [CrossRef]

- Lee, J.T.; Ryoo, M.S.; Riley, M.; Aggarwal, J. Real-time illegal parking detection in outdoor environments using 1-D transformation. IEEE Trans. Circuits Syst. Video Technol. 2009, 19, 1014–1024. [Google Scholar] [CrossRef]

- Kim, K.J.; Kim, P.K.; Chung, Y.S.; Choi, D.H. Multi-Scale Detector for Accurate Vehicle Detection in Traffic Surveillance Data. IEEE Access 2019, 7, 78311–78319. [Google Scholar] [CrossRef]

- Weber, M. Caltech Cars Dataset. 1999. Available online: http://www.vision.caltech.edu/Image_Datasets/cars_markus/cars_markus.tar (accessed on 28 February 2020).

- Laroca, R.; Severo, E.; Zanlorensi, L.A.; Oliveira, L.S.; Gonçalves, G.R.; Schwartz, W.R.; Menotti, D. A robust real-time automatic license plate recognition based on the YOLO detector. In Proceedings of the 2018 International Joint Conference on Neural Networks (IJCNN), Rio, Brazil, 8–13 July 2018; pp. 1–10. [Google Scholar]

- Srebrić, V. EnglishLP Database. 2003. Available online: http://www.zemris.fer.hr/projects/LicensePlates/english/baza_slika.zip (accessed on 28 February 2020).

- Dlagnekov, L.; Belongie, S. UCSD-Stills Dataset. 2005. Available online: http://vision.ucsd.edu/belongie-grp/research/carRec/car_data.html (accessed on 28 February 2020).

- Zhou, W.; Li, H.; Lu, Y.; Tian, Q. Principal visual word discovery for automatic license plate detection. IEEE Trans. Image Process. 2012, 21, 4269–4279. [Google Scholar] [CrossRef] [PubMed]

- Hsu, G.S.; Chen, J.C.; Chung, Y.Z. Application-oriented license plate recognition. IEEE Trans. Veh. Technol. 2012, 62, 552–561. [Google Scholar] [CrossRef]

- OpenALPR Inc. OpenALPR-EU Dataset. 2016. Available online: https://github.com/openalpr/benchmarks/tree/master/endtoend/eu (accessed on 28 February 2020).

- Gonçalves, G.R.; da Silva, S.P.G.; Menotti, D.; Schwartz, W.R. Benchmark for license plate character segmentation. J. Electron. Imaging 2016, 25, 053034. [Google Scholar] [CrossRef]

- Goodfellow, I.; Pouget-Abadie, J.; Mirza, M.; Xu, B.; Warde-Farley, D.; Ozair, S.; Courville, A.; Bengio, Y. Generative adversarial nets. In Proceedings of the Advances in Neural Information Processing Systems, Montreal, QC, Canada, 8–13 December 2014; pp. 2672–2680. [Google Scholar]

- Mirza, M.; Osindero, S. Conditional generative adversarial nets. arXiv 2014, arXiv:1411.1784. [Google Scholar]

- Denton, E.L.; Chintala, S.; Szlam, A.; Fergus, R. Deep generative image models using a laplacian pyramid of adversarial networks. In Proceedings of the Advances in Neural Information Processing Systems, Montreal, QC, Canada, 7–12 December 2015; pp. 1486–1494. [Google Scholar]

- Radford, A.; Metz, L.; Chintala, S. Unsupervised representation learning with deep convolutional generative adversarial networks. arXiv 2015, arXiv:1511.06434. [Google Scholar]

- Arjovsky, M.; Chintala, S.; Bottou, L. Wasserstein generative adversarial networks. In Proceedings of the International Conference on MACHINE Learning, Sydney, Australia, 6–11 August 2017; pp. 214–223. [Google Scholar]

- Zhao, J.; Mathieu, M.; LeCun, Y. Energy-based generative adversarial network. arXiv 2016, arXiv:1609.03126. [Google Scholar]

- Isola, P.; Zhu, J.Y.; Zhou, T.; Efros, A.A. Image-to-image translation with conditional adversarial networks. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Honolulu, HI, USA, 21–26 July 2017; pp. 1125–1134. [Google Scholar]

- Zhu, J.Y.; Park, T.; Isola, P.; Efros, A.A. Unpaired image-to-image translation using cycle-consistent adversarial networks. In Proceedings of the IEEE International Conference on Computer Vision, Venice, Italy, 22–29 October 2017; pp. 2223–2232. [Google Scholar]

- Choi, Y.; Choi, M.; Kim, M.; Ha, J.W.; Kim, S.; Choo, J. Stargan: Unified generative adversarial networks for multi-domain image-to-image translation. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Salt Lake City, UT, USA, 18–22 June 2018; pp. 8789–8797. [Google Scholar]

- Kim, T.; Cha, M.; Kim, H.; Lee, J.K.; Kim, J. Learning to discover cross-domain relations with generative adversarial networks. In Proceedings of the 34th International Conference on Machine Learning, Sydney, Australia, 6–11 August 2017; Volume 70, pp. 1857–1865. [Google Scholar]

- Ledig, C.; Theis, L.; Huszár, F.; Caballero, J.; Cunningham, A.; Acosta, A.; Aitken, A.; Tejani, A.; Totz, J.; Wang, Z.; et al. Photo-realistic single image super-resolution using a generative adversarial network. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Honolulu, HI, USA, 21–26 July 2017; pp. 4681–4690. [Google Scholar]

- Johnson, J.; Alahi, A.; Fei-Fei, L. Perceptual losses for real-time style transfer and super-resolution. In Proceedings of the European Conference on Computer Vision, Amsterdam, The Netherlands, 8–16 October 2016; Springer: Berlin/Heidelberg, Germany, 2016; pp. 694–711. [Google Scholar]

- Zhu, J.Y.; Krähenbühl, P.; Shechtman, E.; Efros, A.A. Generative visual manipulation on the natural image manifold. In Proceedings of the European Conference on Computer Vision, Amsterdam, The Netherlands, 8–16 October 2016; Springer: Berlin/Heidelberg, Germany, 2016; pp. 597–613. [Google Scholar]

- Hongliang, B.; Changping, L. A hybrid license plate extraction method based on edge statistics and morphology. In Proceedings of the 17th International Conference on Pattern Recognition, ICPR 2004, Cambridge, UK, 23–26 August 2004; Volume 2, pp. 831–834. [Google Scholar]

- Zheng, D.; Zhao, Y.; Wang, J. An efficient method of license plate location. Pattern Recognit. Lett. 2005, 26, 2431–2438. [Google Scholar] [CrossRef]

- Wu, H.H.P.; Chen, H.H.; Wu, R.J.; Shen, D.F. License plate extraction in low resolution video. In Proceedings of the 18th International Conference on Pattern Recognition (ICPR’06), Hong Kong, China, 20–24 August 2006; Volume 1, pp. 824–827. [Google Scholar]

- Xu, H.K.; Yu, F.H.; Jiao, J.H.; Song, H.S. A new approach of the vehicle license plate location. In Proceedings of the Sixth International Conference on Parallel and Distributed Computing Applications and Technologies (PDCAT’05), Dalian, China, 5–8 December 2005; pp. 1055–1057. [Google Scholar]

- Lee, E.R.; Kim, P.K.; Kim, H.J. Automatic recognition of a car license plate using color image processing. In Proceedings of the 1st International Conference on Image Processing, Austin, TX, USA, 13–16 November 1994; Volume 2, pp. 301–305. [Google Scholar]

- Matas, J.; Zimmermann, K. Unconstrained licence plate and text localization and recognition. In Proceedings of the 2005 IEEE Intelligent Transportation Systems, Vienna, Austria, 13–16 September 2005; pp. 225–230. [Google Scholar]

- Han, B.G.; Lee, J.T.; Lim, K.T.; Chung, Y. Real-Time License Plate Detection in High-Resolution Videos Using Fastest Available Cascade Classifier and Core Patterns. Etri J. 2015, 37, 251–261. [Google Scholar] [CrossRef]

- Kanayama, K.; Fujikawa, Y.; Fujimoto, K.; Horino, M. Development of vehicle-license number recognition system using real-time image processing and its application to travel-time measurement. In Proceedings of the 41st IEEE Vehicular Technology Conference, St. Louis, MO, USA, 19–21 May 1991; pp. 798–804. [Google Scholar]

- Rahman, C.A.; Badawy, W.; Radmanesh, A. A real time vehicle’s license plate recognition system. In Proceedings of the IEEE Conference on Advanced Video and Signal Based Surveillance, Miami, FL, USA, 21–22 July 2003; pp. 163–166. [Google Scholar]

- Guo, J.M.; Liu, Y.F. License plate localization and character segmentation with feedback self-learning and hybrid binarization techniques. IEEE Trans. Veh. Technol. 2008, 57, 1417–1424. [Google Scholar]

- Capar, A.; Gokmen, M. Concurrent segmentation and recognition with shape-driven fast marching methods. In Proceedings of the 18th International Conference on Pattern Recognition (ICPR’06), Hong Kong, China, 20–24 August 2006; Volume 1, pp. 155–158. [Google Scholar]

- Yoon, Y.; Ban, K.D.; Yoon, H.; Lee, J.; Kim, J. Best combination of binarization methods for license plate character segmentation. ETRI J. 2013, 35, 491–500. [Google Scholar] [CrossRef]

- Comelli, P.; Ferragina, P.; Granieri, M.N.; Stabile, F. Optical recognition of motor vehicle license plates. IEEE Trans. Veh. Technol. 1995, 44, 790–799. [Google Scholar] [CrossRef]

- Chang, S.L.; Chen, L.S.; Chung, Y.C.; Chen, S.W. Automatic license plate recognition. IEEE Trans. Intell. Transp. Syst. 2004, 5, 42–53. [Google Scholar] [CrossRef]

- Türkyılmaz, İ.; Kaçan, K. License plate recognition system using artificial neural networks. ETRI J. 2017, 39, 163–172. [Google Scholar] [CrossRef]

- Yuan, Y.; Zou, W.; Zhao, Y.; Wang, X.; Hu, X.; Komodakis, N. A robust and efficient approach to license plate detection. IEEE Trans. Image Process. 2016, 26, 1102–1114. [Google Scholar] [CrossRef] [PubMed]

- Meng, A.; Yang, W.; Xu, Z.; Huang, H.; Huang, L.; Ying, C. A robust and efficient method for license plate recognition. In Proceedings of the 24th International Conference on Pattern Recognition (ICPR), Beijing, China, 20–24 August 2018; pp. 1713–1718. [Google Scholar]

- Špaňhel, J.; Sochor, J.; Juránek, R.; Herout, A.; Maršík, L.; Zemčík, P. Holistic recognition of low quality license plates by cnn using track annotated data. In Proceedings of the 14th IEEE International Conference on Advanced Video and Signal Based Surveillance (AVSS), Lecce, Italy, 29 August–1 September 2017; pp. 1–6. [Google Scholar]

- Ronneberger, O.; Fischer, P.; Brox, T. U-net: Convolutional networks for biomedical image segmentation. In Proceedings of the International Conference on Medical image computing and computer-assisted intervention, Munich, Germany, 5–9 October 2015; Springer: Berlin/Heidelberg, Germany, 2015; pp. 234–241. [Google Scholar]

- Demir, U.; Unal, G. Patch-based image inpainting with generative adversarial networks. arXiv 2018, arXiv:1803.07422. [Google Scholar]

- He, K.; Zhang, X.; Ren, S.; Sun, J. Deep residual learning for image recognition. In Proceedings of the IEEE conference on computer vision and pattern recognition, Las Vegas, NV, USA, 27–30 June 2016; pp. 770–778. [Google Scholar]

- Mao, X.; Li, Q.; Xie, H.; Lau, R.Y.; Wang, Z.; Paul Smolley, S. Least squares generative adversarial networks. In Proceedings of the IEEE International Conference on Computer Vision, Venice, Italy, 22–29 October 2017; pp. 2794–2802. [Google Scholar]

- Redmon, J.; Farhadi, A. YOLO9000: Better, faster, stronger. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Honolulu, HI, USA, 21–26 July 2017; pp. 7263–7271. [Google Scholar]

- Ren, S.; He, K.; Girshick, R.; Sun, J. Faster r-cnn: Towards real-time object detection with region proposal networks. In Proceedings of the Advances in Neural Information Processing Systems, Montreal, QC, Canada, 7–12 December 2015; pp. 91–99. [Google Scholar]

- CycleGAN and pix2pix in PyTorch. Available online: https://github.com/junyanz/pytorch-CycleGAN-and-pix2pix (accessed on 28 February 2020).

- StarGAN. Available online: https://github.com/yunjey/stargan (accessed on 28 February 2020).

- PyTorch org. Available online: https://pytorch.org (accessed on 28 February 2020).

| Dataset | Number of Images | Country | Year |

|---|---|---|---|

| Caltech Cars [5] | 126 | USA | 1999 |

| EnglishLP [7] | 509 | Europe | 2003 |

| UCSD-Stills [8] | 291 | USA | 2005 |

| ChineseLP [9] | 411 | China | 2012 |

| AOLP [10] | 2049 | Taiwan | 2013 |

| OpenALPR-EU [11] | 108 | Europe | 2016 |

| SSIG-SegPlate [12] | 2000 | Brazil | 2016 |

| UFPR-ALPR [6] | 4500 | Brazil | 2018 |

| No. | Layer Type | Filters | Size / Stride | Output |

|---|---|---|---|---|

| 0 | Convolutional | 16 | 3 × 3 | 416 × 416 × 16 |

| 1 | Maxpool | - | 2 × 2 / 2 | 208 × 208 × 16 |

| 2 | Convolutional | 32 | 3 × 3 | 208 × 208 × 32 |

| 3 | Maxpool | - | 2 × 2 / 2 | 104 × 104 × 32 |

| 4 | Convolutional | 64 | 3 × 3 | 104 × 104 × 64 |

| 5 | Convolutional | 32 | 1 × 1 | 104 × 104 × 32 |

| 6 | Convolutional | 64 | 3 × 3 | 104 × 104 × 64 |

| 7 | Maxpool | - | 2 × 2 / 2 | 52 × 52 × 64 |

| 8 | Convolutional | 128 | 3 × 3 | 52 × 52 × 128 |

| 9 | Convolutional | 64 | 1 × 1 | 52 × 52 × 64 |

| 10 | Convolutional | 128 | 3 × 3 | 52 × 52 × 128 |

| 11 | Maxpool | - | 2 × 2 / 2 | 26 × 26 × 128 |

| 12 | Convolutional | 256 | 3 × 3 | 26 × 26 × 256 |

| 13 | Convolutional | 128 | 1 × 1 | 26 × 26 × 128 |

| 14 | Convolutional | 256 | 3 × 3 | 26 × 26 × 256 |

| 15 | Convolutional | 128 | 1 × 1 | 26 × 26 × 128 |

| 16 | Convolutional | 256 | 3 × 3 | 26 × 26 × 256 |

| 17 | Maxpool | - | 2 × 2 / 2 | 13 × 13 × 256 |

| 18 | Convolutional | 512 | 3 × 3 | 13 × 13 × 512 |

| 19 | Convolutional | 256 | 1 × 1 | 13 × 13 × 256 |

| 20 | Convolutional | 512 | 3 × 3 | 13 × 13 × 512 |

| 21 | Convolutional | 256 | 1 × 1 | 13 × 13 × 256 |

| 22 | Convolutional | 512 | 3 × 3 | 13 × 13 × 512 |

| 23 | Convolutional | 512 | 3 × 3 | 13 × 13 × 512 |

| 24 | Convolutional | 512 | 3 × 3 | 13 × 13 × 512 |

| 25 | Route 16 | - | - | 26 × 26 × 256 |

| 26 | Convolutional | 32 | 1 × 1 | 26 × 26 × 32 |

| 27 | Reorg. | - | / 2 | 13 × 13 × 128 |

| 28 | Route 27 24 | - | - | 13 × 13 × 640 |

| 29 | Convolutional | 512 | 3 × 3 | 13 × 13 × 512 |

| 30 | Convolutional | 250 | 1 × 1 | 13 × 13 × 250 |

| Dataset | Description |

|---|---|

| Web_159 | Real LP images from Web-Scraping |

| pix2pix_cGAN_9k CycleGAN_9k StarGAN_9k | 9000 generated LP images by three state-of-the-art GAN based LP-GAN from Label_9k |

| pix2pix_cGAN_3k CycleGAN_3k StarGAN_3k | Randomly selected from each of pix2pix_cGAN_9k, CycleGAN_9k, StarGAN_9k |

| Ensemble_9k | Combined generated LP images.(pix2pix_cGAN_3k + CycleGAN_3k + StarGAN_3k) |

| Ensemble_3k | Combined randomly selected 1000 LP images from each of pix2pix_cGAN_3k, CycleGAN_3k, StarGAN_3k |

| Real_9k | 9000 real LP images for training data |

| Real_3k | 3000 real LP images randomly selected from Real_9k |

| Real_159 | 159 real LP images randomly selected from Real_3k |

| Test_13k | 13,117 real LP images for LPCR testing |

| Training Dataset | 1st | 2nd | 3rd | 4th | 5th | 6th | 7th | Overall |

|---|---|---|---|---|---|---|---|---|

| (num) | (num) | (char) | (num) | (num) | (num) | (num) | ||

| Web_159 | 99.85 | 99.87 | 94.69 | 99.89 | 99.92 | 99.86 | 99.86 | 94.45 |

| Real_9k | 99.97 | 99.98 | 99.84 | 99.98 | 99.98 | 99.99 | 99.98 | 99.78 |

| Real_3k | 99.96 | 99.95 | 99.80 | 99.98 | 99.98 | 99.99 | 99.98 | 99.72 |

| Real_159 | 99.94 | 99.95 | 97.95 | 99.95 | 99.95 | 99.96 | 99.95 | 97.85 |

| pix2pix_cGAN_9k | 99.85 | 99.86 | 96.57 | 99.92 | 99.94 | 99.95 | 99.83 | 96.33 |

| CycleGAN_9k | 99.35 | 99.38 | 94.97 | 99.38 | 99.56 | 99.45 | 98.98 | 93.59 |

| StarGAN_9k | 99.43 | 99.36 | 95.21 | 99.48 | 99.45 | 99.61 | 99.45 | 94.23 |

| pix2pix_cGAN_3k | 99.87 | 99.86 | 94.16 | 99.89 | 99.91 | 99.92 | 99.80 | 93.91 |

| CycleGAN_3k | 99.13 | 99.32 | 90.56 | 99.29 | 99.46 | 99.51 | 99.13 | 89.48 |

| StarGAN_3k | 99.51 | 99.29 | 93.25 | 99.48 | 99.56 | 99.41 | 99.17 | 92.13 |

| Ensemble_9k | 99.86 | 99.92 | 99.02 | 99.93 | 99.95 | 99.95 | 99.88 | 98.72 |

| Ensemble_3k | 99.80 | 99.86 | 95.94 | 99.90 | 99.94 | 99.92 | 99.81 | 95.56 |

| Overall Accuracy (%) | Average Processing Time (ms) | Required GPU Memory (MB) | Number of FLOPs (Bn) | |

|---|---|---|---|---|

| Original YOLOv2 | 99.95 | 22 | 1006 | 29.41 |

| Proposed YOLOv2 | 99.78 | 13 | 474 | 7.45 |

© 2020 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).

Share and Cite

Han, B.-G.; Lee, J.T.; Lim, K.-T.; Choi, D.-H. License Plate Image Generation using Generative Adversarial Networks for End-To-End License Plate Character Recognition from a Small Set of Real Images. Appl. Sci. 2020, 10, 2780. https://doi.org/10.3390/app10082780

Han B-G, Lee JT, Lim K-T, Choi D-H. License Plate Image Generation using Generative Adversarial Networks for End-To-End License Plate Character Recognition from a Small Set of Real Images. Applied Sciences. 2020; 10(8):2780. https://doi.org/10.3390/app10082780

Chicago/Turabian StyleHan, Byung-Gil, Jong Taek Lee, Kil-Taek Lim, and Doo-Hyun Choi. 2020. "License Plate Image Generation using Generative Adversarial Networks for End-To-End License Plate Character Recognition from a Small Set of Real Images" Applied Sciences 10, no. 8: 2780. https://doi.org/10.3390/app10082780

APA StyleHan, B.-G., Lee, J. T., Lim, K.-T., & Choi, D.-H. (2020). License Plate Image Generation using Generative Adversarial Networks for End-To-End License Plate Character Recognition from a Small Set of Real Images. Applied Sciences, 10(8), 2780. https://doi.org/10.3390/app10082780