A New Differential Mutation Based Adaptive Harmony Search Algorithm for Global Optimization

Abstract

1. Introduction

- (1) For the pitch adjustment operator of HS, a larger bandwidth makes it easier to jump out of a local optimum, while a smaller bandwidth favors fine-grained search around a promising solution. A fixed step size is therefore not an ideal choice.

- (2) With a constant execution probability it is difficult to find the optimal solution, so an adaptive adjustment method is required.

- (3) The parameter HMS has an important influence on the performance of the algorithm, and an adaptively sized HMS may enhance that performance.

- (1) The pitch adjustment strategy is implemented with differential mutation. The pitch adjusting rate PAR and the scaling factor F are adjusted with a periodic learning strategy, and a linear population size reduction strategy is adopted as the HMS changing scheme.

- (2) The cooperation and effects of these strategies are analyzed step by step.

2. Harmony Search and Several Variants

2.1. Harmony Search Algorithm

| Algorithm 1: | General Framework of the Harmony Search (HS). |
|---|---|
| 1: | //Initialize the problem and algorithm parameters// Objective function; HMS: harmony memory size; HMCR: harmony memory considering rate; PAR: pitch adjusting rate; bw: bandwidth; DIM: dimension of the decision variable; maximum number of function evaluations; lower and upper bounds of the i-th component of the decision vector. |
| 2: | //Initialize the harmony memory// |
| 3: | //Improvise a new harmony// |
| 4: | //Update the harmony memory// |
| 5: | //Check the stopping criterion// If the termination condition is met, stop and output the best individual. Otherwise, repeat from Step 3. |
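The five steps of Algorithm 1 correspond to the classic HS loop. The Python sketch below is a minimal illustration, not the authors' code; it assumes minimization, a pitch step drawn uniformly from [−bw, bw], clipping to the bounds, and a budget of function evaluations as the stopping criterion. The name `harmony_search` and its default parameter values are illustrative assumptions.

```python
import random


def harmony_search(f, lower, upper, hms=5, hmcr=0.9, par=0.3, bw=0.01,
                   max_fes=10000, seed=None):
    """Classic harmony search for minimization (illustrative sketch)."""
    rng = random.Random(seed)
    dim = len(lower)
    # Step 2: initialize the harmony memory uniformly inside the bounds.
    memory = [[rng.uniform(lower[j], upper[j]) for j in range(dim)]
              for _ in range(hms)]
    fitness = [f(x) for x in memory]
    fes = hms
    while fes < max_fes:  # Step 5: stop once the evaluation budget is used
        # Step 3: improvise a new harmony component by component.
        new = []
        for j in range(dim):
            if rng.random() < hmcr:
                # Memory consideration: reuse the j-th component of a stored harmony.
                xj = memory[rng.randrange(hms)][j]
                if rng.random() < par:
                    # Pitch adjustment with a fixed bandwidth bw.
                    xj += bw * rng.uniform(-1.0, 1.0)
            else:
                # Random selection inside the bounds.
                xj = rng.uniform(lower[j], upper[j])
            new.append(min(max(xj, lower[j]), upper[j]))  # keep within bounds
        fx = f(new)
        fes += 1
        # Step 4: replace the worst harmony if the new one is better.
        worst = max(range(hms), key=fitness.__getitem__)
        if fx < fitness[worst]:
            memory[worst], fitness[worst] = new, fx
    best = min(range(hms), key=fitness.__getitem__)
    return memory[best], fitness[best]
```

For example, `harmony_search(lambda v: sum(t * t for t in v), [-5.0] * 2, [5.0] * 2, seed=1)` minimizes a two-dimensional sphere function. Note that bw is the same for every improvisation, which is exactly the fixed step size questioned in point (1) of the Introduction.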

2.2. The Improved Harmony Search Algorithm (IHS)
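IHS is the improved harmony search of Mahdavi et al. As it is commonly described, IHS replaces the fixed PAR and bw of Algorithm 1 with schedules over the improvisation counter gn out of NI total improvisations: PAR rises linearly from PARmin to PARmax, and bw decays exponentially from bwmax to bwmin, i.e. bw(gn) = bwmax · exp(c · gn) with c = ln(bwmin / bwmax) / NI. The sketch below encodes these two schedules; the function and argument names are illustrative.

```python
import math


def ihs_par(gn, ni, par_min, par_max):
    """PAR grows linearly from par_min (gn = 0) to par_max (gn = ni)."""
    return par_min + (par_max - par_min) * gn / ni


def ihs_bw(gn, ni, bw_min, bw_max):
    """bw decays exponentially from bw_max (gn = 0) to bw_min (gn = ni)."""
    c = math.log(bw_min / bw_max) / ni
    return bw_max * math.exp(c * gn)
```

Because the decay is exponential, bw at the midpoint of the run is the geometric mean of bwmin and bwmax (for example, 10⁻² when bwmin = 10⁻⁴ and bwmax = 1), so the step shrinks quickly early on and slowly later.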

2.3. A Self-Adaptive Global-Best Harmony Search (SGHS)

2.4. An Intelligent Global Harmony Search Algorithm (IGHS)

| Algorithm 2: | Main framework of the intelligent global harmony search algorithm (IGHS). |
|---|---|

3. Adaptive Harmony Search with Differential Evolution

3.1. Differential Evolution

3.2. Linear Population Size Reduction
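Linear population size reduction was introduced for L-SHADE by Tanabe and Fukunaga; applied to HS, it shrinks the harmony memory from HMSmax to HMSmin as function evaluations (NFE) are consumed. The sketch below assumes the L-SHADE schedule HMS = round(HMSmax + (HMSmin − HMSmax) · NFE / MaxNFE) and assumes that the worst harmonies are the ones discarded when the memory shrinks. `lpsr_size` and `shrink_memory` are hypothetical names.

```python
def lpsr_size(nfe, max_nfe, hms_max, hms_min):
    """Target harmony memory size after nfe evaluations (L-SHADE-style LPSR)."""
    # Linear interpolation from hms_max (nfe = 0) to hms_min (nfe = max_nfe).
    # Python's round() sends exact halves to the nearest even integer.
    return round(hms_max + (hms_min - hms_max) * nfe / max_nfe)


def shrink_memory(memory, fitness, new_size):
    """Keep the new_size best harmonies (minimization), dropping the worst."""
    keep = sorted(range(len(memory)), key=fitness.__getitem__)[:new_size]
    return [memory[i] for i in keep], [fitness[i] for i in keep]
```

After each improvisation one would compute `lpsr_size(...)` and, whenever it is smaller than the current memory, call `shrink_memory`. A large memory early keeps diversity for exploration, and a small memory late concentrates the search.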

3.3. Differential Mutation in the Pitch Adjustment Operator
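The surviving text does not spell out the exact differential mutation used inside the pitch adjustment, so the sketch below assumes one common DE-style form: the fixed-bandwidth step is replaced by F · (x_r1,j − x_r2,j), the scaled difference of the j-th components of two distinct harmonies drawn at random from the memory. `de_pitch_adjust` is a hypothetical helper.

```python
import random


def de_pitch_adjust(xj, memory, j, f_scale, rng):
    """Hypothetical DE-style pitch adjustment of the j-th component xj.

    The fixed-bandwidth step of classic HS is replaced by the scaled
    difference f_scale * (x_r1[j] - x_r2[j]) of two distinct harmonies
    drawn at random from the memory.
    """
    r1, r2 = rng.sample(range(len(memory)), 2)  # two distinct indices
    return xj + f_scale * (memory[r1][j] - memory[r2][j])
```

With `rng = random.Random(0)`, `de_pitch_adjust(2.0, [[0.0], [1.0]], 0, 0.5, rng)` returns either 1.5 or 2.5, while a fully converged memory such as `[[3.0], [3.0], [3.0]]` leaves the component unchanged. The step size therefore follows the spread of the memory (large while it is diverse, small once it converges), which addresses the fixed-step concern raised in point (1) of the Introduction.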

3.4. Self-Adaptive PAR and F

| Algorithm 3: | Parameters updating of the means of PAR (PARm) and F (Fm). |
|---|---|
| … | … lp = 1; else lp = lp + 1; endif |
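Algorithm 3 survives only as its final branch, which resets the learning-period counter lp to 1 once a period ends and otherwise increments it. The class below is a hypothetical sketch of such periodic learning of PARm and Fm. It assumes, without confirmation from the paper, that PAR and F are sampled from normal distributions with standard deviation 0.1 around their means and clipped to [0, 1], that only improvisations that improved the memory are recorded (Step 4 of Algorithm 4 records PAR, F and a fitness difference), and that each mean becomes the improvement-weighted average of the recorded values.

```python
import random


def _clip01(v):
    """Clip a sampled control parameter to [0, 1]."""
    return min(max(v, 0.0), 1.0)


class PeriodicParameterLearner:
    """Hypothetical periodic learning of the means PARm and Fm."""

    def __init__(self, par_m=0.5, f_m=0.5, lp=10, std=0.1, seed=None):
        self.par_m, self.f_m, self.lp, self.std = par_m, f_m, lp, std
        self.counter = 1   # plays the role of lp in Algorithm 3
        self.records = []  # (PAR, F, fitness improvement) of successes
        self.rng = random.Random(seed)

    def sample(self):
        """Draw PAR and F around their current means (assumed normal)."""
        return (_clip01(self.rng.gauss(self.par_m, self.std)),
                _clip01(self.rng.gauss(self.f_m, self.std)))

    def record(self, par, f, improvement):
        """Store a PAR/F pair only if it improved the harmony memory."""
        if improvement > 0:
            self.records.append((par, f, improvement))

    def end_generation(self):
        """Update the means at the end of each learning period."""
        if self.counter == self.lp:
            total = sum(d for _, _, d in self.records)
            if total > 0:
                self.par_m = sum(p * d for p, _, d in self.records) / total
                self.f_m = sum(f * d for _, f, d in self.records) / total
            self.records.clear()
            self.counter = 1
        else:
            self.counter += 1
```

Weighting by the fitness improvement lets PAR/F pairs that produced large gains pull the means harder than pairs that barely helped; a plain average of successful values would be the simpler alternative.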

3.5. aHSDE Algorithm Framework

| Algorithm 4: | Framework of the new adaptive harmony search algorithm (aHSDE). |
|---|---|
| 1: | //Initialize the problem and parameters// Objective function; HMSmax: maximum harmony memory size; HMSmin: minimum harmony memory size; HMCR: harmony memory considering rate; PARm: mean of the pitch adjusting rate; Fm: mean of the scaling factor; bw: bandwidth; LP: learning period; maximum number of function evaluations; lower and upper bounds of the decision vector. |
| 2: | //Initialize the harmony memory// |
| 3: | //Improvise a new harmony// |
| 4: | //Update the harmony memory// Record the generated PAR and F values and the corresponding fitness difference. |
| 5: | //Check the stopping criterion// If the termination condition is met, stop and output the best individual. Otherwise, repeat from Step 3. |
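Putting the pieces of Algorithm 4 together, the following self-contained sketch runs one aHSDE-style loop. Every concrete choice in it is an assumption made for illustration rather than the authors' implementation: the DE-style step F · (x_r1,j − x_r2,j), the N(mean, 0.1) sampling of PAR and F, the improvement-weighted mean update every LP improvisations, and the L-SHADE-style linear HMS schedule that drops the worst harmonies. The bandwidth bw listed in Step 1 is omitted here because the differential step takes its place in this sketch; `ahsde_sketch` is a hypothetical name.

```python
import random


def _clip01(v):
    """Clip a sampled control parameter to [0, 1]."""
    return min(max(v, 0.0), 1.0)


def ahsde_sketch(f, lower, upper, hms_max=50, hms_min=4, hmcr=0.99,
                 par_m=0.5, f_m=0.5, lp=10, max_fes=10000, seed=None):
    """Illustrative aHSDE-style loop for minimization; not the authors' code."""
    rng = random.Random(seed)
    dim = len(lower)
    # Step 2: initialize the harmony memory with hms_max random harmonies.
    memory = [[rng.uniform(lower[j], upper[j]) for j in range(dim)]
              for _ in range(hms_max)]
    fitness = [f(x) for x in memory]
    fes, counter, records = hms_max, 1, []
    while fes < max_fes:  # Step 5: evaluation budget as stopping criterion
        hms = len(memory)
        par = _clip01(rng.gauss(par_m, 0.1))
        f_scale = _clip01(rng.gauss(f_m, 0.1))  # scaling factor F
        # Step 3: memory consideration plus DE-style pitch adjustment.
        new = []
        for j in range(dim):
            if rng.random() < hmcr:
                xj = memory[rng.randrange(hms)][j]
                if rng.random() < par:
                    r1, r2 = rng.sample(range(hms), 2)
                    xj += f_scale * (memory[r1][j] - memory[r2][j])
            else:
                xj = rng.uniform(lower[j], upper[j])
            new.append(min(max(xj, lower[j]), upper[j]))
        fx = f(new)
        fes += 1
        # Step 4: replace the worst harmony and record the successful PAR/F.
        worst = max(range(hms), key=fitness.__getitem__)
        if fx < fitness[worst]:
            records.append((par, f_scale, fitness[worst] - fx))
            memory[worst], fitness[worst] = new, fx
        # Periodic learning of PARm and Fm with learning period lp.
        if counter == lp:
            total = sum(d for _, _, d in records)
            if total > 0:
                par_m = sum(p * d for p, _, d in records) / total
                f_m = sum(s * d for _, s, d in records) / total
            records, counter = [], 1
        else:
            counter += 1
        # Linear reduction of HMS as function evaluations are consumed.
        target = round(hms_max + (hms_min - hms_max) * fes / max_fes)
        if target < len(memory):
            keep = sorted(range(len(memory)), key=fitness.__getitem__)[:target]
            memory = [memory[i] for i in keep]
            fitness = [fitness[i] for i in keep]
    best = min(range(len(memory)), key=fitness.__getitem__)
    return memory[best], fitness[best]
```

For example, `ahsde_sketch(lambda v: sum(t * t for t in v), [-5.0] * 3, [5.0] * 3, seed=3)` minimizes a three-dimensional sphere function. Keeping hms_min at 2 or more is required, since the differential step needs two distinct harmonies.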

4. Experimental Comparison and Analysis

4.1. Parameters and Benchmark Functions

4.2. How HMS Changes

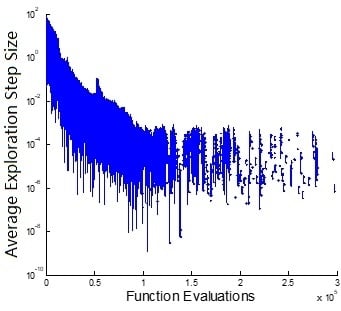

4.3. Effect of Differential Evolution Based Mutation

4.4. How PAR and F Change

4.5. Combined Adaptability Consideration for PAR and F

5. Experimental Comparison with HS Variants and Well-Known EAs

5.1. aHSDE vs. HS Variants

5.2. Overall Statistical Comparison among HS Variants

5.3. Comparison with Other Well-Known EAs

6. Conclusions

Supplementary Materials

Author Contributions

Funding

Acknowledgments

Conflicts of Interest


| PAR/F | 0.1 | 0.2 | 0.3 | 0.4 | 0.5 | 0.6 | 0.7 | 0.8 | 0.9 |
|---|---|---|---|---|---|---|---|---|---|
| 0.1 | 1.28 × 10⁷ | 1.35 × 10⁷ | 1.17 × 10⁷ | 7.01 × 10⁶ | 8.09 × 10⁶ | 2.08 × 10⁶ | 1.10 × 10⁶ | 1.02 × 10⁶ | 1.17 × 10⁶ |
| 0.2 | 7.20 × 10⁶ | 7.11 × 10⁶ | 5.56 × 10⁶ | 1.27 × 10⁶ | 8.57 × 10⁵ | 7.69 × 10⁵ | 7.01 × 10⁵ | 6.36 × 10⁵ | 7.72 × 10⁵ |
| 0.3 | 8.54 × 10⁶ | 6.17 × 10⁶ | 2.11 × 10⁶ | 5.26 × 10⁵ | 4.86 × 10⁵ | 5.88 × 10⁵ | 6.94 × 10⁵ | 8.08 × 10⁵ | 1.05 × 10⁶ |
| 0.4 | 5.22 × 10⁶ | 4.00 × 10⁶ | 7.65 × 10⁵ | 3.86 × 10⁵ | 3.81 × 10⁵ | 6.83 × 10⁵ | 1.06 × 10⁶ | 1.48 × 10⁶ | 1.63 × 10⁶ |
| 0.5 | 5.69 × 10⁶ | 2.16 × 10⁶ | 3.99 × 10⁵ | 2.53 × 10⁵ | 4.68 × 10⁵ | 1.02 × 10⁶ | 1.65 × 10⁶ | 2.20 × 10⁶ | 2.74 × 10⁶ |
| 0.6 | 3.37 × 10⁶ | 7.32 × 10⁵ | 1.80 × 10⁵ | 3.31 × 10⁵ | 7.86 × 10⁵ | 1.43 × 10⁶ | 2.47 × 10⁶ | 4.55 × 10⁶ | 4.94 × 10⁶ |
| 0.7 | 2.87 × 10⁶ | 4.47 × 10⁵ | 1.14 × 10⁵ | 3.51 × 10⁵ | 1.03 × 10⁶ | 2.52 × 10⁶ | 5.63 × 10⁶ | 9.59 × 10⁶ | 1.52 × 10⁷ |
| 0.8 | 2.92 × 10⁶ | 2.97 × 10⁵ | 5.14 × 10⁴ | 1.19 × 10⁵ | 5.89 × 10⁵ | 2.20 × 10⁶ | 6.67 × 10⁶ | 2.61 × 10⁷ | 3.10 × 10⁷ |
| 0.9 | 2.75 × 10⁶ | 2.10 × 10⁵ | 1.12 × 10⁴ | 3.53 × 10³ | 5.00 × 10⁴ | 5.17 × 10⁵ | 2.29 × 10⁶ | 1.03 × 10⁷ | 2.14 × 10⁷ |
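The PAR/F grid can also be scanned programmatically. The sketch below copies the grid (rows are PAR and columns are F, following the `PAR/F` header) and, assuming that smaller entries are better as is usual for error values, reports the overall best cell and the best F for each PAR. The names `GRID`, `best_setting` and `best_f_per_par` are illustrative.

```python
PARAMS = [0.1, 0.2, 0.3, 0.4, 0.5, 0.6, 0.7, 0.8, 0.9]  # PAR rows and F columns
GRID = [
    [1.28e7, 1.35e7, 1.17e7, 7.01e6, 8.09e6, 2.08e6, 1.10e6, 1.02e6, 1.17e6],  # PAR = 0.1
    [7.20e6, 7.11e6, 5.56e6, 1.27e6, 8.57e5, 7.69e5, 7.01e5, 6.36e5, 7.72e5],  # PAR = 0.2
    [8.54e6, 6.17e6, 2.11e6, 5.26e5, 4.86e5, 5.88e5, 6.94e5, 8.08e5, 1.05e6],  # PAR = 0.3
    [5.22e6, 4.00e6, 7.65e5, 3.86e5, 3.81e5, 6.83e5, 1.06e6, 1.48e6, 1.63e6],  # PAR = 0.4
    [5.69e6, 2.16e6, 3.99e5, 2.53e5, 4.68e5, 1.02e6, 1.65e6, 2.20e6, 2.74e6],  # PAR = 0.5
    [3.37e6, 7.32e5, 1.80e5, 3.31e5, 7.86e5, 1.43e6, 2.47e6, 4.55e6, 4.94e6],  # PAR = 0.6
    [2.87e6, 4.47e5, 1.14e5, 3.51e5, 1.03e6, 2.52e6, 5.63e6, 9.59e6, 1.52e7],  # PAR = 0.7
    [2.92e6, 2.97e5, 5.14e4, 1.19e5, 5.89e5, 2.20e6, 6.67e6, 2.61e7, 3.10e7],  # PAR = 0.8
    [2.75e6, 2.10e5, 1.12e4, 3.53e3, 5.00e4, 5.17e5, 2.29e6, 1.03e7, 2.14e7],  # PAR = 0.9
]


def best_setting(grid, params):
    """Return (PAR, F, value) for the smallest entry of the grid."""
    value, i, k = min((grid[i][k], i, k)
                      for i in range(len(params)) for k in range(len(params)))
    return params[i], params[k], value


def best_f_per_par(grid, params):
    """For each PAR row, return the F column holding the smallest entry."""
    return [params[min(range(len(row)), key=row.__getitem__)] for row in grid]
```

Here `best_setting(GRID, PARAMS)` gives `(0.9, 0.4, 3530.0)`, and `best_f_per_par(GRID, PARAMS)` gives `[0.8, 0.8, 0.5, 0.5, 0.4, 0.3, 0.3, 0.3, 0.4]`: the best F drifts from 0.8 at low PAR toward 0.3 to 0.4 once PAR reaches 0.5, which is at least consistent with treating PAR and F jointly rather than tuning each in isolation.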

| Groups | aHSDE vs. | HS (DIM = 10) | HS (DIM = 50) | HS (DIM = 100) | IHS (DIM = 10) | IHS (DIM = 50) | IHS (DIM = 100) | SGHS (DIM = 10) | SGHS (DIM = 50) | SGHS (DIM = 100) | IGHS (DIM = 10) | IGHS (DIM = 50) | IGHS (DIM = 100) |
|---|---|---|---|---|---|---|---|---|---|---|---|---|---|
| 3 Unimodal Functions | + | 3 | 3 | 3 | 3 | 2 | 3 | 3 | 2 | 3 | 3 | 3 | 3 |
| | − | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 |
| | ~ | 0 | 0 | 0 | 0 | 1 | 0 | 0 | 1 | 0 | 0 | 0 | 0 |
| 13 Simple Multimodal Functions | + | 6 | 11 | 12 | 5 | 11 | 13 | 8 | 11 | 10 | 9 | 13 | 13 |
| | − | 4 | 1 | 1 | 6 | 1 | 0 | 1 | 0 | 0 | 0 | 0 | 0 |
| | ~ | 3 | 1 | 0 | 2 | 1 | 0 | 4 | 2 | 3 | 4 | 0 | 0 |
| 6 Hybrid Functions | + | 4 | 6 | 6 | 4 | 6 | 5 | 5 | 6 | 5 | 6 | 6 | 6 |
| | − | 0 | 0 | 0 | 1 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 |
| | ~ | 2 | 0 | 0 | 1 | 0 | 1 | 1 | 0 | 1 | 0 | 0 | 0 |
| 8 Composition Functions | + | 4 | 6 | 6 | 4 | 5 | 6 | 6 | 4 | 6 | 4 | 6 | 7 |
| | − | 2 | 1 | 2 | 1 | 2 | 2 | 1 | 3 | 2 | 3 | 0 | 0 |
| | ~ | 2 | 1 | 0 | 3 | 1 | 0 | 1 | 1 | 0 | 1 | 2 | 1 |
| 30 All Functions | + | 17 | 26 | 27 | 16 | 24 | 27 | 22 | 23 | 24 | 22 | 28 | 29 |
| | − | 6 | 2 | 3 | 8 | 3 | 2 | 2 | 3 | 2 | 3 | 0 | 0 |
| | ~ | 7 | 2 | 0 | 6 | 3 | 1 | 6 | 4 | 4 | 5 | 2 | 1 |

| Function | APSO | CMA-ES | aHSDE | Function | APSO | CMA-ES | aHSDE |
|---|---|---|---|---|---|---|---|
| f1 | 2.69 × 10⁹ ± 3.28 × 10⁸ | 9.42 × 10⁴ ± 7.88 × 10⁴ | 2.06 × 10⁵ ± 9.12 × 10⁴ | f16 | 1.42 × 10 ± 2.37 × 10⁻¹ | 1.38 × 10 ± 5.31 × 10⁻¹ | 1.78 × 10 ± 1.00 × 10⁰ |
| f2 | 1.02 × 10¹¹ ± 2.29 × 10⁹ | 2.55 × 10 ± 3.85 × 10⁹ | 5.77 × 10³ ± 6.41 × 10³ | f17 | 2.86 × 10⁸ ± 1.28 × 10⁸ | 5.49 × 10³ ± 3.62 × 10³ | 2.66 × 10³ ± 1.83 × 10³ |
| f3 | 1.19 × 10⁶ ± 1.25 × 10⁶ | 1.45 × 10⁴ ± 5.66 × 10³ | 1.25 × 10⁰ ± 1.61 × 10⁰ | f18 | 8.75 × 10⁹ ± 3.11 × 10⁹ | 1.52 × 10⁹ ± 3.93 × 10⁸ | 8.88 × 10 ± 3.11 × 10 |
| f4 | 2.49 × 10⁴ ± 1.54 × 10³ | 2.00 × 10 ± 2.63 × 10⁻⁵ | 4.43 × 10 ± 3.69 × 10 | f19 | 8.45 × 10² ± 1.15 × 10² | 2.98 × 10² ± 4.25 × 10 | 1.16 × 10 ± 1.42 × 10⁰ |
| f5 | 2.13 × 10 ± 5.61 × 10⁻² | 2.08 × 10 ± 6.69 × 10⁻² | 2.01 × 10 ± 4.08 × 10⁻² | f20 | 1.59 × 10⁷ ± 1.37 × 10⁷ | 4.61 × 10³ ± 3.88 × 10³ | 4.07 × 10 ± 9.82 × 10⁰ |
| f6 | 4.80 × 10 ± 1.79 × 10⁰ | 4.09 × 10³ ± 2.13 × 10⁰ | 2.02 × 10 ± 3.77 × 10⁰ | f21 | 1.33 × 10⁸ ± 7.50 × 10⁷ | 6.86 × 10³ ± 2.76 × 10³ | 8.35 × 10² ± 2.36 × 10² |
| f7 | 1.06 × 10³ ± 3.85 × 10 | 2.31 × 10² ± 2.83 × 10 | 2.14 × 10⁻³ ± 4.28 × 10⁻³ | f22 | 1.31 × 10⁴ ± 9.38 × 10³ | 1.61 × 10³ ± 2.92 × 10² | 8.30 × 10² ± 2.96 × 10² |
| f8 | 5.03 × 10² ± 3.02 × 10 | 2.83 × 10² ± 2.21 × 10 | 7.41 × 10⁻⁸ ± 1.94 × 10⁻⁸ | f23 | 2.00 × 10² ± 0.00 × 10⁰ | 5.79 × 10² ± 4.94 × 10 | 3.44 × 10² ± 0.00 × 10⁰ |
| f9 | 4.78 × 10² ± 6.30 × 10⁰ | 3.28 × 10² ± 7.65 × 10 | 7.89 × 10 ± 1.80 × 10 | f24 | 2.00 × 10² ± 0.00 × 10⁰ | 2.12 × 10² ± 7.49 × 10⁰ | 2.69 × 10² ± 6.50 × 10⁰ |
| f10 | 9.30 × 10³ ± 5.68 × 10² | 2.61 × 10² ± 1.06 × 10² | 1.94 × 10⁻¹ ± 4.50 × 10⁻² | f25 | 2.00 × 10² ± 0.00 × 10⁰ | 2.12 × 10² ± 2.97 × 10⁰ | 2.07 × 10² ± 2.04 × 10⁰ |
| f11 | 9.24 × 10³ ± 4.86 × 10² | 1.69 × 10² ± 1.98 × 10² | 4.71 × 10³ ± 5.61 × 10² | f26 | 1.86 × 10² ± 2.68 × 10 | 1.25 × 10⁻² ± 5.51 × 10⁻¹ | 1.00 × 10² ± 6.01 × 10⁻² |
| f12 | 5.91 × 10⁰ ± 1.32 × 10⁰ | 3.03 × 10⁻¹ ± 2.18 × 10⁰ | 9.57 × 10⁻² ± 4.16 × 10⁻² | f27 | 2.00 × 10² ± 0.00 × 10⁰ | 1.07 × 10³ ± 2.30 × 10² | 8.76 × 10² ± 1.26 × 10² |
| f13 | 1.03 × 10 ± 7.53 × 10⁻¹ | 5.51 × 10⁰ ± 3.07 × 10⁻¹ | 3.31 × 10⁻¹ ± 6.32 × 10⁻² | f28 | 2.00 × 10² ± 0.00 × 10⁰ | 2.79 × 10³ ± 5.92 × 10² | 1.28 × 10³ ± 8.88 × 10 |
| f14 | 3.95 × 10² ± 2.22 × 10 | 7.53 × 10 ± 8.08 × 10⁰ | 3.30 × 10⁻¹ ± 1.12 × 10⁻¹ | f29 | 2.00 × 10² ± 0.00 × 10⁰ | 3.52 × 10⁴ ± 5.34 × 10³ | 2.35 × 10⁷ ± 1.69 × 10⁷ |
| f15 | 1.05 × 10⁶ ± 0.00 × 10⁰ | 1.02 × 10⁴ ± 3.24 × 10⁴ | 8.09 × 10⁰ ± 2.32 × 10⁰ | f30 | 2.00 × 10² ± 0.00 × 10⁰ | 6.48 × 10⁵ ± 1.31 × 10⁵ | 8.93 × 10³ ± 6.76 × 10² |

© 2020 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).


Zhao, X.; Li, R.; Hao, J.; Liu, Z.; Yuan, J. A New Differential Mutation Based Adaptive Harmony Search Algorithm for Global Optimization. Appl. Sci. 2020, 10, 2916. https://doi.org/10.3390/app10082916


Zhao, Xinchao, Rui Li, Junling Hao, Zhaohua Liu, and Jianmei Yuan. 2020. "A New Differential Mutation Based Adaptive Harmony Search Algorithm for Global Optimization" Applied Sciences 10, no. 8: 2916. https://doi.org/10.3390/app10082916
